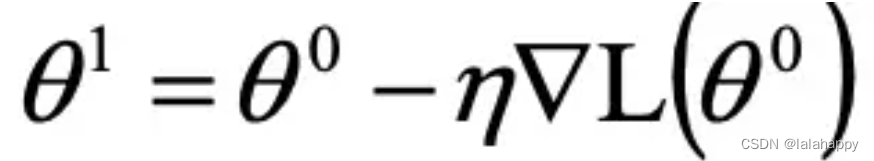

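In deep learning, we update the parameters in the direction of gradient descent, that is, opposite to the gradient of the loss.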

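A partial derivative measures how much an independent variable affects the dependent variable. The ideal state is a partial derivative of 0: a small change in that parameter then no longer changes the final error. This is the relationship between the first derivative and extrema, since at an interior extremum of a differentiable function the first derivative is 0.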

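On the "geometric surface" described by the loss function, the gradient of the loss points in the direction in which the loss changes (increases) fastest.

If moving along the gradient increases the loss fastest, then moving in the opposite direction decreases it fastest.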
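To make "the opposite direction" concrete, here is a minimal sketch on a hypothetical toy loss $f(x, y) = x^2 + 3y^2$ (not part of the network below): each step moves against the gradient, and the loss shrinks toward its minimum at $(0, 0)$. The learning rate `lr` and the step count are arbitrary illustrative choices.

```python
def loss(x, y):
    # Hypothetical toy loss with its minimum at (0, 0)
    return x ** 2 + 3 * y ** 2


def grad(x, y):
    # Analytic partial derivatives: df/dx = 2x, df/dy = 6y
    return 2 * x, 6 * y


def gradient_descent(x, y, lr=0.1, steps=50):
    for _ in range(steps):
        gx, gy = grad(x, y)
        # Step opposite to the gradient, the direction of fastest decrease
        x, y = x - lr * gx, y - lr * gy
    return x, y


start = (1.0, 1.0)
end = gradient_descent(*start)
print(loss(*start), loss(*end))
```

With a small enough `lr`, each update scales $x$ by $1 - 2\,lr$ and $y$ by $1 - 6\,lr$, so both coordinates shrink toward 0.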

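A partial derivative is defined in terms of the function's increment at a point: increase one variable by $\Delta x$ while holding the others fixed, and take the limit of the ratio of the function's increment to $\Delta x$:

$$\frac{\partial f}{\partial x}(x_0, y_0) = \lim_{\Delta x \to 0} \frac{f(x_0 + \Delta x, y_0) - f(x_0, y_0)}{\Delta x}$$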
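The limit in this definition can be approximated numerically by using a small but finite increment `h`. A minimal sketch, assuming a hypothetical function $f(x, y) = x^2 y + y^3$ chosen only for illustration; at $(2, 1)$ its analytic partials are $\partial f/\partial x = 2xy = 4$ and $\partial f/\partial y = x^2 + 3y^2 = 7$.

```python
def partial_derivative(f, point, i, h=1e-6):
    """Forward-difference estimate of df/dx_i at `point` (others held fixed)."""
    shifted = list(point)
    shifted[i] += h  # increment only the i-th variable
    return (f(*shifted) - f(*point)) / h


def f(x, y):
    # Hypothetical example function
    return x ** 2 * y + y ** 3


dfdx = partial_derivative(f, (2.0, 1.0), 0)
dfdy = partial_derivative(f, (2.0, 1.0), 1)
print(dfdx, dfdy)
```

The estimates approach the analytic values as `h` shrinks, until floating-point rounding starts to dominate.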

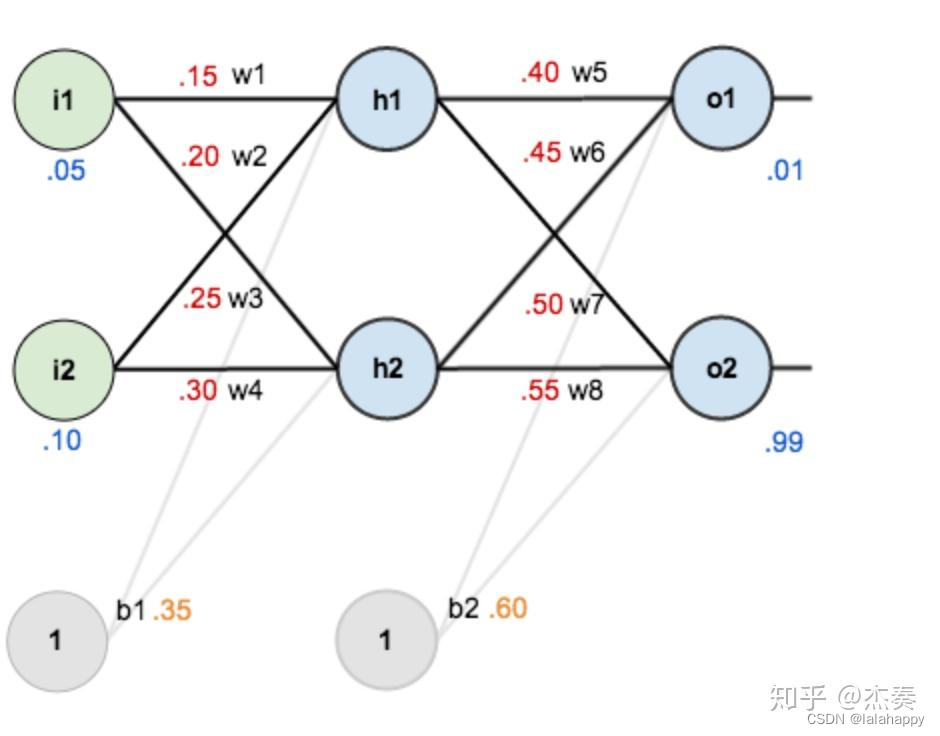

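The first layer is the input layer, containing two neurons $i_1$, $i_2$ and a bias (intercept) term $b_1$;

The second layer is the hidden layer, containing two neurons $h_1$, $h_2$ and a bias term $b_2$;

The third layer is the output layer, containing $o_1$, $o_2$;

The $w_i$ labeled on each line are the weights of the connections between layers, and the activation function is the sigmoid function.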

From the formula $wx + b$, the weighted sums of the input layer are:

$$\mathbf{w} \times \mathbf{x} = \begin{bmatrix} w_{1} & w_{2} \\ w_{3} & w_{4} \end{bmatrix} \begin{bmatrix} i_{1} \\ i_{2} \end{bmatrix} = \begin{bmatrix} w_{1} i_{1} + w_{2} i_{2} \\ w_{3} i_{1} + w_{4} i_{2} \end{bmatrix}$$
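The same matrix-vector product can be checked in plain Python. This sketch uses the values from the forward pass ($w_1 = 0.15$, $w_2 = 0.2$, $w_3 = 0.25$, $w_4 = 0.3$, $i_1 = 0.05$, $i_2 = 0.1$, $b_1 = 0.35$); the helper name `matvec` is illustrative only.

```python
def matvec(w, x):
    # Row r of the result is the dot product of row r of w with x
    return [sum(w_rc * x_c for w_rc, x_c in zip(row, x)) for row in w]


w = [[0.15, 0.20],  # w1, w2 -> h1
     [0.25, 0.30]]  # w3, w4 -> h2
x = [0.05, 0.10]    # i1, i2
b1 = 0.35

# Add the shared bias b1 to each weighted sum: net_h = w x + b
net_h = [v + b1 for v in matvec(w, x)]
print(net_h)
```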

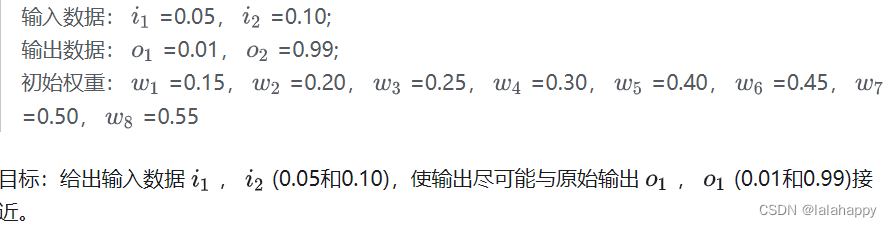

Forward pass:

- Input layer → hidden layer:

$$net_{h_1} = w_1 i_1 + w_2 i_2 + b_1 = 0.15 \times 0.05 + 0.2 \times 0.1 + 0.35 = 0.3775$$

$$net_{h_2} = w_3 i_1 + w_4 i_2 + b_1 = 0.25 \times 0.05 + 0.3 \times 0.1 + 0.35 = 0.3925$$

- Activation function:

$$out_{h_1} = \mathrm{sigmoid}(net_{h_1}) = 0.593269992$$

$$out_{h_2} = \mathrm{sigmoid}(net_{h_2}) = 0.596884378$$

- Hidden layer → output layer:

$$net_{o_1} = w_5 out_{h_1} + w_6 out_{h_2} + b_2$$

$$net_{o_2} = w_7 out_{h_1} + w_8 out_{h_2} + b_2$$

- Activation function:

$$out_{o_1} = \mathrm{sigmoid}(net_{o_1}) = 0.75136507$$

$$out_{o_2} = \mathrm{sigmoid}(net_{o_2}) = 0.772928465$$

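The forward pass gives the outputs [0.75136507, 0.772928465],

which are still far from the target values [0.01, 0.99]. We now backpropagate the error, update the weights, and recompute the outputs.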
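A minimal sketch of the whole forward pass in plain Python. The inputs, targets, $w_1$ to $w_4$, and $b_1$ come from the text; the second-layer parameters $w_6$, $w_7$, $w_8$, and $b_2$ are not written out in the text (they appear only in the figure), so the values below are assumptions chosen because they reproduce the outputs 0.75136507 and 0.772928465 quoted above. $w_5 = 0.40$ is the value implied by the $w_5$ update later in the post.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Inputs and first-layer parameters as given in the text
i1, i2 = 0.05, 0.10
w1, w2, w3, w4, b1 = 0.15, 0.20, 0.25, 0.30, 0.35
# ASSUMPTION: second-layer parameters (not stated in the text);
# these values reproduce the outputs quoted in the text.
w5, w6, w7, w8, b2 = 0.40, 0.45, 0.50, 0.55, 0.60


def forward():
    # Input layer -> hidden layer (both hidden neurons share bias b1)
    net_h1 = w1 * i1 + w2 * i2 + b1
    net_h2 = w3 * i1 + w4 * i2 + b1
    out_h1, out_h2 = sigmoid(net_h1), sigmoid(net_h2)
    # Hidden layer -> output layer (both output neurons share bias b2)
    net_o1 = w5 * out_h1 + w6 * out_h2 + b2
    net_o2 = w7 * out_h1 + w8 * out_h2 + b2
    out_o1, out_o2 = sigmoid(net_o1), sigmoid(net_o2)
    return out_h1, out_h2, out_o1, out_o2


out_h1, out_h2, out_o1, out_o2 = forward()
print(out_h1, out_h2, out_o1, out_o2)
```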

Backward pass:

- Compute the total error:

Error formula: $E_{total} = \sum \frac{1}{2}(target - output)^{2}$

There are two outputs, so we compute the errors of $o_1$ and $o_2$ separately; the total error is their sum:

$$E_{total} = E_{o_1} + E_{o_2} = 0.274811083 + 0.023560026 = 0.298371109$$
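A short check of these numbers, using the targets and the forward-pass outputs stated in the text; the helper name `squared_error` is illustrative.

```python
def squared_error(target, output):
    # E = 1/2 * (target - output)^2 for a single output neuron
    return 0.5 * (target - output) ** 2


targets = [0.01, 0.99]
outputs = [0.75136507, 0.772928465]  # forward-pass outputs from the text
errors = [squared_error(t, o) for t, o in zip(targets, outputs)]
e_total = sum(errors)
print(errors, e_total)
```

The factor $\frac{1}{2}$ is there so that it cancels the exponent 2 when differentiating, which keeps the derivative below simple.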

-

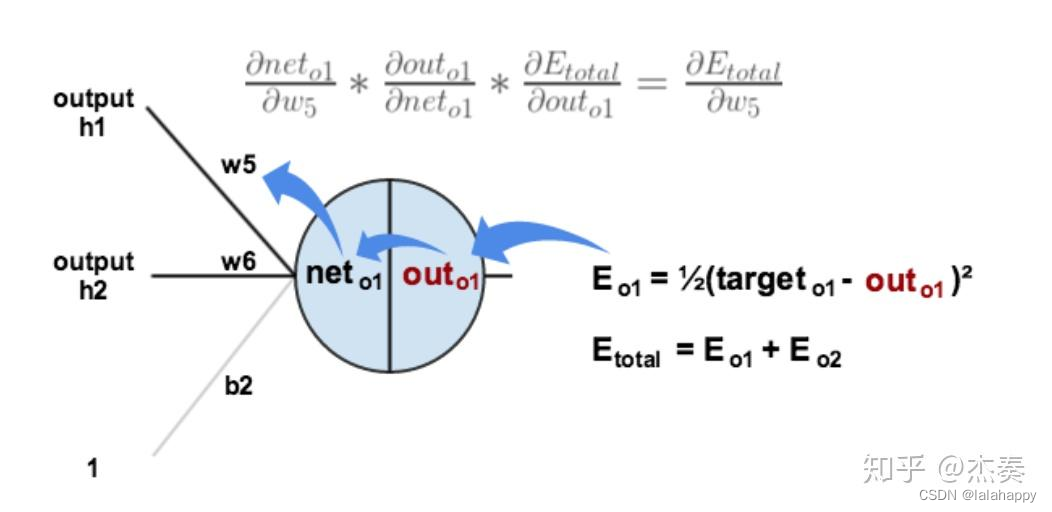

隐藏层 → \rightarrow → 输出层的权值更新:

Take the weight $w_5$ as an example. To find out how much $w_5$ contributes to the total error, take the partial derivative of the total error with respect to $w_5$ (chain rule):

$$\frac{\partial E_{total}}{\partial w_5} = \frac{\partial E_{total}}{\partial out_{o_1}} \cdot \frac{\partial out_{o_1}}{\partial net_{o_1}} \cdot \frac{\partial net_{o_1}}{\partial w_5}$$

Here, because

$$E_{total} = \frac{1}{2}(target_{o_1} - out_{o_1})^{2} + \frac{1}{2}(target_{o_2} - out_{o_2})^{2}$$

and the second term does not depend on $out_{o_1}$, we get

$$\frac{\partial E_{total}}{\partial out_{o_1}} = (target_{o_1} - out_{o_1}) \cdot (-1) = -(0.01 - 0.75136507) = 0.74136507 \quad \text{(error term)}$$

Because

$$out_{o_1} = \mathrm{sigmoid}(net_{o_1})$$

and the sigmoid derivative can be written as $\sigma'(x) = \sigma(x)(1 - \sigma(x))$, we get

$$\frac{\partial out_{o_1}}{\partial net_{o_1}} = out_{o_1}(1 - out_{o_1}) = 0.75136507 \times (1 - 0.75136507) = 0.186815602 \quad \text{(sigmoid activation)}$$
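The identity used in this step is the standard derivative of the sigmoid, which can be expressed through the sigmoid's own output (so the backward pass can reuse $out$ from the forward pass instead of recomputing exponentials):

$$\sigma(x) = \frac{1}{1 + e^{-x}}, \qquad \sigma'(x) = \frac{e^{-x}}{(1 + e^{-x})^{2}} = \frac{1}{1 + e^{-x}} \cdot \frac{e^{-x}}{1 + e^{-x}} = \sigma(x)\bigl(1 - \sigma(x)\bigr)$$

where the last step uses $1 - \sigma(x) = \dfrac{e^{-x}}{1 + e^{-x}}$.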

Because

$$net_{o_1} = w_5 out_{h_1} + w_6 out_{h_2} + b_2$$

we get

$$\frac{\partial net_{o_1}}{\partial w_5} = out_{h_1} = 0.593269992 \quad \text{(linear layer)}$$

Multiplying the three factors gives:

$$\frac{\partial E_{total}}{\partial w_5} = 0.74136507 \times 0.186815602 \times 0.593269992 = 0.082167041$$

Update $w_5$, where $\eta$ is the learning rate, set to 0.5 here:

$$w_5^{+} = w_5 - \eta \cdot \frac{\partial E_{total}}{\partial w_5} = 0.4 - 0.5 \times 0.082167041 = 0.35891648$$
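The three chain-rule factors and the update can be reproduced in a few lines. This sketch uses the values stated in the text; $w_5 = 0.40$ is an assumption, namely the initial value implied by $w_5^{+} = 0.35891648$ with $\eta = 0.5$.

```python
def sigmoid_derivative_from_output(out):
    # For out = sigmoid(net): d out / d net = out * (1 - out)
    return out * (1.0 - out)


target_o1 = 0.01
out_o1 = 0.75136507   # forward-pass output from the text
out_h1 = 0.593269992  # hidden activation from the text
w5 = 0.40             # ASSUMPTION: initial w5 implied by the update in the text
eta = 0.5             # learning rate used in the text

dE_dout_o1 = -(target_o1 - out_o1)                    # error term
dout_dnet_o1 = sigmoid_derivative_from_output(out_o1)  # sigmoid factor
dnet_dw5 = out_h1                                      # linear-layer factor
dE_dw5 = dE_dout_o1 * dout_dnet_o1 * dnet_dw5

w5_new = w5 - eta * dE_dw5  # gradient-descent step
print(dE_dw5, w5_new)
```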

-

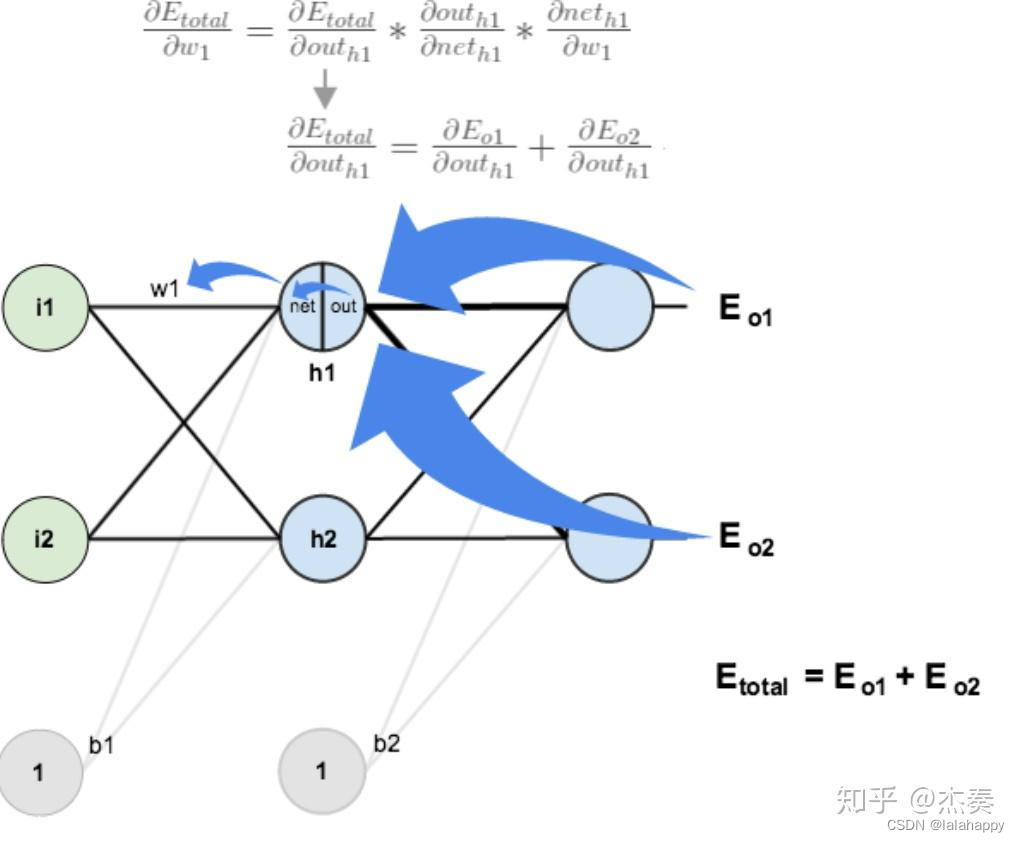

隐藏层 → \rightarrow → 输入层的权值更新:

$$\frac{\partial E_{total}}{\partial w_1} = \frac{\partial E_{total}}{\partial out_{h_1}} \cdot \frac{\partial out_{h_1}}{\partial net_{h_1}} \cdot \frac{\partial net_{h_1}}{\partial w_1}$$

Since $out_{h_1}$ feeds into both $o_1$ and $o_2$, its gradient collects a contribution from each output:

$$\frac{\partial E_{total}}{\partial out_{h_1}} = \frac{\partial E_{o_1}}{\partial out_{h_1}} + \frac{\partial E_{o_2}}{\partial out_{h_1}}$$

where

$$\frac{\partial E_{o_1}}{\partial out_{h_1}} = \frac{\partial E_{o_1}}{\partial net_{o_1}} \cdot \frac{\partial net_{o_1}}{\partial out_{h_1}}$$

and

$$\frac{\partial E_{o_1}}{\partial net_{o_1}} = \frac{\partial E_{o_1}}{\partial out_{o_1}} \cdot \frac{\partial out_{o_1}}{\partial net_{o_1}}$$

Therefore,

$$\frac{\partial E_{total}}{\partial w_1} = \left(\frac{\partial E_{o_1}}{\partial out_{h_1}} + \frac{\partial E_{o_2}}{\partial out_{h_1}}\right) \cdot \frac{\partial out_{h_1}}{\partial net_{h_1}} \cdot \frac{\partial net_{h_1}}{\partial w_1}$$

where the $o_1$ branch expands to

$$\frac{\partial E_{o_1}}{\partial out_{h_1}} \cdot \frac{\partial out_{h_1}}{\partial net_{h_1}} \cdot \frac{\partial net_{h_1}}{\partial w_1} = \frac{\partial E_{o_1}}{\partial out_{o_1}} \cdot \frac{\partial out_{o_1}}{\partial net_{o_1}} \cdot \frac{\partial net_{o_1}}{\partial out_{h_1}} \cdot \frac{\partial out_{h_1}}{\partial net_{h_1}} \cdot \frac{\partial net_{h_1}}{\partial w_1}$$

Similarly, the $o_2$ branch expands to

$$\frac{\partial E_{o_2}}{\partial out_{h_1}} \cdot \frac{\partial out_{h_1}}{\partial net_{h_1}} \cdot \frac{\partial net_{h_1}}{\partial w_1} = \frac{\partial E_{o_2}}{\partial out_{o_2}} \cdot \frac{\partial out_{o_2}}{\partial net_{o_2}} \cdot \frac{\partial net_{o_2}}{\partial out_{h_1}} \cdot \frac{\partial out_{h_1}}{\partial net_{h_1}} \cdot \frac{\partial net_{h_1}}{\partial w_1}$$

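These formulas are based on

$$net_{o_1} = w_5 out_{h_1} + w_6 out_{h_2} + b_2, \qquad net_{o_2} = w_7 out_{h_1} + w_8 out_{h_2} + b_2$$

so that $\frac{\partial net_{o_1}}{\partial out_{h_1}} = w_5$, $\frac{\partial net_{o_2}}{\partial out_{h_1}} = w_7$, and, from $net_{h_1} = w_1 i_1 + w_2 i_2 + b_1$, $\frac{\partial net_{h_1}}{\partial w_1} = i_1$.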
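A minimal numeric sketch of the $w_1$ derivation, reusing the forward-pass values from the text. The text does not state $w_7$, so $w_7 = 0.50$ is an assumption (taken from the classic version of this worked example, and consistent with the quoted outputs); $w_5 = 0.40$ is the value implied by the $w_5$ update.

```python
def sig_prime(out):
    # Sigmoid derivative expressed through its output: out * (1 - out)
    return out * (1.0 - out)


# Values from the text
i1 = 0.05
target_o1, target_o2 = 0.01, 0.99
out_h1 = 0.593269992
out_o1, out_o2 = 0.75136507, 0.772928465
w1 = 0.15
eta = 0.5
# ASSUMPTION: initial w5 and w7 (not written out in the text)
w5, w7 = 0.40, 0.50

# dE_o / dnet_o for each output neuron
delta_o1 = -(target_o1 - out_o1) * sig_prime(out_o1)
delta_o2 = -(target_o2 - out_o2) * sig_prime(out_o2)

# dE_total / dout_h1: sum of the paths through o1 (via w5) and o2 (via w7)
dE_dout_h1 = delta_o1 * w5 + delta_o2 * w7

# Continue the chain through the hidden sigmoid and dnet_h1/dw1 = i1
dE_dw1 = dE_dout_h1 * sig_prime(out_h1) * i1
w1_new = w1 - eta * dE_dw1
print(dE_dw1, w1_new)
```

The gradient for $w_1$ is much smaller than for $w_5$ partly because it is scaled by the small input $i_1 = 0.05$ and by a second sigmoid derivative.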

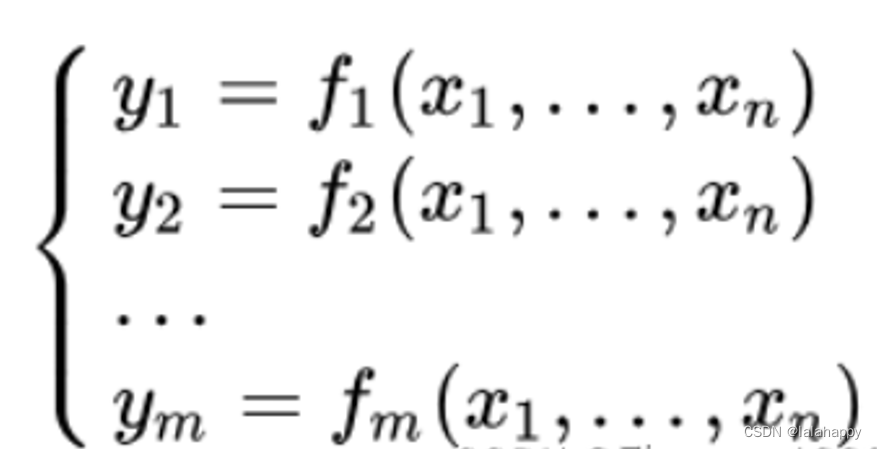

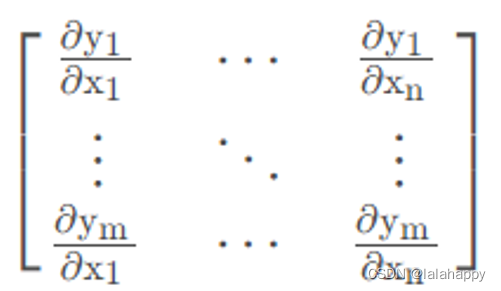

Jacobian matrix

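The Jacobian matrix is the matrix formed by arranging first-order partial derivatives in a specific way; when it is square, its determinant is called the Jacobian determinant.

Consider the function

$$\mathbf{f}: \mathbb{R}^{n} \to \mathbb{R}^{m}, \qquad \mathbf{x} = (x_1, \ldots, x_n) \mapsto \bigl(f_1(\mathbf{x}), \ldots, f_m(\mathbf{x})\bigr)$$

which maps $n$-dimensional Euclidean space to $m$-dimensional Euclidean space.

This function consists of $m$ real-valued functions. Their partial derivatives (if they exist) can be arranged into a matrix with $m$ rows and $n$ columns, and this is the Jacobian matrix:

$$\mathbf{J} = \begin{bmatrix} \frac{\partial f_1}{\partial x_1} & \cdots & \frac{\partial f_1}{\partial x_n} \\ \vdots & \ddots & \vdots \\ \frac{\partial f_m}{\partial x_1} & \cdots & \frac{\partial f_m}{\partial x_n} \end{bmatrix}$$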
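The definition translates directly into a numerical approximation: column $j$ of $\mathbf{J}$ is the change in all outputs when only $x_j$ is nudged. A minimal sketch, assuming a hypothetical map $\mathbb{R}^2 \to \mathbb{R}^3$, $f(x, y) = (xy,\ x + y,\ \sin x)$, chosen so the result has $m = 3$ rows and $n = 2$ columns; its analytic Jacobian is $\begin{bmatrix} y & x \\ 1 & 1 \\ \cos x & 0 \end{bmatrix}$.

```python
import math


def jacobian(f, x, h=1e-6):
    """Forward-difference Jacobian of f: R^n -> R^m at x, as m rows of n entries."""
    fx = f(x)
    m, n = len(fx), len(x)
    J = [[0.0] * n for _ in range(m)]
    for j in range(n):
        x_step = list(x)
        x_step[j] += h  # nudge only the j-th input
        f_step = f(x_step)
        for i in range(m):
            J[i][j] = (f_step[i] - fx[i]) / h  # d f_i / d x_j
    return J


def f(v):
    # Hypothetical example map R^2 -> R^3
    x, y = v
    return [x * y, x + y, math.sin(x)]


J = jacobian(f, [1.0, 2.0])
print(J)
```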

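In the formulas below, if we look at only two steps, from the loss ($E$) to the activation output ($out$), we reach the following conclusion:

gradient of a variable = gradient of the upstream variable × the Jacobian matrix of the current step (here, $\partial out / \partial net$)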

$$\frac{\partial E_{total}}{\partial w_5} = \frac{\partial E_{total}}{\partial out_{o_1}} \cdot \frac{\partial out_{o_1}}{\partial net_{o_1}} \cdot \frac{\partial net_{o_1}}{\partial w_5}$$

$$\frac{\partial E_{total}}{\partial w_6} = \frac{\partial E_{total}}{\partial out_{o_1}} \cdot \frac{\partial out_{o_1}}{\partial net_{o_1}} \cdot \frac{\partial net_{o_1}}{\partial w_6}$$

$$\frac{\partial E_{total}}{\partial w_7} = \frac{\partial E_{total}}{\partial out_{o_2}} \cdot \frac{\partial out_{o_2}}{\partial net_{o_2}} \cdot \frac{\partial net_{o_2}}{\partial w_7}$$

$$\frac{\partial E_{total}}{\partial w_8} = \frac{\partial E_{total}}{\partial out_{o_2}} \cdot \frac{\partial out_{o_2}}{\partial net_{o_2}} \cdot \frac{\partial net_{o_2}}{\partial w_8}$$

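Collecting the first two factors of these formulas into a row vector gives the following. The Jacobian of the sigmoid layer is diagonal because $out_{o_1}$ depends only on $net_{o_1}$ and $out_{o_2}$ depends only on $net_{o_2}$: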

$$\begin{bmatrix} \frac{\partial E_{total}}{\partial out_{o_1}} \cdot \frac{\partial out_{o_1}}{\partial net_{o_1}} & \frac{\partial E_{total}}{\partial out_{o_2}} \cdot \frac{\partial out_{o_2}}{\partial net_{o_2}} \end{bmatrix} = \begin{bmatrix} \frac{\partial E_{total}}{\partial out_{o_1}} & \frac{\partial E_{total}}{\partial out_{o_2}} \end{bmatrix} \begin{bmatrix} \frac{\partial out_{o_1}}{\partial net_{o_1}} & 0 \\ 0 & \frac{\partial out_{o_2}}{\partial net_{o_2}} \end{bmatrix}$$
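The matrix identity can be checked numerically. This sketch plugs in the forward-pass values from the text, builds the diagonal Jacobian of the element-wise sigmoid, multiplies the upstream gradient by it, and then uses the result to form the gradients of $w_5$ to $w_8$. The helper `vec_mat` is a hypothetical name used only for illustration.

```python
def vec_mat(v, M):
    # Row vector v (length m) times matrix M (m x n) -> row vector of length n
    return [sum(v[i] * M[i][j] for i in range(len(v))) for j in range(len(M[0]))]


# Values from the text
out_o1, out_o2 = 0.75136507, 0.772928465
target_o1, target_o2 = 0.01, 0.99
out_h1, out_h2 = 0.593269992, 0.596884378

# Upstream gradient: dE_total / d[out_o1, out_o2]
upstream = [-(target_o1 - out_o1), -(target_o2 - out_o2)]

# Jacobian of the element-wise sigmoid: diagonal entries out * (1 - out)
J_sigmoid = [[out_o1 * (1 - out_o1), 0.0],
             [0.0, out_o2 * (1 - out_o2)]]

# Gradient w.r.t. [net_o1, net_o2] = upstream gradient x local Jacobian
delta = vec_mat(upstream, J_sigmoid)

# Because the Jacobian is diagonal, this equals an element-wise product
elementwise = [u * J_sigmoid[k][k] for k, u in enumerate(upstream)]

# Last chain-rule factor: dnet_o1/dw5 = out_h1, dnet_o1/dw6 = out_h2,
# dnet_o2/dw7 = out_h1, dnet_o2/dw8 = out_h2
grads = {
    "w5": delta[0] * out_h1,
    "w6": delta[0] * out_h2,
    "w7": delta[1] * out_h1,
    "w8": delta[1] * out_h2,
}
print(delta, grads)
```

Since the Jacobian of an element-wise activation is diagonal, in practice the element-wise product is usually computed directly rather than materializing the full matrix; the sketch computes both only to show that they agree.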

