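Kubernetes: Container Orchestration

Introduction to Kubernetes

1. A service mesh is an infrastructure layer that handles service-to-service communication.

2. Kubernetes is a container cluster management system. It automates deployment, scaling, and maintenance of container clusters.

3. Advantages of Kubernetes

1. Deploy applications quickly

2. Scale applications quickly

3. Roll out new application features seamlessly

4. Save resources by making better use of hardware

4. Characteristics of Kubernetes

1. Portable: supports public cloud, private cloud, hybrid cloud, and multi-cloud (several public clouds)

2. Extensible: modular, pluggable, hookable, composable

3. Automated: automatic deployment, restart, replication, and scaling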

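Preparing to Install Kubernetes

1. Set up a common base environment

1. Kubernetes requirements for the virtual machines

1. Turn off swap (it hurts performance, and by default the kubelet will not start while swap is on): sudo swapoff -a

2. Keep swap from being enabled at boot: comment out the swap entry in /etc/fstab

3. Disable the firewall: ufw disable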
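
The two swap steps above can be scripted. The following is only a sketch and not part of the original walkthrough: `comment_swap_lines` is a hypothetical helper name, and the `sed` pattern assumes fstab entries are whitespace-separated with `swap` as the filesystem type. It previews the change on sample text, so it can be tried without root.

```sh
#!/bin/sh
# Hypothetical helper: reads fstab-style text on stdin and comments out
# every active (not already commented) line that contains a "swap" field.
comment_swap_lines() {
  sed -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/'
}

# Preview on sample input (no root needed):
printf '%s\n' \
  'UUID=1234 / ext4 defaults 0 1' \
  '/swapfile none swap sw 0 0' | comment_swap_lines

# To apply on a real node (assumption: run as root, keep a backup first):
#   swapoff -a
#   cp /etc/fstab /etc/fstab.bak
#   comment_swap_lines < /etc/fstab.bak > /etc/fstab
```

Only the swap line gains a leading `#`; other entries pass through unchanged.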

2. Install Docker and set the DNS server to 114.114.114.114

3. Install kubeadm, kubelet, and kubectl

Install system tools

apt-get update && apt-get install -y apt-transport-https

Add the GPG key

curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -

Add the apt source

cat << EOF >/etc/apt/sources.list.d/kubernetes.list
deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main
EOF

Install the packages

apt-get update && apt-get install -y kubelet kubeadm kubectl

1. kubeadm is the tool that installs (bootstraps) the Kubernetes cluster

2. kubelet is the agent on every node that starts pods and their containers

3. kubectl is the command-line client

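4. Synchronize the time zone and clock

dpkg-reconfigure tzdata

Asia -> Shanghai

apt-get install ntpdate

Sync the system time with network time (cn.pool.ntp.org is a public NTP pool located in China)

ntpdate cn.pool.ntp.org

Write the system time to the hardware clock

hwclock --systohc

5. Edit cloud.cfg (so the hostname is not reset on reboot)

vi /etc/cloud/cloud.cfg

This setting defaults to false; change it to true

preserve_hostname: true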

2. Use the common base image as a template to create the Kubernetes nodes

1. Change the IP address

2. Change the hostname

hostnamectl set-hostname kubernetes-master

3. Reboot

Installing the Kubernetes Cluster

1. Configure kubeadm

1. cd /usr/local/kubernetes/cluster

2. Generate the default configuration file

kubeadm config print init-defaults > kubeadm.yml

3. Edit kubeadm.yml (full content below)

4. List the required images

kubeadm config images list --config kubeadm.yml

Pull the images

kubeadm config images pull --config kubeadm.yml

# Edit the configuration so that it reads as follows
apiVersion: kubeadm.k8s.io/v1beta1
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  # Change to the master node's IP
  advertiseAddress: 192.168.79.100
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: kubernetes-master
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta1
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: ""
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
# Google's registry is not reachable from mainland China; use the Aliyun mirror
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
# Set the version to install
kubernetesVersion: v1.16.2
networking:
  dnsDomain: cluster.local
  # Pod subnet used by Calico; it must not overlap with the network the Kubernetes hosts are on
  podSubnet: "10.244.0.0/16"
  serviceSubnet: 10.96.0.0/12
scheduler: {}
---
# Enable IPVS mode
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
featureGates:
  SupportIPVSProxyMode: true
mode: ipvs
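
The requirement that podSubnet must not overlap with the hosts' network can be checked before running kubeadm. The sketch below is an illustration, not something kubeadm provides: `ip_to_int` and `cidr_overlap` are hypothetical helper names, and 192.168.79.0/24 is an assumed node network inferred from the master IP used above.

```sh
#!/bin/sh
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split the address into its four octets
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Succeeds (exit 0) when two CIDR blocks overlap. Two blocks overlap exactly
# when they agree on the first min(len1, len2) bits.
cidr_overlap() {
  ip1=${1%/*}; len1=${1#*/}
  ip2=${2%/*}; len2=${2#*/}
  if [ "$len1" -lt "$len2" ]; then min=$len1; else min=$len2; fi
  mask=$(( (0xFFFFFFFF << (32 - $min)) & 0xFFFFFFFF ))
  n1=$(ip_to_int "$ip1")
  n2=$(ip_to_int "$ip2")
  [ $(( $n1 & $mask )) -eq $(( $n2 & $mask )) ]
}

# podSubnet from kubeadm.yml against the assumed node network
if cidr_overlap 10.244.0.0/16 192.168.79.0/24; then
  echo "overlap"
else
  echo "no overlap"
fi
```

The same check reports an overlap for 192.168.0.0/16 against 192.168.79.0/24, which is one reason to pick a pod subnet such as 10.244.0.0/16 when the nodes sit in a 192.168.x.x network.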

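registry.aliyuncs.com/google_containers/kube-apiserver:v1.16.2: the API server, the cluster's front end that receives every request

registry.aliyuncs.com/google_containers/kube-controller-manager:v1.16.2: runs the controllers that keep the cluster in its desired state (for example, recreating pods that stop)

registry.aliyuncs.com/google_containers/kube-scheduler:v1.16.2: assigns pods to nodes

registry.aliyuncs.com/google_containers/kube-proxy:v1.16.2: maintains the network rules on each node so that traffic sent to a Service reaches its pods

registry.aliyuncs.com/google_containers/pause:3.1: the infrastructure container that holds each pod's network namespace

registry.aliyuncs.com/google_containers/etcd:3.3.15-0: the key-value store that holds all cluster state

registry.aliyuncs.com/google_containers/coredns:1.6.2: cluster DNS, resolves Service names inside the cluster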

3. Initialize the master node

kubeadm init --config=kubeadm.yml | tee kubeadm-init.log

4. Configure kubectl by running the commands printed in the log

1. mkdir -p $HOME/.kube

2. cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

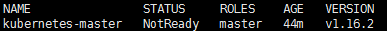

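5. Run kubectl get node to check that initialization succeeded

If it failed, run kubeadm reset, delete the log file, and start over

6. On each worker node, join the cluster with the last command printed in the log

kubeadm join 192.168.79.100:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:ac903a0260f605a9437f190b561d5df93f509c9d2a58396c3b3a74cae20edb09

On the master, run kubectl get nodes to list all nodes

If you no longer have the join command, its two values can be recovered as follows

token

It is in the log written when the master was installed

kubeadm token list prints the existing tokens

If the token has expired, kubeadm token create creates a new one

discovery-token-ca-cert-hash

The sha256 value is in the log written when the master was installed

It can also be computed with: openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

7. If a node was taken out of the cluster with kubeadm reset, remove it on the master with kubectl delete nodes <NAME>

8. Check pod status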

kubectl get pod -n kube-system -o wide
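
The token and hash from step 6 can be put back together into a join command. This is a minimal sketch: `build_join_command` is a hypothetical helper, and the `<hash>` argument in the example call is a placeholder rather than a real value. The commented lines show how the parts might be gathered on a real master.

```sh
#!/bin/sh
# Hypothetical helper: assemble a "kubeadm join" command from its three parts.
#   $1 = API server endpoint (host:port)
#   $2 = bootstrap token
#   $3 = hex SHA-256 of the CA public key (without the "sha256:" prefix)
build_join_command() {
  printf 'kubeadm join %s --token %s --discovery-token-ca-cert-hash sha256:%s\n' "$1" "$2" "$3"
}

# Example with the endpoint and token used in this walkthrough.
build_join_command 192.168.79.100:6443 abcdef.0123456789abcdef '<hash>'

# On a real master (needs kubeadm and openssl):
#   token=$(kubeadm token create)
#   hash=$(openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt \
#            | openssl rsa -pubin -outform der 2>/dev/null \
#            | openssl dgst -sha256 -hex | sed 's/^.* //')
#   build_join_command 192.168.79.100:6443 "$token" "$hash"
# kubeadm can also print the complete command itself:
#   kubeadm token create --print-join-command
```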


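CNI

1. CNI (Container Network Interface) is a standard, general-purpose interface for container networking.

2. Services run in containers on different Docker hosts, and to talk to each other they need to share a network. Docker Swarm's implementation is not convenient for this. Container network solutions include flannel, calico, and weave; calico is used here.

Installing calico

# Run on the master node only (official docs: https://docs.projectcalico.org/v3.10/getting-started/kubernetes/)

kubectl apply -f https://docs.projectcalico.org/v3.10/manifests/calico.yaml

# Check whether the installation has finished

watch kubectl get pods --all-namespaces

If a pod shows ImagePullBackOff, the pull is retried automatically. If it still fails:

1. On the master, delete the node: kubectl delete nodes <NAME>

2. On the worker, reset the configuration: kubeadm reset

3. On the worker, reboot: reboot

4. On the worker, rejoin the cluster: kubeadm join

The installation has succeeded when every pod is Running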

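Running Containers

kubectl run

1. Start nginx (this form creates a Deployment on the v1.16 used here; from kubectl 1.18 on, kubectl run creates only a single pod)

kubectl run nginx --image=nginx --replicas=2 --port=80

2. List pods

kubectl get pods

3. List deployments

kubectl get deployment

4. Publish the service (expose the port)

kubectl expose deployment nginx --port=80 --type=LoadBalancer

5. List published services

kubectl get services

6. nginx is now reachable at <node IP>:30369, where 30369 is the node port shown by kubectl get services. If nginx is deleted or stopped on a node, the master restarts it automatically; deleting the deployment removes its pods

7. Stop the service

kubectl delete deployment nginx

Once the deployment is deleted its Service serves no purpose and should be deleted too (Services are usually not exposed externally and carry traffic inside the cluster)

kubectl delete service nginx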
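
The node port mentioned in step 6 can be read out of the kubectl get services output instead of by eye. The sketch below uses a hypothetical helper, `node_port_of`, and runs on illustrative sample text; the cluster IPs and ages in the sample are made up, only the 80:30369 mapping comes from the walkthrough.

```sh
#!/bin/sh
# Hypothetical helper: read `kubectl get services` output on stdin and print
# the node port of the service named in $1. The PORT(S) column is the fifth
# field and looks like "80:30369/TCP" (service port : node port / protocol).
node_port_of() {
  awk -v svc="$1" '$1 == svc { split($5, part, "[:/]"); print part[2] }'
}

# Illustrative sample output:
sample='NAME         TYPE           CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE
kubernetes   ClusterIP      10.96.0.1      <none>        443/TCP        1d
nginx        LoadBalancer   10.100.12.34   <pending>     80:30369/TCP   5m'

printf '%s\n' "$sample" | node_port_of nginx

# Against a live cluster:
#   kubectl get services | node_port_of nginx
#   kubectl get service nginx -o jsonpath='{.spec.ports[0].nodePort}'
```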

Running Containers from Resource Manifests

1. kubectl run is as cumbersome as docker run; kubectl also accepts manifest files, much as docker-compose does for Docker

2. kubectl create -f xxx.yml

kubectl apply -f xxx.yml

nginx.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-app
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 2
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx
          ports:
            - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-http
spec:
  ports:
    - port: 80
      targetPort: 80
  type: LoadBalancer
  selector:
    # Must match the pod labels in the Deployment above
    app: nginx

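Ingress: a Single Entry Point

This setup uses the Nginx Ingress Controller

1. Install the Nginx Ingress Controller

1. Download the manifest

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/master/deploy/static/mandatory.yaml

2. Edit the manifest: below serviceAccountName, add the line hostNetwork: true to enable host network mode, which exposes the Nginx service on port 80

3. Deploy the Ingress controller

kubectl create -f mandatory.yaml

4. Find the IP it runs on

kubectl get pods -n ingress-nginx -o wide

Example

1. Deploy 2 Tomcat replicas

2. Configure an Ingress that proxies requests to Tomcat

tomcat.yml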

# API version
apiVersion: apps/v1
# Kind, e.g. Pod/ReplicationController/Deployment/Service/Ingress
kind: Deployment
metadata:
  name: tomcat-app
spec:
  selector:
    matchLabels:
      app: tomcat
  replicas: 2
  template:
    metadata:
      labels:
        app: tomcat
    spec:
      containers:
        - name: tomcat
          image: tomcat:8.5.43
          ports:
            - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: tomcat-http
spec:
  ports:
    - port: 8080
      targetPort: 8080
  # ClusterIP NodePort LoadBalancer
  type: ClusterIP
  selector:
    app: tomcat

ingress.yml

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: nginx-web
  annotations:
    # Which Ingress Controller handles this resource
    kubernetes.io/ingress.class: "nginx"
    # Allow regular expressions in the paths of the rules
    nginx.ingress.kubernetes.io/use-regex: "true"
    # Connect timeout in seconds (default 5)
    nginx.ingress.kubernetes.io/proxy-connect-timeout: "600"
    # Timeout for sending the request to the backend, in seconds (default 60)
    nginx.ingress.kubernetes.io/proxy-send-timeout: "600"
    # Timeout for reading the backend's response, in seconds (default 60)
    nginx.ingress.kubernetes.io/proxy-read-timeout: "600"
    # Maximum size of a client request body, such as a file upload (default 1m)
    nginx.ingress.kubernetes.io/proxy-body-size: "10m"
    # URL rewrite
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  # Routing rules
  rules:
    # Host name; must be a domain name, change it to your own
    - host: k8s.test.com
      http:
        paths:
          - path:
            backend:
              # Name of the backend Service; matches the Tomcat Service deployed above
              serviceName: tomcat-http
              # Port of the backend Service; matches the Tomcat Service deployed above
              servicePort: 8080

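Map the host from ingress.yml (for example in a hosts file or in DNS) to the IP reported by kubectl get pods -n ingress-nginx -o wide.

Requests to that host are then proxied to the Service and port given under backend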
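
One way to create that host mapping on a test machine is a hosts-file entry. The sketch below is an assumption-laden illustration: `add_hosts_entry` is a hypothetical helper, 192.168.79.110 is an assumed node IP (use the one kubectl reports), and the demonstration writes to a scratch file rather than the real /etc/hosts.

```sh
#!/bin/sh
# Hypothetical helper: append "IP HOST" to a hosts file unless HOST is
# already mentioned there. $1 = hosts file, $2 = IP, $3 = host name.
add_hosts_entry() {
  if ! grep -qwF -- "$3" "$1" 2>/dev/null; then
    printf '%s %s\n' "$2" "$3" >> "$1"
  fi
}

# Demonstrate on a scratch file instead of the real /etc/hosts.
tmp=$(mktemp)
add_hosts_entry "$tmp" 192.168.79.110 k8s.test.com
add_hosts_entry "$tmp" 192.168.79.110 k8s.test.com   # second call adds nothing
cat "$tmp"
rm -f "$tmp"

# Without touching any hosts file, curl can send the Host header directly:
#   curl -H 'Host: k8s.test.com' http://192.168.79.110/
```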

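Kubernetes Data Persistence

1. In Kubernetes, a volume's lifetime is tied to its pod: when the pod is deleted, the volume is deleted with it. Kubernetes volumes extend the Docker volume concept and support many storage systems, including local storage (EmptyDir, HostPath) and network storage (NFS, GlusterFS, PV/PVC).

2. NFS is built on RPC and lets a system share directories and files with others over the network. It is a very stable, portable network file system that scales and performs well, and it meets the quality bar for enterprise use.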

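Using NFS:

1. Use a server with 2 GB of memory; its disk can be extended with LVM.

2. Create the directory /usr/local/kubernetes/volumes

3. chmod a+rw /usr/local/kubernetes/volumes to grant read and write permission

4. Install the NFS server

apt-get install -y nfs-kernel-server

5. Configure the exported directory: open the file with vi /etc/exports and append one line

/usr/local/kubernetes/volumes *(rw,sync,no_subtree_check,no_root_squash)

/usr/local/kubernetes/volumes: the directory exported to clients

*: any IP may access it

rw: read and write access

sync: changes are written to disk before the server replies

no_subtree_check: if the exported directory is a subdirectory, the NFS server does not check permissions on its parent directories

no_root_squash: root on a client keeps root privileges on the exported directory

6. Restart the service to apply the configuration

/etc/init.d/nfs-kernel-server restart

7. Install the client on each server that needs persistent storage

apt-get install -y nfs-common

8. Create the mount directory on the NFS client

mkdir -p /usr/local/kubernetes/volumes-mount

9. Mount the NFS server's directory on the NFS client's directory

mount 192.168.79.115:/usr/local/kubernetes/volumes /usr/local/kubernetes/volumes-mount

10. To unmount, use

umount /usr/local/kubernetes/volumes-mount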
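
The mount in step 9 does not survive a reboot. One common way to make it permanent is an /etc/fstab entry; the sketch below only prints such a line. `nfs_fstab_line` is a hypothetical helper, and the `defaults` mount options are an assumption to adjust as needed.

```sh
#!/bin/sh
# Hypothetical helper: print an /etc/fstab line for an NFS mount.
#   $1 = NFS server, $2 = exported directory, $3 = local mount point
nfs_fstab_line() {
  printf '%s:%s %s nfs defaults 0 0\n' "$1" "$2" "$3"
}

nfs_fstab_line 192.168.79.115 /usr/local/kubernetes/volumes /usr/local/kubernetes/volumes-mount

# On a real client (as root) the line could be appended and checked with:
#   nfs_fstab_line 192.168.79.115 /usr/local/kubernetes/volumes \
#     /usr/local/kubernetes/volumes-mount >> /etc/fstab
#   showmount -e 192.168.79.115     # list what the server exports
#   mount -a && df -h /usr/local/kubernetes/volumes-mount
```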

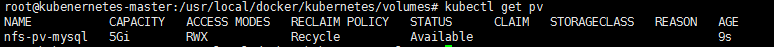

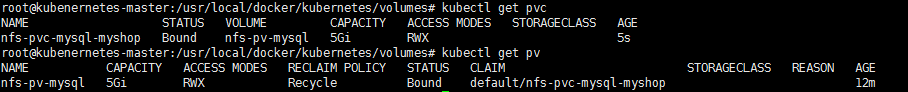

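PV

1. A Persistent Volume (PV) allocates space, here from NFS, for storage

2. The PV and the PVC below are both created on the master

3. mkdir /usr/local/kubernetes/volumes

nfs-pv-mysql.yml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv-mysql
spec:
  # Capacity
  capacity:
    storage: 5Gi
  # Access modes
  accessModes:
    # The volume can be mounted read-write by many nodes at once
    - ReadWriteMany
  # Reclaim policy; Recycle performs a basic scrub (rm -rf /thevolume/*)
  persistentVolumeReclaimPolicy: Recycle
  nfs:
    # Path exported by the NFS server
    path: "/usr/local/kubernetes/volumes"
    # Address of the NFS server
    server: 192.168.79.115
    readOnly: false

1. AccessModes

ReadWriteOnce: the volume can be mounted read-write by a single node

ReadOnlyMany: the volume can be mounted read-only by many nodes

ReadWriteMany: the volume can be mounted read-write by many nodes at once

2. Reclaim policy (persistentVolumeReclaimPolicy)

Retain: the volume is kept and must be reclaimed manually

Recycle: basic scrub (rm -rf /thevolume/*)

Delete: the associated storage asset, such as an AWS EBS, GCE PD, or OpenStack Cinder volume, is deleted as well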

PVC

1. Create nfs-pvc-mysql-myshop.yml in the volumes directory

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-pvc-mysql-myshop
spec:
  accessModes:
    # Must use the same access mode as the PV
    - ReadWriteMany
  # Request only as much storage as needed
  resources:
    requests:
      storage: 1Gi

kubectl create -f nfs-pvc-mysql-myshop.yml

Deploying MySQL 8

1. Install the NFS client on every node

apt-get install -y nfs-common

2. On the master, create mysql.yml under /usr/local/kubernetes/service

apiVersion: apps/v1
# Kind, e.g. Pod/ReplicationController/Deployment/Service/Ingress
kind: Deployment
metadata:
  name: mysql-myshop
spec:
  selector:
    matchLabels:
      app: mysql-myshop
  replicas: 1
  template:
    metadata:
      labels:
        app: mysql-myshop
    spec:
      containers:
        - name: mysql-myshop
          image: mysql:8.0.15
          # Pull the image only if it is not already present on the node
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 3306
          # Same as environment in a Docker Compose file
          env:
            - name: MYSQL_ROOT_PASSWORD
              value: "123456"
          # Mount points inside the container
          volumeMounts:
            - name: nfs-vol-myshop
              mountPath: /var/lib/mysql
      volumes:
        # Volume backed by the PVC
        - name: nfs-vol-myshop
          persistentVolumeClaim:
            claimName: nfs-pvc-mysql-myshop
---
apiVersion: v1
kind: Service
metadata:
  name: mysql-myshop
spec:
  ports:
    - port: 3306
      targetPort: 3306
  # ClusterIP NodePort LoadBalancer
  type: LoadBalancer
  selector:
    app: mysql-myshop

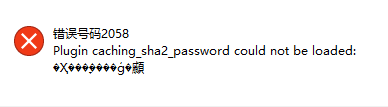

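3. After starting it with kubectl create -f mysql.yml, run kubectl get pods -o wide to see which node the pod is on and kubectl get services to find the port. Connecting at this point fails with an error; the fix is to supply the MySQL settings through a ConfigMap

ConfigMap

1. A ConfigMap is a Kubernetes resource object for storing configuration files, similar in role to command in docker-compose

2. Edit the mysql.yml above and add the configuration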

apiVersion: v1
kind: ConfigMap
metadata:
  name: mysql-myshop-config
data:
  # Key-value data
  mysqld.cnf: |
    [client]
    port=3306
    [mysql]
    no-auto-rehash
    [mysqld]
    skip-host-cache
    skip-name-resolve
    default-authentication-plugin=mysql_native_password
    character-set-server=utf8mb4
    collation-server=utf8mb4_general_ci
    explicit_defaults_for_timestamp=true
    lower_case_table_names=1
---
apiVersion: apps/v1
# Kind, e.g. Pod/ReplicationController/Deployment/Service/Ingress
kind: Deployment
metadata:
  name: mysql-myshop
spec:
  selector:
    matchLabels:
      app: mysql-myshop
  replicas: 1
  template:
    metadata:
      labels:
        app: mysql-myshop
    spec:
      containers:
        - name: mysql-myshop
          image: mysql:8.0.15
          # Pull the image only if it is not already present on the node
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 3306
          # Same as environment in a Docker Compose file
          env:
            - name: MYSQL_ROOT_PASSWORD
              value: "123456"
          # Mount points inside the container
          volumeMounts:
            # Mount the MySQL configuration directory as a volume
            - name: cm-vol-myshop
              mountPath: /etc/mysql/conf.d
            - name: nfs-vol-myshop
              mountPath: /var/lib/mysql
      volumes:
        # Expose the ConfigMap content as files in a volume
        - name: cm-vol-myshop
          configMap:
            name: mysql-myshop-config
            items:
              # Key in the ConfigMap
              - key: mysqld.cnf
                # The value of that key is written to a file named mysqld.cnf
                path: mysqld.cnf
        # Volume backed by the PVC
        - name: nfs-vol-myshop
          persistentVolumeClaim:
            claimName: nfs-pvc-mysql-myshop
---
apiVersion: v1
kind: Service
metadata:
  name: mysql-myshop
spec:
  ports:
    - port: 3306
      targetPort: 3306
  # ClusterIP NodePort LoadBalancer
  type: LoadBalancer
  selector:
    app: mysql-myshop

Kubernetes Dashboard

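1. Download the manifest. The official recommended manifest does not expose a port and can be used as-is in production; for testing, a port is opened as described in step 2

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-beta5/aio/deploy/recommended.yaml

If the download fails, copy the content below instead

2. Edit recommended.yaml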

# Copyright 2017 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

apiVersion: v1
kind: Namespace
metadata:
  name: kubernetes-dashboard
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  # Expose the service on a node port
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      # Fixed node port
      nodePort: 30001
  selector:
    k8s-app: kubernetes-dashboard
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kubernetes-dashboard
type: Opaque
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-csrf
  namespace: kubernetes-dashboard
type: Opaque
data:
  csrf: ""
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-key-holder
  namespace: kubernetes-dashboard
type: Opaque
---
kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-settings
  namespace: kubernetes-dashboard
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
rules:
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]
    verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["kubernetes-dashboard-settings"]
    verbs: ["get", "update"]
  # Allow Dashboard to get metrics.
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["heapster", "dashboard-metrics-scraper"]
    verbs: ["proxy"]
  - apiGroups: [""]
    resources: ["services/proxy"]
    resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]
    verbs: ["get"]
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
rules:
  # Allow Metrics Scraper to get metrics from the Metrics server
  - apiGroups: ["metrics.k8s.io"]
    resources: ["pods", "nodes"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
        - name: kubernetes-dashboard
          image: kubernetesui/dashboard:v2.0.0-beta5
          imagePullPolicy: Always
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kubernetes-dashboard
            # Uncomment the following line to manually specify Kubernetes API server Host
            # If not specified, Dashboard will attempt to auto discover the API server and connect
            # to it. Uncomment only if the default does not work.
            # - --apiserver-host=http://my-address:port
          volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
              # Create on-disk volume to store exec logs
            - mountPath: /tmp
              name: tmp-volume
          livenessProbe:
            httpGet:
              scheme: HTTPS
              path: /
              port: 8443
            initialDelaySeconds: 30
            timeoutSeconds: 30
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      volumes:
        - name: kubernetes-dashboard-certs
          secret:
            secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "beta.kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 8000
      targetPort: 8000
  selector:
    k8s-app: dashboard-metrics-scraper
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: dashboard-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
      annotations:
        seccomp.security.alpha.kubernetes.io/pod: 'runtime/default'
    spec:
      containers:
        - name: dashboard-metrics-scraper
          image: kubernetesui/metrics-scraper:v1.0.1
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8000
            initialDelaySeconds: 30
            timeoutSeconds: 30
          volumeMounts:
            - mountPath: /tmp
              name: tmp-volume
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "beta.kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      volumes:
        - name: tmp-volume
          emptyDir: {}

3. kubectl apply -f recommended.yaml

4. Run kubectl get pods -n kubernetes-dashboard -o wide to see which node it is on

5. Open https://ip:30001 in a browser (Firefox)

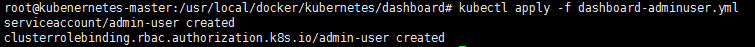

6. Create a user, bind it to the cluster-admin role, and use its token to log in to the dashboard

dashboard-adminuser.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  # Must match the namespace in the binding's subjects below
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: admin-user
    namespace: kube-system

7. Get the token

kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')

8. Log in
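
The describe output in step 7 contains several fields besides the token. As a small convenience sketch, the hypothetical helper `extract_token` below prints only the token value; the sample input is illustrative and its token is a made-up placeholder.

```sh
#!/bin/sh
# Hypothetical helper: read `kubectl describe secret` output on stdin and
# print only the value of the "token:" field.
extract_token() {
  awk '$1 == "token:" { print $2 }'
}

# Illustrative input:
printf '%s\n' \
  'Name:         admin-user-token-xxxxx' \
  'Type:  kubernetes.io/service-account-token' \
  'token:      eyJhbGciOi.PLACEHOLDER' | extract_token

# Against a live cluster:
#   kubectl -n kube-system describe secret \
#     $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}') | extract_token
```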

Helm

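1. Helm is the package manager for Kubernetes, similar to apt on Ubuntu. It lets you quickly find, download, and install packages.

2. Helm (version 2, used here) consists of the client component helm and the server-side component Tiller, and manages a group of Kubernetes resources as one package.

3. Deploying an application on Kubernetes involves Deployments, Services, account and password settings, PVs, PVCs, startup order, and more, which is hard to manage by hand.

Helm Concepts

1. helm: a command-line tool for managing charts

2. Tiller: the server side; it receives requests from helm, talks to the Kubernetes API server, and creates and manages releases from charts

3. chart: Helm's packaging format, a collection of files

4. release: a chart deployed into the Kubernetes cluster by helm install

5. Repository: a store of Helm charts; the helm client fetches its files and archives over HTTP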

Installing Helm

Installing helm

1. On the master, cd /usr/local/kubernetes/helm

2. wget https://get.helm.sh/helm-v2.15.2-linux-amd64.tar.gz

3. tar -xzvf helm-v2.15.2-linux-amd64.tar.gz

4. cp linux-amd64/helm /usr/local/bin/

Installing Tiller

5. helm init --upgrade --tiller-image registry.cn-hangzhou.aliyuncs.com/google_containers/tiller:v2.15.2 --stable-repo-url https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts

6. RBAC authorization for Tiller

tiller-adminuser.yml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: tiller
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: tiller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: tiller
    namespace: kube-system

7. kubectl apply -f tiller-adminuser.yml

8. Bind the service account to Tiller

kubectl patch deploy --namespace kube-system tiller-deploy -p '{"spec":{"template":{"spec":{"serviceAccount":"tiller"}}}}'

9. Run helm version to check the result

10. To uninstall Tiller: helm reset
