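Configure the Tsinghua mirror:

Elasticsearch installation

Installing Elasticsearch with yum

1. Import the Elasticsearch GPG key

rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch

2. Add a yum repo file for Elasticsearch that points at the Tsinghua yum mirror

By default the latest version is installed. To install a specific version instead, download that version's rpm package, set up the Java environment variables, and make java available under /usr/bin.

Then, with the yum repo configured, run yum localinstall elasticsearch-6.7.0.rpm -y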

cd /etc/yum.repos.d

vi elasticsearch.repo

[elasticsearch-6.x]

name=Elasticsearch repository for 6.x packages

baseurl=https://mirrors.tuna.tsinghua.edu.cn/elasticstack/6.x/yum/

gpgcheck=1

gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch

enabled=1

autorefresh=1

type=rpm-md

3. Edit the configuration file

vim /etc/elasticsearch/elasticsearch.yml

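Edit line 55

55 network.host: 0.0.0.0

vim /etc/elasticsearch/jvm.options

Adjust the JVM heap parameters

22 -Xms128m

23 -Xmx128m

4. Raise the maximum number of memory-mapped areas (VMAs, virtual memory areas) that one process may create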

vim /etc/sysctl.conf

vm.max_map_count=655360

5. Apply the setting

sysctl -p

6. Start ES

systemctl start elasticsearch
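Before starting ES it can help to confirm that the kernel setting actually took effect. The sketch below is only an illustration: the helper name `max_map_count_ok` and the temporary-file demo are assumptions, not part of the original setup. It reads a value in the format of /proc/sys/vm/max_map_count and compares it with 262144, the minimum that Elasticsearch's bootstrap check expects.

```python
import tempfile
from pathlib import Path

# 262144 is the minimum vm.max_map_count that Elasticsearch's bootstrap check expects.
ES_MIN_MAP_COUNT = 262144


def max_map_count_ok(path="/proc/sys/vm/max_map_count", minimum=ES_MIN_MAP_COUNT):
    """Return True if the integer stored at `path` is at least `minimum`."""
    value = int(Path(path).read_text().strip())
    return value >= minimum


if __name__ == "__main__":
    # Demo against a temporary file so the sketch runs on any machine;
    # on the ES host, call max_map_count_ok() with the default path instead.
    with tempfile.NamedTemporaryFile("w", suffix=".txt", delete=False) as f:
        f.write("655360\n")
    print(max_map_count_ok(f.name))
```

The value 655360 used in this guide is comfortably above that minimum.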

tailf -200 /var/log/elasticsearch/elasticsearch.log

{

"name": "dSQV6I8",

"cluster_name": "elasticsearch",

"cluster_uuid": "v5GPTWAtT5emxFdjigFg-w",

"version": {

"number": "6.5.4",

"build_flavor": "default",

"build_type": "tar",

"build_hash": "d2ef93d",

"build_date": "2018-12-17T21:17:40.758843Z",

"build_snapshot": false, "lucene_version": "7.5.0",

"minimum_wire_compatibility_version": "5.6.0",

"minimum_index_compatibility_version": "5.0.0"

},

"tagline": "You Know, for Search"

}

Install elasticsearch-head

Install via Docker

# Pull the image
docker pull mobz/elasticsearch-head:5

# Create the container
docker create --name elasticsearch-head -p 9100:9100 mobz/elasticsearch-head:5

# Start the container
docker start elasticsearch-head

Access it through a browser (elasticsearch-head needs CORS enabled in ES):

vim elasticsearch.yml

http.cors.enabled: true

http.cors.allow-origin: "*"

Fix for HTTP 406 errors when the head plugin submits requests:

docker exec -it elasticsearch-head /bin/bash

sed -i 's#application/x-www-form-urlencoded#application/json;charset=UTF-8#g' ./_site/vendor.js

docker restart elasticsearch-head

After x-pack security authentication was configured, the Docker-based head showed a connection failure.

Installing head as a Chrome extension does not have this problem.

Open the Chrome Web Store to install it: https://chrome.google.com/webstore/detail/elasticsearch-head/ffmkiejjmecolpfloofpjologoblkegm

Install and configure Kibana

Again, install a specific version locally with yum, using the Tsinghua mirror

yum localinstall kibana-6.7.0-x86_64.rpm -y

Edit the configuration file

vim /etc/kibana/kibana.yml

server.port: 5601

server.host: "10.4.7.101"

elasticsearch.hosts: ["http://10.4.7.101:9200"]

Start Kibana

systemctl start kibana

systemctl enable kibana

Access Kibana

http://10.4.7.101:5601/

Cracking x-pack to the Platinum edition

Reference article

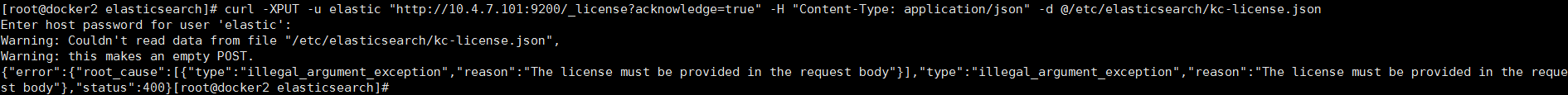

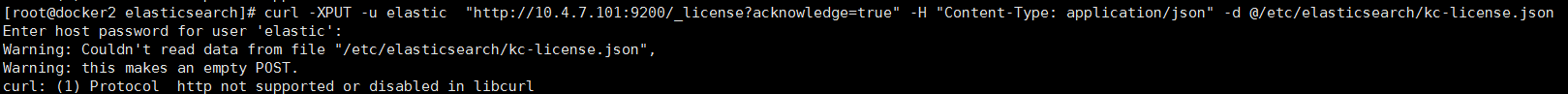

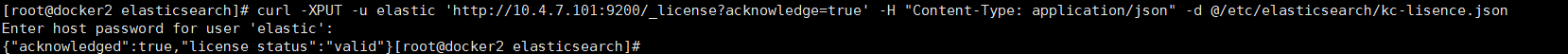

https://blog.csdn.net/weixin_45396564/article/details/103420345

Pitfalls encountered when cracking x-pack on a yum-based install

1. Upload the license file to /etc/elasticsearch/ (mode 660) and change its owner and group

curl -H "Content-Type:application/json" -XPOST http://10.4.7.101:9200/_xpack/license/start_trial?acknowledge=true

Error:

bin/elasticsearch-setup-passwords interactive

Error:

xpack.security.enabled: true

Restart ES

4. Import the SSL certificate

The certificate files must be placed in /etc/elasticsearch/ with mode 660 and the correct owner and group; otherwise ES cannot read them

5. Import the license file

curl -XPUT -u elastic 'http://10.4.7.101:9200/_license?acknowledge=true' -H "Content-Type: application/json" -d @/etc/elasticsearch/kc-lisence.json

Error:

network.host: 10.4.7.101,127.0.0.1

If the IP address written into the SSL certificate is the host's IP, the configuration file must be written as above

Error:

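Log collection configuration

1. Collecting Nginx JSON-format logs with filebeat

1. Shortcomings of the default Nginx log format:

- The whole log line ends up in a single value, so fields cannot be split out, displayed, or searched individually

- The index name carries no meaning

2. The ideal result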

{

$remote_addr : 192.168.12.254

- : -

$remote_user : -

[$time_local]: [10/Sep/2019:10:52:08 +0800]

$request: GET /jhdgsjfgjhshj HTTP/1.0

$status : 404

$body_bytes_sent : 153

$http_referer : -

$http_user_agent :ApacheBench/2.3

$http_x_forwarded_for:-

}

3. Goal

Convert the Nginx log to JSON format

4. Edit the nginx configuration so that the log is written as JSON

log_format json '{ "time_local": "$time_local", '

'"remote_addr": "$remote_addr", '

'"referer": "$http_referer", '

'"request": "$request", '

'"status": $status, '

'"bytes": $body_bytes_sent, '

'"agent": "$http_user_agent", '

'"x_forwarded": "$http_x_forwarded_for", '

'"up_addr": "$upstream_addr",'

'"up_host": "$upstream_http_host",'

'"upstream_time": "$upstream_response_time",'

'"request_time": "$request_time"'

' }';

access_log /var/log/nginx/access.log json;
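To see what the JSON format buys, the following sketch parses one log line into separate fields. The sample line is hypothetical (values made up, only the field names come from the log_format above), and `parse_access_line` is an illustrative helper rather than anything filebeat itself exposes.

```python
import json

# Hypothetical sample line in the shape produced by the log_format above;
# the values are made up for illustration.
SAMPLE = (
    '{ "time_local": "10/Sep/2019:10:52:08 +0800", '
    '"remote_addr": "192.168.12.254", '
    '"referer": "-", '
    '"request": "GET /jhdgsjfgjhshj HTTP/1.0", '
    '"status": 404, '
    '"bytes": 153, '
    '"agent": "ApacheBench/2.3", '
    '"x_forwarded": "-", '
    '"up_addr": "-",'
    '"up_host": "-",'
    '"upstream_time": "-",'
    '"request_time": "0.000"'
    ' }'
)


def parse_access_line(line):
    """Parse one JSON access-log line into a dict; raises ValueError on invalid JSON."""
    return json.loads(line)


if __name__ == "__main__":
    event = parse_access_line(SAMPLE)
    # status and bytes are unquoted in log_format, so they arrive as numbers
    print(event["status"], type(event["status"]).__name__)
    # request_time is quoted in log_format, so it arrives as a string
    print(event["request_time"], type(event["request_time"]).__name__)
```

Because request_time and upstream_time are quoted, they are strings at this point; a later pipeline stage has to convert them if numeric queries are wanted.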

# Truncate the old log

[root@db01 ~]# > /var/log/nginx/access.log

# Check the configuration and restart nginx

[root@db01 ~]# nginx -t

[root@db01 ~]# systemctl restart nginx

5. Edit the filebeat configuration file

cat >/etc/filebeat/filebeat.yml<<EOF
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/nginx/access.log
  json.keys_under_root: true
  json.overwrite_keys: true

output.elasticsearch:
  hosts: ["10.0.0.51:9200"]
EOF

6. Delete the old ES index

es-head >> filebeat-6.6.0-2019.11.15 >> Actions >> Delete

7. Delete the old log data in Kibana

8. Restart filebeat

[root@db01 ~]# systemctl restart filebeat

9. curl nginx, then check the result in the es-head plugin

[root@db01 ~]# curl 127.0.0.1

db01-www

2. Custom ES index names with filebeat

1. The ideal index name

filebeat-6.6.0-2020.02.13

nginx-6.6.0-2019.11.15

2. filebeat configuration

[root@db01 ~]# cat >/etc/filebeat/filebeat.yml<<EOF
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/nginx/access.log
  json.keys_under_root: true
  json.overwrite_keys: true

output.elasticsearch:
  hosts: ["10.0.0.51:9200"]
  index: "nginx-%{[beat.version]}-%{+yyyy.MM}"

setup.template.name: "nginx"
setup.template.pattern: "nginx-*"
setup.template.enabled: false
setup.template.overwrite: true
EOF
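To make the index pattern concrete, here is a small sketch of how a string such as "nginx-%{[beat.version]}-%{+yyyy.MM}" turns into an index name. It is a simplified stand-in written for illustration, not filebeat's own code: the function name `expand_index` is hypothetical and only the yyyy, MM and dd date tokens are handled.

```python
import re
from datetime import datetime


def expand_index(pattern, beat_version, when):
    """Expand %{[beat.version]} and %{+yyyy.MM}-style date parts in an index pattern.

    Only the yyyy, MM and dd tokens are translated; this is a simplified
    illustration of the format string, not filebeat's implementation.
    """
    def date_part(match):
        fmt = match.group(1)
        fmt = fmt.replace("yyyy", "%Y").replace("MM", "%m").replace("dd", "%d")
        return when.strftime(fmt)

    out = pattern.replace("%{[beat.version]}", beat_version)
    return re.sub(r"%\{\+([^}]+)\}", date_part, out)


if __name__ == "__main__":
    ts = datetime(2020, 2, 13)
    print(expand_index("nginx-%{[beat.version]}-%{+yyyy.MM}", "6.6.0", ts))
```

With a monthly date part (yyyy.MM) one index is created per month rather than per day, which keeps the number of indices small.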

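3. Restart filebeat

[root@db01 ~]# systemctl restart filebeat

4. Generate new log entries and check

[root@db01 ~]# curl 127.0.0.1

5. Check in the es-head plugin and add the index in Kibana

3. Splitting indices by service type with filebeat

1. The ideal result:

nginx-access-6.6.0-2020.02

nginx-error-6.6.0-2020.02

2. filebeat configuration

# Method 1: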

[root@db01 ~]# cat >/etc/filebeat/filebeat.yml <<EOF
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/nginx/access.log
  json.keys_under_root: true
  json.overwrite_keys: true

- type: log
  enabled: true
  paths:
    - /var/log/nginx/error.log

output.elasticsearch:
  hosts: ["10.0.0.51:9200"]
  indices:
    - index: "nginx-access-%{[beat.version]}-%{+yyyy.MM}"
      when.contains:
        source: "/var/log/nginx/access.log"
    - index: "nginx-error-%{[beat.version]}-%{+yyyy.MM}"
      when.contains:
        source: "/var/log/nginx/error.log"

setup.template.name: "nginx"
setup.template.pattern: "nginx-*"
setup.template.enabled: false
setup.template.overwrite: true
EOF

# Method 2:

[root@db01 ~]# cat >/etc/filebeat/filebeat.yml <<EOF
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/nginx/access.log
  json.keys_under_root: true
  json.overwrite_keys: true
  tags: ["access"]

- type: log
  enabled: true
  paths:
    - /var/log/nginx/error.log
  tags: ["error"]

output.elasticsearch:
  hosts: ["10.0.0.51:9200"]
  indices:
    - index: "nginx-access-%{[beat.version]}-%{+yyyy.MM}"
      when.contains:
        tags: "access"
    - index: "nginx-error-%{[beat.version]}-%{+yyyy.MM}"
      when.contains:
        tags: "error"

setup.template.name: "nginx"
setup.template.pattern: "nginx-*"
setup.template.enabled: false
setup.template.overwrite: true
EOF
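Both methods rely on the same idea: each event is checked against the list of rules in order, and the first rule whose condition holds decides the index. The sketch below mimics that behaviour in a simplified way. `pick_index` and `_contains` are hypothetical helpers, and the matching logic (membership for list fields, substring for string fields) is an assumption about how a contains condition behaves, not filebeat's actual implementation.

```python
def _contains(value, needle):
    """Membership test for list fields (such as tags), substring test for strings."""
    if isinstance(value, list):
        return needle in value
    if isinstance(value, str):
        return needle in value
    return False


def pick_index(event, rules, default):
    """Return the index of the first rule whose `contains` conditions all match."""
    for rule in rules:
        if all(_contains(event.get(field), needle)
               for field, needle in rule["contains"].items()):
            return rule["index"]
    return default  # no rule matched, fall back to the default index


RULES = [
    {"index": "nginx-access", "contains": {"tags": "access"}},
    {"index": "nginx-error", "contains": {"tags": "error"}},
]

if __name__ == "__main__":
    print(pick_index({"tags": ["access"]}, RULES, "filebeat"))
    print(pick_index({"tags": ["error"]}, RULES, "filebeat"))
    print(pick_index({"tags": []}, RULES, "filebeat"))
```

Tagging the inputs (method 2) keeps the rules independent of file paths, so a log can move without the output section changing.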

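3. Restart filebeat

[root@db01 ~]# systemctl restart filebeat

4. Generate both successful and failing test requests

[root@db01 ~]# curl 127.0.0.1/haahha

[root@db01 ~]# curl 127.0.0.1

5. Check that the corresponding indices were created

nginx-access-6.6.0-2020.02

nginx-error-6.6.0-2020.02

4. Collecting nginx logs from multiple servers

1. Install nginx on the other server

# Switch to the official nginx repo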

[root@db02 ~]# cat /etc/yum.repos.d/nginx.repo

[nginx-stable]

name=nginx stable repo

baseurl=http://nginx.org/packages/centos/$releasever/$basearch/

gpgcheck=1

enabled=1

gpgkey=https://nginx.org/keys/nginx_signing.key

module_hotfixes=true

[nginx-mainline]

name=nginx mainline repo

baseurl=http://nginx.org/packages/mainline/centos/$releasever/$basearch/

gpgcheck=1

enabled=0

gpgkey=https://nginx.org/keys/nginx_signing.key

module_hotfixes=true

# Install nginx

[root@db02 ~]# yum install nginx -y

2. Copy the nginx configuration files from db01

[root@db02 ~]# scp 10.0.0.51:/etc/nginx/nginx.conf /etc/nginx/nginx.conf

[root@db02 ~]# scp 10.0.0.51:/etc/nginx/conf.d/www.conf /etc/nginx/conf.d/

3. Create a test page

[root@db02 ~]# mkdir /code/www/ -p

[root@db02 ~]# echo "db02-www" > /code/www/index.html

4. Truncate the logs and restart nginx

[root@db02 ~]# >/var/log/nginx/access.log

[root@db02 ~]# >/var/log/nginx/error.log

[root@db02 ~]# nginx -t

[root@db02 ~]# systemctl restart nginx

5. Install filebeat

[root@db02 ~]# rpm -ivh filebeat-6.6.0-x86_64.rpm

6. Copy the filebeat configuration file

[root@db02 ~]# scp 10.0.0.51:/etc/filebeat/filebeat.yml /etc/filebeat/

7. Start filebeat

[root@db02 ~]# systemctl restart filebeat

8. Generate test data

[root@db02 ~]# curl 127.0.0.1/22222222222222

[root@db02 ~]# curl 127.0.0.1

# Complete filebeat configuration for collecting nginx logs

[root@db01]# cat /etc/filebeat/filebeat.yml
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/nginx/access.log
  json.keys_under_root: true
  json.overwrite_keys: true

- type: log
  enabled: true
  paths:
    - /var/log/nginx/error.log

output.elasticsearch:
  hosts: ["10.0.0.51:9200"]
  indices:
    - index: "nginx-access-%{[beat.version]}-%{+yyyy.MM}"
      when.contains:
        source: "/var/log/nginx/access.log"
    - index: "nginx-error-%{[beat.version]}-%{+yyyy.MM}"
      when.contains:
        source: "/var/log/nginx/error.log"

setup.template.name: "nginx"
setup.template.pattern: "nginx-*"
setup.template.enabled: false
setup.template.overwrite: true

5. Collecting Tomcat JSON logs with filebeat

1. Install Tomcat and filebeat

Tomcat installation is omitted here

Install filebeat on the Tomcat server

yum localinstall filebeat-6.7.0-x86_64.rpm -y

2. Configure Tomcat to log in JSON format

[root@web01 ~]# /data/apache-tomcat-8.0.33/bin/shutdown.sh

[root@web01 ~]# vim /data/apache-tomcat-8.0.33/conf/server.xml

Change the log format parameter

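pattern="{&quot;clientip&quot;:&quot;%h&quot;,&quot;ClientUser&quot;:&quot;%l&quot;,&quot;authenticated&quot;:&quot;%u&quot;,&quot;AccessTime&quot;:&quot;%t&quot;,&quot;method&quot;:&quot;%r&quot;,&quot;status&quot;:&quot;%s&quot;,&quot;SendBytes&quot;:&quot;%b&quot;,&quot;Query?string&quot;:&quot;%q&quot;,&quot;partner&quot;:&quot;%{Referer}i&quot;,&quot;AgentVersion&quot;:&quot;%{User-Agent}i&quot;}"/>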
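Because the pattern lives inside an XML attribute in server.xml, every double quote inside the JSON template has to be written as an entity. The following sketch shows one way to generate such a value. The helper `valve_pattern` and the shortened field list are illustrative assumptions; only the field names and format codes themselves come from the pattern above.

```python
from xml.sax.saxutils import escape

# A shortened, illustrative subset of the (field name, Tomcat format code) pairs.
FIELDS = [
    ("clientip", "%h"),
    ("AccessTime", "%t"),
    ("method", "%r"),
    ("status", "%s"),
    ("SendBytes", "%b"),
]


def valve_pattern(fields):
    """Build a JSON-shaped access-log pattern, escaped for use inside an XML attribute."""
    raw = "{" + ",".join('"%s":"%s"' % (name, code) for name, code in fields) + "}"
    # escape() handles &, < and >; the extra mapping turns " into &quot;
    return escape(raw, {'"': "&quot;"})


if __name__ == "__main__":
    print('pattern="%s"' % valve_pattern(FIELDS))
```

Generating the attribute this way avoids hand-editing a long string where one missing quote makes server.xml unparseable.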

3. Start Tomcat

/data/apache-tomcat-8.0.33/bin/startup.sh

4. Configure filebeat

cat >/etc/filebeat/filebeat.yml <<EOF
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /data/apache-tomcat-8.0.33/logs/localhost_access_log.*.txt
  json.keys_under_root: true
  json.overwrite_keys: true
  tags: ["tomcat"]

output.elasticsearch:
  hosts: ["10.4.7.101:9200"]
  index: "tomcat_access-%{[beat.version]}-%{+yyyy.MM}"
  username: "elastic"
  password: "123456"

xpack.monitoring.enabled: true
xpack.monitoring.elasticsearch:

setup.template.name: "tomcat"
setup.template.pattern: "tomcat_*"
setup.template.enabled: false
setup.template.overwrite: true
EOF

5. Restart filebeat

systemctl restart filebeat

6. Access Tomcat and check that log data and x-pack monitoring data are generated

6. Collecting multi-line Java logs with filebeat

1. filebeat configuration file

[root@db01 ~]# cat /etc/filebeat/filebeat.yml
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/elasticsearch/elasticsearch.log
  multiline.pattern: '^\['
  multiline.negate: true
  multiline.match: after

output.elasticsearch:
  hosts: ["10.0.0.51:9200"]
  index: "es-%{[beat.version]}-%{+yyyy.MM}"
  username: "elastic"
  password: "123456"

xpack.monitoring.enabled: true
xpack.monitoring.elasticsearch:

setup.template.name: "es"
setup.template.pattern: "es-*"
setup.template.enabled: false
setup.template.overwrite: true

Restart filebeat once the configuration is in place
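The three multiline settings are easier to follow with a worked example. The sketch below groups lines the way the combination pattern '^\[', negate: true, match: after is meant to work: any line that does not start with "[" is appended to the event before it. It is a simplified illustration (the function `group_multiline` and the sample log lines are made up), not filebeat's implementation.

```python
import re


def group_multiline(lines, pattern=r"^\[", negate=True):
    """Group lines into events for multiline.match: after.

    With negate=True, a line that does NOT match the pattern is treated as a
    continuation and appended to the previous event.
    """
    regex = re.compile(pattern)
    events = []
    for line in lines:
        matched = bool(regex.search(line))
        is_continuation = (not matched) if negate else matched
        if is_continuation and events:
            events[-1] += "\n" + line
        else:
            events.append(line)
    return events


# Hypothetical log excerpt: one warning with a Java stack trace, then one info line.
SAMPLE_LINES = [
    "[2020-02-13T10:00:00,000][WARN ][o.e.example] something failed",
    "java.lang.IllegalStateException: boom",
    "\tat org.example.Foo.bar(Foo.java:1)",
    "[2020-02-13T10:00:01,000][INFO ][o.e.example] started",
]

if __name__ == "__main__":
    for event in group_multiline(SAMPLE_LINES):
        print(repr(event))
```

The stack trace ends up inside the warning event instead of being indexed as separate, meaningless documents.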

2. Collecting Tomcat's catalina.out and access logs with filebeat

filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /data/apache-tomcat-8.0.33/logs/localhost_access_log.*.txt
  json.keys_under_root: true
  json.overwrite_keys: true
  tags: ["access"]

- type: log
  enabled: true
  paths:
    - /data/apache-tomcat-8.0.33/logs/catalina.out
  tags: ["catalina"]

output.elasticsearch:
  hosts: ["10.4.7.101:9200"]
  indices:
    - index: "tomcat_access-%{[beat.version]}-%{+yyyy.MM}"
      when.contains:
        tags: "access"
    - index: "tomcat_catalina-%{[beat.version]}-%{+yyyy.MM}"
      when.contains:
        tags: "catalina"
  username: "elastic"
  password: "123456"

xpack.monitoring.enabled: true
xpack.monitoring.elasticsearch:

setup.template.name: "tomcat"
setup.template.pattern: "tomcat_*"
setup.template.enabled: false
setup.template.overwrite: true

Restart filebeat once the configuration is in place

7. Collecting nginx logs with a filebeat module

1. Truncate the log and switch nginx back to the plain log format

# Truncate the log

[root@db01 ~]# > /var/log/nginx/access.log

# Edit the configuration file

[root@db01 ~]# vim /etc/nginx/nginx.conf

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log /var/log/nginx/access.log main;

# Check the configuration and restart

[root@db01 ~]# nginx -t

[root@db01 ~]# systemctl restart nginx

2. Make a request and confirm that the log is in the plain format

[root@db01 ~]# curl 127.0.0.1

[root@db01 ~]# tail -f /var/log/nginx/access.log

3. Configure filebeat to load modules

[root@db01 ~]# cat /etc/filebeat/filebeat.yml
filebeat.config.modules:
  path: ${path.config}/modules.d/*.yml
  reload.enabled: true
  reload.period: 10s

output.elasticsearch:
  hosts: ["10.0.0.51:9200"]
  indices:
    - index: "nginx-access-%{[beat.version]}-%{+yyyy.MM}"
      when.contains:
        event.dataset: "nginx.access"
    - index: "nginx-error-%{[beat.version]}-%{+yyyy.MM}"
      when.contains:
        event.dataset: "nginx.error"

setup.template.name: "nginx"
setup.template.pattern: "nginx-*"
setup.template.enabled: false
setup.template.overwrite: true

4. Enable the filebeat nginx module

[root@db01 ~]# filebeat modules enable nginx

[root@db01 ~]# filebeat modules list

[root@db01 ~]# ll /etc/filebeat/modules.d/nginx.yml

-rw-r--r-- 1 root root 369 Jan 24 2019 /etc/filebeat/modules.d/nginx.yml

5. Configure the filebeat nginx module

[root@db01 ~]# cat >/etc/filebeat/modules.d/nginx.yml <<EOF
- module: nginx
  access:
    enabled: true
    var.paths: ["/var/log/nginx/access.log"]

  error:
    enabled: true
    var.paths: ["/var/log/nginx/error.log"]
EOF

6. Install the ES plugins that the filebeat nginx module requires, then restart ES

# Upload the plugins

[root@db01 ~]# ll

-rw-r--r-- 1 root root 33255554 Jan 8 08:15 ingest-geoip-6.6.0.zip

-rw-r--r-- 1 root root 62173 Jan 8 08:15 ingest-user-agent-6.6.0.zip

# Change directory and install the plugins

[root@db01 ~]# cd /usr/share/elasticsearch/

[root@db01 ~]# ./bin/elasticsearch-plugin install file:///root/ingest-geoip-6.6.0.zip

Note: the installer asks for confirmation; type "y"

[root@db01 ~]# ./bin/elasticsearch-plugin install file:///root/ingest-user-agent-6.6.0.zip

[root@db01 ~]# systemctl restart elasticsearch

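7. Restart filebeat

[root@db01 ~]# systemctl restart filebeat

8. Delete the existing nginx data in the es-head plugin and in Kibana

Generate new log data, refresh the es-head plugin to check it, and add the index in Kibana

8. Collecting the mysql slow log with a filebeat module

# Binary installation

1. Download or upload the package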

wget https://downloads.mysql.com/archives/get/file/mysql-5.6.44-linux-glibc2.12-x86_64.tar.gz

2. Extract the archive

[root@db01 ~]# tar xf mysql-5.6.44-linux-glibc2.12-x86_64.tar.gz

[root@db01 ~]# ll

total 321404

drwxr-xr-x 13 root root 191 Oct 31 04:31 mysql-5.6.44-linux-glibc2.12-x86_64

-rw-r--r-- 1 root root 329105487 Oct 30 10:23 mysql-5.6.44-linux-glibc2.12-x86_64.tar.gz

3. Install the dependency packages

[root@db01 ~]# yum install -y autoconf libaio*

4. Create the mysql user

[root@db01 ~]# useradd mysql -s /sbin/nologin -M

[root@db01 ~]# id mysql

uid=1000(mysql) gid=1000(mysql) groups=1000(mysql)

5. Move the extracted directory to /opt and rename it

[root@db01 ~]# mv mysql-5.6.44-linux-glibc2.12-x86_64 /opt/mysql-5.6.44

[root@db01 ~]# cd /opt/mysql-5.6.44/

[root@db01 /opt/mysql-5.6.44]# ll

total 40

drwxr-xr-x 2 root root 4096 Oct 31 04:31 bin

-rw-r--r-- 1 7161 31415 17987 Mar 15 2019 COPYING

drwxr-xr-x 3 root root 18 Oct 31 04:30 data

drwxr-xr-x 2 root root 55 Oct 31 04:30 docs

drwxr-xr-x 3 root root 4096 Oct 31 04:30 include

drwxr-xr-x 3 root root 316 Oct 31 04:31 lib

drwxr-xr-x 4 root root 30 Oct 31 04:30 man

drwxr-xr-x 10 root root 291 Oct 31 04:30 mysql-test

-rw-r--r-- 1 7161 31415 2496 Mar 15 2019 README

drwxr-xr-x 2 root root 30 Oct 31 04:30 scripts

drwxr-xr-x 28 root root 4096 Oct 31 04:31 share

drwxr-xr-x 4 root root 4096 Oct 31 04:31 sql-bench

drwxr-xr-x 2 root root 136 Oct 31 04:30 support-files

6. Create a symbolic link

[root@db01 ~]# ln -s /opt/mysql-5.6.44/ /opt/mysql

[root@db01 ~]# ll /opt/mysql

lrwxrwxrwx 1 root root 18 Oct 31 04:37 /opt/mysql -> /opt/mysql-5.6.44/

7. Copy the startup script

[root@db01 /opt/mysql-5.6.44]# cd /opt/mysql-5.6.44/support-files/

[root@db01 /opt/mysql-5.6.44/support-files]# cp mysql.server /etc/init.d/mysqld

[root@db01 /opt/mysql-5.6.44/support-files]# ll /etc/init.d/mysqld

-rwxr-xr-x 1 root root 10565 Oct 31 04:40 /etc/init.d/mysqld

8. Copy the configuration file

[root@db01 /opt/mysql-5.6.44/support-files]# cp my-default.cnf /etc/my.cnf

cp: overwrite ‘/etc/my.cnf’? y

[root@db01 /opt/mysql-5.6.44/support-files]# ll /etc/my.cnf

-rw-r--r--. 1 root root 1126 Oct 31 04:41 /etc/my.cnf

9. Initialize the database

[root@db01 /opt/mysql-5.6.44/support-files]# cd ../scripts/

[root@db01 /opt/mysql-5.6.44/scripts]# ll

total 36

-rwxr-xr-x 1 7161 31415 34558 Mar 15 2019 mysql_install_db

[root@db01 /opt/mysql-5.6.44/scripts]# ./mysql_install_db --basedir=/opt/mysql --datadir=/opt/mysql/data --user=mysql

# Two "OK" messages in the output mean the initialization succeeded

10. Give the mysql user ownership of the mysql directory

[root@db01 /opt/mysql-5.6.44/scripts]# chown -R mysql.mysql /opt/mysql-5.6.44/

[root@db01 /opt/mysql-5.6.44/scripts]# ll /opt/

total 0

lrwxrwxrwx 1 mysql mysql 18 Oct 31 04:37 mysql -> /opt/mysql-5.6.44/

drwxr-xr-x 13 mysql mysql 223 Oct 31 04:43 mysql-5.6.44

11. Fix the install path in the mysql startup script and in mysqld_safe

[root@db01 /opt/mysql-5.6.44/scripts]# sed -i 's#/usr/local#/opt#g' /etc/init.d/mysqld /opt/mysql/bin/mysqld_safe

12. Start mysql

[root@db01 /opt/mysql-5.6.44/scripts]# /etc/init.d/mysqld start

Starting MySQL.Logging to '/opt/mysql/data/db01.err'.

SUCCESS!

13. Add mysql to the PATH environment variable

[root@db01 /opt/mysql-5.6.44/scripts]# vim /etc/profile.d/mysql.sh

export PATH="/opt/mysql/bin:$PATH"

[root@db01 /opt/mysql-5.6.44/scripts]# source /etc/profile.d/mysql.sh

14. Log in to the mysql database

[root@db01 /opt/mysql-5.6.44/scripts]# mysql

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 1

Server version: 5.6.44 MySQL Community Server (GPL)

Copyright (c) 2000, 2019, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql>

==============================================================================

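# Collecting the mysql slow log with a filebeat module

1. Configure the mysql error log and slow log paths

Edit my.cnf

[root@db01 ~]# vim /etc/my.cnf

[mysqld]

slow_query_log=ON

slow_query_log_file=/opt/mysql/data/slow.log

long_query_time=1

2. Restart mysql and generate slow log entries

[root@db01 ~]# /etc/init.d/mysqld restart

3. Statement for generating a slow query

mysql> select sleep(2) user,host from mysql.user ;

4. Confirm that the slow log and error log are actually being written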

[root@db01 ~]# mysql -e "show variables like '%slow_query_log%'"

+---------------------+----------------------------------+

| Variable_name | Value |

+---------------------+----------------------------------+

| slow_query_log | ON |

| slow_query_log_file | /opt/mysql/data/slow.log |

+---------------------+----------------------------------+

5. Enable the filebeat mysql module

[root@db01 ~]# filebeat modules enable mysql

6. Configure the mysql module

[root@db01 ~]# cat /etc/filebeat/modules.d/mysql.yml
- module: mysql
  # Error logs
  error:
    enabled: true
    var.paths: ["/opt/mysql/data/db01.err"]

  # Slow logs
  slowlog:
    enabled: true
    var.paths: ["/opt/mysql/data/slow.log"]

7. Configure filebeat to choose the index by log type

[root@db01 ~]# cat /etc/filebeat/filebeat.yml
filebeat.config.modules:
  path: ${path.config}/modules.d/*.yml
  reload.enabled: true
  reload.period: 10s

output.elasticsearch:
  hosts: ["10.0.0.51:9200"]
  indices:
    - index: "mysql_slow-%{[beat.version]}-%{+yyyy.MM}"
      when.contains:
        source: "/opt/mysql/data/slow.log"
    - index: "mysql_error-%{[beat.version]}-%{+yyyy.MM}"
      when.contains:
        source: "/opt/mysql/data/db01.err"

setup.template.name: "mysql"
setup.template.pattern: "mysql_*"
setup.template.enabled: false
setup.template.overwrite: true

8. Restart filebeat

[root@db01 ~]# systemctl restart filebeat

9. Generate slow log data

mysql> select sleep(2) user,host from mysql.user ;

+------+-----------+

| user | host |

+------+-----------+

| 0 | 127.0.0.1 |

| 0 | ::1 |

| 0 | db01 |

| 0 | db01 |

| 0 | localhost |

| 0 | localhost |

+------+-----------+

6 rows in set (12.01 sec)
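The 12.01 seconds reported above is 6 rows times sleep(2), well over the long_query_time of 1 second, so the statement should appear in slow.log. As a rough way to inspect such a file, the sketch below pulls the Query_time values out of a slow-log excerpt. The excerpt is hypothetical (written to resemble a MySQL 5.6 slow-log entry) and `slow_queries` is an illustrative helper, not part of filebeat or MySQL.

```python
import re

# Matches the Query_time value on a slow-log header line.
QUERY_TIME = re.compile(r"^# Query_time: (\d+\.\d+)", re.MULTILINE)

# Hypothetical slow-log excerpt for illustration only.
SAMPLE_LOG = """\
# Time: 200213 10:00:00
# User@Host: root[root] @ localhost []  Id:     1
# Query_time: 12.010000  Lock_time: 0.000100 Rows_sent: 6  Rows_examined: 6
SET timestamp=1581559200;
select sleep(2) user,host from mysql.user ;
"""


def slow_queries(log_text, long_query_time=1.0):
    """Return the Query_time values (in seconds) that exceed long_query_time."""
    times = [float(m.group(1)) for m in QUERY_TIME.finditer(log_text)]
    return [t for t in times if t > long_query_time]


if __name__ == "__main__":
    print(slow_queries(SAMPLE_LOG))
```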

10. Query the data in the es-head plugin and add the index in Kibana

Collecting Docker logs

1. Collecting Docker logs with filebeat (basic version)

1. Install Docker

[root@db02 ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

[root@db02 ~]# wget -O /etc/yum.repos.d/docker-ce.repo https://download.docker.com/linux/centos/docker-ce.repo

[root@db02 ~]# sed -i 's+download.docker.com+mirrors.tuna.tsinghua.edu.cn/docker-ce+' /etc/yum.repos.d/docker-ce.repo

[root@db02 ~]# yum makecache fast

[root@db02 ~]# yum install docker-ce -y

[root@db02 ~]# mkdir -p /etc/docker

[root@db02 ~]# tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://ig2l319y.mirror.aliyuncs.com"]

}

EOF

[root@db02 ~]# systemctl daemon-reload

[root@db02 ~]# systemctl restart docker

2. Start two Nginx containers and test access

[root@db02 ~]# docker run -d -p 80:80 nginx

[root@db02 ~]# docker run -d -p 8080:80 nginx

3. Test that both containers respond

[root@db02 ~]# curl 10.0.0.52

[root@db02 ~]# curl 10.0.0.52:8080

4. Configure filebeat

[root@db02 ~]# cat /etc/filebeat/filebeat.yml
filebeat.inputs:
- type: docker
  containers.ids:
    - '*'

output.elasticsearch:
  hosts: ["10.0.0.51:9200"]
  index: "docker-%{[beat.version]}-%{+yyyy.MM}"

setup.template.name: "docker"
setup.template.pattern: "docker-*"
setup.template.enabled: false
setup.template.overwrite: true

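5. Restart filebeat

[root@db02 ~]# systemctl restart filebeat

6. Restart ES

[root@db02 ~]# systemctl restart elasticsearch

7. Generate test data by making requests

[root@db02 ~]# curl 10.0.0.52/1111111111

[root@db02 ~]# curl 10.0.0.52:8080/2222222222

8. Query the data in the es-head plugin and add the index in Kibana

2. Collecting Docker logs with filebeat, using docker-compose to split indices by service

1. Assumed scenario

nginx container on port 80

tomcat container on port 8080

2. The ideal index names

docker-nginx-6.6.0-2020.02

docker-tomcat-6.6.0-2020.02

3. The ideal log record format

nginx container log: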

{

"log": "xxxxxx",

"stream": "stdout",

"time": "xxxx",

"service": "nginx"

}

tomcat container log:

{

"log": "xxxxxx",

"stream": "stdout",

"time": "xxxx",

"service": "tomcat"

}

4. docker-compose configuration

[root@db02 ~]# yum install docker-compose -y

[root@db02 ~]# cat >docker-compose.yml<<EOF
version: '3'
services:
  nginx:
    image: nginx:latest
    labels:
      service: nginx
    logging:
      options:
        labels: "service"
    ports:
      - "80:80"
  tomcat:
    image: nginx:latest
    labels:
      service: tomcat
    logging:
      options:
        labels: "service"
    ports:
      - "8080:80"
EOF

5. Remove the old containers

[root@db02 ~]# docker stop $(docker ps -q)

[root@db02 ~]# docker rm $(docker ps -qa)

6. Start the containers

[root@db02 ~]# docker-compose up -d

7. Configure filebeat

[root@db02 ~]# cat >/etc/filebeat/filebeat.yml <<EOF
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/lib/docker/containers/*/*-json.log
  json.keys_under_root: true
  json.overwrite_keys: true

output.elasticsearch:
  hosts: ["10.0.0.51:9200"]
  indices:
    - index: "docker-nginx-%{[beat.version]}-%{+yyyy.MM}"
      when.contains:
        attrs.service: "nginx"
    - index: "docker-tomcat-%{[beat.version]}-%{+yyyy.MM}"
      when.contains:
        attrs.service: "tomcat"

setup.template.name: "docker"
setup.template.pattern: "docker-*"
setup.template.enabled: false
setup.template.overwrite: true
EOF

8. Restart filebeat

[root@db02 ~]# systemctl restart filebeat

9. Generate access log entries

[root@db02 ~]# curl 127.0.0.1/nginxxxxxxxxxxx

[root@db02 ~]# curl 127.0.0.1:8080/dbbbbbbbbb

10. Check in the es-head plugin

3. Collecting Docker logs with filebeat, split by log type (access/error)

1. What the previous Docker log collection still lacks

Normal logs and error logs end up in the same index

2. The ideal index names

docker-nginx-access-6.6.0-2020.02

docker-nginx-error-6.6.0-2020.02

docker-tomcat-access-6.6.0-2020.02

docker-tomcat-error-6.6.0-2020.02

3. filebeat configuration file

[root@db02 ~]# cat >/etc/filebeat/filebeat.yml <<EOF
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/lib/docker/containers/*/*-json.log
  json.keys_under_root: true
  json.overwrite_keys: true

output.elasticsearch:
  hosts: ["10.0.0.51:9200"]
  indices:
    - index: "docker-nginx-access-%{[beat.version]}-%{+yyyy.MM}"
      when.contains:
        attrs.service: "nginx"
        stream: "stdout"
    - index: "docker-nginx-error-%{[beat.version]}-%{+yyyy.MM}"
      when.contains:
        attrs.service: "nginx"
        stream: "stderr"
    - index: "docker-tomcat-access-%{[beat.version]}-%{+yyyy.MM}"
      when.contains:
        attrs.service: "tomcat"
        stream: "stdout"
    - index: "docker-tomcat-error-%{[beat.version]}-%{+yyyy.MM}"
      when.contains:
        attrs.service: "tomcat"
        stream: "stderr"

setup.template.name: "docker"
setup.template.pattern: "docker-*"
setup.template.enabled: false
setup.template.overwrite: true
EOF
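The routing above depends on two fields in each line of Docker's json-file log: the service label, which shows up under attrs, and the stream. A minimal sketch of that decision follows. The sample lines are hypothetical, `docker_index` is an illustrative helper, and the version and month are passed in as plain strings rather than taken from a real event.

```python
import json

STREAM_KIND = {"stdout": "access", "stderr": "error"}
KNOWN_SERVICES = ("nginx", "tomcat")


def docker_index(line, beat_version="6.6.0", month="2020.02"):
    """Map one json-file log line to an index name using attrs.service and stream."""
    event = json.loads(line)
    service = event.get("attrs", {}).get("service")
    kind = STREAM_KIND.get(event.get("stream"))
    if service not in KNOWN_SERVICES or kind is None:
        return None  # no rule matches; the default index would be used instead
    return "docker-%s-%s-%s-%s" % (service, kind, beat_version, month)


# Hypothetical json-file log lines for illustration.
NGINX_OUT = ('{"log":"GET /nginxxxxxxxxxxx\\n","stream":"stdout",'
             '"attrs":{"service":"nginx"},"time":"2020-02-13T10:00:00.000000000Z"}')
TOMCAT_ERR = ('{"log":"open() failed\\n","stream":"stderr",'
              '"attrs":{"service":"tomcat"},"time":"2020-02-13T10:00:01.000000000Z"}')

if __name__ == "__main__":
    print(docker_index(NGINX_OUT))
    print(docker_index(TOMCAT_ERR))
```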

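4. Restart filebeat

[root@db02 ~]# systemctl restart filebeat

5. Generate test data

[root@db02 ~]# curl 127.0.0.1/nginxxxxxxxxxxx

[root@db02 ~]# curl 127.0.0.1:8080/dbbbbbbbbb

6. Check in the es-head plugin

4. Collecting Docker logs with filebeat, optimized version

1. Requirements

Logs in JSON format, written to the following indices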

docker-nginx-access-6.6.0-2020.02

docker-tomcat-access-6.6.0-2020.02

docker-tomcat-error-6.6.0-2020.02

docker-nginx-error-6.6.0-2020.02

2. Stop and remove the previous containers

[root@db02 ~]# docker stop $(docker ps -qa)

[root@db02 ~]# docker rm $(docker ps -qa)

3. Create new containers and mount their log directories onto the host

[root@db02 ~]# docker run -d -p 80:80 -v /opt/nginx:/var/log/nginx/ nginx

[root@db02 ~]# docker run -d -p 8080:80 -v /opt/tomcat:/var/log/nginx/ nginx

[root@db02 ~]# ll /opt/

drwxr-xr-x 2 root root 41 Mar 1 10:24 nginx

drwxr-xr-x 2 root root 41 Mar 1 10:25 tomcat

4. Prepare an nginx configuration file with the JSON log format by copying it from the other machine to this server

[root@db02 ~]# scp 10.0.0.51:/etc/nginx/nginx.conf /root/

[root@db02 ~]# ll

-rw-r--r-- 1 root root 1358 Mar 1 10:27 nginx.conf

# Confirm that the log format has been changed to JSON

[root@db02 ~]# grep "access_log" nginx.conf

access_log /var/log/nginx/access.log json;

5. Copy it into the containers and restart them

# Look up the container IDs

[root@db02 ~]# docker ps

[root@db02 ~]# docker cp nginx.conf <nginx container ID>:/etc/nginx/

[root@db02 ~]# docker cp nginx.conf <tomcat container ID>:/etc/nginx/

[root@db02 ~]# docker stop $(docker ps -qa)

[root@db02 ~]# docker start <nginx container ID>

[root@db02 ~]# docker start <tomcat container ID>

6. Delete the existing ES indices (in the es-head plugin)

7. Edit the filebeat configuration file

[root@db02 ~]# cat >/etc/filebeat/filebeat.yml <<EOF
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /opt/nginx/access.log
  json.keys_under_root: true
  json.overwrite_keys: true
  tags: ["nginx_access"]

- type: log
  enabled: true
  paths:
    - /opt/nginx/error.log
  tags: ["nginx_err"]

- type: log
  enabled: true
  paths:
    - /opt/tomcat/access.log
  json.keys_under_root: true
  json.overwrite_keys: true
  tags: ["tomcat_access"]

- type: log
  enabled: true
  paths:
    - /opt/tomcat/error.log
  tags: ["tomcat_err"]

output.elasticsearch:
  hosts: ["10.0.0.51:9200"]
  indices:
    - index: "docker-nginx-access-%{[beat.version]}-%{+yyyy.MM}"
      when.contains:
        tags: "nginx_access"
    - index: "docker-nginx-error-%{[beat.version]}-%{+yyyy.MM}"
      when.contains:
        tags: "nginx_err"
    - index: "docker-tomcat-access-%{[beat.version]}-%{+yyyy.MM}"
      when.contains:
        tags: "tomcat_access"
    - index: "docker-tomcat-error-%{[beat.version]}-%{+yyyy.MM}"
      when.contains:
        tags: "tomcat_err"

setup.template.name: "docker"
setup.template.pattern: "docker-*"
setup.template.enabled: false
setup.template.overwrite: true
EOF

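8. Restart filebeat

[root@db02 ~]# systemctl restart filebeat

9. Make requests and test

[root@db02 ~]# curl 127.0.0.1/hahaha

[root@db02 ~]# curl 127.0.0.1:8080/hahaha

[root@db02 ~]# cat /opt/nginx/access.log

[root@db02 ~]# cat /opt/tomcat/access.log

10. Check in es-head

Optimizing with redis

1. Adding a redis buffer to filebeat (single redis node)

filebeat ships the collected logs to redis. ES does not read from redis directly, so logstash sits in between: it pulls the data out of redis and sends it to ES, and Kibana then displays the data from ES.

1. Install redis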

[root@db01 ~]# yum install redis

[root@db01 ~]# sed -i 's#^bind 127.0.0.1#bind 127.0.0.1 10.0.0.51#' /etc/redis.conf

[root@db01 ~]# systemctl start redis

[root@db01 ~]# netstat -lntup|grep redis

[root@db01 ~]# redis-cli -h 10.0.0.51

2. Stop the Docker containers

[root@db01 ~]# docker stop $(docker ps -q)

3. Stop filebeat

[root@db01 ~]# systemctl stop filebeat

4. Delete the old ES indices

5. Confirm that the nginx log is in JSON format

[root@db01 ~]# grep "access_log" nginx.conf

access_log /var/log/nginx/access.log json;

6. Edit the filebeat configuration file

[root@db01 ~]# cat >/etc/filebeat/filebeat.yml <<EOF
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/nginx/access.log
  json.keys_under_root: true
  json.overwrite_keys: true
  tags: ["access"]

- type: log
  enabled: true
  paths:
    - /var/log/nginx/error.log
  tags: ["error"]

output.redis:
  hosts: ["10.0.0.51"]
  keys:
    - key: "nginx_access"
      when.contains:
        tags: "access"
    - key: "nginx_error"
      when.contains:
        tags: "error"

setup.template.name: "nginx"
setup.template.pattern: "nginx_*"
setup.template.enabled: false
setup.template.overwrite: true
EOF

7. Restart filebeat and nginx

[root@db01 ~]# systemctl restart nginx

[root@db01 ~]# systemctl restart filebeat

8. Generate test data

[root@db01 ~]# curl 127.0.0.1/haha

9. Check

[root@db01 ~]# redis-cli -h 10.0.0.51

keys *

TYPE nginx_access

LLEN nginx_access

LRANGE nginx_access 0 -1

Confirm that the entries are in JSON format

10. Install logstash

[root@db01 ~]# rpm -ivh jdk-8u102-linux-x64.rpm

[root@db01 ~]# rpm -ivh logstash-6.6.0.rpm

11. Configure logstash to read the data from redis

[root@db01 ~]# cat >/etc/logstash/conf.d/redis.conf<<EOF

input {

redis {

host => "10.0.0.51"

port => "6379"

db => "0"

key => "nginx_access"

data_type => "list"

}

redis {

host => "10.0.0.51"

port => "6379"

db => "0"

key => "nginx_error"

data_type => "list"

}

}

filter {

mutate {

convert => ["upstream_time", "float"]

convert => ["request_time", "float"]

}

}

output {

stdout {}

if "access" in [tags] {

elasticsearch {

hosts => "http://10.0.0.51:9200"

manage_template => false

index => "nginx_access-%{+yyyy.MM}"

}

}

if "error" in [tags] {

elasticsearch {

hosts => "http://10.0.0.51:9200"

manage_template => false

index => "nginx_error-%{+yyyy.MM}"

}

}

}

EOF

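12. Start in the foreground to test

[root@db01 ~]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/redis.conf

13. Check

Whether the events printed by logstash are parsed as JSON

Whether the indices appear in es-head

Whether the list in redis is shrinking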
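The last check works because the redis list is used as a first-in, first-out queue: the producer appends at the tail and the consumer removes from the head, so the length drops as logstash catches up. The sketch below models that with an in-memory stand-in. The class `ListQueue` is hypothetical and no redis server is involved; the method names only echo the redis commands RPUSH, LPOP and LLEN.

```python
from collections import deque


class ListQueue:
    """In-memory stand-in for one redis list used as a FIFO queue."""

    def __init__(self):
        self._items = deque()

    def rpush(self, item):
        """Producer side: append at the tail and return the new length."""
        self._items.append(item)
        return len(self._items)

    def lpop(self):
        """Consumer side: remove and return the head, or None if empty."""
        return self._items.popleft() if self._items else None

    def llen(self):
        """Current number of queued items."""
        return len(self._items)


if __name__ == "__main__":
    q = ListQueue()
    q.rpush('{"request": "GET /haha HTTP/1.1"}')
    q.rpush('{"request": "GET / HTTP/1.1"}')
    print(q.llen())   # two events waiting
    print(q.lpop())   # the oldest event is consumed first
    print(q.llen())   # the list has shrunk
```

If LLEN keeps growing while logstash is running, the consumer is not keeping up or is not connected.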

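14. Run logstash in the background

ctrl+c

[root@db01 ~]# systemctl start logstash

Listen for the fan: when it spins up, logstash has started

15. Once it is running in the background, generate data and check it in es-head

2. Improving the redis setup for filebeat (redis high availability across two servers)

filebeat can only ship data to a single redis node (it does not support redis Sentinel or Cluster, and logstash cannot read from Sentinel or Cluster either). The workaround is to put an nginx proxy in front of redis and make that proxy highly available with keepalived: if node 1 goes down, node 2 can still accept the data from filebeat and pass it to redis. logstash also connects through the keepalived virtual IP.

1. Preconditions

- filebeat cannot ship to redis Sentinel or Cluster

- logstash cannot read from redis Sentinel or Cluster either

2. Install and configure redis (on db01 and db02)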

[root@db01 ~]# yum install redis -y

[root@db02 ~]# yum install redis -y

[root@db01 ~]# sed -i 's#^bind 127.0.0.1#bind 127.0.0.1 10.0.0.51#' /etc/redis.conf

[root@db02 ~]# sed -i 's#^bind 127.0.0.1#bind 127.0.0.1 10.0.0.52#' /etc/redis.conf

[root@db01 ~]# systemctl start redis

[root@db02 ~]# systemctl start redis

3. Install and configure nginx

Configure the official repo

[root@db01 ~]# yum install nginx -y

[root@db02 ~]# yum install nginx -y

Place the following after the final closing } of nginx.conf; do not put it under conf.d

stream {

upstream redis {

server 10.0.0.51:6379 max_fails=2 fail_timeout=10s;

server 10.0.0.52:6379 max_fails=2 fail_timeout=10s backup;

}

server {

listen 6380;

proxy_connect_timeout 1s;

proxy_timeout 3s;

proxy_pass redis;

}

}

# Check the configuration and start nginx

[root@db01 ~]# nginx -t

[root@db02 ~]# nginx -t

[root@db01 ~]# systemctl start nginx

[root@db02 ~]# systemctl start nginx

4. Install and configure keepalived

[root@db01 ~]# yum install keepalived -y

[root@db02 ~]# yum install keepalived -y

# db01 configuration (virtual IP 10.0.0.100)

[root@db01 ~]# cat /etc/keepalived/keepalived.conf

global_defs {

router_id db01

}

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 50

priority 150

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

10.0.0.100

}

}

# db02 configuration (virtual IP 10.0.0.100)

[root@db02 ~]# cat /etc/keepalived/keepalived.conf

global_defs {

router_id db02

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 50

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

10.0.0.100

}

}

[root@db01 ~]# systemctl start keepalived

[root@db02 ~]# systemctl start keepalived

[root@db01 ~]# ip addr |grep 10.0.0.100
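Which node holds 10.0.0.100 follows from the priority values in the two configurations: db01 (150) outranks db02 (100), so db01 owns the address while it is alive and db02 takes over otherwise. The sketch below is a deliberately simplified model of that rule. `elect_master` and the node dictionaries are illustrative, and real VRRP behaviour has more details (advertisement timers, preemption) than shown here.

```python
def elect_master(nodes):
    """Return the name of the live node with the highest priority, or None."""
    alive = [n for n in nodes if n["alive"]]
    if not alive:
        return None
    return max(alive, key=lambda n: n["priority"])["name"]


if __name__ == "__main__":
    # Priorities taken from the two keepalived.conf files above.
    nodes = [
        {"name": "db01", "priority": 150, "alive": True},
        {"name": "db02", "priority": 100, "alive": True},
    ]
    print(elect_master(nodes))      # db01 holds the virtual IP
    nodes[0]["alive"] = False
    print(elect_master(nodes))      # db02 takes over
```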

5. Test that the proxy reaches redis

[root@db01 ~]# redis-cli -h 10.0.0.100 -p 6380

# Stop redis on db01, then test whether redis is still reachable
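The same reachability test can be scripted instead of typed into redis-cli. The sketch below is an assumption-laden example rather than part of the original setup: `encode_command` builds a PING in the redis wire format (an array of bulk strings), and `ping` sends it through a TCP socket and checks for the +PONG reply. The address and port are the ones used in this guide; nothing here handles authentication.

```python
import socket


def encode_command(*parts):
    """Encode a command as a RESP array of bulk strings."""
    out = [b"*%d\r\n" % len(parts)]
    for part in parts:
        data = part.encode()
        out.append(b"$%d\r\n%s\r\n" % (len(data), data))
    return b"".join(out)


def ping(host, port, timeout=2.0):
    """Send PING to host:port and return True if the reply starts with +PONG."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(encode_command("PING"))
        return sock.recv(64).startswith(b"+PONG")


if __name__ == "__main__":
    # Only the encoding is shown here; calling ping("10.0.0.100", 6380)
    # requires the proxy from this section to be running.
    print(encode_command("PING"))
```

Running ping("10.0.0.100", 6380) before and after stopping redis on db01 gives a quick yes/no answer about failover.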

6. Configure filebeat (only needs to be done on one machine)

[root@db01 ~]# cat >/etc/filebeat/filebeat.yml <<EOF
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/nginx/access.log
  json.keys_under_root: true
  json.overwrite_keys: true
  tags: ["access"]

- type: log
  enabled: true
  paths:
    - /var/log/nginx/error.log
  tags: ["error"]

output.redis:
  hosts: ["10.0.0.100:6380"] # note: this is the virtual IP 10.0.0.100
  keys:
    - key: "nginx_access"
      when.contains:
        tags: "access"
    - key: "nginx_error"
      when.contains:
        tags: "error"

setup.template.name: "nginx"
setup.template.pattern: "nginx_*"
setup.template.enabled: false
setup.template.overwrite: true
EOF

7. Test that filebeat can ship to redis

[root@db01 ~]# curl 127.0.0.1/haha

[root@db01 ~]# redis-cli -h 10.0.0.51 # should contain data

[root@db02 ~]# redis-cli -h 10.0.0.52 # should be empty

[root@db01 ~]# redis-cli -h 10.0.0.100 -p 6380 # should contain data

8. Configure logstash

[root@db01 ~]# cat >/etc/logstash/conf.d/redis.conf<<EOF

input {

redis {

host => "10.0.0.100" # note: this is the virtual IP 10.0.0.100

port => "6380"

db => "0"

key => "nginx_access"

data_type => "list"

}

redis {

host => "10.0.0.100" # note: this is the virtual IP 10.0.0.100

port => "6380"

db => "0"

key => "nginx_error"

data_type => "list"

}

}

filter {

mutate {

convert => ["upstream_time", "float"]

convert => ["request_time", "float"]

}

}

output {

stdout {}

if "access" in [tags] {

elasticsearch {

hosts => "http://10.0.0.51:9200"

manage_template => false

index => "nginx_access-%{+yyyy.MM}"

}

}

if "error" in [tags] {

elasticsearch {

hosts => "http://10.0.0.51:9200"

manage_template => false

index => "nginx_error-%{+yyyy.MM}"

}

}

}

EOF

9. Start and test

/usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/redis.conf

# Once the test passes, start it in the background

systemctl start logstash

10. Final test

ab -n 10000 -c 100 10.0.0.100/

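Check in es-head whether the index contains 10000 documents

Stop Redis on db01, send requests again, and check whether logstash still works normally

Restore Redis on db01 and test again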
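The document count can also be read from the command line through the Elasticsearch _count API rather than from es-head. The sketch below only extracts the number from the JSON reply; the helper name count_from_json is hypothetical, and the index pattern in the usage comment assumes the index names from the logstash configuration above.

```shell
# count_from_json: print the value of the "count" field from an
# Elasticsearch _count response read on stdin.
count_from_json() {
    sed -n 's/.*"count"[[:space:]]*:[[:space:]]*\([0-9][0-9]*\).*/\1/p'
}

# Hypothetical usage:
#   curl -s 'http://10.0.0.51:9200/nginx_access-*/_count' | count_from_json
```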

11. Log in to es-head to view the log data

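3. Optimizing the filebeat + Redis setup

1. Before optimization, adding a new log path requires changes in 4 places:

- filebeat: 2 places

- logstash: 2 places

2. After optimization, only 2 places need to change:

- filebeat: 1 place

- logstash: 1 place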
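The saving comes from routing by tag instead of by Redis key: every event goes into one list, and the tag alone decides the target index. As a rough illustration only (this is a shell model of the idea, not how logstash is implemented, and index_for_tag is a hypothetical name), the decision looks like this:

```shell
# index_for_tag TAG YYYY.MM: print the index an event with TAG would be
# written to, mirroring the "if ... in [tags]" branches of the logstash output.
index_for_tag() {
    case "$1" in
        access) echo "nginx_access-$2" ;;
        error)  echo "nginx_error-$2" ;;
        *)      return 1 ;;   # no matching branch, so no index is written
    esac
}

# Example: index_for_tag access 2019.04
```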

3. filebeat configuration file

cat /etc/filebeat/filebeat.yml

filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/nginx/access.log
  json.keys_under_root: true
  json.overwrite_keys: true
  tags: ["access"]
- type: log
  enabled: true
  paths:
    - /var/log/nginx/error.log
  tags: ["error"]

output.redis:
  hosts: ["10.0.0.100:6380"]
  key: "nginx_log"

setup.template.name: "nginx"
setup.template.pattern: "nginx_*"
setup.template.enabled: false
setup.template.overwrite: true

4. Optimized logstash configuration

cat /etc/logstash/conf.d/redis.conf

input {

redis {

host => "10.0.0.100"

port => "6380"

db => "0"

key => "nginx_log"

data_type => "list"

}

}

filter {

mutate {

convert => ["upstream_time", "float"]

convert => ["request_time", "float"]

}

}

output {

stdout {}

if "access" in [tags] {

elasticsearch {

hosts => "http://10.0.0.51:9200"

manage_template => false

index => "nginx_access-%{+yyyy.MM}"

}

}

if "error" in [tags] {

elasticsearch {

hosts => "http://10.0.0.51:9200"

manage_template => false

index => "nginx_error-%{+yyyy.MM}"

}

}

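}

Using Kafka as the cache / drawing charts in Kibana

1. Using Kafka as the cache for ELK

#============ Note: ES and Kibana must be started first; ZooKeeper and Kafka also need a Java environment =============#

0. Configure SSH keys and hosts resolution # the hosts entries are needed on all three machines

[root@db01 ~]# cat >>/etc/hosts<<EOF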

10.0.0.51 db01

10.0.0.52 db02

10.0.0.53 db03

EOF
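Writing to /etc/hosts repeatedly can duplicate entries or wipe the localhost lines if the file is overwritten. A minimal sketch of an idempotent alternative follows; the helper name add_host_entry is hypothetical, and the file argument exists so the function can be tried on a scratch file first.

```shell
# add_host_entry IP NAME [FILE]: append "IP NAME" to FILE (default /etc/hosts)
# unless a line already maps IP to NAME. Existing lines are never touched.
add_host_entry() {
    ip=$1
    name=$2
    file=${3:-/etc/hosts}
    awk -v ip="$ip" -v name="$name" '
        $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
        END { exit !found }
    ' "$file" 2>/dev/null || printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Hypothetical usage on each of the three machines:
#   add_host_entry 10.0.0.51 db01
#   add_host_entry 10.0.0.52 db02
#   add_host_entry 10.0.0.53 db03
```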

# Generate a key pair and distribute the public key

[root@db01 ~]# ssh-keygen

[root@db01 ~]# ssh-copy-id 10.0.0.52

[root@db01 ~]# ssh-copy-id 10.0.0.53

1. Install ZooKeeper

### On db01

[root@db01 ~]# yum install -y rsync

[root@db01 ~]# cd /data/soft

[root@db01 ~]# tar zxf zookeeper-3.4.11.tar.gz -C /opt/

[root@db01 ~]# ln -s /opt/zookeeper-3.4.11/ /opt/zookeeper

[root@db01 ~]# mkdir -p /data/zookeeper

[root@db01 ~]# cat >/opt/zookeeper/conf/zoo.cfg<<EOF

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/data/zookeeper

clientPort=2181

server.1=10.0.0.51:2888:3888

server.2=10.0.0.52:2888:3888

server.3=10.0.0.53:2888:3888

EOF

[root@db01 ~]# echo "1" > /data/zookeeper/myid

[root@db01 ~]# cat /data/zookeeper/myid

1

[root@db01 ~]# rsync -avz /opt/zookeeper* 10.0.0.52:/opt/

[root@db01 ~]# rsync -avz /opt/zookeeper* 10.0.0.53:/opt/

### On db02

[root@db02 ~]# yum install -y rsync

[root@db02 ~]# mkdir -p /data/zookeeper

[root@db02 ~]# echo "2" > /data/zookeeper/myid

[root@db02 ~]# cat /data/zookeeper/myid

2

### On db03

[root@db03 ~]# yum install -y rsync

[root@db03 ~]# mkdir -p /data/zookeeper

[root@db03 ~]# echo "3" > /data/zookeeper/myid

[root@db03 ~]# cat /data/zookeeper/myid

3
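Each myid has to match the N of that node's server.N line in zoo.cfg, which is easy to get wrong when typed by hand on three machines. As a sketch under that assumption, the id can be derived from zoo.cfg itself; the helper name myid_for_ip is hypothetical.

```shell
# myid_for_ip IP: read zoo.cfg on stdin and print the N of the
# "server.N=IP:port:port" line whose address equals IP exactly.
myid_for_ip() {
    awk -F'[=:]' -v ip="$1" '
        $1 ~ /^server\.[0-9]+$/ && $2 == ip {
            sub(/^server\./, "", $1)
            print $1
        }
    '
}

# Hypothetical usage on db02:
#   myid_for_ip 10.0.0.52 < /opt/zookeeper/conf/zoo.cfg > /data/zookeeper/myid
```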

2. Start ZooKeeper (on all three machines)

[root@db01 ~]# /opt/zookeeper/bin/zkServer.sh start

[root@db02 ~]# /opt/zookeeper/bin/zkServer.sh start

[root@db03 ~]# /opt/zookeeper/bin/zkServer.sh start

3. Check that startup succeeded (run on all three machines)

[root@db01 ~]# /opt/zookeeper/bin/zkServer.sh status

[root@db02 ~]# /opt/zookeeper/bin/zkServer.sh status

[root@db03 ~]# /opt/zookeeper/bin/zkServer.sh status

# If startup is normal, the Mode lines across the three machines should show

2 followers

1 leader
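The role can be pulled out of the status output so that it is easier to compare across machines. A small sketch, with a hypothetical helper name zk_mode, assuming the status output contains a line of the form "Mode: follower" or "Mode: leader":

```shell
# zk_mode: read `zkServer.sh status` output on stdin and print only the
# role that follows "Mode:" (for example "leader" or "follower").
zk_mode() {
    sed -n 's/^Mode:[[:space:]]*//p'
}

# Hypothetical usage on each machine:
#   /opt/zookeeper/bin/zkServer.sh status 2>/dev/null | zk_mode
```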

4. Test that the ZooKeeper nodes communicate correctly

On one node, create a znode:

/opt/zookeeper/bin/zkCli.sh -server 10.0.0.51:2181

create /test "hello"

On another node, check whether the znode is visible:

/opt/zookeeper/bin/zkCli.sh -server 10.0.0.52:2181

get /test

5. Install Kafka

### On db01

[root@db01 ~]# cd /data/soft/

[root@db01 ~]# tar zxf kafka_2.11-1.0.0.tgz -C /opt/

[root@db01 ~]# ln -s /opt/kafka_2.11-1.0.0/ /opt/kafka

[root@db01 ~]# mkdir /opt/kafka/logs

[root@db01 ~]# cat >/opt/kafka/config/server.properties<<EOF

broker.id=1

listeners=PLAINTEXT://10.0.0.51:9092

num.network.threads=3

num.io.threads=8

socket.send.buffer.bytes=102400

socket.receive.buffer.bytes=102400

socket.request.max.bytes=104857600

log.dirs=/opt/kafka/logs

num.partitions=1

num.recovery.threads.per.data.dir=1

offsets.topic.replication.factor=1

transaction.state.log.replication.factor=1

transaction.state.log.min.isr=1

log.retention.hours=24

log.segment.bytes=1073741824

log.retention.check.interval.ms=300000

zookeeper.connect=10.0.0.51:2181,10.0.0.52:2181,10.0.0.53:2181

zookeeper.connection.timeout.ms=6000

group.initial.rebalance.delay.ms=0

EOF

[root@db01 ~]# rsync -avz /opt/kafka* 10.0.0.52:/opt/

[root@db01 ~]# rsync -avz /opt/kafka* 10.0.0.53:/opt/

### On db02

[root@db02 ~]# sed -i "s#10.0.0.51:9092#10.0.0.52:9092#g" /opt/kafka/config/server.properties

[root@db02 ~]# sed -i "s#broker.id=1#broker.id=2#g" /opt/kafka/config/server.properties

### On db03

[root@db03 ~]# sed -i "s#10.0.0.51:9092#10.0.0.53:9092#g" /opt/kafka/config/server.properties

[root@db03 ~]# sed -i "s#broker.id=1#broker.id=3#g" /opt/kafka/config/server.properties
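The two per-node substitutions can be folded into one filter that takes the broker id and the node IP as arguments. This is only a sketch: render_broker is a hypothetical name, and it assumes the file has exactly the broker.id and listeners=PLAINTEXT:// lines shown above.

```shell
# render_broker ID IP: read server.properties on stdin and print it with
# broker.id set to ID and the PLAINTEXT listener host set to IP.
# All other lines, including zookeeper.connect, pass through unchanged.
render_broker() {
    sed -e "s#^broker\.id=.*#broker.id=$1#" \
        -e "s#^listeners=PLAINTEXT://[^:]*:#listeners=PLAINTEXT://$2:#"
}

# Hypothetical usage on db02 (write to a temporary file, then replace):
#   render_broker 2 10.0.0.52 < /opt/kafka/config/server.properties > /tmp/server.properties
```

Anchoring both patterns to the start of the line keeps the zookeeper.connect list untouched even though it contains the same IP addresses.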

6. Start Kafka in the foreground first to test (on all three machines)

[root@db01 ~]# /opt/kafka/bin/kafka-server-start.sh /opt/kafka/config/server.properties

[root@db02 ~]# /opt/kafka/bin/kafka-server-start.sh /opt/kafka/config/server.properties

[root@db03 ~]# /opt/kafka/bin/kafka-server-start.sh /opt/kafka/config/server.properties

7. Check that it is running (run on all three machines)

jps
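The jps listing can be checked mechanically for the expected process names. A minimal sketch, with a hypothetical helper name jps_has, assuming the default two-column "PID Name" output of jps:

```shell
# jps_has NAME: read `jps` output on stdin and succeed if a JVM whose
# main class name equals NAME is listed.
jps_has() {
    awk -v name="$1" '$2 == name { found = 1 } END { exit !found }'
}

# Hypothetical usage:
#   jps | jps_has Kafka && echo "Kafka is running"
#   jps | jps_has QuorumPeerMain && echo "ZooKeeper is running"
```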

8. With Kafka running in the foreground, test sending messages

Create a topic

/opt/kafka/bin/kafka-topics.sh --create --zookeeper 10.0.0.51:2181,10.0.0.52:2181,10.0.0.53:2181 --partitions 3 --replication-factor 3 --topic messagetest

List all topics

/opt/kafka/bin/kafka-topics.sh --list --zookeeper 10.0.0.51:2181,10.0.0.52:2181,10.0.0.53:2181

Send test messages

/opt/kafka/bin/kafka-console-producer.sh --broker-list 10.0.0.51:9092,10.0.0.52:9092,10.0.0.53:9092 --topic messagetest

Receive them on another node

/opt/kafka/bin/kafka-console-consumer.sh --zookeeper 10.0.0.51:2181,10.0.0.52:2181,10.0.0.53:2181 --topic messagetest --from-beginning

9. Once the test succeeds, Kafka can run in the background (on all three machines)

Press Ctrl+C to stop the foreground Kafka, then start it as a daemon

[root@db01 ~]# /opt/kafka/bin/kafka-server-start.sh -daemon /opt/kafka/config/server.properties

[root@db02 ~]# /opt/kafka/bin/kafka-server-start.sh -daemon /opt/kafka/config/server.properties

[root@db03 ~]# /opt/kafka/bin/kafka-server-start.sh -daemon /opt/kafka/config/server.properties

10. Configure filebeat

[root@db01 ~]# cat >/etc/filebeat/filebeat.yml <<EOF

filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /var/log/nginx/access.log
  json.keys_under_root: true
  json.overwrite_keys: true
  tags: ["access"]
- type: log
  enabled: true
  paths:
    - /var/log/nginx/error.log
  tags: ["error"]

output.kafka:
  hosts: ["10.0.0.51:9092", "10.0.0.52:9092", "10.0.0.53:9092"]
  topic: 'filebeat'

setup.template.name: "nginx"
setup.template.pattern: "nginx_*"
setup.template.enabled: false
setup.template.overwrite: true

EOF

Restart filebeat

[root@db01 ~]# systemctl restart filebeat

11. Send a request and check whether Kafka received the log

[root@db01 ~]# curl 10.0.0.51

# List the topics and look for filebeat

[root@db01 ~]# /opt/kafka/bin/kafka-topics.sh --list --zookeeper 10.0.0.51:2181,10.0.0.52:2181,10.0.0.53:2181

# Consume the messages sent to the filebeat topic

[root@db01 ~]# /opt/kafka/bin/kafka-console-consumer.sh --zookeeper 10.0.0.51:2181,10.0.0.52:2181,10.0.0.53:2181 --topic filebeat --from-beginning

12. logstash configuration file

[root@db01 ~]# cat > /etc/logstash/conf.d/kafka.conf<<EOF

input {

kafka{

bootstrap_servers=>["10.0.0.51:9092,10.0.0.52:9092,10.0.0.53:9092"]

topics=>["filebeat"]

group_id=>"logstash"

codec => "json"

}

}

filter {

mutate {

convert => ["upstream_time", "float"]

convert => ["request_time", "float"]

}

}

output {

stdout {}

if "access" in [tags] {

elasticsearch {

hosts => "http://10.0.0.51:9200"

manage_template => false

index => "nginx_access-%{+yyyy.MM}"

}

}

if "error" in [tags] {

elasticsearch {

hosts => "http://10.0.0.51:9200"

manage_template => false

index => "nginx_error-%{+yyyy.MM}"

}

}

}

EOF

13. Start logstash in the foreground to test

# First delete the indices previously created in ES

[root@db01 ~]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/kafka.conf

Generate an access log entry

[root@db01 ~]# curl 127.0.0.1

Test:

Original data:

1. Stop ZooKeeper on db03

# Stop ZooKeeper

[root@db03 ~]# /opt/zookeeper/bin/zkServer.sh stop

ZooKeeper JMX enabled by default

Using config: /opt/zookeeper/bin/../conf/zoo.cfg

Stopping zookeeper ... STOPPED

# Check jps; there were 3 processes before

[root@db03 ~]# jps

71553 Kafka

72851 Jps

# Generate test data ==== run on db01

[root@db01 ~]# curl 127.0.0.1

db01-www

# Log in to es-head to check

2. Stop ZooKeeper on db02

# Check the jps output

[root@db02 ~]# jps

74467 QuorumPeerMain

78053 Jps

76628 Kafka

# Stop ZooKeeper on db02

[root@db02 ~]# /opt/zookeeper/bin/zkServer.sh stop

ZooKeeper JMX enabled by default

Using config: /opt/zookeeper/bin/../conf/zoo.cfg

Stopping zookeeper ... STOPPED

# Check jps; two processes remain

[root@db02 ~]# jps

78210 Jps

76628 Kafka

# Generate test data ==== run on db01

[root@db01 ~]# curl 127.0.0.1

db01-www

# Log in to es-head to check

3. Stop Kafka on db01

# Check the jps output

[root@db01 ~]# jps

76902 Kafka

48472 Logstash

78089 Logstash

79034 Jps

74509 QuorumPeerMain

# Stop Kafka on db01

[root@db01 ~]# /opt/kafka/bin/kafka-server-stop.sh

# Check the jps output

[root@db01 ~]# jps

79251 Jps

48472 Logstash

78089 Logstash

74509 QuorumPeerMain

# Generate test data ==== run on db01

[root@db01 ~]# curl 127.0.0.1

db01-www

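# Log in to es-head to check

# Summary of the Kafka experiment

1. Prerequisites

- Kafka and ZooKeeper both run on the JVM, so a Java environment is required

- Both are resource-hungry, so make sure there is enough memory

2. Notes on installing ZooKeeper

- The myid on each machine must be different and must match the server.N id in the configuration file

- After starting, check that the roles are leader and follower

- Test writing data on one node and reading it from another

3. Notes on installing Kafka

- Kafka depends on ZooKeeper, so if ZooKeeper is unhealthy Kafka cannot work

- The Kafka configuration file must list the IPs of all ZooKeeper nodes

- In the Kafka configuration file, listeners must use the node's own IP address

- In the Kafka configuration file, broker.id must be unique per node; in this setup it is set to the same value as the node's ZooKeeper myid

- Kafka has started successfully only when "started" appears in its log

4. Testing ZooKeeper and Kafka

- Send messages from one end

- The other two ends receive them in real time

5. Configuring filebeat

- The output must list the IPs of all Kafka nodes

6. Configuring logstash

- The input must list the IPs of all Kafka nodes; do not forget the []

- Start it in the background only after the foreground test succeeds

7. Destructive test results

- In this test, logs were still collected with only 1 ZooKeeper node and the remaining Kafka nodes running. A 3-node ZooKeeper ensemble needs 2 nodes for a quorum, so this result should not be treated as a general guarantee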
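For planning how many nodes may be lost, the standard ZooKeeper majority rule is more reliable than a single test run: an ensemble of n servers needs floor(n/2) + 1 of them to form a quorum. The helper names below are hypothetical; the arithmetic is the rule itself.

```shell
# zk_quorum N: number of servers that must be up in an ensemble of N.
zk_quorum() {
    echo $(( $1 / 2 + 1 ))
}

# zk_tolerated_failures N: number of servers that can fail while the
# ensemble of N still has a quorum.
zk_tolerated_failures() {
    echo $(( $1 - ($1 / 2 + 1) ))
}

# Example: zk_quorum 3 prints 2, zk_tolerated_failures 3 prints 1.
```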

2. Displaying charts in Kibana
