Kafka Streams: read the messages in one topic, process them, and write the results to another topic

Official guide (with examples): the Kafka Streams documentation on the Apache Kafka website.

1. Writing the messages of one topic into another topic

No processing at all: the messages are simply read from one topic and written unchanged to another topic.

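Create the topics. The commands below use the --zookeeper option of older Kafka releases. Kafka 3.0 removed that option, so on newer versions pass --bootstrap-server with the broker address (e.g. 192.168.XXX.100:9092) instead.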

kafka-topics.sh --zookeeper hadoop100:2181 --create --topic mystreamingin --partitions 1 --replication-factor 1

kafka-topics.sh --zookeeper hadoop100:2181 --create --topic mystreamingout --partitions 1 --replication-factor 1

Code

package Kafka.stream;

import org.apache.kafka.common.serialization.Serdes;
import org.apache.kafka.streams.KafkaStreams;
import org.apache.kafka.streams.StreamsBuilder;
import org.apache.kafka.streams.StreamsConfig;
import org.apache.kafka.streams.Topology;
import java.util.Properties;
import java.util.concurrent.CountDownLatch;

public class KafkaStreamDemo {
    public static void main(String[] args) {
        Properties properties = new Properties();
        properties.put(StreamsConfig.APPLICATION_ID_CONFIG, "myStreaming");
        properties.put(StreamsConfig.BOOTSTRAP_SERVERS_CONFIG, "192.168.XXX.100:9092");
        properties.put(StreamsConfig.DEFAULT_KEY_SERDE_CLASS_CONFIG, Serdes.String().getClass());
        properties.put(StreamsConfig.DEFAULT_VALUE_SERDE_CLASS_CONFIG, Serdes.String().getClass());
        // Create the stream builder
        StreamsBuilder builder = new StreamsBuilder();
        // Read every record from topic mystreamingin and write it unchanged to mystreamingout
        builder.stream("mystreamingin").to("mystreamingout"); // this line is the core of the program
        // Build the topology
        Topology topo = builder.build();
        // Pass the topology and the configuration to KafkaStreams
        KafkaStreams kafkaStreams = new KafkaStreams(topo, properties);
        // Standard boilerplate: keep main alive until the JVM shuts down, then close the client cleanly
        CountDownLatch latch = new CountDownLatch(1);
        Runtime.getRuntime().addShutdownHook(new Thread("streams-shutdown-hook") {
            @Override
            public void run() {
                kafkaStreams.close();
                latch.countDown();
            }
        });
        kafkaStreams.start();
        try {
            latch.await();
        } catch (InterruptedException e) {
            e.printStackTrace();
        }
        System.exit(0);
    }
}
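The boilerplate at the end of main keeps the JVM alive while the streams threads work. When the JVM shuts down, it closes the client and then releases the main thread. The sketch below reproduces that latch pattern with the JDK alone, so it runs without a broker. `FakeStreams` and `LatchShutdownSketch` are hypothetical stand-ins, not Kafka classes. `shutdown()` does what the demo's shutdown hook does: close first, then count down. A helper thread replaces the real `addShutdownHook` so that the sketch ends on its own.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;

public class LatchShutdownSketch {

    // Hypothetical stand-in for KafkaStreams: it only records whether it is running.
    static class FakeStreams implements AutoCloseable {
        private volatile boolean running;

        void start() { running = true; }

        boolean isRunning() { return running; }

        @Override
        public void close() { running = false; }
    }

    private final FakeStreams streams = new FakeStreams();
    private final CountDownLatch latch = new CountDownLatch(1);

    FakeStreams streams() { return streams; }

    // Corresponds to kafkaStreams.start() in main().
    void start() { streams.start(); }

    // Body of the shutdown hook: close the client first, then release the waiting main thread.
    void shutdown() {
        streams.close();
        latch.countDown();
    }

    // Corresponds to latch.await() in main(), with a timeout so the sketch cannot hang.
    boolean awaitShutdown(long timeoutMs) {
        try {
            return latch.await(timeoutMs, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // keep the interrupt status visible to callers
            return false;
        }
    }

    public static void main(String[] args) {
        LatchShutdownSketch app = new LatchShutdownSketch();
        app.start();
        // The demo registers shutdown() via Runtime.getRuntime().addShutdownHook(...);
        // here a helper thread calls it after 100 ms so the sketch ends on its own.
        new Thread(() -> {
            try {
                Thread.sleep(100);
            } catch (InterruptedException ignored) {
                // fall through and shut down anyway
            }
            app.shutdown();
        }, "shutdown-trigger").start();
        boolean released = app.awaitShutdown(5_000);
        System.out.println("released=" + released + ", running=" + app.streams().isRunning());
    }
}
```

Running it prints `released=true, running=false`. The order inside `shutdown()` matters: because the client is closed before the latch is released, main never reaches `System.exit` while the client is still running.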

2. Summing numbers

Create two topics

kafka-topics.sh --zookeeper hadoop100:2181 --create --topic sumInput --partitions 1 --replication-factor 1

kafka-topics.sh --zookeeper hadoop100:2181 --create --topic sumOutput --partitions 1 --replication-factor 1

package Kafka.stream;

import org.apache.kafka.clients.consumer.ConsumerConfig;
import org.apache.kafka.common.serialization.Serdes;
import org.apache.kafka.streams.*;
import org.apache.kafka.streams.kstream.KStream;
import org.apache.kafka.streams.kstream.KTable;
import java.util.Properties;
import java.util.concurrent.CountDownLatch;

public class SumStreaming {
    public static void main(String[] args) {
        Properties properties = new Properties();
        properties.put(StreamsConfig.APPLICATION_ID_CONFIG, "sumStreaming");
        properties.put(StreamsConfig.BOOTSTRAP_SERVERS_CONFIG, "192.168.XXX.100:9092");
        properties.put(StreamsConfig.DEFAULT_KEY_SERDE_CLASS_CONFIG, Serdes.String().getClass());
        properties.put(StreamsConfig.DEFAULT_VALUE_SERDE_CLASS_CONFIG, Serdes.String().getClass());
        // Read sumInput from the beginning when the application has no committed offsets yet
        properties.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "earliest");
        // Commit progress (and flush the KTable cache) every 3 seconds
        properties.put(StreamsConfig.COMMIT_INTERVAL_MS_CONFIG, 3000);
        // Ignored by Kafka Streams, which always disables consumer auto-commit itself
        properties.put(ConsumerConfig.ENABLE_AUTO_COMMIT_CONFIG, false);
        StreamsBuilder builder = new StreamsBuilder();
        KStream<String, String> source = builder.stream("sumInput");
        // Give every record the same key "sum" so all numbers fall into one group,
        // then keep a running total (stored as a String to match the default serde)
        KTable<String, String> sum1 = source
                .map((key, value) -> new KeyValue<String, String>("sum", value))
                .groupByKey()
                .reduce((x, y) -> {
                    int sum = Integer.parseInt(x) + Integer.parseInt(y);
                    System.out.println(sum);
                    return String.valueOf(sum);
                });
        // Write the updates of the running total to sumOutput
        sum1.toStream().to("sumOutput");
        Topology topo = builder.build();
        KafkaStreams kafkaStreams = new KafkaStreams(topo, properties);
        CountDownLatch latch = new CountDownLatch(1);
        Runtime.getRuntime().addShutdownHook(new Thread("streams-shutdown-hook") {
            @Override
            public void run() {
                kafkaStreams.close();
                latch.countDown();
            }
        });
        kafkaStreams.start();
        try {
            latch.await();
        } catch (InterruptedException e) {
            e.printStackTrace();
        }
        System.exit(0);
    }
}
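In this topology every record is re-keyed to the constant key "sum", so `groupByKey()` puts all numbers into a single group and `reduce()` folds them into a running total. The plain-Java sketch below models that fold without Kafka. The class and method names are hypothetical. It returns every value the KTable takes on. In Kafka Streams the first value for a key is stored without calling the reducer, so the `println` inside `reduce()` only fires from the second number onward. The model follows the same rule.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public class RunningSumSketch {

    // Models map(-> "sum") + groupByKey() + reduce(): returns every value the KTable takes.
    static List<String> runningSums(List<String> values) {
        Map<String, String> store = new HashMap<>(); // stands in for the reduce() state store
        List<String> updates = new ArrayList<>();
        for (String value : values) {
            String key = "sum"; // every record is re-keyed to the same key
            String previous = store.get(key);
            // The first value for a key is stored as-is; later values go through the reducer,
            // which throws NumberFormatException on non-numeric input, like the real app.
            String next = (previous == null)
                    ? value
                    : String.valueOf(Integer.parseInt(previous) + Integer.parseInt(value));
            store.put(key, next);
            updates.add(next);
        }
        return updates;
    }

    public static void main(String[] args) {
        System.out.println(runningSums(Arrays.asList("1", "2", "3", "4"))); // prints [1, 3, 6, 10]
    }
}
```

Because all records share one key, the whole sum is computed by a single task. That is fine for a demo, but the work cannot be spread across partitions.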

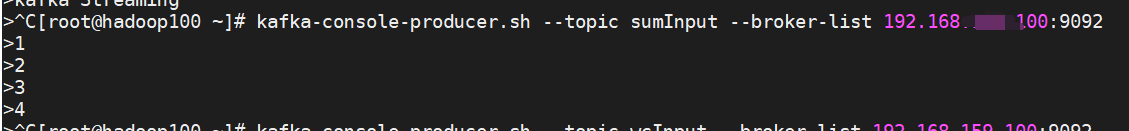

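Results:

Data entered in the producer:

Data received by the consumer:

Because the numbers were entered quickly, the output does not contain one sum per input. The KTable produced by reduce() sits behind a record cache that keeps only the latest total per key. The cache is flushed downstream on every commit (every 3000 ms here, set by COMMIT_INTERVAL_MS_CONFIG) or when it fills up, so several inputs can collapse into a single output value. The println inside reduce() still shows each intermediate sum.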
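The sketch below is a simplified plain-Java model of that caching behaviour. It is not Kafka's implementation, and `CacheFlushSketch`, `Update`, and `forwardedOnFlush` are hypothetical names. Each inner list holds the KTable updates produced within one commit interval. Within an interval, a newer value for a key overwrites the older one, and only the survivors are forwarded at the flush. To see every update in the real application, the cache can be disabled by setting `cache.max.bytes.buffering` to 0 (renamed `statestore.cache.max.bytes` in newer releases).

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class CacheFlushSketch {

    // One KTable update: a key and its new aggregate value.
    static final class Update {
        final String key;
        final String value;

        Update(String key, String value) {
            this.key = key;
            this.value = value;
        }
    }

    // Each inner list holds the updates produced within one commit interval.
    // The cache keeps only the newest value per key; the flush at the end of the
    // interval forwards what is left, as "key=value" strings.
    static List<String> forwardedOnFlush(List<List<Update>> intervals) {
        List<String> forwarded = new ArrayList<>();
        for (List<Update> interval : intervals) {
            Map<String, String> cache = new LinkedHashMap<>();
            for (Update update : interval) {
                cache.put(update.key, update.value); // newer value overwrites the older one
            }
            for (Map.Entry<String, String> entry : cache.entrySet()) {
                forwarded.add(entry.getKey() + "=" + entry.getValue());
            }
        }
        return forwarded;
    }

    public static void main(String[] args) {
        // Running totals 1, 3 and 6 are produced within one commit interval, 10 in the next one.
        List<List<Update>> intervals = Arrays.asList(
                Arrays.asList(new Update("sum", "1"), new Update("sum", "3"), new Update("sum", "6")),
                Arrays.asList(new Update("sum", "10")));
        System.out.println(forwardedOnFlush(intervals)); // prints [sum=6, sum=10]
    }
}
```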

3. WordCount

Count how many times each word occurs

Create two topics

kafka-topics.sh --zookeeper hadoop100:2181 --create --topic wcInput --partitions 1 --replication-factor 1

kafka-topics.sh --zookeeper hadoop100:2181 --create --topic wcOutput --partitions 1 --replication-factor 1

Code

package Kafka.stream;

import org.apache.kafka.clients.consumer.ConsumerConfig;
import org.apache.kafka.common.serialization.Serdes;
import org.apache.kafka.streams.*;
import org.apache.kafka.streams.kstream.KStream;
import org.apache.kafka.streams.kstream.KTable;
import java.util.Arrays;
import java.util.Properties;
import java.util.concurrent.CountDownLatch;

public class WordCount {
    public static void main(String[] args) {
        Properties properties = new Properties();
        // Use an application.id of its own: reusing "sumStreaming" would make this app share
        // the consumer group and internal topics of SumStreaming
        properties.put(StreamsConfig.APPLICATION_ID_CONFIG, "wordCount");
        properties.put(StreamsConfig.BOOTSTRAP_SERVERS_CONFIG, "192.168.XXX.100:9092");
        properties.put(StreamsConfig.DEFAULT_KEY_SERDE_CLASS_CONFIG, Serdes.String().getClass());
        properties.put(StreamsConfig.DEFAULT_VALUE_SERDE_CLASS_CONFIG, Serdes.String().getClass());
        properties.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "earliest");
        properties.put(StreamsConfig.COMMIT_INTERVAL_MS_CONFIG, 3000);
        // Ignored by Kafka Streams, which always disables consumer auto-commit itself
        properties.put(ConsumerConfig.ENABLE_AUTO_COMMIT_CONFIG, false);
        StreamsBuilder builder = new StreamsBuilder();
        KStream<String, String> source = builder.stream("wcInput");
        KTable<String, Long> res = source
                // Split each line into words; "\\s+" keeps repeated spaces from producing empty words
                .flatMapValues(value -> Arrays.asList(value.trim().split("\\s+")))
                // A blank line still yields one empty token, so drop it
                .filter((key, word) -> !word.isEmpty())
                // Re-key each record by the word, then count occurrences per word
                .groupBy((key, word) -> word)
                .count();
        // For debugging, print the counts instead of writing them to a topic:
        // res.toStream().foreach((key, value) -> System.out.println(key + ":" + value));
        // Write "word:count" strings to wcOutput, keyed by the word
        res.toStream()
                .mapValues((key, value) -> key + ":" + value)
                .to("wcOutput");
        Topology topo = builder.build();
        // Pass the topology and the configuration to KafkaStreams
        KafkaStreams kafkaStreams = new KafkaStreams(topo, properties);
        CountDownLatch latch = new CountDownLatch(1);
        Runtime.getRuntime().addShutdownHook(new Thread("streams-shutdown-hook") {
            @Override
            public void run() {
                kafkaStreams.close();
                latch.countDown();
            }
        });
        kafkaStreams.start();
        try {
            latch.await();
        } catch (InterruptedException e) {
            e.printStackTrace();
        }
        System.exit(0);
    }
}
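To check the word-count logic without a broker, the plain-Java sketch below applies the same steps to a list of input lines:

1. Trim each line and split it on whitespace.
2. Drop empty tokens.
3. Count per word.
4. Format each new count as `word:count`.

The class and method names are hypothetical. The sketch splits on `"\\s+"` because `"\\s"` turns a double space into an empty-string "word". Each word occurrence produces one update, which is what the topology forwards when the KTable cache does not coalesce updates.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

public class WordCountSketch {

    // Mirrors flatMapValues(split) -> filter(non-empty) -> groupBy(word) -> count() -> "word:count".
    static List<String> countUpdates(List<String> lines) {
        Map<String, Long> counts = new HashMap<>(); // per-word state, like the count() store
        List<String> updates = new ArrayList<>();
        for (String line : lines) {
            for (String word : line.trim().split("\\s+")) {
                if (word.isEmpty()) {
                    continue; // a blank line yields a single empty token
                }
                long count = counts.merge(word, 1L, Long::sum); // increment this word's count
                updates.add(word + ":" + count);
            }
        }
        return updates;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList("hello kafka", "hello  streams", "   ");
        System.out.println(countUpdates(lines)); // prints [hello:1, kafka:1, hello:2, streams:1]
    }
}
```

Every update carries the word's new total, so a consumer of wcOutput that keeps only the latest value per key ends up with the final counts.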

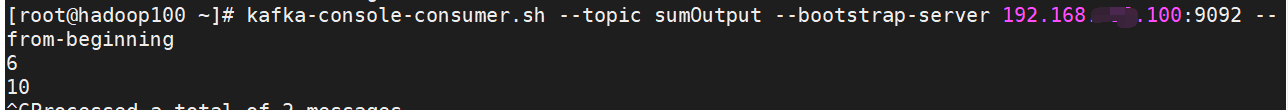

Results:

Input:

Output:

