Framework

Given a swarm of particles and their initial positions, we define a fitness function (also called the objective function) that measures how good each particle's position is as a candidate solution. In other words, every particle represents a feasible solution to our optimization problem.

Preparation: we declare two variables named pbest and gbest. pbest records the best position each individual particle has visited, and gbest records the best position any particle in the swarm has visited.

First, we calculate the fitness value of each particle, using the fitness function we defined.

Second, we check whether pbest and gbest need to be updated.

Third, we update each particle's velocity and position. The velocity update has three components: inertia, the cognitive component, and the social component. Put simply, inertia carries over the particle's previous velocity, the cognitive component pulls the particle toward the best position it has visited itself, and the social component pulls it toward the best position the swarm has visited.

Fourth, we go to the next iteration, that is, back to the first step.
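In symbols, the third step is commonly written as below. The notation is an assumption added for illustration: w is the inertia weight, c_1 and c_2 are the cognitive and social constants, r_1 and r_2 are uniform random numbers in [0, 1) drawn at each update, and x_i^t and v_i^t are the position and velocity of particle i at iteration t.

```latex
\begin{aligned}
v_i^{t+1} &= w\,v_i^{t} + c_1 r_1\,\bigl(pbest_i - x_i^{t}\bigr) + c_2 r_2\,\bigl(gbest - x_i^{t}\bigr) \\
x_i^{t+1} &= x_i^{t} + v_i^{t+1}
\end{aligned}
```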

PSO never guarantees that it will find a global optimum, but it is often efficient on high-dimensional optimization problems.
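The four steps can be sketched as a short loop. This is a minimal illustration under assumed settings, not the code from either example below: the sphere objective, the name `minimize_pso`, the parameter defaults, and the search bound are all hypothetical choices.

```python
import random


def sphere(position):
    # Hypothetical test objective: sum of squares, minimized at the origin.
    return sum(coordinate ** 2 for coordinate in position)


def minimize_pso(fitness, dim=2, n_particles=20, n_iterations=100,
                 w=0.5, c1=1.5, c2=1.5, bound=10.0, seed=0):
    rng = random.Random(seed)
    positions = [[rng.uniform(-bound, bound) for _ in range(dim)]
                 for _ in range(n_particles)]
    velocities = [[0.0] * dim for _ in range(n_particles)]
    pbest = [list(p) for p in positions]  # copies, not references
    pbest_fitness = [float('inf')] * n_particles
    gbest, gbest_fitness = None, float('inf')

    for _ in range(n_iterations):
        # Steps 1 and 2: evaluate fitness, then refresh pbest and gbest.
        for i, position in enumerate(positions):
            value = fitness(position)
            if value < pbest_fitness[i]:
                pbest_fitness[i] = value
                pbest[i] = list(position)
            if value < gbest_fitness:
                gbest_fitness = value
                gbest = list(position)
        # Step 3: update velocity and position, one dimension at a time.
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                velocities[i][d] = (w * velocities[i][d]
                                    + c1 * r1 * (pbest[i][d] - positions[i][d])
                                    + c2 * r2 * (gbest[d] - positions[i][d]))
                positions[i][d] += velocities[i][d]
        # Step 4: the loop continues with the next iteration.
    return gbest, gbest_fitness


best_position, best_value = minimize_pso(sphere)
print(best_position, best_value)
```

The returned value is the best fitness seen during the run, so it can only improve or stay the same from one iteration to the next.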

Example 1

This simple example comes from Chapter 7 of Grokking Artificial Intelligence Algorithms.

import math
import random


# The function that is being optimized, namely the Booth function.
# Reference: https://en.wikipedia.org/wiki/Test_functions_for_optimization
def calculate_booth(x, y):
    return math.pow(x + 2 * y - 7, 2) + math.pow(2 * x + y - 5, 2)


# Particle Swarm Optimization (PSO)
# Representing the concept of a particle:
# - Position: The position of the particle in all dimensions.
# - Best position: The best position found using the fitness function.
# - Velocity: The current velocity of the particle's movement.
class Particle:

    # Initialize a particle, including its position, inertia, cognitive constant, and social constant
    def __init__(self, x, y, inertia, cognitive_constant, social_constant):
        self.x = x
        self.y = y
        self.fitness = math.inf
        self.velocity = 0
        # best_x, best_y: the best position this particle has visited
        self.best_x = x
        self.best_y = y
        self.best_fitness = math.inf
        self.inertia = inertia
        self.cognitive_constant = cognitive_constant
        self.social_constant = social_constant
        self.update_fitness()

    # Get the fitness of the particle
    def get_fitness(self):
        return self.fitness

    # Update the particle's fitness based on the function we're optimizing for
    def update_fitness(self):
        self.fitness = calculate_booth(self.x, self.y)
        if self.fitness < self.best_fitness:
            self.best_fitness = self.fitness
            self.best_x = self.x
            self.best_y = self.y

    # Calculate the inertia component for the particle
    # inertia * current velocity
    @staticmethod
    def calculate_inertia(inertia, current_velocity):
        return inertia * current_velocity

    # Calculate the cognitive component for the particle
    # cognitive acceleration * distance between the particle's best position and its current position
    # lqq: this does not need to be an instance method; it could be declared
    # as a static method with @staticmethod.
    def calculate_cognitive(self,
                            cognitive_constant,
                            cognitive_random,
                            particle_best_position_x,
                            particle_best_position_y,
                            particle_current_position_x,
                            particle_current_position_y):
        cognitive_acceleration = self.calculate_acceleration(cognitive_constant, cognitive_random)
        cognitive_distance = math.sqrt(((particle_best_position_x-particle_current_position_x)**2)
                                       + ((particle_best_position_y-particle_current_position_y)**2))
        return cognitive_acceleration * cognitive_distance

    # Calculate the social component for the particle
    # social acceleration * distance between the swarm's best position and the current position
    def calculate_social(self,
                         social_constant,
                         social_random,
                         swarm_best_position_x,
                         swarm_best_position_y,
                         particle_current_position_x,
                         particle_current_position_y):
        social_acceleration = self.calculate_acceleration(social_constant, social_random)
        social_distance = math.sqrt(((swarm_best_position_x-particle_current_position_x)**2)
                                    + ((swarm_best_position_y-particle_current_position_y)**2))
        return social_acceleration * social_distance

    # Calculate acceleration for the particle
    @staticmethod
    def calculate_acceleration(constant, random_factor):
        return constant * random_factor

    # Calculate the new velocity for the particle
    @staticmethod
    def calculate_updated_velocity(inertia, cognitive, social):
        return inertia + cognitive + social

    # Calculate the new position for the particle
    @staticmethod
    def calculate_position(current_position_x, current_position_y, updated_velocity):
        return current_position_x + updated_velocity, current_position_y + updated_velocity

    # Perform the update on inertia component, cognitive component, social component, velocity, and position
    def update(self, swarm_best_x, swarm_best_y):
        i = self.calculate_inertia(self.inertia, self.velocity)
        print('Inertia: ', i)
        # Arguments follow the parameter order: best position first, then current position
        c = self.calculate_cognitive(self.cognitive_constant, random.random(), self.best_x, self.best_y, self.x, self.y)
        print('Cognitive: ', c)
        s = self.calculate_social(self.social_constant, random.random(), swarm_best_x, swarm_best_y, self.x, self.y)
        print('Social: ', s)
        v = self.calculate_updated_velocity(i, c, s)
        self.velocity = v
        print('Velocity: ', v)
        p = self.calculate_position(self.x, self.y, v)
        self.x = p[0]
        self.y = p[1]
        print('Position: ', p)

    def to_string(self):
        print('Inertia: ', self.inertia)
        print('Velocity: ', self.velocity)
        print('Position: ', self.x, ',', self.y)


# Particle Swarm Optimization (PSO).
# The general lifecycle of a particle swarm optimization algorithm is as follows:
# - Initialize the population of particles: This involves determining the number of particles to be used and
#   initializing each particle to a random position in the search space.
# - Calculate the fitness of each particle: Given the position of each particle, determine the fitness of that particle
#   at that position.
# - Update the position of each particle: This involves repeatedly updating the position of all the particles using
#   principles of swarm intelligence. Particles will explore, then converge to good solutions.
# - Determine stopping criteria: This involves determining when the particles stop updating and the algorithm stops.
class Swarm:

    # Initialize a swarm of particles randomly
    def __init__(self,
                 inertia,
                 cognitive_constant,
                 social_constant,
                 random_swarm,
                 number_of_particles,
                 number_of_iterations):
        self.inertia = inertia
        self.cognitive_constant = cognitive_constant
        self.social_constant = social_constant
        self.number_of_iterations = number_of_iterations
        self.swarm = []
        if random_swarm:
            self.swarm = self.get_random_swarm(number_of_particles)
        else:
            self.swarm = self.get_sample_swarm()

    # Return a static swarm of particles
    @staticmethod
    def get_sample_swarm():
        p1 = Particle(7, 1, INERTIA, COGNITIVE_CONSTANT, SOCIAL_CONSTANT)
        p2 = Particle(-1, 9, INERTIA, COGNITIVE_CONSTANT, SOCIAL_CONSTANT)
        p3 = Particle(5, -1, INERTIA, COGNITIVE_CONSTANT, SOCIAL_CONSTANT)
        p4 = Particle(-2, -5, INERTIA, COGNITIVE_CONSTANT, SOCIAL_CONSTANT)
        particles = [p1, p2, p3, p4]
        return particles

    # Return a randomized swarm of particles
    @staticmethod
    def get_random_swarm(number_of_particles):
        particles = []
        for p in range(number_of_particles):
            particles.append(Particle(random.randint(-10, 10),
                                      random.randint(-10, 10),
                                      INERTIA, COGNITIVE_CONSTANT, SOCIAL_CONSTANT))
        return particles

    # Get the best particle in the swarm based on its fitness
    def get_best_in_swarm(self):
        best = math.inf
        best_particle = None
        for p in self.swarm:
            p.update_fitness()
            if p.fitness < best:
                best = p.fitness
                best_particle = p
        return best_particle

    # Run the PSO lifecycle for every particle in the swarm
    def run_pso(self):
        for t in range(0, self.number_of_iterations):
            best_particle = self.get_best_in_swarm()
            for p in self.swarm:
                p.update(best_particle.x, best_particle.y)
            print('Best particle fitness: ', best_particle.fitness)


# Set the hyperparameters for the PSO
INERTIA = 0.4
COGNITIVE_CONSTANT = 0.3
SOCIAL_CONSTANT = 0.7
RANDOM_CHANCE = True
NUMBER_OF_PARTICLES = 200
NUMBER_OF_ITERATIONS = 500

# Initialize and execute the PSO algorithm
swarm = Swarm(INERTIA, COGNITIVE_CONSTANT, SOCIAL_CONSTANT, RANDOM_CHANCE, NUMBER_OF_PARTICLES, NUMBER_OF_ITERATIONS)
swarm.run_pso()
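One detail of this listing is worth noting: the velocity is a single scalar built from Euclidean distances, so the cognitive and social terms are never negative and the same amount is added to both x and y. A common alternative keeps one velocity value per dimension, so that a particle can move in any direction. The sketch below shows that variant under stated assumptions; it is not the book's code, and `update_particle` with its parameters is a hypothetical helper.

```python
import random


def update_particle(position, velocity, personal_best, swarm_best,
                    inertia=0.4, cognitive_constant=0.3, social_constant=0.7,
                    rng=random):
    """Return (new_position, new_velocity) with one velocity per dimension."""
    new_position, new_velocity = [], []
    for x, v, p, g in zip(position, velocity, personal_best, swarm_best):
        # Signed differences keep the direction toward each best position.
        cognitive = cognitive_constant * rng.random() * (p - x)
        social = social_constant * rng.random() * (g - x)
        updated = inertia * v + cognitive + social
        new_velocity.append(updated)
        new_position.append(x + updated)
    return new_position, new_velocity


# A particle at (7, 1) pulled toward a swarm best at (1, 3),
# which is where the Booth function reaches its minimum.
print(update_particle([7.0, 1.0], [0.0, 0.0], [7.0, 1.0], [1.0, 3.0]))
```

With signed differences, x decreases while y increases in this call, which the scalar version cannot do.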

Example 2: PSO-SVM

This interesting example, forked from a repository on github.com, applies particle swarm optimization to find the best parameter combination for an SVM classification task. Here, the parameter combination consists of gamma and C. The fitness function returns the number of misclassified samples on the training set and on the test set; the test error drives the updates, and the training error only breaks ties when choosing the global best.
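To make the fitness concrete: in the main listing further down, each particle is scored by a pair of misclassification counts (training, test), and the global best is chosen by test error first, with training error as a tie-breaker. The sketch below mirrors that logic with plain Python lists and made-up labels instead of a trained SVM; `misclassified` and `is_better` are hypothetical helper names.

```python
def misclassified(y_true, y_pred):
    # Number of samples whose predicted label differs from the true label.
    # For two classes this equals the sum of the off-diagonal entries
    # of the confusion matrix.
    return sum(1 for t, p in zip(y_true, y_pred) if t != p)


def is_better(candidate, incumbent):
    # Each argument is (train_errors, test_errors).
    # Lower test error wins; training error only breaks ties.
    return (candidate[1], candidate[0]) < (incumbent[1], incumbent[0])


y_true = [1, 2, 2, 1]
y_pred = [1, 1, 2, 2]
print(misclassified(y_true, y_pred))  # two predictions differ from the labels
print(is_better((5, 3), (0, 4)))      # fewer test errors, despite more training errors
```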

👏👏👏👏 Thanks to the author for sharing the code, which helped me learn how to put particle swarm optimization into practice.

To understand the code better, I added some English notes to the original.

# -*- coding: utf-8 -*-
# @Time : 2020/6/2
# @Author : JWDUAN
# @Email : 494056012@qq.com
# @File : utils.py
# @Software: PyCharm
from sklearn.model_selection import train_test_split
import matplotlib.pyplot as plt
import csv
import pandas as pd
import numpy as np


def plot(position):
    # Scatter-plot the particles according to their 2-dim positions
    x = []  # gamma, the first param of SVM
    y = []  # C, the second param of SVM
    for i in range(0, len(position)):
        x.append(position[i][0])
        y.append(position[i][1])
    plt.scatter(x, y, color='black', alpha=0.1)
    plt.xlabel('gamma')
    plt.ylabel('C')
    plt.axis([0, 10, 0, 10])
    # Use the same scale on both axes so the shape of the swarm is not distorted
    plt.gca().set_aspect('equal', adjustable='box')
    return plt.show()


def data_handle_v2(data_path):
    # way 1: read space-separated data with pandas and standardize the features
    colnames = ['x1', 'x2', 'x3', 'x4', 'x5', 'x6', 'x7', 'x8', 'x9', 'x10', 'x11', 'x12', 'x13', 'y']
    data = pd.read_csv(data_path, sep=' ', header=None, names=colnames)
    X = data.drop('y', axis=1)
    X = (X - X.mean()) / X.std()
    y = data['y']
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.20, random_state=42)
    return X_train, X_test, y_train, y_test


def data_handle_v1(csv_data_path):
    # way 2: read data from a CSV file with the csv module
    def change_float(row):
        out = [float(i) for i in row]
        return out

    # Read the rows and split them into features and labels
    with open(csv_data_path, 'r') as file:
        reader = csv.reader(file)
        datas = [row for row in reader]
    datas = datas[1:]  # skip the header row
    datas = [change_float(row) for row in datas]
    data = [row[0:-2] for row in datas]
    labels = [row[-2] for row in datas]
    x = np.array(data)
    y = np.array(labels)
    # TODO: normalize the data first
    X_train, X_test, y_train, y_test = train_test_split(x, y, test_size=0.3, random_state=420)
    return X_train, X_test, y_train, y_test

Next comes a configuration file that sets the hyperparameters, such as the number of iterations, the number of particles, and the learning factors of the cognitive and social components.

# -*- coding: utf-8 -*-
# @Time : 2020/6/2
# @Author : JWDUAN
# @Email : 494056012@qq.com
# @File : config.py
# @Software: PyCharm

# Data source
data_src = '1'
if data_src == '1':
    data_path = 'data/heart.dat'
elif data_src == '2':
    data_path = 'data/Statlog_heart_Data.csv'


# PSO parameter configuration
class args:
    W = 0.5  # inertia weight
    c1 = 0.2  # local learning factor (cognitive component)
    c2 = 0.5  # global learning factor (social component)
    n_iterations = 10  # number of iterations
    n_particles = 100  # number of particles


# SVM configuration
kernel = 'rbf'  # ["linear","poly","rbf","sigmoid"]

Finally, the following file is the main body of the PSO algorithm.

# -*- coding: utf-8 -*-
# @Time : 2020/6/2
# @Author : JWDUAN
# @Email : 494056012@qq.com
# @File : pso_svm.py
# @Software: PyCharm
import numpy as np
import random
from sklearn.svm import SVC
from sklearn.metrics import confusion_matrix
from utils import plot
from utils import data_handle_v1, data_handle_v2
from config.config import args, kernel, data_src, data_path


def fitness_function(position, data):
    X_train, X_test, y_train, y_test = data
    svclassifier = SVC(kernel=kernel, gamma=position[0], C=position[1])
    svclassifier.fit(X_train, y_train)
    y_train_pred = svclassifier.predict(X_train)
    y_test_pred = svclassifier.predict(X_test)
    # Misclassification counts: the off-diagonal entries of each confusion matrix
    train_cm = confusion_matrix(y_train, y_train_pred)
    test_cm = confusion_matrix(y_test, y_test_pred)
    return train_cm[0][1] + train_cm[1][0], test_cm[0][1] + test_cm[1][0]


def pso_svm(data):
    # Initialize parameters; every particle has two parameters to optimize (gamma, C)
    particle_position_vector = np.array([np.array([random.random() * 10, random.random() * 10])
                                         for _ in range(args.n_particles)])
    # Best position each particle has visited; a copy, so later moves do not overwrite it
    pbest_position = particle_position_vector.copy()
    pbest_fitness_value = np.array([float('inf') for _ in range(args.n_particles)])
    gbest_fitness_value = np.array([float('inf'), float('inf')])  # train_err, test_err
    gbest_position = np.array([float('inf'), float('inf')])
    velocity_vector = np.zeros((args.n_particles, 2))
    iteration = 0
    while iteration < args.n_iterations:
        plot(particle_position_vector)
        for i in range(args.n_particles):
            fitness_candidate = fitness_function(particle_position_vector[i], data)  # train_err, test_err
            print("error of particle-", i, "is (training, test)", fitness_candidate, " At (gamma, c): ",
                  particle_position_vector[i])
            if pbest_fitness_value[i] > fitness_candidate[1]:
                # found a solution better than the best one in this individual's history
                pbest_fitness_value[i] = fitness_candidate[1]  # pbest tracks the test error only
                pbest_position[i] = particle_position_vector[i]
            if gbest_fitness_value[1] > fitness_candidate[1]:  # index 0 is train_err, index 1 is test_err
                gbest_fitness_value = fitness_candidate  # update both values
                gbest_position = particle_position_vector[i].copy()
            elif (gbest_fitness_value[1] == fitness_candidate[1]
                  and gbest_fitness_value[0] > fitness_candidate[0]):
                # tie-break for the global best: if test_err is unchanged, prefer the lower train_err
                gbest_fitness_value = fitness_candidate
                gbest_position = particle_position_vector[i].copy()
        for i in range(args.n_particles):
            new_velocity = ((args.W * velocity_vector[i])
                            + (args.c1 * random.random()) * (pbest_position[i] - particle_position_vector[i])
                            + (args.c2 * random.random()) * (gbest_position - particle_position_vector[i]))
            # Keep gamma and C positive and inside the plotted range
            new_position = np.clip(new_velocity + particle_position_vector[i], 1e-3, 10.0)
            velocity_vector[i] = new_velocity  # remember the velocity for the next inertia term
            particle_position_vector[i] = new_position
        iteration = iteration + 1


def main():
    if data_src == '1':
        X_train, X_test, y_train, y_test = data_handle_v2(data_path)
    else:
        X_train, X_test, y_train, y_test = data_handle_v1(data_path)
    data = [X_train, X_test, y_train, y_test]
    pso_svm(data)


if __name__ == '__main__':
    main()
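A point that is easy to miss in a loop like this: pbest and gbest have to be snapshots of positions, not references to the live position array. Plain assignment of a NumPy array binds the same object, and indexing a row returns a view that shares memory, so a reference would follow the particle as it moves and the pull toward the "best" position would collapse to zero. The sketch below shows the same effect with nested Python lists so that it runs without NumPy; the variable names are illustrative only.

```python
import copy

positions = [[1.0, 2.0], [3.0, 4.0]]

aliased_pbest = positions                  # same object: follows every move
snapshot_pbest = copy.deepcopy(positions)  # independent record of the past

aliased_gbest = positions[0]               # reference to the live inner list
snapshot_gbest = list(positions[0])        # independent copy

# Move particle 0 in place, the way writing into an array row does.
positions[0][0] += 5.0

print(aliased_pbest[0], snapshot_pbest[0])
print(aliased_gbest, snapshot_gbest)
```

Only the snapshots still hold the old position (1.0, 2.0); both aliases now report the moved particle.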

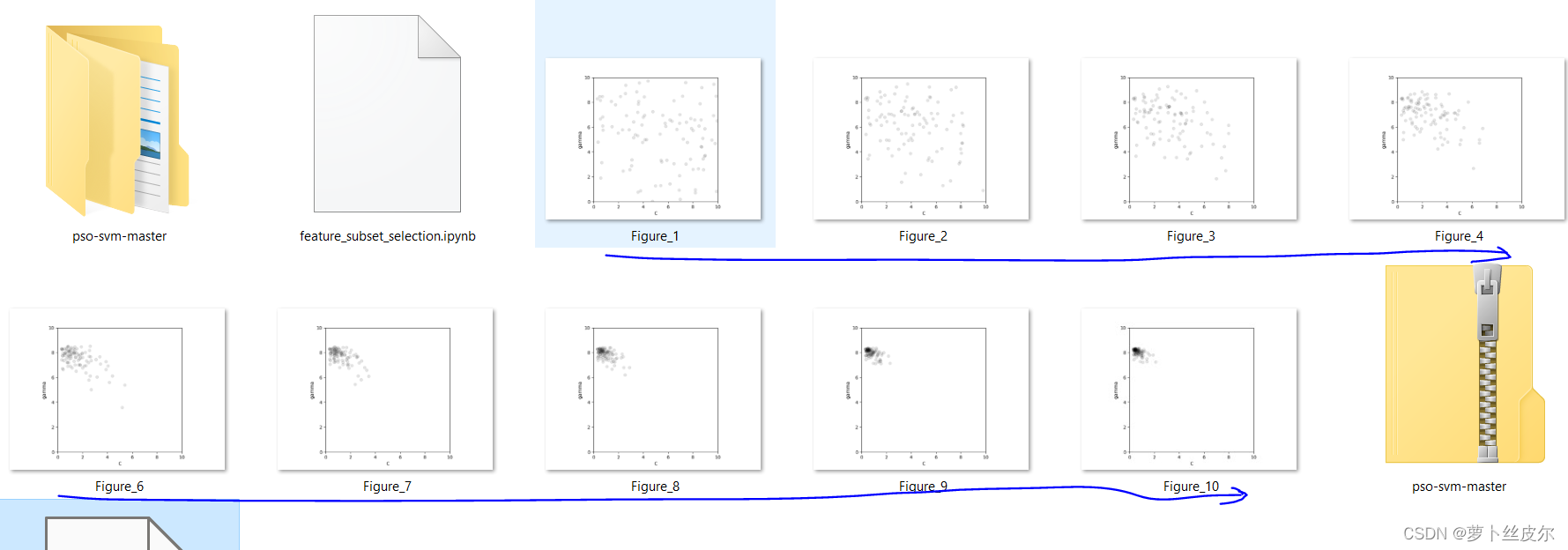

Result of Example 2

As the code shows, each iteration draws a scatter plot of the particles' positions. A series of these plots is displayed as follows:

From Figure_1 to Figure_10, we can see that the swarm tends to converge on a single position, which is, to some degree, the best solution. Since PSO never guarantees a global best solution, we cannot claim that the best solution found in the picture above is the global optimum.
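The visual impression of convergence can also be tracked with a number, for example the mean distance of the particles from the swarm's centroid, which shrinks as the swarm contracts. This is a small sketch with a hypothetical helper name and made-up coordinates, not part of the original code.

```python
import math


def swarm_spread(positions):
    # Mean Euclidean distance of the particles from their centroid.
    n = len(positions)
    dims = len(positions[0])
    centroid = [sum(p[d] for p in positions) / n for d in range(dims)]
    return sum(math.dist(p, centroid) for p in positions) / n


scattered = [(0.0, 0.0), (2.0, 0.0), (0.0, 2.0), (2.0, 2.0)]
clustered = [(1.0, 1.0), (1.0, 1.0), (1.1, 1.0), (1.0, 0.9)]
print(swarm_spread(scattered) > swarm_spread(clustered))
```

Calling it on the position array once per iteration would give a curve to read alongside the scatter plots.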
