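1. Linear regression:

Reference: https://blog.csdn.net/dong_lxkm/article/details/80551795

The parameters we want are trained by gradient descent:

First give each parameter an initial guess, for example 1.

Then use the current parameters to compute a result and compare it with the known result. The sign and size of the error decide whether a small amount is added to or subtracted from each parameter.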
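The idea above can be sketched with a single parameter before looking at the full example. This is a minimal illustration, not code from the referenced post: the class `SingleParameterDescent`, its method names, the tiny data set generated from y = 3 * x, and the learning rate 0.1 are all assumptions made for the sketch.

```java
// Minimal sketch: fit one weight w so that w * x matches data generated from y = 3 * x.
// All names and numbers here are illustrative assumptions.
class SingleParameterDescent {

    // One pass over the data: move w against the gradient of the squared error.
    static double step(double w, double[] xs, double[] ys, double learningRate) {
        for (int i = 0; i < xs.length; i++) {
            double error = w * xs[i] - ys[i];          // computed result minus known result
            w = w - 2 * learningRate * error * xs[i];  // d(error^2)/dw = 2 * error * x
        }
        return w;
    }

    // Start from the initial guess 1.0 and repeat the pass a fixed number of times.
    static double fit(double[] xs, double[] ys, double learningRate, int epochs) {
        double w = 1.0;
        for (int e = 0; e < epochs; e++) {
            w = step(w, xs, ys, learningRate);
        }
        return w;
    }

    public static void main(String[] args) {
        double[] xs = { 0.1, 0.4, 0.7, 1.0 };
        double[] ys = { 0.3, 1.2, 2.1, 3.0 }; // generated from y = 3 * x
        System.out.println(fit(xs, ys, 0.1, 200));
    }
}
```

When the computed result is too large the error is positive and w is reduced; when it is too small w is increased, which is the "add or subtract" decision described in the text.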

Example code:

package com.example.dtest.regressionArithmetic;

import java.util.Random;

/**

* Reference: https://blog.csdn.net/dong_lxkm/article/details/80551795

* */

public class LinearRegression {

public static void main(String[] args) {

// Simulated target expression: y = 3*x1 + 4*x2 + 5*x3 + 10

Random random = new Random();

double[] results = new double[100];

double[][] features = new double[100][3];

for (int i = 0; i < 100; i++) {

for (int j = 0; j < features[i].length; j++) {

// Simulated data:

features[i][j] = random.nextDouble();

}

results[i] = 3 * features[i][0] + 4 * features[i][1] + 5 * features[i][2] + 10;

}

double[] parameters = new double[] { 1.0, 1.0, 1.0, 1.0 };

double learningRate = 0.01;

for (int i = 0; i < 30; i++) {

SGD(features, results, learningRate, parameters);

}

parameters = new double[] { 1.0, 1.0, 1.0, 1.0 };

System.out.println("==========================");

for (int i = 0; i < 3000; i++) {

BGD(features, results, learningRate, parameters);

}

}

private static void SGD(double[][] features, double[] results, double learningRate, double[] parameters) {

for (int j = 0; j < results.length; j++) {

double gradient = (parameters[0] * features[j][0] + parameters[1] * features[j][1]

+ parameters[2] * features[j][2] + parameters[3] - results[j]) * features[j][0];

parameters[0] = parameters[0] - 2 * learningRate * gradient;

gradient = (parameters[0] * features[j][0] + parameters[1] * features[j][1] + parameters[2] * features[j][2]

+ parameters[3] - results[j]) * features[j][1];

parameters[1] = parameters[1] - 2 * learningRate * gradient;

gradient = (parameters[0] * features[j][0] + parameters[1] * features[j][1] + parameters[2] * features[j][2]

+ parameters[3] - results[j]) * features[j][2];

parameters[2] = parameters[2] - 2 * learningRate * gradient;

gradient = (parameters[0] * features[j][0] + parameters[1] * features[j][1] + parameters[2] * features[j][2]

+ parameters[3] - results[j]);

parameters[3] = parameters[3] - 2 * learningRate * gradient;

}

double totalLoss = 0;

for (int j = 0; j < results.length; j++) {

totalLoss = totalLoss + Math.pow((parameters[0] * features[j][0] + parameters[1] * features[j][1]

+ parameters[2] * features[j][2] + parameters[3] - results[j]), 2);

}

System.out.println(parameters[0] + " " + parameters[1] + " " + parameters[2] + " " + parameters[3]);

System.out.println("totalLoss:" + totalLoss);

}

private static void BGD(double[][] features, double[] results, double learningRate, double[] parameters) {

double sum = 0;

for (int j = 0; j < results.length; j++) {

sum = sum + (parameters[0] * features[j][0] + parameters[1] * features[j][1]

+ parameters[2] * features[j][2] + parameters[3] - results[j]) * features[j][0];

}

double updateValue = 2 * learningRate * sum / results.length;

parameters[0] = parameters[0] - updateValue;

sum = 0;

for (int j = 0; j < results.length; j++) {

sum = sum + (parameters[0] * features[j][0] + parameters[1] * features[j][1]

+ parameters[2] * features[j][2] + parameters[3] - results[j]) * features[j][1];

}

updateValue = 2 * learningRate * sum / results.length;

parameters[1] = parameters[1] - updateValue;

sum = 0;

for (int j = 0; j < results.length; j++) {

sum = sum + (parameters[0] * features[j][0] + parameters[1] * features[j][1]

+ parameters[2] * features[j][2] + parameters[3] - results[j]) * features[j][2];

}

updateValue = 2 * learningRate * sum / results.length;

parameters[2] = parameters[2] - updateValue;

sum = 0;

for (int j = 0; j < results.length; j++) {

sum = sum + (parameters[0] * features[j][0] + parameters[1] * features[j][1]

+ parameters[2] * features[j][2] + parameters[3] - results[j]);

}

updateValue = 2 * learningRate * sum / results.length;

parameters[3] = parameters[3] - updateValue;

double totalLoss = 0;

for (int j = 0; j < results.length; j++) {

totalLoss = totalLoss + Math.pow((parameters[0] * features[j][0] + parameters[1] * features[j][1]

+ parameters[2] * features[j][2] + parameters[3] - results[j]), 2);

}

System.out.println(parameters[0] + " " + parameters[1] + " " + parameters[2] + " " + parameters[3]);

System.out.println("totalLoss:" + totalLoss);

}

}

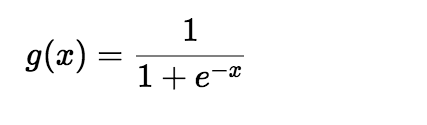
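The `BGD` method above updates one parameter at a time, so each later gradient already sees the earlier updates. A common alternative is to compute all gradients from the same parameter values and then update them together. The sketch below shows that variant under stated assumptions: the class `BatchGradientDescentSketch`, its method names, the fixed random seed, the learning rate 0.1, and the iteration count are choices made for this illustration and differ from the values used in the post.

```java
import java.util.Random;

// Sketch only: names and hyperparameters are illustrative, not from the original post.
class BatchGradientDescentSketch {

    // params[0..n-1] are the feature weights, params[n] is the intercept.
    static double predict(double[] params, double[] x) {
        double y = params[x.length];
        for (int k = 0; k < x.length; k++) {
            y += params[k] * x[k];
        }
        return y;
    }

    // Sum of squared errors over the whole data set.
    static double totalLoss(double[][] features, double[] results, double[] params) {
        double loss = 0;
        for (int j = 0; j < results.length; j++) {
            double error = predict(params, features[j]) - results[j];
            loss += error * error;
        }
        return loss;
    }

    // One batch step: every gradient is computed from the same parameter values,
    // and only afterwards are the parameters updated.
    static void step(double[][] features, double[] results, double learningRate, double[] params) {
        double[] gradient = new double[params.length];
        for (int j = 0; j < results.length; j++) {
            double error = predict(params, features[j]) - results[j];
            for (int k = 0; k < features[j].length; k++) {
                gradient[k] += error * features[j][k];
            }
            gradient[params.length - 1] += error; // intercept term
        }
        for (int k = 0; k < params.length; k++) {
            params[k] -= 2 * learningRate * gradient[k] / results.length;
        }
    }

    // Generates data from y = 3*x1 + 4*x2 + 5*x3 + 10 and fits it from the guess (1, 1, 1, 1).
    static double[] fit(long seed, double learningRate, int iterations) {
        Random random = new Random(seed);
        double[][] features = new double[100][3];
        double[] results = new double[100];
        for (int i = 0; i < 100; i++) {
            for (int j = 0; j < 3; j++) {
                features[i][j] = random.nextDouble();
            }
            results[i] = 3 * features[i][0] + 4 * features[i][1] + 5 * features[i][2] + 10;
        }
        double[] params = { 1.0, 1.0, 1.0, 1.0 };
        for (int i = 0; i < iterations; i++) {
            step(features, results, learningRate, params);
        }
        return params;
    }

    public static void main(String[] args) {
        // Seed, learning rate and iteration count are assumptions chosen for this sketch.
        double[] params = fit(42L, 0.1, 10000);
        System.out.println(params[0] + " " + params[1] + " " + params[2] + " " + params[3]);
    }
}
```

Looping over the parameter array also removes the four nearly identical blocks, so the same method works for any number of features.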

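2. Logistic regression: the idea is much the same as linear regression, plus one extra mapping step that squeezes the output into the interval from 0 to 1.

The mapping is the sigmoid function, $S(x) = \frac{1}{1 + e^{-x}}$, with the linear model's value y passed in as that function's x.

Reference: https://blog.csdn.net/ccblogger/article/details/81739200

The update in matrix form is:

$\theta := \theta - a\,x^{T} e$

where a is the learning rate, x is the known input, and e is the difference between the output value and the expected value, y - y0.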
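One step of this update can be sketched with plain arrays, without a matrix library. It reads the rule as theta := theta - a * x^T * e, with e the sigmoid output minus the expected value. The class `LogisticUpdateSketch` and its method names are hypothetical and only illustrate the arithmetic.

```java
// Sketch only: a single logistic-regression update step on plain arrays.
// Class and method names are illustrative assumptions.
class LogisticUpdateSketch {

    // Maps any real number into the open interval (0, 1).
    static double sigmoid(double z) {
        return 1.0 / (1.0 + Math.exp(-z));
    }

    // Returns theta - a * x^T * e, where e[i] = sigmoid(x[i] . theta) - y0[i].
    static double[] step(double[][] x, double[] y0, double[] theta, double a) {
        double[] next = theta.clone();
        for (int i = 0; i < x.length; i++) {
            double z = 0;
            for (int k = 0; k < theta.length; k++) {
                z += x[i][k] * theta[k];       // linear output for sample i
            }
            double e = sigmoid(z) - y0[i];     // output minus expected value
            for (int k = 0; k < theta.length; k++) {
                next[k] -= a * x[i][k] * e;    // accumulate sample i's share of x^T * e
            }
        }
        return next;
    }

    public static void main(String[] args) {
        double[][] x = { { 1.0, 2.0 } };
        double[] y0 = { 1.0 };
        double[] theta = step(x, y0, new double[] { 0.0, 0.0 }, 0.1);
        System.out.println(theta[0] + " " + theta[1]);
    }
}
```

With zero weights the sigmoid output is 0.5, so for a sample labeled 1 the error is -0.5 and every weight moves in the direction of its input value.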

The code is as follows:

<!-- Used for matrix operations -->

<dependency>

<groupId>org.ujmp</groupId>

<artifactId>ujmp-core</artifactId>

<version>0.3.0</version>

</dependency>

package com.example.dtest.regressionArithmetic.logisticregression;

import org.ujmp.core.DenseMatrix;

import org.ujmp.core.Matrix;

/**

* References: https://blog.csdn.net/weixin_45040801/article/details/102542209

* https://blog.csdn.net/ccblogger/article/details/81739200

* */

public class LogisticRegression {

public static double[] train(double[][] data, double[] classValues) {

if (data != null && classValues != null && data.length == classValues.length) {

// Weight matrix (one weight per feature)

// Matrix matrWeights = DenseMatrix.Factory.zeros(data[0].length + 1, 1);

Matrix matrWeights = DenseMatrix.Factory.zeros(data[0].length, 1);

System.out.println("data[0].length + 1========" + (data[0].length + 1));

// Data matrix

// Matrix matrData = DenseMatrix.Factory.zeros(data.length, data[0].length + 1);

Matrix matrData = DenseMatrix.Factory.zeros(data.length, data[0].length);

// Label matrix

Matrix matrLable = DenseMatrix.Factory.zeros(data.length, 1);

// Learning-rate matrix

// Matrix matrRate = DenseMatrix.Factory.zeros(data[0].length + 1,data.length);

// Helper matrix for summing up the total error in difference

Matrix matrDiffUtil = DenseMatrix.Factory.zeros(data[0].length,data.length);

for(int i=0;i<data.length;i++){

for(int j=0;j<data[0].length;j++) {

matrDiffUtil.setAsDouble(1, j, i);

}

}

System.out.println("matrDiffUtil="+matrDiffUtil);

/**

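* Its row count should match the row count of the weight matrix

* Its column count should match the row count of the label matrix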

* */

// System.out.println("matrRate======="+matrRate);

// Set up the learning-rate matrix

// for(int i=0;i<data[0].length + 1;i++){

// for(int j=0;j<data.length;j++){

// }

// }

for (int i = 0; i < data.length; i++) {

// matrData.setAsDouble(1.0, i, 0);

// Initialize the label matrix

matrLable.setAsDouble(classValues[i], i, 0);

for (int j = 0; j < data[0].length; j++) {

// Initialize the data matrix

// matrData.setAsDouble(data[i][j], i, j + 1);

matrData.setAsDouble(data[i][j], i, j);

if (i == 0) {

// Initialize the weight matrix

// matrWeights.setAsDouble(1.0, j+1, 0);

matrWeights.setAsDouble(1.0, j, 0);

}

}

}

// matrWeights.setAsDouble(-0.5, data[0].length, 0);

// matrRate = matrData.transpose().times(0.9);

// System.out.println("matrRate============"+matrRate);

double step = 0.011;

int maxCycle = 5000000;

// int maxCycle = 5;

System.out.println("matrData======"+matrData);

System.out.println("matrWeights"+matrWeights);

System.out.println("matrLable"+matrLable);

/**

* Uses the idea of gradient descent.

* For the matrix operations, see: https://blog.csdn.net/lionel_fengj/article/details/53400715

*

* */

for (int i = 0; i < maxCycle; i++) {

// Pass the linear output through the sigmoid function to get the predicted values

Matrix h = sigmoid(matrData.mtimes(matrWeights));

// System.out.println("h=="+h);

// Difference between the expected values (labels) and the predicted values

Matrix difference = matrLable.minus(h);

// System.out.println("difference "+difference);

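// Multiply the transpose of matrData by difference to get the accumulated error for each weight

// Formula: θ = θ + a * x^T * (y0 - y), with y0 the label and y the prediction; see: https://blog.csdn.net/ccblogger/article/details/81739200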

matrWeights = matrWeights.plus(matrData.transpose().mtimes(difference).times(step));

// matrWeights = matrWeights.plus(matrRate.mtimes(difference).times(step));

// matrWeights = matrWeights.plus(matrDiffUtil.mtimes(difference).times(step));

}

double[] rtn = new double[(int) matrWeights.getRowCount()];

for (long i = 0; i < matrWeights.getRowCount(); i++) {

rtn[(int) i] = matrWeights.getAsDouble(i, 0);

}

return rtn;

}

return null;

}

public static Matrix sigmoid(Matrix sourceMatrix) {

Matrix rtn = DenseMatrix.Factory.zeros(sourceMatrix.getRowCount(), sourceMatrix.getColumnCount());

for (int i = 0; i < sourceMatrix.getRowCount(); i++) {

for (int j = 0; j < sourceMatrix.getColumnCount(); j++) {

rtn.setAsDouble(sigmoid(sourceMatrix.getAsDouble(i, j)), i, j);

}

}

return rtn;

}

public static double sigmoid(double source) {

return 1.0 / (1 + Math.exp(-1 * source));

}

// Compute the predicted value for one sample:

public static double getValue(double[] sourceData, double[] model) {

// double logisticRegressionValue = model[0];

double logisticRegressionValue = 0;

for (int i = 0; i < sourceData.length; i++) {

// logisticRegressionValue = logisticRegressionValue + sourceData[i] * model[i + 1];

logisticRegressionValue = logisticRegressionValue + sourceData[i] * model[i];

}

logisticRegressionValue = sigmoid(logisticRegressionValue);

return logisticRegressionValue;

}

}

package com.example.dtest.regressionArithmetic.logisticregression;

public class LogisicRegressionTest {

public static void main(String[] args) {

double[][] sourceData = new double[][] { { -1, 1 }, { 0, 1 }, { 1, -1 }, { 1, 0 }, { 0, 0.1 }, { 0, -0.1 }, { -1, -1.1 }, { 1, 0.9 } };

double[] classValue = new double[] { 1, 1, 0, 0, 1, 0, 0, 0 };

double[] modle = LogisticRegression.train(sourceData, classValue);

// double logicValue = LogisticRegression.getValue(new double[] { 0, 0 }, modle);

double logicValue0 = LogisticRegression.getValue(new double[] { -1, 1 }, modle);

double logicValue1 = LogisticRegression.getValue(new double[] { 0, 1 }, modle);

double logicValue2 = LogisticRegression.getValue(new double[] { 1, -1}, modle);// 3.1812246935599485E-60, approaches 0

double logicValue3 = LogisticRegression.getValue(new double[] { 1, 0}, modle);// 3.091713602147872E-30, approaches 0

double logicValue4 = LogisticRegression.getValue(new double[] { 0, 0.1}, modle);// labeled 1 in the training data, so this should come out above 0.5

double logicValue5 = LogisticRegression.getValue(new double[] { 0, -0.1 }, modle);// labeled 0 in the training data, so this should come out below 0.5

System.out.println("---model---");

for (int i = 0; i < modle.length; i++) {

System.out.println(modle[i]);

}

System.out.println("-----------");

// System.out.println(logicValue);

System.out.println(logicValue0);

System.out.println(logicValue1);

System.out.println(logicValue2);

System.out.println(logicValue3);

System.out.println(logicValue4);

System.out.println(logicValue5);

// Draw a scatter plot

// double[][][] chartData = new double[3][][];

// double[][] c0 = new double[2][5];

// double[][] c1 = new double[2][3];

// c1[0][0] = sourceData[0][0];

// c1[1][0] = sourceData[0][1];

//

// c1[0][1] = sourceData[1][0];

// c1[1][1] = sourceData[1][1];

//

// c0[0][0] = sourceData[2][0];

// c0[1][0] = sourceData[2][1];

//

// c0[0][1] = sourceData[3][0];

// c0[1][1] = sourceData[3][1];

//

// c1[0][2] = sourceData[4][0];

// c1[1][2] = sourceData[4][1];

//

// c0[0][2] = sourceData[5][0];

// c0[1][2] = sourceData[5][1];

//

// c0[0][3] = sourceData[6][0];

// c0[1][3] = sourceData[6][1];

//

// c0[0][4] = sourceData[7][0];

// c0[1][4] = sourceData[7][1];

//

// String[] c = new String[] { "1", "0", "L" };

// double[][] c2 = new double[2][21];

// int ind = 0;

// for (double x = -1; x <= 1; x = x + 0.1) {

// c2[0][ind] = x;

// c2[1][ind] = (-modle[0] - modle[1] * x) / modle[2];

// ind++;

// }

//

// chartData[0] = c0;

// chartData[1] = c1;

// chartData[2] = c2;

// ScatterPlot.showScatterPlotChart("LogisticRegression", c, chartData);

}

}
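The test above prints probabilities. To turn them into class labels, a common convention is to cut at 0.5. The sketch below shows that step and a simple accuracy check on the same eight training points. The class `ThresholdSketch`, its method names, and the weights `{ -5, 5 }` are assumptions made for illustration. The weights are hand-picked, not the output of `LogisticRegression.train`.

```java
// Sketch only: converts predicted probabilities into 0/1 labels with a 0.5 cut-off.
// The class name, method names and the weights used in main are illustrative assumptions.
class ThresholdSketch {

    static double sigmoid(double z) {
        return 1.0 / (1.0 + Math.exp(-z));
    }

    // Same computation as getValue in the post: sigmoid of the weighted sum, no intercept.
    static double probability(double[] x, double[] model) {
        double z = 0;
        for (int i = 0; i < x.length; i++) {
            z += x[i] * model[i];
        }
        return sigmoid(z);
    }

    // Class 1 if the probability is at least 0.5, otherwise class 0.
    static int classify(double[] x, double[] model) {
        return probability(x, model) >= 0.5 ? 1 : 0;
    }

    // Fraction of samples whose predicted class equals the given label.
    static double accuracy(double[][] data, double[] labels, double[] model) {
        int correct = 0;
        for (int i = 0; i < data.length; i++) {
            if (classify(data[i], model) == (int) labels[i]) {
                correct++;
            }
        }
        return (double) correct / data.length;
    }

    public static void main(String[] args) {
        double[][] data = { { -1, 1 }, { 0, 1 }, { 1, -1 }, { 1, 0 },
                { 0, 0.1 }, { 0, -0.1 }, { -1, -1.1 }, { 1, 0.9 } };
        double[] labels = { 1, 1, 0, 0, 1, 0, 0, 0 };
        double[] model = { -5, 5 }; // hand-picked weights, not a trained result
        System.out.println(accuracy(data, labels, model));
    }
}
```

Checking accuracy on the training points is only a sanity check. It says nothing about how the model behaves on unseen data.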
