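Single-node Kafka setup (ZooKeeper + Kafka)

1. Kafka and ZooKeeper versions

2. Install ZooKeeper and Kafka

2.1 Extract ZooKeeper and Kafka

Install ZooKeeper first.

– Extract –

tar -zxvf <your-zookeeper-archive>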

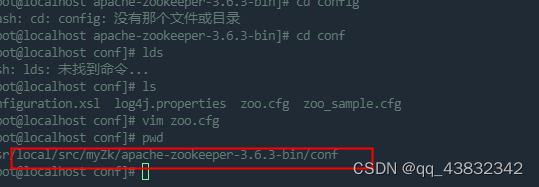

After extracting, go into the conf directory of the extracted folder.

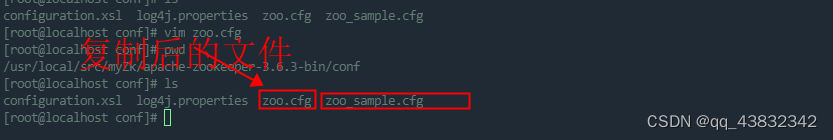

Copy zoo_sample.cfg in the conf directory to zoo.cfg (ZooKeeper will not start without a zoo.cfg):

cp zoo_sample.cfg zoo.cfg

Edit zoo.cfg:

vim zoo.cfg

Then press i to enter insert mode.

# The number of milliseconds of each tick

tickTime=2000

# The number of ticks that the initial

# synchronization phase can take

initLimit=10

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

syncLimit=5

# the directory where the snapshot is stored.

# do not use /tmp for storage, /tmp here is just

# example sakes.

dataDir=/tmp/zookeeper

# the port at which the clients will connect

clientPort=2181

# the maximum number of client connections.

# increase this if you need to handle more clients

#maxClientCnxns=60

#

# Be sure to read the maintenance section of the

# administrator guide before turning on autopurge.

#

# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance

#

# The number of snapshots to retain in dataDir

#autopurge.snapRetainCount=3

# Purge task interval in hours

# Set to "0" to disable auto purge feature

#autopurge.purgeInterval=1

## Metrics Providers

#

# https://prometheus.io Metrics Exporter

#metricsProvider.className=org.apache.zookeeper.metrics.prometheus.PrometheusMetricsProvider

#metricsProvider.httpPort=7000

#metricsProvider.exportJvmInfo=true

To exit, press Esc to leave insert mode, then type :wq to save and quit.
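The sample file itself warns against keeping dataDir under /tmp, yet the listing above still uses /tmp/zookeeper. That is workable for a throwaway test machine, but anything you want to survive a cleanup of /tmp should point at a persistent directory. Below is a minimal sketch of a sanity check, assuming you pass the path to zoo.cfg as the first argument. The class name ZkConfigCheck and its helper are hypothetical and are not part of ZooKeeper.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.Properties;

// Hypothetical helper (not part of ZooKeeper): flags a dataDir that lives under /tmp.
class ZkConfigCheck {

    // zoo.cfg consists of key=value lines with # comments, which java.util.Properties can parse.
    static boolean usesTmpDataDir(String cfgText) {
        Properties cfg = new Properties();
        try {
            cfg.load(new StringReader(cfgText));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        String dataDir = cfg.getProperty("dataDir", "").trim();
        return dataDir.equals("/tmp") || dataDir.startsWith("/tmp/");
    }

    public static void main(String[] args) throws IOException {
        if (args.length == 0) {
            System.out.println("usage: java ZkConfigCheck.java <path-to-zoo.cfg>");
            return;
        }
        String text = new String(Files.readAllBytes(Paths.get(args[0])));
        System.out.println(usesTmpDataDir(text)
                ? "dataDir is under /tmp; consider a persistent directory"
                : "dataDir is outside /tmp");
    }
}
```

Run it from the conf directory with `java ZkConfigCheck.java zoo.cfg` (Java 11 or later can launch a single source file directly).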

安装kafka

– 解压 –

tar -zxvf kafka文件名

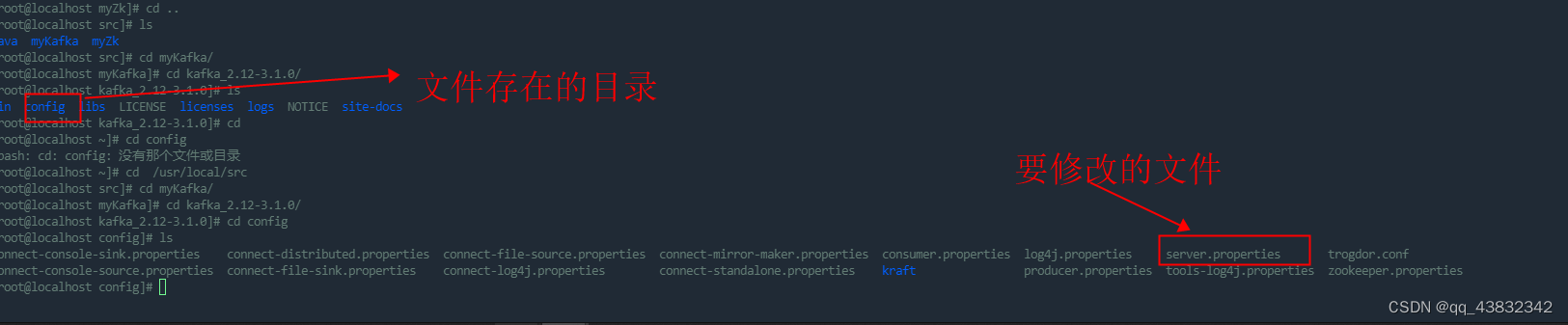

解压后

进入kafka 文件中修改配置文件

vim server.properties

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# see kafka.server.KafkaConfig for additional details and defaults

############################# Server Basics #############################

# The id of the broker. This must be set to a unique integer for each broker.

broker.id=0

############################# Socket Server Settings #############################

# The address the socket server listens on. It will get the value returned from

# java.net.InetAddress.getCanonicalHostName() if not configured.

# FORMAT:

# listeners = listener_name://host_name:port

# EXAMPLE:

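# Note: host.name was removed in Kafka 3.0, so a 3.x broker does not use it; the listeners setting below is what takes effect.

host.name=192.168.184.128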

# listeners = PLAINTEXT://your.host.name:9092

listeners=PLAINTEXT://192.168.184.128:9092

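# ZooKeeper and Kafka run on the same server, so connect to ZooKeeper locally

zookeeper.connect=127.0.0.1:2181

# Use your server's IP here so that external clients can reach the broker. If clients still cannot connect, check the firewall and open ports 9092 and 2181; restarting afterwards may also help.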

advertised.listeners=PLAINTEXT://192.168.184.128:9092

# Hostname and port the broker will advertise to producers and consumers. If not set,

# it uses the value for "listeners" if configured. Otherwise, it will use the value

# returned from java.net.InetAddress.getCanonicalHostName().

#advertised.listeners=PLAINTEXT://your.host.name:9092

# Maps listener names to security protocols, the default is for them to be the same. See the config documentation for more details

#listener.security.protocol.map=PLAINTEXT:PLAINTEXT,SSL:SSL,SASL_PLAINTEXT:SASL_PLAINTEXT,SASL_SSL:SASL_SSL

# The number of threads that the server uses for receiving requests from the network and sending responses to the network

num.network.threads=1

# The number of threads that the server uses for processing requests, which may include disk I/O

num.io.threads=8

# The send buffer (SO_SNDBUF) used by the socket server

socket.send.buffer.bytes=102400

# The receive buffer (SO_RCVBUF) used by the socket server

socket.receive.buffer.bytes=102400

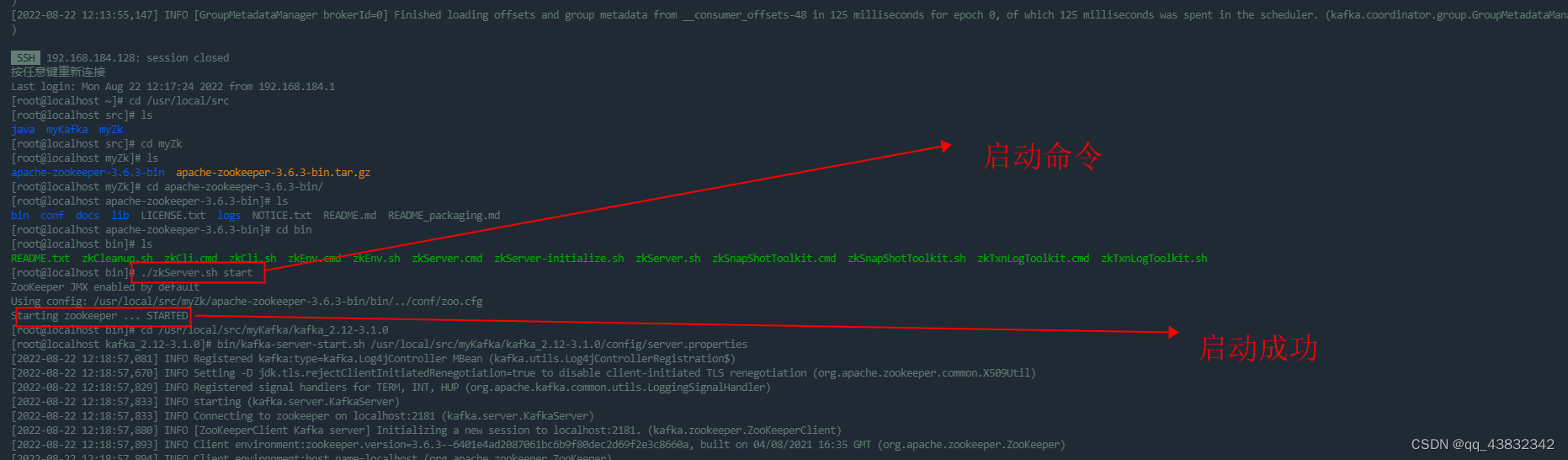

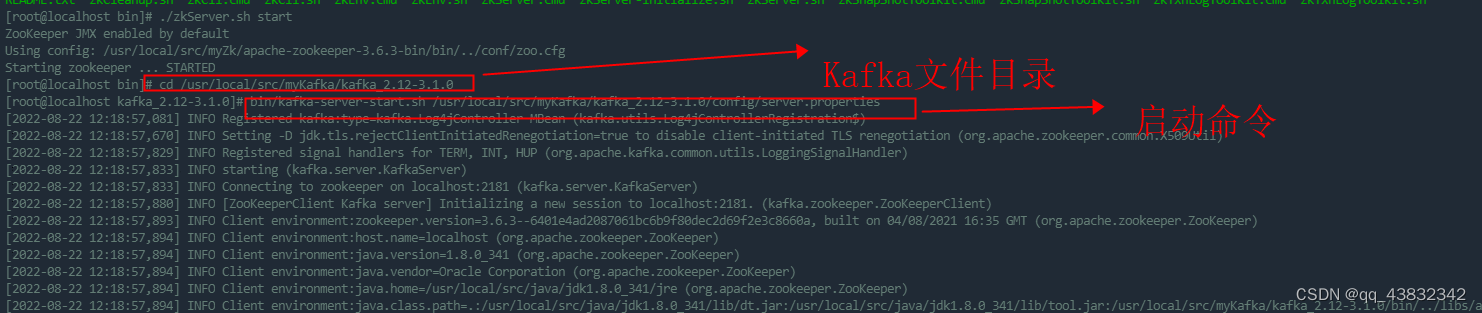

2.2 Start ZooKeeper and Kafka

Go to the directory where you installed ZooKeeper and enter its bin folder.

Start command:

./zkServer.sh start
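Before moving on to Kafka, it helps to confirm that ZooKeeper actually answers on clientPort 2181. One way is ZooKeeper's four-letter command srvr, which is on the default command whitelist in recent ZooKeeper releases: the client sends the four ASCII characters, the server writes a short status report and closes the connection. The sketch below assumes ZooKeeper is listening on 127.0.0.1:2181 as configured above. The class ZkSrvrCheck is a hypothetical helper written for this article.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.nio.charset.StandardCharsets;

// Hypothetical helper: sends a ZooKeeper four-letter command and returns the raw reply.
class ZkSrvrCheck {

    static String fourLetterWord(String host, int port, String cmd) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), 3000);
            socket.setSoTimeout(3000); // do not block forever if the server stays silent
            socket.getOutputStream().write(cmd.getBytes(StandardCharsets.US_ASCII));
            socket.getOutputStream().flush();

            // The server replies and then closes the connection, so read until end of stream.
            InputStream in = socket.getInputStream();
            ByteArrayOutputStream reply = new ByteArrayOutputStream();
            byte[] buf = new byte[1024];
            int n;
            while ((n = in.read(buf)) != -1) {
                reply.write(buf, 0, n);
            }
            return new String(reply.toByteArray(), StandardCharsets.UTF_8);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        String host = args.length > 0 ? args[0] : "127.0.0.1";
        int port = args.length > 1 ? Integer.parseInt(args[1]) : 2181;
        try {
            System.out.println(fourLetterWord(host, port, "srvr"));
        } catch (UncheckedIOException e) {
            System.out.println("ZooKeeper did not answer on " + host + ":" + port + ": " + e.getMessage());
        }
    }
}
```

On a single-node install the reply should include a Mode line reporting standalone. An empty reply or a connection error suggests ZooKeeper is not running or the command is not whitelisted.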

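Start Kafka

Go to the directory where you put Kafka.

Start command:

Note: run this from the Kafka root directory, not from bin, and adjust the path to match your own installation.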

bin/kafka-server-start.sh /usr/local/src/myKafka/kafka_2.12-3.1.0/config/server.properties
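Once both processes are up, the firewall advice in the server.properties comments can be checked from the client machine: if a plain TCP connection to 9092 or 2181 fails, no Kafka client will get through either. The following is a minimal sketch, assuming the server IP 192.168.184.128 used in this article. PortCheck is a hypothetical helper, and an open port only shows that something is listening, not that the broker is healthy.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Hypothetical helper: tests whether a TCP port accepts connections.
class PortCheck {

    static boolean isReachable(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            // Connection refused, timed out, or the host name could not be resolved.
            return false;
        }
    }

    public static void main(String[] args) {
        // Assumption: the server IP from this article; pass your own as the first argument.
        String host = args.length > 0 ? args[0] : "192.168.184.128";
        for (int port : new int[] {2181, 9092}) {
            String state = isReachable(host, port, 3000) ? "reachable" : "NOT reachable";
            System.out.println(host + ":" + port + " " + state);
        }
    }
}
```

If 9092 is reachable locally on the server but not from another machine, the firewall or the listeners/advertised.listeners address is the usual place to look.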

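3. Common Kafka commands

Start the Kafka broker (from the Kafka root directory):

bin/kafka-server-start.sh /usr/local/src/myKafka/kafka_2.12-3.1.0/config/server.properties

Find the process listening on port 9092:

lsof -i :9092

Force-kill a process by its process id:

kill -9 <pid>

List running Kafka processes:

ps -ef | grep kafka

Create the topic xhb with 3 partitions and a replication factor of 1:

bin/kafka-topics.sh --create --topic xhb --bootstrap-server 127.0.0.1:9092 --partitions 3 --replication-factor 1

List all topics (the --zookeeper option was removed from kafka-topics.sh in Kafka 3.0, so use --bootstrap-server):

bin/kafka-topics.sh --list --bootstrap-server 127.0.0.1:9092

Consume messages from the topic xhb:

bin/kafka-console-consumer.sh --bootstrap-server 192.168.184.128:9092 --topic xhb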

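Java producer code

import java.util.Properties;

import org.apache.kafka.clients.producer.KafkaProducer;
import org.apache.kafka.clients.producer.ProducerConfig;
import org.apache.kafka.clients.producer.ProducerRecord;

public class KafkaMain {

    public static void main(String[] args) {
        // 1. Create the Kafka producer configuration object
        Properties properties = new Properties();

        // 2. Add the broker address: bootstrap.servers
        properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, "192.168.184.128:9092");

        // Key and value serializers (required): key.serializer, value.serializer
        properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG,
                "org.apache.kafka.common.serialization.StringSerializer");
        properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG,
                "org.apache.kafka.common.serialization.StringSerializer");

        // 3. Create the Kafka producer
        KafkaProducer<String, String> kafkaProducer = new KafkaProducer<>(properties);

        // 4. Call send to publish five messages to the topic "xhb"
        for (int i = 0; i < 5; i++) {
            kafkaProducer.send(new ProducerRecord<>("xhb", "hhhh" + i));
        }

        // 5. Release resources; close() waits for records that were already sent to complete
        kafkaProducer.close();
    }
}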
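To see the producer's messages from Java rather than from kafka-console-consumer.sh, a matching consumer can be sketched as follows. This is a minimal example, not a production setup. It assumes the same kafka-clients dependency as the producer, the broker address 192.168.184.128:9092, and the topic xhb from this article. The group id xhb-demo-group and the class name KafkaConsumerSketch are made up for illustration.

```java
import java.time.Duration;
import java.util.Collections;
import java.util.Properties;

import org.apache.kafka.clients.consumer.ConsumerConfig;
import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.apache.kafka.clients.consumer.ConsumerRecords;
import org.apache.kafka.clients.consumer.KafkaConsumer;

// Hypothetical counterpart to the producer above: reads String messages from topic "xhb".
class KafkaConsumerSketch {

    static Properties consumerProps(String bootstrapServers, String groupId) {
        Properties props = new Properties();
        props.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, bootstrapServers);
        props.put(ConsumerConfig.GROUP_ID_CONFIG, groupId);
        // Deserializers must mirror the serializers used by the producer.
        props.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG,
                "org.apache.kafka.common.serialization.StringDeserializer");
        props.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG,
                "org.apache.kafka.common.serialization.StringDeserializer");
        // A group with no committed offsets starts from the oldest available message.
        props.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "earliest");
        return props;
    }

    public static void main(String[] args) {
        // Assumption: "xhb-demo-group" is an arbitrary group id chosen for this sketch.
        Properties props = consumerProps("192.168.184.128:9092", "xhb-demo-group");
        try (KafkaConsumer<String, String> consumer = new KafkaConsumer<>(props)) {
            consumer.subscribe(Collections.singletonList("xhb"));
            // Poll a fixed number of times so the demo terminates on its own.
            for (int i = 0; i < 10; i++) {
                ConsumerRecords<String, String> records = consumer.poll(Duration.ofSeconds(1));
                for (ConsumerRecord<String, String> record : records) {
                    System.out.printf("partition=%d offset=%d value=%s%n",
                            record.partition(), record.offset(), record.value());
                }
            }
        }
    }
}
```

Setting auto.offset.reset to earliest is a choice made here so that messages sent before the consumer started are still shown on its first run; the client default is latest.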
