Kubernetes environment setup

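Create three virtual machines. Make sure their private network uses the same network adapter so that all three sit on the same subnet.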

Creating the cluster with kubeadm

Prerequisites (run on every machine)

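# Install Docker first if it is not already present (assumes the docker-ce yum repository is configured):

# sudo yum -y install docker-ce-20.10.7 docker-ce-cli-20.10.7 containerd.io-1.4.6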

# Enable Docker at boot and start it now

systemctl enable docker --now

# Set the hostname (run the matching command on each machine, one per machine)

hostnamectl set-hostname k8s-master

hostnamectl set-hostname k8s-node1

hostnamectl set-hostname k8s-node2

# Verify

hostname

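# Disable SELinux, the default Linux security policy (permanently in the config file, and with setenforce for the current boot)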

sed -i 's/enforcing/disabled/' /etc/selinux/config

setenforce 0

# Stop and disable the firewall (not needed on cloud servers)

systemctl stop firewalld

systemctl disable firewalld

# Disable swap

swapoff -a # disable for the current boot

sed -ri 's/.*swap.*/#&/' /etc/fstab # disable permanently

free -g # verify: swap must show 0
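To see what the sed expression above does without touching the real /etc/fstab, the following sketch runs it against a throwaway file. The two sample fstab lines are made up for illustration; only the sed expression comes from the step above.

```shell
#!/bin/bash
# Work on a temporary copy so the real /etc/fstab is never modified.
demo_fstab=$(mktemp)
cat > "$demo_fstab" <<'EOF'
/dev/mapper/centos-root /     xfs  defaults 0 0
/dev/mapper/centos-swap swap  swap defaults 0 0
EOF

# Same expression as in the guide: prefix every line that mentions "swap" with '#'.
sed -ri 's/.*swap.*/#&/' "$demo_fstab"

cat "$demo_fstab"

# Count swap entries that are still active (not commented out).
swap_lines_active=$(grep -v '^#' "$demo_fstab" | grep -c swap)
echo "active swap entries: $swap_lines_active"

rm -f "$demo_fstab"
```

Because the pattern matches any line containing the word swap, it is worth reviewing /etc/fstab afterwards in case an unrelated line was commented out as well.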

# Let iptables see bridged traffic: pass bridged IPv4 traffic to the iptables chains

cat > /etc/modules-load.d/k8s.conf <<EOF

br_netfilter

EOF

cat > /etc/sysctl.d/k8s.conf <<EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

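# Load the module now (modules-load.d only takes effect at boot), then apply the sysctl settings

modprobe br_netfilter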

sysctl --system

Installing kubectl, kubeadm, and kubelet

# Add the Aliyun yum repository

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

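# Install version 1.20.9

yum install -y kubelet-1.20.9 kubeadm-1.20.9 kubectl-1.20.9 --disableexcludes=kubernetes

# Enable and start kubelet

systemctl enable kubelet --now

# Check the kubelet status (it is on standby and keeps restarting until kubeadm init or join has run, so it will not stay active yet)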

systemctl status kubelet

Bootstrapping the cluster with kubeadm

- Create a file named images.sh

#!/bin/bash

images=(

kube-apiserver:v1.20.9

kube-proxy:v1.20.9

kube-controller-manager:v1.20.9

kube-scheduler:v1.20.9

coredns:1.7.0

etcd:3.4.13-0

pause:3.2

)

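# Note: kubeadm only reuses pre-pulled images when --image-repository in kubeadm init points at the same registry path; otherwise it pulls its own copies.

for imageName in "${images[@]}" ; do

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName

# docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName k8s.gcr.io/$imageName

done

- Make it executable and run it

chmod +x ./images.sh && ./images.sh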

Configuring /etc/hosts

vi /etc/hosts

172.31.0.4 cluster-endpoint

172.31.0.2 node1

172.31.0.3 node2

# After editing, test with ping

ping cluster-endpoint

ping node1

ping node2

Initializing the control plane with kubeadm (run on the master only)

kubeadm init \

--apiserver-advertise-address=172.31.0.4 \

--control-plane-endpoint=cluster-endpoint \

--image-repository registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images \

--kubernetes-version v1.20.9 \

--service-cidr=10.96.0.0/16 \

--pod-network-cidr=192.168.0.0/16

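# If you change these ranges, make sure the service CIDR, the pod CIDR, and the node network do not overlap. (Outside a cloud environment, 192.168.0.0/16 may collide with your local network; in that case use another range such as --pod-network-cidr=10.244.0.0/16.)

- When initialization finishes on the master, save the output below

- Set up the pod network first, then join the worker nodes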
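The non-overlap requirement can be checked before running kubeadm init. Below is a minimal pure-bash sketch; the helper names (ip_to_int, cidr_range, cidrs_overlap) are hypothetical, and 172.31.0.0/24 is only an assumed node subnet based on the host addresses used in this guide.

```shell
#!/bin/bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Print "first last" (as integers) for a CIDR block such as 10.96.0.0/16.
cidr_range() {
  local ip=${1%/*} prefix=${1#*/}
  local base mask
  base=$(ip_to_int "$ip")
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  echo "$(( base & mask )) $(( (base & mask) | (~mask & 0xFFFFFFFF) ))"
}

# Succeed (exit status 0) when the two CIDR blocks share at least one address.
cidrs_overlap() {
  local s1 e1 s2 e2
  read -r s1 e1 <<< "$(cidr_range "$1")"
  read -r s2 e2 <<< "$(cidr_range "$2")"
  (( s1 <= e2 && s2 <= e1 ))
}

# Service CIDR and pod CIDR from the kubeadm init command; node subnet is an assumption.
check() {
  if cidrs_overlap "$1" "$2"; then
    echo "$1 overlaps $2"
  else
    echo "$1 and $2 are disjoint"
  fi
}
check 10.96.0.0/16 192.168.0.0/16
check 192.168.0.0/16 172.31.0.0/24
check 192.168.0.0/16 192.168.1.0/24
```

The last call shows the failure case: a home or lab network on 192.168.1.0/24 falls inside the default pod range, which is the situation the comment above warns about.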

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

# Run these on the master node first

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

# If you are root, you can run this instead

export KUBECONFIG=/etc/kubernetes/admin.conf

# Choose and deploy a pod network add-on

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

# Run this to join an additional control-plane (master) node

kubeadm join cluster-endpoint:6443 --token tbi1jv.9qp9rzap3tjhp61y \

--discovery-token-ca-cert-hash sha256:46847d2f47dd4fd8a567eb947d244722bfbea6f780ebb66f1a3d66fa1aa3a82d \

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

# Run this to join a worker node

kubeadm join cluster-endpoint:6443 --token tbi1jv.9qp9rzap3tjhp61y \

--discovery-token-ca-cert-hash sha256:46847d2f47dd4fd8a567eb947d244722bfbea6f780ebb66f1a3d66fa1aa3a82d

Now check the node list on the master

# First run these (if not done already)

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

# Then list the nodes

[root@i-wgt9mh3x ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master NotReady control-plane,master 6m11s v1.20.9

Installing the Calico network add-on

[root@i-wgt9mh3x ~]# curl https://docs.projectcalico.org/manifests/calico.yaml -O

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 197k 100 197k 0 0 16514 0 0:00:12 0:00:12 --:--:-- 22016

[root@i-wgt9mh3x ~]# ls

calico.yaml images.sh

[root@i-wgt9mh3x ~]# kubectl apply -f calico.yaml

configmap/calico-config created

.....

# If kubeadm init did not use --pod-network-cidr=192.168.0.0/16, uncomment this setting in calico.yaml and change it to match before applying the file

[root@i-wgt9mh3x ~]# cat calico.yaml | grep 192.168

# value: "192.168.0.0/16"

# Check the node status

[root@i-wgt9mh3x ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready control-plane,master 16m v1.20.9

# Join the worker nodes (run on node1 and node2)

kubeadm join cluster-endpoint:6443 --token tbi1jv.9qp9rzap3tjhp61y \

--discovery-token-ca-cert-hash sha256:46847d2f47dd4fd8a567eb947d244722bfbea6f780ebb66f1a3d66fa1aa3a82d

# List the nodes again

[root@i-wgt9mh3x ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master Ready control-plane,master 20m v1.20.9

k8s-node1 NotReady <none> 4s v1.20.9

k8s-node2 NotReady <none> 1s v1.20.9

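Wait until every pod is Running. Once all nodes report Ready, the cluster has been deployed successfully.

# If the join token has expired (kubeadm tokens are valid for 24 hours by default)

# Generate a new one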

[root@i-wgt9mh3x ~]# kubeadm token create --print-join-command

kubeadm join cluster-endpoint:6443 --token 7flayn.le264xh12n2ry3hj --discovery-token-ca-cert-hash sha256:46847d2f47dd4fd8a567eb947d244722bfbea6f780ebb66f1a3d66fa1aa3a82d

# Run the printed command on each worker node to join it

k8s-dashboard

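Download the version that matches your cluster

# Download and apply the manifest

kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.3.1/aio/deploy/recommended.yaml

# If the download fails, fetch the file elsewhere (for example with Xunlei), upload it to the server, and apply the local copy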

[root@i-wgt9mh3x ~]# kubectl apply -f recommended.yaml

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

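By default the dashboard is reachable only from inside the cluster (ClusterIP). Change the Service type to NodePort to expose a port.

# Edit the Service; the change is applied when you save

kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard

# Search for /type and change it to type: NodePort

Before: type: ClusterIP

After: type: NodePort

Look up the assigned port with kubectl get svc -n kubernetes-dashboard, open it in the security group, then access the dashboard from outside over HTTPS.

Create the account file dashboard-svc-account.yaml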

apiVersion: v1
kind: ServiceAccount
metadata:
  name: dashboard-admin
  namespace: kube-system
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: dashboard-admin
subjects:
  - kind: ServiceAccount
    name: dashboard-admin
    namespace: kube-system
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io

Apply the account file

kubectl apply -f dashboard-svc-account.yaml

Getting the token

[root@i-wgt9mh3x ~]# kubectl get secret -n kube-system |grep admin|awk '{print $1}'

dashboard-admin-token-qt2n5

# Pass the secret name from the previous step to the next command

[root@i-wgt9mh3x ~]# kubectl describe secret dashboard-admin-token-qt2n5 -n kube-system|grep '^token'|awk '{print $2}'

# The resulting token

eyJhbGciOiJSUzI1NiIsImtpZCI6InVsemhnZWtOVFByZERYVHk0cEl0Z1BteURYVHRsUXBqOWw1WHNxMjFwcU0ifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tcXQybjUiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiZGQxZmMxNjktNzJiMS00ZmNkLWJmMTAtN2FlMTcxYzFiYzI2Iiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.BAtp47SKRI6RbsCiYlgtcpQQUB2mUNwZiQH44jiMdPCOxro9YCyqGU_-HZhcX_EU8yQX1cVx7__F7HhKpHHRTKmX7mscjuDrdiT1IBuaKo-rcPP91snNwUtyDATGLSJaouZ7h5_H3nfbU05nTysUHLxUJARWwT1jzZL_nVyWQVb9GCr2zw2kl9h5RLb4LsuLgLRKQtfgl8emWKQfckD2wn2pO2V9ugOT9_weCcMYPXmxhSrh9IYS8dh84B-7TJjPplnFXFrbC4qNZM2ijOcgJ1-gCbMvSvpAn8FmjdOMffbhrc_QEtan6wC3nsJxJuB47hLeX-lVFhKV0lr2NcxpRQ

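Open the dashboard and paste the token to log in

Basic Kubernetes usage

Namespaces

Namespaces isolate resources, but they do not isolate the network.

# Create a namespace (command line)

kubectl create ns dev

# Delete a namespace (command line)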

kubectl delete ns dev

[root@i-wgt9mh3x ~]# vi dev-ns.yaml

apiVersion: v1
kind: Namespace
metadata:
  name: dev

# Create the namespace from the YAML file

[root@i-wgt9mh3x ~]# kubectl apply -f dev-ns.yaml

namespace/dev created

[root@i-wgt9mh3x ~]# kubectl get ns

NAME STATUS AGE

default Active 166m

dev Active 5s

kube-node-lease Active 166m

kube-public Active 166m

kube-system Active 166m

kubernetes-dashboard Active 117m

# Delete the namespace

[root@i-wgt9mh3x ~]# kubectl delete -f dev-ns.yaml

namespace "dev" deleted

[root@i-wgt9mh3x ~]# kubectl get ns

NAME STATUS AGE

default Active 168m

kube-node-lease Active 168m

kube-public Active 168m

kube-system Active 168m

kubernetes-dashboard Active 120m

[root@i-wgt9mh3x ~]#

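- create vs. apply: create can only create a resource; apply creates the resource or, when the manifest has changed, updates it.

Pod

A Pod is the smallest deployable unit in Kubernetes.

# Run a pod named mynginx from the nginx image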

[root@k8s-master ~]# kubectl run mynginx --image=nginx

pod/mynginx created

# Watch the pod in the default namespace (-w streams status changes)

[root@k8s-master ~]# kubectl get pod mynginx -w

NAME READY STATUS RESTARTS AGE

mynginx 0/1 ContainerCreating 0 22s

mynginx 1/1 Running 0 52s

# Show more details

[root@k8s-master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

mynginx 1/1 Running 0 2m11s 192.168.169.131 k8s-node2 <none> <none>

# Test access (the pod IP is reachable only from inside the cluster)

[root@k8s-master ~]# curl 192.168.169.131

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

...

# Enter the container

[root@k8s-master ~]# kubectl exec -it mynginx -- /bin/bash

root@mynginx:/# cd /usr/share/nginx/html/

# Change the contents of the index page, index.html

root@mynginx:/usr/share/nginx/html# echo "hello nginx..." > index.html

root@mynginx:/usr/share/nginx/html# exit

exit

[root@k8s-master ~]# curl 192.168.169.131

hello nginx...

[root@k8s-master ~]#

Doing the same from the dashboard

- Pod details

Create a pod from YAML in the web UI

Wait for the image to be pulled

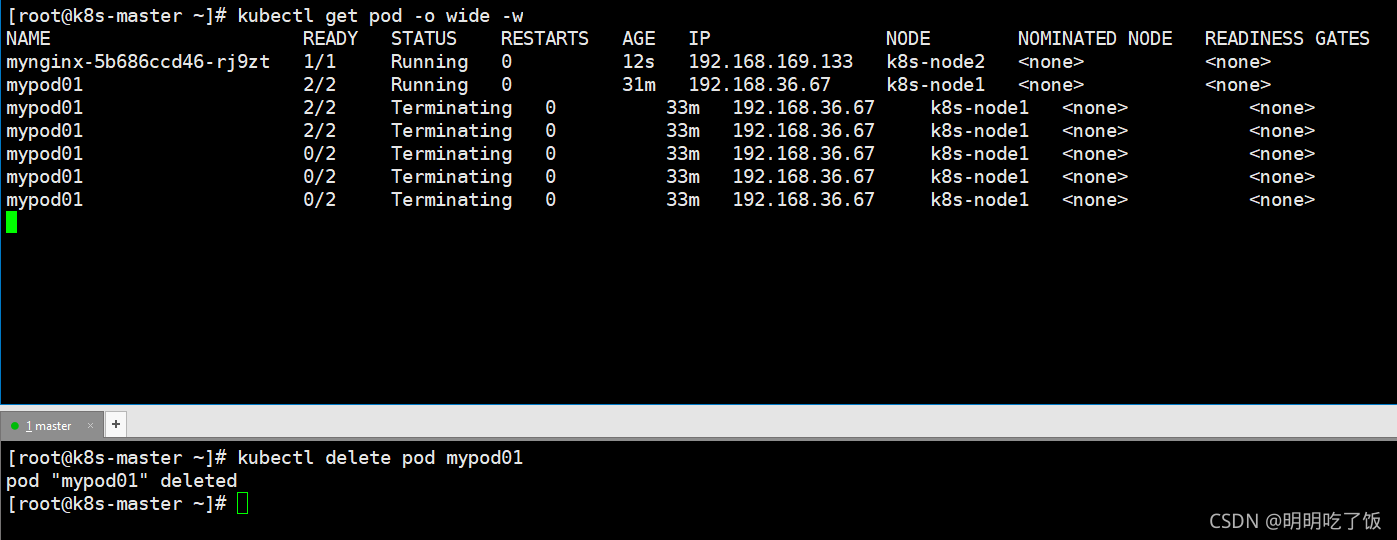

## Running two containers in one pod

[root@k8s-master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

mypod01 2/2 Running 0 11m 192.168.36.67 k8s-node1 <none> <none>

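# Q: Can containers in the same pod reach each other via 127.0.0.1?

Yes. nginx can be reached from the tomcat container.

Pod networking, illustrated: the containers in a pod share one IP address, so they can reach each other directly. For the same reason, two containers in one pod cannot listen on the same port; for example, you cannot run two nginx containers in one pod.

- Additional note

# When a pod has two containers, use --container to choose which one to enter (the first container is the default)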

[root@k8s-master ~]# kubectl exec -it mypod01 --container=nginx01 -- /bin/bash

root@mypod01:/#

Deployment

Self-healing

Deploy an application

[root@k8s-master ~]# kubectl create deploy mynginx --image=nginx

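If a pod owned by a Deployment is deleted, a replacement is started on a healthy node (a pod created directly with kubectl run is simply gone once it is deleted).

- Deleting a standalone pod

- Deleting a Deployment's pod (a replacement pod is created on a healthy node: self-healing)

Scaling

Scaling from the command line

# Scale out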

kubectl scale --replicas=5 deploy mynginx

# Watch the deployment

kubectl get deploy -w

- Watch output

Set the replica count to 3 in the editor and save to apply

kubectl edit deploy mynginx -n default

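This creates two additional pods (when starting from a single replica).

- Why was no pod created on the master node?

- In Kubernetes the master carries a taint that prevents scheduling. The taint can be removed if you do want pods there.

[root@k8s-master ~]# kubectl describe nodes k8s-master | grep Taint

Taints: node-role.kubernetes.io/master:NoSchedule

- k8s-master does not accept regular pods

Rolling updates

Changing the image version of a running deployment starts a new pod and then terminates an old one, step by step, so the application stays online.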

# Set the new image (--record stores this command in the rollout history)

[root@k8s-master ~]# kubectl set image deploy mynginx nginx=nginx:1.16.1 --record

deployment.apps/mynginx image updated

# Watch the status

[root@k8s-master ~]# kubectl get pod -w

NAME READY STATUS RESTARTS AGE

mynginx-5b686ccd46-6jpr9 1/1 Running 0 42m

mynginx-5b686ccd46-f625f 1/1 Running 0 47m

mynginx-5b686ccd46-rdsdq 1/1 Running 0 23m

mynginx-f9cbbdc9f-bxqj2 0/1 Pending 0 0s

mynginx-f9cbbdc9f-bxqj2 0/1 Pending 0 0s

mynginx-f9cbbdc9f-bxqj2 0/1 ContainerCreating 0 0s

mynginx-f9cbbdc9f-bxqj2 0/1 ContainerCreating 0 0s

mynginx-f9cbbdc9f-bxqj2 1/1 Running 0 52s

mynginx-5b686ccd46-rdsdq 1/1 Terminating 0 24m

mynginx-f9cbbdc9f-2n25n 0/1 Pending 0 0s

mynginx-f9cbbdc9f-2n25n 0/1 Pending 0 0s

mynginx-f9cbbdc9f-2n25n 0/1 ContainerCreating 0 0s

mynginx-5b686ccd46-rdsdq 1/1 Terminating 0 24m

mynginx-5b686ccd46-rdsdq 0/1 Terminating 0 24m

mynginx-f9cbbdc9f-2n25n 0/1 ContainerCreating 0 1s

mynginx-f9cbbdc9f-2n25n 1/1 Running 0 4s

mynginx-5b686ccd46-f625f 1/1 Terminating 0 48m

mynginx-f9cbbdc9f-sjvsc 0/1 Pending 0 0s

mynginx-f9cbbdc9f-sjvsc 0/1 Pending 0 0s

mynginx-f9cbbdc9f-sjvsc 0/1 ContainerCreating 0 0s

mynginx-5b686ccd46-f625f 1/1 Terminating 0 48m

mynginx-f9cbbdc9f-sjvsc 0/1 ContainerCreating 0 1s

mynginx-5b686ccd46-f625f 0/1 Terminating 0 48m

mynginx-5b686ccd46-rdsdq 0/1 Terminating 0 24m

mynginx-5b686ccd46-rdsdq 0/1 Terminating 0 24m

mynginx-5b686ccd46-f625f 0/1 Terminating 0 48m

mynginx-5b686ccd46-f625f 0/1 Terminating 0 48m

mynginx-f9cbbdc9f-sjvsc 1/1 Running 0 53s

mynginx-5b686ccd46-6jpr9 1/1 Terminating 0 44m

mynginx-5b686ccd46-6jpr9 1/1 Terminating 0 44m

mynginx-5b686ccd46-6jpr9 0/1 Terminating 0 44m

mynginx-5b686ccd46-6jpr9 0/1 Terminating 0 44m

mynginx-5b686ccd46-6jpr9 0/1 Terminating 0 44m

Rolling back

# View the revision history

[root@k8s-master ~]# kubectl rollout history deploy mynginx

deployment.apps/mynginx

REVISION CHANGE-CAUSE

1 <none>

2 kubectl set image deploy mynginx nginx=nginx:1.16.1 --record=true

# Roll back to a specific revision (this is also a rolling update; --to-revision=1 selects the target revision)

[root@k8s-master ~]# kubectl rollout undo deploy mynginx --to-revision=1

deployment.apps/mynginx rolled back

# Confirm that the image is back to the original nginx

[root@k8s-master ~]# kubectl get deploy mynginx -oyaml |grep image

f:imagePullPolicy: {}

f:image: {}

- image: nginx

imagePullPolicy: Always

# Subcommands available under kubectl rollout

Available Commands:

history View rollout history

pause Mark the provided resource as paused

restart Restart a resource

resume Resume a paused resource

status Show the status of the rollout

undo Undo a previous rollout

- Other workloads

Service

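ClusterIP

A ClusterIP Service is reachable only from inside the cluster.

A Service gives a group of pods one shared, stable IP address for clients to use.

# Expose the mynginx deployment: Service port 8000 maps to container port 80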

[root@k8s-master ~]# kubectl expose deploy mynginx --port=8000 --target-port=80

service/mynginx exposed

[root@k8s-master ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 8h

mynginx ClusterIP 10.96.189.140 <none> 8000/TCP 2s

Change the index page in each of the three pods (run one command per pod)

echo 1111 > /usr/share/nginx/html/index.html

echo 2222 > /usr/share/nginx/html/index.html

echo 3333 > /usr/share/nginx/html/index.html

Access the Service

[root@k8s-master ~]# curl 10.96.189.140:8000

1111

[root@k8s-master ~]# curl 10.96.189.140:8000

2222

[root@k8s-master ~]# curl 10.96.189.140:8000

3333

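- The default type is ClusterIP, a cluster-internal IP that cannot be reached from outside the cluster.

- Inside a container the Service can also be reached by DNS name, following the pattern <service>.<namespace>.svc

NodePort

Exposes a random port (between 30000 and 32767) on every node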
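As a small illustration of the naming rule above, the sketch below builds the DNS name for a Service. The helper name svc_dns_name is hypothetical; the name only resolves from inside a pod, where cluster DNS is available.

```shell
#!/bin/bash
# Build the in-cluster DNS name <service>.<namespace>.svc for a Service.
# The namespace defaults to "default" when omitted.
svc_dns_name() {
  local service=$1 namespace=${2:-default}
  echo "${service}.${namespace}.svc"
}

svc_dns_name mynginx default

# From a shell inside any pod, the Service created above could then be called with:
#   curl "http://mynginx.default.svc:8000"
```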

[root@k8s-master ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 21h

# Expose the deployment on Service port 8000 as a NodePort

[root@k8s-master ~]# kubectl expose deploy mynginx --port=8000 --target-port=80 --type=NodePort

service/mynginx exposed

[root@k8s-master ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 21h

mynginx NodePort 10.96.29.32 <none> 8000:30096/TCP 5s

# Access it through the server's IP and the node port

[root@k8s-master ~]# curl 139.198.164.86:30096

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

Ingress

Installation

# Download the manifest

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v0.47.0/deploy/static/provider/baremetal/deploy.yaml

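# Change the image

vi deploy.yaml

# Set the controller's image value to:

registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/ingress-nginx-controller:v0.46.0

# Apply the manifest, then check the result

kubectl apply -f deploy.yaml

kubectl get pod,svc -n ingress-nginx

# Look up the Service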

[root@k8s-master ~]# kubectl get svc -A | grep ingress

ingress-nginx ingress-nginx-controller NodePort 10.96.81.134 <none> 80:30533/TCP,443:30726/TCP 5m12s

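Test access (using the node ports shown above)

http://<node-ip>:30533

https://<node-ip>:30726

Usage

Prepare the deployment file ingress-deploy.yaml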

apiVersion: apps/v1
kind: Deployment
metadata:
  name: hello-server
spec:
  replicas: 2
  selector:
    matchLabels:
      app: hello-server
  template:
    metadata:
      labels:
        app: hello-server
    spec:
      containers:
        - name: hello-server
          image: registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/hello-server
          ports:
            - containerPort: 9000
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx-demo
  name: nginx-demo
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-demo
  template:
    metadata:
      labels:
        app: nginx-demo
    spec:
      containers:
        - image: nginx
          name: nginx
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: nginx-demo
  name: nginx-demo
spec:
  selector:
    app: nginx-demo
  ports:
    - port: 8000
      protocol: TCP
      targetPort: 80
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: hello-server
  name: hello-server
spec:
  selector:
    app: hello-server
  ports:
    - port: 8000
      protocol: TCP
      targetPort: 9000

Prepare the rules file ingress-test.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-host-bar
spec:
  ingressClassName: nginx
  rules:
    - host: "hello.k8s.com" # requests for hello.k8s.com are forwarded to the hello-server Service on port 8000
      http:
        paths:
          - pathType: Prefix
            path: "/"
            backend:
              service:
                name: hello-server
                port:
                  number: 8000
    - host: "demo.k8s.com"
      http:
        paths:
          - pathType: Prefix
            path: "/nginx" # the request is passed on with this path, so the backend must be able to serve it; otherwise it returns 404
            backend:
              service:
                name: nginx-demo # as with a Java gateway, path rewriting can strip the /nginx prefix
                port:
                  number: 8000

# Apply the rules and list them

[root@k8s-master ~]# kubectl apply -f ingress-test.yaml

ingress.networking.k8s.io/ingress-host-bar created

[root@k8s-master ~]# kubectl get ing -A

NAMESPACE NAME CLASS HOSTS ADDRESS PORTS AGE

default ingress-host-bar nginx hello.k8s.com,demo.k8s.com 80 3s

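Map the host names in the hosts file of your own machine

Test from your machine: the request does reach nginx in the pod

When the path does not match any rule, the nginx error page shown comes from the ingress controller's own nginx, not from the pod

Testing path rewriting (see the official documentation)

Update the rules file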

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-host-bar
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /$2 # enable path rewriting
spec:
  ingressClassName: nginx
  rules:
    - host: "hello.k8s.com"
      http:
        paths:
          - pathType: Prefix
            path: "/"
            backend:
              service:
                name: hello-server
                port:
                  number: 8000
    - host: "demo.k8s.com"
      http:
        paths:
          - pathType: Prefix
            path: /nginx(/|$)(.*) # demo.k8s.com/nginx is rewritten to demo.k8s.com/ and demo.k8s.com/nginx/111 to demo.k8s.com/111
            backend:
              service:
                name: nginx-demo
                port:
                  number: 8000

Edit ingress-test.yaml as above

# After editing, apply it again (or delete the Ingress, edit, and recreate it)

kubectl apply -f ingress-test.yaml
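The effect of the path pattern and the /$2 rewrite target can be tried locally. The sketch below only emulates the regular expression with sed so the capture groups are easy to see; it does not talk to the ingress controller, and the helper name rewrite_path is hypothetical.

```shell
#!/bin/bash
# Emulate path "/nginx(/|$)(.*)" with rewrite-target "/$2":
# group 1 is the "/" after /nginx (or nothing at end of path),
# group 2 is the rest of the path, which becomes the new path.
rewrite_path() {
  printf '%s\n' "$1" | sed -E 's#^/nginx(/|$)(.*)#/\2#'
}

for p in /nginx /nginx/ /nginx/111 /nginxabc; do
  printf '%-12s -> %s\n' "$p" "$(rewrite_path "$p")"
done
```

The last path, /nginxabc, is left unchanged because the expression requires either a slash or the end of the path directly after /nginx.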

- Rate limiting with Ingress

Create ingress-test01.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-limit-rate
  annotations:
    nginx.ingress.kubernetes.io/limit-rps: "1"
spec:
  ingressClassName: nginx
  rules:
    - host: "haha.k8s.com"
      http:
        paths:
          - pathType: Exact
            path: "/"
            backend:
              service:
                name: nginx-demo
                port:
                  number: 8000

Apply the rate-limit rule

# Add the host name mapping to your hosts file

139.198.164.86 haha.k8s.com

# Apply

kubectl create -f ingress-test01.yaml

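- Normal response

- Requests sent too quickly (rate limited)

- Kubernetes network model diagram

Storage abstraction

Why use an NFS file server?

If a pod mounts a directory on the node it runs on (node1) and is later destroyed and recreated on node2, its data is still on node1, so the new pod no longer sees the same data.

Setting up the environment

Install the NFS tools

# Install on all machines

yum install -y nfs-utils

- Server node (the master acts as the NFS server)

# On the NFS server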

echo "/nfs/data/ *(insecure,rw,sync,no_root_squash)" > /etc/exports

mkdir -p /nfs/data

systemctl enable rpcbind --now

systemctl enable nfs-server --now

# Make the export take effect

exportfs -r

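Testing the setup

- Client nodes (the other nodes mount from the server)

# On a client node: list the directories the server exports

showmount -e 172.31.0.4

# Mount the server's shared directory at the local path /nfs/data

mkdir -p /nfs/data

# Format: mount -t nfs <server>:<exported directory> <local mount point>

mount -t nfs 172.31.0.4:/nfs/data /nfs/data

# Write a test file

echo "hello nfs server" > /nfs/data/test.txt

# Check on the master: the file was written

[root@k8s-master data]# cat test.txt

hello nfs server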

Mounting NFS directly in a pod

Create the deployment file nfs-test.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx-pv-demo
  name: nginx-pv-demo
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-pv-demo
  template:
    metadata:
      labels:
        app: nginx-pv-demo
    spec:
      containers:
        - image: nginx
          name: nginx
          volumeMounts:
            - name: html
              mountPath: /usr/share/nginx/html
      volumes:
        - name: html # must match the volumeMounts name above
          nfs:
            server: 172.31.0.4 # NFS server address
            path: /nfs/data/nginx-pv # exported directory to mount

# Create /nfs/data/nginx-pv first, otherwise the pods cannot start

mkdir /nfs/data/nginx-pv

# Apply the deployment

kubectl apply -f nfs-test.yaml

# Set the index page

echo "hello nginx" > /nfs/data/nginx-pv/index.html

# Look up the pod IPs inside the cluster

[root@k8s-master nginx-pv]# kubectl get pods -o wide |grep nginx-pv-demo

nginx-pv-demo-5ffb6666b-dqm7q 1/1 Running 0 5m27s 192.168.36.87 k8s-node1 <none> <none>

nginx-pv-demo-5ffb6666b-qmllk 1/1 Running 0 5m27s 192.168.169.150 k8s-node2 <none> <none>

# Test access

[root@k8s-master nginx-pv]# curl 192.168.36.87

hello nginx

[root@k8s-master nginx-pv]# curl 192.168.169.150

hello nginx

PV&PVC

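PV: Persistent Volume, which stores the data an application needs to persist at a specified location

PVC: Persistent Volume Claim, which declares the specification of the persistent volume an application needs

- Create the PV pool

Static PV provisioning

# On the NFS server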

mkdir -p /nfs/data/01

mkdir -p /nfs/data/02

mkdir -p /nfs/data/03

- Create the PVs (persistent volumes), saved here as pv-test.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv01-10m
spec:
  capacity:
    storage: 10M
  accessModes:
    - ReadWriteMany
  storageClassName: nfs
  nfs:
    path: /nfs/data/01
    server: 172.31.0.4
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv02-1gi
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteMany
  storageClassName: nfs
  nfs:
    path: /nfs/data/02
    server: 172.31.0.4
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv03-3gi
spec:
  capacity:
    storage: 3Gi
  accessModes:
    - ReadWriteMany
  storageClassName: nfs
  nfs:
    path: /nfs/data/03
    server: 172.31.0.4

# Apply

[root@k8s-master ~]# kubectl apply -f pv-test.yaml

persistentvolume/pv01-10m created

persistentvolume/pv02-1gi created

persistentvolume/pv03-3gi created

Create a PVC that binds to one of the NFS PVs (pvc.yml)

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nginx-pvc
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 200Mi
  storageClassName: nfs

# Apply and check the status

[root@k8s-master ~]# kubectl apply -f pvc.yml

persistentvolumeclaim/nginx-pvc created

[root@k8s-master ~]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv01-10m 10M RWX Retain Available nfs 19m

pv02-1gi 1Gi RWX Retain Bound default/nginx-pvc nfs 19m

pv03-3gi 3Gi RWX Retain Available nfs 19m

[root@k8s-master ~]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

nginx-pvc Bound pv02-1gi 1Gi RWX nfs 11s

[root@k8s-master ~]#
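The 200Mi claim ended up on pv02-1gi rather than the larger pv03-3gi. As far as I understand the binder, among PVs with a matching storage class and access mode it prefers the smallest one that is large enough. The sketch below only imitates that selection rule in plain bash for the three capacities above; pick_pv is a hypothetical helper, not a Kubernetes command.

```shell
#!/bin/bash
# Pick the smallest volume whose capacity (in bytes) satisfies the request.
# Arguments: <requested bytes> <name=bytes> [<name=bytes> ...]
pick_pv() {
  local request=$1; shift
  local best_name="" best_size=0 entry name size
  for entry in "$@"; do
    name=${entry%%=*}
    size=${entry#*=}
    if (( size >= request )) && { [ -z "$best_name" ] || (( size < best_size )); }; then
      best_name=$name
      best_size=$size
    fi
  done
  echo "$best_name"
}

# 10M is a decimal unit (10 * 1000^2); Mi and Gi are binary units (1024^2, 1024^3).
request=$(( 200 * 1024 * 1024 ))
pick_pv "$request" \
  pv01-10m=$(( 10 * 1000 * 1000 )) \
  pv02-1gi=$(( 1024 ** 3 )) \
  pv03-3gi=$(( 3 * 1024 ** 3 ))
```

pv01-10m is too small for 200Mi, and of the two remaining candidates pv02-1gi wastes less space, which matches the kubectl get pv output above.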

- Create pods that use the PVC

apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx-deploy-pvc
  name: nginx-deploy-pvc
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-deploy-pvc
  template:
    metadata:
      labels:
        app: nginx-deploy-pvc
    spec:
      containers:
        - image: nginx
          name: nginx
          volumeMounts:
            - name: html
              mountPath: /usr/share/nginx/html
      volumes:
        - name: html
          persistentVolumeClaim:
            claimName: nginx-pvc

... apply this deployment

This time the volume is mounted from pv02

Write a file under pv02 and check

[root@k8s-master ~]# cd /nfs/data/02

[root@k8s-master 02]# echo 111 > index.html

[root@k8s-master 02]# kubectl get pod -o wide | grep pvc

nginx-deploy-pvc-79fc8558c7-crg8q 1/1 Running 0 7m51s 192.168.36.89 k8s-node1 <none> <none>

nginx-deploy-pvc-79fc8558c7-ld89r 1/1 Running 0 7m51s 192.168.169.152 k8s-node2 <none> <none>

[root@k8s-master 02]# curl 192.168.36.89

111

[root@k8s-master 02]# curl 192.168.169.152

111

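Dynamic PV provisioning

With dynamic provisioning, a volume of exactly the requested size is created on demand for the pod to use.

ConfigMap

A ConfigMap is a set of configuration data

1. Create the configuration file and load it into a ConfigMap

[root@k8s-master ~]# echo "appendonly yes" > redis.conf

[root@k8s-master ~]# kubectl create cm redis-conf --from-file=redis.conf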

[root@k8s-master ~]# kubectl get cm | grep redis

redis-conf 1 17s

# Show the ConfigMap's contents

[root@k8s-master ~]# kubectl describe cm redis-conf

Name: redis-conf

Namespace: default

Labels: <none>

Annotations: <none>

Data

====

redis.conf:

----

appendonly yes

Events: <none>

Next, mount redis.conf from the redis-conf ConfigMap into the container's /redis-master directory

Create the pod

apiVersion: v1
kind: Pod
metadata:
  name: redis
spec:
  containers:
    - name: redis
      image: redis
      command:
        - redis-server
        - "/redis-master/redis.conf" # path inside the redis container
      ports:
        - containerPort: 6379
      volumeMounts:
        - mountPath: /data
          name: data
        - mountPath: /redis-master # directory in the container where the ConfigMap is mounted
          name: config
  volumes:
    - name: data
      emptyDir: {}
    - name: config
      configMap: # volume type
        name: redis-conf # name of the ConfigMap
        items:
          - key: redis.conf
            path: redis.conf # file name created inside the container

Wait for the pod to be created

# Enter the container and check

[root@k8s-master ~]# kubectl exec -it redis -- /bin/bash

root@redis:/data# cat /redis-master/redis.conf

appendonly yes

root@redis:/data# exit

# Edit the ConfigMap

[root@k8s-master ~]# kubectl edit cm redis-conf

Add one more setting to redis.conf (requirepass 123456)

# Check again (wait about a minute)

[root@k8s-master ~]# kubectl exec -it redis -- /bin/bash

# The file has been synced

root@redis:/data# cat /redis-master/redis.conf

appendonly yes

requirepass 123456

# Open the Redis CLI

root@redis:/data# redis-cli

127.0.0.1:6379> config get appendonly

1) "appendonly"

2) "yes"

127.0.0.1:6379> config get requirepass

1) "requirepass"

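2) "" # not in effect yet: the config file is only read at startup, so the container has to be restarted

127.0.0.1:6379>

Restart the container by stopping it

# Find out which node redis is running on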

[root@k8s-master ~]# kubectl get pod redis -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

redis 1/1 Running 1 20m 192.168.169.153 k8s-node2 <none> <none>

# Stop the container on k8s-node2

[root@k8s-node2 data]# docker ps | grep redis

1dcd88b0608b redis "redis-server /redis…" 13 minutes ago Up 13 minutes k8s_redis_redis_default_6b5912eb-d879-4c1b-b171-69530e97ca0d_0

32b2a2524c56 registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/pause:3.2 "/pause" 14 minutes ago Up 14 minutes k8s_POD_redis_default_6b5912eb-d879-4c1b-b171-69530e97ca0d_0

[root@k8s-node2 data]# docker stop 1dcd88b0608b

1dcd88b0608b

# Wait for the container to be restarted, then enter it again

[root@k8s-master ~]# kubectl exec -it redis -- /bin/bash

root@redis:/data# redis-cli

127.0.0.1:6379> set aa bb

(error) NOAUTH Authentication required. # authentication (the password) is now required

127.0.0.1:6379> exit

# Connect with the password

root@redis:/data# redis-cli -a 123456

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

127.0.0.1:6379> set aa bb

OK

127.0.0.1:6379> config get requirepass

1) "requirepass"

2) "123456"

Secret

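- Scenario

Docker authenticates against a registry with docker login

Without logging in, images in a private repository cannot be pulled (and pushing fails as well)

In Kubernetes, a Secret can hold the registry credentials

Trying to pull from a private repository

Create a pod manifest (private-nginx.yaml) that uses a private nginx image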

apiVersion: v1
kind: Pod
metadata:
  name: private-nginx
spec:
  containers:
    - name: private-nginx
      image: zhaoxingyudocker/nginx-zhaoxingyu:1.0

After applying it, check the pod

[root@k8s-master data]# kubectl get pod private-nginx

NAME READY STATUS RESTARTS AGE

private-nginx 0/1 ImagePullBackOff 0 35s

# Show the details

[root@k8s-master data]# kubectl describe pod private-nginx |grep Warning

Warning Failed 21s (x4 over 96s) kubelet Error: ImagePullBackOff

Warning Failed 2s (x4 over 96s) kubelet Failed to pull image "zhaoxingyudocker/nginx-zhaoxingyu:1.0": rpc error: code = Unknown desc = Error response from daemon: pull access denied for zhaoxingyudocker/nginx-zhaoxingyu, repository does not exist or may require 'docker login': denied: requested access to the resource is denied

Warning Failed 2s (x4 over 96s) kubelet Error: ErrImagePull

# The pull fails with warnings (the image is private and requires a login)

- Using a Secret

# Create the registry credentials

kubectl create secret docker-registry zhaoxingyu-docker \

--docker-username=zhaoxingyudocker \

--docker-password=z1549165516 \

--docker-email=2920991307@qq.com

## Command format

kubectl create secret docker-registry regcred \

--docker-server=<your registry server> \

--docker-username=<your username> \

--docker-password=<your password> \

--docker-email=<your email address>

## View the created secret

[root@k8s-master data]# kubectl get secret zhaoxingyu-docker

NAME TYPE DATA AGE

zhaoxingyu-docker kubernetes.io/dockerconfigjson 1 4m5s
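A docker-registry Secret stores a .dockerconfigjson document, and the auth field inside it is the Base64 encoding of username:password. The sketch below reproduces just that encoding step with made-up credentials (demo-user and demo-pass are placeholders, and registry_auth is a hypothetical helper) to show that the value is encoded, not encrypted.

```shell
#!/bin/bash
# Encode "username:password" the way the auth field of .dockerconfigjson does.
registry_auth() {
  printf '%s:%s' "$1" "$2" | base64
}

auth=$(registry_auth demo-user demo-pass)
echo "auth field: $auth"

# Decoding gives the credentials back, so anyone who can read the Secret can read the password.
decoded=$(printf '%s' "$auth" | base64 -d)
echo "decoded:    $decoded"

# On a real cluster the stored document can be inspected with:
#   kubectl get secret zhaoxingyu-docker -o jsonpath='{.data.\.dockerconfigjson}' | base64 -d
```

For that reason it is worth restricting who can read Secrets in the namespace, and avoiding real passwords in shell history or shared notes.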

apiVersion: v1
kind: Pod
metadata:
  name: private-nginx
spec:
  containers:
    - name: private-nginx
      image: zhaoxingyudocker/nginx-zhaoxingyu:1.0
  imagePullSecrets:
    - name: zhaoxingyu-docker # pull the image using the Secret created above

- Delete the previous pod (private-nginx)

- Apply the updated YAML

[root@k8s-master data]# kubectl get pod private-nginx

NAME READY STATUS RESTARTS AGE

private-nginx 0/1 ImagePullBackOff 0 17m

# Delete the pod that failed to pull

[root@k8s-master data]# kubectl delete -f private-nginx.yaml

pod "private-nginx" deleted

[root@k8s-master data]# kubectl apply -f private-nginx.yaml

pod/private-nginx created

[root@k8s-master data]# kubectl get pod private-nginx

NAME READY STATUS RESTARTS AGE

private-nginx 0/1 ContainerCreating 0 4s

[root@k8s-master data]# kubectl get pod private-nginx

NAME READY STATUS RESTARTS AGE

private-nginx 1/1 Running 0 14s

# With the account credentials the pull succeeds