This deployment runs on an existing k8s cluster and continues from the previous post: Deploying a k8s cluster with kubeadm (CSDN blog)

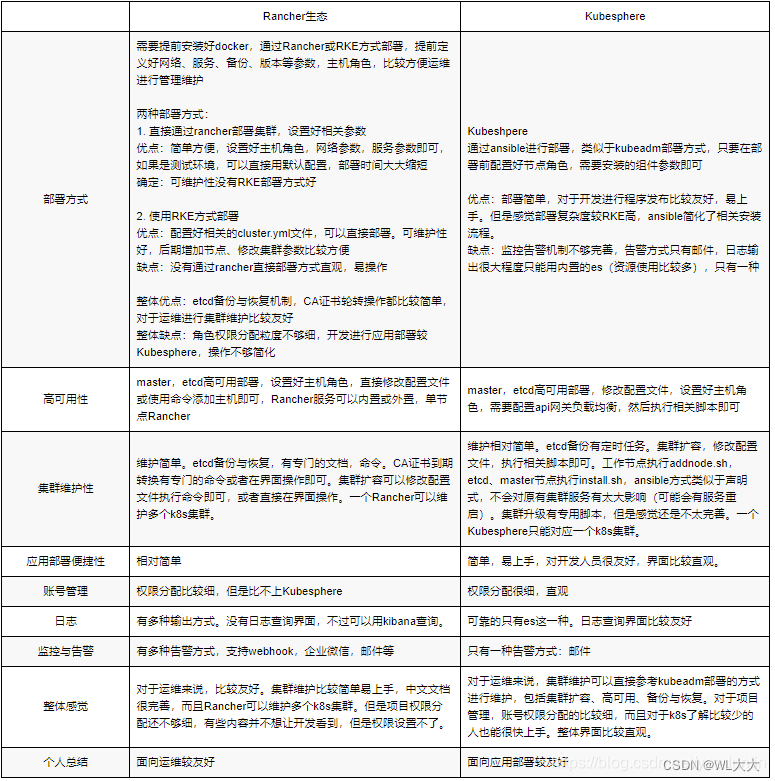

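Rancher vs. KubeSphere

Honestly, I went back and forth on this, since either one would work. Rancher is a good fit for managing multiple clusters, including clusters running in the cloud. KubeSphere offers multi-tenancy and fairly fine-grained permission settings, including for CI/CD pipeline configuration. Since most of my day-to-day work is deploying projects, including building, packaging, and testing, KubeSphere is the better fit for me.

Official KubeSphere documentation: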

https://kubesphere.io/zh/docs/v3.4/installing-on-kubernetes/introduction/prerequisites/

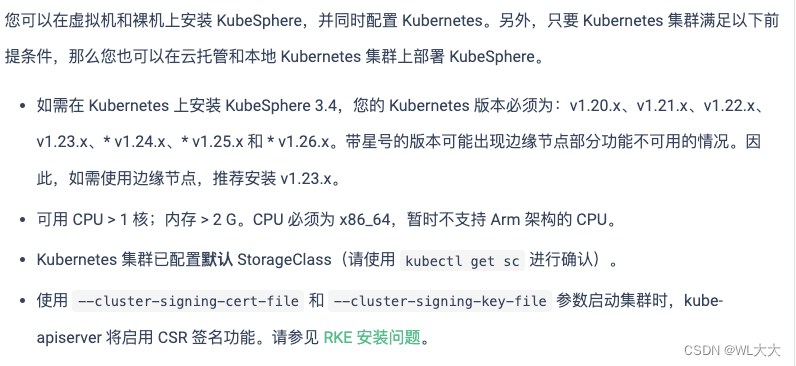

Prerequisites for installing KubeSphere

I. Configure a default storage class

1. Check whether a default StorageClass is configured

[root@longxi-01 ~]# kubectl get storageclass

No resources found
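When this check has to run inside a script, the human-readable output can be parsed instead of read by eye. The helper below is a minimal sketch: the function name `default_storageclass` is hypothetical, and it relies on `kubectl get storageclass` printing `(default)` after the name of the default class.

```shell
# Print the name of the default StorageClass; return non-zero if none exists.
default_storageclass() {
  # With --no-headers each row starts with the class name; the default
  # class is shown as "NAME (default)", so the marker is the second field.
  kubectl get storageclass --no-headers 2>/dev/null |
    awk '$2 == "(default)" { print $1; found = 1 } END { exit !found }'
}
```

Calling `default_storageclass` on the cluster above would print nothing and return a non-zero status, because no class has been created.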

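No StorageClass exists yet, so there is no default either.

2. Create a StorageClass backed by NFS

Install nfs-utils on every node and start the service. The NFS server in this setup is 10.211.55.5.

[root@longxi-01 ~]# yum install -y nfs-utils

Create the directory that will hold the data, then start the server: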

[root@longxi-01 home]# mkdir -p k8s/data

[root@longxi-01 k8s]# vim /etc/exports

/home/k8s/data *(rw,no_root_squash)

[root@longxi-01 k8s]# systemctl start nfs-server.service

systemctl enable nfs-server.service

3. Configure the default storage

Create the YAML file:

vi nfs-sc.yaml

## Create a storage class
apiVersion: storage.k8s.io/v1
# Resource kind: StorageClass
kind: StorageClass
metadata:
  # Name of the storage class (your choice)
  name: nfs-storage
  annotations:
    # Marks this class as the default. KubeSphere needs a default storage class, so this is set to "true".
    storageclass.kubernetes.io/is-default-class: "true"
# Name of the provisioner (your choice); must match PROVISIONER_NAME below
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner
parameters:
  archiveOnDelete: "true"  ## whether to archive the PV's contents when the PV is deleted
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
spec:
  # Run a single replica
  replicas: 1
  # How existing Pods are replaced with new ones
  strategy:
    # Recreate: existing Pods are removed before new ones are created
    type: Recreate
  # Select the Pods managed by this Deployment
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner  # service account, defined further down
      containers:
        - name: nfs-client-provisioner
          image: registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/nfs-subdir-external-provisioner:v4.0.2  # NFS provisioner image
          # resources:
          #   limits:
          #     cpu: 10m
          #   requests:
          #     cpu: 10m
          volumeMounts:
            - name: nfs-client-root  # volume to mount
              mountPath: /persistentvolumes  # mount path inside the container
          env:
            - name: PROVISIONER_NAME  # provisioner name
              value: k8s-sigs.io/nfs-subdir-external-provisioner  # must match the provisioner defined above
            - name: NFS_SERVER  # NFS server address; change it to your own server's IP
              value: 10.211.55.5
            - name: NFS_PATH  # directory exported by the NFS server
              value: /home/k8s/data
      volumes:
        - name: nfs-client-root  # volume name; must match volumeMounts above
          nfs:
            server: 10.211.55.5  # NFS server address, same as above; change it to your own IP
            path: /home/k8s/data  # exported NFS directory, same as above
---
apiVersion: v1
kind: ServiceAccount  # create the service account
metadata:
  name: nfs-client-provisioner  # must match serviceAccountName above
  # replace with namespace where provisioner is deployed
  namespace: default
---
# The ClusterRole, ClusterRoleBinding, Role, and RoleBinding below are all permission bindings. They are not explained further here and can be copied as-is.
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io

In the file above, only two places need to change: replace the IP address in both of them with the IP address of your own NFS server.
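Before applying the manifest, it may be worth confirming from a worker node that the export is actually reachable, since the provisioner Pod mounts it from whichever node it is scheduled on. A minimal sketch, assuming `showmount` from nfs-utils is available on that node; the function name `nfs_export_visible` is hypothetical:

```shell
# Return 0 if the given NFS server lists the given path among its exports.
nfs_export_visible() {
  server=$1
  path=$2
  # "showmount -e" prints one export per line, with the path as first field.
  showmount -e "$server" 2>/dev/null |
    awk -v p="$path" '$1 == p { ok = 1 } END { exit !ok }'
}
```

With the values used in this post, the call would be `nfs_export_visible 10.211.55.5 /home/k8s/data && echo "export is visible"`.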

Apply it:

[root@longxi-01 ~]# kubectl create -f nfs-sc.yaml

[root@longxi-01 ~]# kubectl get pods

# List the storage classes

[root@longxi-01 ~]# kubectl get sc

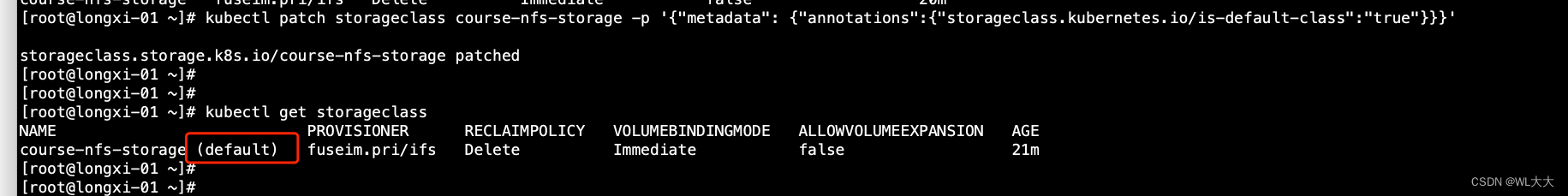
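Rather than reading the `kubectl get pods` output by eye, the Deployment status can be queried directly. This is a sketch under the assumption that the provisioner was deployed unchanged from the manifest above (Deployment `nfs-client-provisioner` in namespace `default`); the function name `provisioner_ready` is hypothetical.

```shell
# Return 0 once at least one provisioner replica reports ready.
provisioner_ready() {
  ready=$(kubectl get deployment nfs-client-provisioner -n default \
    -o jsonpath='{.status.readyReplicas}' 2>/dev/null) || ready=""
  # readyReplicas is absent (empty) while no replica is ready yet.
  [ "${ready:-0}" -ge 1 ]
}
```

If `provisioner_ready` keeps failing, `kubectl describe pod -l app=nfs-client-provisioner` usually shows whether the NFS mount is the problem.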

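Set nfs-storage as the default storage class of the cluster. (In the command below, nfs-storage must match the name of your own StorageClass.) There is no need to run it here, because the annotation in the manifest already makes this class the default.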

kubectl patch storageclass nfs-storage -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'

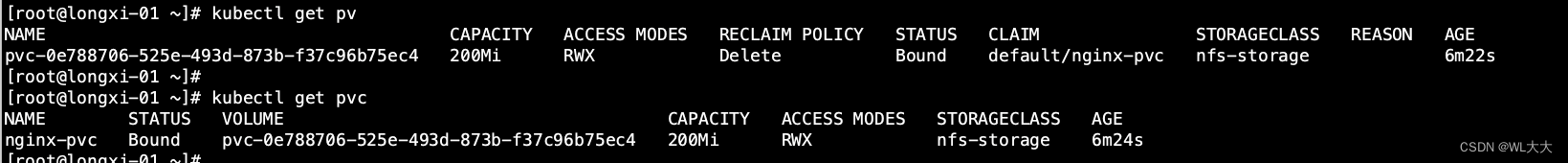

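4. Test the storage class by creating a PVC and letting it provision and bind a PV

Note: as mentioned above, a StorageClass can provision PVs dynamically. The StorageClass is already in place, so now we create a PVC while no PV exists and see whether the PVC gets bound to a PV right away. If it does, a PV was created automatically and bound to the claim.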

vi pvc.yaml

kind: PersistentVolumeClaim  # create a PVC
apiVersion: v1
metadata:
  name: nginx-pvc  # name of the PVC
spec:
  accessModes:  # access mode; ReadWriteMany lets the volume be mounted read-write by many nodes
    - ReadWriteMany
  resources:  # resource parameters of the PVC
    requests:  # requested resources
      storage: 200Mi  # request 200Mi of storage
  storageClassName: nfs-storage  # name of the storage class created above

Apply it:

[root@longxi-01 ~]# kubectl apply -f pvc.yaml
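To confirm that dynamic provisioning worked, the PVC's phase should become `Bound`. The loop below is a minimal sketch that polls for it; the function name `wait_for_pvc_bound` and its default retry count and delay are assumptions chosen for illustration.

```shell
# Poll a PVC until its phase is Bound, or give up after a number of tries.
# Usage: wait_for_pvc_bound <pvc-name> [tries] [delay-seconds]
wait_for_pvc_bound() {
  pvc=$1
  tries=${2:-30}
  delay=${3:-2}
  i=0
  phase=""
  while [ "$i" -lt "$tries" ]; do
    phase=$(kubectl get pvc "$pvc" -o jsonpath='{.status.phase}' 2>/dev/null) || phase=""
    if [ "$phase" = "Bound" ]; then
      echo "$pvc is Bound"
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "$pvc is still '${phase:-unknown}' after $tries checks" >&2
  return 1
}
```

For the claim above, `wait_for_pvc_bound nginx-pvc` should return quickly; afterwards `kubectl get pv` should list a volume that nobody created by hand.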

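II. Minimal installation of KubeSphere

1. Deploy a private registry

A minimal installation does not need a private registry; an offline installation does. I set one up here because I plan to test CI/CD pipelines later, so I deployed it at the same time. It is not covered in detail in this post. If you are not installing a registry, skip straight to step 5.

Generate a self-signed certificate for the domain harbor.docker.com: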

[root@longxi-01 ~]# mkdir -p certs

[root@longxi-01 ~]# openssl req -newkey rsa:4096 -nodes -sha256 -keyout certs/domain.key -x509 -days 36500 -out certs/domain.crt

Generating a RSA private key

...................................................++++

........................................................++++

writing new private key to 'certs/domain.key'

-----

You are about to be asked to enter information that will be incorporated

into your certificate request.

What you are about to enter is what is called a Distinguished Name or a DN.

There are quite a few fields but you can leave some blank

For some fields there will be a default value,

If you enter '.', the field will be left blank.

-----

Country Name (2 letter code) [XX]:

State or Province Name (full name) []:

Locality Name (eg, city) [Default City]:

Organization Name (eg, company) [Default Company Ltd]:

Organizational Unit Name (eg, section) []:

Common Name (eg, your name or your server's hostname) []:harbor.docker.com

Email Address []:

2. Start the Docker registry

mkdir /mnt/registry

docker run -d \
  --restart=always \
  --name registry \
  -v "$(pwd)"/certs:/certs \
  -v /mnt/registry:/var/lib/registry \
  -e REGISTRY_HTTP_ADDR=0.0.0.0:443 \
  -e REGISTRY_HTTP_TLS_CERTIFICATE=/certs/domain.crt \
  -e REGISTRY_HTTP_TLS_KEY=/certs/domain.key \
  -p 443:443 \
  registry:2

3. Configure the registry

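On every machine, add an entry to /etc/hosts that maps the hostname (that is, the registry domain; harbor.docker.com in this example) to the private IP address of the registry host, as shown below.

vim /etc/hosts

10.211.55.5 harbor.docker.com

Run the following commands to copy the certificate into the directory Docker checks for this registry, so that Docker trusts it. The directory name must match the registry domain.

mkdir -p /etc/docker/certs.d/harbor.docker.com

cp certs/domain.crt /etc/docker/certs.d/harbor.docker.com/ca.crt

4. Add the private registry to the Docker configuration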

[root@longxi-01 ~]# vim /usr/lib/systemd/system/docker.service

ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --insecure-registry harbor.docker.com

[root@longxi-01 ~]# systemctl daemon-reload

[root@longxi-01 ~]# systemctl restart docker

5. Install KubeSphere

[root@longxi-01 ~]# kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.4.0/kubesphere-installer.yaml

[root@longxi-01 ~]# kubectl apply -f https://github.com/kubesphere/ks-installer/releases/download/v3.4.0/cluster-configuration.yaml

If you want to enable pluggable components, edit cluster-configuration.yaml before applying it; see the official documentation for details.
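Editing cluster-configuration.yaml requires a local copy, whereas the commands above apply it straight from the URL. One way to get both manifests locally is sketched below; the function name `fetch_ks_manifests` is hypothetical, and the URL layout is taken from the two commands above.

```shell
# Download the two installer manifests of a given release into the
# current directory so cluster-configuration.yaml can be edited first.
fetch_ks_manifests() {
  version=$1
  base="https://github.com/kubesphere/ks-installer/releases/download/${version}"
  for f in kubesphere-installer.yaml cluster-configuration.yaml; do
    # -f: fail on HTTP errors, -L: follow the GitHub release redirect
    curl -fsSL -o "$f" "${base}/${f}" || return 1
  done
}
```

After `fetch_ks_manifests v3.4.0`, set `enabled: true` for the components you want in cluster-configuration.yaml, then run `kubectl apply -f kubesphere-installer.yaml` followed by `kubectl apply -f cluster-configuration.yaml`.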

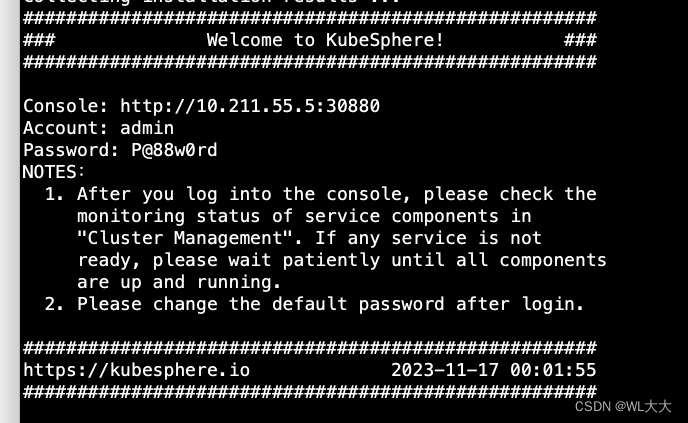

6. Check the logs

[root@longxi-01 ~]# kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l 'app in (ks-install, ks-installer)' -o jsonpath='{.items[0].metadata.name}') -f

Once the log prints its completion message, the deployment is finished. I will cover actual usage another time.
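For an unattended install, the same check can be done without following the log interactively. The sketch below rests on two assumptions that should be verified against your own output: that the installer runs as a Deployment named `ks-installer` in `kubesphere-system`, and that the final banner contains the text `Welcome to KubeSphere`. The function name `ks_install_done` is hypothetical.

```shell
# Return 0 once the installer log contains the completion banner.
ks_install_done() {
  kubectl logs -n kubesphere-system deploy/ks-installer --tail=200 2>/dev/null |
    grep -q 'Welcome to KubeSphere'
}
```

A simple wait loop would be `until ks_install_done; do sleep 30; done`.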
