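Web crawler: housing rental listings on the Haida Qidian forum (海大起点论坛)

Multiple coroutines (gevent)

CSV storage

Imported packages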

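import gevent
from gevent import monkey

# Patch the standard library before requests is imported,
# so that its sockets cooperate with gevent
monkey.patch_all(select=False)

from gevent.queue import Queue
import csv
import re
import requests
from bs4 import BeautifulSoup
from fake_useragent import UserAgent

# Assumed values: the listing uses qdHome and qdRent without defining them.
# They are inferred here from the qdurl template in layer_02.
qdHome = 'http://www.ihain.cn/'
qdRent = 'http://www.ihain.cn/forum.php?mod=forumdisplay&fid=175&typeid=24&filter=typeid&page=1'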

Crawling workflow
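
Going by the code in the next section, the crawl runs in two stages: a pool of workers first drains a queue of listing-page URLs and collects thread URLs, then a second pool drains the thread URLs and produces one row per thread. The sketch below shows only that control flow. It is a stand-in, not the author's code: it swaps gevent greenlets for standard-library threads so it runs without third-party packages, and `run_workers`, `fake_list_page` and `fake_thread_page` are hypothetical names that replace the real download and parse steps.

```python
import queue
import threading


def run_workers(tasks, handler, n_workers=10):
    """Drain `tasks` with `n_workers` workers and collect handler results."""
    work = queue.Queue()
    for task in tasks:
        work.put_nowait(task)

    results = []
    lock = threading.Lock()

    def worker():
        while True:
            try:
                # With threads, another worker may empty the queue between an
                # empty() check and the get, so catch queue.Empty instead.
                item = work.get_nowait()
            except queue.Empty:
                return
            out = handler(item)
            with lock:
                results.append(out)

    threads = [threading.Thread(target=worker) for _ in range(n_workers)]
    for th in threads:
        th.start()
    for th in threads:
        th.join()
    return results


def fake_list_page(page):
    """Hypothetical stand-in for: download listing page, return thread URLs."""
    return ['thread-{}-{}'.format(page, i) for i in range(3)]


def fake_thread_page(url):
    """Hypothetical stand-in for: download one thread, return a CSV row."""
    return [url, 'parsed']


# Stage 1: listing pages -> thread URLs
per_page = run_workers(range(1, 5), fake_list_page, n_workers=3)
thread_urls = [url for page in per_page for url in page]

# Stage 2: thread URLs -> rows
rows = run_workers(thread_urls, fake_thread_page, n_workers=3)
print(len(thread_urls), len(rows))
```

In the gevent version, the `while not work.empty(): work.get_nowait()` loop is safe because greenlets only switch at blocking calls such as the HTTP request. With preemptive threads the same loop can race, which is why the sketch catches `queue.Empty`.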

Code

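class CZW_Spider(object):
    def __init__(self, out_fp='czw_rent.csv'):
        # Build the random User-Agent generator. If that fails, store None so
        # the request helpers fall back to their fixed User-Agent string.
        # (The original called an undefined helper, get_funcModule, here.)
        try:
            self.fake_user = UserAgent()
        except Exception as ex:
            print(ex)
            print('>>> fake_useragent is unavailable; using a fixed User-Agent')
            self.fake_user = None
        # Output CSV path. The default file name is an assumption: the original
        # read undefined user, password and out_fp variables at this point.
        self.out_path = out_fp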

    def layer_01(self):
        """
        Demo collection layer: a trial crawl of the first listing page.
        :return: list of [title, host_id, host_name, href] rows
        """

        def get_user_id(tag, user_name=None):
            """
            Resolve the poster's user id from one thread row.
            Anonymous posters get the id '0'.
            :param tag: the <tbody> element of the thread row
            :param user_name: the poster's display name
            :return: host_id, or None if it cannot be resolved
            """
            # '匿名' is the forum's label for anonymous posters
            if user_name == '匿名':
                return '0'
            try:
                user_href = tag.find('cite').a['href']
                return user_href.split('=')[-1]
            except (TypeError, AttributeError):
                print(user_name, ': failed to resolve the user id')
                return None

        # HTML downloader; defaults to the first rental listing page (page=1)
        def Handle(target=None):
            """
            Download a page.
            :return: the response body as text
            """
            # With no target given, fall back to qdRent
            if target is None:
                target = qdRent
            # Random User-Agent, with a fixed fallback.
            # The fallback must be a dict too, not a bare string.
            try:
                headers = {'user-agent': self.fake_user.random}
            except AttributeError:
                headers = {
                    'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/'
                                  '537.36 (KHTML, like Gecko) Chrome/83.0.4103.61 Safari/537.36'}
            Response = requests.get(target, headers=headers)
            Response.raise_for_status()
            # Response.encoding = 'utf-8'
            return Response.text

        res = Handle()
        soup = BeautifulSoup(res, 'html.parser')
        # Locate the thread table
        tags = soup.find('div', id='threadlist').find_all('tbody')
        Out_flow = []
        for tag in tags[2:]:
            # Thread title
            item = tag.find(attrs={'class': 's xst'})
            # Poster name
            host_name = tag.find(attrs={'class': 'by'}).text.strip().split('\n')[0]
            # Poster id (the row is passed explicitly instead of read from the loop scope)
            host_id = get_user_id(tag, user_name=host_name)
            # Thread link
            href = qdHome + tag.find_all('a')[-1]['href']
            Out_flow.append([item.text.strip(), host_id, host_name, href])
        return Out_flow

    def layer_02(self):
        # Collect every rental listing page, then every thread on those pages
        def QUEUE(url_list):
            work = Queue()
            for url in url_list:
                work.put_nowait(url)
            return work

        def get_HTML(target=None):
            """
            Download a page.
            :return: the response body as text
            """
            # With no target given, fall back to qdRent
            if target is None:
                target = qdRent
            # Random User-Agent, with a fixed fallback
            try:
                headers = {'User-Agent': self.fake_user.random}
            except AttributeError:
                headers = {
                    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
                                  '(KHTML, like Gecko) Chrome/84.0.4147.105 Safari/537.36'}
            Response = requests.get(target, headers=headers)
            Response.raise_for_status()
            # Response.encoding = 'utf-8'
            return Response.text

        def crwaler__01():
            def Parser__01(response):
                # Extract the thread URLs from one listing page
                bs = BeautifulSoup(response, 'html.parser')
                tags = bs.find_all('tbody', id=re.compile(r'^normalthread_[0-9]{6}'))
                URL_lists = []
                for tag in tags:
                    URL_Program = qdHome + tag.find('a')['href']
                    URL_lists.append(URL_Program)
                return URL_lists

            while not work_0.empty():
                url = work_0.get_nowait()
                try:
                    html = get_HTML(url)
                except requests.RequestException as e:
                    # Skip a failed page instead of killing this greenlet
                    print(url, e)
                    continue
                URL_LIST = Parser__01(html)
                out_flow_01.append(URL_LIST)

        # Stage 1: queue the listing pages (pages 1 to 199)
        work_0 = Queue()
        qdurl = ('http://www.ihain.cn/forum.php?mod=forumdisplay&fid=175&typeid='
                 '24&typeid=24&filter=typeid&page={page1}')
        out_flow_01 = []
        for x in range(1, 200):
            url = qdurl.format(page1=x)
            work_0.put_nowait(url)
        self.Multi_program(crwaler__01)

        # Stage 2: flatten the per-page URL lists into the thread queue
        work = Queue()
        for url_list in out_flow_01:
            for url in url_list:
                work.put_nowait(url)

        def crwaler_02():
            def get_url(tag):
                # Canonical thread link shown in the thread header
                href = tag.find('td', class_='plc ptm pbn vwthd').find('span', class_='xg1').a['href'].strip()
                url = qdHome + href
                return url

            def get_item(tag):
                # Thread title
                try:
                    item = tag.find('h1', class_='ts').find('span', id='thread_subject').text
                    print(item)
                    return item
                except AttributeError:
                    print('Failed to read the thread title')
                    return None

            def get_date(tag):
                element = tag.find('div', class_='pti').find('div', class_='authi').find('em')
                span = element.find('span')
                if span is None:
                    # Plain text with a '发表于 ' ("posted on") prefix
                    date = element.text.replace('发表于 ', '')
                else:
                    # Otherwise the full timestamp is in the span's title attribute
                    date = span['title']
                print(date)
                return date

            def get_user_name_id(tag, href):
                # A missing element raises AttributeError (not TypeError) on the chained find()
                try:
                    element_1 = tag.find('td', class_='pls', rowspan='2').find('div', class_='pi')
                    element_2 = element_1.find('div')
                except AttributeError:
                    print(href + ': unexpected markup in the poster block')
                    return None, None
                if element_2 is None:
                    # Anonymous poster: no profile link, so no id
                    host_name = element_1.text
                    print('anonymous')
                    host_id = None
                else:
                    host_name = element_2.text.replace('\n', '')
                    profile_href = element_2.a['href']
                    host_id = profile_href.split('=')[-1]
                return host_name, host_id

            def get_item_intro(tag, href):
                try:
                    block = tag.find('div', class_='pcb').td.find('div', align='left')
                except AttributeError:
                    print(href + ': unexpected markup in the post body')
                    block = None
                if block is not None:
                    # Text-only post (no images): drop spaces and line breaks
                    intro = block.text.replace(' ', '').replace('\n', '')
                else:
                    intro = tag.text.strip()
                return intro

            # Note for the three helpers below: str.replace returns a new string,
            # so its result has to be assigned back to text.

            def get_weixin(text):
                text = text.replace(' ', '')
                # The label group is optional, so this returns the first run of
                # 6 to 16 letters, digits, '-' or '_' even without a WeChat label.
                weixin = re.search(r'(微信[:: ]?|weixin[:: ]?|VX[:: ]?)?([a-zA-Z0-9\-_]{6,16})', text, re.I)
                return weixin.group(2) if weixin is not None else None

            def get_QQ(text):
                text = text.replace(' ', '')
                qq = re.search(r'(QQ[:: ]?)([0-9]{6,10})', text, re.I)
                return qq.group(2) if qq is not None else None

            def get_cellphone(text):
                text = text.replace(' ', '')
                # Labels: 电话 (phone), 联系 (contact), 手机 (mobile)
                phone = re.search(r'(电话[:: ]?|联系.*[:: ]?|手机.[:: ]?|cellphone[:: ]?|mobilephone[:: ]?)?(1[0-9]{10})',
                                  text, re.I)
                return phone.group(2) if phone is not None else None

            def get_item_attribute(title):
                # Tag a listing from keywords in its title
                if not title:
                    return None
                parts = []
                if '女' in title:
                    parts.append('仅限女生居住')  # "female tenants only"
                if '研究生' in title:
                    parts.append('研究生可咨询')  # "open to graduate students"
                return ''.join(parts) or None

            def get_ortherpost(tags, host_name):
                """Group the reply texts by author name; None if there are no replies."""
                if len(tags) <= 1:
                    print('{}: no replies'.format(host_name))
                    return None
                # Normalise names so the poster's own follow-ups match host_name
                host_key = (host_name or '').replace('\n', '').strip()
                data = {host_key: []}
                for post in tags[1:]:
                    Ans_name = post.find('td', class_='pls', rowspan='2').find('div', class_='pi').text
                    Ans_name = Ans_name.replace('\n', '').strip()
                    try:
                        quetion = post.find('div', class_='t_fsz').find('td', class_='t_f').get_text()
                    except AttributeError:
                        print('Failed to read a reply; falling back to the raw text')
                        quetion = post.find('div', class_='t_fsz').text
                    # One list per author. The original stored every other
                    # reply under the name of the last replier only.
                    data.setdefault(Ans_name, []).append(quetion)
                return data

            def Parser__02(response):
                bs = BeautifulSoup(response, 'html.parser')
                # All post (floor) elements in the thread
                postlist = bs.find_all('div', id=re.compile(r'^post_[0-9]+'))
                # Thread title
                item = get_item(bs)
                # Thread link
                href = get_url(bs)
                # Date of the first post
                date = get_date(postlist[0])
                # Poster name and id
                host_name, host_id = get_user_name_id(postlist[0], href)
                # Listing description
                item_intro = get_item_intro(postlist[0], href)
                # Contact details (keys: WeChat, QQ, phone)
                Wechat = get_weixin(item_intro)
                qq = get_QQ(item_intro)
                phone = get_cellphone(item_intro)
                contact = {'微信': Wechat, 'QQ': qq, '电话': phone}
                print(contact)
                # Listing attributes
                attribute = get_item_attribute(item)
                print(attribute)
                # Replies (all other floors)
                dic_post = get_ortherpost(postlist, host_name)
                Out_flow = [item, host_id, host_name, href, date, item_intro, contact, attribute, dic_post]
                return Out_flow

            while not work.empty():
                URL = work.get_nowait()
                try:
                    HTML = get_HTML(URL)
                    results = Parser__02(HTML)
                except Exception as e:
                    # Skip a thread that fails to download or parse
                    # instead of killing this greenlet
                    print(URL, e)
                    continue
                writer.writerow(results)

        writer = self.outFlow()
        self.Multi_program(crwaler_02)

    def Multi_program(self, crawler):
        # Spawn 10 greenlets that run the same crawler function, then wait for all of them
        task_list = [gevent.spawn(crawler) for _ in range(10)]
        gevent.joinall(task_list)

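    def outFlow(self):
        """
        Open the output CSV, write the header row and return the writer.
        :return: csv.writer bound to self.out_path
        """
        # Keep the handle on the instance so it can be closed after the crawl
        self.out_file = open(self.out_path, 'w', encoding='utf-8', newline='')
        writer = csv.writer(self.out_file)
        # Columns: title, user id, user name, link, date, description, contact, attributes, replies
        writer.writerow(['项目名', '用户ID', "用户昵称", '项目链接', '发布日期', '项目简介', '联系方式', '项目属性', '项目回复'])
        return writer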

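    def run(self):
        """Crawler entry point."""
        try:
            # Run the full two-stage crawl
            self.layer_02()
        finally:
            # Close the CSV file if outFlow() stored its handle on the instance
            out_file = getattr(self, 'out_file', None)
            if out_file is not None:
                out_file.close()


if __name__ == '__main__':
    czw = CZW_Spider()
    czw.run()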
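
In `get_weixin` and `get_cellphone` above, the label prefix is optional, so `get_weixin` can return any run of 6 to 16 letters or digits, including a phone number or an English word. A stricter variant might require the label for WeChat and QQ and reject phone-like digit runs embedded in longer numbers. The sketch below is one possible version under those assumptions, not the author's method: the `extract_contacts` name, the exact patterns and the sample text are all made up for illustration, and real posts (for example "WeChat same as phone") will need further rules.

```python
import re

# Assumed patterns: a label is required for WeChat and QQ.
# Both the ASCII colon and the full-width colon are accepted after a label.
WECHAT_RE = re.compile(
    r'(?:微信|weixin|wechat|vx)[::\s]*([a-zA-Z][a-zA-Z0-9_-]{5,19})', re.I)
QQ_RE = re.compile(r'qq[::\s]*([1-9][0-9]{4,10})', re.I)
# 11 digits starting with 1, not embedded in a longer digit run
PHONE_RE = re.compile(r'(?<![0-9])(1[3-9][0-9]{9})(?![0-9])')


def extract_contacts(text):
    """Return the first WeChat id, QQ number and phone number found in text."""
    def first(pattern):
        match = pattern.search(text)
        return match.group(1) if match else None

    return {
        'wechat': first(WECHAT_RE),
        'qq': first(QQ_RE),
        'phone': first(PHONE_RE),
    }


# Made-up sample post, not taken from the forum
sample = '两室一厅出租,电话:13812345678,微信:rent_2020,QQ 123456789'
print(extract_contacts(sample))
```

Compiling the patterns once at module level also avoids rebuilding them for every thread.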

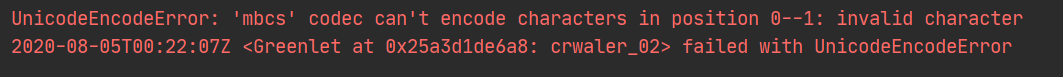
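
One thing to watch in the CSV output: the contact and reply columns hold Python dicts, and `csv.writer` stores them as their `str()` form, which is awkward to parse back. A possible alternative is to serialise nested values as JSON before writing. The sketch below assumes a simplified record layout; `HEADER`, `to_csv_row` and the sample record are hypothetical, and it writes to an in-memory buffer rather than to the crawler's output file.

```python
import csv
import io
import json

# Hypothetical, shortened column set for illustration
HEADER = ['item', 'host_id', 'host_name', 'href', 'contact', 'replies']


def to_csv_row(record):
    """Flatten one record; dicts and lists become JSON strings."""
    row = []
    for key in HEADER:
        value = record.get(key)
        if isinstance(value, (dict, list)):
            # ensure_ascii=False keeps Chinese text readable in the file
            value = json.dumps(value, ensure_ascii=False)
        row.append(value)
    return row


record = {
    'item': 'Room near campus',
    'host_id': '42',
    'host_name': 'alice',
    'href': 'http://www.ihain.cn/example',
    'contact': {'微信': None, 'QQ': '123456', '电话': None},
    'replies': {'alice': ['still available'], 'bob': ['how much?']},
}

buf = io.StringIO(newline='')
writer = csv.writer(buf)
writer.writerow(HEADER)
writer.writerow(to_csv_row(record))

# Read the row back and restore the nested values
buf.seek(0)
rows = list(csv.reader(buf))
restored_contact = json.loads(rows[1][4])
restored_replies = json.loads(rows[1][5])
print(restored_contact, restored_replies)
```

When writing to a real file, opening it in a `with` block ensures the buffered rows are flushed and the handle is closed even if a worker fails.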

Issues
