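As Heaven moves with vigor, so the noble person strives ceaselessly to improve (let's encourage each other and keep learning).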

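First, create a Python 3.x virtual environment on Ubuntu 18.04.

If you don't know how, see: http://t.csdnimg.cn/KOK2a

Then start IPython:

ipython

If the command is not found, install it inside the virtual environment:

pip install ipython

Then enter the following Python code: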

import urllib

dir(urllib)

urllib.parse

urllib.request

import urllib.request

from urllib.request import urlopen

r=urlopen('http://httpbin.org/get')

type(r)

dir(r)

text=r.read()

text.decode('utf-8')

print(text.decode('utf-8'))

import json

json.loads(text)

r.status

r.reason

dir(r)

r.headers

dir(r.headers)

r.headers.get_all('Content-Type')

r.headers.keys()

r.headers._headers

dict(r.headers._headers)

The code above produces the following output:

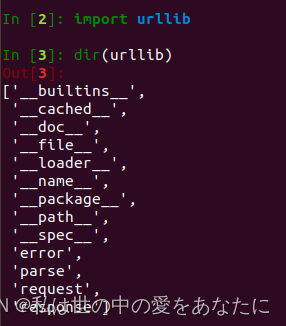

In [2]: import urllib

In [3]: dir(urllib)

Out[3]:

['__builtins__',

'__cached__',

'__doc__',

'__file__',

'__loader__',

'__name__',

'__package__',

'__path__',

'__spec__',

'error',

'parse',

'request',

'response']

In [4]: urllib.parse

Out[4]: <module 'urllib.parse' from '/usr/lib/python3.6/urllib/parse.py'>

In [5]: urllib.request

Out[5]: <module 'urllib.request' from '/usr/lib/python3.6/urllib/request.py'>

In [6]: import urllib.request

In [7]: from urllib.request import urlopen

In [8]: r = urlopen('http://httpbin.org/get')

In [9]: type(r)

Out[9]: http.client.HTTPResponse

In [10]: dir(r)

Out[10]:

['__abstractmethods__',

'__class__',

'__del__',

'__delattr__',

'__dict__',

'__dir__',

'__doc__',

'__enter__',

'__eq__',

'__exit__',

'__format__',

'__ge__',

'__getattribute__',

'__gt__',

'__hash__',

'__init__',

'__init_subclass__',

'__iter__',

'__le__',

'__lt__',

'__module__',

'__ne__',

'__new__',

'__next__',

'__reduce__',

'__reduce_ex__',

'__repr__',

'__setattr__',

'__sizeof__',

'__str__',

'__subclasshook__',

'_abc_cache',

'_abc_negative_cache',

'_abc_negative_cache_version',

'_abc_registry',

'_checkClosed',

'_checkReadable',

'_checkSeekable',

'_checkWritable',

'_check_close',

'_close_conn',

'_get_chunk_left',

'_method',

'_peek_chunked',

'_read1_chunked',

'_read_and_discard_trailer',

'_read_next_chunk_size',

'_read_status',

'_readall_chunked',

'_readinto_chunked',

'_safe_read',

'_safe_readinto',

'begin',

'chunk_left',

'chunked',

'close',

'closed',

'code',

'debuglevel',

'detach',

'fileno',

'flush',

'fp',

'getcode',

'getheader',

'getheaders',

'geturl',

'headers',

'info',

'isatty',

'isclosed',

'length',

'msg',

'peek',

'read',

'read1',

'readable',

'readinto',

'readinto1',

'readline',

'readlines',

'reason',

'seek',

'seekable',

'status',

'tell',

'truncate',

'url',

'version',

'will_close',

'writable',

'write',

'writelines']

In [11]: r.read()

Out[11]: b'{\n "args": {}, \n "headers": {\n "Accept-Encoding": "identity", \n "Host": "httpbin.org", \n "User-Agent": "Python-urllib/3.6", \n "X-Amzn-Trace-Id": "Root=1-6548d2b0-53e1a25a54268a4a7f242d61"\n }, \n "origin": "112.82.210.252", \n "url": "http://httpbin.org/get"\n}\n'

In [12]: text =r.read()

In [13]: text

Out[13]: b''

In [14]: text.decode('utf-8')

Out[14]: ''

In [15]: r.read()

Out[15]: b''

In [16]: r = urlopen('http://httpbin.org/get')

In [17]: text=r.read()

In [18]: text

Out[18]: b'{\n "args": {}, \n "headers": {\n "Accept-Encoding": "identity", \n "Host": "httpbin.org", \n "User-Agent": "Python-urllib/3.6", \n "X-Amzn-Trace-Id": "Root=1-6548d350-33e155812a20f36611451eaa"\n }, \n "origin": "112.82.210.252", \n "url": "http://httpbin.org/get"\n}\n'

In [19]: text.decode('utf-8')

Out[19]: '{\n "args": {}, \n "headers": {\n "Accept-Encoding": "identity", \n "Host": "httpbin.org", \n "User-Agent": "Python-urllib/3.6", \n "X-Amzn-Trace-Id": "Root=1-6548d350-33e155812a20f36611451eaa"\n }, \n "origin": "112.82.210.252", \n "url": "http://httpbin.org/get"\n}\n'

In [20]: print(text.decode('utf-8'))

{

"args": {},

"headers": {

"Accept-Encoding": "identity",

"Host": "httpbin.org",

"User-Agent": "Python-urllib/3.6",

"X-Amzn-Trace-Id": "Root=1-6548d350-33e155812a20f36611451eaa"

},

"origin": "112.82.210.252",

"url": "http://httpbin.org/get"

}

In [21]: import json

In [22]: json.loads(text)

Out[22]:

{'args': {},

'headers': {'Accept-Encoding': 'identity',

'Host': 'httpbin.org',

'User-Agent': 'Python-urllib/3.6',

'X-Amzn-Trace-Id': 'Root=1-6548d350-33e155812a20f36611451eaa'},

'origin': '112.82.210.252',

'url': 'http://httpbin.org/get'}

In [23]: r.status

Out[23]: 200

In [24]: r.reason

Out[24]: 'OK'

In [25]: dir(r)

Out[25]:

['__abstractmethods__',

'__class__',

'__del__',

'__delattr__',

'__dict__',

'__dir__',

'__doc__',

'__enter__',

'__eq__',

'__exit__',

'__format__',

'__ge__',

'__getattribute__',

'__gt__',

'__hash__',

'__init__',

'__init_subclass__',

'__iter__',

'__le__',

'__lt__',

'__module__',

'__ne__',

'__new__',

'__next__',

'__reduce__',

'__reduce_ex__',

'__repr__',

'__setattr__',

'__sizeof__',

'__str__',

'__subclasshook__',

'_abc_cache',

'_abc_negative_cache',

'_abc_negative_cache_version',

'_abc_registry',

'_checkClosed',

'_checkReadable',

'_checkSeekable',

'_checkWritable',

'_check_close',

'_close_conn',

'_get_chunk_left',

'_method',

'_peek_chunked',

'_read1_chunked',

'_read_and_discard_trailer',

'_read_next_chunk_size',

'_read_status',

'_readall_chunked',

'_readinto_chunked',

'_safe_read',

'_safe_readinto',

'begin',

'chunk_left',

'chunked',

'close',

'closed',

'code',

'debuglevel',

'detach',

'fileno',

'flush',

'fp',

'getcode',

'getheader',

'getheaders',

'geturl',

'headers',

'info',

'isatty',

'isclosed',

'length',

'msg',

'peek',

'read',

'read1',

'readable',

'readinto',

'readinto1',

'readline',

'readlines',

'reason',

'seek',

'seekable',

'status',

'tell',

'truncate',

'url',

'version',

'will_close',

'writable',

'write',

'writelines']

In [26]: r.headers

Out[26]: <http.client.HTTPMessage at 0x7f300d93db70>

In [27]: dir(r.headers)

Out[27]:

['__bytes__',

'__class__',

'__contains__',

'__delattr__',

'__delitem__',

'__dict__',

'__dir__',

'__doc__',

'__eq__',

'__format__',

'__ge__',

'__getattribute__',

'__getitem__',

'__gt__',

'__hash__',

'__init__',

'__init_subclass__',

'__iter__',

'__le__',

'__len__',

'__lt__',

'__module__',

'__ne__',

'__new__',

'__reduce__',

'__reduce_ex__',

'__repr__',

'__setattr__',

'__setitem__',

'__sizeof__',

'__str__',

'__subclasshook__',

'__weakref__',

'_charset',

'_default_type',

'_get_params_preserve',

'_headers',

'_payload',

'_unixfrom',

'add_header',

'as_bytes',

'as_string',

'attach',

'defects',

'del_param',

'epilogue',

'get',

'get_all',

'get_boundary',

'get_charset',

'get_charsets',

'get_content_charset',

'get_content_disposition',

'get_content_maintype',

'get_content_subtype',

'get_content_type',

'get_default_type',

'get_filename',

'get_param',

'get_params',

'get_payload',

'get_unixfrom',

'getallmatchingheaders',

'is_multipart',

'items',

'keys',

'policy',

'preamble',

'raw_items',

'replace_header',

'set_boundary',

'set_charset',

'set_default_type',

'set_param',

'set_payload',

'set_raw',

'set_type',

'set_unixfrom',

'values',

'walk']

In [28]: r.headrs.get_all('Content-Type')

---------------------------------------------------------------------------

AttributeError Traceback (most recent call last)

<ipython-input-28-f4c170801b11> in <module>

----> 1 r.headrs.get_all('Content-Type')

AttributeError: 'HTTPResponse' object has no attribute 'headrs'

In [29]: r.headers.get_all('Content-Type')

Out[29]: ['application/json']

In [30]: r.headers.keys()

Out[30]:

['Date',

'Content-Type',

'Content-Length',

'Connection',

'Server',

'Access-Control-Allow-Origin',

'Access-Control-Allow-Credentials']

In [31]: r.headers._headers

Out[31]:

[('Date', 'Mon, 06 Nov 2023 11:51:44 GMT'),

('Content-Type', 'application/json'),

('Content-Length', '275'),

('Connection', 'close'),

('Server', 'gunicorn/19.9.0'),

('Access-Control-Allow-Origin', '*'),

('Access-Control-Allow-Credentials', 'true')]

In [32]: dict(r.headers._headers)

Out[32]:

{'Date': 'Mon, 06 Nov 2023 11:51:44 GMT',

'Content-Type': 'application/json',

'Content-Length': '275',

'Connection': 'close',

'Server': 'gunicorn/19.9.0',

'Access-Control-Allow-Origin': '*',

'Access-Control-Allow-Credentials': 'true'}

In [33]: exit
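In the session above, the second `r.read()` returns `b''` because the response is a file-like stream whose body can be read only once. The sketch below reproduces that behaviour offline. It uses `io.BytesIO` as a stand-in for the response object, and the sample body is made up for illustration; both are assumptions, not part of the original session.

```python
import io
import json

# Stand-in for an HTTP response: io.BytesIO is file-like, as HTTPResponse is.
# The body below is a shortened, made-up sample.
fake_response = io.BytesIO(b'{"args": {}, "url": "http://httpbin.org/get"}')

first = fake_response.read()    # consumes the whole stream
second = fake_response.read()   # nothing is left to read

text = first.decode('utf-8')    # bytes -> str
obj = json.loads(text)          # str -> dict

print(second)       # b''
print(obj['url'])   # http://httpbin.org/get
```

This is why the session had to call `urlopen` again before `text = r.read()` returned any data: keep the result of the first `read()` in a variable if it is needed more than once.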

The following code is run in PyCharm:

import json

import urllib.parse

import urllib.request

# urlopen accepts a URL string as its argument

r=urllib.request.urlopen('http://httpbin.org/get')

# Read the body of the response

text=r.read()

# HTTP status code and reason phrase

print(r.status,r.reason)

# The body is JSON, so parse it directly with json.loads

obj = json.loads(text)

print(obj)

# r.headers is an HTTPMessage object

print(r.headers)

for k, v in r.headers.items():

    print('%s: %s' % (k, v))
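The IPython session earlier read the private `_headers` list, but `HTTPMessage` also offers public accessors. A minimal offline sketch follows, assuming a hand-built `HTTPMessage` in place of a real response's `r.headers`; the header values are copied from the session output above.

```python
import http.client

# Build a header object by hand so the example needs no network access.
headers = http.client.HTTPMessage()
headers['Content-Type'] = 'application/json'
headers['Content-Length'] = '275'
headers['Connection'] = 'close'

# items() yields (name, value) pairs through the public API.
for name, value in headers.items():
    print('%s: %s' % (name, value))

as_dict = dict(headers.items())

# Header lookup is case-insensitive.
content_type = headers.get('content-type')
print(content_type)                  # application/json
print(headers.get_content_type())    # application/json
```

Note that converting to a `dict` keeps only one value per header name, so `get_all()` is the safer choice for headers that may repeat.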

ua = ('Mozilla/5.0 (Windows NT 10.0; Win64; x64) '

      'AppleWebKit/537.36 (KHTML, like Gecko) '

      'Chrome/119.0.0.0 Safari/537.36 Edg/119.0.0.0')

# Add a custom header

req=urllib.request.Request('http://httpbin.org/user-agent')

req.add_header('User-agent',ua)

# urlopen also accepts a urllib.request.Request object as its argument

r =urllib.request.urlopen(req)

resp=json.load(r)

# Print the user-agent that httpbin echoes back

print("user-agent: ",resp["user-agent"])
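Before sending a request, it can help to inspect what a `Request` object will carry. The sketch below does this without any network traffic; the shortened User-Agent string is a hypothetical value chosen only for the example.

```python
import urllib.request

# Hypothetical, shortened User-Agent string used only for this sketch.
ua = 'Mozilla/5.0 (X11; Linux x86_64) ExampleBrowser/1.0'

# Headers can also be passed to the constructor instead of add_header().
req = urllib.request.Request('http://httpbin.org/user-agent',
                             headers={'User-Agent': ua})

# Request stores header names via str.capitalize(), so the key is 'User-agent'.
stored_headers = dict(req.header_items())
print(stored_headers)

# has_header() and get_header() expect that capitalised form.
print(req.has_header('User-agent'))   # True
print(req.has_header('User-Agent'))   # False
print(req.get_header('User-agent'))
```

The capitalisation only affects lookups on the `Request` object; HTTP header names are case-insensitive on the wire.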

# auth_handler =urllib.request.HTTPBasicAuthHandler()

# auth_handler.add_password(realm='httpbin auth',  # a descriptive label; with the default password manager it must match the realm the server announces

# uri='basic-auth/jpy/123',  # the part of the URL after the domain name

# user='jpy',

# passwd='123')

# opener=urllib.request.build_opener(auth_handler)

# urllib.request.install_opener(opener)

# r=urllib.request.urlopen('http://httpbin.org')

# print(r.read().decode('utf-8'))
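One possible way to make the commented-out authentication code less dependent on the exact realm string is `HTTPPasswordMgrWithDefaultRealm`, which falls back to credentials registered under the realm `None`. This is a sketch under that assumption, not necessarily what the author intended; the full URL passed to `add_password` is also an assumption based on the `basic-auth/jpy/123` path above. The network call is left commented out so the block runs offline.

```python
import urllib.request

# Registering credentials with realm=None makes them the fallback
# for any realm the server announces.
password_mgr = urllib.request.HTTPPasswordMgrWithDefaultRealm()
password_mgr.add_password(None,
                          'http://httpbin.org/basic-auth/jpy/123',
                          'jpy', '123')

auth_handler = urllib.request.HTTPBasicAuthHandler(password_mgr)
opener = urllib.request.build_opener(auth_handler)

# Offline check: the manager resolves credentials for the protected URL,
# whatever realm name is asked for.
credentials = password_mgr.find_user_password(
    'any realm', 'http://httpbin.org/basic-auth/jpy/123')
print(credentials)   # ('jpy', '123')

# To actually send the request (needs network access):
# with opener.open('http://httpbin.org/basic-auth/jpy/123') as f:
#     print(f.read().decode('utf-8'))
```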

# Send GET parameters

params =urllib.parse.urlencode({'spam':1,'eggs':2,'bacon':2})

url='http://httpbin.org/get?%s' %params

with urllib.request.urlopen(url) as f:

    print(json.load(f))
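To see what `urlencode` produces without contacting httpbin, the query string can be built and then parsed back locally. A small sketch using the same parameters as above:

```python
from urllib.parse import parse_qs, urlencode, urlsplit

params = urlencode({'spam': 1, 'eggs': 2, 'bacon': 2})
url = 'http://httpbin.org/get?' + params
print(url)    # http://httpbin.org/get?spam=1&eggs=2&bacon=2

# Round trip: split the URL and parse the query string back into a dict.
# parse_qs returns every value as a list of strings.
query = parse_qs(urlsplit(url).query)
print(query)  # {'spam': ['1'], 'eggs': ['2'], 'bacon': ['2']}
```

The values come back as lists of strings because a query string may repeat a key and carries no type information.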

# Send parameters with the POST method

data = urllib.parse.urlencode({'name':'小明','age':2})

data =data.encode()

with urllib.request.urlopen('http://httpbin.org/post',data) as f:

    print(json.load(f))
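Passing `data` is what turns the request into a POST, and non-ASCII values are percent-encoded as UTF-8 first. The sketch below shows both points offline by building `Request` objects without sending them.

```python
import urllib.parse
import urllib.request

form = {'name': '小明', 'age': 2}

# urlencode percent-encodes non-ASCII text as UTF-8; the result is pure ASCII,
# so it can be encoded to bytes for the request body.
data = urllib.parse.urlencode(form).encode('ascii')
print(data)   # b'name=%E5%B0%8F%E6%98%8E&age=2'

# A Request that carries data defaults to POST; without data it is a GET.
post_req = urllib.request.Request('http://httpbin.org/post', data=data)
get_req = urllib.request.Request('http://httpbin.org/get')
print(post_req.get_method())   # POST
print(get_req.get_method())    # GET
```

The body must be bytes, which is why the original code calls `data.encode()` before handing it to `urlopen`.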

# Request a URL through a remote proxy

proxy_handler=urllib.request.ProxyHandler({

    'http':'http://iguye.com:41801'})  # the argument is a dict

# proxy_auth_handler=urllib.request.ProxyBasicAuthHandler()  # for proxies that require a username and password

opener =urllib.request.build_opener(proxy_handler)

r=opener.open('http://httpbin.org/ip')

print(r.read())
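For a proxy that requires a username and password, the commented-out `ProxyBasicAuthHandler` can be combined with the `ProxyHandler`. The following is a rough sketch only: the proxy address and credentials are hypothetical placeholders, and no request is sent, so it shows just how the opener is assembled.

```python
import urllib.request

# Hypothetical proxy address and credentials, for illustration only.
proxy_url = 'http://proxy.example.com:8080'

proxy_handler = urllib.request.ProxyHandler({'http': proxy_url})

# realm=None registers the credentials as the default for this proxy.
password_mgr = urllib.request.HTTPPasswordMgrWithDefaultRealm()
password_mgr.add_password(None, proxy_url, 'proxy_user', 'proxy_pass')
proxy_auth_handler = urllib.request.ProxyBasicAuthHandler(password_mgr)

opener = urllib.request.build_opener(proxy_handler, proxy_auth_handler)

# Offline check: both handlers are installed in the opener.
handler_names = [type(h).__name__ for h in opener.handlers]
print('ProxyHandler' in handler_names)            # True
print('ProxyBasicAuthHandler' in handler_names)   # True

# To send a request through the proxy (needs a reachable proxy):
# with opener.open('http://httpbin.org/ip', timeout=10) as r:
#     print(r.read())
```

Using `opener.open` keeps the proxy local to this opener; `urllib.request.install_opener(opener)` would instead apply it to every later `urlopen` call.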

# The urllib.parse module

o=urllib.parse.urlparse('http://httpbin.org/get')

Note: the urllib.parse module is likewise explored in the Python virtual environment, using IPython:

In [1]: import urllib.parse

In [2]: o=urllib.parse.urlparse('http://httpbin.org/get')

In [3]: dir(o)

Out[3]:

['__add__',

'__class__',

'__contains__',

'__delattr__',

'__dir__',

'__doc__',

'__eq__',

'__format__',

'__ge__',

'__getattribute__',

'__getitem__',

'__getnewargs__',

'__gt__',

'__hash__',

'__init__',

'__init_subclass__',

'__iter__',

'__le__',

'__len__',

'__lt__',

'__module__',

'__mul__',

'__ne__',

'__new__',

'__reduce__',

'__reduce_ex__',

'__repr__',

'__rmul__',

'__setattr__',

'__sizeof__',

'__slots__',

'__str__',

'__subclasshook__',

'_asdict',

'_encoded_counterpart',

'_fields',

'_hostinfo',

'_make',

'_replace',

'_source',

'_userinfo',

'count',

'encode',

'fragment',

'geturl',

'hostname',

'index',

'netloc',

'params',

'password',

'path',

'port',

'query',

'scheme',

'username']

In [4]: o.port

In [5]: o.scheme

Out[5]: 'http'

In [6]: o.username

In [7]: o.geturl()

Out[7]: 'http://httpbin.org/get'

In [8]: o.netloc

Out[8]: 'httpbin.org'

In [9]: o.fragement

---------------------------------------------------------------------------

AttributeError Traceback (most recent call last)

<ipython-input-9-c901b5d2147e> in <module>

----> 1 o.fragement

AttributeError: 'ParseResult' object has no attribute 'fragement'

In [10]: o.fragment

Out[10]: ''

In [11]: o=urllib.parse.urlparse('http://guye:123@httpbin.org/get?a=1&b=2#test'

...: )

In [12]: o.netloc

Out[12]: 'guye:123@httpbin.org'

In [13]: o.path

Out[13]: '/get'

In [14]: o.query

Out[14]: 'a=1&b=2'

In [15]: o.fragment

Out[15]: 'test'

In [16]: o.geturl()

Out[16]: 'http://guye:123@httpbin.org/get?a=1&b=2#test'

In [17]: dir(o)

Out[17]:

['__add__',

'__class__',

'__contains__',

'__delattr__',

'__dir__',

'__doc__',

'__eq__',

'__format__',

'__ge__',

'__getattribute__',

'__getitem__',

'__getnewargs__',

'__gt__',

'__hash__',

'__init__',

'__init_subclass__',

'__iter__',

'__le__',

'__len__',

'__lt__',

'__module__',

'__mul__',

'__ne__',

'__new__',

'__reduce__',

'__reduce_ex__',

'__repr__',

'__rmul__',

'__setattr__',

'__sizeof__',

'__slots__',

'__str__',

'__subclasshook__',

'_asdict',

'_encoded_counterpart',

'_fields',

'_hostinfo',

'_make',

'_replace',

'_source',

'_userinfo',

'count',

'encode',

'fragment',

'geturl',

'hostname',

'index',

'netloc',

'params',

'password',

'path',

'port',

'query',

'scheme',

'username']

In [18]: o.username

Out[18]: 'guye'

In [19]: o.password

Out[19]: '123'

In [20]: o.params

Out[20]: ''

In [21]: type(o)

Out[21]: urllib.parse.ParseResult

In [22]: dir( urllib.parse.ParseResult)

Out[22]:

['__add__',

'__class__',

'__contains__',

'__delattr__',

'__dir__',

'__doc__',

'__eq__',

'__format__',

'__ge__',

'__getattribute__',

'__getitem__',

'__getnewargs__',

'__gt__',

'__hash__',

'__init__',

'__init_subclass__',

'__iter__',

'__le__',

'__len__',

'__lt__',

'__module__',

'__mul__',

'__ne__',

'__new__',

'__reduce__',

'__reduce_ex__',

'__repr__',

'__rmul__',

'__setattr__',

'__sizeof__',

'__slots__',

'__str__',

'__subclasshook__',

'_asdict',

'_encoded_counterpart',

'_fields',

'_hostinfo',

'_make',

'_replace',

'_source',

'_userinfo',

'count',

'encode',

'fragment',

'geturl',

'hostname',

'index',

'netloc',

'params',

'password',

'path',

'port',

'query',

'scheme',

'username']

In [23]: list(o)

Out[23]: ['http', 'guye:123@httpbin.org', '/get', '', 'a=1&b=2', 'test']

In [24]: for i in o:

...: print(i)

...:

http

guye:123@httpbin.org

/get

a=1&b=2

test
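The attributes explored in the session can be combined with `parse_qs` and `urlunparse` to take a URL apart and rebuild it. A short sketch using the same URL as above:

```python
from urllib.parse import parse_qs, urlparse, urlunparse

o = urlparse('http://guye:123@httpbin.org/get?a=1&b=2#test')

print(o.hostname)          # httpbin.org
print(o.port)              # None (no explicit port in the URL)
query = parse_qs(o.query)
print(query)               # {'a': ['1'], 'b': ['2']}

# ParseResult is a named tuple, so _replace returns a modified copy.
# Here the credentials and the fragment are dropped.
stripped = o._replace(netloc=o.hostname, fragment='')
rebuilt = urlunparse(stripped)
print(rebuilt)             # http://httpbin.org/get?a=1&b=2
```

Because the result is immutable, `_replace` leaves `o` unchanged, and `o.geturl()` still returns the original URL.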

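A rough heuristic when reading dir() output:

__len__ is defined by sized containers such as list (a hint that the object may behave like a list)

__setitem__ is defined by containers that support item assignment, such as dict (a hint that the object may behave like a dict)

Neither method is unique to one type: list and dict each define both, so treat these only as hints. ParseResult above has __len__ but no __setitem__, which fits an immutable, tuple-like object.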
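The `dir()` heuristic can be checked in code with `hasattr`, and the abstract base classes in `collections.abc` give a more reliable answer. A small sketch comparing a `ParseResult`, a list, and a dict; using `collections.abc` here is a suggestion and does not come from the original notes.

```python
from collections.abc import MutableMapping, Sequence
from urllib.parse import urlparse

o = urlparse('http://httpbin.org/get')

# For each object record:
# (has __len__, has __setitem__, is a Sequence, is a MutableMapping)
checks = {}
for name, obj in [('ParseResult', o), ('list', [1, 2]), ('dict', {'a': 1})]:
    checks[name] = (hasattr(obj, '__len__'),
                    hasattr(obj, '__setitem__'),
                    isinstance(obj, Sequence),
                    isinstance(obj, MutableMapping))
    print(name, checks[name])

# ParseResult (True, False, True, False)
# list (True, True, True, False)
# dict (True, True, False, True)
```

The `isinstance` checks separate list-like from dict-like objects, which the presence of `__len__` or `__setitem__` alone cannot do.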
