目录

P19-使用Decision Trees建模with Gini and Entropy

P20-使用Random Forests Classifiers(分类器)&Regressor(回归器)两种方式建模

一、随机森林对于离散数据的处理 Random Forest Classifier

缩减特征变量X,对于成百上千特征变量的大数据集有非常重要的意义

二、随机森林对于连续数据的处理 Random Forest for Regression

Feature Scaling(特征缩放/标准化) Scikit-Learn's StandardScaler

Evaluating the Regression Algorithm

K-Nearest Neighbor classifier(KNN)建模 using GridSearchCV

K-Nearest Neighbor classifier(KNN)建模 Using RandomizedSearchCV

Gaussian Naive Bayes建模 with Gaussian Process Classifier

P22-Bagging&Boosting 使用Xgboost(极限梯度提升树)和Gradient Boosting(梯度提升树)建模

P23-K Nearest NeighbourKNN(K最近邻)建模

(3)SVM 对于 direct marketing campaigns (phone calls)数据集的处理

(1)应用Gaussian Naive Bayes预测沉船存活人数

(2)应用Multinomial Naive Bayes处理垃圾邮件

Sklearn Pipeline 老外真的很懒,发明了pipeline替代transform几行代码

P26-Votingclassifier及11种算法全自动建模预测输出结果之完整源代码

Preprocessing using min max scaler

MinMaxScaler和StandardScaler的区别:

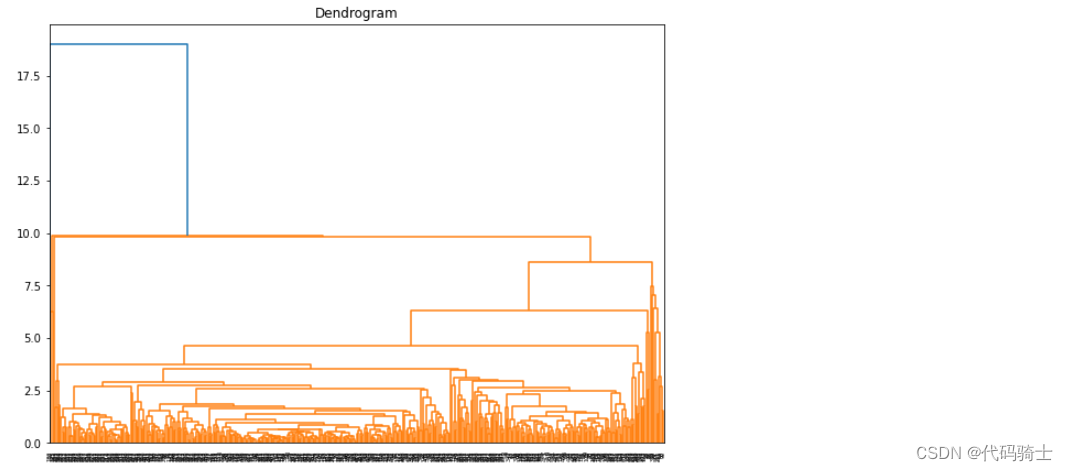

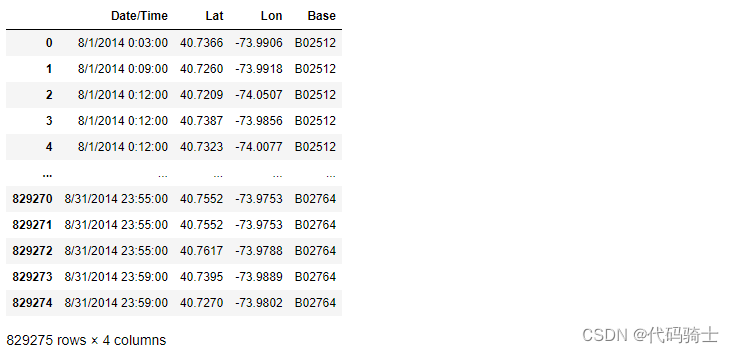

P28-Hierarchical Clustering哪些存量客户是新产品的目标用户

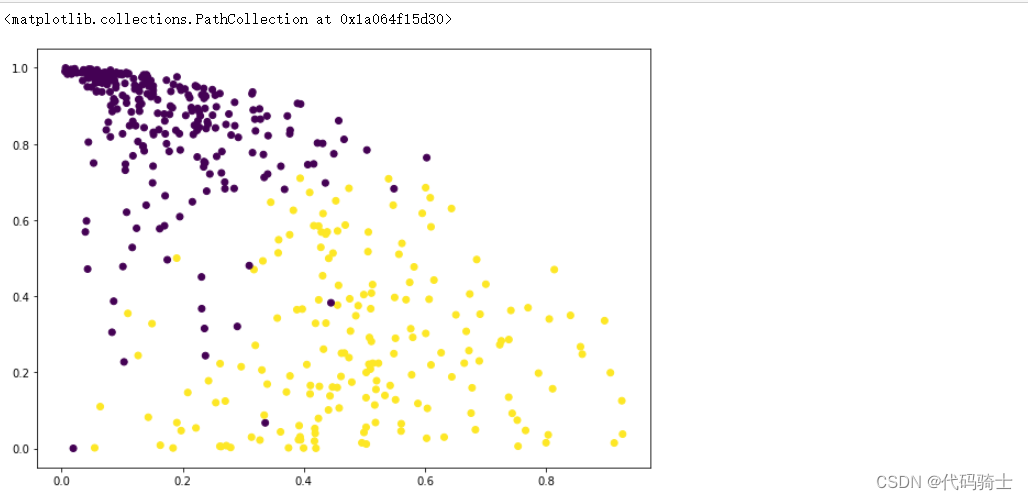

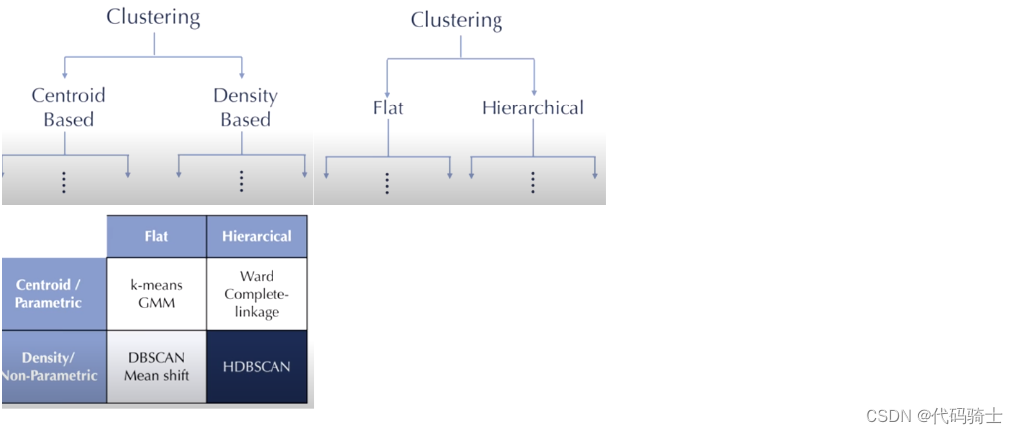

P29-DBSCAN聚类(基于密度的空间聚类应用噪声)与K means(K均值)及Hierarchical Clustering(层次聚类)区别

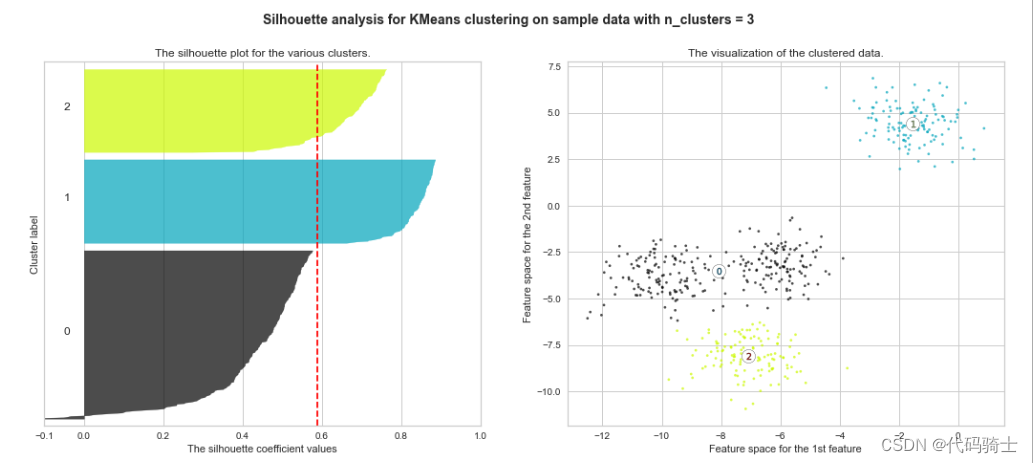

P31-KMeans clustering如何验证K点最佳 silhouette analysis

使用轮廓分析(silhouette analysis)在KMeans聚类中选择簇的数量

P32-无监督学习Principal Component AnalysisPCA精简高维数据(降维)

P19-使用Decision Trees建模with Gini and Entropy

决策树是一种常用的机器学习算法,它的主要原理是通过一系列的问题将数据集进行划分,每个节点代表一个问题,每个分支代表一个答案,最后的每个叶节点代表一个预测结果。

决策树的优点主要包括:

首先,决策树的模型很容易可视化,这使得非专家也能理解其决策过程;

其次,该算法完全不受数据缩放的影响,特征的尺度完全不一样时或者二元特征和连续特征同时存在时,决策树的效果很好;

此外,决策树可以用于小数据集,并且其时间复杂度较小。

最后,值得一提的是,决策树是random forests和gradient boosted tree的基模型,一切高级的树模型都是基于决策树。

然而,决策树也有其缺点:

首先,即使做了预剪枝,决策树也经常会过拟合,泛化性能很差;

其次,在处理大型数据集时,由于必须评估树中每个节点的所有可能拆分,所以计算量会很大。

导入函数库

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

from sklearn import tree

%matplotlib inline加载数据集

import ssl

ssl._create_default_https_context = ssl._create_unverified_context

data=sns.load_dataset('iris')

划分特征集和响应集

X = data.drop(['species'], axis=1)

y = data['species']

划分训练集和测试集

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size = 0.30, random_state = 42)加载决策树算法类

from sklearn.tree import DecisionTreeClassifier使用两种不同的决策树模型规范做训练、预测

#决策树模型的两种规范(criterion)形式:gini、entropy

cls_gini = DecisionTreeClassifier(criterion='gini', max_depth=3, random_state=0)#max_depth=3-最长的决策深度为3

cls_entropy = DecisionTreeClassifier(criterion='entropy', random_state=0)

cls_gini.fit(X_train, y_train)

cls_entropy.fit(X_train, y_train)

y_pred_gini = cls_gini.predict(X_test)

y_pred_entropy = cls_entropy.predict(X_test)

分别求得两种不同决策树的准确值

from sklearn.metrics import accuracy_score

print('Model accuracy score with criterion gini index: {0:0.4f}'.format(accuracy_score(y_test, y_pred_gini)))

# y_pred_gini are the predicted class labels in the test-set.

#from sklearn.metrics import accuracy_score

print('Model accuracy score with criterion entropy index: {0:0.4f}'.format(accuracy_score(y_test, y_pred_entropy)))

# y_pred_entropy are the predicted class labels in the test-set.

#Check for overfitting and underfitting:

print('Training set score: {:.4f}'.format(cls_gini.score(X_train, y_train)))

print('Test set score: {:.4f}'.format(cls_gini.score(X_test, y_test)))

print('Training set score: {:.4f}'.format(cls_entropy.score(X_train, y_train)))

print('Test set score: {:.4f}'.format(cls_entropy.score(X_test, y_test)))

绘制两种不同决策树规范的图像:

#Plot decision tree:

plt.figure(figsize=(12,8))

tree.plot_tree(cls_gini.fit(X_train, y_train))

#Plot decision tree:

plt.figure(figsize=(12,8))

tree.plot_tree(cls_entropy.fit(X_train, y_train))

决策树

Gini规范

Entropy规范

P20-使用Random Forests Classifiers(分类器)&Regressor(回归器)两种方式建模

重点:

-Feature_Importances_

-Feature Scaling Scikit-Learn's StandardScaler

-调参随机森林决策树的大小n_estimators

随机森林既可以处理离散量(可以做分类)也可以处理连续量(可以做回归)。

随机森林是一种集成学习方法,它由多个决策树构成,可以用于处理分类和回归问题。这种算法的名字源于它灵活、易用的特性,它可以整合多个决策树的输出以生成单一结果。

每个决策树都是一个基本问题的拆分数据的方法,例如,“我应该去冲浪吗?”然后会有一系列的问题来确定答案,如“海浪涌动的时间很长吗?”或者“风是吹向海面的吗?”这些问题构成决策树中的决策节点。每个问题都有助于个人做出最终决定,最终决定将由叶节点表示。符合条件的观测值将进入“是”分支,而不符合条件的观测值将进入备用路径。

随机森林的优点包括可以在内部进行评估,无偏估计,抗噪能力,抗缺省值等。然而,它的缺点可能包括模型过于复杂,训练时间过长,预测结果的解释性较差等。

在Python中,随机森林的实现可以使用scikit-learn库来完成。总的来说,随机森林是一个强大且灵活的工具,适用于各种机器学习任务。

随机森林是一种高度灵活且对用户友好的机器学习算法,适用于分类和回归任务。以下是随机森林算法的一些主要优点:

1. 表现性能优秀:随机森林算法具有很高的准确性,并且在许多情况下都优于其他算法。

2. 能够处理高维度数据:随机森林不需要进行特征选择或降维处理,它能直接处理含有大量特征的数据集。

3. 抗噪声能力强:随机森林对于噪声数据有优秀的鲁棒性,即使在数据噪音较大的情况下也不容易过拟合。

4. 训练速度快:随机森林的训练过程迅速,并且可以并行化处理,进一步提高训练效率。

5. 提供特征重要性评估:随机森林可以给出各个特征对于预测结果的重要性排序。

6. 能处理缺失值问题:当数据集中存在大量缺失值时,随机森林仍能维持其预测准确性。

7. 可以平衡误差:对于不平衡数据集,随机森林提供了有效的方法来平衡误差。然而,尽管随机森林有许多优点,但也存在一些缺点:

1. 空间和时间需求大:当决策树的数量增加时,为了进行模型训练,所需的存储空间和计算时间也会相应增加。这可能会影响模型在实时性要求较高的应用场景中的使用效率。

2. 可能产生过拟合:虽然随机森林具有较强的抗噪能力,但在数据噪音过大的情况下仍有可能过拟合。

导入函数库

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

%matplotlib inline一、随机森林对于离散数据的处理 Random Forest Classifier

加载数据集

data=sns.load_dataset('iris') #参照第19课和第11课

data查看数据集

划分特征变量与响应变量

X=data[['sepal_length', 'sepal_width', 'petal_length', 'petal_width']] # Features

y=data['species'] # Labels

划分训练集和测试集

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size = 0.30, random_state = 42)导入随机森林分类器函数进行模型训练和预测

#Import Random Forest Model

from sklearn.ensemble import RandomForestClassifier

#Create a Gaussian Classifier

clf=RandomForestClassifier(n_estimators=100)#n_estimators=100:用100棵树进行建模

#Train the model using the training sets y_pred=clf.predict(X_test)

clf.fit(X_train,y_train)

y_pred=clf.predict(X_test)

导入metrics库查看随机森林分类模型的准确性

#Import scikit-learn metrics module for accuracy calculation

from sklearn import metrics

# Model Accuracy, how often is the classifier correct?

print("Accuracy:",metrics.accuracy_score(y_test, y_pred))#此方法不能求回归器的准确性

特征变量权重分析 Feature_Importances_

特征变量权重分析(Feature Importances)是一种评估模型中各个特征对预测结果的贡献程度的方法。在机器学习和统计分析中,特征选择和特征工程是非常重要的步骤,因为它们可以帮助我们了解哪些特征对模型的预测性能有显著影响。

特征变量权重分析通常用于以下场景:

-

特征选择:通过计算特征的重要性,我们可以识别出对模型预测性能影响最大的特征,从而减少不必要的特征,提高模型的训练速度和预测性能。

-

特征工程:了解特征的重要性有助于我们进行特征工程,例如创建新的特征、转换现有特征等。

-

解释模型:特征重要性可以帮助我们理解模型是如何根据输入特征进行预测的,从而提高模型的可解释性。

常用的特征变量权重分析方法有:

-

基于树的方法(如决策树、随机森林、梯度提升树等):这些方法通过构建决策树来计算每个特征的重要性。常见的度量标准有基尼系数、信息增益、均方误差等。

-

基于线性模型的方法(如线性回归、逻辑回归等):这些方法通过计算特征的系数来评估其重要性。对于线性模型,特征的系数表示当该特征增加一个单位时,目标变量预期的变化量。

-

基于L1正则化的方法(如Lasso回归):L1正则化会使得一些特征的系数变为零,从而实现特征选择。通过观察被选中的特征,我们可以了解它们的重要性。

-

基于包裹式方法(如递归特征消除、基于遗传算法的特征选择等):这些方法通过反复训练模型并调整特征子集来评估特征的重要性。

总之,特征变量权重分析是一种评估模型中各个特征对预测结果的贡献程度的方法,它可以帮助我们进行特征选择、特征工程和解释模型。

feature_list=list(X.columns)#获取特征列标签列表

feature_imp = pd.Series(clf.feature_importances_,index=feature_list).sort_values(ascending=False)#求出每个特征的权重大小

绘制权重占比图像

# Creating a bar plot

sns.barplot(x=feature_imp, y=feature_imp.index)

# Add labels to your graph

plt.xlabel('Feature Importance Score')

plt.ylabel('Features')

plt.title("Visualizing Important Features")

plt.legend(feature_imp.index)

plt.show()

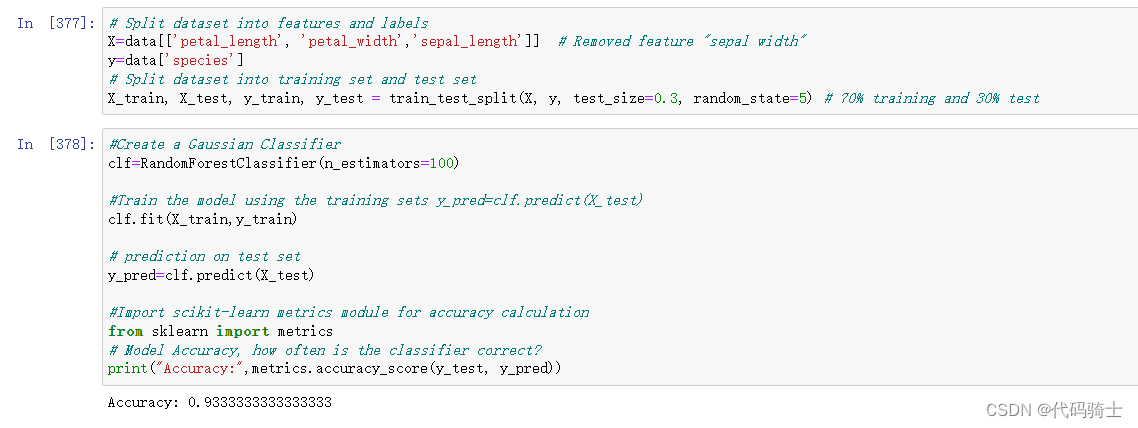

缩减特征变量X,对于成百上千特征变量的大数据集有非常重要的意义

# Split dataset into features and labels

X=data[['petal_length', 'petal_width','sepal_length']] # Removed feature "sepal width"

y=data['species']

# Split dataset into training set and test set

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=5) # 70% training and 30% test#Create a Gaussian Classifier

clf=RandomForestClassifier(n_estimators=100)

#Train the model using the training sets y_pred=clf.predict(X_test)

clf.fit(X_train,y_train)

# prediction on test set

y_pred=clf.predict(X_test)

#Import scikit-learn metrics module for accuracy calculation

from sklearn import metrics

# Model Accuracy, how often is the classifier correct?

print("Accuracy:",metrics.accuracy_score(y_test, y_pred))

二、随机森林对于连续数据的处理 Random Forest for Regression

dataset = pd.read_csv('./Lesson20-petrol_consumption.csv')

#dataset = pd.read_csv('C:/Users/86185/Desktop/TempDesktop/研究内容/Python学习/Py机深文字教程+源码/LessonPythonCode-main/Lesson20-petrol_consumption.csv')

#C:\Users\86185\Desktop\TempDesktop\研究内容\Python学习\Py机深文字教程+源码\LessonPythonCode-main

#C:/Users/86185/Desktop/TempDesktop/研究内容/Python学习/Py机深文字教程+源码/LessonPythonCode-main

划分特征变量和响应量

X = dataset.iloc[:, 0:4]

y = dataset.iloc[:, 4]

划分训练集和测试集

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)Feature Scaling(特征缩放/标准化) Scikit-Learn's StandardScaler

特征标准化:各个特征中数值差异较大,因此直接使用训练的模型效果可能很差,这就需要我们进行特征标准化处理。缩小数据之间的差异,60%的数据值会缩小在-1到1之间。

Petrol_tax——Average_income——Paved_Highways——Population_Driver_licence(%)

9.00—— 3571—— 1976—— 0.525

Feature Scaling(特征缩放)是一种数据预处理技术,用于将不同尺度的特征转换为统一的尺度。这有助于提高机器学习算法的性能和收敛速度。Scikit-Learn是一个流行的Python机器学习库,其中的StandardScaler(标准缩放器)是实现特征缩放的一种方法。

StandardScaler通过计算特征的均值和标准差,将每个特征值减去均值并除以标准差,从而将特征值转换到均值为0、标准差为1的分布上。这种标准化方法使得具有较大尺度的特征对模型的影响减小,从而提高了模型的稳定性和泛化能力。

from sklearn.preprocessing import StandardScaler

sc = StandardScaler()

X_train = sc.fit_transform(X_train)

X_test = sc.transform(X_test)

加载模型进行训练与评估

from sklearn.ensemble import RandomForestRegressor

regressor = RandomForestRegressor(n_estimators=100, random_state=0)

regressor.fit(X_train, y_train)

y_pred = regressor.predict(X_test)Evaluating the Regression Algorithm

from sklearn import metrics

print('Mean Absolute Error:', metrics.mean_absolute_error(y_test, y_pred))

print('Mean Squared Error:', metrics.mean_squared_error(y_test, y_pred))

print('Root Mean Squared Error:', np.sqrt(metrics.mean_squared_error(y_test, y_pred)))

这是机器学习中常用的三种误差度量指标,用于评估模型的预测结果与真实值之间的差异。

-

Mean Absolute Error(平均绝对误差):表示所有样本点上,预测值与真实值的绝对差值的平均数。该指标对异常值比较敏感,因为它会将每个错误都视为相同的重要贡献。

-

Mean Squared Error(均方误差):表示所有样本点上,预测值与真实值的平方差的平均值。相对于平均绝对误差,均方误差对于异常值的影响较小。

-

Root Mean Squared Error(均方根误差):是均方误差的平方根,它衡量了预测值与真实值之间的平均偏差程度。它是衡量回归模型性能的一种常用指标。

在判断回归模型的好坏时,我们通常需要用多个不同的指标来评估整体性能,因为每个指标都有其独特的侧重点和适用情况。以下是根据这三个指标对回归模型进行评估的一般方法:

-

平均绝对误差(Mean Absolute Error, MAE):这个指标衡量了所有样本点上,预测值与真实值的绝对差值的平均数。如果MAE值较小,则说明模型预测的准确性较高;反之,如果MAE值较大,则说明模型预测的准确性较低。不过,需要注意的是,MAE对异常值比较敏感。

-

均方误差(Mean Squared Error, MSE):这个指标衡量了所有样本点上,预测值与真实值的平方差的平均值。如果MSE值较小,则说明模型预测的准确性较高;反之,如果MSE值较大,则说明模型预测的准确性较低。相比于MAE,MSE对于异常值的影响较小。

-

均方根误差(Root Mean Squared Error, RMSE):这是均方误差的平方根,它衡量了预测值与真实值之间的平均偏差程度。如果RMSE值较小,则说明模型预测的准确性较高;反之,如果RMSE值较大,则说明模型预测的准确性较低。

总的来说,我们需要结合使用这些不同的指标,而不是仅仅依赖单一指标来评估模型的性能。同时,我们还需要考虑偏差的风险、错误的大小以及在实践中使用模型时可能产生的影响。此外,通常与基准进行比较也是判断模型好坏的最有效方法。

调参随机森林决策树的大小n_estimators

rmse=nestimators=[]

for n in [20,30,50,80,100,200,300,400,500,600,700,800]:

regressor = RandomForestRegressor(n_estimators=n, random_state=0)

regressor.fit(X_train, y_train)

y_pred = regressor.predict(X_test)

print('-------------------')

print('n_estimators={}'.format(n))

print('Mean Absolute Error:', metrics.mean_absolute_error(y_test, y_pred))

print('Mean Squared Error:', metrics.mean_squared_error(y_test, y_pred))

print('Root Mean Squared Error:', np.sqrt(metrics.mean_squared_error(y_test, y_pred)))

rmse=np.append(rmse,np.sqrt(metrics.mean_squared_error(y_test, y_pred)))

nestimators=np.append(nestimators,n)通过遍历寻找合适的 n_estimators 参数值(默认是100)

直观显示参数与误差之间的关系(寻找一个误差y最小时的参数x)

# Creating a bar plot

sns.set_style('whitegrid')

plt.plot(nestimators,rmse,'ro',linestyle='dashed',linewidth=1,markersize=10)

# Add labels to your graph

plt.xlabel('Feature Importance Score')

plt.ylabel('Features')

plt.title("Visualizing Important Features/RMSE")

plt.show()

P21-使用Adaboost建模及工作环境下的数据分析整理

https://www.youtube.com/watch?v=1_3s_hwiCO4&list=PLGkfh2EpdoKU3OssXkTl3y7c9tw7jjvHm&index=25

Adaboost,英文全称"Adaptive Boosting",意为自适应增强,是一种基于Boosting集成学习的算法。Boosting是一种试图从多个弱分类器中创建一个强分类器的集合技术。Adaboost的核心思想是通过从训练数据构建模型,然后创建第二个模型来尝试修正第一个模型的错误。

Adaboost算法最初由Yoav Freund和Robert Schapire在1995年提出。该算法的主要目标是通过反复学习不断改变训练样本的权重和弱分类器的权值,最终筛选出权值系数最小的弱分类器组合成一个最终强分类器。

Adaboost,全称为Adaptive Boosting,是一种有效且实用的Boosting算法。它的核心思想是以一种高度自适应的方式按顺序训练弱学习器,针对分类问题,根据前一次的分类效果调整数据的权重。

具体来说,Adaboost算法可以简述为以下三个步骤:

1. 初始化训练数据的权值分布。假设有N个训练样本数据,每一个训练样本最开始时,都被赋予相同的权值:w1=1/N。

2. 训练弱分类器hi。在训练过程中,如果某个训练样本点被弱分类器hi准确地分类,那么在构造下一个训练集中,它对应的权值要减小;相反,如果某个训练样本点被错误分类,那么它的权值就应该增大。权值更新过的样本集被用于训练下一个分类器,整个训练过程如此迭代地进行下去。

3. 将各个训练得到的弱分类器组合成一个强分类器。各个弱分类器的训练过程结束后,加大分类误差率小的弱分类器的权重,使其在最终的分类函数中起着较大的决定作用,而降低分类误差率大的弱分类器的权重,使其在最终的分类函数中起着较小的决定作用。此外,Adaboost也是一种加法模型的学习算法,其损失函数为指数函数。通过不断重复调整权重和训练弱学习器,直到误分类数低于预设值或迭代次数达到指定最大值,最终得到一个强学习器。值得一提的是,Adaboost具有很高的精度,并且充分考虑了每个分类器的权重。但是,Adaboost迭代次数也就是弱分类器数目不太好设定,可以使用交叉验证来进行确定。

adaboost与random forest的区别

Adaboost和Random Forest都是集成学习的算法,然而它们在许多方面存在显著的差异。首先,Adaboost是一种基于Boosting的加法模型学习算法,它反复进行学习,不断调整训练样本的权重和弱分类器的权值,最终选取权值系数最小的弱分类器组合成一个强分类器。其常用的弱学习器是决策树和神经网络。

另一方面,随机森林也是一种集成学习的算法,但它属于Bagging流派。随机森林通过建立并结合多个决策树的输出来得到一个最终结果,这旨在提高预测的准确性。不同于Adaboost一次只使用一个弱分类器,随机森林允许同时使用所有的决策树,并且每棵树的建立都考虑了样本随机性和特征随机性,这样可以减少过拟合的风险。

总结来说,虽然Adaboost和Random Forest都是集成学习的算法,但它们在建模方法、基学习器的选择等方面存在明显的区别。

Boosting与Bagging流派区别

Bagging和Boosting都是将已有的分类或回归算法通过一定方式组合起来,形成一个性能更加强大的分类器。虽然二者都是集成学习的方法,但是存在一些显著的差异。

Bagging,也称为套袋法,其算法过程如下:从原始样本集中抽取训练集。每轮从原始样本集中使用Bootstraping的方法抽取n个训练样本(在训练集中,有些样本可能被多次抽取到,而有些样本可能一次都没有被抽中)。共进行k轮抽取,得到k个训练集。(k个训练集之间是相互独立的) 每次使用一个训练集得到一个模型,k个训练集共得到k个模型。对分类问题:将上步得到的k个模型采用投票的方式得到分类结果;对回归问题,计算上述模型的均值作为最后的结果。(所有模型的重要性相同)。

而Boosting的主要思想是将弱分类器组装成一个强分类器。在PAC(概率近似正确)学习框架下,则一定可以将弱分类器组装成一个强分类器。关于Boosting有两个核心问题: 1. 在每一轮如何改变训练数据的权值或概率分布?通过提高那些在前一轮被弱分类器分错样例的权值,减小前一轮分对样例的权值,来使得分类器重点关注那些被误分的数据,直至所有的样本都被正确分类。2. 通过什么方式来组合弱分类器?通过加法模型将弱分类器进行线性组合,比如AdaBoost通过加权多数表决的方式,即增大错误率小的分类器的权值,同时减小错误率较大的分类器的权值。

从以上描述可以看出,Bagging和Boosting两个流派的区别主要体现在以下几个方面:样本选择、样例权重、预测函数、并行计算以及思路。

为了更好的在编译器中显示图片需要安装python第三方库:

pip install ipython

# pip install ipython

from IPython.display import Image

Image(filename='C:\\Users\\86185\\Desktop\\TempDesktop\\研究内容\\Python学习\\Py机深文字教程+源码\\LessonPythonCode-main\\Lesson21-adaboost.jpg')

#C:\Users\86185\Desktop\TempDesktop\研究内容\Python学习\Py机深文字教程+源码\LessonPythonCode-main

#Image(filename='D:/python/Project0-Python-MachineLearning/Lesson21-adaboost.jpg')

Adaboost算法图解

泰坦尼克号分析(工作环境)

import pandas as pd

import numpy as np

from matplotlib import pyplot as plt

import seaborn as sns

%matplotlib inline

import warnings ## importing warnings library.

warnings.filterwarnings('ignore') ## Ignore warning

import os ## imporing os

print(os.listdir("./"))

## Importing Titanic datasets from www.kaggle.com

train = pd.read_csv("./Lesson21-titanic_train.csv")

test = pd.read_csv("./Lesson21-titanic_test.csv")

#./seaborn-data/raw/titanic

#train = pd.read_csv("./titanic/Lesson21-titanic_train.csv")

Python可以引入任何图形及图形可视化工具

#导入一个HTML数据分析网页

%%HTML

<div class='tableauPlaceholder' id='viz1516349898238' style='position: relative'><noscript><a href='#'><img alt='An Overview of Titanic Training Dataset ' src='https://public.tableau.com/static/images/Ti/Titanic_data_mining/Dashboard1/1_rss.png' style='border: none' /></a></noscript><object class='tableauViz' style='display:none;'><param name='host_url' value='https%3A%2F%2Fpublic.tableau.com%2F' /> <param name='embed_code_version' value='3' /> <param name='site_root' value='' /><param name='name' value='Titanic_data_mining/Dashboard1' /><param name='tabs' value='no' /><param name='toolbar' value='yes' /><param name='static_image' value='https://public.tableau.com/static/images/Ti/Titanic_data_mining/Dashboard1/1.png' /> <param name='animate_transition' value='yes' /><param name='display_static_image' value='yes' /><param name='display_spinner' value='yes' /><param name='display_overlay' value='yes' /><param name='display_count' value='yes' /><param name='filter' value='publish=yes' /></object></div> <script type='text/javascript'> var divElement = document.getElementById('viz1516349898238'); var vizElement = divElement.getElementsByTagName('object')[0]; vizElement.style.width='100%';vizElement.style.height=(divElement.offsetWidth*0.75)+'px'; var scriptElement = document.createElement('script'); scriptElement.src = 'https://public.tableau.com/javascripts/api/viz_v1.js'; vizElement.parentNode.insertBefore(scriptElement, vizElement); </script>

passengerid = test.PassengerId

print (train.info())

print ("*"*80)

print (test.info())<class 'pandas.core.frame.DataFrame'> RangeIndex: 891 entries, 0 to 890 Data columns (total 12 columns): # Column Non-Null Count Dtype --- ------ -------------- ----- 0 PassengerId 891 non-null int64 1 Survived 891 non-null int64 2 Pclass 891 non-null int64 3 Name 891 non-null object 4 Sex 891 non-null object 5 Age 714 non-null float64 6 SibSp 891 non-null int64 7 Parch 891 non-null int64 8 Ticket 891 non-null object 9 Fare 891 non-null float64 10 Cabin 204 non-null object 11 Embarked 889 non-null object dtypes: float64(2), int64(5), object(5) memory usage: 83.7+ KB None ******************************************************************************** <class 'pandas.core.frame.DataFrame'> RangeIndex: 418 entries, 0 to 417 Data columns (total 11 columns): # Column Non-Null Count Dtype --- ------ -------------- ----- 0 PassengerId 418 non-null int64 1 Pclass 418 non-null int64 2 Name 418 non-null object 3 Sex 418 non-null object 4 Age 332 non-null float64 5 SibSp 418 non-null int64 6 Parch 418 non-null int64 7 Ticket 418 non-null object 8 Fare 417 non-null float64 9 Cabin 91 non-null object 10 Embarked 418 non-null object dtypes: float64(2), int64(4), object(5) memory usage: 36.0+ KB None

写个小程序统计缺失值

# total percentage of the missing values

# 统计缺失值

def missing_percentage(df):

"""This function takes a DataFrame(df) as input and returns two columns, total missing values and total missing values percentage"""

total = df.isnull().sum().sort_values(ascending = False)

percent = round(df.isnull().sum().sort_values(ascending = False)/len(df)*100,2)

return pd.concat([total, percent], axis=1, keys=['Total','Percent'])missing_percentage(train)

missing_percentage(test)

def percent_value_counts(df, feature):

percent = pd.DataFrame(round(df.loc[:,feature].value_counts(dropna=False, normalize=True)*100,2))

## creating a df with th

total = pd.DataFrame(df.loc[:,feature].value_counts(dropna=False))

## concating percent and total dataframe

total.columns = ["Total"]

percent.columns = ['Percent']

return pd.concat([total, percent], axis = 1)percent_value_counts(train, 'Embarked')

train[train.Embarked.isnull()]

sns.set_style('darkgrid')

fig, ax = plt.subplots(figsize=(16,12),ncols=2)

ax1 = sns.boxplot(x="Embarked", y="Fare", hue="Pclass", data=train, ax = ax[0]);

ax2 = sns.boxplot(x="Embarked", y="Fare", hue="Pclass", data=test, ax = ax[1]);

ax1.set_title("Training Set", fontsize = 18)

ax2.set_title('Test Set', fontsize = 18)

## Fixing legends

leg_1 = ax1.get_legend()

leg_1.set_title("PClass")

legs = leg_1.texts

legs[0].set_text('Upper')

legs[1].set_text('Middle')

legs[2].set_text('Lower')

fig.show()

Here, in both training set and test set, the average fare closest to $80 are in the C Embarked values where pclass is 1. So, let's fill in the missing values as "C"

在这里,训练集和测试集中平均票价最接近80美元的乘客登船地点(C Embarked)值都是pclass为1。因此,让我们将缺失值填充为“C”

## Replacing the null values in the Embarked column with the mode.

train.Embarked.fillna("C", inplace=True)print("Train Cabin missing: " + str(train.Cabin.isnull().sum()/len(train.Cabin)))

print("Test Cabin missing: " + str(test.Cabin.isnull().sum()/len(test.Cabin)))Train Cabin missing: 0.7710437710437711 Test Cabin missing: 0.7822966507177034

## Concat train and test into a variable "all_data"

survivers = train.Survived

train.drop(["Survived"],axis=1, inplace=True)

all_data = pd.concat([train,test], ignore_index=False)

## Assign all the null values to N

all_data.Cabin.fillna("N", inplace=True)all_data.Cabin = [i[0] for i in all_data.Cabin]percent_value_counts(all_data, "Cabin")

all_data.groupby("Cabin")['Fare'].mean().sort_values()

def cabin_estimator(i):

"""Grouping cabin feature by the first letter"""

a = 0

if i<16:

a = "G"

elif i>=16 and i<27:

a = "F"

elif i>=27 and i<38:

a = "T"

elif i>=38 and i<47:

a = "A"

elif i>= 47 and i<53:

a = "E"

elif i>= 53 and i<54:

a = "D"

elif i>=54 and i<116:

a = 'C'

else:

a = "B"

return a

with_N = all_data[all_data.Cabin == "N"]

without_N = all_data[all_data.Cabin != "N"]##applying cabin estimator function.

with_N['Cabin'] = with_N.Fare.apply(lambda x: cabin_estimator(x))

## getting back train.

all_data = pd.concat([with_N, without_N], axis=0)

## PassengerId helps us separate train and test.

all_data.sort_values(by = 'PassengerId', inplace=True)

## Separating train and test from all_data.

train = all_data[:891]

test = all_data[891:]

# adding saved target variable with train.

train['Survived'] = survivers

missing_value = test[(test.Pclass == 3) &

(test.Embarked == "S") &

(test.Sex == "male")].Fare.mean()

## replace the test.fare null values with test.fare mean

test.Fare.fillna(missing_value, inplace=True)missing_value12.718872

test[test.Fare.isnull()]Passenger Id Pclass Name Sex Age SibSpParch Ticket Fare Cabin Embarked

print ("Train age missing value: " + str((train.Age.isnull().sum()/len(train))*100)+str("%"))

print ("Test age missing value: " + str((test.Age.isnull().sum()/len(test))*100)+str("%"))Train age missing value: 19.865319865319865% Test age missing value: 20.574162679425836%

import seaborn as sns

pal = {'male':"green", 'female':"Pink"}

sns.set(style="darkgrid")

plt.subplots(figsize = (15,8))

ax = sns.barplot(x = "Sex",

y = "Survived",

data=train,

palette = pal,

linewidth=5,

order = ['female','male'],

capsize = .05,

)

plt.title("Survived/Non-Survived Passenger Gender Distribution", fontsize = 25,loc = 'center', pad = 40)

plt.ylabel("% of passenger survived", fontsize = 15, )

plt.xlabel("Sex",fontsize = 15);

pal = {1:"seagreen", 0:"gray"}

sns.set(style="darkgrid")

plt.subplots(figsize = (15,8))

ax = sns.countplot(x = "Sex",

hue="Survived",

data = train,

linewidth=4,

palette = pal

)

## Fixing title, xlabel and ylabel

plt.title("Passenger Gender Distribution - Survived vs Not-survived", fontsize = 25, pad=40)

plt.xlabel("Sex", fontsize = 15);

plt.ylabel("# of Passenger Survived", fontsize = 15)

## Fixing xticks

#labels = ['Female', 'Male']

#plt.xticks(sorted(train.Sex.unique()), labels)

## Fixing legends

leg = ax.get_legend()

leg.set_title("Survived")

legs = leg.texts

legs[0].set_text("No")

legs[1].set_text("Yes")

plt.show()

temp = train[['Pclass', 'Survived', 'PassengerId']].groupby(['Pclass', 'Survived']).count().reset_index()

temp_df = pd.pivot_table(temp, values = 'PassengerId', index = 'Pclass',columns = 'Survived')

names = ['No', 'Yes']

temp_df.columns = names

r = [0,1,2]

totals = [i+j for i, j in zip(temp_df['No'], temp_df['Yes'])]

No_s = [i / j * 100 for i,j in zip(temp_df['No'], totals)]

Yes_s = [i / j * 100 for i,j in zip(temp_df['Yes'], totals)]

## Plotting

plt.subplots(figsize = (15,10))

barWidth = 0.60

names = ('Upper', 'Middle', 'Lower')

# Create green Bars

plt.bar(r, No_s, color='Red', edgecolor='white', width=barWidth)

# Create orange Bars

plt.bar(r, Yes_s, bottom=No_s, color='Green', edgecolor='white', width=barWidth)

# Custom x axis

plt.xticks(r, names)

plt.xlabel("Pclass")

plt.ylabel('Percentage')

# Show graphic

plt.show()

plt.subplots(figsize = (15,10))

sns.barplot(x = "Pclass",

y = "Survived",

data=train,

linewidth=6,

capsize = .05,

errcolor='blue',

errwidth = 3

)

plt.title("Passenger Class Distribution - Survived vs Non-Survived", fontsize = 25, pad=40)

plt.xlabel("Socio-Economic class", fontsize = 15);

plt.ylabel("% of Passenger Survived", fontsize = 15);

names = ['Upper', 'Middle', 'Lower']

#val = sorted(train.Pclass.unique())

val = [0,1,2] ## this is just a temporary trick to get the label right.

plt.xticks(val, names);

# Kernel Density Plot

fig = plt.figure(figsize=(15,8),)

## I have included to different ways to code a plot below, choose the one that suites you.

ax=sns.kdeplot(train.Pclass[train.Survived == 0] ,

color='blue',

shade=True,

label='not survived')

ax=sns.kdeplot(train.loc[(train['Survived'] == 1),'Pclass'] ,

color='g',

shade=True,

label='survived',

)

plt.title('Passenger Class Distribution - Survived vs Non-Survived', fontsize = 25, pad = 40)

plt.ylabel("Frequency of Passenger Survived", fontsize = 15, labelpad = 20)

plt.xlabel("Passenger Class", fontsize = 15,labelpad =20)

## Converting xticks into words for better understanding

labels = ['Upper', 'Middle', 'Lower']

plt.xticks(sorted(train.Pclass.unique()), labels);

# Kernel Density Plot

fig = plt.figure(figsize=(15,8),)

ax=sns.kdeplot(train.loc[(train['Survived'] == 0),'Fare'] , color='blue',shade=True,label='not survived')

ax=sns.kdeplot(train.loc[(train['Survived'] == 1),'Fare'] , color='g',shade=True, label='survived')

plt.title('Fare Distribution Survived vs Non Survived', fontsize = 25, pad = 40)

plt.ylabel("Frequency of Passenger Survived", fontsize = 15, labelpad = 20)

plt.xlabel("Fare", fontsize = 15, labelpad = 20);

train[train.Fare > 280]

# Kernel Density Plot

fig = plt.figure(figsize=(15,8),)

ax=sns.kdeplot(train.loc[(train['Survived'] == 0),'Age'] , color='blue',shade=True,label='not survived')

ax=sns.kdeplot(train.loc[(train['Survived'] == 1),'Age'] , color='g',shade=True, label='survived')

plt.title('Age Distribution - Surviver V.S. Non Survivors', fontsize = 25, pad = 40)

plt.xlabel("Age", fontsize = 15, labelpad = 20)

plt.ylabel('Frequency', fontsize = 15, labelpad= 20);

pal = {1:"seagreen", 0:"gray"}

g = sns.FacetGrid(train,size=5, col="Sex", row="Survived", margin_titles=True, hue = "Survived",

palette=pal)

g = g.map(plt.hist, "Age", edgecolor = 'white');

g.fig.suptitle("Survived by Sex and Age", size = 25)

plt.subplots_adjust(top=0.90)

g = sns.FacetGrid(train,size=5, col="Sex", row="Embarked", margin_titles=True, hue = "Survived",

palette = pal

)

g = g.map(plt.hist, "Age", edgecolor = 'white').add_legend();

g.fig.suptitle("Survived by Sex and Age", size = 25)

plt.subplots_adjust(top=0.90)

g = sns.FacetGrid(train, size=5,hue="Survived", col ="Sex", margin_titles=True,

palette=pal,)

g.map(plt.scatter, "Fare", "Age",edgecolor="w").add_legend()

g.fig.suptitle("Survived by Sex, Fare and Age", size = 25)

plt.subplots_adjust(top=0.85)

## factor plot

sns.factorplot(x = "Parch", y = "Survived", data = train,kind = "point",size = 8)

plt.title("Factorplot of Parents/Children survived", fontsize = 25)

plt.subplots_adjust(top=1)

sns.factorplot(x = "SibSp", y = "Survived", data = train,kind = "point",size = 8)

plt.title('Factorplot of Sibilings/Spouses survived', fontsize = 25)

plt.subplots_adjust(top=0.85)

# Placing 0 for female and

# 1 for male in the "Sex" column.

train['Sex'] = train.Sex.apply(lambda x: 0 if x == "female" else 1)

test['Sex'] = test.Sex.apply(lambda x: 0 if x == "female" else 1)train.describe()

# Overview(Survived vs non survied)

survived_summary = train.groupby("Survived")

survived_summary.mean().reset_index()

survived_summary = train.groupby("Sex")

survived_summary.mean().reset_index()

survived_summary = train.groupby("Pclass")

survived_summary.mean().reset_index()

pd.DataFrame(abs(train.corr()['Survived']).sort_values(ascending = False))

## get the most important variables.

corr = train.corr()**2

corr.Survived.sort_values(ascending=False)

## heatmeap to see the correlation between features.

# Generate a mask for the upper triangle (taken from seaborn example gallery)

import numpy as np

mask = np.zeros_like(train.corr(), dtype=np.bool)

mask[np.triu_indices_from(mask)] = True

sns.set_style('whitegrid')

plt.subplots(figsize = (15,12))

sns.heatmap(train.corr(),

annot=True,

mask = mask,

cmap = 'RdBu', ## in order to reverse the bar replace "RdBu" with "RdBu_r"

linewidths=.9,

linecolor='white',

fmt='.2g',

center = 0,

square=True)

plt.title("Correlations Among Features", y = 1.03,fontsize = 20, pad = 40);

male_mean = train[train['Sex'] == 1].Survived.mean()

female_mean = train[train['Sex'] == 0].Survived.mean()

print ("Male survival mean: " + str(male_mean))

print ("female survival mean: " + str(female_mean))

print ("The mean difference between male and female survival rate: " + str(female_mean - male_mean))Male survival mean: 0.18890814558058924 female survival mean: 0.7420382165605095 The mean difference between male and female survival rate: 0.5531300709799203

# separating male and female dataframe.

import random

male = train[train['Sex'] == 1]

female = train[train['Sex'] == 0]

## empty list for storing mean sample

m_mean_samples = []

f_mean_samples = []

for i in range(50):

m_mean_samples.append(np.mean(random.sample(list(male['Survived']),50,)))

f_mean_samples.append(np.mean(random.sample(list(female['Survived']),50,)))

# Print them out

print (f"Male mean sample mean: {round(np.mean(m_mean_samples),2)}")

print (f"Female mean sample mean: {round(np.mean(f_mean_samples),2)}")

print (f"Difference between male and female mean sample mean: {round(np.mean(f_mean_samples) - np.mean(m_mean_samples),2)}")Male mean sample mean: 0.18 Female mean sample mean: 0.74 Difference between male and female mean sample mean: 0.56

train.Name0 Braund, Mr. Owen Harris

1 Cumings, Mrs. John Bradley (Florence Briggs Th...

2 Heikkinen, Miss. Laina

3 Futrelle, Mrs. Jacques Heath (Lily May Peel)

4 Allen, Mr. William Henry

...

886 Montvila, Rev. Juozas

887 Graham, Miss. Margaret Edith

888 Johnston, Miss. Catherine Helen "Carrie"

889 Behr, Mr. Karl Howell

890 Dooley, Mr. Patrick

Name: Name, Length: 891, dtype: object

# Creating a new colomn with a

train['name_length'] = [len(i) for i in train.Name]

test['name_length'] = [len(i) for i in test.Name]

def name_length_group(size):

a = ''

if (size <=20):

a = 'short'

elif (size <=35):

a = 'medium'

elif (size <=45):

a = 'good'

else:

a = 'long'

return a

train['nLength_group'] = train['name_length'].map(name_length_group)

test['nLength_group'] = test['name_length'].map(name_length_group)

## Here "map" is python's built-in function.

## "map" function basically takes a function and

## returns an iterable list/tuple or in this case series.

## However,"map" can also be used like map(function) e.g. map(name_length_group)

## or map(function, iterable{list, tuple}) e.g. map(name_length_group, train[feature]]).

## However, here we don't need to use parameter("size") for name_length_group because when we

## used the map function like ".map" with a series before dot, we are basically hinting that series

## and the iterable. This is similar to .append approach in python. list.append(a) meaning applying append on list.

## cuts the column by given bins based on the range of name_length

#group_names = ['short', 'medium', 'good', 'long']

#train['name_len_group'] = pd.cut(train['name_length'], bins = 4, labels=group_names)## get the title from the name

train["title"] = [i.split('.')[0] for i in train.Name]

train["title"] = [i.split(',')[1] for i in train.title]

## Whenever we split like that, there is a good change that

#we will end up with white space around our string values. Let's check that.print(train.title.unique())[' Mr' ' Mrs' ' Miss' ' Master' ' Don' ' Rev' ' Dr' ' Mme' ' Ms' ' Major' ' Lady' ' Sir' ' Mlle' ' Col' ' Capt' ' the Countess' ' Jonkheer']

## Let's fix that

train.title = train.title.apply(lambda x: x.strip())## We can also combile all three lines above for test set here

test['title'] = [i.split('.')[0].split(',')[1].strip() for i in test.Name]

## However it is important to be able to write readable code, and the line above is not so readable. ## Let's replace some of the rare values with the keyword 'rare' and other word choice of our own.

## train Data

train["title"] = [i.replace('Ms', 'Miss') for i in train.title]

train["title"] = [i.replace('Mlle', 'Miss') for i in train.title]

train["title"] = [i.replace('Mme', 'Mrs') for i in train.title]

train["title"] = [i.replace('Dr', 'rare') for i in train.title]

train["title"] = [i.replace('Col', 'rare') for i in train.title]

train["title"] = [i.replace('Major', 'rare') for i in train.title]

train["title"] = [i.replace('Don', 'rare') for i in train.title]

train["title"] = [i.replace('Jonkheer', 'rare') for i in train.title]

train["title"] = [i.replace('Sir', 'rare') for i in train.title]

train["title"] = [i.replace('Lady', 'rare') for i in train.title]

train["title"] = [i.replace('Capt', 'rare') for i in train.title]

train["title"] = [i.replace('the Countess', 'rare') for i in train.title]

train["title"] = [i.replace('Rev', 'rare') for i in train.title]

## Now in programming there is a term called DRY(Don't repeat yourself), whenever we are repeating

## same code over and over again, there should be a light-bulb turning on in our head and make us think

## to code in a way that is not repeating or dull. Let's write a function to do exactly what we

## did in the code above, only not repeating and more interesting.## we are writing a function that can help us modify title column

def fuse_title(feature):

"""

This function helps modifying the title column

"""

result = ''

if feature in ['the Countess','Capt','Lady','Sir','Jonkheer','Don','Major','Col', 'Rev', 'Dona', 'Dr']:

result = 'rare'

elif feature in ['Ms', 'Mlle']:

result = 'Miss'

elif feature == 'Mme':

result = 'Mrs'

else:

result = feature

return result

test.title = test.title.map(fuse_title)

train.title = train.title.map(fuse_title)print(train.title.unique())

print(test.title.unique())['Mr' 'Mrs' 'Miss' 'Master' 'rare'] ['Mr' 'Mrs' 'Miss' 'Master' 'rare']

## Family_size seems like a good feature to create

train['family_size'] = train.SibSp + train.Parch+1

test['family_size'] = test.SibSp + test.Parch+1## bin the family size.

def family_group(size):

"""

This funciton groups(loner, small, large) family based on family size

"""

a = ''

if (size <= 1):

a = 'loner'

elif (size <= 4):

a = 'small'

else:

a = 'large'

return a## apply the family_group function in family_size

train['family_group'] = train['family_size'].map(family_group)

test['family_group'] = test['family_size'].map(family_group)train['is_alone'] = [1 if i<2 else 0 for i in train.family_size]

test['is_alone'] = [1 if i<2 else 0 for i in test.family_size]#train.Ticket.value_counts().sample(10)

train.Ticket.value_counts()CA. 2343 7

347082 7

1601 7

3101295 6

347088 6

..

12460 1

STON/O2. 3101282 1

349242 1

A/5 21172 1

A/5. 851 1

Name: Ticket, Length: 681, dtype: int64

train.drop(['Ticket'], axis=1, inplace=True)

test.drop(['Ticket'], axis=1, inplace=True)## Calculating fare based on family size.

train['calculated_fare'] = train.Fare/train.family_size

test['calculated_fare'] = test.Fare/test.family_sizedef fare_group(fare):

"""

This function creates a fare group based on the fare provided

"""

a= ''

if fare <= 4:

a = 'Very_low'

elif fare <= 10:

a = 'low'

elif fare <= 20:

a = 'mid'

elif fare <= 45:

a = 'high'

else:

a = "very_high"

return a

train['fare_group'] = train['calculated_fare'].map(fare_group)

test['fare_group'] = test['calculated_fare'].map(fare_group)

#train['fare_group'] = pd.cut(train['calculated_fare'], bins = 4, labels=groups)train['fare_group']0 Very_low

1 high

2 low

3 high

4 low

...

886 mid

887 high

888 low

889 high

890 low

Name: fare_group, Length: 891, dtype: object

train.drop(['PassengerId'], axis=1, inplace=True)

test.drop(['PassengerId'], axis=1, inplace=True)train = pd.get_dummies(train, columns=['title',"Pclass", 'Cabin','Embarked','nLength_group', 'family_group', 'fare_group'], drop_first=False)

test = pd.get_dummies(test, columns=['title',"Pclass",'Cabin','Embarked','nLength_group', 'family_group', 'fare_group'], drop_first=False)

train.drop(['family_size','Name', 'Fare','name_length'], axis=1, inplace=True)

test.drop(['Name','family_size',"Fare",'name_length'], axis=1, inplace=True)

## rearranging the columns so that I can easily use the dataframe to predict the missing age values.

train = pd.concat([train[["Survived", "Age", "Sex","SibSp","Parch"]], train.loc[:,"is_alone":]], axis=1)

test = pd.concat([test[["Age", "Sex"]], test.loc[:,"SibSp":]], axis=1)

RandomForestRegressor预测年龄

## Importing RandomForestRegressor

from sklearn.ensemble import RandomForestRegressor

## writing a function that takes a dataframe with missing values and outputs it by filling the missing values.

def completing_age(df):

## gettting all the features except survived

age_df = df.loc[:,"Age":]

temp_train = age_df.loc[age_df.Age.notnull()] ## df with age values

temp_test = age_df.loc[age_df.Age.isnull()] ## df without age values

y = temp_train.Age.values ## setting target variables(age) in y

x = temp_train.loc[:, "Sex":].values

rfr = RandomForestRegressor(n_estimators=1500, n_jobs=-1)

rfr.fit(x, y)

predicted_age = rfr.predict(temp_test.loc[:, "Sex":])

df.loc[df.Age.isnull(), "Age"] = predicted_age

return df

## Implementing the completing_age function in both train and test dataset.

completing_age(train)

completing_age(test);## Let's look at the his

plt.subplots(figsize = (22,10),)

sns.distplot(train.Age, bins = 100, kde = True, rug = False, norm_hist=False);

## create bins for age

def age_group_fun(age):

"""

This function creates a bin for age

"""

a = ''

if age <= 1:

a = 'infant'

elif age <= 4:

a = 'toddler'

elif age <= 13:

a = 'child'

elif age <= 18:

a = 'teenager'

elif age <= 35:

a = 'Young_Adult'

elif age <= 45:

a = 'adult'

elif age <= 55:

a = 'middle_aged'

elif age <= 65:

a = 'senior_citizen'

else:

a = 'old'

return a

## Applying "age_group_fun" function to the "Age" column.

train['age_group'] = train['Age'].map(age_group_fun)

test['age_group'] = test['Age'].map(age_group_fun)

## Creating dummies for "age_group" feature.

train = pd.get_dummies(train,columns=['age_group'], drop_first=True)

test = pd.get_dummies(test,columns=['age_group'], drop_first=True);# separating our independent and dependent variable

X = train.drop(['Survived'], axis = 1)

y = train["Survived"]from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y,test_size = .33, random_state=0)

## getting the headers

headers = X_train.columns

X_train.head()

# Feature Scaling

## We will be using standardscaler to transform

from sklearn.preprocessing import StandardScaler

std_scale = StandardScaler()

## transforming "train_x"

X_train = std_scale.fit_transform(X_train)

## transforming "test_x"

X_test = std_scale.transform(X_test)

## transforming "The testset"

#test = st_scale.transform(test)pd.DataFrame(X_train, columns=headers).head()

LogisticRegression建模

A regression model that uses L1 regularization technique is called Lasso Regression and model which uses L2 is called Ridge Regression. The key difference between these two is the penalty term. Ridge regression adds “squared magnitude” of coefficient as penalty term to the loss function

# import LogisticRegression model in python.

from sklearn.linear_model import LogisticRegression

from sklearn.metrics import mean_absolute_error, accuracy_score

## call on the model object

logreg = LogisticRegression(solver='liblinear',

penalty= 'l1',random_state = 42

)

## fit the model with "train_x" and "train_y"

logreg.fit(X_train,y_train)

y_pred = logreg.predict(X_test)

from sklearn.metrics import classification_report, confusion_matrix

# printing confision matrix

pd.DataFrame(confusion_matrix(y_test,y_pred),\

columns=["Predicted Not-Survived", "Predicted Survived"],\

index=["Not-Survived","Survived"] )

from sklearn.metrics import confusion_matrix

from sklearn.metrics import ConfusionMatrixDisplay

y_pred = logreg.predict(X_test)

cm = confusion_matrix(y_test, y_pred)

cm_display = ConfusionMatrixDisplay(cm,display_labels='').plot()

from sklearn.metrics import accuracy_score

accuracy_score(y_test, y_pred)0.8305084745762712

from sklearn.metrics import recall_score

recall_score(y_test, y_pred)0.7927927927927928

from sklearn.metrics import precision_score

precision_score(y_test, y_pred)0.7652173913043478

from sklearn.metrics import classification_report, balanced_accuracy_score

print(classification_report(y_test, y_pred))precision recall f1-score support

0 0.87 0.85 0.86 184

1 0.77 0.79 0.78 111

accuracy 0.83 295

macro avg 0.82 0.82 0.82 295

weighted avg 0.83 0.83 0.83 295

from sklearn.metrics import roc_curve, auc

#plt.style.use('seaborn-pastel')

y_score = logreg.decision_function(X_test)

FPR, TPR, _ = roc_curve(y_test, y_score)

ROC_AUC = auc(FPR, TPR)

print (ROC_AUC)

plt.figure(figsize =[11,9])

plt.plot(FPR, TPR, label= 'ROC curve(area = %0.2f)'%ROC_AUC, linewidth= 4)

plt.plot([0,1],[0,1], 'k--', linewidth = 4)

plt.xlim([0.0,1.0])

plt.ylim([0.0,1.05])

plt.xlabel('False Positive Rate', fontsize = 18)

plt.ylabel('True Positive Rate', fontsize = 18)

plt.title('ROC for Titanic survivors', fontsize= 18)

plt.show()

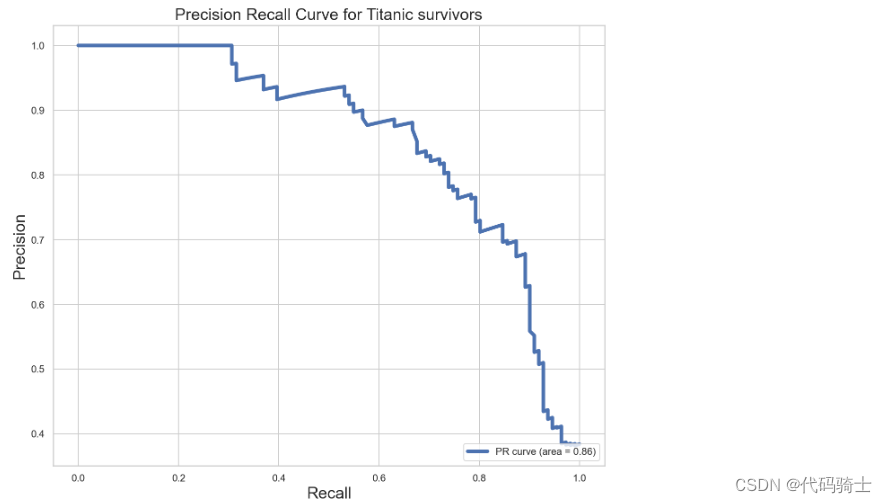

from sklearn.metrics import precision_recall_curve

y_score = logreg.decision_function(X_test)

precision, recall, _ = precision_recall_curve(y_test, y_score)

PR_AUC = auc(recall, precision)

plt.figure(figsize=[11,9])

plt.plot(recall, precision, label='PR curve (area = %0.2f)' % PR_AUC, linewidth=4)

plt.xlabel('Recall', fontsize=18)

plt.ylabel('Precision', fontsize=18)

plt.title('Precision Recall Curve for Titanic survivors', fontsize=18)

plt.legend(loc="lower right")

plt.show()

引入GridSearchCV

GridSearch stands for the fact that we are searching for optimal parameter/parameters over a "grid." These optimal parameters are also known as Hyperparameters. The Hyperparameters are model parameters that are set before fitting the model and determine the behavior of the model.

from sklearn.model_selection import GridSearchCV

from sklearn.model_selection import StratifiedShuffleSplit

# C_vals is the alpla value of lasso and ridge regression(as

# alpha increases the model complexity decreases,)

## remember effective alpha scores are 0<alpha<infinity

C_vals = [0.2,0.3,0.4,0.5,0.6,0.7,0.8,0.9,1]

## Choose a cross validation strategy.

cv = StratifiedShuffleSplit(n_splits = 10, test_size = .25)

## setting param for param_grid in GridSearchCV.

param = {'C': C_vals}

logreg = LogisticRegression()

## Calling on GridSearchCV object.

grid = GridSearchCV(

estimator=LogisticRegression(),

param_grid = param,

scoring = 'accuracy',

n_jobs =-1,

cv = cv

)

## Fitting the model

grid.fit(X, y)GridSearchCV(cv=StratifiedShuffleSplit(n_splits=10, random_state=None, test_size=0.25,

train_size=None),

error_score=nan,

estimator=LogisticRegression(C=1.0, class_weight=None, dual=False,

fit_intercept=True,

intercept_scaling=1, l1_ratio=None,

max_iter=100, multi_class='auto',

n_jobs=None, penalty='l2',

random_state=None, solver='lbfgs',

tol=0.0001, verbose=0,

warm_start=False),

iid='deprecated', n_jobs=-1,

param_grid={'C': [0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 1]},

pre_dispatch='2*n_jobs', refit=True, return_train_score=False,

scoring='accuracy', verbose=0)

## Getting the best of everything.

print (grid.best_score_)

print (grid.best_params_)

print(grid.best_estimator_)0.8251121076233184

{'C': 0.9}

LogisticRegression(C=0.9, class_weight=None, dual=False, fit_intercept=True,

intercept_scaling=1, l1_ratio=None, max_iter=100,

multi_class='auto', n_jobs=None, penalty='l2',

random_state=None, solver='lbfgs', tol=0.0001, verbose=0,

warm_start=False)

### Using the best parameters from the grid-search.

logreg_grid = grid.best_estimator_

logreg_grid.score(X,y)引入RandomizedSearchCV

Randomized search is a close cousin of grid search. It doesn't always provide the best result but its fast.

from sklearn.model_selection import RandomizedSearchCV

rand1 = RandomizedSearchCV(

estimator=LogisticRegression(),

param_distributions = param,

scoring = 'accuracy',

n_jobs =-1,

cv = cv

)

## Fitting the model

rand1.fit(X, y)RandomizedSearchCV(cv=StratifiedShuffleSplit(n_splits=10, random_state=None, test_size=0.25,

train_size=None),

error_score=nan,

estimator=LogisticRegression(C=1.0, class_weight=None,

dual=False, fit_intercept=True,

intercept_scaling=1,

l1_ratio=None, max_iter=100,

multi_class='auto', n_jobs=None,

penalty='l2', random_state=None,

solver='lbfgs', tol=0.0001,

verbose=0, warm_start=False),

iid='deprecated', n_iter=10, n_jobs=-1,

param_distributions={'C': [0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8,

0.9, 1]},

pre_dispatch='2*n_jobs', random_state=None, refit=True,

return_train_score=False, scoring='accuracy', verbose=0)

## Getting the best of everything.

print (rand1.best_score_)

print (rand1.best_params_)

print(rand1.best_estimator_)0.8210762331838565

{'C': 0.4}

LogisticRegression(C=0.4, class_weight=None, dual=False, fit_intercept=True,

intercept_scaling=1, l1_ratio=None, max_iter=100,

multi_class='auto', n_jobs=None, penalty='l2',

random_state=None, solver='lbfgs', tol=0.0001, verbose=0,

warm_start=False)

logreg_rand = rand1.best_estimator_

logreg_rand.score(X,y)0.8361391694725028

Decision Tree建模

from sklearn.tree import DecisionTreeClassifier

max_depth = range(1,30)

max_feature = [21,22,23,24,25,26,28,29,30,'auto']

criterion=["entropy", "gini"]

param = {'max_depth':max_depth,

'max_features':max_feature,

'criterion': criterion}

grid = GridSearchCV(DecisionTreeClassifier(),

param_grid = param,

verbose=False,

cv=StratifiedShuffleSplit(n_splits=20, random_state=15),

n_jobs = -1)

grid.fit(X, y) GridSearchCV(cv=StratifiedShuffleSplit(n_splits=20, random_state=15, test_size=None,

train_size=None),

error_score=nan,

estimator=DecisionTreeClassifier(ccp_alpha=0.0, class_weight=None,

criterion='gini', max_depth=None,

max_features=None,

max_leaf_nodes=None,

min_impurity_decrease=0.0,

min_impurity_split=None,

min_samples_leaf=1,

min_samples_split=2,

min_weight_fraction_leaf=0.0,

presort='deprecated',

random_state=None,

splitter='best'),

iid='deprecated', n_jobs=-1,

param_grid={'criterion': ['entropy', 'gini'],

'max_depth': range(1, 30),

'max_features': [21, 22, 23, 24, 25, 26, 28, 29, 30,

'auto']},

pre_dispatch='2*n_jobs', refit=True, return_train_score=False,

scoring=None, verbose=False)

print( grid.best_params_)

print (grid.best_score_)

print (grid.best_estimator_){'criterion': 'gini', 'max_depth': 8, 'max_features': 26}

0.8211111111111112

DecisionTreeClassifier(ccp_alpha=0.0, class_weight=None, criterion='gini',

max_depth=8, max_features=26, max_leaf_nodes=None,

min_impurity_decrease=0.0, min_impurity_split=None,

min_samples_leaf=1, min_samples_split=2,

min_weight_fraction_leaf=0.0, presort='deprecated',

random_state=None, splitter='best')

dectree_grid = grid.best_estimator_

## using the best found hyper paremeters to get the score.

dectree_grid.score(X,y)0.8574635241301908

RandomForest建模

from sklearn.model_selection import GridSearchCV, StratifiedKFold, StratifiedShuffleSplit

from sklearn.ensemble import RandomForestClassifier

n_estimators = [140,145,150,155,160];

max_depth = range(1,10);

criterions = ['gini', 'entropy'];

cv = StratifiedShuffleSplit(n_splits=10, test_size=.30, random_state=15)

parameters = {'n_estimators':n_estimators,

'max_depth':max_depth,

'criterion': criterions

}

grid = GridSearchCV(estimator=RandomForestClassifier(max_features='auto'),

param_grid=parameters,

cv=cv,

n_jobs = -1)

grid.fit(X,y) GridSearchCV(cv=StratifiedShuffleSplit(n_splits=10, random_state=15, test_size=0.3,

train_size=None),

error_score=nan,

estimator=RandomForestClassifier(bootstrap=True, ccp_alpha=0.0,

class_weight=None,

criterion='gini', max_depth=None,

max_features='auto',

max_leaf_nodes=None,

max_samples=None,

min_impurity_decrease=0.0,

min_impurity_split=None,

min_samples_leaf=1,

min_samples_split=2,

min_weight_fraction_leaf=0.0,

n_estimators=100, n_jobs=None,

oob_score=False,

random_state=None, verbose=0,

warm_start=False),

iid='deprecated', n_jobs=-1,

param_grid={'criterion': ['gini', 'entropy'],

'max_depth': range(1, 10),

'n_estimators': [140, 145, 150, 155, 160]},

pre_dispatch='2*n_jobs', refit=True, return_train_score=False,

scoring=None, verbose=0)print (grid.best_score_)

print (grid.best_params_)

print (grid.best_estimator_)0.835820895522388

{'criterion': 'entropy', 'max_depth': 6, 'n_estimators': 150}

RandomForestClassifier(bootstrap=True, ccp_alpha=0.0, class_weight=None,

criterion='entropy', max_depth=6, max_features='auto',

max_leaf_nodes=None, max_samples=None,

min_impurity_decrease=0.0, min_impurity_split=None,

min_samples_leaf=1, min_samples_split=2,

min_weight_fraction_leaf=0.0, n_estimators=150,

n_jobs=None, oob_score=False, random_state=None,

verbose=0, warm_start=False)

rf_grid = grid.best_estimator_

rf_grid.score(X,y)0.8574635241301908

Feature Importance

column_names = X1.columns

feature_importances = pd.DataFrame(rf_grid.feature_importances_,

index = column_names,

columns=['importance'])

feature_importances.sort_values(by='importance', ascending=False).head(15)

AdaBoost建模

from sklearn.ensemble import AdaBoostClassifier

adaBoost = AdaBoostClassifier(base_estimator=None,

learning_rate=1.0,

n_estimators=100)

adaBoost.fit(X_train, y_train)

y_pred = adaBoost.predict(X_test)

from sklearn.metrics import accuracy_score

accuracy_score(y_test, y_pred)0.8033898305084746

from sklearn.model_selection import GridSearchCV

from sklearn.model_selection import StratifiedShuffleSplit

n_estimators = [80,100,140,145,150,160, 170,175,180,185];

cv = StratifiedShuffleSplit(n_splits=10, test_size=.30, random_state=15)

learning_r = [0.1,1,0.01,0.5]

parameters = {'n_estimators':n_estimators,

'learning_rate':learning_r

}

grid = GridSearchCV(AdaBoostClassifier(base_estimator= None, ## If None, then the base estimator is a decision tree.

),

param_grid=parameters,

cv=cv,

n_jobs = -1)

grid.fit(X,y) GridSearchCV(cv=StratifiedShuffleSplit(n_splits=10, random_state=15, test_size=0.3,

train_size=None),

error_score=nan,

estimator=AdaBoostClassifier(algorithm='SAMME.R',

base_estimator=None,

learning_rate=1.0, n_estimators=50,

random_state=None),

iid='deprecated', n_jobs=-1,

param_grid={'learning_rate': [0.1, 1, 0.01, 0.5],

'n_estimators': [80, 100, 140, 145, 150, 160, 170, 175,

180, 185]},

pre_dispatch='2*n_jobs', refit=True, return_train_score=False,

scoring=None, verbose=0)

## Getting the best of everything.

print (grid.best_score_)

print (grid.best_params_)

print(grid.best_estimator_)0.825

{'learning_rate': 0.1, 'n_estimators': 80}

AdaBoostClassifier(algorithm='SAMME.R', base_estimator=None, learning_rate=0.1,

n_estimators=80, random_state=None)

ada_grid = grid.best_estimator_

ada_grid.score(X,y)0.8316498316498316

Gradient Boosting梯度提升建模

# Gradient Boosting Classifier

from sklearn.ensemble import GradientBoostingClassifier

gradient_boost = GradientBoostingClassifier()

gradient_boost.fit(X_train, y_train)

y_pred = gradient_boost.predict(X_test)

gradient_accy = round(accuracy_score(y_pred, y_test), 3)

print(gradient_accy)0.817

grid = GridSearchCV(GradientBoostingClassifier(),

param_grid=parameters,

cv=cv,

n_jobs = -1)

grid.fit(X,y) GridSearchCV(cv=StratifiedShuffleSplit(n_splits=10, random_state=15, test_size=0.3,

train_size=None),

error_score=nan,

estimator=GradientBoostingClassifier(ccp_alpha=0.0,

criterion='friedman_mse',

init=None, learning_rate=0.1,

loss='deviance', max_depth=3,

max_features=None,

max_leaf_nodes=None,

min_impurity_decrease=0.0,

min_impurity_split=None,

min_samples_leaf...

n_iter_no_change=None,

presort='deprecated',

random_state=None,

subsample=1.0, tol=0.0001,

validation_fraction=0.1,

verbose=0, warm_start=False),

iid='deprecated', n_jobs=-1,

param_grid={'learning_rate': [0.1, 1, 0.01, 0.5],

'n_estimators': [80, 100, 140, 145, 150, 160, 170, 175,

180, 185]},

pre_dispatch='2*n_jobs', refit=True, return_train_score=False,

scoring=None, verbose=0)

## Getting the best of everything.

print (grid.best_score_)

print (grid.best_params_)

print(grid.best_estimator_)0.8376865671641791

{'learning_rate': 0.01, 'n_estimators': 140}

GradientBoostingClassifier(ccp_alpha=0.0, criterion='friedman_mse', init=None,

learning_rate=0.01, loss='deviance', max_depth=3,

max_features=None, max_leaf_nodes=None,

min_impurity_decrease=0.0, min_impurity_split=None,

min_samples_leaf=1, min_samples_split=2,

min_weight_fraction_leaf=0.0, n_estimators=140,

n_iter_no_change=None, presort='deprecated',

random_state=None, subsample=1.0, tol=0.0001,

validation_fraction=0.1, verbose=0,

warm_start=False)

gb_grid = grid.best_estimator_

gb_grid.score(X,y)0.8406285072951739

Support Vector Machine建模

from sklearn.svm import SVC

Cs = [0.001, 0.01, 0.1, 1,1.5,2,2.5,3,4,5, 10] ## penalty parameter C for the error term.

gammas = [0.0001,0.001, 0.01, 0.1, 1]

param_grid = {'C': Cs, 'gamma' : gammas}

cv = StratifiedShuffleSplit(n_splits=10, test_size=.30, random_state=15)

grid_search = GridSearchCV(SVC(kernel = 'rbf', probability=True), param_grid, cv=cv) ## 'rbf' stands for gaussian kernel

grid_search.fit(X,y)GridSearchCV(cv=StratifiedShuffleSplit(n_splits=10, random_state=15, test_size=0.3,

train_size=None),

error_score=nan,

estimator=SVC(C=1.0, break_ties=False, cache_size=200,

class_weight=None, coef0=0.0,

decision_function_shape='ovr', degree=3,

gamma='scale', kernel='rbf', max_iter=-1,

probability=True, random_state=None, shrinking=True,

tol=0.001, verbose=False),

iid='deprecated', n_jobs=None,

param_grid={'C': [0.001, 0.01, 0.1, 1, 1.5, 2, 2.5, 3, 4, 5, 10],

'gamma': [0.0001, 0.001, 0.01, 0.1, 1]},

pre_dispatch='2*n_jobs', refit=True, return_train_score=False,

scoring=None, verbose=0)

print(grid_search.best_score_)

print(grid_search.best_params_)

print(grid_search.best_estimator_)0.835820895522388

{'C': 1, 'gamma': 0.01}

SVC(C=1, break_ties=False, cache_size=200, class_weight=None, coef0=0.0,

decision_function_shape='ovr', degree=3, gamma=0.01, kernel='rbf',

max_iter=-1, probability=True, random_state=None, shrinking=True, tol=0.001,

verbose=False)

# using the best found hyper paremeters to get the score.

svm_grid = grid_search.best_estimator_

svm_grid.score(X,y)0.8372615039281706

svm_grid.predict(X_test)array([0, 0, 0, 1, 1, 0, 1, 1, 0, 1, 0, 1, 0, 1, 1, 1, 0, 0, 0, 1, 0, 1,

0, 0, 1, 1, 0, 1, 1, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

1, 0, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 1, 0,

1, 0, 1, 1, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 1, 1, 1,

1, 0, 0, 0, 1, 1, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0, 1, 1, 1, 0, 0, 1,

0, 1, 0, 1, 0, 1, 1, 1, 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0,

0, 1, 0, 0, 0, 1, 0, 0, 0, 1, 0, 1, 1, 1, 0, 1, 1, 0, 0, 1, 1, 0,

1, 0, 1, 0, 1, 1, 0, 0, 1, 1, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 1, 0,

1, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 1, 1, 0, 1, 1, 0, 0, 0, 1,

0, 0, 0, 1, 0, 1, 0, 0, 1, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 1, 0, 1,

0, 1, 1, 0, 0, 0, 0, 1, 0, 0, 0, 1, 1, 1, 0, 0, 1, 1, 1, 0, 0, 1,

0, 0, 1, 1, 1, 0, 0, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 0, 0,

0, 0, 1, 0, 0, 1, 0, 0, 1, 1, 0, 0, 0, 0, 1, 1, 0, 1, 0, 1, 0, 0,

0, 0, 0, 0, 0, 0, 1, 1, 1], dtype=int64)

Xgboost建模

from xgboost import XGBClassifier

XGBC = XGBClassifier()

XGBC.fit(X_train, y_train)

y_pred = XGBC.predict(X_test)

XGBC_accy = round(accuracy_score(y_pred, y_test), 3)

print(XGBC_accy)0.841

grid = GridSearchCV(XGBClassifier(base_estimator= 100,

),

param_grid=parameters,

cv=cv,

n_jobs = -1)

grid.fit(X,y) GridSearchCV(cv=StratifiedShuffleSplit(n_splits=10, random_state=15, test_size=0.3,

train_size=None),

error_score=nan,

estimator=XGBClassifier(base_estimator=100, base_score=0.5,

booster='gbtree', colsample_bylevel=1,

colsample_bynode=1, colsample_bytree=1,

gamma=0, learning_rate=0.1,

max_delta_step=0, max_depth=3,

min_child_weight=1, missing=None,

n_estimators=100...one,

objective='binary:logistic',

random_state=0, reg_alpha=0, reg_lambda=1,

scale_pos_weight=1, seed=None, silent=None,

subsample=1, verbosity=1),

iid='deprecated', n_jobs=-1,

param_grid={'learning_rate': [0.1, 1, 0.01, 0.5],

'n_estimators': [80, 100, 140, 145, 150, 160, 170, 175,

180, 185]},

pre_dispatch='2*n_jobs', refit=True, return_train_score=False,

scoring=None, verbose=0)

## Getting the best of everything.

print (grid.best_score_)

print (grid.best_params_)

print(grid.best_estimator_)0.8402985074626865

{'learning_rate': 0.01, 'n_estimators': 140}

XGBClassifier(base_estimator=100, base_score=0.5, booster='gbtree',

colsample_bylevel=1, colsample_bynode=1, colsample_bytree=1,

gamma=0, learning_rate=0.01, max_delta_step=0, max_depth=3,

min_child_weight=1, missing=None, n_estimators=140, n_jobs=1,

nthread=None, objective='binary:logistic', random_state=0,

reg_alpha=0, reg_lambda=1, scale_pos_weight=1, seed=None,

silent=None, subsample=1, verbosity=1)

xgb_grid = grid.best_estimator_

xgb_grid.score(X,y)0.8428731762065096

Bagging Classifier建模

Why use Bagging?

Bagging works best with strong and complex models(for example, fully developed decision trees). However, don't let that fool you to thinking that similar to a decision tree, bagging also overfits the model. Instead, bagging reduces overfitting since a lot of the sample training data are repeated and used to create base estimators. With a lot of equally likely training data, bagging is not very susceptible to overfitting with noisy data, therefore reduces variance. However, the downside is that this leads to an increase in bias.

from sklearn.ensemble import BaggingClassifier

n_estimators = [10,30,50,70,80,150,160, 170,175,180,185];

cv = StratifiedShuffleSplit(n_splits=10, test_size=.30, random_state=15)

parameters = {'n_estimators':n_estimators,}

grid = GridSearchCV(BaggingClassifier(base_estimator= None, ## If None, then the base estimator is a decision tree.

bootstrap_features=False),

param_grid=parameters,

cv=cv,

n_jobs = -1)

grid.fit(X,y) GridSearchCV(cv=StratifiedShuffleSplit(n_splits=10, random_state=15, test_size=0.3,

train_size=None),

error_score=nan,

estimator=BaggingClassifier(base_estimator=None, bootstrap=True,

bootstrap_features=False,

max_features=1.0, max_samples=1.0,

n_estimators=10, n_jobs=None,

oob_score=False, random_state=None,

verbose=0, warm_start=False),

iid='deprecated', n_jobs=-1,

param_grid={'n_estimators': [10, 30, 50, 70, 80, 150, 160, 170,

175, 180, 185]},

pre_dispatch='2*n_jobs', refit=True, return_train_score=False,

scoring=None, verbose=0)

print (grid.best_score_)

print (grid.best_params_)

print (grid.best_estimator_)0.8171641791044777

{'n_estimators': 185}

BaggingClassifier(base_estimator=None, bootstrap=True, bootstrap_features=False,

max_features=1.0, max_samples=1.0, n_estimators=185,

n_jobs=None, oob_score=False, random_state=None, verbose=0,

warm_start=False)

bagging_grid = grid.best_estimator_

bagging_grid.score(X,y)0.9887766554433222

Extra Trees Classifier建模

from sklearn.ensemble import ExtraTreesClassifier

ExtraTreesClassifier = ExtraTreesClassifier()

ExtraTreesClassifier.fit(X, y)

y_pred = ExtraTreesClassifier.predict(X_test)

extraTree_accy = round(accuracy_score(y_pred, y_test), 3)

print(extraTree_accy)0.892

K-Nearest Neighbor classifier(KNN)建模 using GridSearchCV

## Importing the model.

from sklearn.neighbors import KNeighborsClassifier

from sklearn.model_selection import cross_val_score

## calling on the model oject.

knn = KNeighborsClassifier(metric='minkowski', p=2)

## knn classifier works by doing euclidian distance

## doing 10 fold staratified-shuffle-split cross validation

cv = StratifiedShuffleSplit(n_splits=10, test_size=.25, random_state=2)

accuracies = cross_val_score(knn, X,y, cv = cv, scoring='accuracy')

print ("Cross-Validation accuracy scores:{}".format(accuracies))

print ("Mean Cross-Validation accuracy score: {}".format(round(accuracies.mean(),3)))Cross-Validation accuracy scores:[0.78475336 0.76681614 0.79820628 0.81165919 0.81165919 0.79372197 0.77578475 0.8161435 0.78026906 0.8161435 ] Mean Cross-Validation accuracy score: 0.796

## Search for an optimal value of k for KNN.

k_range = range(1,31)

k_scores = []

for k in k_range:

knn = KNeighborsClassifier(n_neighbors=k)

scores = cross_val_score(knn, X,y, cv = cv, scoring = 'accuracy')

k_scores.append(scores.mean())

print("Accuracy scores are: {}\n".format(k_scores))

print ("Mean accuracy score: {}".format(np.mean(k_scores)))Accuracy scores are: [0.742152466367713, 0.7641255605381165, 0.7878923766816144, 0.7923766816143497, 0.7955156950672645, 0.7986547085201794, 0.794170403587444, 0.7914798206278026, 0.7977578475336322, 0.793273542600897, 0.794170403587444, 0.789237668161435, 0.7946188340807174, 0.7887892376681614, 0.7887892376681613, 0.7865470852017937, 0.7887892376681613, 0.788340807174888, 0.795067264573991, 0.7838565022421525, 0.7865470852017937, 0.7820627802690583, 0.7874439461883408, 0.7798206278026906, 0.784304932735426, 0.7690582959641257, 0.775336322869955, 0.7695067264573991, 0.7748878923766817, 0.768609865470852] Mean accuracy score: 0.7844394618834081

from matplotlib import pyplot as plt

plt.plot(k_range, k_scores)

from sklearn.model_selection import GridSearchCV

## trying out multiple values for k

k_range = range(1,31)

##

weights_options=['uniform','distance']

#

param = {'n_neighbors':k_range, 'weights':weights_options}

## Using startifiedShufflesplit.

cv = StratifiedShuffleSplit(n_splits=10, test_size=.30, random_state=15)

# estimator = knn, param_grid = param, n_jobs = -1 to instruct scikit learn to use all available processors.

grid = GridSearchCV(KNeighborsClassifier(), param,cv=cv,verbose = False, n_jobs=-1)

## Fitting the model.

grid.fit(X,y)GridSearchCV(cv=StratifiedShuffleSplit(n_splits=10, random_state=15, test_size=0.3,

train_size=None),

error_score=nan,

estimator=KNeighborsClassifier(algorithm='auto', leaf_size=30,

metric='minkowski',

metric_params=None, n_jobs=None,

n_neighbors=5, p=2,

weights='uniform'),

iid='deprecated', n_jobs=-1,

param_grid={'n_neighbors': range(1, 31),

'weights': ['uniform', 'distance']},

pre_dispatch='2*n_jobs', refit=True, return_train_score=False,

scoring=None, verbose=False)

print(grid.best_score_)

print(grid.best_params_)

print(grid.best_estimator_)0.8082089552238806

{'n_neighbors': 8, 'weights': 'uniform'}

KNeighborsClassifier(algorithm='auto', leaf_size=30, metric='minkowski',

metric_params=None, n_jobs=None, n_neighbors=8, p=2,

weights='uniform')

### Using the best parameters from the grid-search.

knn_grid= grid.best_estimator_

knn_grid.score(X,y)0.8417508417508418

K-Nearest Neighbor classifier(KNN)建模 Using RandomizedSearchCV

from sklearn.model_selection import RandomizedSearchCV

## trying out multiple values for k

k_range = range(1,31)

##

weights_options=['uniform','distance']

#

param = {'n_neighbors':k_range, 'weights':weights_options}

## Using startifiedShufflesplit.

cv = StratifiedShuffleSplit(n_splits=10, test_size=.30)

# estimator = knn, param_grid = param, n_jobs = -1 to instruct scikit learn to use all available processors.

## for RandomizedSearchCV,

grid = RandomizedSearchCV(KNeighborsClassifier(), param,cv=cv,verbose = False, n_jobs=-1, n_iter=40)

## Fitting the model.

grid.fit(X,y)RandomizedSearchCV(cv=StratifiedShuffleSplit(n_splits=10, random_state=None, test_size=0.3,

train_size=None),

error_score=nan,

estimator=KNeighborsClassifier(algorithm='auto',

leaf_size=30,

metric='minkowski',

metric_params=None,

n_jobs=None, n_neighbors=5,

p=2, weights='uniform'),

iid='deprecated', n_iter=40, n_jobs=-1,

param_distributions={'n_neighbors': range(1, 31),

'weights': ['uniform', 'distance']},

pre_dispatch='2*n_jobs', random_state=None, refit=True,

return_train_score=False, scoring=None, verbose=False)

print (grid.best_score_)

print (grid.best_params_)

print(grid.best_estimator_)0.8085820895522389

{'weights': 'uniform', 'n_neighbors': 6}

KNeighborsClassifier(algorithm='auto', leaf_size=30, metric='minkowski',

metric_params=None, n_jobs=None, n_neighbors=6, p=2,

weights='uniform')

### Using the best parameters from the grid-search.

knn_ran_grid = grid.best_estimator_

knn_ran_grid.score(X,y)0.8552188552188552

Gaussian Naive Bayes建模

# Gaussian Naive Bayes

from sklearn.naive_bayes import GaussianNB

from sklearn.metrics import accuracy_score

gaus = GaussianNB()

gaus.fit(X, y)

y_pred = gaus.predict(X_test)

gaus_accy = round(accuracy_score(y_pred, y_test), 3)

print(gaus_accy)0.81

Gaussian Naive Bayes建模 with Gaussian Process Classifier

from sklearn.gaussian_process import GaussianProcessClassifier

GaussianProcessClassifier = GaussianProcessClassifier()

GaussianProcessClassifier.fit(X, y)

y_pred = GaussianProcessClassifier.predict(X_test)

gau_pro_accy = round(accuracy_score(y_pred, y_test), 3)

print(gau_pro_accy)VotingClassifier建模

from IPython.display import Image

Image(filename='C:\\Users\\86185\\Desktop\\TempDesktop\\研究内容\\Python学习\\Py机深文字教程+源码\\LessonPythonCode-main\\Lesson26-Voting.png')

from IPython.display import Image

Image(filename='C:\\Users\\86185\\Desktop\\TempDesktop\\研究内容\\Python学习\\Py机深文字教程+源码\\LessonPythonCode-main\\Lesson26-Voting1.png')

from sklearn.ensemble import VotingClassifier

voting_classifier = VotingClassifier(estimators=[

('lr_grid', logreg_grid),

('lr_grid1', logreg_rand),

('svc', svm_grid),

('random_forest', rf_grid),

('gradient_boosting',gb_grid),

('decision_tree_grid',dectree_grid),

('knn_classifier', knn_grid),

('knn_classifier1', knn_ran_grid),

('XGB_Classifier', xgb_grid),

('bagging_classifier', bagging_grid),

('adaBoost_classifier',ada_grid),

('ExtraTrees_Classifier', ExtraTreesClassifier),

('gaus_classifier', gaus),

('gaussian_process_classifier', GaussianProcessClassifier)

],voting='hard')

voting_classifier = voting_classifier.fit(X,y)voting_classifierVotingClassifier(estimators=[('lr_grid',

LogisticRegression(C=0.4, class_weight=None,

dual=False, fit_intercept=True,

intercept_scaling=1,

l1_ratio=None, max_iter=100,

multi_class='auto',

n_jobs=None, penalty='l2',

random_state=None,

solver='lbfgs', tol=0.0001,

verbose=0, warm_start=False)),

('lr_grid1',

LogisticRegression(C=0.6, class_weight=None,

dual=False, fit_int...

('gaus_classifier',

GaussianNB(priors=None, var_smoothing=1e-09)),

('gaussian_process_classifier',

GaussianProcessClassifier(copy_X_train=True,

kernel=None,

max_iter_predict=100,

multi_class='one_vs_rest',

n_jobs=None,

n_restarts_optimizer=0,

optimizer='fmin_l_bfgs_b',

random_state=None,

warm_start=False))],

flatten_transform=True, n_jobs=None, voting='hard',

weights=None)

y_pred = voting_classifier.predict(X_test)

voting_accy = round(accuracy_score(y_pred, y_test), 3)

print(voting_accy)0.854

all_models = [logreg_grid,

logreg_rand,

knn_grid,

knn_ran_grid,

gb_grid,

dectree_grid,

rf_grid,

bagging_grid,

ada_grid,

ExtraTreesClassifier,

svm_grid,

gaus,

GaussianProcessClassifier,

xgb_grid,

voting_classifier]

c = {}

for i in all_models:

print("{}\n*******************************************************************************\n".format(i))

a = i.predict(X_test)

b = accuracy_score(a, y_test)

c[i] = bLogisticRegression(C=0.4, class_weight=None, dual=False, fit_intercept=True,

intercept_scaling=1, l1_ratio=None, max_iter=100,

multi_class='auto', n_jobs=None, penalty='l2',

random_state=None, solver='lbfgs', tol=0.0001, verbose=0,

warm_start=False)

*******************************************************************************

LogisticRegression(C=0.6, class_weight=None, dual=False, fit_intercept=True,

intercept_scaling=1, l1_ratio=None, max_iter=100,

multi_class='auto', n_jobs=None, penalty='l2',

random_state=None, solver='lbfgs', tol=0.0001, verbose=0,

warm_start=False)

*******************************************************************************

KNeighborsClassifier(algorithm='auto', leaf_size=30, metric='minkowski',

metric_params=None, n_jobs=None, n_neighbors=8, p=2,

weights='uniform')

*******************************************************************************

KNeighborsClassifier(algorithm='auto', leaf_size=30, metric='minkowski',

metric_params=None, n_jobs=None, n_neighbors=6, p=2,

weights='uniform')

*******************************************************************************

GradientBoostingClassifier(ccp_alpha=0.0, criterion='friedman_mse', init=None,

learning_rate=0.01, loss='deviance', max_depth=3,

max_features=None, max_leaf_nodes=None,

min_impurity_decrease=0.0, min_impurity_split=None,

min_samples_leaf=1, min_samples_split=2,

min_weight_fraction_leaf=0.0, n_estimators=140,

n_iter_no_change=None, presort='deprecated',

random_state=None, subsample=1.0, tol=0.0001,

validation_fraction=0.1, verbose=0,

warm_start=False)

*******************************************************************************

DecisionTreeClassifier(ccp_alpha=0.0, class_weight=None, criterion='gini',

max_depth=6, max_features=30, max_leaf_nodes=None,

min_impurity_decrease=0.0, min_impurity_split=None,

min_samples_leaf=1, min_samples_split=2,

min_weight_fraction_leaf=0.0, presort='deprecated',

random_state=None, splitter='best')

*******************************************************************************

RandomForestClassifier(bootstrap=True, ccp_alpha=0.0, class_weight=None,

criterion='entropy', max_depth=5, max_features='auto',

max_leaf_nodes=None, max_samples=None,

min_impurity_decrease=0.0, min_impurity_split=None,

min_samples_leaf=1, min_samples_split=2,

min_weight_fraction_leaf=0.0, n_estimators=160,

n_jobs=None, oob_score=False, random_state=None,

verbose=0, warm_start=False)

*******************************************************************************

BaggingClassifier(base_estimator=None, bootstrap=True, bootstrap_features=False,

max_features=1.0, max_samples=1.0, n_estimators=70,

n_jobs=None, oob_score=False, random_state=None, verbose=0,

warm_start=False)

*******************************************************************************

AdaBoostClassifier(algorithm='SAMME.R', base_estimator=None, learning_rate=0.1,

n_estimators=180, random_state=None)

*******************************************************************************

ExtraTreesClassifier(bootstrap=False, ccp_alpha=0.0, class_weight=None,

criterion='gini', max_depth=None, max_features='auto',

max_leaf_nodes=None, max_samples=None,

min_impurity_decrease=0.0, min_impurity_split=None,

min_samples_leaf=1, min_samples_split=2,

min_weight_fraction_leaf=0.0, n_estimators=100,

n_jobs=None, oob_score=False, random_state=None, verbose=0,

warm_start=False)

*******************************************************************************

SVC(C=1, break_ties=False, cache_size=200, class_weight=None, coef0=0.0,

decision_function_shape='ovr', degree=3, gamma=0.01, kernel='rbf',

max_iter=-1, probability=True, random_state=None, shrinking=True, tol=0.001,

verbose=False)

*******************************************************************************

GaussianNB(priors=None, var_smoothing=1e-09)

*******************************************************************************

GaussianProcessClassifier(copy_X_train=True, kernel=None, max_iter_predict=100,

multi_class='one_vs_rest', n_jobs=None,

n_restarts_optimizer=0, optimizer='fmin_l_bfgs_b',

random_state=None, warm_start=False)

*******************************************************************************

XGBClassifier(base_score=0.5, booster='gbtree', colsample_bylevel=1,

colsample_bynode=1, colsample_bytree=1, criterion='entropy',

gamma=0, learning_rate=0.1, max_delta_step=0, max_depth=1,

max_features=21, min_child_weight=1, missing=None,

n_estimators=100, n_jobs=1, nthread=None,

objective='binary:logistic', random_state=0, reg_alpha=0,

reg_lambda=1, scale_pos_weight=1, seed=None, silent=None,

subsample=1, verbosity=1)

*******************************************************************************

VotingClassifier(estimators=[('lr_grid',

LogisticRegression(C=0.4, class_weight=None,

dual=False, fit_intercept=True,