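The previous article walked through a binary installation, which was meant to help beginners understand the data flow and how each component works. With the rapid growth of container technology, Kubernetes (K8s for short) has become the de facto standard for container orchestration, but deploying and configuring it can still be a complicated process for newcomers. This article is a simplified guide to deploying Kubernetes with kubeadm, so that readers can bring up a cluster quickly.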

I. Environment Preparation

(1) Host allocation

| IP address | Hostname | Installed services | Memory |
|---|---|---|---|
| 192.168.44.70 | master01 | docker-ce kubeadm kubelet kubectl flannel | 4G |
| 192.168.44.60 | node01 | docker-ce kubeadm kubelet kubectl flannel | 8G |
| 192.168.44.50 | node02 | docker-ce kubeadm kubelet kubectl flannel | 8G |
| 192.168.44.40 | hub.china.com | docker-ce docker-compose harbor-offline-v1.2.2 | 4G |

(2) Environment preparation

Run on all machines

1. Disable the firewall and SELinux

[root@master01 ~]#systemctl stop firewalld

[root@master01 ~]#systemctl disable firewalld

[root@master01 ~]#setenforce 0

[root@master01 ~]#sed -i 's/enforcing/disabled/' /etc/selinux/config

[root@master01 ~]#iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X

2. Disable the swap partition

[root@master01 ~]#swapoff -a

[root@master01 ~]#sed -ri 's/.*swap.*/#&/' /etc/fstab # comment out the swap mount entries

[root@master01 ~]#echo vm.swappiness = 0 >> /etc/sysctl.conf # tell the kernel to avoid swapping
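Before editing the real /etc/fstab, the sed expression can be tried on a scratch copy. The following is a minimal sketch; the sample fstab entries are made up for illustration and do not come from the machines in this article.

```shell
#!/usr/bin/env bash
# Sketch: preview what the swap-commenting sed expression does, using a
# throwaway file instead of the real /etc/fstab.
# The entries below are hypothetical sample data.
set -eu

fstab_sample=$(mktemp)
cat > "$fstab_sample" <<'EOF'
/dev/mapper/centos-root /     xfs  defaults 0 0
UUID=0000-EXAMPLE       /boot xfs  defaults 0 0
/dev/mapper/centos-swap swap  swap defaults 0 0
EOF

# Same substitution as above: prefix every line containing "swap" with '#'.
result=$(sed 's/.*swap.*/#&/' "$fstab_sample")
printf '%s\n' "$result"

rm -f "$fstab_sample"
```

Note that the pattern matches any line containing the string "swap", so it is worth checking the preview before running it with -i on a real system.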

[root@master01 ~]#sysctl -p

3. Load the ip_vs modules

[root@master01 ~]#for i in $(ls /usr/lib/modules/$(uname -r)/kernel/net/netfilter/ipvs|grep -o "^[^.]*");do echo $i; /sbin/modinfo -F filename $i >/dev/null 2>&1 && /sbin/modprobe $i;done

4. Add hosts entries

[root@master01 ~]#cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.44.70 master01

192.168.44.60 node01

192.168.44.50 node02

192.168.44.40 hub.china.com

5. Tune kernel parameters

[root@master01 ~]#cat >/etc/sysctl.d/kubernetes.conf

......

net.bridge.bridge-nf-call-ip6tables=1

net.bridge.bridge-nf-call-iptables=1

net.ipv6.conf.all.disable_ipv6=1

net.ipv4.ip_forward=1

[root@master01 ~]#sysctl --system

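# apply the parameters

6. Time synchronization

[root@master01 ~]#yum install ntpdate -y

[root@master01 ~]#ntpdate time.windows.com # synchronize the clock

II. Install Docker

Run on all machines

On the Harbor server, install a pinned version: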

[root@hub harbor]#yum install -y docker-ce-20.10.18 docker-ce-cli-20.10.18 containerd.io

1. Install the service

[root@master01 ~]#yum install -y yum-utils device-mapper-persistent-data lvm2

# install the prerequisite tools

[root@master01 ~]#yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# add the Aliyun Docker CE repository

[root@master01 ~]#yum install -y docker-ce docker-ce-cli containerd.io

# install Docker

2. Configure the daemon

[root@master01 ~]#vim /etc/docker/daemon.json

[root@master01 ~]#cat /etc/docker/daemon.json

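{

"registry-mirrors": ["https://gix2yhc1.mirror.aliyuncs.com"],

# Registry mirror (accelerator) for image pulls. You can get your own mirror address from the Aliyun console.

"exec-opts": ["native.cgroupdriver=systemd"],

# Sets the cgroup driver for Docker containers to systemd, so that cgroups are managed by systemd.

"log-driver": "json-file",

# Sets the logging driver for container logs.

"log-opts": {

"max-size": "100m"

# Caps each container log file at 100m.

}

}

# Note: the # lines above are annotations for this article only. JSON has no comment syntax, so leave them out of the real file.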
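If the annotated listing is copied as-is, the # lines have to go before Docker can parse the file. A minimal sketch of stripping them, assuming every annotation sits on its own line; the helper name strip_annotations is hypothetical, not part of Docker:

```shell
#!/usr/bin/env bash
# Sketch: drop article-style "#" annotation lines from an annotated
# daemon.json listing so that only plain JSON remains.
# strip_annotations is a hypothetical helper written for this example.
set -eu

strip_annotations() {
  # Remove every line whose first non-blank character is '#'.
  grep -v '^[[:space:]]*#' "$1"
}

annotated=$(mktemp)
cat > "$annotated" <<'EOF'
{
  "registry-mirrors": ["https://gix2yhc1.mirror.aliyuncs.com"],
  # registry mirror
  "exec-opts": ["native.cgroupdriver=systemd"],
  # cgroup driver
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
    # log size cap
  }
}
EOF

clean_json=$(strip_annotations "$annotated")
printf '%s\n' "$clean_json"

rm -f "$annotated"
```

The cleaned text is what would go into /etc/docker/daemon.json before reloading and restarting Docker.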

[root@master01 ~]#systemctl daemon-reload

[root@master01 ~]#systemctl restart docker.service

[root@master01 ~]#systemctl enable docker.service

III. Install the command-line tools

Install kubeadm, kubelet and kubectl on the master node and the worker nodes

(1) Define the Kubernetes repository

[root@master01 ~]#cat > /etc/yum.repos.d/kubernetes.repo << EOF

> [kubernetes]

> name=Kubernetes

> baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

> enabled=1

> gpgcheck=0

> repo_gpgcheck=0

> gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

> EOF

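# create the Kubernetes yum repository

(2) Install the packages

[root@master01 ~]#yum install -y kubelet-1.20.11 kubeadm-1.20.11 kubectl-1.20.11

# install version 1.20.11 of the Kubernetes packages

[root@master01 ~]#systemctl enable kubelet.service

# enable start on boot

# In a kubeadm installation the Kubernetes components all run as Pods, that is, as containers underneath, so kubelet must be enabled at boot to start them

IV. Deploy the K8S cluster

Start on the master01 node

(1) Initialize the master node

1. List the images needed for initialization

[root@master01 ~]#kubeadm config images list

# list the images required for initialization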

W0516 14:46:40.749385 45138 version.go:102] could not fetch a Kubernetes version from the internet: unable to get URL "https://dl.k8s.io/release/stable-1.txt": Get "https://cdn.dl.k8s.io/release/stable-1.txt": context deadline exceeded (Client.Timeout exceeded while awaiting headers)

W0516 14:46:40.749535 45138 version.go:103] falling back to the local client version: v1.20.11

k8s.gcr.io/kube-apiserver:v1.20.11

k8s.gcr.io/kube-controller-manager:v1.20.11

k8s.gcr.io/kube-scheduler:v1.20.11

k8s.gcr.io/kube-proxy:v1.20.11

k8s.gcr.io/pause:3.2

k8s.gcr.io/etcd:3.4.13-0

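k8s.gcr.io/coredns:1.7.0

2. Get the images

There are two ways to get the images

With network access: pull the images with docker pull

Without network access: load image archives with docker load -i; these are usually .tar files saved from images that were pulled elsewhere

# upload the v1.20.11.zip archive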

[root@master01 opt]#unzip v1.20.11.zip

Archive: v1.20.11.zip

creating: v1.20.11/

inflating: v1.20.11/apiserver.tar

inflating: v1.20.11/controller-manager.tar

inflating: v1.20.11/coredns.tar

inflating: v1.20.11/etcd.tar

inflating: v1.20.11/pause.tar

inflating: v1.20.11/proxy.tar

inflating: v1.20.11/scheduler.tar

[root@master01 opt]#ls

cni containerd v1.20.11 v1.20.11.zip

[root@master01 opt]#ls v1.20.11/

apiserver.tar controller-manager.tar coredns.tar etcd.tar pause.tar proxy.tar scheduler.tar

Use a for loop to load all of the images

[root@master01 opt]#for i in $(ls /opt/v1.20.11/*.tar); do docker load -i $i; done

07363fa84210: Loading layer [===========================>] 3.062MB/3.062MB

b2fdcf729db8: Loading layer [===========================>] 1.734MB/1.734MB

3bef9b063c52: Loading layer [===========================>] 118.3MB/118.3MB

Loaded image: k8s.gcr.io/kube-apiserver:v1.20.11

aedf9547be2a: Loading layer [===========================>] 112.8MB/112.8MB

Loaded image: k8s.gcr.io/kube-controller-manager:v1.20.11

225df95e717c: Loading layer [===========================>] 336.4kB/336.4kB

96d17b0b58a7: Loading layer [===========================>] 45.02MB/45.02MB

Loaded image: k8s.gcr.io/coredns:1.7.0

d72a74c56330: Loading layer [===========================>] 3.031MB/3.031MB

d61c79b29299: Loading layer [===========================>] 2.13MB/2.13MB

1a4e46412eb0: Loading layer [===========================>] 225.3MB/225.3MB

bfa5849f3d09: Loading layer [===========================>] 2.19MB/2.19MB

bb63b9467928: Loading layer [===========================>] 21.98MB/21.98MB

Loaded image: k8s.gcr.io/etcd:3.4.13-0

ba0dae6243cc: Loading layer [===========================>] 684.5kB/684.5kB

Loaded image: k8s.gcr.io/pause:3.2

48b90c7688a2: Loading layer [===========================>] 61.99MB/61.99MB

dfec24feb8ab: Loading layer [===========================>] 39.49MB/39.49MB

Loaded image: k8s.gcr.io/kube-proxy:v1.20.11

bc3358461296: Loading layer [===========================>] 43.73MB/43.73MB

Loaded image: k8s.gcr.io/kube-scheduler:v1.20.11
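The loop above parses the output of ls, which breaks on file names with spaces. A glob-based variant is sketched below; DOCKER_CMD is a hypothetical override introduced only so the loop can be dry-run with echo on a machine without Docker.

```shell
#!/usr/bin/env bash
# Sketch: load every image archive in a directory with a shell glob.
# DOCKER_CMD is a hypothetical override used here for a dry run.
set -eu

load_images() {
  local dir=$1 tarball
  for tarball in "$dir"/*.tar; do
    # If nothing matches, the glob stays literal; skip that case.
    [ -e "$tarball" ] || continue
    "${DOCKER_CMD:-docker}" load -i "$tarball"
  done
}

# Dry run against empty placeholder files (not real image archives).
demo_dir=$(mktemp -d)
touch "$demo_dir/apiserver.tar" "$demo_dir/etcd.tar"
dry_run_output=$(DOCKER_CMD=echo load_images "$demo_dir")
printf '%s\n' "$dry_run_output"
rm -rf "$demo_dir"
```

On the real hosts the call would simply be `load_images /opt/v1.20.11`, with DOCKER_CMD left unset.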

[root@master01 opt]#docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

k8s.gcr.io/kube-proxy v1.20.11 f4a6053ca28d 2 years ago 99.7MB

k8s.gcr.io/kube-scheduler v1.20.11 4c5693dacb42 2 years ago 47.3MB

k8s.gcr.io/kube-apiserver v1.20.11 d06f046c0907 2 years ago 122MB

k8s.gcr.io/kube-controller-manager v1.20.11 c857cde24856 2 years ago 116MB

k8s.gcr.io/etcd 3.4.13-0 0369cf4303ff 3 years ago 253MB

k8s.gcr.io/coredns 1.7.0 bfe3a36ebd25 3 years ago 45.2MB

k8s.gcr.io/pause 3.2 80d28bedfe5d 4 years ago 683kB

Copy the files to the node machines and load the image archives there

[root@master01 opt]#scp -r /opt/v1.20.11 node01:/opt

[root@master01 opt]#scp -r /opt/v1.20.11 node02:/opt

On node01

[root@node01 opt]#ls /opt/v1.20.11/

apiserver.tar controller-manager.tar coredns.tar etcd.tar pause.tar proxy.tar scheduler.tar

[root@node01 opt]#for i in $(ls /opt/v1.20.11/*.tar); do docker load -i $i; done

On node02

[root@node02 opt]#ls /opt/v1.20.11/

apiserver.tar controller-manager.tar coredns.tar etcd.tar pause.tar proxy.tar scheduler.tar

[root@node02 opt]#for i in $(ls /opt/v1.20.11/*.tar); do docker load -i $i; done

3. Generate the initialization file

[root@master01 opt]#kubeadm config print init-defaults > /opt/kubeadm-config.yaml

kubeadm

# A tool for initializing and managing Kubernetes clusters.

config print init-defaults

# Prints the default configuration of kubeadm init,

# that is, the settings used when kubeadm init runs without any specific configuration.

> /opt/kubeadm-config.yaml

# Redirects the output of the command into a file named kubeadm-config.yaml.

# The saved yaml file can be edited as needed and then passed to kubeadm init, which initializes the cluster with those changes

4. Edit the initialization file

[root@master01 opt]#vim /opt/kubeadm-config.yaml

......

11 localAPIEndpoint:

12 advertiseAddress: 192.168.83.10 # IP address of the master node; use master01's own address here (192.168.44.70 in the table above)

13 bindPort: 6443

......

34 kubernetesVersion: v1.20.11 # Kubernetes version

35 networking:

36 dnsDomain: cluster.local

37 podSubnet: "10.244.0.0/16" # Pod network; 10.244.0.0/16 matches flannel's default network

38 serviceSubnet: 10.96.0.0/16 # Service network

39 scheduler: {}
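The same edits can be scripted instead of made by hand in vim. The sketch below works on an abbreviated stand-in for the generated file (the sample content and the 1.2.3.4 and v1.20.0 placeholder values are assumptions, not captured output) and also appends the kube-proxy section described next.

```shell
#!/usr/bin/env bash
# Sketch: apply the kubeadm-config.yaml edits with awk instead of an editor.
# The sample below is an abbreviated, assumed stand-in for the generated file.
set -eu

MASTER_IP=192.168.44.70   # master01's address from the host table

sample=$(mktemp)
cat > "$sample" <<'EOF'
localAPIEndpoint:
  advertiseAddress: 1.2.3.4
  bindPort: 6443
kubernetesVersion: v1.20.0
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
scheduler: {}
EOF

edited=$(
  awk -v ip="$MASTER_IP" '
    /^  advertiseAddress:/ { print "  advertiseAddress: " ip; next }
    /^kubernetesVersion:/  { print "kubernetesVersion: v1.20.11"; next }
    /^  dnsDomain:/        { print; print "  podSubnet: \"10.244.0.0/16\""; next }
    /^  serviceSubnet:/    { print "  serviceSubnet: 10.96.0.0/16"; next }
    { print }
  ' "$sample"
  # Append the kube-proxy section that switches the proxy mode to ipvs.
  printf '%s\n' '---' 'apiVersion: kubeproxy.config.k8s.io/v1alpha1' \
    'kind: KubeProxyConfiguration' 'mode: ipvs'
)
printf '%s\n' "$edited"

rm -f "$sample"
```

Against the real file, the awk input would be /opt/kubeadm-config.yaml and the result would be written back to it after review.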

# Append the following at the end of the file

---

apiVersion: kubeproxy.config.k8s.io/v1alpha1

kind: KubeProxyConfiguration

mode: ipvs # switch kube-proxy from its default mode to ipvs

5. Initialize the master node

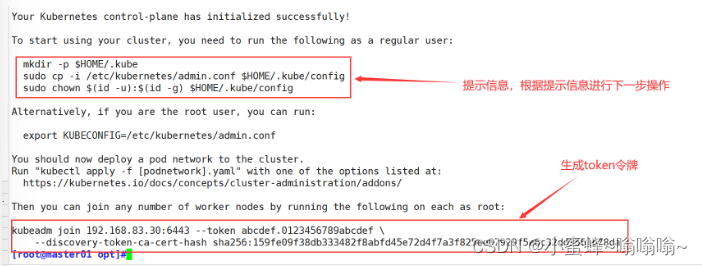

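Running kubeadm init --config on the master node generates a token and a CA certificate, which the worker nodes use later to join the cluster. After initialization succeeds, follow the printed instructions to set up the kubectl configuration.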

[root@master01 opt]#kubeadm init --config=/opt/kubeadm-config.yaml --upload-certs | tee kubeadm-init.log

[init] Using Kubernetes version: v1.20.11

[preflight] Running pre-flight checks

......

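# --upload-certs lets nodes that join later receive the certificate files automatically; used from v1.16 onward

# tee kubeadm-init.log saves the output to a log file

6. Configure the kubectl config file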

[root@master01 opt]#mkdir -p $HOME/.kube

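// $HOME is the current user's home directory

[root@master01 opt]#cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

// copy admin.conf from /etc/kubernetes into $HOME/.kube/ and name it config

[root@master01 opt]#chown $(id -u):$(id -g) $HOME/.kube/config

// change the owner and group of the file

// id -u and id -g print the current user's UID and GID; when the owner and group are given as numeric IDs, they map to the corresponding user and group names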

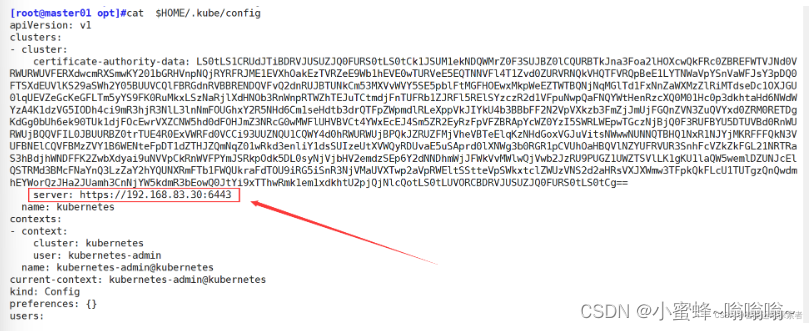

[root@master01 opt]#ll $HOME/.kube/config

-rw------- 1 root root 5565 5月 16 15:19 /root/.kube/config

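# This file is the configuration file of kubectl, the Kubernetes command-line tool.

# It stores the information needed to access a Kubernetes cluster.

# When you use kubectl to interact with the cluster, this file tells kubectl how to reach it.

'The file usually contains the following'

# Cluster information: the URL and certificate of the cluster's API server.

# User information: the credentials used for authentication, such as certificates or tokens.

# Context: a combination of cluster, user and namespace that defines one working environment.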

7. Inspect the files

[root@master01 opt]#cat /opt/kubeadm-init.log

View the kubeadm-init log; the token generated during initialization is visible there
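The join parameters can also be pulled out of the log with standard text tools rather than copied by eye. A minimal sketch, using as sample input the join lines that appear later in this article; the variable names are illustrative only.

```shell
#!/usr/bin/env bash
# Sketch: extract the API endpoint, token and CA hash from a kubeadm init log.
# The sample log reuses the join command shown later in this article.
set -eu

log=$(mktemp)
cat > "$log" <<'EOF'
Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.83.30:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:159fe09f38db333482f8abfd45e72d4f7a3f825ee07929f5e6c32dd9561b78da
EOF

# Third field of the "kubeadm join" line is host:port of the API server.
endpoint=$(awk '$1 == "kubeadm" && $2 == "join" { print $3; exit }' "$log")
# The token follows the --token flag.
token=$(grep -o -e '--token [^ ]*' "$log" | head -n 1 | awk '{ print $2 }')
# The CA certificate hash is "sha256:" plus 64 hex digits.
ca_hash=$(grep -o 'sha256:[0-9a-f]\{64\}' "$log" | head -n 1)

printf 'kubeadm join %s --token %s --discovery-token-ca-cert-hash %s\n' \
  "$endpoint" "$token" "$ca_hash"

rm -f "$log"
```

If the log is no longer available or the token has expired, `kubeadm token create --print-join-command` on the master prints a fresh join command.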

[root@master01 opt]#ls /etc/kubernetes/

The Kubernetes configuration directory

[root@master01 opt]#ls /etc/kubernetes/pki

The directory holding the CA and other certificates and keys

8. Check the cluster status

[root@master01 opt]#kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

controller-manager Unhealthy Get "http://127.0.0.1:10252/healthz": dial tcp 127.0.0.1:10252: connect: connection refused

scheduler Unhealthy Get "http://127.0.0.1:10251/healthz": dial tcp 127.0.0.1:10251: connect: connection refused

etcd-0 Healthy {"health":"true"}

If kubectl get cs reports unhealthy components, edit the following two files

[root@master01 opt]#vim /etc/kubernetes/manifests/kube-scheduler.yaml

......

16 - --bind-address=192.168.83.30 # change to the IP of the control-plane node master01

.....

19 # - --port=0 # comment out this line

......

25 host: 127.0.0.1 # change the host under httpGet: to master01's IP

......

39 host: 127.0.0.1 # likewise, the host under httpGet:

......

[root@master01 opt]#vim /etc/kubernetes/manifests/kube-controller-manager.yaml

......

17 - --bind-address=192.168.83.30

......

26 # - --port=0

......

37 host: 192.168.83.30

......

51 host: 192.168.83.30

......

After the changes, restart kubelet and check the cluster status again

[root@master01 opt]#systemctl restart kubelet

[root@master01 opt]#kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

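etcd-0 Healthy {"health":"true"}

(2) Deploy the network plugin

The network plugin has to be deployed on all nodes

1. Prepare the files

flannel-v0.22.2.tar

flannel-cni-v1.2.0.tar

The master node also needs the kube-flannel.yml file

Download location

https://github.com/flannel-io/flannel/tree/master

Once downloaded, the three files can be placed in one directory and packed into a single archive for convenience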

[root@master01 opt]#unzip kuadmin.zip

Archive: kuadmin.zip

inflating: flannel-v0.22.2.tar

inflating: kube-flannel.yml

inflating: flannel-cni-v1.2.0.tar

Copy the image files to the node machines

[root@master01 opt]#scp flannel-* node01:/opt

root@node01's password:

flannel-cni-v1.2.0.tar 100% 8137KB 17.9MB/s 00:00

flannel-v0.22.2.tar 100% 68MB 27.3MB/s 00:02

[root@master01 opt]#scp flannel-* node02:/opt

root@node02's password:

flannel-cni-v1.2.0.tar 100% 8137KB 22.6MB/s 00:00

flannel-v0.22.2.tar 100% 68MB 28.5MB/s 00:02

2. Load the images

Run on all nodes

On master01

[root@master01 opt]#docker load -i flannel-v0.22.2.tar

......

[root@master01 opt]#docker load -i flannel-cni-v1.2.0.tar

......

[root@master01 opt]#docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

flannel/flannel v0.22.2 d73868a08083 9 months ago 70.2MB

flannel/flannel-cni-plugin v1.2.0 a55d1bad692b 9 months ago 8.04MB

On node01

[root@node01 opt]#docker load -i flannel-v0.22.2.tar

......

[root@node01 opt]#docker load -i flannel-cni-v1.2.0.tar

......

[root@node01 opt]#docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

flannel/flannel v0.22.2 d73868a08083 9 months ago 70.2MB

flannel/flannel-cni-plugin v1.2.0 a55d1bad692b 9 months ago 8.04MB

On node02

[root@node02 opt]#docker load -i flannel-v0.22.2.tar

......

[root@node02 opt]#docker load -i flannel-cni-v1.2.0.tar

......

[root@node02 opt]#docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

flannel/flannel v0.22.2 d73868a08083 9 months ago 70.2MB

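flannel/flannel-cni-plugin v1.2.0 a55d1bad692b 9 months ago 8.04MB

3. Apply the yaml file

Check the kube-flannel.yml file

# check that flannel-cni-plugin:v1.2.0 on line 142 matches the image version loaded in the previous step

# check that image: docker.io/flannel/flannel:v0.22.2 on line 153 matches the image version loaded in the previous step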

1 ---

2 kind: Namespace

3 apiVersion: v1

4 metadata:

5 name: kube-flannel

6 labels:

7 k8s-app: flannel

8 pod-security.kubernetes.io/enforce: privileged

9 ---

10 kind: ClusterRole

11 apiVersion: rbac.authorization.k8s.io/v1

12 metadata:

13 labels:

14 k8s-app: flannel

15 name: flannel

16 rules:

17 - apiGroups:

18 - ""

19 resources:

20 - pods

21 verbs:

22 - get

23 - apiGroups:

24 - ""

25 resources:

26 - nodes

27 verbs:

28 - get

29 - list

30 - watch

31 - apiGroups:

32 - ""

33 resources:

34 - nodes/status

35 verbs:

36 - patch

37 - apiGroups:

38 - networking.k8s.io

39 resources:

40 - clustercidrs

41 verbs:

42 - list

43 - watch

44 ---

45 kind: ClusterRoleBinding

46 apiVersion: rbac.authorization.k8s.io/v1

47 metadata:

48 labels:

49 k8s-app: flannel

50 name: flannel

51 roleRef:

52 apiGroup: rbac.authorization.k8s.io

53 kind: ClusterRole

54 name: flannel

55 subjects:

56 - kind: ServiceAccount

57 name: flannel

58 namespace: kube-flannel

59 ---

60 apiVersion: v1

61 kind: ServiceAccount

62 metadata:

63 labels:

64 k8s-app: flannel

65 name: flannel

66 namespace: kube-flannel

67 ---

68 kind: ConfigMap

69 apiVersion: v1

70 metadata:

71 name: kube-flannel-cfg

72 namespace: kube-flannel

73 labels:

74 tier: node

75 k8s-app: flannel

76 app: flannel

77 data:

78 cni-conf.json: |

79 {

80 "name": "cbr0",

81 "cniVersion": "0.3.1",

82 "plugins": [

83 {

84 "type": "flannel",

85 "delegate": {

86 "hairpinMode": true,

87 "isDefaultGateway": true

88 }

89 },

90 {

91 "type": "portmap",

92 "capabilities": {

93 "portMappings": true

94 }

95 }

96 ]

97 }

98 net-conf.json: |

99 {

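100 "Network": "10.244.0.0/16", # flannel's default network; if you change it, it must match the podSubnet set when initializing the master node (this comment is an annotation, not part of the file)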

101 "Backend": {

102 "Type": "vxlan"

103 }

104 }

105 ---

106 apiVersion: apps/v1

107 kind: DaemonSet # the Pod controller is a DaemonSet

108 metadata:

109 name: kube-flannel-ds

110 namespace: kube-flannel

111 labels:

112 tier: node

113 app: flannel

114 k8s-app: flannel

115 spec:

116 selector:

117 matchLabels:

118 app: flannel

119 template:

120 metadata:

121 labels:

122 tier: node

123 app: flannel

124 spec:

125 affinity:

126 nodeAffinity:

127 requiredDuringSchedulingIgnoredDuringExecution:

128 nodeSelectorTerms:

129 - matchExpressions:

130 - key: kubernetes.io/os

131 operator: In

132 values:

133 - linux

134 hostNetwork: true

135 priorityClassName: system-node-critical

136 tolerations:

137 - operator: Exists

138 effect: NoSchedule

139 serviceAccountName: flannel

140 initContainers:

141 - name: install-cni-plugin

142 image: docker.io/flannel/flannel-cni-plugin:v1.2.0

143 command:

144 - cp

145 args:

146 - -f

147 - /flannel

148 - /opt/cni/bin/flannel

149 volumeMounts:

150 - name: cni-plugin

151 mountPath: /opt/cni/bin

152 - name: install-cni

153 image: docker.io/flannel/flannel:v0.22.2

154 command:

155 - cp

156 args:

157 - -f

158 - /etc/kube-flannel/cni-conf.json

159 - /etc/cni/net.d/10-flannel.conflist

160 volumeMounts:

161 - name: cni

162 mountPath: /etc/cni/net.d

163 - name: flannel-cfg

164 mountPath: /etc/kube-flannel/

165 containers:

166 - name: kube-flannel

167 image: docker.io/flannel/flannel:v0.22.2

168 command:

169 - /opt/bin/flanneld

170 args:

171 - --ip-masq

172 - --kube-subnet-mgr

173 resources:

174 requests:

175 cpu: "100m"

176 memory: "50Mi"

177 securityContext:

178 privileged: false

179 capabilities:

180 add: ["NET_ADMIN", "NET_RAW"]

181 env:

182 - name: POD_NAME

183 valueFrom:

184 fieldRef:

185 fieldPath: metadata.name

186 - name: POD_NAMESPACE

187 valueFrom:

188 fieldRef:

189 fieldPath: metadata.namespace

190 - name: EVENT_QUEUE_DEPTH

191 value: "5000"

192 volumeMounts:

193 - name: run

194 mountPath: /run/flannel

195 - name: flannel-cfg

196 mountPath: /etc/kube-flannel/

197 - name: xtables-lock

198 mountPath: /run/xtables.lock

199 volumes:

200 - name: run

201 hostPath:

202 path: /run/flannel

203 - name: cni-plugin

204 hostPath:

205 path: /opt/cni/bin

206 - name: cni

207 hostPath:

208 path: /etc/cni/net.d

209 - name: flannel-cfg

210 configMap:

211 name: kube-flannel-cfg

212 - name: xtables-lock

213 hostPath:

214 path: /run/xtables.lock

215 type: FileOrCreate

Check the node information

[root@master01 opt]#kubectl get node

NAME STATUS ROLES AGE VERSION

master01 NotReady control-plane,master 107m v1.20.11

# at this point kubectl only manages the local node, and its status is NotReady

Run kubectl apply -f kube-flannel.yml on master01 to load the file and create the flannel resources

[root@master01 opt]#kubectl apply -f kube-flannel.yml

namespace/kube-flannel created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

# wait a few seconds

[root@master01 opt]#kubectl get node

NAME STATUS ROLES AGE VERSION

master01 Ready control-plane,master 110m v1.20.11

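# the status is now Ready

(3) Join the nodes

Run on the worker nodes

Run kubeadm join on every worker node to join the cluster

1. Get the join command and token

[root@master01 opt]#tail -4 kubeadm-init.log # read the join command from the log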

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.83.30:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:159fe09f38db333482f8abfd45e72d4f7a3f825ee07929f5e6c32dd9561b78da2.加入集群

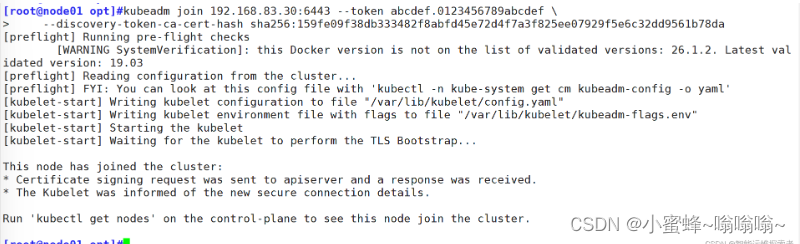

node01操作

[root@node01 opt]#kubeadm join 192.168.83.30:6443 --token abcdef.0123456789abcdef \

> --discovery-token-ca-cert-hash sha256:159fe09f38db333482f8abfd45e72d4f7a3f825ee07929f5e6c32dd9561b78da

[preflight] Running pre-flight checks

[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 26.1.2. Latest validated version: 19.03

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

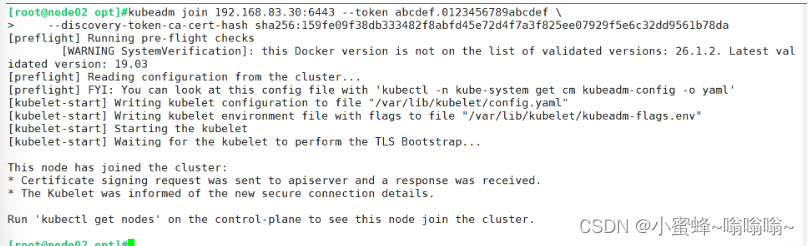

On node02

[root@node02 opt]#kubeadm join 192.168.83.30:6443 --token abcdef.0123456789abcdef \

> --discovery-token-ca-cert-hash sha256:159fe09f38db333482f8abfd45e72d4f7a3f825ee07929f5e6c32dd9561b78da

[preflight] Running pre-flight checks

[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 26.1.2. Latest validated version: 19.03

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

3.查看节点状态

[root@master01 opt]#kubectl get node

NAME STATUS ROLES AGE VERSION

master01 Ready control-plane,master 129m v1.20.11

node01 Ready <none> 6m59s v1.20.11

node02 Ready <none> 2m50s v1.20.11

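# this can take 2-3 minutes; be patient

Fallback for deploying the network plugin

The manifest can also be applied straight from the web, although this takes longer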

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

kubectl apply -f https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml

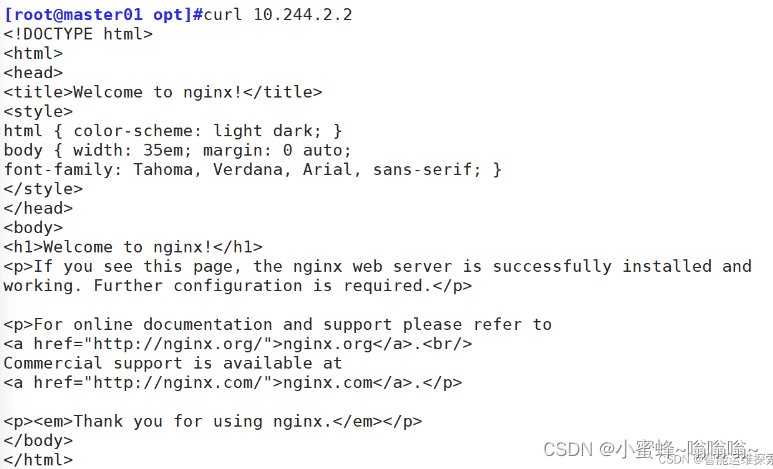

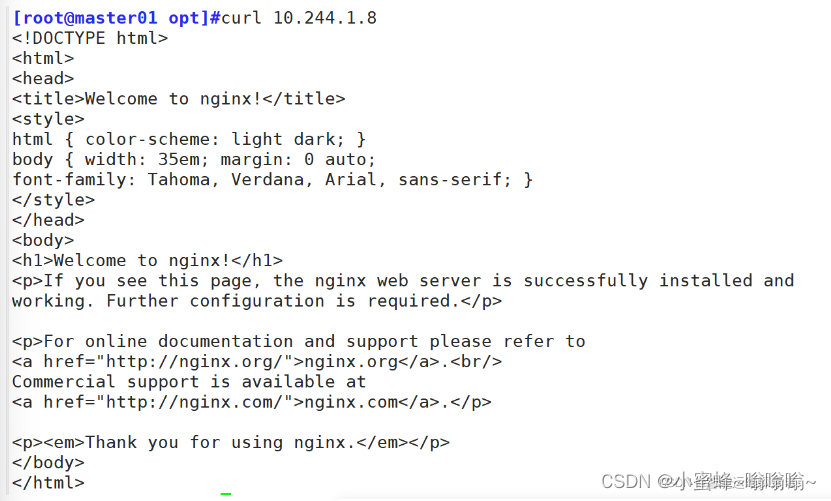

(4) Test creating Pod resources

1. Create a Pod

[root@master01 opt]#kubectl create deployment nginx --image=nginx

deployment.apps/nginx created

# a Deployment is the controller that manages stateless Pods

[root@master01 opt]#kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-6799fc88d8-nbhsh 1/1 Running 0 48s 10.244.2.2 node02 <none> <none>

# the Pod's IP address

2. Expose the port

Expose a port to provide the service

[root@master01 opt]#kubectl expose deployment nginx --port=80 --type=NodePort

service/nginx exposed

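kubectl # the Kubernetes command-line tool

expose # subcommand that exposes a resource as a new Service

deployment nginx # type and name of the resource to expose

--port=80 # port the Service listens on; the Service accepts in-cluster requests on port 80

--type=NodePort # type of the Service

# A NodePort Service maps the Service port to a static port on every node in the cluster, so the Service can be reached from outside the cluster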

[root@master01 opt]#kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 140m

nginx NodePort 10.96.120.44 <none> 80:31733/TCP 3m44s

# the mapped port
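The PORT(S) column reads `port:nodePort/protocol`, so the externally reachable URL can be derived from it. A small sketch using the value from the output above; the function name node_port_of is hypothetical, and node01's address is taken from the host table.

```shell
#!/usr/bin/env bash
# Sketch: derive the external URL of a NodePort Service from the PORT(S)
# column of "kubectl get svc". node_port_of is a hypothetical helper.
set -eu

node_port_of() {
  local ports=$1
  ports=${ports#*:}               # drop the Service port and colon -> "31733/TCP"
  printf '%s\n' "${ports%%/*}"    # drop the protocol suffix       -> "31733"
}

NODE_IP=192.168.44.60             # node01 from the host table
node_port=$(node_port_of "80:31733/TCP")
url="http://${NODE_IP}:${node_port}"
echo "$url"

# From a machine that can reach the node, the Service could then be checked with:
#   curl -I "$url"
```

Any node's IP works here, since a NodePort Service opens the same port on every node.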

3. Scale the Pods

[root@master01 ~]#kubectl scale deployment nginx --replicas=3

deployment.apps/nginx scaled

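scale # changes the replica count of a resource (such as a Deployment, ReplicaSet or StatefulSet)

deployment nginx # type and name of the resource to change

--replicas=3 # sets the Deployment's replica count to 3

# When you run this command, Kubernetes works to keep 3 replicas of the nginx Deployment running.

# If there were fewer or more than 3 replicas before, Kubernetes adds or removes Pods to reach that number.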

[root@master01 ~]#kubectl get pods -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-6799fc88d8-dhrkq 1/1 Running 0 55s 10.244.1.2 node01 <none> <none>

nginx-6799fc88d8-nbhsh 1/1 Running 0 27m 10.244.2.2 node02 <none> <none>

nginx-6799fc88d8-qhlcj 1/1 Running 0 55s 10.244.2.3 node02 <none> <none>

# Pod IPs are assigned sequentially, and each node has its own subnet

V. Set up a Harbor private registry

(1) Add the registry address

Run on all machines

[root@hub opt]#vim /etc/docker/daemon.json

[root@hub opt]#cat /etc/docker/daemon.json

{

"registry-mirrors": ["https://gix2yhc1.mirror.aliyuncs.com"],

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"insecure-registries": ["https://hub.china.com"] # add this field with the address of the private registry

}

[root@hub opt]#systemctl daemon-reload

[root@hub opt]#systemctl restart docker.service

(2) Install Harbor

1. Prepare the files

Upload harbor-offline-installer-v1.2.2.tgz and the docker-compose binary to /opt

The docker-compose binary can be downloaded with curl, or from its GitHub releases page

curl -L https://github.com/docker/compose/releases/download/1.25.0/docker-compose-`uname -s`-`uname -m` -o /usr/local/bin/docker-compose

[root@hub opt]#ls docker-compose

docker-compose

[root@hub opt]#chmod +x docker-compose

[root@hub opt]#mv docker-compose /usr/local/bin/

2. Edit the configuration file

[root@hub opt]#vim /usr/local/harbor/harbor.cfg

5 hostname = hub.china.com

9 ui_url_protocol = https

24 ssl_cert = /data/cert/server.crt

25 ssl_cert_key = /data/cert/server.key

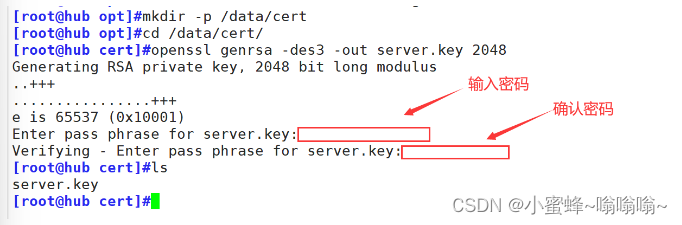

59 harbor_admin_password = Harbor123453.生成证书、私钥

3.1 生成私钥

[root@hub opt]#mkdir -p /data/cert

[root@hub opt]#cd /data/cert/

[root@hub cert]#openssl genrsa -des3 -out server.key 2048

Generating RSA private key, 2048 bit long modulus

..+++

................+++

e is 65537 (0x10001)

Enter pass phrase for server.key:

Verifying - Enter pass phrase for server.key:

[root@hub cert]#ls

server.key

openssl #这是一个强大的加密库和工具集,用于处理SSL/TLS协议和相关的加密操作。

genrsa #openssl的子命令,用于生成 RSA 私钥。

-des3 #openssl 使用 DES3算法来加密生成的私钥。

-out server.key #这个选项指定了输出文件的名称,即生成的私钥将被保存在名为server.key的文件中。

2048 #这个数字指定了RSA密钥的长度(以位为单位)。

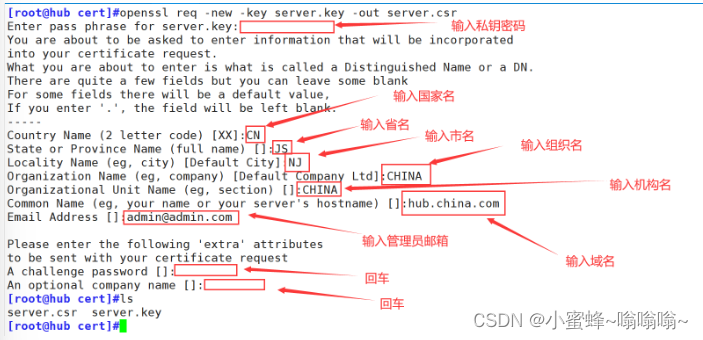

3.2 生成证书

[root@hub cert]#openssl req -new -key server.key -out server.csr

Enter pass phrase for server.key:

You are about to be asked to enter information that will be incorporated

into your certificate request.

What you are about to enter is what is called a Distinguished Name or a DN.

There are quite a few fields but you can leave some blank

For some fields there will be a default value,

If you enter '.', the field will be left blank.

-----

Country Name (2 letter code) [XX]:CN

State or Province Name (full name) []:JS

Locality Name (eg, city) [Default City]:NJ

Organization Name (eg, company) [Default Company Ltd]:CHINA

Organizational Unit Name (eg, section) []:CHINA

Common Name (eg, your name or your server's hostname) []:hub.china.com

Email Address []:admin@admin.com

Please enter the following 'extra' attributes

to be sent with your certificate request

A challenge password []:

An optional company name []:

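---------------------------- Command breakdown ---------------------------------

req # handles certificate signing requests (CSRs) and related private-key tasks

-new # create a new certificate signing request

-key server.key # path of the private key used to generate the CSR

-out server.csr # output path of the generated CSR

3.3 Remove the private key passphrase

Removing the passphrase makes the key easier to use, because it no longer has to be typed in every time the key is loaded

[root@hub cert]#cp server.key server.key.org # back up the private key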

[root@hub cert]#ls

server.csr server.key server.key.org

[root@hub cert]#openssl rsa -in server.key.org -out server.key # remove the passphrase

Enter pass phrase for server.key.org: # enter the private key passphrase

writing RSA key

3.4 Sign the certificate

Used for SSL authentication

[root@hub cert]#openssl x509 -req -days 1000 -in server.csr -signkey server.key -out server.crt

Signature ok

subject=/C=CN/ST=JS/L=NJ/O=CHINA/OU=CHINA/CN=hub.china.com/emailAddress=admin@admin.com

Getting Private key

[root@hub cert]#ls

server.crt server.csr server.key server.key.org
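Steps 3.1 to 3.4 can also be run without any prompts. The sketch below is an alternative, not the procedure used above: it generates the key without -des3 (so there is no passphrase to remove), passes the subject with -subj, omits the email address, and works in a scratch directory rather than /data/cert.

```shell
#!/usr/bin/env bash
# Sketch: non-interactive self-signed certificate for hub.china.com.
# Assumption: no passphrase is wanted, so -des3 is omitted.
set -eu

workdir=$(mktemp -d)
cd "$workdir"

# Unencrypted 2048-bit RSA private key.
openssl genrsa -out server.key 2048 2>/dev/null

# CSR with the subject given on the command line instead of interactive prompts.
openssl req -new -key server.key \
  -subj "/C=CN/ST=JS/L=NJ/O=CHINA/OU=CHINA/CN=hub.china.com" \
  -out server.csr

# Self-sign the CSR with the same key, valid for 1000 days.
openssl x509 -req -days 1000 -in server.csr -signkey server.key \
  -out server.crt 2>/dev/null

# Read the subject back from the finished certificate.
subject=$(openssl x509 -in server.crt -noout -subject)
echo "$subject"

cd - >/dev/null
rm -rf "$workdir"
```

To use the result with Harbor, the same commands would be run in /data/cert so that the paths match ssl_cert and ssl_cert_key in harbor.cfg.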

openssl x509 # OpenSSL subcommand for working with X.509 certificates

-req # read the input as a CSR

-days 1000 # certificate validity of 1000 days, after which it expires

-in server.csr # input path of the CSR

-signkey server.key # sign the CSR with the given private key (server.key), producing the certificate

-out server.crt # output path of the certificate, here server.crt

3.5 Add execute permission

[root@hub cert]#chmod +x ./*

[root@hub cert]#ll

总用量 16

-rwxr-xr-x. 1 root root 1265 5月 16 18:30 server.crt

-rwxr-xr-x. 1 root root 1037 5月 16 18:17 server.csr

-rwxr-xr-x. 1 root root 1679 5月 16 18:23 server.key

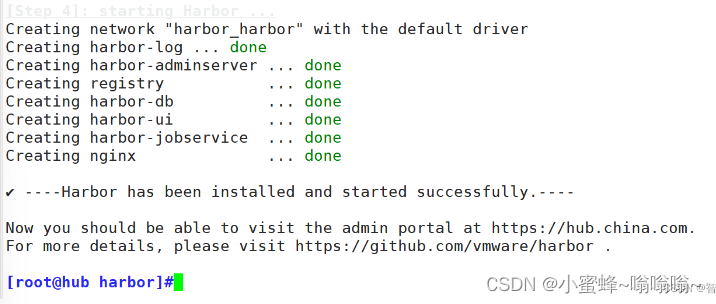

-rwxr-xr-x. 1 root root 1751 5月 16 18:23 server.key.org4. 启动Harbor服务

[root@hub cert]#cd /usr/local/harbor/

[root@hub harbor]#./install.sh

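# ./install.sh pulls the images and starts the containers

# When run, the script performs a series of steps to configure and start Harbor. These may include:

# checking and preparing the installation environment (required dependencies, configuration files, and so on)

# generating or validating Harbor's configuration file (harbor.cfg in this release; newer releases use harbor.yml)

# creating the required data directories and files

# initializing the database (if database storage is used)

# starting the Harbor components (such as core, registry, portal and jobservice)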

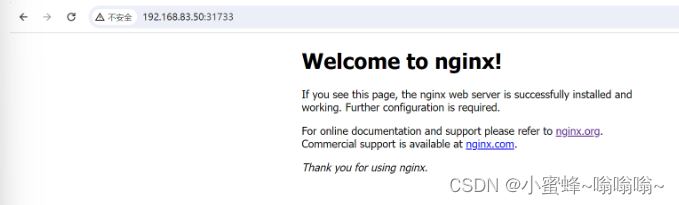

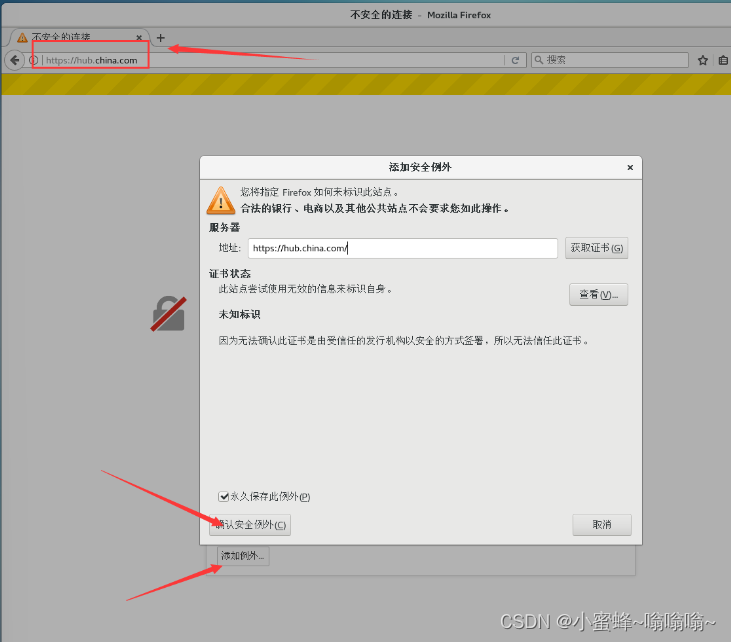

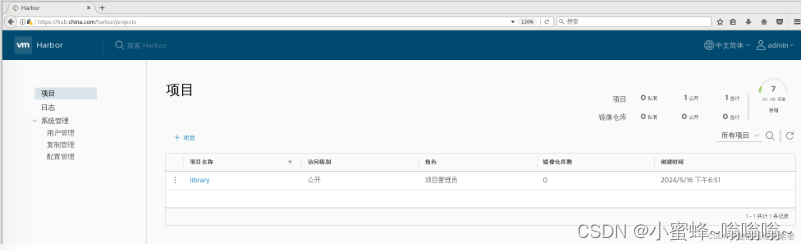

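5. Access Harbor

5.1 Log in from a browser

Open https://hub.china.com in a browser

Click Advanced ---> Add Exception ---> Confirm Security Exception

Log in with the username and password; the defaults are:

Username: admin

Password: Harbor12345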

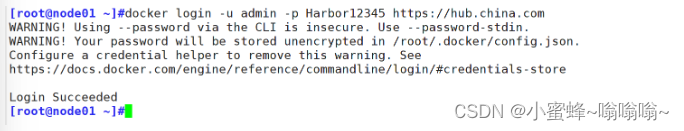

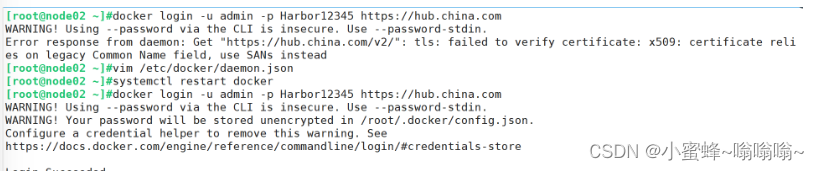

5.2 Log in from a node

Log in to Harbor from one of the nodes

[root@node01 ~]#docker login -u admin -p Harbor12345 https://hub.china.com

WARNING! Using --password via the CLI is insecure. Use --password-stdin.

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded
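The first warning in the output above suggests --password-stdin. A minimal sketch of that form follows; DOCKER_CMD and docker_stub are hypothetical stand-ins so the example can be dry-run without a Docker daemon or a reachable registry.

```shell
#!/usr/bin/env bash
# Sketch: pass the Harbor password on stdin instead of with -p.
# DOCKER_CMD and docker_stub are hypothetical, used only for the dry run.
set -eu

harbor_login() {
  local user=$1 password=$2 registry=$3
  printf '%s' "$password" |
    "${DOCKER_CMD:-docker}" login -u "$user" --password-stdin "$registry"
}

# Stand-in for docker: reads stdin and reports what it was called with.
docker_stub() {
  local password
  password=$(cat)
  echo "docker $* (read ${#password} password characters from stdin)"
}

login_output=$(DOCKER_CMD=docker_stub harbor_login admin Harbor12345 hub.china.com)
echo "$login_output"
```

In real use DOCKER_CMD stays unset, and the password would normally come from a protected file or a prompt rather than a literal on the command line.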

6. Push an image

6.1 Tag the image

[root@node01 ~]#docker tag nginx:latest hub.china.com/library/nginx:v1

[root@node01 ~]#docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

flannel/flannel v0.22.2 49937eb983da 8 months ago 70.2MB

flannel/flannel-cni-plugin v1.2.0 a55d1bad692b 9 months ago 8.04MB

nginx latest 605c77e624dd 2 years ago 141MB

hub.china.com/library/nginx v1 605c77e624dd 2 years ago 141MB

......

6.2 Push the image

[root@node01 ~]#docker push hub.china.com/library/nginx:v1

The push refers to repository [hub.china.com/library/nginx]

d874fd2bc83b: Preparing

d874fd2bc83b: Pushed

32ce5f6a5106: Pushed

f1db227348d0: Pushed

b8d6e692a25e: Pushed

e379e8aedd4d: Pushed

2edcec3590a4: Pushed

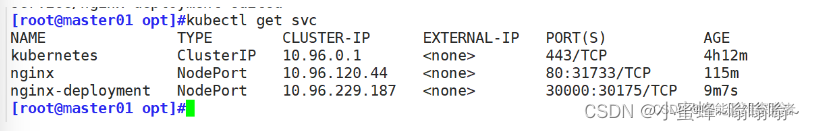

v1: digest: sha256:ee89b00528ff4f02f2405e4ee221743ebc3f8e8dd0bfd5c4c20a2fa2aaa7ede3 size: 15707.创建pod

所有的节点都需要登录harbor

在master01节点上操作

[root@master01 opt]#kubectl delete deployment nginx

deployment.apps "nginx" deleted

# delete the Deployment created earlier

[root@master01 opt]#kubectl create deployment nginx-deployment --image=hub.china.com/library/nginx:v1 --port=80 --replicas=3

deployment.apps/nginx-deployment created

# create 3 nginx Pods

[root@master01 opt]#kubectl expose deployment nginx-deployment --port=30000 --target-port=80

service/nginx-deployment exposed

# expose the port

[root@master01 opt]#kubectl get svc,pods -owide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 4h7m <none>

service/nginx NodePort 10.96.120.44 <none> 80:31733/TCP 110m app=nginx

service/nginx-deployment ClusterIP 10.96.229.187 <none> 30000/TCP 4m12s app=nginx-deployment

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/nginx-deployment-67569d9864-7sst2 1/1 Running 0 82s 10.244.1.8 node01 <none> <none>

pod/nginx-deployment-67569d9864-fskvh 1/1 Running 0 82s 10.244.2.7 node02 <none> <none>

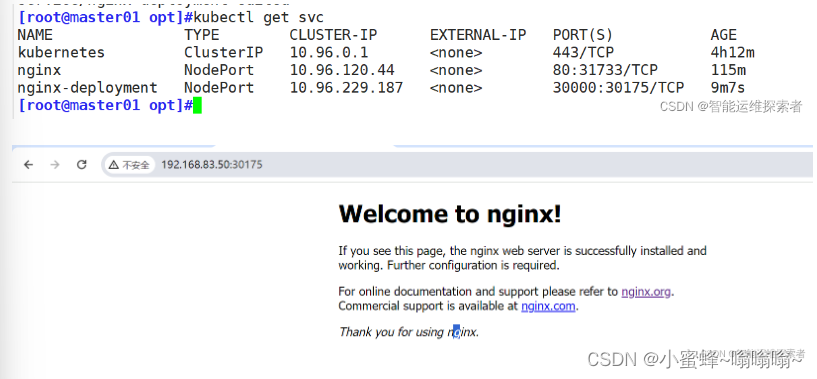

pod/nginx-deployment-67569d9864-vwncj 1/1 Running 0 82s 10.244.1.7 node01 <none> <none>访问验证

修改调度类型

[root@master01 opt]#kubectl edit svc nginx-deployment

service/nginx-deployment edited

# change type: on line 25 to type: NodePort
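The same change can be made without opening an editor by patching the Service. The sketch below only prints the command by default; RUN_KUBECTL is a hypothetical switch added for this example so that it can be tried on a machine without cluster access.

```shell
#!/usr/bin/env bash
# Sketch: switch the Service to NodePort with "kubectl patch" instead of
# "kubectl edit". RUN_KUBECTL is a hypothetical opt-in switch for this example.
set -eu

svc=nginx-deployment
patch='{"spec":{"type":"NodePort"}}'
patch_cmd="kubectl patch svc $svc -p '$patch'"

if [ "${RUN_KUBECTL:-0}" = "1" ]; then
  # Apply the patch, then show the Service with its newly assigned node port.
  kubectl patch svc "$svc" -p "$patch"
  kubectl get svc "$svc"
else
  # Dry run: only show what would be executed.
  echo "$patch_cmd"
fi
```

After the type changes, `kubectl get svc nginx-deployment` lists the assigned node port in the PORT(S) column.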
