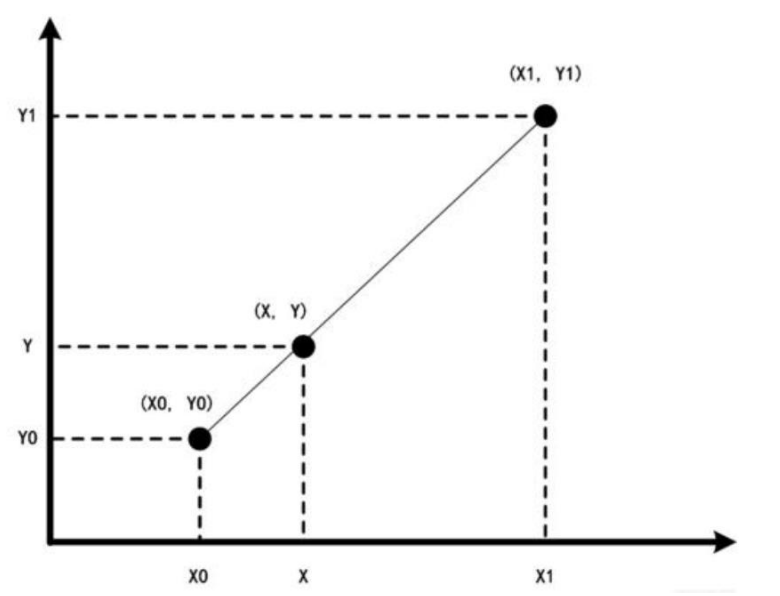

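1. Linear interpolation

First, single linear interpolation. Given two known points (x0, y0) and (x1, y1), we want the y value on the straight line through them at some position x in the interval [x0, x1]. The function value changes linearly along a straight line, so the slope between (x0, y0) and (x, y) must equal the slope between (x0, y0) and (x1, y1). Setting the two slopes equal gives the equation

$$\frac{y - y_0}{x - x_0} = \frac{y_1 - y_0}{x_1 - x_0}$$

Solving it yields the function value y at x:

$$y = y_0 + (y_1 - y_0)\,\frac{x - x_0}{x_1 - x_0}$$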

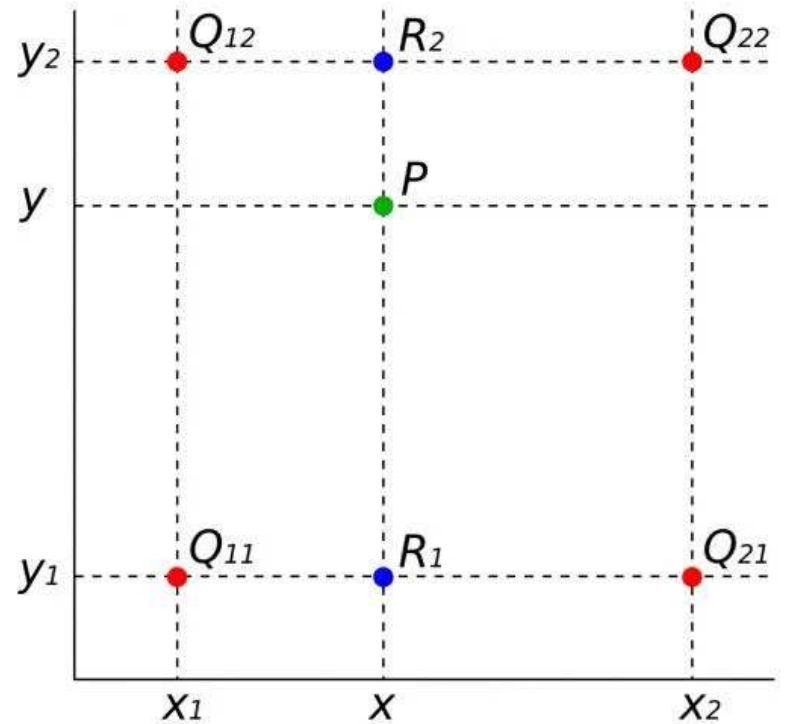
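The equal-slope relation above translates directly into a one-line function. This is a minimal sketch: the name `lerp` and the sample points are illustrative and do not come from the original post.

```python
def lerp(x0, y0, x1, y1, x):
    """Linear interpolation: value at x on the line through (x0, y0) and (x1, y1)."""
    # Equal slopes: (y - y0) / (x - x0) == (y1 - y0) / (x1 - x0), solved for y
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


# A point a quarter of the way from x0 = 0 to x1 = 4
print(lerp(0, 10, 4, 30, 1))  # 15.0
```

At the endpoints the function returns y0 and y1 exactly, which is a quick sanity check for any interpolation formula.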

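2. Bilinear interpolation

Bilinear interpolation interpolates in two directions, for a total of three linear interpolations. Let Q11 = (x1, y1), Q21 = (x2, y1), Q12 = (x1, y2) and Q22 = (x2, y2) be the four known neighbours of the point P = (x, y).

Interpolate in the X direction (twice: once on row y1, giving R1 = (x, y1), and once on row y2, giving R2 = (x, y2)):

$$f(R_1) = \frac{x_2 - x}{x_2 - x_1} f(Q_{11}) + \frac{x - x_1}{x_2 - x_1} f(Q_{21})$$

$$f(R_2) = \frac{x_2 - x}{x_2 - x_1} f(Q_{12}) + \frac{x - x_1}{x_2 - x_1} f(Q_{22})$$

Interpolate in the Y direction (once, between R1 and R2):

$$f(P) = \frac{y_2 - y}{y_2 - y_1} f(R_1) + \frac{y - y_1}{y_2 - y_1} f(R_2)$$

Combining them: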

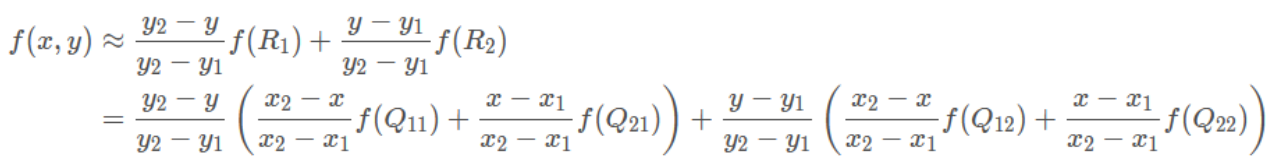
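The three interpolations can be traced by hand on a unit square. This is a minimal sketch in which `bilinear_point` and the corner values are made up for illustration, with q11 = f(x1, y1), q21 = f(x2, y1), q12 = f(x1, y2) and q22 = f(x2, y2):

```python
def bilinear_point(q11, q21, q12, q22, x1, x2, y1, y2, x, y):
    """Bilinear interpolation at (x, y) inside the rectangle [x1, x2] x [y1, y2].

    q11 = f(x1, y1), q21 = f(x2, y1), q12 = f(x1, y2), q22 = f(x2, y2).
    """
    wx1 = (x2 - x) / (x2 - x1)  # weight of the x1 column
    wx2 = (x - x1) / (x2 - x1)  # weight of the x2 column
    # Two interpolations along X, one on each row
    r1 = wx1 * q11 + wx2 * q21  # value at (x, y1)
    r2 = wx1 * q12 + wx2 * q22  # value at (x, y2)
    # Third interpolation, along Y, between the two row results
    return (y2 - y) / (y2 - y1) * r1 + (y - y1) / (y2 - y1) * r2


# Centre of the unit square: r1 = 15, r2 = 35, result = 25
print(bilinear_point(10, 20, 30, 40, 0, 1, 0, 1, 0.5, 0.5))  # 25.0
```

In an image the neighbouring pixels are one unit apart, so x2 - x1 = y2 - y1 = 1 and the denominators drop out, which is why the code later in this post has no divisions.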

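Mapping formulas (A is the source image and B the target image, aligned by their geometric centers; AX, AY are coordinates in A, BX, BY are coordinates in B, and AW, AH, BW, BH are the widths and heights):

AX = (BX + 0.5) * (AW / BW) - 0.5

AY = (BY + 0.5) * (AH / BH) - 0.5

or, with scale = BW / AW = BH / AH as the scaling factor (greater than 1 enlarges, less than 1 shrinks):

AX = (BX + 0.5) / scale - 0.5

AY = (BY + 0.5) / scale - 0.5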
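A small sketch to check that the two forms of the mapping agree and to see where the edge pixels land. The function names and the 4-to-6 pixel example are illustrative assumptions, not taken from the original code.

```python
def src_coord_ratio(b, a_size, b_size):
    """Source coordinate for target index b, center-aligned: (b + 0.5) * (A / B) - 0.5."""
    return (b + 0.5) * (a_size / b_size) - 0.5


def src_coord_scale(b, scale):
    """Same mapping written with the scale factor: (b + 0.5) / scale - 0.5."""
    return (b + 0.5) / scale - 0.5


# Enlarge a 4-pixel-wide row to 6 pixels, i.e. scale = 6 / 4 = 1.5
AW, BW = 4, 6
for bx in range(BW):
    print(bx, round(src_coord_ratio(bx, AW, BW), 4), round(src_coord_scale(bx, BW / AW), 4))
```

The first and last target columns map to roughly -0.17 and 3.17, slightly outside the valid source range [0, AW - 1] = [0, 3], so an implementation has to handle these edge pixels explicitly (for example by clamping the coordinate).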

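Both the source and the target image use the top-left corner as the origin (0, 0), and every target pixel is computed with the interpolation formula. Suppose you want to shrink a 5x5 image to 3x3; the correspondence between source and target pixels is as follows. Without the center alignment, computing with the basic formula (AX = BX * AW / BW) gives the result on the left; with the alignment, you get the result on the right: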
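To make the 5x5 to 3x3 example concrete along one axis, the sketch below compares the basic mapping AX = BX * (AW / BW) (assumed here to be what "the basic formula" refers to) with the center-aligned one. The function names are illustrative.

```python
def basic_mapping(b, a_size, b_size):
    """Naive mapping without center alignment: AX = BX * (AW / BW)."""
    return b * (a_size / b_size)


def centered_mapping(b, a_size, b_size):
    """Center-aligned mapping: AX = (BX + 0.5) * (AW / BW) - 0.5."""
    return (b + 0.5) * (a_size / b_size) - 0.5


AW, BW = 5, 3  # shrink a 5x5 image to 3x3 (one axis shown)
print([round(basic_mapping(b, AW, BW), 3) for b in range(BW)])     # [0.0, 1.667, 3.333]
print([round(centered_mapping(b, AW, BW), 3) for b in range(BW)])  # [0.333, 2.0, 3.667]
```

With center alignment the middle target pixel lands exactly on the middle source pixel (2.0) and the samples are symmetric around it. The basic mapping starts at 0.0 and stops at 3.333, so the sampling is shifted toward the top-left.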

3、代码实现

"""

@author: 绯雨千叶

双线性插值法(使用几何中心对应)

AX=(BX+0.5)*(AW/BW)-0.5

AY=(BY+0.5)*(AH/BH)-0.5

或

AX=(BX+0.5)/scale-0.5(scale是放大倍数)

AY=(BY+0.5)/scale-0.5

A是原图,B是目标图

"""

import numpy as np

import cv2

def bilinear(img, scale):

AH, AW, channel = img.shape

BH, BW = int(AH * scale), int(AW * scale)

dst_img = np.zeros((BH, BW, channel), np.uint8)

for k in range(channel):

for dst_x in range(BW):

for dsy_y in range(BH):

# 找到目标图x、y在原图中对应的坐标

AX = (dst_x + 0.5) / scale - 0.5

AY = (dsy_y + 0.5) / scale - 0.5

# 找到将用于计算插值的点的坐标

x1 = int(np.floor(AX)) # 取下限整数

y1 = int(np.floor(AY))

x2 = min(x1 + 1, AW - 1) # 返回最小值

y2 = min(y1 + 1, AH - 1)

# 计算插值

R1 = (x2 - AX) * img[y1, x1, k] + (AX - x1) * img[y1, x2, k]

R2 = (x2 - AX) * img[y2, x1, k] + (AX - x1) * img[y2, x2, k]

dst_img[dsy_y, dst_x, k] = int((y2 - AY) * R1 + (AY - y1) * R2)

return dst_img

if __name__ == '__main__':

img = cv2.imread('../img/lrn.jpg')

dst = bilinear(img, 1.5) # 设置放大1.5倍

cv2.imshow('bilinear', dst)

cv2.imshow('img', img)

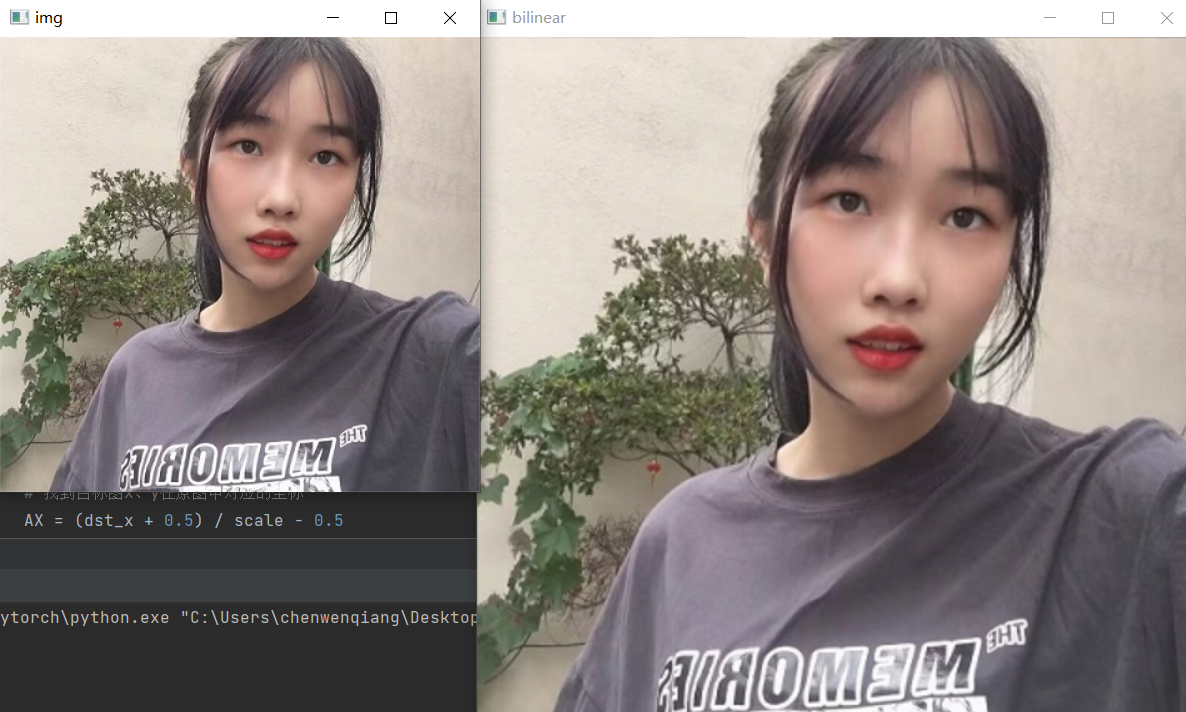

cv2.waitKey() 效果展示:
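The triple Python loop in `bilinear` visits one pixel at a time, which is slow on real photos. Below is a sketch of the same center-aligned bilinear resize using NumPy broadcasting instead of loops; `bilinear_vectorized` is a hypothetical name, not part of the original post, and it clamps the mapped coordinates to the image so edge pixels are handled.

```python
import numpy as np


def bilinear_vectorized(img, scale):
    """Bilinear resize of an (H, W, C) uint8 array with center-aligned mapping, no Python loops."""
    AH, AW, C = img.shape
    BH, BW = int(AH * scale), int(AW * scale)
    # Source coordinates for every target row / column, clamped to the image
    AY = np.clip((np.arange(BH) + 0.5) / scale - 0.5, 0, AH - 1)
    AX = np.clip((np.arange(BW) + 0.5) / scale - 0.5, 0, AW - 1)
    y1 = np.floor(AY).astype(int)
    x1 = np.floor(AX).astype(int)
    y2 = np.minimum(y1 + 1, AH - 1)
    x2 = np.minimum(x1 + 1, AW - 1)
    # Fractional weights, shaped to broadcast over (BH, BW, C)
    v = (AY - y1)[:, None, None]
    u = (AX - x1)[None, :, None]
    src = img.astype(np.float64)
    # Gather the four neighbours with advanced indexing: each has shape (BH, BW, C)
    q11 = src[y1[:, None], x1[None, :]]
    q21 = src[y1[:, None], x2[None, :]]
    q12 = src[y2[:, None], x1[None, :]]
    q22 = src[y2[:, None], x2[None, :]]
    r1 = (1 - u) * q11 + u * q21  # interpolate along X on row y1
    r2 = (1 - u) * q12 + u * q22  # interpolate along X on row y2
    out = (1 - v) * r1 + v * r2   # interpolate along Y
    return np.clip(np.rint(out), 0, 255).astype(np.uint8)


if __name__ == "__main__":
    demo = (np.arange(16, dtype=np.uint8) * 10).reshape(4, 4, 1)
    print(bilinear_vectorized(demo, 1.5)[:, :, 0])
```

For everyday use, OpenCV's `cv2.resize(img, None, fx=1.5, fy=1.5, interpolation=cv2.INTER_LINEAR)` also performs center-aligned bilinear interpolation. Expect close but not necessarily bit-identical output: OpenCV uses fixed-point arithmetic for 8-bit images and may round the output size differently from `int(AW * scale)`.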
