
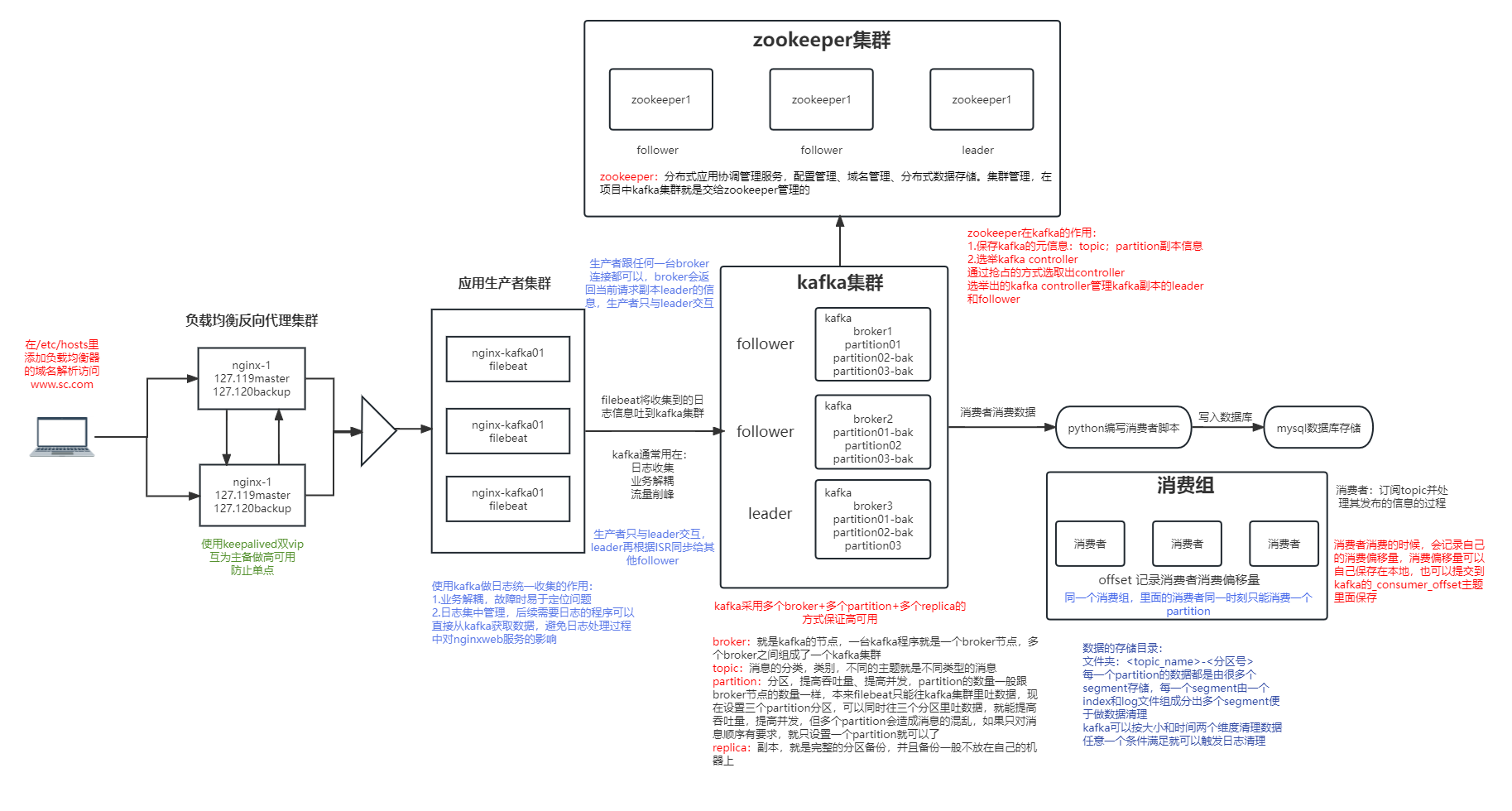

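Project background

This project simulates how an enterprise handles log data at scale: logs are collected, analyzed, consumed, and stored in a database, and the design leaves room for adding further consumers later.

Lab environment

CentOS 7 (5 machines, 1 core / 2 GB each), Nginx (1.14.1), keepalived (2.1.5), Filebeat (7.17.5), Kafka (kafka_2.12-2.8.1), ZooKeeper (3.6.3), PyCharm 2020.3, MySQL (5.7.34)

Architecture diagram

Cluster environment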

| Hostname | IP address | Services |
| --- | --- | --- |
| nginx-1 | 192.168.127.145 | nginx + keepalived |
| nginx-2 | 192.168.127.146 | nginx + keepalived |
| nginx-kafka01 | 192.168.127.142 | kafka + zookeeper + filebeat |
| nginx-kafka02 | 192.168.127.143 | kafka + zookeeper + filebeat |
| nginx-kafka03 | 192.168.127.144 | kafka + zookeeper + filebeat |

1. Environment preparation

1. Prepare five Linux machines (1 core, 2 GB RAM each)

2. Configure a static IP address on each machine

vim /etc/sysconfig/network-scripts/ifcfg-ens33

3. Configure the DNS server each machine uses (114.114.114.114)

vim /etc/resolv.conf

4. Set the hostname

[root@nginx-kafka01 /]# cat /etc/hostname

nginx-kafka01

5. Add name resolution entries on every machine

[root@nginx-kafka01 /]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.127.142 nginx-kafka01

192.168.127.143 nginx-kafka02

192.168.127.144 nginx-kafka03

6. Install basic tools

yum install wget lsof vim -y

7. Enable the chronyd service and disable the firewall and SELinux (the SELinux change takes effect after a reboot)

[root@nginx-kafka01 ~]# yum -y install chrony

[root@nginx-kafka01 ~]# vim /etc/selinux/config

SELINUX=disabled

[root@nginx-kafka01 ~]# systemctl enable chronyd

[root@nginx-kafka01 ~]# systemctl stop firewalld

[root@nginx-kafka01 ~]# systemctl disable firewalld

2. Build the nginx cluster

1. Install nginx

yum install epel-release -y

yum install nginx -y

2. Start nginx and enable it at boot

systemctl start nginx     # start nginx

systemctl enable nginx    # start automatically at boot

3. Edit the configuration file

Main configuration file: nginx.conf

    ...                          # global block
    events {                     # events block
        ...
    }
    http {                       # http block
        ...                      # http global section
        server {                 # server block
            ...                  # server global section
            location [PATTERN] { # location block
                ...
            }
            location [PATTERN] {
                ...
            }
        }
        server {
            ...
        }
        ...                      # http global section
    }

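1. Global block: directives that affect nginx as a whole. Typical entries are the user and group that run the server, the path of the PID file, the log path, includes of other configuration files, and the number of worker processes.

2. events block: settings that affect the network connections between the nginx server and its clients, such as the maximum number of connections per worker process, which event-driven model handles connections, whether a worker accepts several new connections at once (multi_accept), and whether accepting connections is serialized across workers (accept_mutex).

3. http block: can contain multiple server blocks and holds the configuration for proxying, caching, log definitions, and most other features and third-party modules. Examples are file includes, MIME type definitions, custom log formats, whether to use sendfile, connection timeouts, and the maximum number of requests per connection.

4. server block: parameters of a virtual host. One http block can contain several server blocks.

5. location block: request routing and how individual pages are handled.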

Edit the configuration: in the http global section of nginx.conf, add include /etc/nginx/conf.d/*.conf;

Create the conf.d directory under /etc/nginx if it does not exist, and add the sc.conf configuration file to it.

vim /etc/nginx/conf.d/sc.conf

    server {
        listen 80 default_server;
        server_name www.sc.com;
        root /usr/share/nginx/html;
        access_log /var/log/nginx/sc/access.log main;
        location / {
        }
    }

Create the log directory

[root@nginx-kafka01 html]# mkdir /var/log/nginx/sc/

4. nginx reverse proxy configuration

This configuration belongs on the two load balancers (nginx-1 and nginx-2); the upstream servers are the three backend nodes.

    upstream nginx_backend {
        server 192.168.127.142:80;   # nginx-kafka01
        server 192.168.127.143:80;   # nginx-kafka02
        server 192.168.127.144:80;   # nginx-kafka03
    }

    server {
        listen 80 default_server;
        root /usr/share/nginx/html;
        location / {
            proxy_pass http://nginx_backend;
        }
    }
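With no balancing method named in the upstream block, nginx hands requests to the listed servers in rotation (round-robin). The sketch below only illustrates that rotation; it is a simplified model that ignores weights, failed backends, and connection reuse, and the function names are made up for this example.

```python
from itertools import cycle

# Backend addresses copied from the upstream block above.
BACKENDS = [
    "192.168.127.142:80",  # nginx-kafka01
    "192.168.127.143:80",  # nginx-kafka02
    "192.168.127.144:80",  # nginx-kafka03
]


def round_robin(backends):
    """Return an endless iterator that walks the backends in order."""
    return cycle(backends)


def dispatch(n_requests, backends=BACKENDS):
    """Return the backend chosen for each of n_requests consecutive requests."""
    picker = round_robin(backends)
    return [next(picker) for _ in range(n_requests)]


if __name__ == "__main__":
    for i, backend in enumerate(dispatch(6), start=1):
        print(f"request {i} -> {backend}")
```

Six consecutive requests visit each backend twice, in the order the servers are listed.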

5. Check that the configuration file syntax is correct

[root@nginx-kafka01 html]# nginx -t

nginx: the configuration file /etc/nginx/nginx.conf syntax is ok

nginx: configuration file /etc/nginx/nginx.conf test is successful

Reload nginx:

nginx -s reload

3. Build keepalived dual-VIP high availability

1. Install keepalived

yum install keepalived -y

2. Configure keepalived

Configure nginx-1 as MASTER for VIP 192.168.127.119 and as BACKUP for VIP 192.168.127.120.

[root@nginx-1 ~]# vim /etc/keepalived/keepalived.conf

    vrrp_instance VI_1 {
        state MASTER
        interface ens33
        # Virtual router ID: distinguishes keepalived clusters on the same LAN.
        # Every host in one keepalived cluster uses the same ID.
        virtual_router_id 51
        priority 120
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.127.119
        }
    }

    vrrp_instance VI_2 {
        state BACKUP
        interface ens33
        virtual_router_id 52
        priority 100
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.127.120
        }
    }

Configure nginx-2 as BACKUP for VIP 192.168.127.119 and as MASTER for VIP 192.168.127.120. The priorities mirror those on nginx-1, so each node has the higher priority only for the VIP it masters.

[root@nginx-2 ~]# vim /etc/keepalived/keepalived.conf

    vrrp_instance VI_1 {
        state BACKUP
        interface ens33
        # Virtual router ID: distinguishes keepalived clusters on the same LAN.
        # Every host in one keepalived cluster uses the same ID.
        virtual_router_id 51
        priority 100
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.127.119
        }
    }

    vrrp_instance VI_2 {
        state MASTER
        interface ens33
        virtual_router_id 52
        priority 120
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.127.120
        }
    }
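To see what the dual-VIP layout buys, the following sketch models which node ends up holding each VIP. It assumes each node has priority 120 for the instance it masters and 100 for the one it backs up, and it reduces VRRP to "the highest-priority node that is still alive owns the VIP"; advertisement timers, preemption settings, and authentication are left out. The data structure and function name are hypothetical.

```python
# One entry per vrrp_instance: the VIP and the assumed priority on each node.
INSTANCES = {
    "VI_1": {"vip": "192.168.127.119", "priority": {"nginx-1": 120, "nginx-2": 100}},
    "VI_2": {"vip": "192.168.127.120", "priority": {"nginx-1": 100, "nginx-2": 120}},
}


def vip_owners(alive, instances=INSTANCES):
    """Map each VIP to the alive node with the highest priority (None if no node is up)."""
    owners = {}
    for inst in instances.values():
        candidates = {node: prio for node, prio in inst["priority"].items() if node in alive}
        owners[inst["vip"]] = max(candidates, key=candidates.get) if candidates else None
    return owners


if __name__ == "__main__":
    print("both up:     ", vip_owners({"nginx-1", "nginx-2"}))
    print("nginx-1 down:", vip_owners({"nginx-2"}))
    print("nginx-2 down:", vip_owners({"nginx-1"}))
```

With both nodes up, each one serves a single VIP, so both load balancers carry traffic; when either node fails, the survivor takes over both addresses.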

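3. Restart the keepalived service

systemctl restart keepalived

4. Build the Kafka and ZooKeeper cluster

The steps below use nginx-kafka01 as the example.

1. Install Java and Kafka

Install Java:

[root@nginx-kafka01 opt]# yum install java wget -y

Install Kafka:

[root@nginx-kafka01 opt]# wget http://mirrors.aliyun.com/apache/kafka/2.8.1/kafka_2.12-2.8.1.tgz

[root@nginx-kafka01 opt]# tar xf kafka_2.12-2.8.1.tgz

If the mirror no longer carries this release, download whichever 2.8.x archive it offers and adjust the file and directory names in the following steps to match.

Install ZooKeeper:

[root@nginx-kafka01 opt]# wget https://mirrors.bfsu.edu.cn/apache/zookeeper/zookeeper-3.6.3/apache-zookeeper-3.6.3-bin.tar.gz

[root@nginx-kafka01 opt]# tar xf apache-zookeeper-3.6.3-bin.tar.gz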

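2. Configure Kafka

Edit /opt/kafka_2.12-2.8.1/config/server.properties. broker.id must be unique on each broker (1, 2, and 3 on the three nodes, matching the ids that ZooKeeper lists later), and listeners uses the local hostname.

broker.id=1

listeners=PLAINTEXT://nginx-kafka01:9092

zookeeper.connect=192.168.127.142:2181,192.168.127.143:2181,192.168.127.144:2181

3. Configure ZooKeeper

Change into /opt/apache-zookeeper-3.6.3-bin/conf

cp zoo_sample.cfg zoo.cfg

Edit zoo.cfg and add the following three lines:

server.1=192.168.127.142:3888:4888

server.2=192.168.127.143:3888:4888

server.3=192.168.127.144:3888:4888

3888 and 4888 are both ports. The first is used by followers to connect to the leader and synchronize data; the second is used for leader election.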

Create the /tmp/zookeeper directory and add a myid file to it. The file contains the ZooKeeper id assigned to this machine.

For example, on 192.168.127.142:

echo 1 > /tmp/zookeeper/myid

On 192.168.127.143:

echo 2 > /tmp/zookeeper/myid

On 192.168.127.144:

echo 3 > /tmp/zookeeper/myid

Start ZooKeeper

[root@nginx-kafka01 apache-zookeeper-3.6.3-bin]# bin/zkServer.sh start

When bringing the services up, always start ZooKeeper first and Kafka second.

When shutting down, stop Kafka first and ZooKeeper second.
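One way to respect the start order in a script is to wait until ZooKeeper's client port accepts TCP connections before launching Kafka. The helper below is a minimal sketch using only the standard library; the name wait_for_port and its default timings are assumptions for illustration, and an open port only shows that the process is listening, not that the ensemble has elected a leader.

```python
import socket
import time


def wait_for_port(host, port, timeout=30.0, interval=0.5):
    """Return True once a TCP connection to host:port succeeds, False after timeout seconds."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # A successful connect means something is listening on the port.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)


# Example call (2181 is the ZooKeeper client port used in this walkthrough):
#     if wait_for_port("192.168.127.142", 2181):
#         ...start Kafka with bin/kafka-server-start.sh...
```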

Check the status on all three machines

[root@nginx-kafka01 apache-zookeeper-3.6.3-bin]# bin/zkServer.sh status

/usr/bin/java

ZooKeeper JMX enabled by default

Using config: /opt/apache-zookeeper-3.6.3-bin/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost. Client SSL: false.

Mode: follower

[root@nginx-kafka02 apache-zookeeper-3.6.3-bin]# bin/zkServer.sh status

/usr/bin/java

ZooKeeper JMX enabled by default

Using config: /opt/apache-zookeeper-3.6.3-bin/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost. Client SSL: false.

Mode: follower

[root@nginx-kafka03 apache-zookeeper-3.6.3-bin]# bin/zkServer.sh status

/usr/bin/java

ZooKeeper JMX enabled by default

Using config: /opt/apache-zookeeper-3.6.3-bin/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost. Client SSL: false.

Mode: leader
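The status output shows one leader and two followers. A ZooKeeper ensemble keeps serving requests only while a strict majority of its servers is up, which is why three nodes tolerate one failure. The sketch below parses server.N lines of the form used in zoo.cfg here and computes that majority; the function names are hypothetical, and the parser ignores optional suffixes such as observer roles or client ports.

```python
def parse_servers(cfg_text):
    """Extract {server_id: (host, peer_port, election_port)} from zoo.cfg text."""
    servers = {}
    for raw in cfg_text.splitlines():
        line = raw.strip()
        if not line.startswith("server."):
            continue
        key, value = line.split("=", 1)
        host, peer_port, election_port = value.strip().split(":")[:3]
        server_id = int(key.strip().split(".", 1)[1])
        servers[server_id] = (host, int(peer_port), int(election_port))
    return servers


def quorum_size(n_servers):
    """Smallest number of servers that forms a strict majority."""
    return n_servers // 2 + 1


def has_quorum(n_servers, n_alive):
    """True if n_alive servers out of n_servers can still form a majority."""
    return n_alive >= quorum_size(n_servers)


# The three lines added to zoo.cfg earlier in this walkthrough.
ZOO_CFG = """\
server.1=192.168.127.142:3888:4888
server.2=192.168.127.143:3888:4888
server.3=192.168.127.144:3888:4888
"""

if __name__ == "__main__":
    servers = parse_servers(ZOO_CFG)
    total = len(servers)
    print(f"{total} servers, quorum = {quorum_size(total)}")
    for alive in range(total, -1, -1):
        print(f"{alive} alive -> quorum: {has_quorum(total, alive)}")
```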

Start Kafka

bin/kafka-server-start.sh -daemon config/server.properties

Using ZooKeeper

bin/zkCli.sh

[zk: localhost:2181(CONNECTED) 1] ls /

[admin, brokers, cluster, config, consumers, controller, controller_epoch, feature, isr_change_notification, latest_producer_id_block, log_dir_event_notification, sc, zookeeper]

List the broker ids

[zk: localhost:2181(CONNECTED) 2] ls /brokers/ids

[1, 2, 3]

Create a znode, then set and read its value

[zk: localhost:2181(CONNECTED) 3] create /sc/yy

Created /sc/yy

[zk: localhost:2181(CONNECTED) 4] ls /sc

[page, xx, yy]

[zk: localhost:2181(CONNECTED) 5] set /sc/yy 90

[zk: localhost:2181(CONNECTED) 6] get /sc/yy

90

5. Create a topic to test Kafka

1. Create the topic

[root@nginx-kafka03 kafka_2.12-2.8.1]# bin/kafka-topics.sh --create --zookeeper 192.168.127.142:2181 --replication-factor 1 --partitions 1 --topic sc

2. List the topics

[root@nginx-kafka03 kafka_2.12-2.8.1]# bin/kafka-topics.sh --list --zookeeper 192.168.127.142:2181

__consumer_offsets

sc

3. Start a producer

[root@nginx-kafka03 kafka_2.12-2.8.1]# bin/kafka-console-producer.sh --broker-list 192.168.127.142:9092 --topic sc

>haha

>hello

>

>zhanghaoyang

>xixi

>didi

>woer

>niuya

>

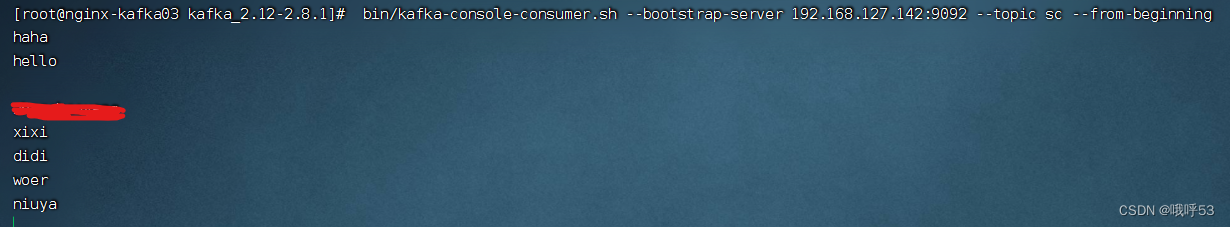

4. Start a consumer

[root@nginx-kafka03 kafka_2.12-2.8.1]# bin/kafka-console-consumer.sh --bootstrap-server 192.168.127.142:9092 --topic sc --from-beginning

5. Result of successful consumption

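6. Deploy Filebeat

Filebeat is a lightweight log shipper written in Go and a member of the Elastic Stack. It is essentially an agent: installed on each node, it reads logs from the configured locations and forwards them to the configured destination.

Filebeat consists of two main components: harvesters and prospectors (called inputs in recent Filebeat versions).

A harvester reads the content of a single file and sends it to the output. One harvester is started per file. The harvester is responsible for opening and closing the file, which means the file descriptor stays open while the harvester is running. If a file is deleted or renamed while it is being read, Filebeat keeps reading it.

A prospector manages the harvesters and finds all sources to read from. If the input type is log, the prospector finds every file that matches the configured paths and starts a harvester for each one. Each prospector runs in its own goroutine.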
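The division of labor between the two components can be sketched in a few lines of Python. This is an illustration of the idea only, not Filebeat's implementation: the class and method names are invented, the sketch reopens the file on every poll instead of holding the descriptor open, and it does not handle rotation or persist offsets across restarts.

```python
import glob


class Harvester:
    """Reads new lines from one file, remembering the byte offset between polls."""

    def __init__(self, path):
        self.path = path
        self.offset = 0

    def poll(self):
        """Return the complete lines appended since the previous poll."""
        lines = []
        with open(self.path, "rb") as fh:
            fh.seek(self.offset)
            for raw in fh:
                if not raw.endswith(b"\n"):
                    break  # partial line: wait until the writer finishes it
                lines.append(raw.decode("utf-8", errors="replace").rstrip("\r\n"))
                self.offset += len(raw)
        return lines


class Prospector:
    """Finds files matching the configured globs and keeps one Harvester per file."""

    def __init__(self, patterns):
        self.patterns = patterns
        self.harvesters = {}

    def scan(self):
        """Start a harvester for every matching file that does not have one yet."""
        for pattern in self.patterns:
            for path in glob.glob(pattern):
                if path not in self.harvesters:
                    self.harvesters[path] = Harvester(path)

    def collect(self):
        """Scan for new files, then return (path, line) pairs for all new lines."""
        self.scan()
        events = []
        for path, harvester in sorted(self.harvesters.items()):
            events.extend((path, line) for line in harvester.poll())
        return events
```

Calling collect() repeatedly on a Prospector built with a glob such as /var/log/nginx/sc/*.log returns only the lines written since the previous call, which is the behavior that lets a shipper follow a growing log file.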

1. Install

rpm --import https://packages.elastic.co/GPG-KEY-elasticsearch

Create the repository file with vim /etc/yum.repos.d/fb.repo:

    [elastic-7.x]
    name=Elastic repository for 7.x packages
    baseurl=https://artifacts.elastic.co/packages/7.x/yum
    gpgcheck=1
    gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch
    enabled=1
    autorefresh=1
    type=rpm-md

2. Install Filebeat with yum

yum install filebeat -y

rpm -qa | grep filebeat    # check whether filebeat is installed; rpm -qa lists every installed package

rpm -ql filebeat    # show where filebeat was installed and which files belong to it

Enable it at boot

systemctl enable filebeat

3. Edit the configuration file

First back up the Filebeat configuration file filebeat.yml as filebeat.yml.bak:

[root@nginx-kafka01 filebeat]# cp filebeat.yml filebeat.yml.bak

Then empty filebeat.yml and replace its contents with the following:

    filebeat.inputs:
    - type: log
      # Change to true to enable this input configuration.
      enabled: true
      # Paths that should be crawled and fetched. Glob based paths.
      # This must match the access_log path set in sc.conf.
      paths:
        - /var/log/nginx/sc/access.log
    #------------------------------ kafka ------------------------------
    output.kafka:
      hosts: ["192.168.127.142:9092","192.168.127.143:9092","192.168.127.144:9092"]
      topic: nginxlog
      keep_alive: 10s

Enable the service at boot and check that Filebeat is running

#设置开机自启

systemctl enable filebeat

#启动服务:

systemctl start filebeat

# 查看filebeat是否启动

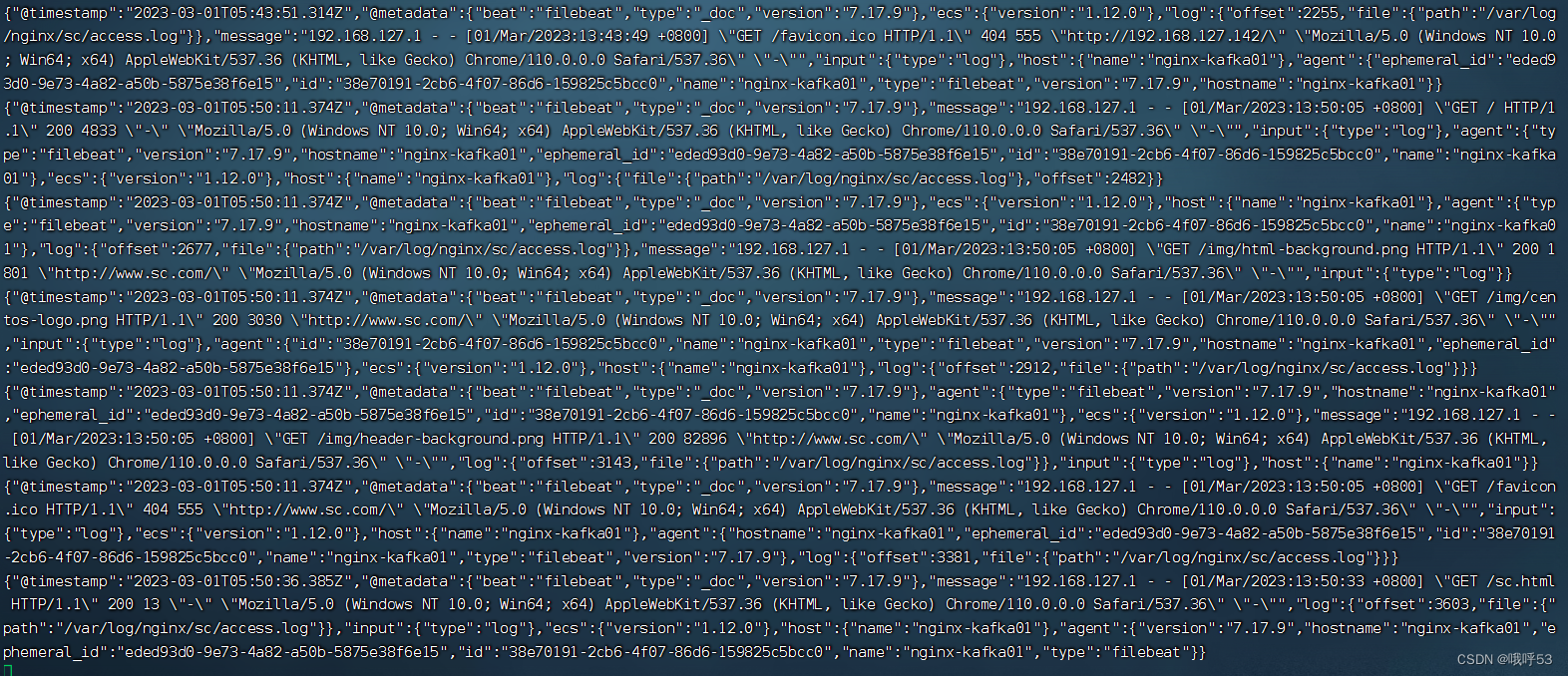

ps -ef |grep filebeat可以通过filebeat来收集nginx的日志

4. Test

Create the nginxlog topic:

bin/kafka-topics.sh --create --zookeeper 192.168.127.142:2181 --replication-factor 3 --partitions 1 --topic nginxlog

Edit /etc/hosts (vim /etc/hosts) and add name resolution for the load balancers:

192.168.127.145 www.sc.com

192.168.127.146 www.sc.com

Request the domain:

curl www.sc.com

Start a consumer to check whether the log lines have been produced to Kafka:

bin/kafka-console-consumer.sh --bootstrap-server 192.168.127.142:9092 --topic nginxlog --from-beginning
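Each record that Filebeat writes to the topic is a JSON document whose message field carries the raw log line. The sketch below shows how one such record can be reduced to client IP, timestamp, and bytes sent. The sample event is hypothetical (real events carry more metadata fields), and the field positions assume nginx's default main log format with month names in English.

```python
import json
import time


def parse_event(raw_bytes):
    """Extract (ip, timestamp, bytes_sent) from one Filebeat JSON event."""
    event = json.loads(raw_bytes.decode("utf-8"))
    fields = event["message"].split()
    ip = fields[0]
    # fields[3] looks like "[10/Oct/2023:13:55:36"; drop the leading bracket.
    parsed = time.strptime(fields[3].lstrip("["), "%d/%b/%Y:%H:%M:%S")
    timestamp = time.strftime("%Y-%m-%d %H:%M:%S", parsed)
    bytes_sent = int(fields[9])
    return ip, timestamp, bytes_sent


# Hypothetical event built for this example.
SAMPLE = json.dumps({
    "@timestamp": "2023-10-10T05:55:36.000Z",
    "message": '192.168.127.1 - - [10/Oct/2023:13:55:36 +0800] '
               '"GET / HTTP/1.1" 200 4833 "-" "curl/7.29.0" "-"',
}).encode("utf-8")

if __name__ == "__main__":
    print(parse_event(SAMPLE))
```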

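7. Write a Python script to load the data into the database

Create the database table. prov and isp hold the region and ISP names returned by the IP lookup, so they are string columns.

    create table nginxlog (
        id int primary key auto_increment,
        dt datetime not null,
        prov varchar(64),
        isp varchar(64),
        bt float
    ) CHARSET=utf8;

The Python script:

    import json
    import pymysql
    import requests
    import time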

    taobao_url = "https://ip.taobao.com/outGetIpInfo?accessKey=alibaba-inc&ip="

    # Look up the province and ISP of an IP address through the Taobao IP API
    def resolv_ip(ip):
        response = requests.get(taobao_url + ip, timeout=5)
        if response.status_code == 200:
            tmp_dict = json.loads(response.text)
            prov = tmp_dict["data"]["region"]
            isp = tmp_dict["data"]["isp"]
            return prov, isp
        return None, None

    # Convert the timestamp read from the log into the format stored in the database
    def trans_time(dt):
        # Parse the string into a time struct
        timeArray = time.strptime(dt, "%d/%b/%Y:%H:%M:%S")
        # timeStamp = int(time.mktime(timeArray))
        # Format the time struct back into a string
        new_time = time.strftime("%Y-%m-%d %H:%M:%S", timeArray)
        return new_time

    # Read from Kafka and extract the fields we need: IP, time, and bandwidth
    from pykafka import KafkaClient

    client = KafkaClient(hosts="192.168.127.142:9092,192.168.127.143:9092,192.168.127.144:9092")
    topic = client.topics['nginxlog']
    balanced_consumer = topic.get_balanced_consumer(
        consumer_group='testgroup',
        auto_commit_enable=True,
        zookeeper_connect='nginx-kafka01:2181,nginx-kafka02:2181,nginx-kafka03:2181'
    )
    # consumer = topic.get_simple_consumer()

    db = pymysql.connect(host="192.168.127.139", user="sctl", password="123456",
                         port=3306, database="consumers", charset="utf8")
    cursor = db.cursor()

    for message in balanced_consumer:
        if message is not None:
            try:
                line = json.loads(message.value.decode("utf-8"))
                log = line["message"]
                tmp_lst = log.split()
                ip = tmp_lst[0]
                dt = tmp_lst[3].replace("[", "")
                bt = tmp_lst[9]
                dt = trans_time(dt)
                prov, isp = resolv_ip(ip)
                if prov and isp:
                    print(prov, isp, dt, bt)
                    try:
                        # Let the driver quote the values instead of formatting the SQL by hand
                        cursor.execute(
                            "insert into nginxlog(dt,prov,isp,bt) values(%s,%s,%s,%s)",
                            (dt, prov, isp, bt),
                        )
                        db.commit()
                        print("Saved")
                    except Exception as err:
                        print("Save failed", err)
                        db.rollback()
            except Exception as err:
                # Skip records that cannot be parsed, but report why
                print("Skipping record", err)

    db.close()

Query the database
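Once rows have been saved, a simple aggregate is a quick way to confirm that the pipeline works end to end. The sketch below totals requests and bytes per province. So that it runs anywhere, it uses Python's built-in sqlite3 with an in-memory table shaped like nginxlog as a stand-in for the MySQL server; the rows are hypothetical, prov and isp are assumed to be stored as text, and the function names are invented. The SELECT statement itself is plain SQL and is expected to run unchanged in the mysql client.

```python
import sqlite3


def bandwidth_by_province(conn):
    """Return [(prov, request_count, total_bytes)] ordered by total_bytes, largest first."""
    cur = conn.execute(
        "SELECT prov, COUNT(*), SUM(bt) FROM nginxlog "
        "GROUP BY prov ORDER BY SUM(bt) DESC"
    )
    return cur.fetchall()


def demo_connection():
    """Build an in-memory table shaped like nginxlog and load hypothetical rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE nginxlog ("
        "id INTEGER PRIMARY KEY AUTOINCREMENT, dt TEXT NOT NULL, "
        "prov TEXT, isp TEXT, bt REAL)"
    )
    rows = [
        ("2023-10-10 13:55:36", "Hunan", "Telecom", 4833.0),
        ("2023-10-10 13:55:37", "Hunan", "Unicom", 612.0),
        ("2023-10-10 13:55:38", "Guangdong", "Mobile", 1024.0),
    ]
    conn.executemany(
        "INSERT INTO nginxlog(dt, prov, isp, bt) VALUES (?, ?, ?, ?)", rows
    )
    conn.commit()
    return conn


if __name__ == "__main__":
    for prov, count, total in bandwidth_by_province(demo_connection()):
        print(prov, count, total)
```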
