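Machine translation is the automatic translation of a piece of text from one language into another. Because the same text does not necessarily have the same length in different languages, machine translation is a natural example for showing how the encoder-decoder architecture and the attention mechanism are applied.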

10.12.1 Reading and Preprocessing the Data

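We first define some special tokens. The "<pad>" (padding) token is appended to shorter sequences until all sequences have the same length, while "<bos>" and "<eos>" mark the beginning and the end of a sequence, respectively.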


```python
!tar -xf d2lzh_pytorch.tar
import collections
import os
import io
import math
import torch
from torch import nn
import torch.nn.functional as F
import torchtext.vocab as Vocab
import torch.utils.data as Data
import sys
# sys.path.append("..")
import d2lzh_pytorch as d2l

PAD, BOS, EOS = '<pad>', '<bos>', '<eos>'
os.environ["CUDA_VISIBLE_DEVICES"] = "0"
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

print(torch.__version__, device)
```

```
1.5.0 cpu
```

Next, we define two helper functions to preprocess the data we read later.

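```python
# Record every token of a sequence in all_tokens (used later to build the vocabulary),
# append EOS and then PAD until the sequence length reaches max_seq_len,
# and store the sequence in all_seqs
def process_one_seq(seq_tokens, all_tokens, all_seqs, max_seq_len):
    all_tokens.extend(seq_tokens)
    seq_tokens += [EOS] + [PAD] * (max_seq_len - len(seq_tokens) - 1)
    all_seqs.append(seq_tokens)

# Build the vocabulary from all tokens, then convert the tokens of every
# sequence into indices and stack them into a Tensor
def build_data(all_tokens, all_seqs):
    # Legacy torchtext API (as used with PyTorch 1.5); newer torchtext
    # releases changed the Vocab constructor
    vocab = Vocab.Vocab(collections.Counter(all_tokens),
                        specials=[PAD, BOS, EOS])
    indices = [[vocab.stoi[w] for w in seq] for seq in all_seqs]
    return vocab, torch.tensor(indices)
```

For ease of demonstration, we use a very small French-English dataset. Each line of the dataset contains a French sentence and its English translation, separated by '\t'. When reading the data, we append "<eos>" to the end of each sentence and, where needed, add "<pad>" tokens so that every sequence has length max_seq_len. We build separate vocabularies for French and English words; the French and English word indices are independent of each other.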
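A single line of the file can be traced through the same steps in plain Python. This is an illustrative sketch: `parse_line` and `pad_tokens` are hypothetical helpers that mirror the length filter in read_data and the padding in process_one_seq, and the example line is made up in the tab-separated format described above.

```python
PAD, EOS = '<pad>', '<eos>'

def pad_tokens(tokens, max_seq_len):
    """Append EOS, then PAD up to max_seq_len (mirrors process_one_seq)."""
    return tokens + [EOS] + [PAD] * (max_seq_len - len(tokens) - 1)

def parse_line(line, max_seq_len):
    """Split one French<TAB>English line into padded token lists.

    Returns None when either side would be longer than max_seq_len
    after appending EOS (the same filter read_data applies).
    """
    in_seq, out_seq = line.rstrip().split('\t')
    in_tokens, out_tokens = in_seq.split(' '), out_seq.split(' ')
    if max(len(in_tokens), len(out_tokens)) > max_seq_len - 1:
        return None
    return pad_tokens(in_tokens, max_seq_len), pad_tokens(out_tokens, max_seq_len)

print(parse_line('ils regardent .\tthey are watching .\n', 7))
```

Both sides end up with exactly max_seq_len tokens, which is what lets read_data stack all samples into one tensor.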

```python
def read_data(max_seq_len):
    # "in" and "out" are short for input and output
    in_tokens, out_tokens, in_seqs, out_seqs = [], [], [], []
    with io.open('fr-en-small.txt') as f:
        lines = f.readlines()
    for line in lines:
        in_seq, out_seq = line.rstrip().split('\t')
        in_seq_tokens, out_seq_tokens = in_seq.split(' '), out_seq.split(' ')
        if max(len(in_seq_tokens), len(out_seq_tokens)) > max_seq_len - 1:
            continue  # skip the sample if it would exceed max_seq_len after adding EOS
        process_one_seq(in_seq_tokens, in_tokens, in_seqs, max_seq_len)
        process_one_seq(out_seq_tokens, out_tokens, out_seqs, max_seq_len)
    in_vocab, in_data = build_data(in_tokens, in_seqs)
    out_vocab, out_data = build_data(out_tokens, out_seqs)
    return in_vocab, out_vocab, Data.TensorDataset(in_data, out_data)
```

We set the maximum sequence length to 7 and look at the first sample read in. It contains a sequence of French word indices and a sequence of English word indices.

```python
max_seq_len = 7
in_vocab, out_vocab, dataset = read_data(max_seq_len)
dataset[0]
```

```
(tensor([ 5, 4, 45, 3, 2, 0, 0]), tensor([ 8, 4, 27, 3, 2, 0, 0]))
```

10.12.2 Encoder-Decoder with Attention

We will use an encoder-decoder with attention to translate short French sentences into English. Below we describe the implementation of the model.

10.12.2.1 Encoder

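In the encoder, we pass the word indices of the input language through a word embedding layer to obtain word representations, and then feed them into a multilayer gated recurrent unit (GRU). As mentioned in Section 6.5 (Concise Implementation of Recurrent Neural Networks), after the forward computation a PyTorch nn.GRU instance returns both the output and the multilayer hidden state at the final time step. The output here refers to the hidden states of the last hidden layer at every time step; it does not involve any output-layer computation. The attention mechanism uses these outputs as its keys and values.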

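```python
class Encoder(nn.Module):
    def __init__(self, vocab_size, embed_size, num_hiddens, num_layers,
                 drop_prob=0, **kwargs):
        super(Encoder, self).__init__(**kwargs)
        self.embedding = nn.Embedding(vocab_size, embed_size)
        self.rnn = nn.GRU(embed_size, num_hiddens, num_layers, dropout=drop_prob)

    def forward(self, inputs, state):
        # Input shape: (batch size, number of time steps). Swap the batch and
        # time-step dimensions of the embedding output
        embedding = self.embedding(inputs.long()).permute(1, 0, 2)  # (seq_len, batch, input_size)
        return self.rnn(embedding, state)

    def begin_state(self):
        return None
```

Below we create a minibatch of input sequences with batch size 4 and 7 time steps. Let the GRU have 2 hidden layers and 16 hidden units. After the forward computation, the encoder returns an output of shape (number of time steps, batch size, number of hidden units). The multilayer hidden state of the GRU at the final time step has shape (number of hidden layers, batch size, number of hidden units). For a GRU, state is a single tensor, the hidden state; for an LSTM, state is a tuple of two elements, the hidden state and the memory cell.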

```python
encoder = Encoder(vocab_size=10, embed_size=8, num_hiddens=16, num_layers=2)
output, state = encoder(torch.zeros((4, 7)), encoder.begin_state())
output.shape, state.shape  # a GRU's state is h, while an LSTM's is a tuple (h, c)
```

```
(torch.Size([7, 4, 16]), torch.Size([2, 4, 16]))
```

10.12.2.2 Attention Mechanism

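We implement the function $a$ defined in Section 10.11 (Attention Mechanism): the inputs are concatenated and then transformed by a multilayer perceptron with a single hidden layer. The input to the hidden layer is the concatenation of the decoder hidden state with the encoder hidden state at each time step, one pair per time step, and tanh is used as the activation function. The output layer has a single output. Neither Linear instance uses a bias. The length of the vector $\boldsymbol{v}$ in the definition of $a$ is a hyperparameter, namely attention_size.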
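Written as equations, the computation carried out by attention_model and attention_forward can be sketched as follows. The notation is assumed here rather than taken from this section: $\boldsymbol{s}_{t'-1}$ is the decoder hidden state at the previous time step, $\boldsymbol{h}_t$ is the encoder hidden state at time step $t$ out of $T$, $\boldsymbol{W}$ is the weight of the first Linear layer (shape attention_size by twice the number of hidden units), $\boldsymbol{v}$ is the weight of the second Linear layer, and $[\cdot;\cdot]$ is concatenation in the same order as the torch.cat call in attention_forward:

$$
\begin{aligned}
e_{tt'} &= a(\boldsymbol{s}_{t'-1}, \boldsymbol{h}_t) = \boldsymbol{v}^\top \tanh\left(\boldsymbol{W} [\boldsymbol{h}_t; \boldsymbol{s}_{t'-1}]\right),\\
\alpha_{tt'} &= \frac{\exp(e_{tt'})}{\sum_{k=1}^{T} \exp(e_{kt'})},\\
\boldsymbol{c}_{t'} &= \sum_{t=1}^{T} \alpha_{tt'} \boldsymbol{h}_t.
\end{aligned}
$$

Because neither Linear layer has a bias, splitting $\boldsymbol{W}$ into two column blocks gives $\boldsymbol{W}[\boldsymbol{h}_t; \boldsymbol{s}_{t'-1}] = \boldsymbol{W}_h \boldsymbol{h}_t + \boldsymbol{W}_s \boldsymbol{s}_{t'-1}$, so the concatenated form is the same additive scoring function written with a single matrix.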

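```python
def attention_model(input_size, attention_size):
    model = nn.Sequential(nn.Linear(input_size, attention_size, bias=False),
                          nn.Tanh(),
                          nn.Linear(attention_size, 1, bias=False))
    return model
```

The inputs to the attention mechanism are the query, the keys, and the values. Assume the encoder and the decoder have the same number of hidden units. Here the query is the decoder hidden state at the previous time step, with shape (batch size, number of hidden units); the keys and the values are both the encoder hidden states at all time steps, with shape (number of time steps, batch size, number of hidden units). The attention mechanism returns the context variable for the current time step, with shape (batch size, number of hidden units).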

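```python
def attention_forward(model, enc_states, dec_state):
    """
    enc_states: (number of time steps, batch size, number of hidden units)
    dec_state: (batch size, number of hidden units)
    """
    # Broadcast the decoder hidden state to the shape of the encoder hidden
    # states, then concatenate them
    dec_states = dec_state.unsqueeze(dim=0).expand_as(enc_states)
    enc_and_dec_states = torch.cat((enc_states, dec_states), dim=2)
    e = model(enc_and_dec_states)  # shape: (number of time steps, batch size, 1)
    alpha = F.softmax(e, dim=0)  # softmax over the time-step dimension
    return (alpha * enc_states).sum(dim=0)  # return the context variable
```

In the example below, the encoder has 10 time steps, the batch size is 4, and both the encoder and the decoder have 8 hidden units. The attention mechanism returns a minibatch of context vectors, each as long as the number of encoder hidden units, so the output shape is (4, 8).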

```python
seq_len, batch_size, num_hiddens = 10, 4, 8
model = attention_model(2*num_hiddens, 10)
enc_states = torch.zeros((seq_len, batch_size, num_hiddens))
dec_state = torch.zeros((batch_size, num_hiddens))
attention_forward(model, enc_states, dec_state).shape
```

```
torch.Size([4, 8])
```

10.12.2.3 Decoder with Attention

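We directly use the encoder's hidden state at the final time step as the decoder's initial hidden state. This requires the encoder and decoder RNNs to have the same number of hidden layers and the same number of hidden units.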

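In the decoder's forward computation, we first use the attention mechanism just introduced to compute the context vector for the current time step. Since the decoder's input consists of word indices of the output language, we pass it through a word embedding layer to obtain its representation and then concatenate that with the context vector along the feature dimension. From this concatenation and the hidden state of the previous time step, the GRU computes the output and the hidden state of the current time step. Finally, a fully connected layer transforms the output into predictions over the output words, with shape (batch size, output vocabulary size).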

```python
class Decoder(nn.Module):
    def __init__(self, vocab_size, embed_size, num_hiddens, num_layers,
                 attention_size, drop_prob=0):
        super(Decoder, self).__init__()
        self.embedding = nn.Embedding(vocab_size, embed_size)
        self.attention = attention_model(2*num_hiddens, attention_size)
        # The GRU input is the attention output c concatenated with the actual
        # input, so its size is num_hiddens + embed_size
        self.rnn = nn.GRU(num_hiddens + embed_size, num_hiddens,
                          num_layers, dropout=drop_prob)
        self.out = nn.Linear(num_hiddens, vocab_size)

    def forward(self, cur_input, state, enc_states):
        """
        cur_input shape: (batch, )
        state shape: (num_layers, batch, num_hiddens)
        """
        # Compute the context vector with the attention mechanism
        c = attention_forward(self.attention, enc_states, state[-1])
        # Concatenate the embedded input and the context vector along the
        # feature dimension: (batch size, num_hiddens + embed_size)
        input_and_c = torch.cat((self.embedding(cur_input), c), dim=1)
        # Add a time-step dimension of length 1 to the concatenation
        output, state = self.rnn(input_and_c.unsqueeze(0), state)
        # Remove the time-step dimension; output shape: (batch size, output vocabulary size)
        output = self.out(output).squeeze(dim=0)
        return output, state

    def begin_state(self, enc_state):
        # Use the encoder's hidden state at the final time step directly as
        # the decoder's initial hidden state
        return enc_state
```

10.12.3 Training the Model

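We first implement the batch_loss function, which computes the loss on a minibatch. The decoder's input at the first time step is the special token BOS. After that, the decoder's input at each time step is the word of the sample's output sequence at the previous time step; this is called teacher forcing. In addition, as in the implementation in Section 10.3 (Implementation of word2vec), we use a mask variable so that padding tokens do not affect the loss.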
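The following plain-Python sketch traces how the mask evolves over time steps. The label indices are made up, and EOS is assumed to be index 2 (PAD, BOS, and EOS are the first three special tokens passed to build_data). Note that the EOS position itself still contributes to the loss; only the positions after it are masked out.

```python
EOS_IDX = 2  # assumed index of EOS; PAD is assumed to be 0 in the toy labels below

def masks_per_step(labels):
    """labels: a batch of label sequences (list of equal-length lists).

    Returns the mask used for the loss at each time step, following the
    same update rule as batch_loss.
    """
    batch_size = len(labels)
    mask = [1.0] * batch_size
    history = []
    for t in range(len(labels[0])):
        history.append(list(mask))  # mask applied to this step's loss
        y = [seq[t] for seq in labels]
        # once a sample has emitted EOS, all of its later positions are masked
        mask = [m * (0.0 if tok == EOS_IDX else 1.0) for m, tok in zip(mask, y)]
    return history

Y = [[7, 5, 2, 0, 0],   # 2 real tokens, EOS, 2 PAD
     [9, 2, 0, 0, 0]]   # 1 real token, EOS, 3 PAD
steps = masks_per_step(Y)
print(steps)
print(sum(sum(m) for m in steps))  # positions contributing to the loss
```

The total of the masks (5 here) plays the role of num_not_pad_tokens, which normalizes the summed loss.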

```python
def batch_loss(encoder, decoder, X, Y, loss):
    batch_size = X.shape[0]
    enc_state = encoder.begin_state()
    enc_outputs, enc_state = encoder(X, enc_state)
    # Initialize the decoder's hidden state
    dec_state = decoder.begin_state(enc_state)
    # The decoder's input at the first time step is BOS
    dec_input = torch.tensor([out_vocab.stoi[BOS]] * batch_size)
    # The mask variable ignores the loss on PAD labels; it starts as all ones
    mask, num_not_pad_tokens = torch.ones(batch_size,), 0
    l = torch.tensor([0.0])
    for y in Y.permute(1, 0):  # Y shape: (batch, seq_len)
        dec_output, dec_state = decoder(dec_input, dec_state, enc_outputs)
        l = l + (mask * loss(dec_output, y)).sum()
        dec_input = y  # teacher forcing
        num_not_pad_tokens += mask.sum().item()
        # Everything after EOS is PAD. The next line keeps the mask at 0 in all
        # later steps once EOS has been seen
        mask = mask * (y != out_vocab.stoi[EOS]).float()
    return l / num_not_pad_tokens
```

In the training function, we need to update the model parameters of both the encoder and the decoder.

```python
def train(encoder, decoder, dataset, lr, batch_size, num_epochs):
    enc_optimizer = torch.optim.Adam(encoder.parameters(), lr=lr)
    dec_optimizer = torch.optim.Adam(decoder.parameters(), lr=lr)

    loss = nn.CrossEntropyLoss(reduction='none')
    data_iter = Data.DataLoader(dataset, batch_size, shuffle=True)
    for epoch in range(num_epochs):
        l_sum = 0.0
        for X, Y in data_iter:
            enc_optimizer.zero_grad()
            dec_optimizer.zero_grad()
            l = batch_loss(encoder, decoder, X, Y, loss)
            l.backward()
            enc_optimizer.step()
            dec_optimizer.step()
            l_sum += l.item()
        if (epoch + 1) % 10 == 0:
            print("epoch %d, loss %.3f" % (epoch + 1, l_sum / len(data_iter)))
```

Next, we create the model instances and set the hyperparameters. Then we can train the model.

```python
embed_size, num_hiddens, num_layers = 64, 64, 2
attention_size, drop_prob, lr, batch_size, num_epochs = 10, 0.5, 0.01, 2, 50
encoder = Encoder(len(in_vocab), embed_size, num_hiddens, num_layers,
                  drop_prob)
decoder = Decoder(len(out_vocab), embed_size, num_hiddens, num_layers,
                  attention_size, drop_prob)
train(encoder, decoder, dataset, lr, batch_size, num_epochs)
```

```
epoch 10, loss 0.491
epoch 20, loss 0.267
epoch 30, loss 0.102
epoch 40, loss 0.075
epoch 50, loss 0.059
```

10.12.4 Predicting Variable-Length Sequences

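In Section 10.10 (Beam Search), we introduced three methods for generating the decoder's output at each time step. Here we implement the simplest of them, greedy search.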
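Greedy search takes the most likely token at every step and stops as soon as EOS is produced. The plain-Python sketch below shows only that control flow: `toy_next` is a hypothetical lookup table that conditions on the previous token alone, whereas the real decoder conditions on its hidden state and the attention context.

```python
EOS = '<eos>'

# Hypothetical next-token distributions, keyed by the previous token
toy_next = {
    '<bos>':    {'they': 0.6, 'ils': 0.3, EOS: 0.1},
    'they':     {'are': 0.7, 'is': 0.2, EOS: 0.1},
    'are':      {'watching': 0.5, 'canadian': 0.4, EOS: 0.1},
    'watching': {'.': 0.9, EOS: 0.1},
    '.':        {EOS: 0.95, '.': 0.05},
}

def greedy_decode(next_probs, max_len):
    """Pick the single most likely token at each step; stop at EOS or max_len."""
    output, prev = [], '<bos>'
    for _ in range(max_len):
        probs = next_probs[prev]
        token = max(probs, key=probs.get)  # argmax over candidate tokens
        if token == EOS:
            break
        output.append(token)
        prev = token
    return output

print(greedy_decode(toy_next, max_len=7))
```

Because each choice is final, greedy search can miss a sequence whose overall probability is higher but which starts with a less likely token; beam search trades extra computation for a chance to recover such sequences.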

```python
def translate(encoder, decoder, input_seq, max_seq_len):
    in_tokens = input_seq.split(' ')
    in_tokens += [EOS] + [PAD] * (max_seq_len - len(in_tokens) - 1)
    enc_input = torch.tensor([[in_vocab.stoi[tk] for tk in in_tokens]])  # batch=1
    enc_state = encoder.begin_state()
    enc_output, enc_state = encoder(enc_input, enc_state)
    dec_input = torch.tensor([out_vocab.stoi[BOS]])
    dec_state = decoder.begin_state(enc_state)
    output_tokens = []
    for _ in range(max_seq_len):
        dec_output, dec_state = decoder(dec_input, dec_state, enc_output)
        pred = dec_output.argmax(dim=1)
        pred_token = out_vocab.itos[int(pred.item())]
        if pred_token == EOS:  # the output sequence is complete once EOS is produced
            break
        else:
            output_tokens.append(pred_token)
            dec_input = pred
    return output_tokens
```

Let's briefly test the model. For the French input "ils regardent .", the English translation should be "they are watching .".

```python
input_seq = 'ils regardent .'
translate(encoder, decoder, input_seq, max_seq_len)
```

```
['they', 'are', 'watching', '.']
```

10.12.5 Evaluating Translation Results

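Machine translation results are usually evaluated with BLEU (Bilingual Evaluation Understudy) [1]. For every subsequence of the model's predicted sequence, BLEU checks whether that subsequence appears in the label sequence.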

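Specifically, let $p_n$ be the precision of subsequences of $n$ words: the number of $n$-word subsequences of the predicted sequence that match the label sequence, divided by the number of $n$-word subsequences in the predicted sequence. For example, if the label sequence is $A$, $B$, $C$, $D$, $E$, $F$ and the predicted sequence is $A$, $B$, $B$, $C$, $D$, then $p_1 = 4/5$, $p_2 = 3/4$, $p_3 = 1/3$, and $p_4 = 0$. Let $len_{\text{label}}$ and $len_{\text{pred}}$ be the numbers of words in the label sequence and the predicted sequence, respectively. BLEU is then defined as

$$\exp\left(\min\left(0, 1 - \frac{len_{\text{label}}}{len_{\text{pred}}}\right)\right) \prod_{n=1}^{k} p_n^{1/2^n},$$

where $k$ is the maximum number of words in the subsequences we want to match. When the predicted sequence is identical to the label sequence, BLEU equals 1.

Because matching a longer subsequence is harder than matching a shorter one, BLEU gives more weight to the precision of longer subsequences. For example, with $p_n$ fixed at 0.5, the factor grows with $n$: $0.5^{1/2} \approx 0.7$, $0.5^{1/4} \approx 0.84$, $0.5^{1/8} \approx 0.92$, $0.5^{1/16} \approx 0.96$. In addition, predicting shorter sequences tends to yield higher $p_n$ values, so the factor in front of the product above penalizes short outputs. For example, with $k = 2$, suppose the label sequence is $A$, $B$, $C$, $D$, $E$, $F$ and the predicted sequence is $A$, $B$. Although $p_1 = p_2 = 1$, the penalty factor is $\exp(1 - 6/2) \approx 0.14$, so BLEU is also about 0.14.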
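The definition can be checked against the examples above with a short plain-Python sketch. It counts clipped n-gram matches with collections.Counter (each label n-gram can be matched at most as often as it occurs in the label), which is equivalent to the decrement-based counting in the bleu function below; the names ngram_precision and bleu_formula are introduced here for illustration only.

```python
import math
from collections import Counter

def ngram_precision(pred, label, n):
    """Clipped n-gram precision p_n of pred against label."""
    pred_ngrams = Counter(tuple(pred[i:i + n]) for i in range(len(pred) - n + 1))
    label_ngrams = Counter(tuple(label[i:i + n]) for i in range(len(label) - n + 1))
    matches = sum(min(count, label_ngrams[g]) for g, count in pred_ngrams.items())
    total = len(pred) - n + 1
    return matches / total if total > 0 else 0.0

def bleu_formula(pred, label, k):
    """Brevity penalty times prod_{n=1..k} p_n ** (1 / 2**n); pred must be non-empty."""
    score = math.exp(min(0, 1 - len(label) / len(pred)))
    for n in range(1, k + 1):
        score *= ngram_precision(pred, label, n) ** (0.5 ** n)
    return score

label = list('ABCDEF')
print([ngram_precision(list('ABBCD'), label, n) for n in range(1, 5)])  # p_1..p_4 = 4/5, 3/4, 1/3, 0
print(round(bleu_formula(list('AB'), label, k=2), 2))                   # 0.14
```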

Next, we implement the BLEU computation.

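```python
def bleu(pred_tokens, label_tokens, k):
    len_pred, len_label = len(pred_tokens), len(label_tokens)
    if len_pred == 0:  # empty prediction: avoid dividing by zero below
        return 0.0
    score = math.exp(min(0, 1 - len_label / len_pred))
    for n in range(1, k + 1):
        num_matches, label_subs = 0, collections.defaultdict(int)
        # Join with a space so that, e.g., ['ab', 'c'] and ['a', 'bc']
        # are not counted as the same n-gram
        for i in range(len_label - n + 1):
            label_subs[' '.join(label_tokens[i: i + n])] += 1
        for i in range(len_pred - n + 1):
            if label_subs[' '.join(pred_tokens[i: i + n])] > 0:
                num_matches += 1
                label_subs[' '.join(pred_tokens[i: i + n])] -= 1
        # max(..., 1) guards against predictions shorter than n words
        score *= math.pow(num_matches / max(len_pred - n + 1, 1), math.pow(0.5, n))
    return score
```

Next, we define a helper function for printing.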

```python
def score(input_seq, label_seq, k):
    pred_tokens = translate(encoder, decoder, input_seq, max_seq_len)
    label_tokens = label_seq.split(' ')
    print('bleu %.3f, predict: %s' % (bleu(pred_tokens, label_tokens, k),
                                      ' '.join(pred_tokens)))
```

A correct prediction gets a score of 1.

```python
score('ils regardent .', 'they are watching .', k=2)
```

```
bleu 1.000, predict: they are watching .
```

```python
score('ils sont canadienne .', 'they are canadian .', k=2)
```

```
bleu 0.658, predict: they are watching .
```

Summary

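- The encoder-decoder architecture and the attention mechanism can be applied to machine translation.

- BLEU can be used to evaluate translation results.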

Exercises

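- If the encoder and the decoder have different numbers of hidden units or different numbers of layers, how can we improve the way the decoder's hidden state is initialized?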

"""

如果编码器和解码器的隐藏单元个数或层数不同,我们需要对解码器的隐藏状态初始化方法进行改进。具体方法如下:

线性变换:使用一个线性层将编码器的隐藏状态转换为解码器的隐藏状态维度。

平均或复制:对于层数不同的情况,可以使用平均或复制的方式对齐层数。

"""

```python
import collections
import os
import io
import math
import torch
from torch import nn
import torch.nn.functional as F
import torchtext.vocab as Vocab
import torch.utils.data as Data
import sys
# sys.path.append("..")
import d2lzh_pytorch as d2l

PAD, BOS, EOS = '<pad>', '<bos>', '<eos>'
os.environ["CUDA_VISIBLE_DEVICES"] = "0"
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
print(torch.__version__, device)
```

```python
# Record every token of a sequence in all_tokens (used later to build the vocabulary),
# append EOS and then PAD until the sequence length reaches max_seq_len,
# and store the sequence in all_seqs
def process_one_seq(seq_tokens, all_tokens, all_seqs, max_seq_len):
    all_tokens.extend(seq_tokens)
    seq_tokens += [EOS] + [PAD] * (max_seq_len - len(seq_tokens) - 1)
    all_seqs.append(seq_tokens)

# Build the vocabulary from all tokens, then convert every sequence into indices
def build_data(all_tokens, all_seqs):
    vocab = Vocab.Vocab(collections.Counter(all_tokens),
                        specials=[PAD, BOS, EOS])
    indices = [[vocab.stoi[w] for w in seq] for seq in all_seqs]
    return vocab, torch.tensor(indices)

def read_data(max_seq_len):
    # "in" and "out" are short for input and output
    in_tokens, out_tokens, in_seqs, out_seqs = [], [], [], []
    with io.open('fr-en-small.txt') as f:
        lines = f.readlines()
    for line in lines:
        in_seq, out_seq = line.rstrip().split('\t')
        in_seq_tokens, out_seq_tokens = in_seq.split(' '), out_seq.split(' ')
        if max(len(in_seq_tokens), len(out_seq_tokens)) > max_seq_len - 1:
            continue  # skip the sample if it would exceed max_seq_len after adding EOS
        process_one_seq(in_seq_tokens, in_tokens, in_seqs, max_seq_len)
        process_one_seq(out_seq_tokens, out_tokens, out_seqs, max_seq_len)
    in_vocab, in_data = build_data(in_tokens, in_seqs)
    out_vocab, out_data = build_data(out_tokens, out_seqs)
    return in_vocab, out_vocab, Data.TensorDataset(in_data, out_data)

# Read the data
max_seq_len = 10
in_vocab, out_vocab, dataset = read_data(max_seq_len)
```

```python
class Encoder(nn.Module):
    def __init__(self, vocab_size, embed_size, num_hiddens, num_layers,
                 drop_prob=0, **kwargs):
        super(Encoder, self).__init__(**kwargs)
        self.embedding = nn.Embedding(vocab_size, embed_size)
        self.rnn = nn.GRU(embed_size, num_hiddens, num_layers, dropout=drop_prob)

    def forward(self, inputs, state):
        # Input shape: (batch size, number of time steps). Swap the batch and
        # time-step dimensions of the embedding output
        embedding = self.embedding(inputs.long()).permute(1, 0, 2)  # (seq_len, batch, input_size)
        return self.rnn(embedding, state)

    def begin_state(self):
        return None
```

```python
class HiddenStateTransformer(nn.Module):
    """Linearly maps encoder hidden states to the decoder's hidden size."""
    def __init__(self, encoder_hidden_dim, decoder_hidden_dim):
        super(HiddenStateTransformer, self).__init__()
        self.linear = nn.Linear(encoder_hidden_dim, decoder_hidden_dim)

    def forward(self, encoder_hidden):
        # nn.Linear acts on the last dimension, so all layers are mapped at once
        return self.linear(encoder_hidden)

class Decoder(nn.Module):
    def __init__(self, vocab_size, embed_size, num_hiddens, num_layers,
                 attention_size, encoder_hidden_dim, drop_prob=0,
                 encoder_num_layers=None):
        super(Decoder, self).__init__()
        self.embedding = nn.Embedding(vocab_size, embed_size)
        # Attention scores the concatenation [encoder state; decoder state]
        self.attention = attention_model(encoder_hidden_dim + num_hiddens, attention_size)
        # The GRU input is the context vector c (size encoder_hidden_dim)
        # concatenated with the embedded input (size embed_size)
        self.rnn = nn.GRU(encoder_hidden_dim + embed_size, num_hiddens,
                          num_layers, dropout=drop_prob)
        self.out = nn.Linear(num_hiddens, vocab_size)
        self.hidden_state_transformer = HiddenStateTransformer(encoder_hidden_dim, num_hiddens)
        # The encoder's layer count defaults to the decoder's when not given
        self.num_encoder_layers = encoder_num_layers or num_layers
        self.num_decoder_layers = num_layers

    def forward(self, cur_input, state, enc_states):
        """
        cur_input shape: (batch, )
        state shape: (num_layers, batch, num_hiddens)
        """
        # Compute the context vector with the attention mechanism
        c = attention_forward(self.attention, enc_states, state[-1])
        # Concatenate the embedded input and the context vector along the feature dimension
        input_and_c = torch.cat((self.embedding(cur_input), c), dim=1)
        # Add a time-step dimension of length 1
        output, state = self.rnn(input_and_c.unsqueeze(0), state)
        # Remove the time-step dimension; output shape: (batch size, output vocabulary size)
        output = self.out(output).squeeze(dim=0)
        return output, state

    def begin_state(self, enc_state):
        # enc_state: (num_encoder_layers, batch, encoder_hidden_dim).
        # Map every encoder layer's state to the decoder's hidden size
        transformed_hidden = self.hidden_state_transformer(enc_state)
        # Align the number of layers
        if self.num_encoder_layers >= self.num_decoder_layers:
            # Deeper (or equally deep) encoder: keep the first num_decoder_layers layers
            return transformed_hidden[:self.num_decoder_layers]
        # Shallower encoder: copy its layers cyclically, then trim
        repeats = -(-self.num_decoder_layers // self.num_encoder_layers)  # ceiling division
        return transformed_hidden.repeat(repeats, 1, 1)[:self.num_decoder_layers]
```

```python
def attention_model(input_size, attention_size):
    model = nn.Sequential(nn.Linear(input_size, attention_size, bias=False),
                          nn.Tanh(),
                          nn.Linear(attention_size, 1, bias=False))
    return model

def attention_forward(model, enc_states, dec_state):
    """
    enc_states: (number of time steps, batch size, encoder hidden units)
    dec_state: (batch size, decoder hidden units)
    """
    # Broadcast the decoder hidden state over all time steps, then concatenate.
    # expand (rather than expand_as) also works when the two hidden sizes differ
    dec_states = dec_state.unsqueeze(dim=0).expand(enc_states.shape[0], -1, -1)
    enc_and_dec_states = torch.cat((enc_states, dec_states), dim=2)
    e = model(enc_and_dec_states)  # shape: (number of time steps, batch size, 1)
    alpha = F.softmax(e, dim=0)  # softmax over the time-step dimension
    return (alpha * enc_states).sum(dim=0)  # return the context variable

seq_len, batch_size, num_hiddens = 10, 4, 8
model = attention_model(2*num_hiddens, 10)
enc_states = torch.zeros((seq_len, batch_size, num_hiddens))
dec_state = torch.zeros((batch_size, num_hiddens))
attention_forward(model, enc_states, dec_state).shape
```

```python
def batch_loss(encoder, decoder, X, Y, loss, use_teacher_forcing=True):
    batch_size = X.shape[0]
    enc_state = encoder.begin_state()
    enc_outputs, enc_state = encoder(X, enc_state)
    # Initialize the decoder's hidden state
    dec_state = decoder.begin_state(enc_state)
    # The decoder's input at the first time step is BOS
    dec_input = torch.tensor([out_vocab.stoi[BOS]] * batch_size).to(device)
    # The mask variable ignores the loss on PAD labels; it starts as all ones
    mask, num_not_pad_tokens = torch.ones(batch_size,).to(device), 0
    l = torch.tensor([0.0]).to(device)
    for y in Y.permute(1, 0):  # Y shape: (batch, seq_len)
        dec_output, dec_state = decoder(dec_input, dec_state, enc_outputs)
        l = l + (mask * loss(dec_output, y)).sum()
        if use_teacher_forcing:
            dec_input = y  # teacher forcing
        else:
            dec_input = dec_output.argmax(dim=1)  # feed back the model's own prediction
        num_not_pad_tokens += mask.sum().item()
        # Everything after EOS is PAD. The next line keeps the mask at 0 in all
        # later steps once EOS has been seen
        mask = mask * (y != out_vocab.stoi[EOS]).float()
    return l / num_not_pad_tokens
```

```python
def train(encoder, decoder, dataset, lr, batch_size, num_epochs):
    # Put both models on the same device as the tensors created in batch_loss
    encoder, decoder = encoder.to(device), decoder.to(device)
    enc_optimizer = torch.optim.Adam(encoder.parameters(), lr=lr)
    dec_optimizer = torch.optim.Adam(decoder.parameters(), lr=lr)
    loss = nn.CrossEntropyLoss(reduction='none')
    data_iter = Data.DataLoader(dataset, batch_size, shuffle=True)
    for epoch in range(num_epochs):
        l_sum = 0.0
        for X, Y in data_iter:
            X, Y = X.to(device), Y.to(device)
            enc_optimizer.zero_grad()
            dec_optimizer.zero_grad()
            # Teacher forcing for the first half of the epochs, then the
            # decoder's own predictions are fed back
            use_teacher_forcing = epoch < num_epochs // 2
            l = batch_loss(encoder, decoder, X, Y, loss, use_teacher_forcing)
            l.backward()
            enc_optimizer.step()
            dec_optimizer.step()
            l_sum += l.item()
        if (epoch + 1) % 10 == 0:
            print("epoch %d, loss %.3f" % (epoch + 1, l_sum / len(data_iter)))

embed_size, num_hiddens, num_layers = 64, 64, 2
attention_size, drop_prob, lr, batch_size, num_epochs = 10, 0.5, 0.01, 2, 50
encoder = Encoder(len(in_vocab), embed_size, num_hiddens, num_layers,
                  drop_prob)
decoder = Decoder(len(out_vocab), embed_size, num_hiddens, num_layers,
                  attention_size, num_hiddens, drop_prob)
train(encoder, decoder, dataset, lr, batch_size, num_epochs)
```

```python
def translate(encoder, decoder, input_seq, max_seq_len):
    in_tokens = input_seq.split(' ')
    in_tokens += [EOS] + [PAD] * (max_seq_len - len(in_tokens) - 1)
    enc_input = torch.tensor([[in_vocab.stoi[tk] for tk in in_tokens]]).to(device)  # batch=1
    enc_state = encoder.begin_state()
    enc_output, enc_state = encoder(enc_input, enc_state)
    dec_input = torch.tensor([out_vocab.stoi[BOS]]).to(device)
    dec_state = decoder.begin_state(enc_state)
    output_tokens = []
    for _ in range(max_seq_len):
        dec_output, dec_state = decoder(dec_input, dec_state, enc_output)
        pred = dec_output.argmax(dim=1)
        pred_token = out_vocab.itos[int(pred.item())]
        if pred_token == EOS:  # the output sequence is complete once EOS is produced
            break
        else:
            output_tokens.append(pred_token)
            dec_input = pred
    return output_tokens

input_seq = 'ils regardent .'
translate(encoder, decoder, input_seq, max_seq_len)
```

```python
def bleu(pred_tokens, label_tokens, k):
    len_pred, len_label = len(pred_tokens), len(label_tokens)
    if len_pred == 0:  # empty prediction: avoid dividing by zero below
        return 0.0
    score = math.exp(min(0, 1 - len_label / len_pred))
    for n in range(1, k + 1):
        num_matches, label_subs = 0, collections.defaultdict(int)
        # Join with a space so that different token splits never collide
        for i in range(len_label - n + 1):
            label_subs[' '.join(label_tokens[i: i + n])] += 1
        for i in range(len_pred - n + 1):
            if label_subs[' '.join(pred_tokens[i: i + n])] > 0:
                num_matches += 1
                label_subs[' '.join(pred_tokens[i: i + n])] -= 1
        # max(..., 1) guards against predictions shorter than n words
        score *= math.pow(num_matches / max(len_pred - n + 1, 1), math.pow(0.5, n))
    return score

def score(input_seq, label_seq, k):
    pred_tokens = translate(encoder, decoder, input_seq, max_seq_len)
    label_tokens = label_seq.split(' ')
    print('bleu %.3f, predict: %s' % (bleu(pred_tokens, label_tokens, k),
                                      ' '.join(pred_tokens)))

score('ils regardent .', 'they are watching .', k=2)
score('ils sont canadienne .', 'they are canadian .', k=2)
```

```
1.5.0 cpu
epoch 10, loss 0.602
epoch 20, loss 0.301
epoch 30, loss 1.068
epoch 40, loss 0.488
epoch 50, loss 0.513
bleu 1.000, predict: they are watching .
bleu 0.658, predict: they are watching .
```

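- During training, replace teacher forcing with using the decoder's output at the previous time step as its input at the current time step. Do the results change?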

"""

强制教学:

定义:在每个时间步中,使用真实的目标序列作为解码器的输入。

优点:

更快的收敛速度,因为模型始终接收到真实的输入信号。

训练更稳定,特别是在训练的初期阶段。

缺点:

与推断阶段的行为不一致,因为推断时使用的是模型自身的输出。

自回归训练:

定义:在每个时间步中,使用解码器在前一个时间步的输出作为当前时间步的输入。

优点:

与推断阶段的行为一致,有助于模型在推断时表现更好。

模型能够学会纠正自身的错误,因为训练中会遇到自己的预测。

缺点:

训练收敛速度较慢,特别是在初期阶段,因为模型的预测精度可能较低。

训练过程中误差可能会累积,导致梯度消失或爆炸。

实验结果可能的变化

收敛速度:使用自回归训练会降低模型的收敛速度,因为模型在训练初期的预测不准确,会导致输入误差不断累积。

模型稳定性:自回归训练中,模型可能会遇到更大的不稳定性,特别是在训练初期,容易出现梯度爆炸或梯度消失的现象。

推断性能:自回归训练可以提高推断时的性能,因为训练过程更接近推断时的实际使用情况。
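To make the difference concrete, here is a plain-Python sketch that records which token the decoder receives at each step under the two schemes. `toy_predict` is a hypothetical stand-in for a trained decoder that makes one deliberate mistake ('is' instead of 'are'); when predictions are fed back, that mistake becomes the next step's input, which is the error accumulation described above.

```python
BOS = '<bos>'

def toy_predict(prev_token):
    """Hypothetical decoder: predicts the next token from the previous one."""
    table = {BOS: 'they', 'they': 'is', 'is': 'watching',
             'are': 'watching', 'watching': '.'}
    return table.get(prev_token, '.')

def decoder_inputs(labels, teacher_forcing):
    """Return the token fed to the decoder at each time step."""
    inputs, prev = [], BOS
    for y in labels:
        inputs.append(prev)
        pred = toy_predict(prev)
        # Teacher forcing feeds the ground-truth label; otherwise feed the prediction
        prev = y if teacher_forcing else pred
    return inputs

labels = ['they', 'are', 'watching', '.']
print(decoder_inputs(labels, teacher_forcing=True))   # ['<bos>', 'they', 'are', 'watching']
print(decoder_inputs(labels, teacher_forcing=False))  # ['<bos>', 'they', 'is', 'watching']
```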

"""- 试着使用更大的翻译数据集来训练模型,例如 WMT [2] 和 Tatoeba Project [3]。

```python
import collections
import io
import math
import torch
from torch import nn
import torch.optim as optim
import torch.utils.data as Data
import sentencepiece as spm
from torch.cuda.amp import GradScaler, autocast

# Configuration
PAD, BOS, EOS = '<pad>', '<bos>', '<eos>'
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
max_seq_len = 40
batch_size = 32
hidden_dim = 256
n_layers, n_heads, pf_dim, dropout = 4, 8, 1024, 0.1
n_epochs, lr = 20, 0.0005
```

```python
# Train a SentencePiece model
def train_sentencepiece(input_file, model_prefix, vocab_size=1000):
    spm.SentencePieceTrainer.train(input=input_file, model_prefix=model_prefix, vocab_size=vocab_size)

# Load a SentencePiece model
def load_sentencepiece_model(model_file):
    sp = spm.SentencePieceProcessor()
    sp.load(model_file)
    return sp

# Split text into subword pieces
def preprocess_text(text, sp):
    return sp.encode_as_pieces(text)

# Train and load the SentencePiece models (French source, English target)
train_sentencepiece('new_sampled2_europarl-v7.fr-en.fr', 'europarl_fr', vocab_size=1000)
train_sentencepiece('new_sampled2_europarl-v7.fr-en.en', 'europarl_en', vocab_size=1000)
sp_src = load_sentencepiece_model('europarl_fr.model')
sp_tgt = load_sentencepiece_model('europarl_en.model')
```

```python
# Process a single sequence and add it to all_tokens and all_seqs
def process_one_seq(seq_tokens, all_tokens, all_seqs, max_seq_len):
    all_tokens.extend(seq_tokens)
    seq_tokens = seq_tokens + [EOS] + [PAD] * (max_seq_len - len(seq_tokens) - 1)
    all_seqs.append(seq_tokens)

# Build the vocabulary and convert all sequences into a tensor
def build_data(all_tokens, all_seqs):
    vocab = collections.Counter(all_tokens)
    # One index per distinct piece, with the special tokens appended at the end
    vocab = {word: idx for idx, (word, _) in enumerate(vocab.items())}
    vocab.update({PAD: len(vocab), BOS: len(vocab) + 1, EOS: len(vocab) + 2})
    indices = [[vocab[w] for w in seq] for seq in all_seqs]
    return vocab, torch.tensor(indices)

# Read the two line-aligned files and process them
def read_data(src_file, tgt_file, max_seq_len):
    in_tokens, out_tokens, in_seqs, out_seqs = [], [], [], []
    with io.open(src_file, encoding='utf-8') as f_src, io.open(tgt_file, encoding='utf-8') as f_tgt:
        src_lines = f_src.readlines()
        tgt_lines = f_tgt.readlines()
    for src_line, tgt_line in zip(src_lines, tgt_lines):
        src_tokens = preprocess_text(src_line.strip(), sp_src)
        tgt_tokens = preprocess_text(tgt_line.strip(), sp_tgt)
        if max(len(src_tokens), len(tgt_tokens)) > max_seq_len - 1:
            continue
        process_one_seq(src_tokens, in_tokens, in_seqs, max_seq_len)
        process_one_seq(tgt_tokens, out_tokens, out_seqs, max_seq_len)
    in_vocab, in_data = build_data(in_tokens, in_seqs)
    out_vocab, out_data = build_data(out_tokens, out_seqs)
    return in_vocab, out_vocab, Data.TensorDataset(in_data, out_data)
```

```python
# Read and process the validation data from new1_fra.txt.
# Assumes each line is "French<TAB>English"; the Tatoeba fra.txt file from
# manythings.org lists English first, in which case swap parts[0] and parts[1].
def read_validation_data(file, max_seq_len, in_vocab, out_vocab):
    in_seqs, out_seqs = [], []
    with io.open(file, encoding='utf-8') as f:
        lines = f.readlines()
    for line in lines:
        parts = line.strip().split('\t')
        if len(parts) < 2:
            continue
        src_text, tgt_text = parts[0], parts[1]
        src_tokens = preprocess_text(src_text, sp_src)
        tgt_tokens = preprocess_text(tgt_text, sp_tgt)
        if max(len(src_tokens), len(tgt_tokens)) > max_seq_len - 1:
            continue
        # Reuse the training vocabularies so that indices mean the same thing as in
        # training; skip pairs with pieces never seen in training (there is no <unk>)
        if any(t not in in_vocab for t in src_tokens) or any(t not in out_vocab for t in tgt_tokens):
            continue
        process_one_seq(src_tokens, [], in_seqs, max_seq_len)
        process_one_seq(tgt_tokens, [], out_seqs, max_seq_len)
    in_data = torch.tensor([[in_vocab[w] for w in seq] for seq in in_seqs])
    out_data = torch.tensor([[out_vocab[w] for w in seq] for seq in out_seqs])
    return Data.TensorDataset(in_data, out_data)

# Read the training data
in_vocab, out_vocab, train_dataset = read_data('new_sampled2_europarl-v7.fr-en.fr', 'new_sampled2_europarl-v7.fr-en.en', max_seq_len)
# Read the validation data, indexed with the training vocabularies
val_dataset = read_validation_data('new1_fra.txt', max_seq_len, in_vocab, out_vocab)
# Save the preprocessed data ahead of time
torch.save((in_vocab, out_vocab, train_dataset, val_dataset), 'preprocessed_data.pt')

# Create the data loaders; num_workers=0 (single process) helps isolate problems
train_dataloader = Data.DataLoader(train_dataset, batch_size=batch_size, shuffle=True, num_workers=0)
val_dataloader = Data.DataLoader(val_dataset, batch_size=batch_size, shuffle=False, num_workers=0)
```

```python
# Transformer model definition
class Transformer(nn.Module):
    def __init__(self, input_dim, output_dim, hidden_dim, n_layers, n_heads, pf_dim, dropout,
                 src_pad_idx=None, tgt_pad_idx=None):
        super().__init__()
        self.encoder = nn.TransformerEncoder(
            nn.TransformerEncoderLayer(d_model=hidden_dim, nhead=n_heads, dim_feedforward=pf_dim,
                                       dropout=dropout, batch_first=True),
            num_layers=n_layers)
        self.decoder = nn.TransformerDecoder(
            nn.TransformerDecoderLayer(d_model=hidden_dim, nhead=n_heads, dim_feedforward=pf_dim,
                                       dropout=dropout, batch_first=True),
            num_layers=n_layers)
        self.src_tok_emb = nn.Embedding(input_dim, hidden_dim)
        self.tgt_tok_emb = nn.Embedding(output_dim, hidden_dim)
        # Learned positional encoding covering max_seq_len positions
        self.positional_encoding = nn.Parameter(torch.zeros(1, max_seq_len, hidden_dim))
        self.fc_out = nn.Linear(hidden_dim, output_dim)
        self.dropout = nn.Dropout(dropout)
        self.src_pad_idx, self.tgt_pad_idx = src_pad_idx, tgt_pad_idx

    def forward(self, src, tgt):
        # Only the decoder needs a causal mask; the encoder may attend to the whole source
        tgt_mask = self.generate_square_subsequent_mask(tgt.size(1)).to(tgt.device)
        # Key-padding masks (True marks PAD positions to ignore), if PAD indices were given
        src_pad_mask = (src == self.src_pad_idx) if self.src_pad_idx is not None else None
        tgt_pad_mask = (tgt == self.tgt_pad_idx) if self.tgt_pad_idx is not None else None
        src_emb = self.dropout(self.src_tok_emb(src) + self.positional_encoding[:, :src.size(1), :])
        tgt_emb = self.dropout(self.tgt_tok_emb(tgt) + self.positional_encoding[:, :tgt.size(1), :])
        enc_src = self.encoder(src_emb, src_key_padding_mask=src_pad_mask)
        output = self.decoder(tgt_emb, enc_src, tgt_mask=tgt_mask,
                              tgt_key_padding_mask=tgt_pad_mask,
                              memory_key_padding_mask=src_pad_mask)
        return self.fc_out(output)

    def generate_square_subsequent_mask(self, sz):
        # Boolean causal mask: True above the diagonal marks future positions
        return torch.triu(torch.ones(sz, sz, dtype=torch.bool), diagonal=1)

# Check the vocabulary sizes
input_dim = len(in_vocab)
output_dim = len(out_vocab)
print("Input Vocabulary Size:", input_dim)
print("Output Vocabulary Size:", output_dim)

# Initialize the model
model = Transformer(input_dim, output_dim, hidden_dim, n_layers, n_heads, pf_dim, dropout,
                    src_pad_idx=in_vocab[PAD], tgt_pad_idx=out_vocab[PAD]).to(device)

# Loss and optimizer; the loss is computed on target tokens, so ignore the target PAD index
criterion = nn.CrossEntropyLoss(ignore_index=out_vocab[PAD])
optimizer = optim.AdamW(model.parameters(), lr=lr)
# Mixed-precision loss scaling is only enabled on CUDA
scaler = GradScaler(enabled=(device.type == 'cuda'))
```

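```python
# Training function
def train_epoch(model, dataloader, criterion, optimizer, scaler, device, pad_idx):
    model.train()
    epoch_loss = 0
    for src, tgt in dataloader:
        src, tgt = src.to(device), tgt.to(device)
        # The stored targets have no BOS, so prepend it to form the decoder input;
        # the model then predicts every target token, shifted right by one position
        bos = torch.full((tgt.size(0), 1), out_vocab[BOS], dtype=tgt.dtype, device=device)
        tgt_input = torch.cat([bos, tgt[:, :-1]], dim=1)
        tgt_output = tgt.contiguous().view(-1)
        optimizer.zero_grad()
        with autocast(enabled=(device.type == 'cuda')):
            output = model(src, tgt_input).view(-1, output_dim)
            loss = criterion(output, tgt_output)
        scaler.scale(loss).backward()
        scaler.step(optimizer)
        scaler.update()
        epoch_loss += loss.item()
    return epoch_loss / len(dataloader)

# Validation function
def evaluate_epoch(model, dataloader, criterion, device, pad_idx):
    model.eval()
    epoch_loss = 0
    with torch.no_grad():
        for src, tgt in dataloader:
            src, tgt = src.to(device), tgt.to(device)
            # Same BOS-shifted decoder input as in training
            bos = torch.full((tgt.size(0), 1), out_vocab[BOS], dtype=tgt.dtype, device=device)
            tgt_input = torch.cat([bos, tgt[:, :-1]], dim=1)
            tgt_output = tgt.contiguous().view(-1)
            with autocast(enabled=(device.type == 'cuda')):
                output = model(src, tgt_input).view(-1, output_dim)
                loss = criterion(output, tgt_output)
            epoch_loss += loss.item()
    return epoch_loss / len(dataloader)
```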

# 训练和验证循环

best_val_loss = float('inf')

early_stopping_patience = 5

no_improvement_epochs = 0

for epoch in range(n_epochs):

train_loss = train_epoch(model, train_dataloader, criterion, optimizer, scaler, device, in_vocab[PAD])

val_loss = evaluate_epoch(model, val_dataloader, criterion, device, in_vocab[PAD])

if val_loss < best_val_loss:

best_val_loss = val_loss

torch.save(model.state_dict(), 'best_model.pt')

no_improvement_epochs = 0

else:

no_improvement_epochs += 1

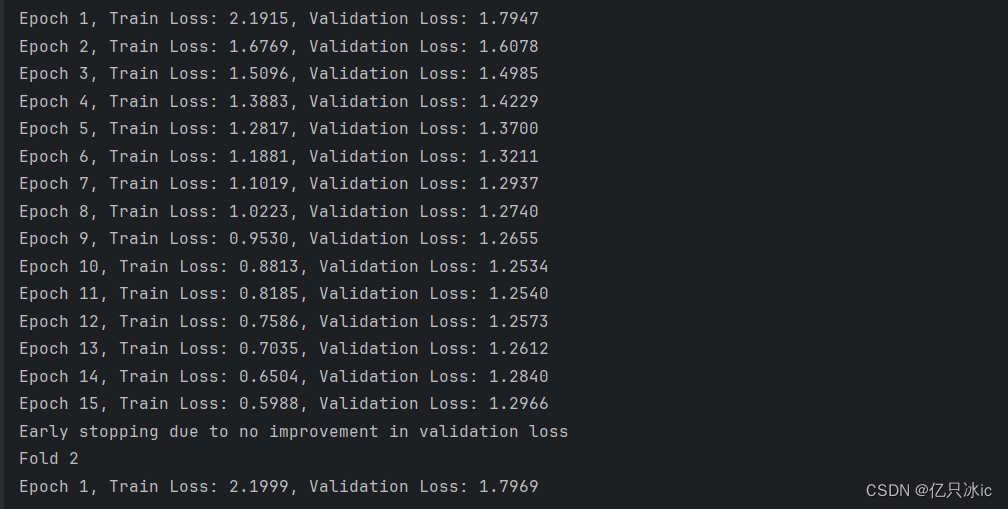

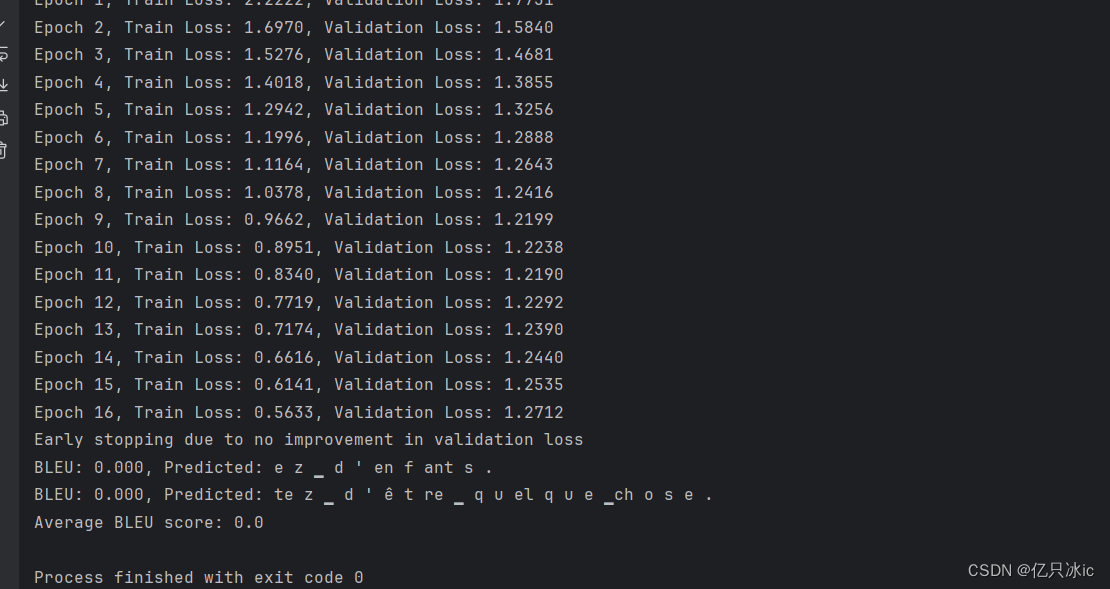

print(f'Epoch {epoch + 1}, Train Loss: {train_loss:.4f}, Validation Loss: {val_loss:.4f}')

if no_improvement_epochs >= early_stopping_patience:

print("Early stopping due to no improvement in validation loss")

break

```python
# Beam search decoding
@torch.no_grad()
def beam_search_decode(model, src_sentence, max_seq_len, sp_src, sp_tgt, in_vocab, out_vocab, device, beam_size=5):
    model.eval()
    idx_to_tok = {idx: tok for tok, idx in out_vocab.items()}  # index -> piece
    src_tokens = preprocess_text(src_sentence, sp_src)[:max_seq_len - 1]
    src_tokens = src_tokens + [EOS] + [PAD] * (max_seq_len - len(src_tokens) - 1)
    # Pieces never seen in training have no index (there is no <unk>), so drop them
    src_indices = torch.tensor([in_vocab[tk] for tk in src_tokens if tk in in_vocab]).unsqueeze(0).to(device)
    src_pad_mask = src_indices == in_vocab[PAD]
    src_emb = model.src_tok_emb(src_indices) + model.positional_encoding[:, :src_indices.size(1), :]
    # The encoder sees the whole source: no causal mask, only padding is masked
    enc_src = model.encoder(src_emb, src_key_padding_mask=src_pad_mask)
    beams = [(0.0, [BOS])]  # (cumulative log-probability, token sequence)
    completed_beams = []
    for _ in range(max_seq_len):
        new_beams = []
        for score, seq in beams:
            tgt_indices = torch.tensor([[out_vocab[tk] for tk in seq]]).to(device)
            tgt_mask = model.generate_square_subsequent_mask(tgt_indices.size(1)).to(device)
            tgt_emb = model.tgt_tok_emb(tgt_indices) + model.positional_encoding[:, :tgt_indices.size(1), :]
            output = model.decoder(tgt_emb, enc_src, tgt_mask=tgt_mask,
                                   memory_key_padding_mask=src_pad_mask)
            # Log-probabilities of the next piece, taken from the last position
            log_probs = torch.log_softmax(model.fc_out(output)[:, -1, :], dim=-1)
            topk_log_probs, topk_indices = torch.topk(log_probs, beam_size)
            for i in range(beam_size):
                new_seq = seq + [idx_to_tok[topk_indices[0][i].item()]]
                new_score = score + topk_log_probs[0][i].item()
                if new_seq[-1] == EOS:
                    completed_beams.append((new_score, new_seq))
                else:
                    new_beams.append((new_score, new_seq))
        beams = sorted(new_beams, key=lambda x: x[0], reverse=True)[:beam_size]
        if not beams:
            break
    if completed_beams:
        # Highest-scoring finished hypothesis, without BOS and EOS
        best = max(completed_beams, key=lambda x: x[0])
        return ' '.join(best[1][1:-1])
    return ' '.join(beams[0][1][1:])

# Translation function
def translate(model, src_sentence, max_seq_len, sp_src, sp_tgt, in_vocab, out_vocab, device):
    return beam_search_decode(model, src_sentence, max_seq_len, sp_src, sp_tgt, in_vocab, out_vocab, device, beam_size=5)
```

```python
# BLEU scoring function
def bleu(pred_tokens, label_tokens, k):
    len_pred, len_label = len(pred_tokens), len(label_tokens)
    if len_pred == 0:  # empty prediction: avoid dividing by zero below
        return 0.0
    score = math.exp(min(0, 1 - len_label / len_pred))
    for n in range(1, k + 1):
        num_matches, label_subs = 0, collections.defaultdict(int)
        # Join with a space so that different token splits never collide
        for i in range(len_label - n + 1):
            label_subs[' '.join(label_tokens[i: i + n])] += 1
        for i in range(len_pred - n + 1):
            if label_subs[' '.join(pred_tokens[i: i + n])] > 0:
                num_matches += 1
                label_subs[' '.join(pred_tokens[i: i + n])] -= 1
        num_pred_ngrams = max(len_pred - n + 1, 1)
        score *= math.pow(num_matches / num_pred_ngrams, math.pow(0.5, n))
    return score

# Evaluation function (BLEU is computed over SentencePiece pieces)
def score(model, src_sentence, tgt_sentence, max_seq_len, sp_src, sp_tgt, in_vocab, out_vocab, device):
    pred_tokens = translate(model, src_sentence, max_seq_len, sp_src, sp_tgt, in_vocab, out_vocab, device).split(' ')
    label_tokens = preprocess_text(tgt_sentence, sp_tgt)
    bleu_score = bleu(pred_tokens, label_tokens, 4)  # BLEU with up to 4-grams
    print('BLEU: %.3f, Predicted: %s' % (bleu_score, ' '.join(pred_tokens)))
    return bleu_score
```

```python
# Example evaluation. The model translates French (sp_src) into English (sp_tgt),
# so the source sentence is French and the reference is English
bleu_scores = []
bleu_scores.append(score(model, "ils regardent .", "they are watching.", max_seq_len, sp_src, sp_tgt, in_vocab, out_vocab, device))
bleu_scores.append(score(model, "ils sont roumains .", "they are Romanian.", max_seq_len, sp_src, sp_tgt, in_vocab, out_vocab, device))
print('Average BLEU score:', sum(bleu_scores) / len(bleu_scores))
```

The code above uses new_fra.txt as the validation set. The model overfits, the BLEU score is 0, and it does not translate well.
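Two standard ingredients against this kind of overfitting are k-fold cross-validation (every sample is used for validation exactly once) and early stopping (stop once the validation loss has not improved for a number of epochs). The pure-Python sketch below illustrates both mechanics. The helpers `kfold_indices` and `early_stopping_epoch` are hypothetical stand-ins written for illustration, not scikit-learn's `KFold` implementation, and the loss values are made up, not results from this experiment.

```python
import random


def kfold_indices(n_samples, k, seed=0):
    """Yield (train_idx, val_idx) pairs; every sample lands in exactly one validation fold."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # shuffle once, as with KFold(shuffle=True)
    # The first n_samples % k folds receive one extra sample
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, val_idx
        start += size


def early_stopping_epoch(val_losses, patience):
    """Return (best_epoch, stop_epoch): stop after `patience` epochs without improvement."""
    best_loss, best_epoch, no_improvement = float('inf'), -1, 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch, no_improvement = loss, epoch, 0
        else:
            no_improvement += 1
        if no_improvement >= patience:
            return best_epoch, epoch
    return best_epoch, len(val_losses) - 1


folds = list(kfold_indices(n_samples=12, k=5))
print([len(val) for _, val in folds])  # [3, 3, 2, 2, 2]

# Made-up validation losses: they improve for three epochs, then rise as the model overfits
val_losses = [3.2, 2.7, 2.5, 2.6, 2.8, 2.9, 3.1, 3.3, 3.6]
print(early_stopping_epoch(val_losses, patience=5))  # (2, 7)
```

With a patience of 5, training stops at epoch index 7, and the checkpoint worth keeping is the one from epoch index 2, which is why the k-fold loop saves the model whenever the validation loss reaches a new minimum.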

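Below is a version that adds k-fold cross-validation, together with early stopping on the validation loss. Compared with the original model, it lowers the validation loss and generalizes somewhat better: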

import collections
import io
import math
import torch
from torch import nn
import torch.optim as optim
import torch.utils.data as Data
import sentencepiece as spm
from torch.cuda.amp import GradScaler, autocast
from sklearn.model_selection import KFold

# Configuration
PAD, BOS, EOS = '<pad>', '<bos>', '<eos>'
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
max_seq_len = 40
batch_size = 32
hidden_dim = 256
n_layers, n_heads, pf_dim, dropout = 4, 8, 1024, 0.1
n_epochs, lr = 20, 0.0005
k_folds = 5

# Train a SentencePiece model
def train_sentencepiece(input_file, model_prefix, vocab_size=1000):
    spm.SentencePieceTrainer.train(input=input_file, model_prefix=model_prefix, vocab_size=vocab_size)

# Load a SentencePiece model
def load_sentencepiece_model(model_file):
    sp = spm.SentencePieceProcessor()
    sp.load(model_file)
    return sp

# Tokenize text into subword pieces
def preprocess_text(text, sp):
    return sp.encode_as_pieces(text)

# Train and load the SentencePiece models (French source, English target)
train_sentencepiece('new_sampled2_europarl-v7.fr-en.fr', 'europarl_fr', vocab_size=1000)
train_sentencepiece('new_sampled2_europarl-v7.fr-en.en', 'europarl_en', vocab_size=1000)
sp_src = load_sentencepiece_model('europarl_fr.model')
sp_tgt = load_sentencepiece_model('europarl_en.model')

# Record a sequence's tokens in all_tokens (for building the vocabulary), append EOS and pad
# with PAD up to max_seq_len. Target sequences also get a leading BOS, so that the decoder
# input tgt[:, :-1] starts with BOS, the same token beam search starts decoding from.
def process_one_seq(seq_tokens, all_tokens, all_seqs, max_seq_len, add_bos=False):
    all_tokens.extend(seq_tokens)
    if add_bos:
        seq_tokens = [BOS] + seq_tokens
    seq_tokens = seq_tokens + [EOS] + [PAD] * (max_seq_len - len(seq_tokens) - 1)
    all_seqs.append(seq_tokens)

# Build the vocabulary and convert all sequences into an index tensor.
# Special tokens take the first indices (PAD = 0, BOS = 1, EOS = 2), so every index is < len(vocab).
def build_data(all_tokens, all_seqs):
    vocab = {PAD: 0, BOS: 1, EOS: 2}
    for word in all_tokens:
        if word not in vocab:
            vocab[word] = len(vocab)
    indices = [[vocab[w] for w in seq] for seq in all_seqs]
    return vocab, torch.tensor(indices)

# Read a pair of parallel files (one sentence per line) and preprocess them
def read_data(src_file, tgt_file, max_seq_len):
    in_tokens, out_tokens, in_seqs, out_seqs = [], [], [], []
    with io.open(src_file, encoding='utf-8') as f_src, io.open(tgt_file, encoding='utf-8') as f_tgt:
        src_lines = f_src.readlines()
        tgt_lines = f_tgt.readlines()
    for src_line, tgt_line in zip(src_lines, tgt_lines):
        src_tokens = preprocess_text(src_line.strip(), sp_src)
        tgt_tokens = preprocess_text(tgt_line.strip(), sp_tgt)
        # Skip pairs that do not fit: the source needs room for EOS, the target for BOS and EOS
        if len(src_tokens) > max_seq_len - 1 or len(tgt_tokens) > max_seq_len - 2:
            continue
        process_one_seq(src_tokens, in_tokens, in_seqs, max_seq_len)
        process_one_seq(tgt_tokens, out_tokens, out_seqs, max_seq_len, add_bos=True)
    return in_tokens, out_tokens, in_seqs, out_seqs

# Read and preprocess new1_fra.txt, which holds one tab-separated sentence pair per line
def read_validation_data(file, max_seq_len):
    in_tokens, out_tokens, in_seqs, out_seqs = [], [], [], []
    with io.open(file, encoding='utf-8') as f:
        lines = f.readlines()
    for line in lines:
        parts = line.strip().split('\t')
        if len(parts) < 2:
            continue
        src_text, tgt_text = parts[0], parts[1]
        src_tokens = preprocess_text(src_text, sp_src)
        tgt_tokens = preprocess_text(tgt_text, sp_tgt)
        if len(src_tokens) > max_seq_len - 1 or len(tgt_tokens) > max_seq_len - 2:
            continue
        process_one_seq(src_tokens, in_tokens, in_seqs, max_seq_len)
        process_one_seq(tgt_tokens, out_tokens, out_seqs, max_seq_len, add_bos=True)
    return in_tokens, out_tokens, in_seqs, out_seqs

# Transformer model definition
class Transformer(nn.Module):
    def __init__(self, input_dim, output_dim, hidden_dim, n_layers, n_heads, pf_dim, dropout):
        super().__init__()
        self.encoder = nn.TransformerEncoder(
            nn.TransformerEncoderLayer(d_model=hidden_dim, nhead=n_heads, dim_feedforward=pf_dim, dropout=dropout, batch_first=True),
            num_layers=n_layers
        )
        self.decoder = nn.TransformerDecoder(
            nn.TransformerDecoderLayer(d_model=hidden_dim, nhead=n_heads, dim_feedforward=pf_dim, dropout=dropout, batch_first=True),
            num_layers=n_layers
        )
        self.src_tok_emb = nn.Embedding(input_dim, hidden_dim)
        self.tgt_tok_emb = nn.Embedding(output_dim, hidden_dim)
        # Learnable positional embeddings (initialized to zero), one per position up to max_seq_len
        self.positional_encoding = nn.Parameter(torch.zeros(1, max_seq_len, hidden_dim))
        self.fc_out = nn.Linear(hidden_dim, output_dim)
        self.dropout = nn.Dropout(dropout)

    def forward(self, src, tgt):
        # Note: the causal mask is applied to the encoder as well, so each source position only
        # attends to itself and earlier positions; no padding masks are passed
        src_mask = self.generate_square_subsequent_mask(src.size(1)).to(src.device)
        tgt_mask = self.generate_square_subsequent_mask(tgt.size(1)).to(tgt.device)
        src_emb = self.dropout(self.src_tok_emb(src) + self.positional_encoding[:, :src.size(1), :])
        tgt_emb = self.dropout(self.tgt_tok_emb(tgt) + self.positional_encoding[:, :tgt.size(1), :])
        enc_src = self.encoder(src_emb, src_mask)
        output = self.decoder(tgt_emb, enc_src, tgt_mask)
        return self.fc_out(output)

    def generate_square_subsequent_mask(self, sz):
        # Float mask: 0.0 where position i may attend to j (j <= i), -inf above the diagonal
        mask = (torch.triu(torch.ones(sz, sz)) == 1).transpose(0, 1)
        mask = mask.float().masked_fill(mask == 0, float('-inf')).masked_fill(mask == 1, float(0.0))
        return mask

# Training function: one epoch with mixed precision (autocast + GradScaler)
def train_epoch(model, dataloader, criterion, optimizer, scaler, device, pad_idx, output_dim):
    model.train()
    epoch_loss = 0
    for src, tgt in dataloader:
        src, tgt = src.to(device), tgt.to(device)
        # Teacher forcing: the decoder reads tgt[:, :-1] and learns to predict tgt[:, 1:]
        tgt_input = tgt[:, :-1]
        tgt_output = tgt[:, 1:].contiguous().view(-1)
        optimizer.zero_grad()
        with autocast():
            output = model(src, tgt_input).view(-1, output_dim)
            loss = criterion(output, tgt_output)
        scaler.scale(loss).backward()
        scaler.step(optimizer)
        scaler.update()
        epoch_loss += loss.item()
    return epoch_loss / len(dataloader)

# Validation function: average loss over the validation set, without gradient tracking
def evaluate_epoch(model, dataloader, criterion, device, pad_idx, output_dim):
    model.eval()
    epoch_loss = 0
    with torch.no_grad():
        for src, tgt in dataloader:
            src, tgt = src.to(device), tgt.to(device)
            tgt_input = tgt[:, :-1]
            tgt_output = tgt[:, 1:].contiguous().view(-1)
            with autocast():
                output = model(src, tgt_input).view(-1, output_dim)
                loss = criterion(output, tgt_output)
            epoch_loss += loss.item()
    return epoch_loss / len(dataloader)

# Data files as (source file, target file) pairs
files = [
    ('new_sampled2_europarl-v7.fr-en.fr', 'new_sampled2_europarl-v7.fr-en.en'),
    ('new1_fra.txt', 'new1_fra.txt')  # note: new1_fra.txt has its own format (one tab-separated pair per line)
]

# Read all data
all_in_tokens, all_out_tokens, all_in_seqs, all_out_seqs = [], [], [], []
for src_file, tgt_file in files:
    if src_file == 'new1_fra.txt':
        in_tokens, out_tokens, in_seqs, out_seqs = read_validation_data(src_file, max_seq_len)
    else:
        in_tokens, out_tokens, in_seqs, out_seqs = read_data(src_file, tgt_file, max_seq_len)
    all_in_tokens.extend(in_tokens)
    all_out_tokens.extend(out_tokens)
    all_in_seqs.extend(in_seqs)
    all_out_seqs.extend(out_seqs)

# Build the vocabularies and the dataset
in_vocab, in_data = build_data(all_in_tokens, all_in_seqs)
out_vocab, out_data = build_data(all_out_tokens, all_out_seqs)
dataset = Data.TensorDataset(in_data, out_data)

# K-fold cross-validation
kf = KFold(n_splits=k_folds, shuffle=True)

def init_model(input_dim, output_dim):
    return Transformer(input_dim, output_dim, hidden_dim, n_layers, n_heads, pf_dim, dropout).to(device)

early_stopping_patience = 5

for fold, (train_idx, val_idx) in enumerate(kf.split(dataset)):
    print(f'Fold {fold + 1}')
    train_subsampler = Data.SubsetRandomSampler(train_idx)
    val_subsampler = Data.SubsetRandomSampler(val_idx)
    train_dataloader = Data.DataLoader(dataset, batch_size=batch_size, sampler=train_subsampler, num_workers=0)
    val_dataloader = Data.DataLoader(dataset, batch_size=batch_size, sampler=val_subsampler, num_workers=0)

    model = init_model(len(in_vocab), len(out_vocab))
    # The loss is computed over target tokens, so ignore the PAD index of the target vocabulary
    pad_idx = out_vocab[PAD]
    criterion = nn.CrossEntropyLoss(ignore_index=pad_idx)
    optimizer = optim.AdamW(model.parameters(), lr=lr)
    scaler = GradScaler()
    output_dim = len(out_vocab)
    best_val_loss = float('inf')
    no_improvement_epochs = 0

    for epoch in range(n_epochs):
        train_loss = train_epoch(model, train_dataloader, criterion, optimizer, scaler, device, pad_idx, output_dim)
        val_loss = evaluate_epoch(model, val_dataloader, criterion, device, pad_idx, output_dim)
        # Keep the checkpoint with the lowest validation loss in this fold
        if val_loss < best_val_loss:
            best_val_loss = val_loss
            torch.save(model.state_dict(), f'best_model_fold_{fold + 1}.pt')
            no_improvement_epochs = 0
        else:
            no_improvement_epochs += 1
        print(f'Epoch {epoch + 1}, Train Loss: {train_loss:.4f}, Validation Loss: {val_loss:.4f}')
        # Early stopping
        if no_improvement_epochs >= early_stopping_patience:
            print("Early stopping due to no improvement in validation loss")
            break

# Beam search decoding
def beam_search_decode(model, src_sentence, max_seq_len, sp_src, sp_tgt, in_vocab, out_vocab, device, beam_size=5):
    model.eval()
    # Map target indices back to tokens
    idx_to_token = {idx: tok for tok, idx in out_vocab.items()}
    # Tokenize the source, dropping pieces never seen in training (the vocabulary has no <unk> token)
    src_tokens = [tk for tk in preprocess_text(src_sentence, sp_src) if tk in in_vocab][:max_seq_len - 1]
    src_tokens = src_tokens + [EOS] + [PAD] * (max_seq_len - len(src_tokens) - 1)
    src_indices = torch.tensor([in_vocab[tk] for tk in src_tokens]).unsqueeze(0).to(device)
    with torch.no_grad():
        # Encode the source once, with the same mask as Transformer.forward
        src_mask = model.generate_square_subsequent_mask(src_indices.size(1)).to(device)
        src_emb = model.src_tok_emb(src_indices) + model.positional_encoding[:, :src_indices.size(1), :]
        enc_src = model.encoder(src_emb, src_mask)
        beams = [(0.0, [BOS])]  # (summed log-probability, token sequence)
        completed_beams = []
        for _ in range(max_seq_len):
            new_beams = []
            for score, seq in beams:
                tgt_indices = torch.tensor([[out_vocab[tk] for tk in seq]]).to(device)
                tgt_mask = model.generate_square_subsequent_mask(tgt_indices.size(1)).to(device)
                tgt_emb = model.tgt_tok_emb(tgt_indices) + model.positional_encoding[:, :tgt_indices.size(1), :]
                output = model.fc_out(model.decoder(tgt_emb, enc_src, tgt_mask))
                # Log-probabilities of the next token (last position), so that scores can be summed
                log_probs = torch.log_softmax(output[:, -1, :], dim=-1)
                topk_log_probs, topk_indices = torch.topk(log_probs, beam_size)
                for i in range(beam_size):
                    new_seq = seq + [idx_to_token[topk_indices[0][i].item()]]
                    new_score = score + topk_log_probs[0][i].item()
                    if new_seq[-1] == EOS:
                        completed_beams.append((new_score, new_seq))
                    else:
                        new_beams.append((new_score, new_seq))
            # Keep the beam_size best unfinished hypotheses
            beams = sorted(new_beams, key=lambda x: x[0], reverse=True)[:beam_size]
            if not beams:
                break
    if completed_beams:
        # Highest-scoring finished hypothesis, without BOS and EOS
        return ' '.join(max(completed_beams, key=lambda x: x[0])[1][1:-1])
    return ' '.join(beams[0][1][1:])  # no hypothesis finished: drop BOS only

# Translation function (beam search with beam_size=5)
def translate(model, src_sentence, max_seq_len, sp_src, sp_tgt, in_vocab, out_vocab, device):
    return beam_search_decode(model, src_sentence, max_seq_len, sp_src, sp_tgt, in_vocab, out_vocab, device, beam_size=5)

# BLEU scoring function
def bleu(pred_tokens, label_tokens, k):
    len_pred, len_label = len(pred_tokens), len(label_tokens)
    # Brevity penalty: penalizes predictions shorter than the reference
    score = math.exp(min(0, 1 - len_label / len_pred))
    for n in range(1, k + 1):
        num_matches, label_subs = 0, collections.defaultdict(int)
        # Count reference n-grams; joining with a space keeps different token splits distinct
        for i in range(len_label - n + 1):
            label_subs[' '.join(label_tokens[i: i + n])] += 1
        # Count predicted n-grams that match the reference (each reference n-gram used at most once)
        for i in range(len_pred - n + 1):
            if label_subs[' '.join(pred_tokens[i: i + n])] > 0:
                num_matches += 1
                label_subs[' '.join(pred_tokens[i: i + n])] -= 1
        num_pred_ngrams = max(len_pred - n + 1, 1)
        score *= math.pow(num_matches / num_pred_ngrams, math.pow(0.5, n))
    return score

# Evaluation function: translate src_sentence and score it against tgt_sentence
def score(model, src_sentence, tgt_sentence, max_seq_len, sp_src, sp_tgt, in_vocab, out_vocab, device):
    pred_tokens = translate(model, src_sentence, max_seq_len, sp_src, sp_tgt, in_vocab, out_vocab, device).split(' ')
    label_tokens = preprocess_text(tgt_sentence, sp_tgt)
    bleu_score = bleu(pred_tokens, label_tokens, 4)  # BLEU with n-grams up to length 4
    print('BLEU: %.3f, Predicted: %s' % (bleu_score, ' '.join(pred_tokens)))
    return bleu_score

# Example evaluation
model = init_model(len(in_vocab), len(out_vocab))
# Load the best model of the first fold as an example; map_location lets a GPU checkpoint load on CPU
model.load_state_dict(torch.load('best_model_fold_1.pt', map_location=device))
bleu_scores = []
bleu_scores.append(score(model, "they are watching.", "ils regardent .", max_seq_len, sp_src, sp_tgt, in_vocab, out_vocab, device))
bleu_scores.append(score(model, "they are Romanian.", "ils sont roumains .", max_seq_len, sp_src, sp_tgt, in_vocab, out_vocab, device))
print('Average BLEU score:', sum(bleu_scores) / len(bleu_scores))
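Beam search keeps the `beam_size` highest-scoring partial translations at every step. For the scores added up along a hypothesis to mean anything, they should be log-probabilities (the log-softmax of the decoder logits), so that a hypothesis's score is the log of its probability. The minimal pure-Python sketch below shows the search on a hypothetical toy table `TOY_MODEL`, in which next-token probabilities depend only on the previous token. It stands in for the Transformer decoder and is not part of the original code.

```python
import math

BOS, EOS = '<bos>', '<eos>'

# Hypothetical toy "decoder": next-token probabilities depend only on the previous token
TOY_MODEL = {
    BOS: {'they': 0.6, 'we': 0.4},
    'they': {'watch': 0.5, 'look': 0.5},
    'we': {'watch': 0.9, 'look': 0.1},
    'watch': {EOS: 1.0},
    'look': {EOS: 1.0},
}


def next_log_probs(seq):
    """Log-probabilities of the token that follows seq."""
    return {tok: math.log(p) for tok, p in TOY_MODEL[seq[-1]].items()}


def beam_search(beam_size, max_len):
    beams = [(0.0, [BOS])]  # (summed log-probability, tokens)
    completed = []
    for _ in range(max_len):
        candidates = []
        for score, seq in beams:
            # Expand each hypothesis with its beam_size most likely next tokens
            top = sorted(next_log_probs(seq).items(), key=lambda kv: kv[1], reverse=True)[:beam_size]
            for tok, log_p in top:
                cand = (score + log_p, seq + [tok])
                if tok == EOS:
                    completed.append(cand)
                else:
                    candidates.append(cand)
        # Keep only the beam_size best unfinished hypotheses
        beams = sorted(candidates, key=lambda c: c[0], reverse=True)[:beam_size]
        if not beams:
            break
    best_score, best_seq = max(completed or beams, key=lambda c: c[0])
    tokens = best_seq[1:-1] if best_seq[-1] == EOS else best_seq[1:]
    return tokens, best_score


print(beam_search(beam_size=1, max_len=10))  # tokens ['they', 'watch'], score log(0.6) + log(0.5)
print(beam_search(beam_size=2, max_len=10))  # tokens ['we', 'watch'], score log(0.4) + log(0.9)
```

With `beam_size=1` the search is greedy: it commits to "they" (0.6) and ends with total probability 0.6 × 0.5 = 0.30. With `beam_size=2` it keeps "we" alive and finds "we watch" with 0.4 × 0.9 = 0.36.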

Results: in this experiment the BLEU score is 0, possibly because of problems with the dataset.
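One property of the `bleu` function used here helps when reading a score of exactly 0. The function multiplies the brevity penalty by p_n^(1/2^n) for every n from 1 to k. A single zero precision therefore makes the whole score 0. With k = 4, any prediction or reference shorter than four tokens has no matching 4-gram, so it scores 0 even when every word is correct. The self-contained copy below, which joins n-grams with a space, shows this on hand-made token lists. These lists are illustrative and are not outputs of the trained model.

```python
import collections
import math


def bleu(pred_tokens, label_tokens, k):
    """Brevity penalty times prod over n = 1..k of p_n ** (1 / 2**n), as in the function above."""
    len_pred, len_label = len(pred_tokens), len(label_tokens)
    score = math.exp(min(0, 1 - len_label / len_pred))
    for n in range(1, k + 1):
        num_matches, label_subs = 0, collections.defaultdict(int)
        for i in range(len_label - n + 1):
            label_subs[' '.join(label_tokens[i: i + n])] += 1
        for i in range(len_pred - n + 1):
            if label_subs[' '.join(pred_tokens[i: i + n])] > 0:
                num_matches += 1
                label_subs[' '.join(pred_tokens[i: i + n])] -= 1
        score *= math.pow(num_matches / max(len_pred - n + 1, 1), math.pow(0.5, n))
    return score


label = ['they', 'are', 'watching', '.']
print(bleu(['they', 'are', 'watching', '.'], label, 4))  # 1.0: exact match
print(bleu(['they', 'are', 'watching'], label, 4))       # 0.0: a 3-token prediction has no 4-gram
print(bleu(['they', 'are', 'watching'], label, 2))       # exp(-1/3), only the brevity penalty remains
```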

References

[1] Papineni, K., Roukos, S., Ward, T., & Zhu, W. J. (2002, July). BLEU: a method for automatic evaluation of machine translation. In Proceedings of the 40th annual meeting on association for computational linguistics (pp. 311-318). Association for Computational Linguistics.

[2] WMT. Translation Task - ACL 2014 Ninth Workshop on Statistical Machine Translation.

[3] Tatoeba Project. Tab-delimited Bilingual Sentence Pairs from the Tatoeba Project (Good for Anki and Similar Flashcard Applications).
