CNN(卷积神经网络)介绍

什么是CNN?

首先什么是CNN呢?我们在这里模仿儿童的学习方式,当小孩子学习一个陌生东西的时候,往往会从问题开始,这里我们拿CNN做对比,来介绍什么是CNN。

从上面的对话,我们知道CNN的全称是"Convolutional Neural Network"(卷积神经网络)。而神经网络是一种模仿生物神经网络(动物的中枢神经系统,特别是大脑)结构和功能的数学模型或计算模型。神经网络由大量的人工神经元组成,按不同的连接方式构建不同的网络。CNN是其中的一种,还有GAN(生成对抗网络),RNN(递归神经网络)等,神经网络能够类似人一样具有简单的决定能力和简单的判断能力,在图像和语音识别方面能够给出更好的结果。

CNN的原理

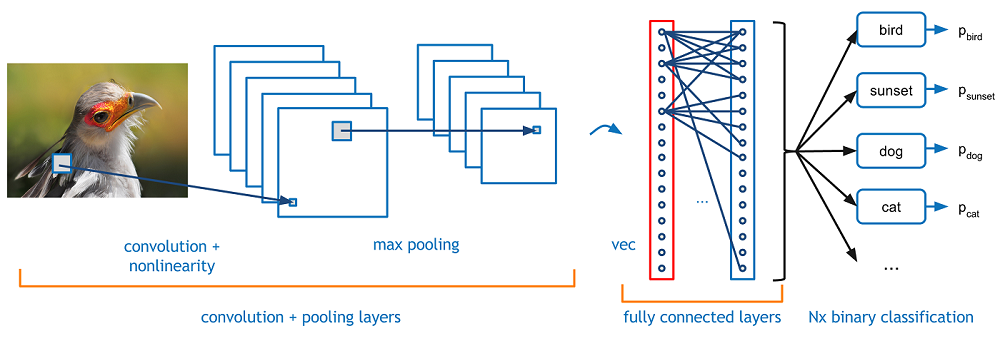

CNN被广泛应用在图像识别领域,那么CNN是如何实现图像识别的呢?我们根据图中的例子来解释CNN的原理。

CNN是一种人工神经网络,CNN的结构可以分为3层:

- 卷积层(Convolutional Layer) - 主要作用是提取特征。

- 池化层(Max Pooling Layer) - 主要作用是下采样(downsampling),却不会损坏识别结果。

- 全连接层(Fully Connected Layer) - 主要作用是分类。

我们可以拿人类来做类比,比如你现在看到上图中的小鸟,人类如何识别它就是鸟的呢?首先你判断鸟的嘴是尖的,全身有羽毛和翅膀,有尾巴。然后通过这些联系起来判断这是一只鸟。而CNN的原理也类似,通过卷积层来查找特征,然后通过全连接层来做分类判断这是一只鸟,而池化层则是为了让训练的参数更少,在保持采样不变的情况下,忽略掉一些信息。

卷积层(Convolutional Layer)

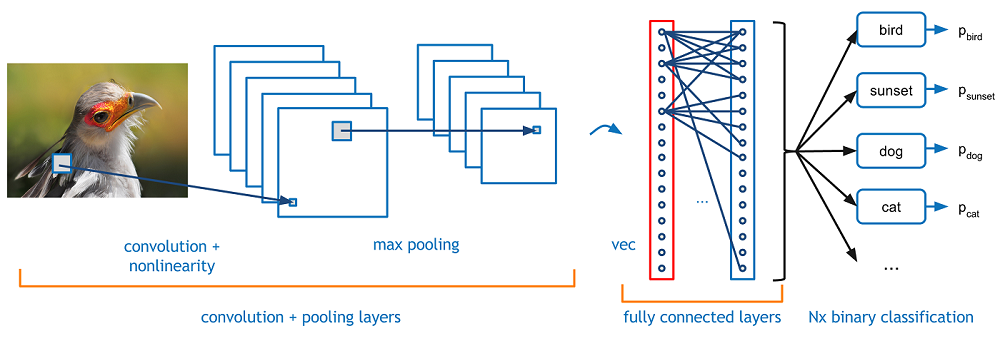

那么卷基层是如何提取特征的呢?我们都知道卷积就是2个函数的叠加,应用在图像上,则可以理解为拿一个滤镜放在图像上,找出图像中的某些特征,而我们需要找到很多特征才能区分某一物体,所以我们会有很多滤镜,通过这些滤镜的组合,我们可以得出很多的特征。

首先一张图片在计算机中保存的格式为一个个的像素,比如一张长度为1080,宽度为1024的图片,总共包含了1080 * 1024的像素,如果为RGB图片,因为RGB图片由3种颜色叠加而成,包含3个通道,因此我们需要用1080 * 1024 * 3的数组来表示RGB图片。

我们先从简单的情况开始考虑,假设我们有一组灰度图片,这样图片就可以表示为一个矩阵,假设我们的图片大小为5 * 5,那么我们就可以得到一个5 * 5的矩阵,接下来,我们用一组过滤器(Filter)来对图片过滤,过滤的过程就是求卷积的过程。假设我们的Filter的大小为3 * 3,我们从图片的左上角开始移动Filter,并且把每次矩阵相乘的结果记录下来。可以通过下面的过程来演示。

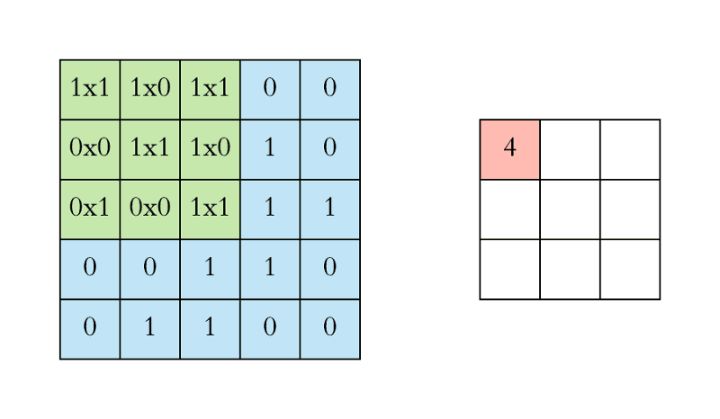

每次Filter从矩阵的左上角开始移动,每次移动的步长是1,从左到右,从上到下,依次移动到矩阵末尾之后结束,每次都把Filter和矩阵对应的区域做乘法,得出一个新的矩阵。这其实就是做卷积的过程。而Filer的选择非常关键,Filter决定了过滤方式,通过不同的Filter会得到不同的特征。举一个例子就是:

我们选择了2种Filter分别对图中的矩阵做卷积,可以看到值越大的就表示找到的特征越匹配,值越小的就表示找到的特征越偏离。Filter1主要是找到为"|"形状的特征,可以看到找到1处,转换后相乘值为3的网格就表示原始的图案中有"|",而Filter2则表示找到"\"形状的特征,我们可以看到在图中可以找到2处。拿真实的图像举例子,我们经过卷积层的处理之后,得到如下的一些特征结果:

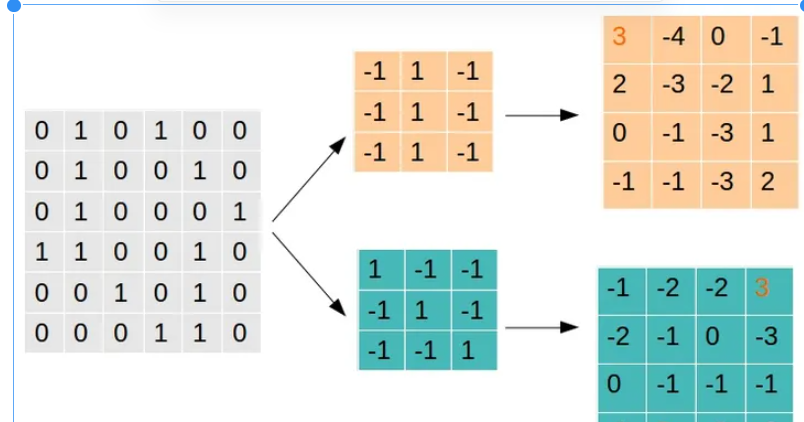

池化层(Max Pooling Layer)

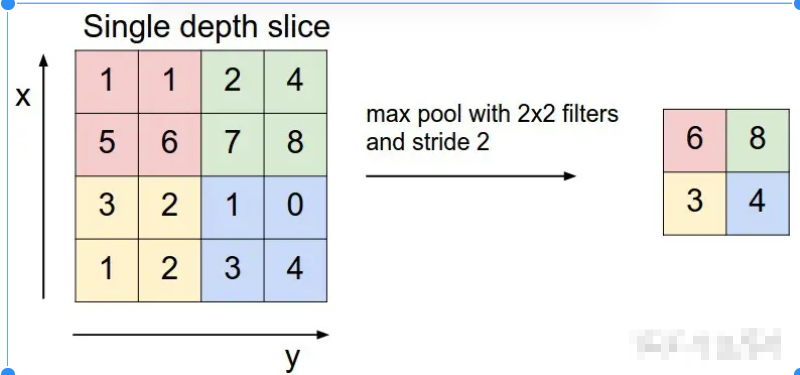

经过卷积层处理的特征是否就可以直接用来分类了呢,答案是不能。我们假设一张图片的大小为500 * 500,经过50个Filter的卷积层之后,得到的结果为500 * 500 * 50",维度非常大,我们需要减少数据大小,而不会对识别的结果产生影响,即对卷积层的输出做下采样(downsampling),这时候就引入了池化层。池化层的原理很简单,先看一个例子:

我们先从右边看起,可以看到把一个4 * 4的矩阵按照2 * 2做切分,每个2 * 2的矩阵里,我们取最大的值保存下来,红色的矩阵里面最大值为6,所以输出为6,绿色的矩阵最大值为8,输出为8,黄色的为3,蓝色的为4,。这样我们就把原来4 * 4的矩阵变为了一个2 * 2的矩阵。在看左边,我们发现原来224 * 224的矩阵,缩小为112 * 112了,减少了一半大小。

那么为什么这样做可行呢?丢失的一部分数据会不会对结果有影响,实际上,池化层不会对数据丢失产生影响,因为我们每次保留的输出都是局部最显著的一个输出,而池化之后,最显著的特征并没。我们只保留了认为最显著的特征,而把其他无用的信息丢掉,来减少运算。池化层的引入还保证了平移不变性,即同样的图像经过翻转变形之后,通过池化层,可以得到相似的结果。

既然是降采样,那么是否有其他方法实现降采样,也能达到同样的效果呢?当然有,通过其它的降采样方式,我们同样可以得到和池化层相同的结果,因此就可以拿这种方式替换掉池化层,可以起到相同的效果。

通常卷积层和池化层会重复多次形成具有多个隐藏层的网络,俗称深度神经网络。

全连接层(Fully Connected Layer)

全连接层的作用主要是进行分类。前面通过卷积和池化层得出的特征,在全连接层对这些总结好的特征做分类。全连接层就是一个完全连接的神经网络,根据权重每个神经元反馈的比重不一样,最后通过调整权重和网络得到分类的结果。

因为全连接层占用了神经网络80%的参数,因此对全连接层的优化就显得至关重要,现在也有用平均值来做最后的分类的。

基本概念

- 卷积核 - 卷积核就是图像处理时,给定输入图像,在输出图像中每一个像素是输入图像中一个小区域中像素的加权平均,其中权值由一个函数定义,这个函数称为卷积核。[kernel](https://en.wikipedia.org/wiki/Kernel_(image_processing))

- 卷积 - 卷积可以对应到2个函数叠加,因此用一个filter和图片叠加就可以求出整个图片的情况,可以用在图像的边缘检测,图片锐化,模糊等方面。[Convolution](https://en.wikipedia.org/wiki/Convolution)

论文汇总

以下内容引用自[A Beginner's Guide to Convolutional Neural Networks (CNNs)](A Beginner's Guide to Convolutional Neural Networks (CNNs)),主要是为了整理和学习相关内容,从新整理了一遍。

ImageNet分类

- Microsoft (Deep Residual Learning) [Paper](https://arxiv.org/pdf/1512.03385v1.pdf) [Slide](http://image-net.org/challenges/talks/ilsvrc2015_deep_residual_learning_kaiminghe.pdf)

- Kaiming He, Xiangyu Zhang, Shaoqing Ren, Jian Sun, Deep Residual Learning for Image Recognition, arXiv:1512.03385.

- Microsoft (PReLu/Weight initialization) [Paper](https://arxiv.org/pdf/1502.01852.pdf)

- Kaiming He, Xiangyu Zhang, Shaoqing Ren, Jian Sun, Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification, arXiv:1502.01852.

- Batch Normalization [Paper](https://arxiv.org/pdf/1502.03167.pdf)

- Sergey Ioffe, Christian Szegedy, Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift, arXiv:1502.03167.

- GoogLeNet [Paper](http://arxiv.org/pdf/1409.4842)

- Christian Szegedy, Wei Liu, Yangqing Jia, Pierre Sermanet, Scott Reed, Dragomir Anguelov, Dumitru Erhan, Vincent Vanhoucke, Andrew Rabinovich, CVPR, 2015.

- VGG-Net [Web](Very Deep CNNS for Large-Scale Visual Recognition) [Paper](https://arxiv.org/pdf/1409.1556.pdf)

- Karen Simonyan and Andrew Zisserman, Very Deep Convolutional Networks for Large-Scale Visual Recognition, ICLR, 2015.

- AlexNet [Paper](NIPS 2012 Proceedings)

- Alex Krizhevsky, Ilya Sutskever, Geoffrey E. Hinton, ImageNet Classification with Deep Convolutional Neural Networks, NIPS, 2012.

物体检测(Object Detection)

- PVANET [paper](https://arxiv.org/pdf/1608.08021.pdf) [Code](sanghoon/pva-faster-rcnn)

- Kye-Hyeon Kim, Sanghoon Hong, Byungseok Roh, Yeongjae Cheon, Minje Park, PVANET: Deep but Lightweight Neural Networks for Real-time Object Detection, arXiv:1608.08021

- OverFeat, NYU [Paper](https://arxiv.org/pdf/1312.6229.pdf)

- OverFeat: Integrated Recognition, Localization and Detection using Convolutional Networks, ICLR, 2014.

- R-CNN, UC Berkeley [Paper-CVPR14](https://www.cv-foundation.org/openaccess/content_cvpr_2014/papers/Girshick_Rich_Feature_Hierarchies_2014_CVPR_paper.pdf) [Paper-arXiv14](https://arxiv.org/pdf/1311.2524.pdf)

- Ross Girshick, Jeff Donahue, Trevor Darrell, Jitendra Malik, Rich feature hierarchies for accurate object detection and semantic segmentation, CVPR, 2014.

- SPP, Microsoft Research [Paper](https://arxiv.org/pdf/1406.4729.pdf)

- Kaiming He, Xiangyu Zhang, Shaoqing Ren, Jian Sun, Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition, ECCV, 2014.

- Fast R-CNN, Microsoft Research [Paper](https://arxiv.org/pdf/1504.08083.pdf)

- Ross Girshick, Fast R-CNN, arXiv:1504.08083.

- Faster R-CNN, Microsoft Research [Paper](https://arxiv.org/pdf/1506.01497.pdf)

- Shaoqing Ren, Kaiming He, Ross Girshick, Jian Sun, Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks, arXiv:1506.01497.

- R-CNN minus R, Oxford [Paper](https://arxiv.org/pdf/1506.06981.pdf)

- Karel Lenc, Andrea Vedaldi, R-CNN minus R, arXiv:1506.06981.

- End-to-end people detection in crowded scenes [Paper](End-to-end people detection in crowded scenes)

- Russell Stewart, Mykhaylo Andriluka, End-to-end people detection in crowded scenes, arXiv:1506.04878.

- You Only Look Once: Unified, Real-Time object Detection [Paper](You Only Look Once: Unified, Real-Time Object Detection), [Paper Version 2](YOLO9000: Better, Faster, Stronger), [C Code](pjreddie/darknet), [Tensorflow Code](thtrieu/darkflow)

- Joseph Redmon, Santosh Divvala, Ross Girshick, Ali Farhadi, You Only Look Once: Unified, Real-Time Object Detection, arXiv:1506.02640

- Joseph Redmon, Ali Farhadi (Version 2)

- Inside-Outside Net [Paper](Inside-Outside Net: Detecting Objects in Context with Skip Pooling and Recurrent Neural Networks)

- Sean Bell, C. Lawrence Zitnick, Kavita Bala, Ross Girshick, Inside-Outside Net: Detecting Objects in Context with Skip Pooling and Recurrent Neural Networks

- Deep Residual Network (Current State-of-the-Art) [Paper](Deep Residual Learning for Image Recognition)

- Kaiming He, Xiangyu Zhang, Shaoqing Ren, Jian Sun, Deep Residual Learning for Image Recognition

- Weakly Supervised Object Localization with Multi-fold Multiple instance Learning [Paper](https://arxiv.org/pdf/1503.00949.pdf)

- R-FCN [Paper](R-FCN: Object Detection via Region-based Fully Convolutional Networks) [Code](daijifeng001/R-FCN)

- Jifeng Dai, Yi Li, Kaiming He, Jian Sun, R-FCN: Object Detection via Region-based Fully Convolutional Networks

- SSD [Paper](https://arxiv.org/pdf/1512.02325v2.pdf) [Code](weiliu89/caffe)

- Wei Liu1, Dragomir Anguelov, Dumitru Erhan, Christian Szegedy, Scott Reed, Cheng-Yang Fu, Alexander C. Berg, SSD: Single Shot MultiBox Detector, arXiv:1512.02325

- Speed/accuracy trade-offs for modern convolutional object detectors [Paper](https://arxiv.org/pdf/1611.10012v1.pdf)

- Jonathan Huang, Vivek Rathod, Chen Sun, Menglong Zhu, Anoop Korattikara, Alireza Fathi, Ian Fischer, Zbigniew Wojna, Yang Song, Sergio Guadarrama, Kevin Murphy, Google Research, arXiv:1611.10012

视频分类(Video Classification)

- Nicolas Ballas, Li Yao, Pal Chris, Aaron Courville, “Delving Deeper into Convolutional Networks for Learning Video Representations”, ICLR 2016. [Paper](https://arxiv.org/pdf/1511.06432v4.pdf)

- Michael Mathieu, camille couprie, Yann Lecun, “Deep Multi Scale Video Prediction Beyond Mean Square Error”, ICLR 2016. [Paper](https://arxiv.org/pdf/1511.05440v6.pdf)

对象跟踪(Object Tracking)

- Seunghoon Hong, Tackgeun You, Suha Kwak, Bohyung Han, Online Tracking by Learning Discriminative Saliency Map with Convolutional Neural Network, arXiv:1502.06796. [Paper](https://arxiv.org/pdf/1502.06796.pdf)

- Hanxi Li, Yi Li and Fatih Porikli, DeepTrack: Learning Discriminative Feature Representations by Convolutional Neural Networks for Visual Tracking, BMVC, 2014. [Paper](http://www.bmva.org/bmvc/2014/files/paper028.pdf)

- N Wang, DY Yeung, Learning a Deep Compact Image Representation for Visual Tracking, NIPS, 2013. [Paper](http://winsty.net/papers/dlt.pdf)

- N Wang, DY Yeung, Learning a Deep Compact Image Representation for Visual Tracking, NIPS, 2013. [Paper](http://winsty.net/papers/dlt.pdf)

- Chao Ma, Jia-Bin Huang, Xiaokang Yang and Ming-Hsuan Yang, Hierarchical Convolutional Features for Visual Tracking, ICCV 2015 [Paper](https://www.cv-foundation.org/openaccess/content_iccv_2015/papers/Ma_Hierarchical_Convolutional_Features_ICCV_2015_paper.pdf) [Code](jbhuang0604/CF2)

- Lijun Wang, Wanli Ouyang, Xiaogang Wang, and Huchuan Lu, Visual Tracking with fully Convolutional Networks, ICCV 2015 [Paper](http://202.118.75.4/lu/Paper/ICCV2015/iccv15_lijun.pdf) [Code](scott89/FCNT)

- Hyeonseob Namand Bohyung Han, Learning Multi-Domain Convolutional Neural Networks for Visual Tracking, [Paper](https://arxiv.org/pdf/1510.07945.pdf) [Code](HyeonseobNam/MDNet) [Project Page](Learning Multi-Domain Convolutional Neural Networks for Visual Tracking)

底层视觉(Low-Level Vision)

超分辨率(Super-Resolution)

- 迭代图像重建(Iterative Image Reconstruction)

- Sven Behnke: Learning Iterative Image Reconstruction. IJCAI, 2001. [Paper](http://www.ais.uni-bonn.de/behnke/papers/ijcai01.pdf)

- Sven Behnke: Learning Iterative Image Reconstruction in the Neural Abstraction Pyramid. International Journal of Computational Intelligence and Applications, vol. 1, no. 4, pp. 427-438, 2001. [Paper](http://www.ais.uni-bonn.de/behnke/papers/ijcia01.pdf)

- Super-Resolution (SRCNN) [Web](Learning a Deep Convolutional Network for Image Super-Resolution) [Paper-ECCV14](http://personal.ie.cuhk.edu.hk/~ccloy/files/eccv_2014_deepresolution.pdf) [Paper-arXiv15](https://arxiv.org/pdf/1501.00092.pdf)

- Chao Dong, Chen Change Loy, Kaiming He, Xiaoou Tang, Learning a Deep Convolutional Network for Image Super-Resolution, ECCV, 2014.

- Chao Dong, Chen Change Loy, Kaiming He, Xiaoou Tang, Image Super-Resolution Using Deep Convolutional Networks, arXiv:1501.00092.

- Very Deep Super-Resolution

- Jiwon Kim, Jung Kwon Lee, Kyoung Mu Lee, Accurate Image Super-Resolution Using Very Deep Convolutional Networks, arXiv:1511.04587, 2015. [Paper](Accurate Image Super-Resolution Using Very Deep Convolutional Networks)

- Deeply-Recursive Convolutional Network

- Jiwon Kim, Jung Kwon Lee, Kyoung Mu Lee, Deeply-Recursive Convolutional Network for Image Super-Resolution, arXiv:1511.04491, 2015. [Paper](Deeply-Recursive Convolutional Network for Image Super-Resolution)

- Casade-Sparse-Coding-Network

- Zhaowen Wang, Ding Liu, Wei Han, Jianchao Yang and Thomas S. Huang, Deep Networks for Image Super-Resolution with Sparse Prior. ICCV, 2015. [Paper](http://www.ifp.illinois.edu/~dingliu2/iccv15/iccv15.pdf) [Code](Deep Networks for Image Super-Resolution with Sparse Prior)

- Perceptual Losses for Super-Resolution

- Justin Johnson, Alexandre Alahi, Li Fei-Fei, Perceptual Losses for Real-Time Style Transfer and Super-Resolution, arXiv:1603.08155, 2016. [Paper](Perceptual Losses for Real-Time Style Transfer and Super-Resolution) [Supplementary](https://cs.stanford.edu/people/jcjohns/papers/fast-style/fast-style-supp.pdf)

- SRGAN

- Christian Ledig, Lucas Theis, Ferenc Huszar, Jose Caballero, Andrew Cunningham, Alejandro Acosta, Andrew Aitken, Alykhan Tejani, Johannes Totz, Zehan Wang, Wenzhe Shi, Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network, arXiv:1609.04802v3, 2016. [Paper](https://arxiv.org/pdf/1609.04802v3.pdf)

- Others

- Osendorfer, Christian, Hubert Soyer, and Patrick van der Smagt, Image Super-Resolution with Fast Approximate Convolutional Sparse Coding, ICONIP, 2014. [Paper ICONIP-2014](http://brml.org/uploads/tx_sibibtex/281.pdf)

其他应用(Other Applications)

- Optical Flow (FlowNet) [Paper](http://arxiv.org/pdf/1504.06852)

- Philipp Fischer, Alexey Dosovitskiy, Eddy Ilg, Philip Häusser, Caner Hazırbaş, Vladimir Golkov, Patrick van der Smagt, Daniel Cremers, Thomas Brox, FlowNet: Learning Optical Flow with Convolutional Networks, arXiv:1504.06852.

- Compression Artifacts Reduction [Paper-arXiv15](http://arxiv.org/pdf/1504.06993)

- Chao Dong, Yubin Deng, Chen Change Loy, Xiaoou Tang, Compression Artifacts Reduction by a Deep Convolutional Network, arXiv:1504.06993.

- Blur Removal

- Christian J. Schuler, Michael Hirsch, Stefan Harmeling, Bernhard Schölkopf, Learning to Deblur, arXiv:1406.7444 [Paper](https://arxiv.org/pdf/1406.7444.pdf)

- Jian Sun, Wenfei Cao, Zongben Xu, Jean Ponce, Learning a Convolutional Neural Network for Non-uniform Motion Blur Removal, CVPR, 2015 [Paper](https://arxiv.org/pdf/1503.00593.pdf)

- Image Deconvolution [Web](Deep Deconvolution) [Paper](http://lxu.me/mypapers/dcnn_nips14.pdf)

- Li Xu, Jimmy SJ. Ren, Ce Liu, Jiaya Jia, Deep Convolutional Neural Network for Image Deconvolution, NIPS, 2014.

- Deep Edge-Aware Filter [Paper](http://proceedings.mlr.press/v37/xub15.pdf)

- Li Xu, Jimmy SJ. Ren, Qiong Yan, Renjie Liao, Jiaya Jia, Deep Edge-Aware Filters, ICML, 2015.

- Computing the Stereo Matching Cost with a Convolutional Neural Network [Paper](https://www.cv-foundation.org/openaccess/content_cvpr_2015/papers/Zbontar_Computing_the_Stereo_2015_CVPR_paper.pdf)

- Jure Žbontar, Yann LeCun, Computing the Stereo Matching Cost with a Convolutional Neural Network, CVPR, 2015.

- Colorful Image Colorization Richard Zhang, Phillip Isola, Alexei A. Efros, ECCV, 2016 [Paper](http://arxiv.org/pdf/1603.08511.pdf), [Code](richzhang/colorization)

- Ryan Dahl, [Blog](Automatic Colorization)

- Feature Learning by Inpainting [Paper](https://arxiv.org/pdf/1604.07379v1.pdf) [Code](pathak22/context-encoder)

- Deepak Pathak, Philipp Krahenbuhl, Jeff Donahue, Trevor Darrell, Alexei A. Efros, Context Encoders: Feature Learning by Inpainting, CVPR, 2016

边缘检测(Edge Detection)

- Saining Xie, Zhuowen Tu, Holistically-Nested Edge Detection, arXiv:1504.06375.

- DeepEdge [Paper](http://arxiv.org/pdf/1412.1123)

- Gedas Bertasius, Jianbo Shi, Lorenzo Torresani, DeepEdge: A Multi-Scale Bifurcated Deep Network for Top-Down Contour Detection, CVPR, 2015.

- DeepContour [Paper](http://mc.eistar.net/UpLoadFiles/Papers/DeepContour_cvpr15.pdf)

- Wei Shen, Xinggang Wang, Yan Wang, Xiang Bai, Zhijiang Zhang, DeepContour: A Deep Convolutional Feature Learned by Positive-Sharing Loss for Contour Detection, CVPR, 2015.

语义分割(Semantic Segmentation)

- Adelaide

- Guosheng Lin, Chunhua Shen, Ian Reid, Anton van dan Hengel, Efficient piecewise training of deep structured models for semantic segmentation, arXiv:1504.01013. [Paper](https://arxiv.org/pdf/1504.01013.pdf) (1st ranked in VOC2012)

- Guosheng Lin, Chunhua Shen, Ian Reid, Anton van den Hengel, Deeply Learning the Messages in Message Passing Inference, arXiv:1508.02108. [Paper](https://arxiv.org/pdf/1506.02108.pdf) (4th ranked in VOC2012)

- Deep Parsing Network (DPN)

- Ziwei Liu, Xiaoxiao Li, Ping Luo, Chen Change Loy, Xiaoou Tang, Semantic Image Segmentation via Deep Parsing Network, arXiv:1509.02634 / ICCV 2015 [Paper](https://arxiv.org/pdf/1509.02634.pdf) (2nd ranked in VOC 2012)

- CentraleSuperBoundaries, INRIA [Paper](https://arxiv.org/pdf/1511.07386.pdf)

- Iasonas Kokkinos, Surpassing Humans in Boundary Detection using Deep Learning, arXiv:1411.07386 (4th ranked in VOC 2012)

- BoxSup [Paper](http://arxiv.org/pdf/1503.01640.pdf)

- Jifeng Dai, Kaiming He, Jian Sun, BoxSup: Exploiting Bounding Boxes to Supervise Convolutional Networks for Semantic Segmentation, arXiv:1503.01640. (6th ranked in VOC2012)

- POSTECH

- Hyeonwoo Noh, Seunghoon Hong, Bohyung Han, Learning Deconvolution Network for Semantic Segmentation, arXiv:1505.04366. [Paper](https://arxiv.org/pdf/1505.04366.pdf) (7th ranked in VOC2012)

- Seunghoon Hong, Hyeonwoo Noh, Bohyung Han, Decoupled Deep Neural Network for Semi-supervised Semantic Segmentation, arXiv:1506.04924. [Paper](https://arxiv.org/pdf/1506.04924.pdf)

- Seunghoon Hong,Junhyuk Oh, Bohyung Han, and Honglak Lee, Learning Transferrable Knowledge for Semantic Segmentation with Deep Convolutional Neural Network, arXiv:1512.07928 [Paper](https://arxiv.org/pdf/1512.07928.pdf) [Project Page](Learning Transferrable Knowledge for Semantic Segmentation with Deep Convolutional Neural Network)

- Conditional Random Fields as Recurrent Neural Networks [Paper](https://arxiv.org/pdf/1502.03240.pdf)

- Shuai Zheng, Sadeep Jayasumana, Bernardino Romera-Paredes, Vibhav Vineet, Zhizhong Su, Dalong Du, Chang Huang, Philip H. S. Torr, Conditional Random Fields as Recurrent Neural Networks, arXiv:1502.03240. (8th ranked in VOC2012)

- DeepLab

- Liang-Chieh Chen, George Papandreou, Kevin Murphy, Alan L. Yuille, Weakly-and semi-supervised learning of a DCNN for semantic image segmentation, arXiv:1502.02734. [Paper](https://arxiv.org/pdf/1502.02734.pdf) (9th ranked in VOC2012)

- Zoom-out [Paper](https://www.cv-foundation.org/openaccess/content_cvpr_2015/papers/Mostajabi_Feedforward_Semantic_Segmentation_2015_CVPR_paper.pdf)

- Mohammadreza Mostajabi, Payman Yadollahpour, Gregory Shakhnarovich, Feedforward Semantic Segmentation With Zoom-Out Features, CVPR, 2015

- Joint Calibration [Paper](https://arxiv.org/pdf/1507.01581.pdf)

- Holger Caesar, Jasper Uijlings, Vittorio Ferrari, Joint Calibration for Semantic Segmentation, arXiv:1507.01581.

- Fully Convolutional Networks for Semantic Segmentation [Paper-arXiv15](https://arxiv.org/pdf/1411.4038.pdf)

- Jonathan Long, Evan Shelhamer, Trevor Darrell, Fully Convolutional Networks for Semantic Segmentation, CVPR, 2015.

- Hypercolumn [Paper](https://www.cv-foundation.org/openaccess/content_cvpr_2015/papers/Hariharan_Hypercolumns_for_Object_2015_CVPR_paper.pdf)

- Bharath Hariharan, Pablo Arbelaez, Ross Girshick, Jitendra Malik, Hypercolumns for Object Segmentation and Fine-Grained Localization, CVPR, 2015.

- Deep Hierarchical Parsing [Paper](https://www.cv-foundation.org/openaccess/content_cvpr_2015/papers/Sharma_Deep_Hierarchical_Parsing_2015_CVPR_paper.pdf)

- Abhishek Sharma, Oncel Tuzel, David W. Jacobs, Deep Hierarchical Parsing for Semantic Segmentation, CVPR, 2015.

- Learning Hierarchical Features for Scene Labeling [Paper-ICML12](http://yann.lecun.com/exdb/publis/pdf/farabet-icml-12.pdf) [Paper-PAMI13](http://yann.lecun.com/exdb/publis/pdf/farabet-pami-13.pdf)

- Clement Farabet, Camille Couprie, Laurent Najman, Yann LeCun, Scene Parsing with Multiscale Feature Learning, Purity Trees, and Optimal Covers, ICML, 2012.

- Clement Farabet, Camille Couprie, Laurent Najman, Yann LeCun, Learning Hierarchical Features for Scene Labeling, PAMI, 2013.

- University of Cambridge [Web](SegNet)

- Vijay Badrinarayanan, Alex Kendall and Roberto Cipolla “SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation.” arXiv preprint arXiv:1511.00561, 2015. [Paper](SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation)

- Alex Kendall, Vijay Badrinarayanan and Roberto Cipolla “Bayesian SegNet: Model Uncertainty in Deep Convolutional Encoder-Decoder Architectures for Scene Understanding.” arXiv preprint arXiv:1511.02680, 2015. [Paper](SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation)

- Princeton

- Fisher Yu, Vladlen Koltun, “Multi-Scale Context Aggregation by Dilated Convolutions”, ICLR 2016, [Paper](https://arxiv.org/pdf/1511.07122v2.pdf)

- Univ. of Washington, Allen AI

- Hamid Izadinia, Fereshteh Sadeghi, Santosh Kumar Divvala, Yejin Choi, Ali Farhadi, “Segment-Phrase Table for Semantic Segmentation, Visual Entailment and Paraphrasing”, ICCV, 2015, [Paper](https://www.cv-foundation.org/openaccess/content_iccv_2015/papers/Izadinia_Segment-Phrase_Table_for_ICCV_2015_paper.pdf)

- INRIA

- Iasonas Kokkinos, “Pusing the Boundaries of Boundary Detection Using deep Learning”, ICLR 2016, [Paper](http://arxiv.org/pdf/1511.07386v2.pdf)

- UCSB

- Niloufar Pourian, S. Karthikeyan, and B.S. Manjunath, “Weakly supervised graph based semantic segmentation by learning communities of image-parts”, ICCV, 2015, [Paper](https://www.cv-foundation.org/openaccess/content_iccv_2015/papers/Pourian_Weakly_Supervised_Graph_ICCV_2015_paper.pdf)

视觉注意力和显着性(Visual Attention and Saliency)

- Nian Liu, Junwei Han, Dingwen Zhang, Shifeng Wen, Tianming Liu, Predicting Eye Fixations using Convolutional Neural Networks, CVPR, 2015.

- Learning a Sequential Search for Landmarks [Paper](https://www.cv-foundation.org/openaccess/content_cvpr_2015/papers/Singh_Learning_a_Sequential_2015_CVPR_paper.pdf)

-

Saurabh Singh, Derek Hoiem, David Forsyth, Learning a Sequential Search for Landmarks, CVPR, 2015.

- Multiple Object Recognition with Visual Attention [Paper](https://arxiv.org/pdf/1412.7755.pdf)

- Jimmy Lei Ba, Volodymyr Mnih, Koray Kavukcuoglu, Multiple Object Recognition with Visual Attention, ICLR, 2015.

- Recurrent Models of Visual Attention [Paper](http://papers.nips.cc/paper/5542-recurrent-models-of-visual-attention.pdf)

- Volodymyr Mnih, Nicolas Heess, Alex Graves, Koray Kavukcuoglu, Recurrent Models of Visual Attention, NIPS, 2014.

物体识别(Object Recognition)

- Weakly-supervised learning with convolutional neural networks [Paper](https://www.cv-foundation.org/openaccess/content_cvpr_2015/papers/Oquab_Is_Object_Localization_2015_CVPR_paper.pdf)

- Maxime Oquab, Leon Bottou, Ivan Laptev, Josef Sivic, Is object localization for free? – Weakly-supervised learning with convolutional neural networks, CVPR, 2015.

- FV-CNN [Paper](https://www.cv-foundation.org/openaccess/content_cvpr_2015/papers/Cimpoi_Deep_Filter_Banks_2015_CVPR_paper.pdf)

- Mircea Cimpoi, Subhransu Maji, Andrea Vedaldi, Deep Filter Banks for Texture Recognition and Segmentation, CVPR, 2015.

人体姿势估计(Human Pose Estimation)

- Zhe Cao, Tomas Simon, Shih-En Wei, and Yaser Sheikh, Realtime Multi-Person 2D Pose Estimation using Part Affinity Fields, CVPR, 2017.

- Leonid Pishchulin, Eldar Insafutdinov, Siyu Tang, Bjoern Andres, Mykhaylo Andriluka, Peter Gehler, and Bernt Schiele, Deepcut: Joint subset partition and labeling for multi person pose estimation, CVPR, 2016.

- Shih-En Wei, Varun Ramakrishna, Takeo Kanade, and Yaser Sheikh, Convolutional pose machines, CVPR, 2016.

- Alejandro Newell, Kaiyu Yang, and Jia Deng, Stacked hourglass networks for human pose estimation, ECCV, 2016.

- Tomas Pfister, James Charles, and Andrew Zisserman, Flowing convnets for human pose estimation in videos, ICCV, 2015.

- Jonathan J. Tompson, Arjun Jain, Yann LeCun, Christoph Bregler, Joint training of a convolutional network and a graphical model for human pose estimation, NIPS, 2014.

Understanding CNN

- Karel Lenc, Andrea Vedaldi, Understanding image representations by measuring their equivariance and equivalence, CVPR, 2015. [Paper](https://www.cv-foundation.org/openaccess/content_cvpr_2015/papers/Lenc_Understanding_Image_Representations_2015_CVPR_paper.pdf)

- Anh Nguyen, Jason Yosinski, Jeff Clune, Deep Neural Networks are Easily Fooled:High Confidence Predictions for Unrecognizable Images, CVPR, 2015. [Paper](https://www.cv-foundation.org/openaccess/content_cvpr_2015/papers/Nguyen_Deep_Neural_Networks_2015_CVPR_paper.pdf)

- Aravindh Mahendran, Andrea Vedaldi, Understanding Deep Image Representations by Inverting Them, CVPR, 2015. [Paper](https://www.cv-foundation.org/openaccess/content_cvpr_2015/papers/Mahendran_Understanding_Deep_Image_2015_CVPR_paper.pdf)

- Bolei Zhou, Aditya Khosla, Agata Lapedriza, Aude Oliva, Antonio Torralba, Object Detectors Emerge in Deep Scene CNNs, ICLR, 2015. [arXiv Paper](Object Detectors Emerge in Deep Scene CNNs)

- Alexey Dosovitskiy, Thomas Brox, Inverting Visual Representations with Convolutional Networks, arXiv, 2015. [Paper](Inverting Visual Representations with Convolutional Networks)

- Matthrew Zeiler, Rob Fergus, Visualizing and Understanding Convolutional Networks, ECCV, 2014. [Paper](https://cs.nyu.edu/~fergus/papers/zeilerECCV2014.pdf)

Image and Language

Image Captioning

- Pay Less Attention with Lightweight and Dynamic Convolutions [Paper](Pay Less Attention with Lightweight and Dynamic Convolutions)

- UCLA / Baidu [Paper](https://arxiv.org/pdf/1410.1090.pdf)

- Junhua Mao, Wei Xu, Yi Yang, Jiang Wang, Alan L. Yuille, Explain Images with Multimodal Recurrent Neural Networks, arXiv:1410.1090.

- Toronto [Paper](https://arxiv.org/pdf/1411.2539.pdf)

- Ryan Kiros, Ruslan Salakhutdinov, Richard S. Zemel, Unifying Visual-Semantic Embeddings with Multimodal Neural Language Models, arXiv:1411.2539.

- Berkeley [Paper](https://arxiv.org/pdf/1411.4389.pdf)

- Jeff Donahue, Lisa Anne Hendricks, Sergio Guadarrama, Marcus Rohrbach, Subhashini Venugopalan, Kate Saenko, Trevor Darrell, Long-term Recurrent Convolutional Networks for Visual Recognition and Description, arXiv:1411.4389.

- Google [Paper](https://arxiv.org/pdf/1411.4555.pdf)

- Oriol Vinyals, Alexander Toshev, Samy Bengio, Dumitru Erhan, Show and Tell: A Neural Image Caption Generator, arXiv:1411.4555.

- Stanford [Web](Deep Visual-Semantic Alignments for Generating Image Descriptions) [Paper](https://cs.stanford.edu/people/karpathy/cvpr2015.pdf)

- Andrej Karpathy, Li Fei-Fei, Deep Visual-Semantic Alignments for Generating Image Description, CVPR, 2015.

- UML / UT [Paper](https://arxiv.org/pdf/1412.4729.pdf)

- Subhashini Venugopalan, Huijuan Xu, Jeff Donahue, Marcus Rohrbach, Raymond Mooney, Kate Saenko, Translating Videos to Natural Language Using Deep Recurrent Neural Networks, NAACL-HLT, 2015.

- CMU / Microsoft [Paper-arXiv](https://arxiv.org/pdf/1411.5654.pdf)

- Xinlei Chen, C. Lawrence Zitnick, Learning a Recurrent Visual Representation for Image Caption Generation, arXiv:1411.5654.

- Xinlei Chen, C. Lawrence Zitnick, Mind’s Eye: A Recurrent Visual Representation for Image Caption Generation, CVPR 2015

- Microsoft [Paper](https://arxiv.org/pdf/1411.4952.pdf)

- Hao Fang, Saurabh Gupta, Forrest Iandola, Rupesh Srivastava, Li Deng, Piotr Dollár, Jianfeng Gao, Xiaodong He, Margaret Mitchell, John C. Platt, C. Lawrence Zitnick, Geoffrey Zweig, From Captions to Visual Concepts and Back, CVPR, 2015.

- Univ. Montreal / Univ. Toronto [Web](Neural Image Caption Generation with Visual Attention) [Paper](http://www.cs.toronto.edu/~zemel/documents/captionAttn.pdf)

- Kelvin Xu, Jimmy Lei Ba, Ryan Kiros, Kyunghyun Cho, Aaron Courville, Ruslan Salakhutdinov, Richard S. Zemel, Yoshua Bengio, Show, Attend, and Tell: Neural Image Caption Generation with Visual Attention, arXiv:1502.03044 / ICML 2015

- Idiap / EPFL / Facebook [Paper](https://arxiv.org/pdf/1502.03671.pdf)

- Remi Lebret, Pedro O. Pinheiro, Ronan Collobert, Phrase-based Image Captioning, arXiv:1502.03671 / ICML 2015

- UCLA / Baidu [Paper](https://arxiv.org/pdf/1504.06692.pdf)

- Junhua Mao, Wei Xu, Yi Yang, Jiang Wang, Zhiheng Huang, Alan L. Yuille, Learning like a Child: Fast Novel Visual Concept Learning from Sentence Descriptions of Images, arXiv:1504.06692

- MS + Berkeley

- Jacob Devlin, Saurabh Gupta, Ross Girshick, Margaret Mitchell, C. Lawrence Zitnick, Exploring Nearest Neighbor Approaches for Image Captioning, arXiv:1505.04467 [Paper](https://arxiv.org/pdf/1505.04467.pdf)

- Jacob Devlin, Hao Cheng, Hao Fang, Saurabh Gupta, Li Deng, Xiaodong He, Geoffrey Zweig, Margaret Mitchell, Language Models for Image Captioning: The Quirks and What Works, arXiv:1505.01809 [Paper]

- Adelaide [Paper](https://arxiv.org/pdf/1505.01809.pdf)

- Qi Wu, Chunhua Shen, Anton van den Hengel, Lingqiao Liu, Anthony Dick, Image Captioning with an Intermediate Attributes Layer, arXiv:1506.01144

- Tilburg [Paper](https://arxiv.org/pdf/1506.03694.pdf)

- Grzegorz Chrupala, Akos Kadar, Afra Alishahi, Learning language through pictures, arXiv:1506.03694

- Univ. Montreal [Paper](https://arxiv.org/pdf/1507.01053.pdf)

- Kyunghyun Cho, Aaron Courville, Yoshua Bengio, Describing Multimedia Content using Attention-based Encoder-Decoder Networks, arXiv:1507.01053

- Cornell [Paper](https://arxiv.org/pdf/1508.02091.pdf)

- Jack Hessel, Nicolas Savva, Michael J. Wilber, Image Representations and New Domains in Neural Image Captioning, arXiv:1508.02091

- MS + City Univ. of HongKong [Paper](https://www.cv-foundation.org/openaccess/content_iccv_2015/papers/Yao_Learning_Query_and_ICCV_2015_paper.pdf)

- Ting Yao, Tao Mei, and Chong-Wah Ngo, “Learning Query and Image Similarities with Ranking Canonical Correlation Analysis”, ICCV, 2015

Video Captioning

- Berkeley [Web](Long-term Recurrent Convolutional Networks) [Paper](https://arxiv.org/pdf/1411.4389.pdf)

- Jeff Donahue, Lisa Anne Hendricks, Sergio Guadarrama, Marcus Rohrbach, Subhashini Venugopalan, Kate Saenko, Trevor Darrell, Long-term Recurrent Convolutional Networks for Visual Recognition and Description, CVPR, 2015.

- UT / UML / Berkeley [Paper](https://arxiv.org/pdf/1412.4729.pdf)

- Subhashini Venugopalan, Huijuan Xu, Jeff Donahue, Marcus Rohrbach, Raymond Mooney, Kate Saenko, Translating Videos to Natural Language Using Deep Recurrent Neural Networks, arXiv:1412.4729.

- Microsoft [Paper](https://arxiv.org/pdf/1505.01861.pdf)

- Yingwei Pan, Tao Mei, Ting Yao, Houqiang Li, Yong Rui, Joint Modeling Embedding and Translation to Bridge Video and Language, arXiv:1505.01861.

- UT / Berkeley / UML [Paper](https://arxiv.org/pdf/1505.00487.pdf)

- Subhashini Venugopalan, Marcus Rohrbach, Jeff Donahue, Raymond Mooney, Trevor Darrell, Kate Saenko, Sequence to Sequence–Video to Text, arXiv:1505.00487.

- Univ. Montreal / Univ. Sherbrooke [Paper](https://arxiv.org/pdf/1502.08029.pdf)

- Li Yao, Atousa Torabi, Kyunghyun Cho, Nicolas Ballas, Christopher Pal, Hugo Larochelle, Aaron Courville, Describing Videos by Exploiting Temporal Structure, arXiv:1502.08029

- MPI / Berkeley [Paper](https://arxiv.org/pdf/1506.01698.pdf)

- Anna Rohrbach, Marcus Rohrbach, Bernt Schiele, The Long-Short Story of Movie Description, arXiv:1506.01698

- Univ. Toronto / MIT [Paper](https://arxiv.org/pdf/1506.06724.pdf)

- Yukun Zhu, Ryan Kiros, Richard Zemel, Ruslan Salakhutdinov, Raquel Urtasun, Antonio Torralba, Sanja Fidler, Aligning Books and Movies: Towards Story-like Visual Explanations by Watching Movies and Reading Books, arXiv:1506.06724

- Univ. Montreal [Paper](https://arxiv.org/pdf/1507.01053.pdf)

- Kyunghyun Cho, Aaron Courville, Yoshua Bengio, Describing Multimedia Content using Attention-based Encoder-Decoder Networks, arXiv:1507.01053

- TAU / USC [paper](https://arxiv.org/pdf/1612.06950.pdf)

- Dotan Kaufman, Gil Levi, Tal Hassner, Lior Wolf, Temporal Tessellation for Video Annotation and Summarization, arXiv:1612.06950.

Question Answering

- Virginia Tech / MSR [Web](Announcing the VQA Challenge 2018!) [Paper](https://arxiv.org/pdf/1505.00468.pdf)

- Stanislaw Antol, Aishwarya Agrawal, Jiasen Lu, Margaret Mitchell, Dhruv Batra, C. Lawrence Zitnick, Devi Parikh, VQA: Visual Question Answering, CVPR, 2015 SUNw:Scene Understanding workshop.

- MPI / Berkeley [Web](Visual Turing Challenge) [Paper](https://arxiv.org/pdf/1505.01121.pdf)

- Mateusz Malinowski, Marcus Rohrbach, Mario Fritz, Ask Your Neurons: A Neural-based Approach to Answering Questions about Images, arXiv:1505.01121.

- Toronto [Paper](https://arxiv.org/pdf/1505.02074.pdf) [Dataset](http://www.cs.toronto.edu/~mren/imageqa/data/cocoqa/)

- Mengye Ren, Ryan Kiros, Richard Zemel, Image Question Answering: A Visual Semantic Embedding Model and a New Dataset, arXiv:1505.02074 / ICML 2015 deep learning workshop.

- Baidu / UCLA [Paper](https://arxiv.org/pdf/1505.05612.pdf) [Dataset] //todo

- Hauyuan Gao, Junhua Mao, Jie Zhou, Zhiheng Huang, Lei Wang, Wei Xu, Are You Talking to a Machine? Dataset and Methods for Multilingual Image Question Answering, arXiv:1505.05612.

- POSTECH [Paper](https://arxiv.org/pdf/1511.05756.pdf) [Project Page](Image Question Answering using Convolutional Neural Network with Dynamic Parameter Prediction)

- Hyeonwoo Noh, Paul Hongsuck Seo, and Bohyung Han, Image Question Answering using Convolutional Neural Network with Dynamic Parameter Prediction, arXiv:1511.05765

- CMU / Microsoft Research [Paper](https://arxiv.org/pdf/1511.02274v2.pdf)

- Yang, Z., He, X., Gao, J., Deng, L., & Smola, A. (2015). Stacked Attention Networks for Image Question Answering. arXiv:1511.02274.

- MetaMind [Paper](https://arxiv.org/pdf/1603.01417v1.pdf)

- Xiong, Caiming, Stephen Merity, and Richard Socher. “Dynamic Memory Networks for Visual and Textual Question Answering.” arXiv:1603.01417 (2016).

- SNU + NAVER [Paper](Multimodal Residual Learning for Visual QA)

- Jin-Hwa Kim, Sang-Woo Lee, Dong-Hyun Kwak, Min-Oh Heo, Jeonghee Kim, Jung-Woo Ha, Byoung-Tak Zhang, Multimodal Residual Learning for Visual QA, arXiv:1606:01455

- UC Berkeley + Sony [Paper](https://arxiv.org/pdf/1606.01847.pdf)

- Akira Fukui, Dong Huk Park, Daylen Yang, Anna Rohrbach, Trevor Darrell, and Marcus Rohrbach, Multimodal Compact Bilinear Pooling for Visual Question Answering and Visual Grounding, arXiv:1606.01847

- Postech [Paper](https://arxiv.org/pdf/1606.03647.pdf)

- Hyeonwoo Noh and Bohyung Han, Training Recurrent Answering Units with Joint Loss Minimization for VQA, arXiv:1606.03647

- SNU + NAVER [Paper](Hadamard Product for Low-rank Bilinear Pooling)

- Jin-Hwa Kim, Kyoung Woon On, Jeonghee Kim, Jung-Woo Ha, Byoung-Tak Zhang, Hadamard Product for Low-rank Bilinear Pooling, arXiv:1610.04325.

Image Generation

- Convolutional / Recurrent Networks

- Aäron van den Oord, Nal Kalchbrenner, Oriol Vinyals, Lasse Espeholt, Alex Graves, Koray Kavukcuoglu. “Conditional Image Generation with PixelCNN Decoders” [Paper](https://arxiv.org/pdf/1606.05328v2.pdf) [Code](kundan2510/pixelCNN)

- Alexey Dosovitskiy, Jost Tobias Springenberg, Thomas Brox, “Learning to Generate Chairs with Convolutional Neural Networks”, CVPR, 2015. [Paper](https://www.cv-foundation.org/openaccess/content_cvpr_2015/papers/Dosovitskiy_Learning_to_Generate_2015_CVPR_paper.pdf)

- Karol Gregor, Ivo Danihelka, Alex Graves, Danilo Jimenez Rezende, Daan Wierstra, “DRAW: A Recurrent Neural Network For Image Generation”, ICML, 2015. [Paper](https://arxiv.org/pdf/1502.04623v2.pdf)

- Adversarial Networks

- Ian J. Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, Yoshua Bengio, Generative Adversarial Networks, NIPS, 2014. [Paper](Generative Adversarial Networks)

- Emily Denton, Soumith Chintala, Arthur Szlam, Rob Fergus, Deep Generative Image Models using a Laplacian Pyramid of Adversarial Networks, NIPS, 2015. [Paper](Deep Generative Image Models using a Laplacian Pyramid of Adversarial Networks)

- Lucas Theis, Aäron van den Oord, Matthias Bethge, “A note on the evaluation of generative models”, ICLR 2016. [Paper](A note on the evaluation of generative models)

- Zhenwen Dai, Andreas Damianou, Javier Gonzalez, Neil Lawrence, “Variationally Auto-Encoded Deep Gaussian Processes”, ICLR 2016. [Paper](https://arxiv.org/pdf/1511.06455v2.pdf)

- Elman Mansimov, Emilio Parisotto, Jimmy Ba, Ruslan Salakhutdinov, “Generating Images from Captions with Attention”, ICLR 2016, [Paper](https://arxiv.org/pdf/1511.02793v2.pdf)

- Jost Tobias Springenberg, “Unsupervised and Semi-supervised Learning with Categorical Generative Adversarial Networks”, ICLR 2016, [Paper](https://arxiv.org/pdf/1511.06390v1.pdf)

- Harrison Edwards, Amos Storkey, “Censoring Representations with an Adversary”, ICLR 2016, [Paper](https://arxiv.org/pdf/1511.05897v3.pdf)

- Takeru Miyato, Shin-ichi Maeda, Masanori Koyama, Ken Nakae, Shin Ishii, “Distributional Smoothing with Virtual Adversarial Training”, ICLR 2016, [Paper](https://arxiv.org/pdf/1507.00677v8.pdf)

- Jun-Yan Zhu, Philipp Krahenbuhl, Eli Shechtman, and Alexei A. Efros, “Generative Visual Manipulation on the Natural Image Manifold”, ECCV 2016. [Paper](https://arxiv.org/pdf/1609.03552v2.pdf) [Code](junyanz/iGAN) [Video](https://youtu.be/9c4z6YsBGQ0)

- Mixing Convolutional and Adversarial Networks

- Alec Radford, Luke Metz, Soumith Chintala, “Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks”, ICLR 2016. [Paper](https://arxiv.org/pdf/1511.06434.pdf)

Other Topics

- Visual Analogy [Paper](https://web.eecs.umich.edu/~honglak/nips2015-analogy.pdf)

- Scott Reed, Yi Zhang, Yuting Zhang, Honglak Lee, Deep Visual Analogy Making, NIPS, 2015

- Surface Normal Estimation [Paper](https://www.cv-foundation.org/openaccess/content_cvpr_2015/papers/Wang_Designing_Deep_Networks_2015_CVPR_paper.pdf)

- Xiaolong Wang, David F. Fouhey, Abhinav Gupta, Designing Deep Networks for Surface Normal Estimation, CVPR, 2015.

- Action Detection [Paper](https://www.cv-foundation.org/openaccess/content_cvpr_2015/papers/Gkioxari_Finding_Action_Tubes_2015_CVPR_paper.pdf)

- Georgia Gkioxari, Jitendra Malik, Finding Action Tubes, CVPR, 2015.

- Crowd Counting [Paper](https://www.cv-foundation.org/openaccess/content_cvpr_2015/papers/Zhang_Cross-Scene_Crowd_Counting_2015_CVPR_paper.pdf)

- Cong Zhang, Hongsheng Li, Xiaogang Wang, Xiaokang Yang, Cross-scene Crowd Counting via Deep Convolutional Neural Networks, CVPR, 2015.

- 3D Shape Retrieval [Paper](https://www.cv-foundation.org/openaccess/content_cvpr_2015/papers/Wang_Sketch-Based_3D_Shape_2015_CVPR_paper.pdf)

- Fang Wang, Le Kang, Yi Li, Sketch-based 3D Shape Retrieval using Convolutional Neural Networks, CVPR, 2015.

- Weakly-supervised Classification

- Samaneh Azadi, Jiashi Feng, Stefanie Jegelka, Trevor Darrell, “Auxiliary Image Regularization for Deep CNNs with Noisy Labels”, ICLR 2016, [Paper](https://arxiv.org/pdf/1511.07069v2.pdf)

- Artistic Style [Paper](A Neural Algorithm of Artistic Style) [Code](jcjohnson/neural-style)

- Leon A. Gatys, Alexander S. Ecker, Matthias Bethge, A Neural Algorithm of Artistic Style.

- Human Gaze Estimation

- Xucong Zhang, Yusuke Sugano, Mario Fritz, Andreas Bulling, Appearance-Based Gaze Estimation in the Wild, CVPR, 2015. [Paper](https://www.cv-foundation.org/openaccess/content_cvpr_2015/papers/Zhang_Appearance-Based_Gaze_Estimation_2015_CVPR_paper.pdf) [Website](Appearance-Based Gaze Estimation in the Wild)

- Face Recognition

- Yaniv Taigman, Ming Yang, Marc’Aurelio Ranzato, Lior Wolf, DeepFace: Closing the Gap to Human-Level Performance in Face Verification, CVPR, 2014. [Paper](https://www.cs.toronto.edu/~ranzato/publications/taigman_cvpr14.pdf)

- Yi Sun, Ding Liang, Xiaogang Wang, Xiaoou Tang, DeepID3: Face Recognition with Very Deep Neural Networks, 2015. [Paper](DeepID3: Face Recognition with Very Deep Neural Networks)

- Florian Schroff, Dmitry Kalenichenko, James Philbin, FaceNet: A Unified Embedding for Face Recognition and Clustering, CVPR, 2015. [Paper](FaceNet: A Unified Embedding for Face Recognition and Clustering)

- Facial Landmark Detection

- Yue Wu, Tal Hassner, KangGeon Kim, Gerard Medioni, Prem Natarajan, Facial Landmark Detection with Tweaked Convolutional Neural Networks, 2015. [Paper](Facial Landmark Detection with Tweaked Convolutional Neural Networks) [Project](Facial Landmark Detection with Tweaked Convolutional Neural Networks)

Courses

- Deep Vision

- [Stanford] [CS231n: Convolutional Neural Networks for Visual Recognition](CS231n: Convolutional Neural Networks for Visual Recognition)

- [CUHK] [ELEG 5040: Advanced Topics in Signal Processing(Introduction to Deep Learning)](ELEG 5040 | Class Profile | Piazza)

- More Deep Learning

- [Stanford] [CS224d: Deep Learning for Natural Language Processing](CS224d: Deep Learning for Natural Language Processing)

- [Oxford] [Deep Learning by Prof. Nando de Freitas](Machine Learning)

- [NYU] [Deep Learning by Prof. Yann LeCun](courses:deeplearning2014:start | CILVR Lab @ NYU)

Books

- Free Online Books

- [Deep Learning by Ian Goodfellow, Yoshua Bengio, and Aaron Courville](Deep Learning)

- [Neural Networks and Deep Learning by Michael Nielsen](Neural networks and deep learning)

- [Deep Learning Tutorial by LISA lab, University of Montreal](http://deeplearning.net/tutorial/deeplearning.pdf)

Videos

- Talks

- Deep Learning, Self-Taught Learning and Unsupervised Feature Learning By Andrew Ng [video](https://www.youtube.com/watch?v=n1ViNeWhC24)

- Recent Developments in Deep Learning By Geoff Hinton [video](https://www.youtube.com/watch?v=vShMxxqtDDs)

- The Unreasonable Effectiveness of Deep Learning by Yann LeCun [video](https://www.youtube.com/watch?v=sc-KbuZqGkI)

- Deep Learning of Representations by Yoshua bengio [video](https://www.youtube.com/watch?v=4xsVFLnHC_0)

Applications

- Adversarial Training

- Code and hyperparameters for the paper “Generative Adversarial Networks” [Web](goodfeli/adversarial)

- Understanding and Visualizing

- Source code for “Understanding Deep Image Representations by Inverting Them,” CVPR, 2015. [Web](aravindhm/deep-goggle)

- Semantic Segmentation

- Source code for the paper “Rich feature hierarchies for accurate object detection and semantic segmentation,” CVPR, 2014. [Web](rbgirshick/rcnn)

- Source code for the paper “Fully Convolutional Networks for Semantic Segmentation,” CVPR, 2015. [Web](longjon/caffe)

- Super-Resolution

- Image Super-Resolution for Anime-Style-Art [Web](nagadomi/waifu2x)

- Edge Detection

- Source code for the paper “DeepContour: A Deep Convolutional Feature Learned by Positive-Sharing Loss for Contour Detection,” CVPR, 2015. [Web](shenwei1231/DeepContour)

- Source code for the paper “Holistically-Nested Edge Detection”, ICCV 2015. [Web](s9xie/hed)

Blogs

- Deep down the rabbit hole: CVPR 2015 and beyond@Tombone’s Computer Vision Blog [Web](Deep down the rabbit hole: CVPR 2015 and beyond)

- CVPR recap and where we’re going@Zoya Bylinskii (MIT PhD Student)’s Blog [Web](http://zoyathinks.blogspot.kr/2015/06/cvpr-recap-and-where-were-going.html)

- Facebook’s AI Painting@Wired [Web](Facebook's New AI Can Paint, But Google's Knows How to Party)

- Inceptionism: Going Deeper into Neural Networks@Google Research [Web](http://googleresearch.blogspot.kr/2015/06/inceptionism-going-deeper-into-neural.html)

- Implementing Neural networks [Web](Notes on machine learning)

引用

[A Beginner's Guide to Convolutional Neural Networks (CNNs)](A Beginner's Guide to Convolutional Neural Networks (CNNs))

[A Beginner's Guide To Understanding Convolutional Neural Networks](A Beginner's Guide To Understanding Convolutional Neural Networks)

[Convolutional Neural Networks (CNNs / ConvNets)](CS231n Convolutional Neural Networks for Visual Recognition)

[An intuitive guide to Convolutional Neural Networks](An intuitive guide to Convolutional Neural Networks)

[Fully Connected Deep Networks](TensorFlow for Deep Learning)

[使用 Estimator 构建卷积神经网络](https://www.tensorflow.org/tutorials/estimators/cnn?hl=zh-cn)

推荐阅读

CNN卷积神经网络及优化器卷积神经网络(Convolutional Netural Network,CNN)可以有效降低传统神经网络的复杂性。 CNN的主要应用是在图像分类和物品识别等应用场 CNN网络结构CNN网络结构主要包括:输入层,卷积层,…心一卷积神经网络(CNN)的理解与可视化Deeper Go深度学习笔记9:卷积神经网络(CNN)入门出处:数据科学家养成记 深度学习笔记9:卷积神经网络(CNN)入门前面的八篇学习笔记,基本上都是围绕着深度神经网络(DNN)和全连接网络(FCN)在学习。从本篇开始,笔者将跟着大家一起学…程序员必修...发表于程序猿数据...卷积神经网络(三,R-CNN)

本人做资料记录方便学习。

2522

2522

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?