7、Kafka Source

tier1.sources.source1.type = org.apache.flume.source.kafka.KafkaSource

tier1.sources.source1.channels = channel1

tier1.sources.source1.batchSize = 5000

tier1.sources.source1.batchDurationMillis = 2000

tier1.sources.source1.kafka.bootstrap.servers = localhost:9092

tier1.sources.source1.kafka.topics = test1, test2

tier1.sources.source1.kafka.consumer.group.id = custom.g.id

tier1.sources.source1.type = org.apache.flume.source.kafka.KafkaSource

tier1.sources.source1.channels = channel1

tier1.sources.source1.kafka.bootstrap.servers = localhost:9092

tier1.sources.source1.kafka.topics.regex = ^topic[0-9]$

# the default kafka.consumer.group.id=flume is used
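To see which topics the `kafka.topics.regex` value in the second example would select, a small Java sketch can help. It only exercises `java.util.regex` and does not connect to Kafka; the topic names are made up for illustration, and the use of a full-string match is an assumption about how the pattern is applied.

```java
import java.util.List;
import java.util.regex.Pattern;
import java.util.stream.Collectors;

class TopicRegexDemo {
    // Same pattern as kafka.topics.regex in the example above.
    static final Pattern TOPICS = Pattern.compile("^topic[0-9]$");

    // Returns the topic names that the pattern selects (full-string match assumed).
    static List<String> matching(List<String> topicNames) {
        return topicNames.stream()
                .filter(name -> TOPICS.matcher(name).matches())
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        // Hypothetical topic names, used only for illustration.
        List<String> names = List.of("topic1", "topic9", "topic10", "test1", "mytopic3");
        System.out.println(matching(names)); // prints [topic1, topic9]
    }
}
```

Note that `topic10` is not selected, because the pattern allows exactly one digit after `topic`.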

8、NetCat TCP Source

a1.sources = r1

a1.channels = c1

a1.sources.r1.type = netcat

a1.sources.r1.bind = 0.0.0.0

a1.sources.r1.port = 6666

a1.sources.r1.channels = c1

9、NetCat UDP Source

a1.sources = r1

a1.channels = c1

a1.sources.r1.type = netcatudp

a1.sources.r1.bind = 0.0.0.0

a1.sources.r1.port = 6666

a1.sources.r1.channels = c1

9、Sequence Generator Source

a1.sources = r1

a1.channels = c1

a1.sources.r1.type = seq

a1.sources.r1.channels = c1

10、Syslog TCP Source

a1.sources = r1

a1.channels = c1

a1.sources.r1.type = syslogtcp

a1.sources.r1.port = 5140

a1.sources.r1.host = localhost

a1.sources.r1.channels = c1

11、Multiport Syslog TCP Source

a1.sources = r1

a1.channels = c1

a1.sources.r1.type = multiport_syslogtcp

a1.sources.r1.channels = c1

a1.sources.r1.host = 0.0.0.0

a1.sources.r1.ports = 10001 10002 10003

a1.sources.r1.portHeader = port

12、Syslog UDP Source

a1.sources = r1

a1.channels = c1

a1.sources.r1.type = syslogudp

a1.sources.r1.port = 5140

a1.sources.r1.host = localhost

a1.sources.r1.channels = c1

13、HTTP Source

a1.sources = r1

a1.channels = c1

a1.sources.r1.type = http

a1.sources.r1.port = 5140

a1.sources.r1.channels = c1

a1.sources.r1.handler = org.example.rest.RestHandler

a1.sources.r1.handler.nickname = random props

a1.sources.r1.HttpConfiguration.sendServerVersion = false

a1.sources.r1.ServerConnector.idleTimeout = 300

14、Stress Source

a1.sources = stresssource-1

a1.channels = memoryChannel-1

a1.sources.stresssource-1.type = org.apache.flume.source.StressSource

a1.sources.stresssource-1.size = 10240

a1.sources.stresssource-1.maxTotalEvents = 1000000

a1.sources.stresssource-1.channels = memoryChannel-1

15、Avro Legacy Source

a1.sources = r1

a1.channels = c1

a1.sources.r1.type = org.apache.flume.source.avroLegacy.AvroLegacySource

a1.sources.r1.host = 0.0.0.0

a1.sources.r1.port = 6666

a1.sources.r1.channels = c1

16、Thrift Legacy Source

a1.sources = r1

a1.channels = c1

a1.sources.r1.type = org.apache.flume.source.thriftLegacy.ThriftLegacySource

a1.sources.r1.host = 0.0.0.0

a1.sources.r1.port = 6666

a1.sources.r1.channels = c1

17、Custom Source

a1.sources = r1

a1.channels = c1

a1.sources.r1.type = org.example.MySource

a1.sources.r1.channels = c1

18、Scribe Source

a1.sources = r1

a1.channels = c1

a1.sources.r1.type = org.apache.flume.source.scribe.ScribeSource

a1.sources.r1.port = 1463

a1.sources.r1.workerThreads = 5

a1.sources.r1.channels = c1

IV. Flume Sinks

1、hdfs sink

a1.channels = c1

a1.sinks = k1

a1.sinks.k1.type = hdfs

a1.sinks.k1.channel = c1

a1.sinks.k1.hdfs.path = /flume/events/%y-%m-%d/%H%M/%S

a1.sinks.k1.hdfs.filePrefix = events-

a1.sinks.k1.hdfs.round = true

a1.sinks.k1.hdfs.roundValue = 10

a1.sinks.k1.hdfs.roundUnit = minute
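With `hdfs.round = true`, `hdfs.roundValue = 10` and `hdfs.roundUnit = minute`, the event timestamp is rounded down to the last 10-minute boundary before the escape sequences in `hdfs.path` are expanded. The following Java sketch imitates that behaviour for the path above. It is an illustration only, not Flume's implementation, and the class and method names are hypothetical.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;

class HdfsPathRounding {
    // Rounds the timestamp down to a multiple of roundValue minutes,
    // imitating hdfs.round = true with hdfs.roundUnit = minute.
    static LocalDateTime roundDownMinutes(LocalDateTime ts, int roundValue) {
        int minute = (ts.getMinute() / roundValue) * roundValue;
        return ts.withMinute(minute).withSecond(0).withNano(0);
    }

    // Expands /flume/events/%y-%m-%d/%H%M/%S for the given timestamp
    // (%y is the two-digit year).
    static String expandPath(LocalDateTime ts) {
        DateTimeFormatter f = DateTimeFormatter.ofPattern("yy-MM-dd/HHmm/ss");
        return "/flume/events/" + f.format(ts);
    }

    public static void main(String[] args) {
        // An event stamped 11:54:34 on 12 June 2012.
        LocalDateTime event = LocalDateTime.of(2012, 6, 12, 11, 54, 34);
        // prints /flume/events/12-06-12/1150/00
        System.out.println(expandPath(roundDownMinutes(event, 10)));
    }
}
```

Because of the rounding, all events from 11:50:00 to 11:59:59 land in the same directory instead of one directory per second.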

2、hive sink

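The Hive sink needs more settings than most sinks, and they vary with the table's columns, partitions, and delimiters. Take the following Hive table as an example: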

create table weblogs ( id int , msg string )

partitioned by (continent string, country string, time string)

clustered by (id) into 5 buckets

stored as orc;

hive_sink.conf

a1.channels = c1

a1.channels.c1.type = memory

a1.sinks = k1

a1.sinks.k1.type = hive

a1.sinks.k1.channel = c1

a1.sinks.k1.hive.metastore = thrift://127.0.0.1:9083

a1.sinks.k1.hive.database = logsdb

a1.sinks.k1.hive.table = weblogs

a1.sinks.k1.hive.partition = asia,%{country},%y-%m-%d-%H-%M

a1.sinks.k1.useLocalTimeStamp = false

a1.sinks.k1.round = true

a1.sinks.k1.roundValue = 10

a1.sinks.k1.roundUnit = minute

a1.sinks.k1.serializer = DELIMITED

a1.sinks.k1.serializer.delimiter = "\t"

a1.sinks.k1.serializer.serdeSeparator = '\t'

a1.sinks.k1.serializer.fieldnames = id,msg

3、logger sink

a1.channels = c1

a1.sinks = k1

a1.sinks.k1.type = logger

a1.sinks.k1.channel = c1

4、avro sink

a1.channels = c1

a1.sinks = k1

a1.sinks.k1.type = avro

a1.sinks.k1.channel = c1

a1.sinks.k1.hostname = 10.10.10.10

a1.sinks.k1.port = 4545

5、Thrift Sink

a1.channels = c1

a1.sinks = k1

a1.sinks.k1.type = thrift

a1.sinks.k1.channel = c1

a1.sinks.k1.hostname = 10.10.10.10

a1.sinks.k1.port = 4545

6、IRC Sink

a1.channels = c1

a1.sinks = k1

a1.sinks.k1.type = irc

a1.sinks.k1.channel = c1

a1.sinks.k1.hostname = irc.yourdomain.com

a1.sinks.k1.nick = flume

a1.sinks.k1.chan = #flume

7、File Roll Sink

a1.channels = c1

a1.sinks = k1

a1.sinks.k1.type = file_roll

a1.sinks.k1.channel = c1

a1.sinks.k1.sink.directory = /var/log/flume

8、Null Sink

a1.channels = c1

a1.sinks = k1

a1.sinks.k1.type = null

a1.sinks.k1.channel = c1

9、HBase1Sink

a1.channels = c1

a1.sinks = k1

a1.sinks.k1.type = hbase

a1.sinks.k1.table = foo_table

a1.sinks.k1.columnFamily = bar_cf

a1.sinks.k1.serializer = org.apache.flume.sink.hbase.RegexHbaseEventSerializer

a1.sinks.k1.channel = c1

10、HBase2Sink

a1.channels = c1

a1.sinks = k1

a1.sinks.k1.type = hbase2

a1.sinks.k1.table = foo_table

a1.sinks.k1.columnFamily = bar_cf

a1.sinks.k1.serializer = org.apache.flume.sink.hbase2.RegexHBase2EventSerializer

a1.sinks.k1.channel = c1

10、AsyncHBaseSink

a1.channels = c1

a1.sinks = k1

a1.sinks.k1.type = asynchbase

a1.sinks.k1.table = foo_table

a1.sinks.k1.columnFamily = bar_cf

a1.sinks.k1.serializer = org.apache.flume.sink.hbase.SimpleAsyncHbaseEventSerializer

a1.sinks.k1.channel = c1

11、MorphlineSolrSink

a1.channels = c1

a1.sinks = k1

a1.sinks.k1.type = org.apache.flume.sink.solr.morphline.MorphlineSolrSink

a1.sinks.k1.channel = c1

a1.sinks.k1.morphlineFile = /etc/flume-ng/conf/morphline.conf

a1.sinks.k1.morphlineId = morphline1

a1.sinks.k1.batchSize = 1000

a1.sinks.k1.batchDurationMillis = 1000

12、ElasticSearchSink

a1.channels = c1

a1.sinks = k1

a1.sinks.k1.type = elasticsearch

a1.sinks.k1.hostNames = 127.0.0.1:9200,127.0.0.2:9300

a1.sinks.k1.indexName = foo_index

a1.sinks.k1.indexType = bar_type

a1.sinks.k1.clusterName = foobar_cluster

a1.sinks.k1.batchSize = 500

a1.sinks.k1.ttl = 5d

a1.sinks.k1.serializer = org.apache.flume.sink.elasticsearch.ElasticSearchDynamicSerializer

a1.sinks.k1.channel = c1

13、Kite Dataset Sink

14、Kafka Sink

a1.sinks.k1.channel = c1

a1.sinks.k1.type = org.apache.flume.sink.kafka.KafkaSink

a1.sinks.k1.kafka.topic = mytopic

a1.sinks.k1.kafka.bootstrap.servers = localhost:9092

a1.sinks.k1.kafka.flumeBatchSize = 20

a1.sinks.k1.kafka.producer.acks = 1

a1.sinks.k1.kafka.producer.linger.ms = 1

a1.sinks.k1.kafka.producer.compression.type = snappy

15、TLS Kafka Sink

a1.sinks.sink1.type = org.apache.flume.sink.kafka.KafkaSink

a1.sinks.sink1.kafka.bootstrap.servers = kafka-1:9093,kafka-2:9093,kafka-3:9093

a1.sinks.sink1.kafka.topic = mytopic

a1.sinks.sink1.kafka.producer.security.protocol = SSL

# optional, the global truststore can be used alternatively

a1.sinks.sink1.kafka.producer.ssl.truststore.location = /path/to/truststore.jks

a1.sinks.sink1.kafka.producer.ssl.truststore.password =

16、HTTP Sink

a1.channels = c1

a1.sinks = k1

a1.sinks.k1.type = http

a1.sinks.k1.channel = c1

a1.sinks.k1.endpoint = http://localhost:8080/someuri

a1.sinks.k1.connectTimeout = 2000

a1.sinks.k1.requestTimeout = 2000

a1.sinks.k1.acceptHeader = application/json

a1.sinks.k1.contentTypeHeader = application/json

a1.sinks.k1.defaultBackoff = true

a1.sinks.k1.defaultRollback = true

a1.sinks.k1.defaultIncrementMetrics = false

a1.sinks.k1.backoff.4XX = false

a1.sinks.k1.rollback.4XX = false

a1.sinks.k1.incrementMetrics.4XX = true

a1.sinks.k1.backoff.200 = false

a1.sinks.k1.rollback.200 = false

a1.sinks.k1.incrementMetrics.200 = true

17、Custom Sink

a1.channels = c1

a1.sinks = k1

a1.sinks.k1.type = org.example.MySink

a1.sinks.k1.channel = c1

18、Writing a custom source and sink

Flume developer documentation: Flume 1.9.0 Developer Guide (Apache Flume)

V. Flume Channels

1、Memory Channel

a1.channels = c1

a1.channels.c1.type = memory

a1.channels.c1.capacity = 10000

a1.channels.c1.transactionCapacity = 10000

a1.channels.c1.byteCapacityBufferPercentage = 20

a1.channels.c1.byteCapacity = 800000

2、JDBC Channel

a1.channels = c1

a1.channels.c1.type = jdbc

3、Kafka Channel

a1.channels.channel1.type = org.apache.flume.channel.kafka.KafkaChannel

a1.channels.channel1.kafka.bootstrap.servers = kafka-1:9092,kafka-2:9092,kafka-3:9092

a1.channels.channel1.kafka.topic = channel1

a1.channels.channel1.kafka.consumer.group.id = flume-consumer

4、TLS Kafka Channel

a1.channels.channel1.type = org.apache.flume.channel.kafka.KafkaChannel

a1.channels.channel1.kafka.bootstrap.servers = kafka-1:9093,kafka-2:9093,kafka-3:9093

a1.channels.channel1.kafka.topic = channel1

a1.channels.channel1.kafka.consumer.group.id = flume-consumer

a1.channels.channel1.kafka.producer.security.protocol = SSL

# optional, the global truststore can be used alternatively

a1.channels.channel1.kafka.producer.ssl.truststore.location = /path/to/truststore.jks

a1.channels.channel1.kafka.producer.ssl.truststore.password =

a1.channels.channel1.kafka.consumer.security.protocol = SSL

# optional, the global truststore can be used alternatively

a1.channels.channel1.kafka.consumer.ssl.truststore.location = /path/to/truststore.jks

a1.channels.channel1.kafka.consumer.ssl.truststore.password =

5、File Channel

a1.channels = c1

a1.channels.c1.type = file

a1.channels.c1.checkpointDir = /mnt/flume/checkpoint

a1.channels.c1.dataDirs = /mnt/flume/data

6、Spillable Memory Channel

a1.channels = c1

a1.channels.c1.type = SPILLABLEMEMORY

a1.channels.c1.memoryCapacity = 10000

a1.channels.c1.overflowCapacity = 1000000

a1.channels.c1.byteCapacity = 800000

a1.channels.c1.checkpointDir = /mnt/flume/checkpoint

a1.channels.c1.dataDirs = /mnt/flume/data

7、Custom Channel

a1.channels = c1

a1.channels.c1.type = org.example.MyChannel

VI. Flume Channel Selectors

1、Replicating Channel Selector (default)

a1.sources = r1

a1.channels = c1 c2 c3

a1.sources.r1.selector.type = replicating

a1.sources.r1.channels = c1 c2 c3

a1.sources.r1.selector.optional = c3

2、Multiplexing Channel Selector

a1.sources = r1

a1.channels = c1 c2 c3 c4

a1.sources.r1.selector.type = multiplexing

a1.sources.r1.selector.header = state

a1.sources.r1.selector.mapping.CZ = c1

a1.sources.r1.selector.mapping.US = c2 c3

a1.sources.r1.selector.default = c4
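The multiplexing configuration above routes each event by the value of its `state` header. A minimal Java sketch of that routing decision follows; it is a simplified model, not Flume's selector code, and the class name is hypothetical.

```java
import java.util.List;
import java.util.Map;

class MultiplexingDemo {
    // Mirrors the selector.mapping.* entries from the config above.
    static final Map<String, List<String>> MAPPING = Map.of(
            "CZ", List.of("c1"),
            "US", List.of("c2", "c3"));

    // Mirrors selector.default.
    static final List<String> DEFAULT = List.of("c4");

    // Picks the target channels from the event's "state" header.
    static List<String> channelsFor(Map<String, String> headers) {
        String state = headers.get("state");
        if (state == null) {
            return DEFAULT; // header missing: fall back to the default channel
        }
        return MAPPING.getOrDefault(state, DEFAULT);
    }

    public static void main(String[] args) {
        System.out.println(channelsFor(Map.of("state", "CZ"))); // prints [c1]
        System.out.println(channelsFor(Map.of("state", "US"))); // prints [c2, c3]
        System.out.println(channelsFor(Map.of("state", "DE"))); // prints [c4]
    }
}
```

An event whose header is missing or has an unmapped value goes to `c4`, which is why a default channel is worth configuring.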

3、Custom Channel Selector

a1.sources = r1

a1.channels = c1

a1.sources.r1.selector.type = org.example.MyChannelSelector

VII. Flume Sink Processors

1、Default Sink Processor

a1.sinkgroups = g1

a1.sinkgroups.g1.sinks = k1 k2

a1.sinkgroups.g1.processor.type = load_balance

2、Failover Sink Processor

a1.sinkgroups = g1

a1.sinkgroups.g1.sinks = k1 k2

a1.sinkgroups.g1.processor.type = failover

a1.sinkgroups.g1.processor.priority.k1 = 5

a1.sinkgroups.g1.processor.priority.k2 = 10

a1.sinkgroups.g1.processor.maxpenalty = 10000
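In the failover configuration above, the sink with the larger priority value is tried first, so `k2` (10) is preferred over `k1` (5). The Java sketch below models only that selection rule. It ignores the back-off timing controlled by `maxpenalty`, and the class and method names are hypothetical.

```java
import java.util.Map;
import java.util.Set;

class FailoverDemo {
    // Mirrors processor.priority.* above: a larger value means higher priority.
    static final Map<String, Integer> PRIORITY = Map.of("k1", 5, "k2", 10);

    // Returns the highest-priority sink that is not currently marked as failed,
    // or null when every sink in the group has failed.
    static String activeSink(Set<String> failed) {
        return PRIORITY.entrySet().stream()
                .filter(e -> !failed.contains(e.getKey()))
                .max(Map.Entry.comparingByValue())
                .map(Map.Entry::getKey)
                .orElse(null);
    }

    public static void main(String[] args) {
        System.out.println(activeSink(Set.of()));     // prints k2
        System.out.println(activeSink(Set.of("k2"))); // prints k1
    }
}
```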

3、Load balancing Sink Processor

a1.sinkgroups = g1

a1.sinkgroups.g1.sinks = k1 k2

a1.sinkgroups.g1.processor.type = load_balance

a1.sinkgroups.g1.processor.backoff = true

a1.sinkgroups.g1.processor.selector = random

VIII. Flume Event Serializers

1、Body Text Serializer

a1.sinks = k1

a1.sinks.k1.type = file_roll

a1.sinks.k1.channel = c1

a1.sinks.k1.sink.directory = /var/log/flume

a1.sinks.k1.sink.serializer = text

a1.sinks.k1.sink.serializer.appendNewline = false

2、“Flume Event” Avro Event Serializer

a1.sinks.k1.type = hdfs

a1.sinks.k1.channel = c1

a1.sinks.k1.hdfs.path = /flume/events/%y-%m-%d/%H%M/%S

a1.sinks.k1.serializer = avro_event

a1.sinks.k1.serializer.compressionCodec = snappy

3、Avro Event Serializer

a1.sinks.k1.type = hdfs

a1.sinks.k1.channel = c1

a1.sinks.k1.hdfs.path = /flume/events/%y-%m-%d/%H%M/%S

a1.sinks.k1.serializer = org.apache.flume.sink.hdfs.AvroEventSerializer$Builder

a1.sinks.k1.serializer.compressionCodec = snappy

a1.sinks.k1.serializer.schemaURL = hdfs://namenode/path/to/schema.avsc

IX. Flume Interceptors

1、default interceptor

a1.sources = r1

a1.sinks = k1

a1.channels = c1

a1.sources.r1.interceptors = i1 i2

a1.sources.r1.interceptors.i1.type = org.apache.flume.interceptor.HostInterceptor$Builder

a1.sources.r1.interceptors.i1.preserveExisting = false

a1.sources.r1.interceptors.i1.hostHeader = hostname

a1.sources.r1.interceptors.i2.type = org.apache.flume.interceptor.TimestampInterceptor$Builder

a1.sinks.k1.filePrefix = FlumeData.%{CollectorHost}.%Y-%m-%d

a1.sinks.k1.channel = c1

2、Timestamp Interceptor

a1.sources = r1

a1.channels = c1

a1.sources.r1.channels = c1

a1.sources.r1.type = seq

a1.sources.r1.interceptors = i1

a1.sources.r1.interceptors.i1.type = timestamp

3、Host Interceptor

a1.sources = r1

a1.channels = c1

a1.sources.r1.interceptors = i1

a1.sources.r1.interceptors.i1.type = host

4、Static Interceptor

a1.sources = r1

a1.channels = c1

a1.sources.r1.channels = c1

a1.sources.r1.type = seq

a1.sources.r1.interceptors = i1

a1.sources.r1.interceptors.i1.type = static

a1.sources.r1.interceptors.i1.key = datacenter

a1.sources.r1.interceptors.i1.value = NEW_YORK

5、Remove Header Interceptor

6、UUID Interceptor

7、Morphline Interceptor

a1.sources.avroSrc.interceptors = morphlineinterceptor

a1.sources.avroSrc.interceptors.morphlineinterceptor.type = org.apache.flume.sink.solr.morphline.MorphlineInterceptor$Builder

a1.sources.avroSrc.interceptors.morphlineinterceptor.morphlineFile = /etc/flume-ng/conf/morphline.conf

a1.sources.avroSrc.interceptors.morphlineinterceptor.morphlineId = morphline1

8、Search and Replace Interceptor

a1.sources.avroSrc.interceptors = search-replace

a1.sources.avroSrc.interceptors.search-replace.type = search_replace

# Remove leading alphanumeric characters in an event body.

a1.sources.avroSrc.interceptors.search-replace.searchPattern = ^[A-Za-z0-9_]+

a1.sources.avroSrc.interceptors.search-replace.replaceString =

a1.sources.avroSrc.interceptors = search-replace

a1.sources.avroSrc.interceptors.search-replace.type = search_replace

# Use grouping operators to reorder and munge words on a line.

a1.sources.avroSrc.interceptors.search-replace.searchPattern = The quick brown ([a-z]+) jumped over the lazy ([a-z]+)

a1.sources.avroSrc.interceptors.search-replace.replaceString = The hungry $2 ate the careless $1
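The grouping example above can be tried outside Flume, since the interceptor's patterns are Java regular expressions. The sketch below applies the same `searchPattern` and `replaceString` with `java.util.regex`; the sample sentence and the class name are assumptions for illustration.

```java
import java.util.regex.Pattern;

class SearchReplaceDemo {
    // Replaces every match of searchPattern in body; $1, $2, ... in
    // replaceString refer to capture groups.
    static String searchReplace(String body, String searchPattern, String replaceString) {
        return Pattern.compile(searchPattern).matcher(body).replaceAll(replaceString);
    }

    public static void main(String[] args) {
        String body = "The quick brown fox jumped over the lazy dog";
        String out = searchReplace(
                body,
                "The quick brown ([a-z]+) jumped over the lazy ([a-z]+)",
                "The hungry $2 ate the careless $1");
        System.out.println(out); // prints The hungry dog ate the careless fox
    }
}
```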

9、Regex Filtering Interceptor

10、Regex Extractor Interceptor

a1.sources.r1.interceptors.i1.regex = (\\d):(\\d):(\\d)

a1.sources.r1.interceptors.i1.serializers = s1 s2 s3

a1.sources.r1.interceptors.i1.serializers.s1.name = one

a1.sources.r1.interceptors.i1.serializers.s2.name = two

a1.sources.r1.interceptors.i1.serializers.s3.name = three
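The first Regex Extractor example maps capture groups to the headers `one`, `two` and `three`. Assuming the pattern is three single-digit groups separated by colons, the Java sketch below shows which headers an event body would produce. It is a simplified model of the extraction step with a hypothetical class name, not Flume's interceptor code.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class RegexExtractorDemo {
    // Finds the first match of regex in body and stores capture group i
    // under the i-th header name.
    static Map<String, String> extract(String body, String regex, List<String> names) {
        Map<String, String> headers = new LinkedHashMap<>();
        Matcher m = Pattern.compile(regex).matcher(body);
        if (m.find()) {
            for (int i = 0; i < names.size() && i < m.groupCount(); i++) {
                headers.put(names.get(i), m.group(i + 1));
            }
        }
        return headers;
    }

    public static void main(String[] args) {
        // Sample event body, chosen for illustration.
        Map<String, String> headers = extract(
                "1:2:3.4foobar5", "(\\d):(\\d):(\\d)", List.of("one", "two", "three"));
        System.out.println(headers); // prints {one=1, two=2, three=3}
    }
}
```

The rest of the body (`.4foobar5`) is ignored, and an event body without a match gets no extra headers.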

a1.sources.r1.interceptors.i1.regex = ^(?:\\n)?(\\d\\d\\d\\d-\\d\\d-\\d\\d\\s\\d\\d:\\d\\d)

a1.sources.r1.interceptors.i1.serializers = s1

a1.sources.r1.interceptors.i1.serializers.s1.type = org.apache.flume.interceptor.RegexExtractorInterceptorMillisSerializer

a1.sources.r1.interceptors.i1.serializers.s1.name = timestamp

a1.sources.r1.interceptors.i1.serializers.s1.pattern = yyyy-MM-dd HH:mm

X. Flume Configuration

1、Environment Variable Config Filter

a1.sources = r1

a1.channels = c1

a1.configfilters = f1

a1.configfilters.f1.type = env

a1.sources.r1.channels = c1

a1.sources.r1.type = http

# will get the value Secret123

a1.sources.r1.keystorePassword = ${f1['my_keystore_password']}
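A config filter replaces placeholders of the form `${filterName['key']}` (written with straight single quotes) with a resolved value. The Java sketch below imitates that substitution against an in-memory map instead of real environment variables. It is a simplified illustration with a hypothetical class name and placeholder regex, not Flume's implementation.

```java
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class ConfigFilterDemo {
    // Matches ${filterName['key']} with straight single quotes.
    static final Pattern PLACEHOLDER =
            Pattern.compile("\\$\\{(\\w+)\\['([^']+)'\\]\\}");

    // Replaces placeholders that belong to filterName and whose key is known;
    // anything else is left untouched.
    static String resolve(String value, String filterName, Map<String, String> secrets) {
        Matcher m = PLACEHOLDER.matcher(value);
        StringBuilder out = new StringBuilder();
        while (m.find()) {
            String replacement = m.group(0);
            if (m.group(1).equals(filterName) && secrets.containsKey(m.group(2))) {
                replacement = secrets.get(m.group(2));
            }
            m.appendReplacement(out, Matcher.quoteReplacement(replacement));
        }
        m.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        // Stands in for the environment variable my_keystore_password=Secret123.
        Map<String, String> env = Map.of("my_keystore_password", "Secret123");
        // prints Secret123
        System.out.println(resolve("${f1['my_keystore_password']}", "f1", env));
    }
}
```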

2、External Process Config Filter

a1.sources = r1

a1.channels = c1

a1.configfilters = f1

a1.configfilters.f1.type = external

a1.configfilters.f1.command = /usr/bin/passwordResolver.sh

a1.configfilters.f1.charset = UTF-8

a1.sources.r1.channels = c1

a1.sources.r1.type = http

# will get the value Secret123

a1.sources.r1.keystorePassword = ${f1['my_keystore_password']}

a1.sources = r1

a1.channels = c1

a1.configfilters = f1

a1.configfilters.f1.type = external

a1.configfilters.f1.command = /usr/bin/generateUniqId.sh

a1.configfilters.f1.charset = UTF-8

a1.sinks = k1

a1.sinks.k1.type = file_roll

a1.sinks.k1.channel = c1

# will be /var/log/flume/agent_1234

a1.sinks.k1.sink.directory = /var/log/flume/agent_${f1['agent_name']}

3、Hadoop Credential Store Config Filter

a1.sources = r1

a1.channels = c1

a1.configfilters = f1

a1.configfilters.f1.type = hadoop

a1.configfilters.f1.credential.provider.path = jceks://file/<path_to_jceks file>

a1.sources.r1.channels = c1

a1.sources.r1.type = http

# will get the value from the credential store

a1.sources.r1.keystorePassword = ${f1['my_keystore_password']}

4、Log4J Appender

log4j.appender.flume = org.apache.flume.clients.log4jappender.Log4jAppender

log4j.appender.flume.Hostname = example.com

log4j.appender.flume.Port = 41414

log4j.appender.flume.UnsafeMode = true

# configure a class's logger to output to the flume appender

log4j.logger.org.example.MyClass = DEBUG,flume

log4j.appender.flume = org.apache.flume.clients.log4jappender.Log4jAppender

log4j.appender.flume.Hostname = example.com

log4j.appender.flume.Port = 41414

log4j.appender.flume.AvroReflectionEnabled = true

log4j.appender.flume.AvroSchemaUrl = hdfs://namenode/path/to/schema.avsc

# configure a class's logger to output to the flume appender

log4j.logger.org.example.MyClass = DEBUG,flume

5、Load Balancing Log4J Appender

log4j.appender.out2 = org.apache.flume.clients.log4jappender.LoadBalancingLog4jAppender

log4j.appender.out2.Hosts = localhost:25430 localhost:25431

# configure a class's logger to output to the flume appender

log4j.logger.org.example.MyClass = DEBUG,out2

log4j.appender.out2 = org.apache.flume.clients.log4jappender.LoadBalancingLog4jAppender

log4j.appender.out2.Hosts = localhost:25430 localhost:25431

log4j.appender.out2.Selector = RANDOM

# configure a class's logger to output to the flume appender

log4j.logger.org.example.MyClass = DEBUG,out2

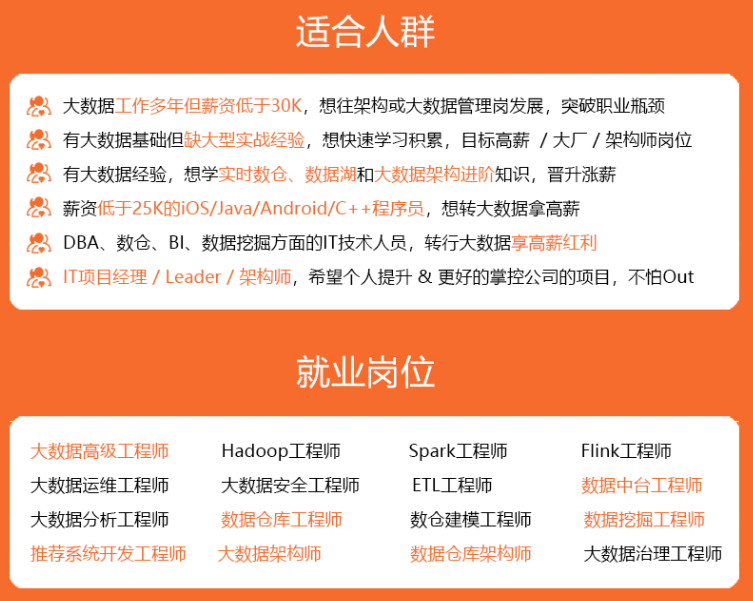

自我介绍一下,小编13年上海交大毕业,曾经在小公司待过,也去过华为、OPPO等大厂,18年进入阿里一直到现在。

深知大多数大数据工程师,想要提升技能,往往是自己摸索成长或者是报班学习,但对于培训机构动则几千的学费,着实压力不小。自己不成体系的自学效果低效又漫长,而且极易碰到天花板技术停滞不前!

因此收集整理了一份《2024年大数据全套学习资料》,初衷也很简单,就是希望能够帮助到想自学提升又不知道该从何学起的朋友。

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,基本涵盖了95%以上大数据开发知识点,真正体系化!

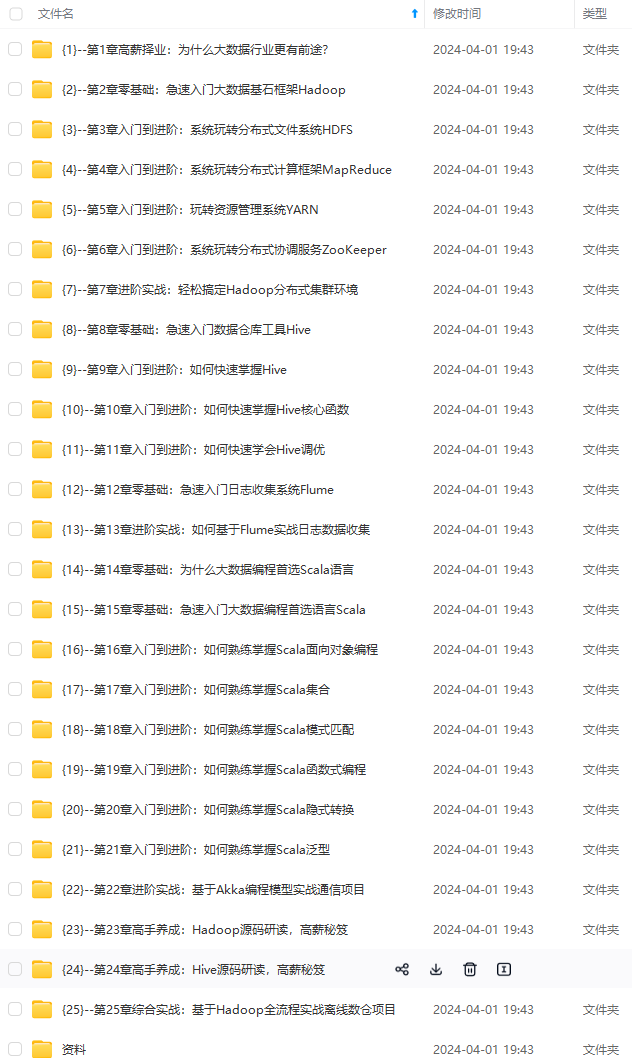

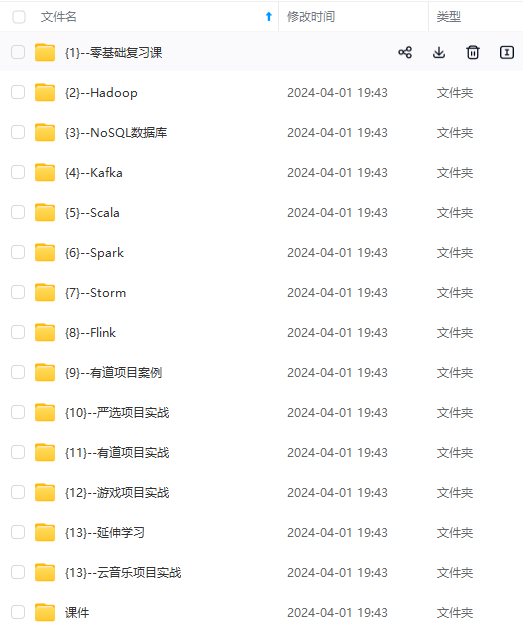

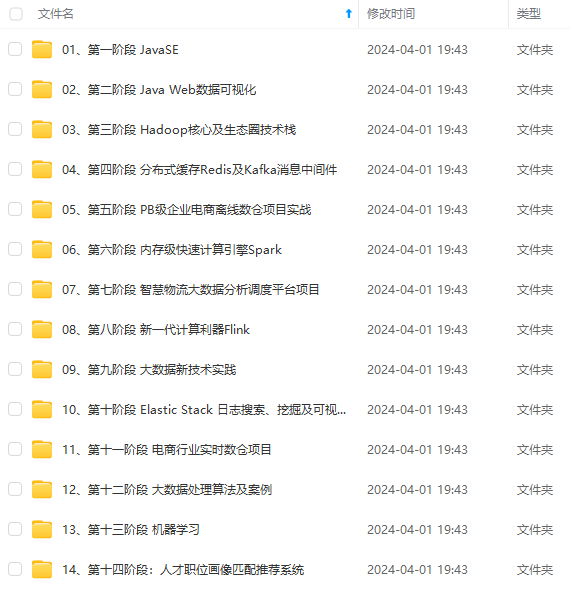

由于文件比较大,这里只是将部分目录大纲截图出来,每个节点里面都包含大厂面经、学习笔记、源码讲义、实战项目、讲解视频,并且后续会持续更新

如果你觉得这些内容对你有帮助,可以添加VX:vip204888 (备注大数据获取)

一个人可以走的很快,但一群人才能走的更远。不论你是正从事IT行业的老鸟或是对IT行业感兴趣的新人,都欢迎扫码加入我们的的圈子(技术交流、学习资源、职场吐槽、大厂内推、面试辅导),让我们一起学习成长!

path/to/schema.avsc

configure a class’s logger to output to the flume appender

log4j.logger.org.example.MyClass = DEBUG,flume

5、Load Balancing Log4J Appender

log4j.appender.out2 = org.apache.flume.clients.log4jappender.LoadBalancingLog4jAppender

log4j.appender.out2.Hosts = localhost:25430 localhost:25431

configure a class’s logger to output to the flume appender

log4j.logger.org.example.MyClass = DEBUG,flume

log4j.appender.out2 = org.apache.flume.clients.log4jappender.LoadBalancingLog4jAppender

log4j.appender.out2.Hosts = localhost:25430 localhost:25431

log4j.appender.out2.Selector = RANDOM

configure a class’s logger to output to the flume appender

log4j.logger.org.example.MyClass = DEBUG,flume

自我介绍一下,小编13年上海交大毕业,曾经在小公司待过,也去过华为、OPPO等大厂,18年进入阿里一直到现在。

深知大多数大数据工程师,想要提升技能,往往是自己摸索成长或者是报班学习,但对于培训机构动则几千的学费,着实压力不小。自己不成体系的自学效果低效又漫长,而且极易碰到天花板技术停滞不前!

因此收集整理了一份《2024年大数据全套学习资料》,初衷也很简单,就是希望能够帮助到想自学提升又不知道该从何学起的朋友。

[外链图片转存中…(img-brWbIJVj-1713022748020)]

[外链图片转存中…(img-QznWt6zn-1713022748021)]

[外链图片转存中…(img-Q6duY4TQ-1713022748021)]

[外链图片转存中…(img-Qu9ZJDax-1713022748021)]

[外链图片转存中…(img-1wx0EAfb-1713022748022)]

既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课程,基本涵盖了95%以上大数据开发知识点,真正体系化!

由于文件比较大,这里只是将部分目录大纲截图出来,每个节点里面都包含大厂面经、学习笔记、源码讲义、实战项目、讲解视频,并且后续会持续更新

如果你觉得这些内容对你有帮助,可以添加VX:vip204888 (备注大数据获取)

[外链图片转存中…(img-LFmZjuLA-1713022748022)]

一个人可以走的很快,但一群人才能走的更远。不论你是正从事IT行业的老鸟或是对IT行业感兴趣的新人,都欢迎扫码加入我们的的圈子(技术交流、学习资源、职场吐槽、大厂内推、面试辅导),让我们一起学习成长!

