爬虫一

本次爬取为两个爬虫,第一个爬虫爬取需要访问的URL并且存储到文本中,第二个爬虫读取第一个爬虫爬取的URl然后依次爬取该URL下内容,先运行第一个爬虫然后运行第二个爬虫即可完成爬取。

本帖仅供学习交流使用,请不要胡乱尝试以免影响网站正常运转

spiders文件下的spander.py文件内容

# -*- coding:utf-8 -*-

import scrapy

from ..items import ZhilianFistItem

class zhilian_url(scrapy.Spider):

name = 'zhilian_url'

start_urls = ['http://jobs.zhaopin.com/']

def parse(self,response):

myurl = ZhilianFistItem()

urls = response.xpath('/html/body/div/div/div/a[@target="_blank"]/@href').extract()

# if len(urls) == 0:

# print('+++++++++++++++++++ 空空空空空空空 +++++++++++++++++++++++++')

for url in urls:

myurl['url'] = url

# print('---------begin-----------------------------------------')

# print(url)

# print('---------end-----------------------------------------')

yield myurl

pass

items.py文件

# -*- coding: utf-8 -*-

# Define here the models for your scraped items

#

# See documentation in:

# http://doc.scrapy.org/en/latest/topics/items.html

from scrapy import Item,Field

class ZhilianFistItem(Item):

# define the fields for your item here like:

# name = scrapy.Field()

url = Field()

middlewares.py文件

# -*- coding: utf-8 -*-

# Define here the models for your spider middleware

#

# See documentation in:

# http://doc.scrapy.org/en/latest/topics/spider-middleware.html

from scrapy import signals

class ZhilianFistSpiderMiddleware(object):

# Not all methods need to be defined. If a method is not defined,

# scrapy acts as if the spider middleware does not modify the

# passed objects.

@classmethod

def from_crawler(cls, crawler):

# This method is used by Scrapy to create your spiders.

s = cls()

crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)

return s

def process_spider_input(response, spider):

# Called for each response that goes through the spider

# middleware and into the spider.

# Should return None or raise an exception.

return None

def process_spider_output(response, result, spider):

# Called with the results returned from the Spider, after

# it has processed the response.

# Must return an iterable of Request, dict or Item objects.

for i in result:

yield i

def process_spider_exception(response, exception, spider):

# Called when a spider or process_spider_input() method

# (from other spider middleware) raises an exception.

# Should return either None or an iterable of Response, dict

# or Item objects.

pass

def process_start_requests(start_requests, spider):

# Called with the start requests of the spider, and works

# similarly to the process_spider_output() method, except

# that it doesn’t have a response associated.

# Must return only requests (not items).

for r in start_requests:

yield r

def spider_opened(self, spider):

spider.logger.info('Spider opened: %s' % spider.name)

pipelines.py文件

# -*- coding: utf-8 -*-

# Define your item pipelines here

#

# Don't forget to add your pipeline to the ITEM_PIPELINES setting

# See: http://doc.scrapy.org/en/latest/topics/item-pipeline.html

# import xlsxwriter

class ZhilianFistPipeline(object):

# def open_spider(self, spider):

def open_spider(self,spider):

print('++++++++++++ ++++++++++++')

print('++++++++++++ start ++++++++++++')

# 打开excel文件命名为url.xls

# self.xls =xlsxwriter.Workbook('url.xlsx')

# self.worksheet = self.xls.add_worksheet('myurls')

# self.id = 0

self.fp = open('myurls','w')

print('++++++++++++ ok ++++++++++++')

pass

def process_item(self, item, spider):

if '.htm' in item['url']:

pass

elif 'http://jobs.zhaopin.com/' in item['url']:

print('++++++++++++ ++++++++++++')

print('++++++++++++ 存储中 ++++++++++++')

# id = 'A' + str(self.id + 1)

# # print('*****************', id, '***************************************')

# self.worksheet.write(id, item['url'])

# self.id = self.id +1

self.fp.writelines(item['url']+"\n")

print('++++++++++++ ok ++++++++++++')

return item

else:

pass

# def spider_closed(self, spider):

# def spider_closed(self, spider):

def spider_closed(self, spider):

print('++++++++++++ ++++++++++++')

print('++++++++++++ 结束 ++++++++++++')

self.fp.close()

print('++++++++++++ ok ++++++++++++')

setting.py文件

# -*- coding: utf-8 -*-

BOT_NAME = 'zhilian_fist'

SPIDER_MODULES = ['zhilian_fist.spiders']

NEWSPIDER_MODULE = 'zhilian_fist.spiders'

# Obey robots.txt rules

ROBOTSTXT_OBEY = False

DEFAULT_REQUEST_HEADERS = {

'Host':'jobs.zhaopin.com',

'User-Agent':'Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:53.0) Gecko/20100101 Firefox/53.0'

}

ITEM_PIPELINES = {

'zhilian_fist.pipelines.ZhilianFistPipeline': 300,

}

第二个爬虫,zhilian_second

spander.py文件

# -*- coding:utf-8 -*-

import scrapy

from ..items import ZhilianSecondItem

from scrapy import Request

from bs4 import BeautifulSoup

class spider(scrapy.Spider):

name = 'zhilian_second'

start_urls =[]

def __init__(self):

links = open('E:/PythonWorkStation/zhilian_fist/myurls')

for line in links:

# 一定要去掉换行符,如果有换行符则无法访问网址,真他妈坑爹

line=line[:-1]

# print('-----------------------------')

# print('-----------------------------')

# print(line+'测试是否有换行符')

# print('-----------------------------')

# print('-----------------------------')

self.start_urls.append(line)

# break

def parse(self, response):

item = ZhilianSecondItem()

# print('-------------- start -----------------------')

title_list = response.xpath('//div/span[@class="post"]/a/text()').extract()

company_list = response.xpath('//div/span[@class="company_name"]/a/text()').extract()

salary_list = response.xpath('//div/span[@class="salary"]/text()').extract()

address_list = response.xpath('//div/span[@class="address"]/text()').extract()

release_list = response.xpath('//div/span[@class="release_time"]/text()').extract()

if response.xpath('//span[@class="search_page_next"]').extract()!= None:

next_url = response.xpath('//span[@class="search_page_next"]/a/@href').extract()

next_url=next_url[0].split('/')[2]

# print('----b--------')

# print('----b--------')

# print(response.url)

# print(len(response.url.split('/')))

# print(next_url)

# print(len(next_url))

# print('----e--------')

# print('----e--------')

# self.start_urls.append( Request(response.url[:-9]+next_url[0]))

if len(response.url.split('/'))==5:

yield Request(response.url+next_url)

elif len(response.url.split('/'))>5:

i = len(next_url)+1

print('***********')

# print(i)

print(next_url.lstrip('p'))

print('***********')

if (next_url.lstrip('p') == str(10) or next_url.lstrip('p')==str(100) or next_url.lstrip('p')==str(1000) or next_url.lstrip('p')== str(10000)):

print('++++++++++++++++')

i = i-1

yield Request(response.url[:-(i)] + next_url)

for a,s,d,f,g in zip(title_list,company_list,salary_list, address_list,release_list):

item['company']=s

item['salary']=d

item['address']=f

item['release']=g

item['title'] = a

yield item

items.py文件

# -*- coding: utf-8 -*-

# Define here the models for your scraped items

#

# See documentation in:

# http://doc.scrapy.org/en/latest/topics/items.html

import scrapy

class ZhilianSecondItem(scrapy.Item):

# define the fields for your item here like:

# name = scrapy.Field()

title =scrapy.Field()

company =scrapy.Field()

salary =scrapy.Field()

address =scrapy.Field()

release =scrapy.Field()

middlewares.py文件

# -*- coding: utf-8 -*-

# Define here the models for your spider middleware

#

# See documentation in:

# http://doc.scrapy.org/en/latest/topics/spider-middleware.html

from scrapy import signals

class ZhilianSecondSpiderMiddleware(object):

# Not all methods need to be defined. If a method is not defined,

# scrapy acts as if the spider middleware does not modify the

# passed objects.

@classmethod

def from_crawler(cls, crawler):

# This method is used by Scrapy to create your spiders.

s = cls()

crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)

return s

def process_spider_input(response, spider):

# Called for each response that goes through the spider

# middleware and into the spider.

# Should return None or raise an exception.

return None

def process_spider_output(response, result, spider):

# Called with the results returned from the Spider, after

# it has processed the response.

# Must return an iterable of Request, dict or Item objects.

for i in result:

yield i

def process_spider_exception(response, exception, spider):

# Called when a spider or process_spider_input() method

# (from other spider middleware) raises an exception.

# Should return either None or an iterable of Response, dict

# or Item objects.

pass

def process_start_requests(start_requests, spider):

# Called with the start requests of the spider, and works

# similarly to the process_spider_output() method, except

# that it doesn’t have a response associated.

# Must return only requests (not items).

for r in start_requests:

yield r

def spider_opened(self, spider):

spider.logger.info('Spider opened: %s' % spider.name)

pipeline.py文件

# -*- coding: utf-8 -*-

class ZhilianSecondPipeline(object):

def open_spider(self,spider):

self.file = open('E:/招聘岗位.txt','w',encoding='utf-8')

def process_item(self, item, spider):

self.file.write(item['title']+","+item['company']+","+item['salary']+","+item['address']+","+item['release']+'\n')

# print('----------------------------------------------------------')

# print(item['title'],item['company'],item['salary'],item['address'],item['release'])

# print('----------------------------------------------------------')

return item

def spoder_closed(self,spider):

self.file.close()

setting.py文件

# -*- coding: utf-8 -*-

BOT_NAME = 'zhilian_second'

SPIDER_MODULES = ['zhilian_second.spiders']

NEWSPIDER_MODULE = 'zhilian_second.spiders'

ROBOTSTXT_OBEY = False

ITEM_PIPELINES = {

'zhilian_second.pipelines.ZhilianSecondPipeline': 300,

}

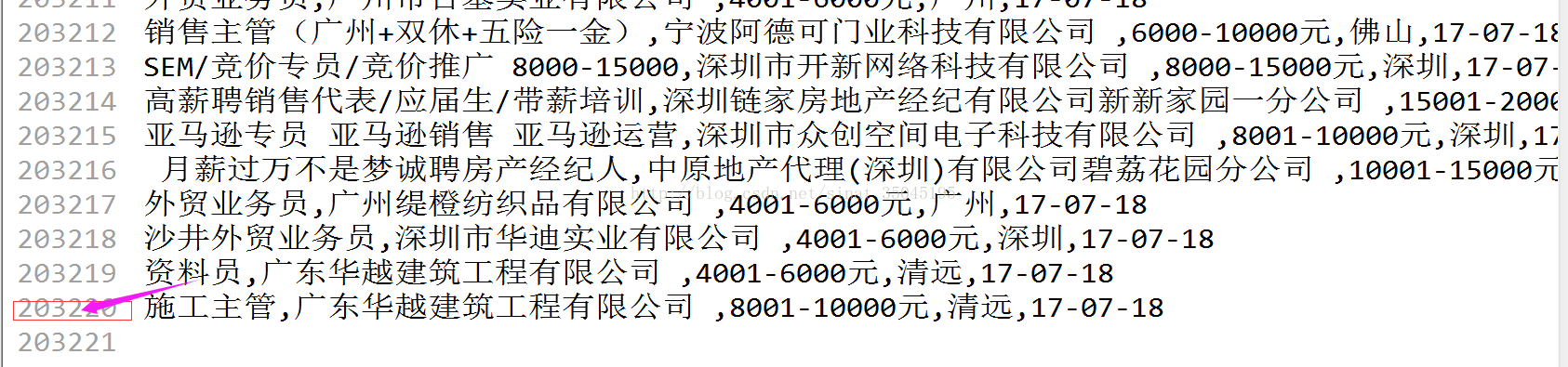

LOG_LEVEL = 'INFO'由于爬取的太多需要等的时间过长,所以本人在程序没有运行结束之前关终止了运行,但是依旧爬取了数十万岗位信息如下图所示

爬取的内容分割如下

(

职位,公司名称,工资介绍,地址,发布日期。)

232

232

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?