系统环境

Hardware Environment(Ascend/GPU/CPU): Ascend

Software Environment:

MindSpore version (source or binary): 1.6.0

Python version (e.g., Python 3.7.5): 3.7.5

OS platform and distribution (e.g., Linux Ubuntu 16.04):

GCC/Compiler version (if compiled from source): 7.3.0

复制运行脚本

在分布式运行环境中,需要bash启动脚本、python训练脚本以及rank_table的配置文件,将三个文件保存在同一目录下,三文件分别如下:

bash启动脚本

将以下shell脚本保存为run.sh

#!/bin/bash

RANK_SIZE=$1

EXEC_PATH=$(pwd)

export RANK_SIZE=${RANK_SIZE}

export HCCL_CONNECT_TIMEOUT=120 # 避免复现需要很长时间,设置超时为120s

export RANK_TABLE_FILE=${EXEC_PATH}/rank_table_8pcs.json # rank_table file的存放位置

for((i=0;i<${RANK_SIZE};i++))

do

rm -rf device$i

mkdir device$i

cp ./train.py ./device$i

cd ./device$i

export DEVICE_ID=$i

export RANK_ID=$i

echo "start training for device $i"

env > env$i.log

python ./train.py > train.log$i 2>&1 &

cd ../

done

echo "The program launch succeed, the log is under device*/train.log*."

复制训练脚本

将以下python脚本保存为train.py

"""Parallel Example"""

import numpy as np

from mindspore import context, Parameter

from mindspore.nn import Cell, Momentum

from mindspore.ops import operations as P

from mindspore.train import Model

from mindspore.nn.loss import SoftmaxCrossEntropyWithLogits

import mindspore.dataset as ds

import mindspore.communication.management as D

from mindspore.train.callback import LossMonitor

from mindspore.train.callback import ModelCheckpoint

from mindspore.common.initializer import initializer

step_per_epoch = 4

def get_dataset(*inputs):

def generate():

for _ in range(step_per_epoch):

yield inputs

return generate

class Net(Cell):

"""define net"""

def __init__(self):

super().__init__()

self.matmul = P.MatMul().shard(((2, 4), (4, 1)))

self.weight = Parameter(initializer("normal", [32, 16]), "w1")

self.relu = P.ReLU().shard(((8, 1),))

def construct(self, x):

out = self.matmul(x, self.weight)

out = self.relu(out)

return out

if __name__ == "__main__":

context.set_context(mode=context.GRAPH_MODE, device_target="Ascend", save_graphs=True)

D.init()

rank = D.get_rank()

context.set_auto_parallel_context(parallel_mode="semi_parallel", device_num=8, full_batch=True)

np.random.seed(1)

input_data = np.random.rand(16, 32).astype(np.float32)

label_data = np.random.rand(16, 16).astype(np.float32)

fake_dataset = get_dataset(input_data, label_data)

net = Net()

callback = [LossMonitor(), ModelCheckpoint(directory="{}".format(rank))]

dataset = ds.GeneratorDataset(fake_dataset, ["input", "label"])

loss = SoftmaxCrossEntropyWithLogits()

learning_rate = 0.001

momentum = 0.1

epoch_size = 1

opt = Momentum(net.trainable_params(), learning_rate, momentum)

model = Model(net, loss_fn=loss, optimizer=opt)

model.train(epoch_size, dataset, callbacks=callback, dataset_sink_mode=False)

复制rank_table文件

将下面json文件保存为rank_table_8pcs.json

{

"version": "1.0",

"server_count": "1",

"server_list": [

{

"server_id": "10.90.41.205",

"device": [

{

"device_id": "0",

"device_ip": "192.98.92.107",

"rank_id": "0"

},

{

"device_id": "1",

"device_ip": "192.98.93.107",

"rank_id": "1"

},

{

"device_id": "2",

"device_ip": "192.98.94.107",

"rank_id": "2"

},

{

"device_id": "3",

"device_ip": "192.98.95.107",

"rank_id": "3"

},

{

"device_id": "4",

"device_ip": "192.98.92.108",

"rank_id": "4"

},

{

"device_id": "5",

"device_ip": "192.98.93.108",

"rank_id": "5"

},

{

"device_id": "6",

"device_ip": "192.98.94.108",

"rank_id": "6"

},

{

"device_id": "7",

"device_ip": "192.98.95.108",

"rank_id": "7"

}

],

"host_nic_ip": "reserve"

}

],

"status": "completed"

}

复制报错分析

启动脚本

如果本地机器有8个Ascend 910设备,那么可以设置启动命令如下:

bash run.sh 8

复制错误信息分析

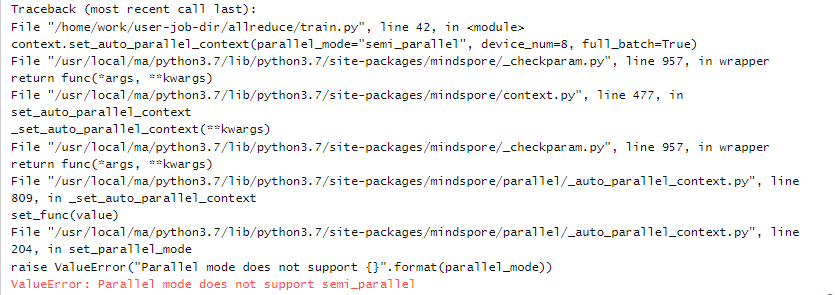

如下图所示,明显的报错信息说明当前的并行模式中不支持semi_parallel这种模式。

报错解决

修改错误

在MindSpore主页中找到set_auto_parallel_context接口,观察到参数parallel_mode 的可选项有如下几种,正确配置即可。

成功运行

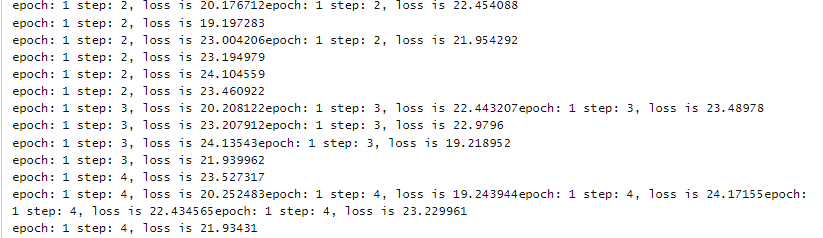

在合理配置并行模式之后(如配置成semi_auto_parallel),成功运行输出loss值,如下图所示:

2539

2539

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?