I. Introduction to Kubernetes

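While Docker was growing rapidly as an advanced container engine, Google had already been using container technology internally for many years: its Borg system runs and manages thousands upon thousands of containerized applications.

Kubernetes grew out of Borg. It distills the best of Borg's design ideas and incorporates the lessons learned from running Borg.

Kubernetes abstracts computing resources at a higher level: by composing containers in fine-grained ways, it delivers the resulting application services to users.

Benefits of Kubernetes: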

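- It hides resource management and error handling, so users only need to focus on developing their applications.

- Services are highly available and highly reliable.

- Workloads can run on clusters made up of thousands of machines.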

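1. Kubernetes architecture

A Kubernetes cluster consists of the node agent (kubelet) and the Master components (APIs, scheduler, etc.), all built on top of a distributed storage system (etcd).

Kubernetes is made up mainly of the following core components:

- etcd: stores the state of the entire cluster

- apiserver: the single entry point for all resource operations; it also provides authentication, authorization, access control, and API registration and discovery

- controller manager: maintains the cluster state, e.g. failure detection, auto-scaling and rolling updates

- scheduler: schedules resources, placing Pods onto suitable machines according to the configured scheduling policy

- kubelet: manages the container lifecycle, and also manages volumes (CVI; the standardized storage interface today is CSI) and networking (CNI)

- Container runtime: manages images and actually runs Pods and containers (CRI)

- kube-proxy: provides in-cluster service discovery and load balancing for Services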
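On a real cluster these components run as different kinds of processes. As a minimal sketch, assuming a kubeadm-built cluster with Docker as the runtime (like the one deployed in section II), the hypothetical function `component_location` below simply encodes where kubeadm places each core component:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print where a core component runs on a kubeadm
# cluster (assumption: kubeadm defaults, Docker as the container runtime).
component_location() {
  case "$1" in
    etcd|kube-apiserver|kube-controller-manager|kube-scheduler)
      # kubeadm writes static Pod manifests for the control plane here
      echo "static Pod on the control-plane node (/etc/kubernetes/manifests/$1.yaml)"
      ;;
    kubelet|docker)
      # node agent and runtime are ordinary systemd units
      echo "systemd service on every node"
      ;;
    kube-proxy)
      echo "DaemonSet in the kube-system namespace (one Pod per node)"
      ;;
    *)
      echo "unknown component: $1" >&2
      return 1
      ;;
  esac
}
```

On the master, `ls /etc/kubernetes/manifests` lists the static Pod manifests, and `kubectl get pods -n kube-system -o wide` shows the control-plane Pods and one kube-proxy Pod per node.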

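Besides the core components, there are several recommended add-ons:

- kube-dns: provides DNS for the whole cluster (kubeadm clusters, including the one below, use CoreDNS in this role)

- Ingress Controller: provides an external entry point for services

- Heapster: provides resource monitoring (since retired in favor of metrics-server)

- Dashboard: provides a web GUI

- Federation: provides clusters that span availability zones

- Fluentd-elasticsearch: provides cluster log collection, storage and querying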

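The design philosophy and functionality of Kubernetes form a layered architecture similar to that of Linux:

Core layer: the most essential Kubernetes functionality; externally it exposes APIs for building higher-level applications, internally it provides a pluggable application execution environment

Application layer: deployment (stateless applications, stateful applications, batch jobs, clustered applications, etc.) and routing (service discovery, DNS resolution, etc.)

Management layer: system metrics (such as infrastructure, container and network metrics), automation (such as auto-scaling and dynamic provisioning) and policy management (RBAC, Quota, PSP, NetworkPolicy, etc.)

Interface layer: the kubectl command-line tool, client SDKs and cluster federation

Ecosystem: the large container-cluster management and scheduling ecosystem that sits above the interface layer, which falls into two categories:

- Outside Kubernetes: logging, monitoring, configuration management, CI, CD, workflow, FaaS, OTS applications, ChatOps, etc.

- Inside Kubernetes: CRI, CNI, CVI, image registries, Cloud Provider, configuration and management of the cluster itself, etc.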

II. Deploying Kubernetes

Refer to the official documentation.

- Disable SELinux and the iptables firewall on every node

- Install the Docker engine on every node

- All nodes (server2, server3, server4) are configured identically
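The first step can be scripted per node. This is a sketch under stated assumptions: CentOS 7, SELinux configured in /etc/selinux/config, and firewalld as the firewall service (substitute the plain iptables service if that is what the nodes use); the function names are hypothetical:

```shell
#!/usr/bin/env bash
# Persistently disable SELinux in the given config file
# (default /etc/selinux/config); takes full effect after a reboot.
disable_selinux_config() {
  local cfg="${1:-/etc/selinux/config}"
  sed -i -E 's/^SELINUX=(enforcing|permissive)$/SELINUX=disabled/' "$cfg"
}

# Full per-node routine (assumption: CentOS 7 with firewalld; run as root).
prepare_node() {
  setenforce 0 || true          # switch to permissive now; fails harmlessly if already disabled
  disable_selinux_config        # keep SELinux off after reboot
  systemctl disable --now firewalld
}
```

Run `prepare_node` as root on server2, server3 and server4.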

Change Docker's cgroup driver to systemd

[root@server2 docker]# vim daemon.json

{

"registry-mirrors": ["https://reg.westos.org"],

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2",

"storage-opts": [

"overlay2.override_kernel_check=true"

]

}

[root@server2 docker]# systemctl daemon-reload

[root@server2 docker]# systemctl restart docker.service

Run docker info and check that the Cgroup Driver is now systemd.
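`docker info --format '{{.CgroupDriver}}'` prints only the driver of the running daemon. To compare it with the file, the hypothetical helpers below extract the configured driver from daemon.json; they assume the `native.cgroupdriver=...` form used above:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the cgroup driver configured in a daemon.json
# (default /etc/docker/daemon.json), or nothing if it is not set.
configured_cgroup_driver() {
  local cfg="${1:-/etc/docker/daemon.json}"
  sed -n 's/.*native\.cgroupdriver=\([a-z]*\).*/\1/p' "$cfg"
}

# Compare the file with what the running daemon reports (requires Docker).
check_cgroup_driver() {
  local want running
  want="$(configured_cgroup_driver "$@")"
  running="$(docker info --format '{{.CgroupDriver}}')"
  if [ "$want" = "$running" ]; then
    echo "ok: $running"
  else
    echo "mismatch: file=$want daemon=$running"
    return 1
  fi
}
```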

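Note: if Docker fails to restart after this change, delete the drop-in file shown below, run systemctl daemon-reload, and restart Docker again: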

[root@server3 ~]# cd /etc/systemd/system/docker.service.d

[root@server3 docker.service.d]# ls

10-machine.conf

[root@server3 docker.service.d]# rm -fr 10-machine.conf

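Disable swap

This is required on every node: by default, kubelet refuses to start while swap is enabled.

# swapoff -a

Then comment out the swap entry in /etc/fstab so that swap is not re-enabled at boot.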
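Both swap steps can be combined. A minimal sketch, assuming a standard fstab layout in which the third field of a swap entry is `swap` (the function names are hypothetical); it prefixes matching lines with `#` and leaves comments and other mounts untouched, so running it twice is harmless:

```shell
#!/usr/bin/env bash
# Comment out every active swap entry in an fstab-style file
# (default /etc/fstab): non-comment lines whose 3rd field is "swap".
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  sed -i -E 's/^([^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]].*)$/#\1/' "$fstab"
}

# Turn swap off now and keep it off after reboot (run as root).
disable_swap() {
  swapoff -a
  comment_out_swap /etc/fstab
}
```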

Install kubeadm

[root@server2 ~]# cd /etc/yum.repos.d/

[root@server2 yum.repos.d]# vim k8s.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

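If you previously configured a CentOS repository, disable it first to avoid dependency conflicts when installing the packages.

The steps are the same on every node (the repo file is copied to server3 and server4 with scp):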
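Instead of editing and copying k8s.repo by hand, each node can write the same file itself. A sketch with a hypothetical function name; the repo content is exactly the one above:

```shell
#!/usr/bin/env bash
# Write the Aliyun Kubernetes yum repo (same content as above) to a file,
# default /etc/yum.repos.d/k8s.repo.
write_k8s_repo() {
  local dest="${1:-/etc/yum.repos.d/k8s.repo}"
  cat > "$dest" <<'EOF'
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=0
EOF
}
```

Then run `yum install -y kubelet kubeadm kubectl && systemctl enable --now kubelet`. To match the v1.20.2 nodes shown at the end of this post, the packages can be pinned, e.g. `yum install -y kubelet-1.20.2 kubeadm-1.20.2 kubectl-1.20.2`.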

[root@server2 ~]# yum install kubelet kubeadm kubectl

[root@server2 ~]# systemctl enable --now kubelet

[root@server2 yum.repos.d]# scp k8s.repo server3:/etc/yum.repos.d/

[root@server2 yum.repos.d]# scp k8s.repo server4:/etc/yum.repos.d/

[root@server2 ~]# kubeadm config print init-defaults ## show the default configuration

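By default, kubeadm pulls the component images from k8s.gcr.io, which is not reachable from mainland China without a proxy, so point it at a mirror repository instead:

Only the master (control-plane) node needs to pull these images.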

[root@server2 ~]# kubeadm config images list --image-repository registry.aliyuncs.com/google_containers

## list the required images

[root@server2 ~]# kubeadm config images pull --image-repository registry.aliyuncs.com/google_containers

## pull the images

Initialize the cluster
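`--image-repository` keeps each image's name and tag and swaps only the registry prefix; for example, k8s.gcr.io/kube-apiserver:v1.20.2 becomes registry.aliyuncs.com/google_containers/kube-apiserver:v1.20.2. The hypothetical helper below illustrates that mapping (a simplification that keeps only the last path component, which is enough for the v1.20 image names):

```shell
#!/usr/bin/env bash
# Hypothetical helper: map an upstream image to its mirror name by
# replacing everything up to the last "/" with the mirror prefix.
mirror_image() {
  local image="$1"
  local mirror="${2:-registry.aliyuncs.com/google_containers}"
  echo "${mirror}/${image##*/}"
}

# Example: show the mapping for every image kubeadm needs.
#   for img in $(kubeadm config images list); do
#     echo "$img -> $(mirror_image "$img")"
#   done
```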

[root@server2 ~]# kubeadm init --pod-network-cidr=10.244.0.0/16 --image-repository registry.aliyuncs.com/google_containers

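--pod-network-cidr=10.244.0.0/16 // required when using the flannel network add-on (it matches flannel's default network)

--kubernetes-version // optionally pin the Kubernetes version to install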
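The same settings can also be kept in a kubeadm configuration file and passed with `kubeadm init --config kubeadm-config.yaml`. A sketch, assuming kubeadm v1.20 (which reads the kubeadm.k8s.io/v1beta2 config API) and the versions used in this post; the function names are hypothetical. The second function reproduces the kubeconfig steps that kubeadm init prints on success; kubectl needs them before the commands that follow will work:

```shell
#!/usr/bin/env bash
# Write a kubeadm config equivalent to the command-line flags above
# (assumption: kubeadm v1.20, v1beta2 config API).
write_kubeadm_config() {
  local dest="${1:-kubeadm-config.yaml}"
  cat > "$dest" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.20.2
imageRepository: registry.aliyuncs.com/google_containers
networking:
  podSubnet: 10.244.0.0/16
EOF
}

# After a successful `kubeadm init`, copy the admin kubeconfig for kubectl.
setup_kubectl() {
  mkdir -p "$HOME/.kube"
  cp -i /etc/kubernetes/admin.conf "$HOME/.kube/config"
  chown "$(id -u):$(id -g)" "$HOME/.kube/config"
}
```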

Install the flannel network add-on

https://github.com/coreos/flannel

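Backup link (network drive): kube-flannel.yml, extraction code: s452

Apply the manifest directly from GitHub:

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

or, if the download fails, get kube-flannel.yml from the network drive above and apply the local copy:

kubectl apply -f kube-flannel.yml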
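The --pod-network-cidr passed to kubeadm init must match the `Network` value in the net-conf.json section of kube-flannel.yml (10.244.0.0/16 by default). A hypothetical helper to compare the two, assuming the manifest keeps that value on a single line:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the "Network" value from the net-conf.json
# section of a kube-flannel.yml (e.g.  "Network": "10.244.0.0/16",).
flannel_network() {
  sed -n 's|.*"Network":[[:space:]]*"\([0-9./]*\)".*|\1|p' "$1"
}

# Return 1 (and say so) if it differs from the kubeadm --pod-network-cidr.
check_flannel_cidr() {
  local manifest="$1" cidr="$2" net
  net="$(flannel_network "$manifest")"
  if [ "$net" = "$cidr" ]; then
    echo "ok: $net"
  else
    echo "mismatch: flannel=$net kubeadm=$cidr"
    return 1
  fi
}
```

For example: `check_flannel_cidr kube-flannel.yml 10.244.0.0/16`.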

[root@server2 ~]# kubectl get pod --namespace kube-system

Once the network add-on is running, the node status changes to Ready.

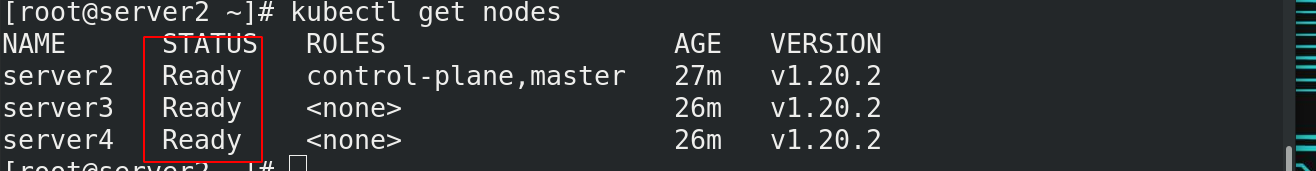

[root@server2 etc]# kubectl get nodes

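For convenience, package the required images and send them to the other nodes, so that they can load them locally instead of pulling them.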

[root@server2 ~]# docker save quay.io/coreos/flannel:v0.12.0-amd64 registry.aliyuncs.com/google_containers/pause:3.2 registry.aliyuncs.com/google_containers/coredns:1.7.0 registry.aliyuncs.com/google_containers/kube-proxy:v1.20.2 > node.tar

[root@server2 ~]# scp node.tar server3:~/

[root@server2 ~]# scp node.tar server4:~/

[root@server3 ~]# docker load -i node.tar

[root@server4 ~]# docker load -i node.tar
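The scp and docker load steps can be looped over the worker nodes. A sketch, assuming passwordless root SSH from server2 to the workers (the function names are hypothetical); with DRY_RUN=1 it only prints the commands it would run:

```shell
#!/usr/bin/env bash
# Run a command, or just print it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Copy an image tarball to each node's home directory and load it there.
ship_node_images() {
  local tarball="$1"; shift
  local node
  for node in "$@"; do
    run scp "$tarball" "$node:~/"
    run ssh "$node" docker load -i "$(basename "$tarball")"
  done
}
```

Usage on server2: `ship_node_images node.tar server3 server4`.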

Run the following on server3 and server4. This command is printed at the end of kubeadm init; use the one from your own output.

kubeadm join 172.25.1.2:6443 --token 3jc2ts.iby7sspiql1atfj2 \
--discovery-token-ca-cert-hash sha256:e5d5e3660a96182995168d4d35199c7cc451601453fe074957de6bf023e1cecc
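Bootstrap tokens expire after 24 hours by default; if the join fails for that reason, run `kubeadm token create --print-join-command` on the master to get a fresh command. Before pasting a command, the hypothetical helpers below check the token and hash formats (token: 6 and 16 lowercase alphanumerics separated by a dot; hash: `sha256:` plus 64 hex digits):

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if $1 looks like a kubeadm bootstrap token.
valid_token() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# Hypothetical helper: succeed if $1 looks like a discovery CA cert hash.
valid_ca_hash() {
  printf '%s\n' "$1" | grep -Eq '^sha256:[a-f0-9]{64}$'
}
```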

Finally, check the node status on the master:

[root@server2 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

server2 Ready control-plane,master 27m v1.20.2

server3 Ready <none> 26m v1.20.2

server4 Ready <none> 26m v1.20.2
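For scripts, this check can be automated. A hypothetical helper that reads `kubectl get nodes` output and lists the nodes whose STATUS is not exactly Ready:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get nodes` output on stdin, print the
# names of nodes whose STATUS column is not "Ready", and return 1 if any.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1; bad = 1 } END { exit bad }'
}
```

Usage: `kubectl get nodes | not_ready_nodes && echo "all nodes Ready"`. The built-in `kubectl wait --for=condition=Ready node --all --timeout=300s` is an alternative that blocks until every node reports Ready.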

If the node status and the Pods all look healthy, the cluster deployment is complete.
