Android MediaRecorder: the Sound Recorder's recording flow and class diagram

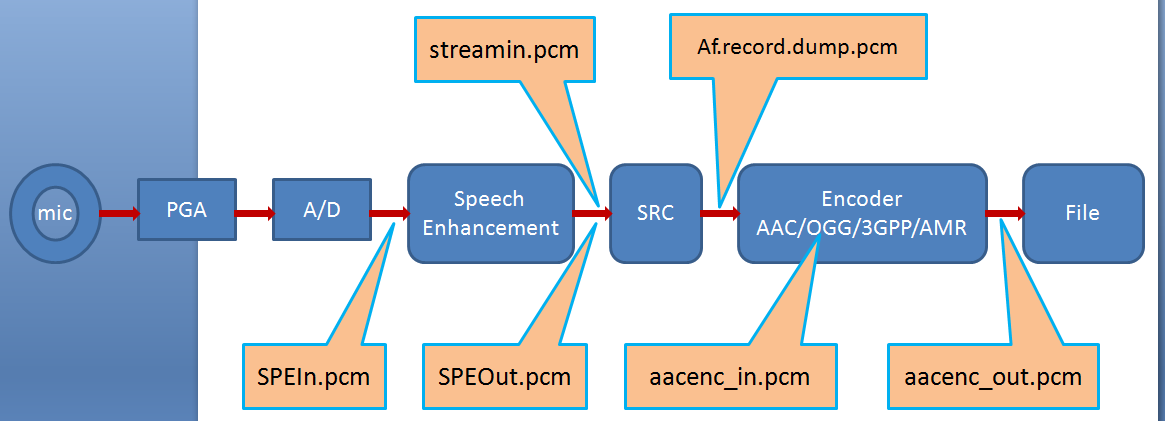

48 kHz recording

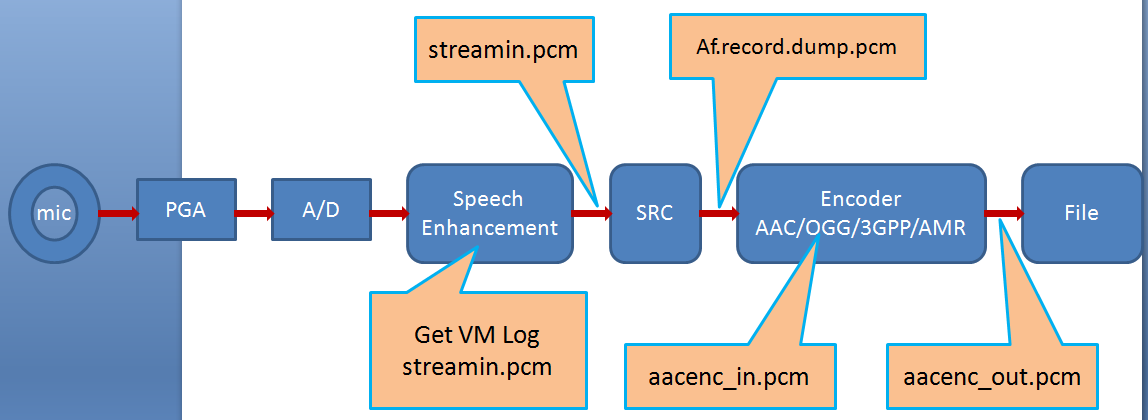

16 kHz recording

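From the software side, the cause of the problem has been located: it comes mainly from the SPE processing. In the PCM data captured during recording, the left and right channels differ little before SPE and differ considerably after SPE. SPE is MTK's speech enhancement algorithm, so fixing this requires help from the electronics (hardware) team to tune the SPE speech-enhancement parameters.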
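One way to put a number on "the left and right channels differ more after SPE" is to compare the RMS level of each channel in the `SPEIn_Uplink.pcm` and `SPEOut_Uplink.pcm` dumps. The sketch below assumes the dumps are headerless, interleaved, 16-bit stereo PCM in the host's (little-endian) byte order; that format is an assumption, not something stated by the MTK tooling, and the function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <cstdio>
#include <vector>

// RMS level of each channel of an interleaved stereo 16-bit buffer (L, R, L, R, ...).
struct ChannelStats {
    double rmsLeft = 0.0;
    double rmsRight = 0.0;
};

ChannelStats stereoRms(const std::vector<int16_t>& interleaved) {
    assert(interleaved.size() % 2 == 0 && "expected whole L/R frames");
    ChannelStats s;
    const std::size_t frames = interleaved.size() / 2;
    if (frames == 0) return s;
    double sumL = 0.0;
    double sumR = 0.0;
    for (std::size_t i = 0; i < frames; ++i) {
        const double l = interleaved[2 * i];
        const double r = interleaved[2 * i + 1];
        sumL += l * l;
        sumR += r * r;
    }
    s.rmsLeft = std::sqrt(sumL / frames);
    s.rmsRight = std::sqrt(sumR / frames);
    return s;
}

// Left-to-right level difference in dB: 0 dB means both channels are equally loud.
double channelImbalanceDb(const ChannelStats& s) {
    const double eps = 1e-9;  // keeps log10 finite if one channel is silent
    return 20.0 * std::log10((s.rmsLeft + eps) / (s.rmsRight + eps));
}

// Loads a headerless dump; assumes the host byte order matches the file (little-endian).
// A trailing odd sample (incomplete frame) is dropped.
std::vector<int16_t> readStereoPcm(const char* path) {
    std::vector<int16_t> samples;
    if (std::FILE* f = std::fopen(path, "rb")) {
        int16_t buf[4096];
        std::size_t n;
        while ((n = std::fread(buf, sizeof(int16_t), 4096, f)) > 0) {
            samples.insert(samples.end(), buf, buf + n);
        }
        std::fclose(f);
    }
    if (samples.size() % 2 != 0) samples.pop_back();
    return samples;
}
```

For example, `channelImbalanceDb(stereoRms(readStereoPcm("SPEOut_Uplink.pcm")))` gives the left/right level difference after SPE; a value far from the SPEIn figure matches the symptom described above. Computing it over short windows (say 20 ms) instead of the whole file would also show where the imbalance appears.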

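Dump points along the recording path, in processing order (for each one, set the property to 1 to start dumping and back to 0 to stop):

SPEIn_Uplink.pcm -> SPEOut_Uplink.pcm -> StreamInManager_Dump.pcm -> StreamInOri_Dump.pcm -> StreamIn_Dump.pcm -> aacenc_in.pcm -> aacenc_out.pcm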

1. Input PCM data before speech enhancement (SPE) processing

adb shell setprop SPEIn.pcm.dump 1

adb shell setprop SPEIn.pcm.dump 0

File location: /sdcard/pcm/audio_dump/SPEIn_Uplink.pcm

2. PCM data after speech enhancement processing

adb shell setprop SPEOut.pcm.dump 1

adb shell setprop SPEOut.pcm.dump 0

File location: /sdcard/pcm/audio_dump/SPEOut_Uplink.pcm

3. Speech enhancement EPL data

adb shell setprop SPE_EPL 1

adb shell setprop SPE_EPL 0

File location: /sdcard/pcm/audio_dump/SPE_EPL.EPL

4. StreamInManager_Dump.pcm

adb shell setprop streamin_manager.pcm.dump 1

adb shell setprop streamin_manager.pcm.dump 0

File location: /sdcard/pcm/audio_dump/StreamInManager_Dump.pcm

5. streamin_ori.pcm

adb shell setprop streamin_ori.pcm.dump 1

adb shell setprop streamin_ori.pcm.dump 0

File location: /sdcard/pcm/audio_dump/streamin_ori.pcm.dump

6. StreamIn_Dump.pcm

adb shell setprop streamin.pcm.dump 1

adb shell setprop streamin.pcm.dump 0

File location: /sdcard/pcm/audio_dump/StreamIn_Dump.pcm

7. aacenc_in.pcm

adb shell setprop audio.dumpenc.aac 1

adb shell setprop audio.dumpenc.aac 0

File location: /sdcard/pcm/audio_dump/aacenc_in.pcm

8. aacenc_out.pcm (enabled by the same property as item 7)

adb shell setprop audio.dumpenc.aac 1

adb shell setprop audio.dumpenc.aac 0

File location: /sdcard/pcm/audio_dump/aacenc_out.pcm
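When lining up dumps from different points in this chain, the file size alone hints at whether the sample rate or channel count changed between stages: for headerless PCM, size = duration × sample rate × channels × bytes per sample. A sketch, assuming 16-bit samples and a known rate and channel count (for example the 16 kHz stereo hardware data described later in these notes); the helper names are hypothetical, and it does not apply to aacenc_out.pcm, which presumably holds encoder output rather than PCM.

```cpp
#include <cassert>
#include <cstdint>

// Bytes per second of headerless PCM.
uint64_t pcmBytesPerSecond(uint32_t sampleRate, uint32_t channels, uint32_t bytesPerSample = 2) {
    assert(sampleRate > 0 && channels > 0 && bytesPerSample > 0);
    return uint64_t{sampleRate} * channels * bytesPerSample;
}

// Duration in seconds of a headerless PCM dump of fileBytes bytes.
double pcmDurationSeconds(uint64_t fileBytes, uint32_t sampleRate, uint32_t channels,
                          uint32_t bytesPerSample = 2) {
    return static_cast<double>(fileBytes) /
           static_cast<double>(pcmBytesPerSecond(sampleRate, channels, bytesPerSample));
}
```

A 10 s recording is 640,000 bytes at 16 kHz stereo but 1,764,000 bytes at 44.1 kHz stereo, so comparing the sizes of two dumps taken over the same recording shows whether resampling happened in between.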

---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

Key source files on the recording path (Java, JNI, client and media-server layers):

/frameworks/av/include/media/IMediaRecorder.h

/frameworks/av/include/media/MediaRecorderBase.h

/frameworks/av/include/media/mediarecorder.h

/frameworks/av/media/libmedia/IMediaRecorder.cpp

/frameworks/av/media/libmedia/mediarecorder.cpp

/frameworks/av/media/libmediaplayerservice/MediaRecorderClient.cpp

/frameworks/av/media/libmediaplayerservice/MediaRecorderClient.h

/frameworks/av/media/libmediaplayerservice/StagefrightRecorder.cpp

/frameworks/av/media/libmediaplayerservice/StagefrightRecorder.h

/frameworks/base/media/java/android/media/MediaRecorder.java

/frameworks/base/media/jni/android_media_MediaRecorder.cpp

/frameworks/av/media/libstagefright/MPEG4Writer.cpp

---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

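Windows batch script (note the trailing `pause`) used to replace the recorder app and the rebuilt media libraries on the device and reboot; `adb vivoroot` is a vendor-specific command, not part of stock adb:

adb vivoroot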

adb remount

adb shell rm /system/app/BBKSoundRecorder.odex

adb shell rm /system/app/BBKSoundRecorder.apk

adb push D:\1222W\BBKSoundRecorder.apk system/app

adb push D:\1222W\libmediaplayerservice.so system/lib/

adb push D:\1222W\libmedia.so system/lib/

adb push D:\1222W\libaudioflinger.so system/lib/

adb push D:\1222W\libandroid_runtime.so system/lib/

adb reboot

pause

---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

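Simplified excerpts of the data path from AudioRecord up to the AMR writer (`// ...` marks elided code):

```cpp
// AMRWriter's writer thread pulls encoded AMR frames from its source.
status_t AMRWriter::threadFunc() {
    status_t err = OK;
    // ...
    while (!mDone) {
        MediaBuffer *buffer;
        // mSource is set in status_t AMRWriter::addSource(const sp<MediaSource> &source) { mSource = source; }.
        // It is the encoder returned by StagefrightRecorder::createAudioSource(), which wraps
        // the AudioSource (AudioSource.cpp) and pulls PCM from it through AudioSource::read().
        err = mSource->read(&buffer);
        if (err != OK) {
            break;
        }
        // ... write the frame to the output file, then release the buffer
    }
    // ...
    return err;
}

// Hands the oldest queued PCM buffer to the caller (the encoder).
status_t AudioSource::read(MediaBuffer **out, const ReadOptions *options) {
    // ... waits on mFrameAvailableCondition while mBuffersReceived is empty
    MediaBuffer *buffer = *mBuffersReceived.begin();
    mBuffersReceived.erase(mBuffersReceived.begin());
    // ...
    *out = buffer;
    return OK;
}

// Called from AudioRecordCallbackFunction() each time AudioRecord delivers PCM data.
status_t AudioSource::dataCallback(const AudioRecord::Buffer& audioBuffer) {
    int64_t timeUs = systemTime() / 1000ll;
    // ... locking and start-up checks; lost input frames are covered by
    //     queueInputBuffer_l(lostAudioBuffer, timeUs)
    if (audioBuffer.size == 0) {
        ALOGW("Nothing is available from AudioRecord callback buffer");
        return OK;
    }
    const size_t bufferSize = audioBuffer.size;
    MediaBuffer *buffer = new MediaBuffer(bufferSize);
    memcpy((uint8_t *) buffer->data(), audioBuffer.i16, audioBuffer.size);
    buffer->set_range(0, bufferSize);
    queueInputBuffer_l(buffer, timeUs);  // appends the buffer to mBuffersReceived
    return OK;
}

// Timestamps the buffer, appends it to mBuffersReceived and wakes up read().
void AudioSource::queueInputBuffer_l(MediaBuffer *buffer, int64_t timeUs) {
    const size_t bufferSize = buffer->range_length();
    const size_t frameSize = mRecord->frameSize();
    // Previous timestamp plus this buffer's duration, rounded to the nearest microsecond.
    const int64_t timestampUs =
                mPrevSampleTimeUs +
                    ((1000000LL * (bufferSize / frameSize)) +
                        (mSampleRate >> 1)) / mSampleRate;

    if (mNumFramesReceived == 0) {
        buffer->meta_data()->setInt64(kKeyAnchorTime, mStartTimeUs);
    }
    buffer->meta_data()->setInt64(kKeyTime, mPrevSampleTimeUs);
    buffer->meta_data()->setInt64(kKeyDriftTime, timeUs - mInitialReadTimeUs);
    mPrevSampleTimeUs = timestampUs;
    mNumFramesReceived += bufferSize / frameSize;
    mBuffersReceived.push_back(buffer);
    mFrameAvailableCondition.signal();
}
```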
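The timestamp expression in `queueInputBuffer_l()` converts the buffer's frame count into microseconds and rounds to the nearest microsecond by adding `mSampleRate >> 1` (half the divisor) before the integer division. Pulled out as a standalone function (the name is hypothetical), it can be checked against concrete buffer sizes:

```cpp
#include <cassert>
#include <cstdint>

// Same expression as queueInputBuffer_l():
// next = prev + round(frames * 1,000,000 / sampleRate), rounding by adding sampleRate / 2.
int64_t nextSampleTimeUs(int64_t prevSampleTimeUs, int64_t bufferBytes,
                         int64_t frameSize, int64_t sampleRate) {
    assert(frameSize > 0 && sampleRate > 0);
    const int64_t frames = bufferBytes / frameSize;  // frameSize = channels * bytes per sample
    return prevSampleTimeUs + ((1000000LL * frames) + (sampleRate >> 1)) / sampleRate;
}
```

A 2048-byte buffer of 16-bit stereo at 44.1 kHz holds 512 frames, about 11609.98 µs, which rounds to 11610; 320 bytes of 16-bit mono at 8 kHz is 160 samples, the 20 ms of PCM behind one AMR-NB frame.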

The AudioRecord callback that feeds dataCallback(), and the AudioSource constructor that registers it (MTK additions are guarded by `#ifndef ANDROID_DEFAULT_CODE`):

```cpp
static void AudioRecordCallbackFunction(int event, void *user, void *info) {
    AudioSource *source = (AudioSource *) user;
    switch (event) {
        case AudioRecord::EVENT_MORE_DATA: {
            source->dataCallback(*((AudioRecord::Buffer *) info));
            break;
        }
        case AudioRecord::EVENT_OVERRUN: {
            ALOGW("AudioRecord reported overrun!");
            break;
        }
#ifndef ANDROID_DEFAULT_CODE
        // MTK addition (MTK80721, 2012-03-29): handle a wait timeout
        case AudioRecord::EVENT_WAIT_TIEMOUT: {
            ALOGE("audio record wait time out");
            AudioRecord::Buffer pbuffer;
            pbuffer.raw = NULL;
            source->dataCallback(pbuffer);
            break;
        }
#endif
        default:
            // does nothing
            break;
    }
}

AudioSource::AudioSource(
        audio_source_t inputSource, uint32_t sampleRate, uint32_t channelCount)
    : mRecord(NULL),
      mStarted(false),
      mSampleRate(sampleRate),
      mPrevSampleTimeUs(0),
      mNumFramesReceived(0),
      mNumClientOwnedBuffers(0) {
    ALOGV("sampleRate: %d, channelCount: %d", sampleRate, channelCount);
    CHECK(channelCount == 1 || channelCount == 2);

    int minFrameCount;
    status_t status = AudioRecord::getMinFrameCount(&minFrameCount,
                                           sampleRate,
                                           AUDIO_FORMAT_PCM_16_BIT,
                                           audio_channel_in_mask_from_count(channelCount));
    if (status == OK) {
        // make sure that the AudioRecord callback never returns more than the maximum
        // buffer size
        int frameCount = kMaxBufferSize / sizeof(int16_t) / channelCount;

        // make sure that the AudioRecord total buffer size is large enough
        int bufCount = 2;
        while ((bufCount * frameCount) < minFrameCount) {
            bufCount++;
        }

        mRecord = new AudioRecord(
                    inputSource, sampleRate, AUDIO_FORMAT_PCM_16_BIT,
                    audio_channel_in_mask_from_count(channelCount),
                    bufCount * frameCount,
                    AudioRecordCallbackFunction,
                    this,
                    frameCount);
        mInitCheck = mRecord->initCheck();
    } else {
        mInitCheck = status;
    }
}
```
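The sizing logic in the constructor keeps each callback at or below `kMaxBufferSize` bytes while making the whole AudioRecord buffer at least `minFrameCount` frames. The same arithmetic as a standalone function: `kMaxBufferSize` is passed in (assumed to be 2048, as in AOSP's AudioSource.h of this era; check your tree), and the `minFrameCount` of 1500 used below is hypothetical, since the real value comes from `AudioRecord::getMinFrameCount()` on the device.

```cpp
#include <cassert>
#include <cstdint>

// Result of the sizing loop in AudioSource::AudioSource().
struct RecordBufferLayout {
    int frameCount;  // frames per callback (notification period)
    int bufCount;    // number of such periods in the AudioRecord buffer
    int totalFrames() const { return bufCount * frameCount; }
};

// 16-bit PCM is assumed, as in the constructor (AUDIO_FORMAT_PCM_16_BIT).
RecordBufferLayout computeLayout(int maxBufferBytes, int channelCount, int minFrameCount) {
    assert(channelCount == 1 || channelCount == 2);  // same CHECK as the constructor
    RecordBufferLayout layout{};
    // Cap each callback at maxBufferBytes.
    layout.frameCount = maxBufferBytes / static_cast<int>(sizeof(int16_t)) / channelCount;
    assert(layout.frameCount > 0);  // otherwise the loop below would never end
    // Grow the total buffer until it reaches the device minimum.
    layout.bufCount = 2;
    while (layout.bufCount * layout.frameCount < minFrameCount) {
        ++layout.bufCount;
    }
    return layout;
}
```

With 2048 bytes and stereo, each callback carries 512 frames; if the device asks for at least 1500 frames, the loop settles on three such periods (1536 frames). In mono the periods are twice as long, so two already suffice.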

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

Class Overview

Used to record audio and video. The recording control is based on a simple state machine (see the state diagram in the Android MediaRecorder reference).

A common case of using MediaRecorder to record audio works as follows:

```java
MediaRecorder recorder = new MediaRecorder();
recorder.setAudioSource(MediaRecorder.AudioSource.MIC);
recorder.setOutputFormat(MediaRecorder.OutputFormat.THREE_GPP);
recorder.setAudioEncoder(MediaRecorder.AudioEncoder.AMR_NB);
recorder.setOutputFile(PATH_NAME);
recorder.prepare();
recorder.start();   // Recording is now started
...
recorder.stop();
recorder.reset();   // You can reuse the object by going back to setAudioSource() step
recorder.release(); // Now the object cannot be reused
```
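The "simple state machine" is what makes the call order in this example mandatory. The sketch below is a simplified model of the documented MediaRecorder state diagram, limited to the calls used above; it is not the framework's implementation, the class name is hypothetical, and a C++ exception stands in for the IllegalStateException the Java API throws.

```cpp
#include <cassert>
#include <stdexcept>
#include <string>

// Simplified MediaRecorder states, after the documented state diagram.
enum class RecState { Initial, Initialized, DataSourceConfigured, Prepared, Recording, Released };

class RecorderModel {
public:
    RecState state() const { return state_; }

    void setAudioSource() {
        require(state_ == RecState::Initial || state_ == RecState::Initialized, "setAudioSource");
        state_ = RecState::Initialized;
    }
    void setOutputFormat() {  // after setAudioSource(), before prepare()
        require(state_ == RecState::Initialized, "setOutputFormat");
        state_ = RecState::DataSourceConfigured;
    }
    void setAudioEncoder() { require(state_ == RecState::DataSourceConfigured, "setAudioEncoder"); }
    void setOutputFile() { require(state_ == RecState::DataSourceConfigured, "setOutputFile"); }
    void prepare() {
        require(state_ == RecState::DataSourceConfigured, "prepare");
        state_ = RecState::Prepared;
    }
    void start() {
        require(state_ == RecState::Prepared, "start");
        state_ = RecState::Recording;
    }
    void stop() {  // back to Initial: the object must be configured again
        require(state_ == RecState::Recording, "stop");
        state_ = RecState::Initial;
    }
    void reset() {  // allowed from any state except Released
        require(state_ != RecState::Released, "reset");
        state_ = RecState::Initial;
    }
    void release() { state_ = RecState::Released; }  // the object cannot be reused afterwards

private:
    // Stands in for the IllegalStateException thrown by the Java API.
    static void require(bool ok, const char* call) {
        if (!ok) throw std::logic_error(std::string(call) + "() called in an invalid state");
    }
    RecState state_ = RecState::Initial;
};
```

Calling `setOutputFormat()` before `setAudioSource()`, or `start()` before `prepare()`, throws in the model, mirroring the ordering rules of the Java API.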

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

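Layering of the recording call path, from the Java application down to the media server:

MediaRecordertest.java -> MediaRecorder.java -> android_media_MediaRecorder.cpp (JNI) -> mediarecorder.cpp (native client, calls the service through the BpMediaRecorder proxy from IMediaRecorder.cpp) <-Binder-> MediaRecorderClient.cpp (media server) -> StagefrightRecorder.cpp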

http://blog.csdn.net/martingang/article/details/8045717

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

Android: recording audio on the phone with MediaRecorder

Here is the annotated code:

```java
package cn.com.chenzheng_java.media;

import java.io.IOException;

import android.app.Activity;
import android.media.MediaRecorder;
import android.os.Bundle;

/**
 * @description A short test and explanation of recording audio on an Android phone
 * @author chenzheng_java
 * @since 2011/03/23
 */
public class MediaRecordActivity extends Activity {
    MediaRecorder mediaRecorder;

    @Override
    public void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.main);
        mediaRecorder = new MediaRecorder();
        record();
    }

    /**
     * Starts recording.
     */
    private void record() {
        /**
         * mediaRecorder.setAudioSource() sets the audio source.
         * The inner class MediaRecorder.AudioSource lists the available sources; there are many,
         * but the one used most is the phone's microphone, MediaRecorder.AudioSource.MIC.
         */
        mediaRecorder.setAudioSource(MediaRecorder.AudioSource.MIC);
        /**
         * mediaRecorder.setOutputFormat() sets the output file format. It must be called
         * after setAudioSource() and before prepare().
         * The inner class OutputFormat defines the formats, e.g. MPEG_4, THREE_GPP, RAW_AMR, ...
         */
        mediaRecorder.setOutputFormat(MediaRecorder.OutputFormat.THREE_GPP);
        /**
         * mediaRecorder.setAudioEncoder() sets the audio encoder.
         * The inner class AudioEncoder defines the encoders, e.g. AudioEncoder.DEFAULT and AudioEncoder.AMR_NB.
         */
        mediaRecorder.setAudioEncoder(MediaRecorder.AudioEncoder.DEFAULT);
        /**
         * Where the recorded file is saved. setOutputFile(String) expects a plain file-system
         * path (not a "file://" URI), and the directory must already exist.
         */
        mediaRecorder.setOutputFile("/sdcard/myvido/a.3gp");
        /**
         * prepare() must be called before start().
         */
        try {
            mediaRecorder.prepare();
            mediaRecorder.start();
        } catch (IllegalStateException e) {
            e.printStackTrace();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    /***
     * A few more parameters and methods related to recording:
     * sampleRateInHz: the sampling rate, i.e. samples per second; the higher it is, the better the
     *   quality. Typical examples are 44100, 22050 and 11025, but other values work too; low-quality
     *   audio can use low rates such as 4000 or 8000.
     * channelConfig: channel setting; Android supports stereo and mono (MONO, STEREO).
     * (sampleRateInHz and channelConfig are AudioRecord parameters; the MediaRecorder counterparts
     *   are setAudioSamplingRate() and setAudioChannels().)
     *
     * recorder.stop();    stops recording
     * recorder.reset();   resets the recorder back to the setAudioSource() step
     * recorder.release(); releases the resources held by the recorder
     */
}
```

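One important point: to record audio, the app must first declare the recording permission in AndroidManifest.xml:

`<uses-permission android:name="android.permission.RECORD_AUDIO"></uses-permission>`

Because this example writes its file to /sdcard, `android.permission.WRITE_EXTERNAL_STORAGE` must be declared as well.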

http://blog.csdn.net/chenzheng_java/article/category/800126

http://blog.csdn.net/lamdoc/article/category/1173899

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

An excerpt from AudioFlinger::openInput(): if the HAL rejects the requested parameters with BAD_VALUE, AudioFlinger tries again with the parameters the HAL proposed. The `samplingRate <= 2 * reqSamplingRate` limit is compiled in only under `ANDROID_DEFAULT_CODE`, so MTK builds reopen regardless of the rate ratio:

```cpp
// If the input could not be opened with the requested parameters and we can handle the conversion internally,
// try to open again with the proposed parameters. The AudioFlinger can resample the input and do mono to stereo
// or stereo to mono conversions on 16 bit PCM inputs.
if (inStream == NULL && status == BAD_VALUE &&
        reqFormat == format && format == AUDIO_FORMAT_PCM_16_BIT &&
#ifdef ANDROID_DEFAULT_CODE
        (samplingRate <= 2 * reqSamplingRate) &&
#endif
        (popcount(channels) < 3) && (popcount(reqChannels) < 3)) {
    LOGV("openInput() reopening with proposed sampling rate and channels");
    status = inHwDev->open_input_stream(inHwDev, *pDevices, (int *)&format,
                                        &channels, &samplingRate,
                                        (audio_in_acoustics_t)acoustics,
                                        &inStream);
}
```
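Restated as a standalone predicate, the retry condition makes the effect of the MTK change easy to see. Plain integers stand in for `audio_format_t` and channel masks (an illustration, not the real types), a flag selects whether the `ANDROID_DEFAULT_CODE` rate limit is compiled in, and all names are hypothetical.

```cpp
#include <bitset>
#include <cassert>
#include <cstdint>

// Stand-in for the input parameters (not the real audio_format_t / channel-mask types).
struct InputParams {
    int format;            // e.g. a value standing for AUDIO_FORMAT_PCM_16_BIT
    uint32_t sampleRate;   // Hz
    uint32_t channelMask;  // one bit per channel, as popcount() assumes
};

// Number of channels in a mask, like popcount() in the original.
int channelsInMask(uint32_t mask) {
    return static_cast<int>(std::bitset<32>(mask).count());
}

// Mirrors the reopen condition in AudioFlinger::openInput().
bool shouldReopenWithProposed(bool streamOpened, bool badValue, int pcm16Format,
                              const InputParams& req, const InputParams& proposed,
                              bool androidDefaultCode) {
    assert(req.sampleRate > 0);
    if (streamOpened || !badValue) return false;  // inStream == NULL && status == BAD_VALUE
    if (req.format != proposed.format || proposed.format != pcm16Format) return false;
    if (androidDefaultCode && proposed.sampleRate > 2 * req.sampleRate) {
        return false;  // rate limit only present under ANDROID_DEFAULT_CODE
    }
    return channelsInMask(proposed.channelMask) < 3 && channelsInMask(req.channelMask) < 3;
}
```

With the default code, an 8 kHz request that the hardware answers with 48 kHz is not retried (48000 > 2 × 8000); the MTK build retries it and relies on AudioFlinger to convert the rate.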

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

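Whatever the upper layer requests (48 kHz, 16 kHz or 8 kHz, mono or stereo), the hardware data in the low-level AudioYusuStreamIn.cpp is always 16 kHz stereo. AudioFlinger's threadLoop() then converts this 16 kHz stereo hardware PCM into 44.1 kHz data, which is finally encoded into an OGG, 3GPP or AMR recording file. Up to the encoder the data is identical for all three formats; the encoded content and the file sizes differ only because of the encoding step. Playback runs the process in reverse: the file is decoded first and ends up as PCM data that the hardware can play.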

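The three recording modes (Normal, near-field and far-field) differ as follows: the data coming from the hardware is the same, but before encoding it is filtered, using a separate set of filter parameters for each of the three modes.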

On the 89 platform, the hardware delivers 48 kHz recording data.

```cpp
bool AudioFlinger::RecordThread::threadLoop() {
    // ...
    mResampler->resample(mRsmpOutBuffer, framesOut, this);
    // ...
}
```
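`mResampler->resample()` is the step that turns the hardware-rate data (16 kHz on this MTK platform) into another rate. AudioFlinger's AudioResampler has its own implementations selected by quality setting; the sketch below is not that code, only plain linear interpolation over interleaved 16-bit PCM, to show what the conversion does to the frame count. The function name is hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Linear-interpolation resampler for interleaved 16-bit PCM (no anti-aliasing filter).
std::vector<int16_t> resampleLinear(const std::vector<int16_t>& in, int channels,
                                    int inRate, int outRate) {
    assert(channels > 0 && inRate > 0 && outRate > 0);
    const int64_t inFrames = static_cast<int64_t>(in.size()) / channels;
    const int64_t outFrames = inFrames * outRate / inRate;
    std::vector<int16_t> out(static_cast<std::size_t>(outFrames * channels));
    for (int64_t j = 0; j < outFrames; ++j) {
        // Position of output frame j on the input time axis.
        const double pos = static_cast<double>(j) * inRate / outRate;
        const int64_t i0 = static_cast<int64_t>(pos);
        const int64_t i1 = std::min<int64_t>(i0 + 1, inFrames - 1);
        const double frac = pos - static_cast<double>(i0);
        for (int c = 0; c < channels; ++c) {
            const double a = in[static_cast<std::size_t>(i0 * channels + c)];
            const double b = in[static_cast<std::size_t>(i1 * channels + c)];
            // Weighted mix of the two neighbouring input samples.
            out[static_cast<std::size_t>(j * channels + c)] =
                static_cast<int16_t>(std::lround(a + (b - a) * frac));
        }
    }
    return out;
}
```

160 frames at 16 kHz (10 ms) become 441 frames at 44.1 kHz. Unlike a production resampler, this one applies no low-pass filter, so using it to downsample would alias.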

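The human ear can hear audio signals from 20 Hz to 20 kHz, while human speech spans roughly 300 Hz to 3400 Hz. Real-world sounds such as music, wind and rain, or cars have a much wider bandwidth, up to the full 20 Hz to 20 kHz, and are commonly called full-band audio.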

石彦华(石彦华:6116) 2013-05-13 14:30:44

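The Normal / Meeting / Lecture recording modes are MTK's own feature; they cannot be used on Qualcomm platforms.

陈祎(陈祎 6115) 2013-05-13 14:32:20

That's a setting for the mic hardware, right?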

Regarding your question, please refer to our FAQ_03686: online.mediatek.com

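The three recording modes share the same flow; in practice they differ only in the noise-reduction processing, which depends on the recording distance: Normal for ordinary recording, Meeting for close-range recording, Lecture for far-field recording.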

Sampling theorem


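The sampling theorem, also known as the Shannon sampling theorem or the Nyquist sampling theorem, is an important fundamental result in information theory, and particularly in communications and signal processing. E. T. Whittaker (in work on interpolation theory published in 1915), Claude Shannon and Harry Nyquist all made important contributions to it, as did V. A. Kotelnikov.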

Introduction

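In analog-to-digital conversion, when the sampling frequency $f_s$ is at least twice the highest frequency $f_{max}$ present in the signal ($f_s \ge 2 f_{max}$), the sampled digital signal fully preserves the information in the original signal; in practice the sampling frequency is usually chosen to be 5 to 10 times the highest signal frequency. The sampling theorem is also called the Nyquist theorem. As early as 1924, Nyquist derived the formula for the maximum symbol rate over an ideal low-pass channel: $B = 2W$ baud, where $W$ is the bandwidth of the ideal low-pass channel in Hz. The limiting information rate (channel capacity) of an ideal channel is $C = B \log_2 N$ bit/s, where $N$ is the number of distinct signal levels.

The sampling theorem (also called the sampling law) states the rule a sampling process must follow: it relates the sampling frequency to the spectrum of the signal and is the basis for discretizing continuous signals. It was first proposed in 1928 by the American telecommunications engineer H. Nyquist, hence the name Nyquist sampling theorem. In 1933 the Soviet engineer Kotelnikov first stated it rigorously as a formula, so the Soviet literature calls it the Kotelnikov sampling theorem. In 1948 C. E. Shannon, the founder of information theory, stated it explicitly and formally cited it as a theorem, so much of the literature also calls it the Shannon sampling theorem. The theorem has many formulations, the most basic being the time-domain and the frequency-domain sampling theorems. It is widely applied in digital telemetry, time-division telemetry, information processing, digital communications and sampled-data control theory.

Time-domain and frequency-domain sampling theorems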

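Time-domain sampling theorem: a continuous signal $f(t)$ with bandwidth $F$ can be represented by a series of discrete samples $f(t_1), f(t_1 \pm \Delta t), f(t_1 \pm 2\Delta t), \ldots$; as long as the sampling interval satisfies $\Delta t \le \frac{1}{2F}$, the original signal $f(t)$ can be completely recovered from these samples. That is one formulation of the time-domain sampling theorem. Another is: when the highest frequency component of a time signal $f(t)$ is $f_M$, the values of $f(t)$ are determined by a series of samples taken at intervals no greater than $\frac{1}{2 f_M}$, i.e. at a sampling rate $f \ge 2 f_M$. (Figure: schematic of an analog signal and its samples.) The time-domain sampling theorem is the basis of sampling-error theory, random-variable sampling theory and multivariable sampling theory.

Frequency-domain sampling theorem: for a time-limited continuous signal $f(t)$ (that is, $f(t) = 0$ for $|t| > T$, where $T = T_2 - T_1$ is the duration of the signal) with spectrum $F(\omega)$, the spectrum can be represented in the frequency domain by a series of discrete samples, provided the frequency spacing between the samples satisfies $\omega \le \pi / t_m$.

http://baike.baidu.com/view/611055.htm

Given the range of human hearing (20 Hz to 20 kHz) and the sampling theorem, the sampling rate must be at least $2 \times 20\,\text{kHz} = 40\,\text{kHz}$ for the digital signal to fully preserve the original audio; the common 44.1 kHz rate satisfies this with some margin.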
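The practical consequence for this recording path: a tone above half the sampling rate that reaches the converter unfiltered does not vanish but folds back (aliases) to a lower frequency. The helper below (name hypothetical) computes the apparent frequency of a pure tone after sampling, and shows why data sampled at 16 kHz can carry content only up to 8 kHz, whatever rate it is resampled to afterwards.

```cpp
#include <cassert>
#include <cmath>

// Apparent frequency (Hz) of a pure tone at toneHz after sampling at sampleRateHz,
// assuming no anti-aliasing filter. The result lies in [0, sampleRateHz / 2].
double aliasedFrequency(double toneHz, double sampleRateHz) {
    assert(toneHz >= 0.0 && sampleRateHz > 0.0);
    const double r = std::fmod(toneHz, sampleRateHz);        // drop whole multiples of fs
    return (r > sampleRateHz / 2.0) ? sampleRateHz - r : r;  // mirror around fs / 2
}
```

A 10 kHz tone sampled at 16 kHz appears at 6 kHz, while at 44.1 kHz everything up to 20 kHz keeps its frequency. Speech in the 300 to 3400 Hz band is unaffected even at 8 kHz.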

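In practice, recording on MTK samples as follows: the hardware sampling rate is 16 kHz, stereo; the data is then resampled, turning the 16 kHz hardware data into 44.1 kHz data.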

Playback on MTK works similarly: the source data arrives at various sample rates, but after resampling it all becomes 44.1 kHz PCM data.
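Converting between these rates is a rational-ratio problem: the output/input ratio reduces to a pair of small integers L/M (upsample by L, downsample by M), which is how polyphase resamplers are usually described. This is generic arithmetic, not a description of MTK's resampler; the helper name is hypothetical, and `std::gcd` requires C++17.

```cpp
#include <cassert>
#include <cstdint>
#include <numeric>
#include <utility>

// Returns {L, M} such that outRate / inRate == L / M in lowest terms:
// upsample by L, then downsample by M.
std::pair<int64_t, int64_t> resampleRatio(int64_t inRate, int64_t outRate) {
    assert(inRate > 0 && outRate > 0);
    const int64_t g = std::gcd(inRate, outRate);
    return {outRate / g, inRate / g};
}
```

16 kHz to 44.1 kHz reduces to 441/160 and 48 kHz to 44.1 kHz to 147/160, while 48 kHz to 16 kHz is simply 1/3.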

Call traces captured with a debug marker ("this is syh") added to each function of interest: two AMR recordings, followed by an MPEG-4 recording.

```text
$ adb logcat -v threadtime | egrep "this is syh"
05-03 20:20:53.340 253 818 D StagefrightRecorder: frameworks/av/media/libmediaplayerservice/StagefrightRecorder.cpp(1004)-startAMRRecording:this is syh
05-03 20:20:53.340 253 818 D StagefrightRecorder: frameworks/av/media/libmediaplayerservice/StagefrightRecorder.cpp(1065)-startRawAudioRecording:this is syh
05-03 20:20:53.350 253 818 D AudioSource: frameworks/av/media/libstagefright/AudioSource.cpp(101)-AudioSource:this is syh
05-03 20:20:53.360 253 818 D StagefrightRecorder: frameworks/av/media/libmediaplayerservice/StagefrightRecorder.cpp(978)-createAudioSource-2:this is syh
05-03 20:20:53.360 253 818 D : frameworks/av/media/libstagefright/AMRWriter.cpp(97)-addSource:this is syh
05-03 20:20:53.360 253 818 D : frameworks/av/media/libstagefright/AMRWriter.cpp(110)-start:this is syh
05-03 20:21:32.238 253 253 D : frameworks/av/include/media/stagefright/AMRWriter.h(40)-stop:this is syh
05-03 20:21:32.238 253 253 D : frameworks/av/media/libstagefright/AMRWriter.cpp(158)-reset:this is syh
05-03 20:21:32.298 253 253 W AudioSource: frameworks/av/include/media/stagefright/AudioSource.h(45)-stop:this is syh
05-03 20:21:32.369 253 253 W AudioSource: frameworks/av/media/libstagefright/AudioSource.cpp(246)-reset---0:this is syh
05-03 20:21:32.369 253 253 W : frameworks/av/media/libstagefright/AMRWriter.cpp(171)-reset-0:this is syh

Administrator@tgdn-3288 ~
$ adb logcat -v threadtime | egrep "this is syh"
05-03 21:44:13.147 253 714 D StagefrightRecorder: frameworks/av/media/libmediaplayerservice/StagefrightRecorder.cpp(1004)-startAMRRecording:this is syh
05-03 21:44:13.147 253 714 D StagefrightRecorder: frameworks/av/media/libmediaplayerservice/StagefrightRecorder.cpp(1065)-startRawAudioRecording:this is syh
05-03 21:44:13.167 253 714 D AudioSource: frameworks/av/media/libstagefright/AudioSource.cpp(102)-AudioSource-0:this is syh
05-03 21:44:13.177 253 714 D StagefrightRecorder: frameworks/av/media/libmediaplayerservice/StagefrightRecorder.cpp(978)-createAudioSource-2:this is syh
05-03 21:44:13.177 253 714 D : frameworks/av/media/libstagefright/AMRWriter.cpp(98)-addSource:this is syh
05-03 21:44:13.408 253 714 D AudioSource: frameworks/av/media/libstagefright/AudioSource.cpp(214)-start-0:this is syh
05-03 21:46:23.695 253 253 W : frameworks/av/include/media/stagefright/AMRWriter.h(40)-stop:this is syh
05-03 21:46:23.695 253 253 D : frameworks/av/media/libstagefright/AMRWriter.cpp(160)-reset:this is syh
05-03 21:46:23.705 253 253 W AudioSource: frameworks/av/include/media/stagefright/AudioSource.h(45)-stop:this is syh
05-03 21:46:23.775 253 253 D AudioSource: frameworks/av/media/libstagefright/AudioSource.cpp(251)-reset-0:this is syh
05-03 21:46:23.775 253 253 D : frameworks/av/media/libstagefright/AMRWriter.cpp(173)-reset-0:this is syh

Administrator@tgdn-3288 ~
$ adb logcat -v threadtime | egrep "this is syh"
05-04 15:40:23.767 251 1461 D StagefrightRecorder: frameworks/av/media/libmediaplayerservice/StagefrightRecorder.cpp(1735)-startMPEG4Recording:this is syh
05-04 15:40:23.797 251 1461 D AudioSource: frameworks/av/media/libstagefright/AudioSource.cpp(102)-AudioSource-0:this is syh
05-04 15:40:23.817 251 1461 D StagefrightRecorder: frameworks/av/media/libmediaplayerservice/StagefrightRecorder.cpp(978)-createAudioSource-2:this is syh
05-04 15:40:23.937 251 1461 D AudioRecord: frameworks/av/media/libmedia/AudioRecord.cpp(386)-start-0:this is syh
05-04 15:40:23.937 251 1461 D AudioSource: frameworks/av/media/libstagefright/AudioSource.cpp(214)-start-0:this is syh
05-04 15:40:45.018 251 1462 W AudioSource: frameworks/av/include/media/stagefright/AudioSource.h(45)-stop:this is syh
05-04 15:40:45.108 251 1462 D AudioRecord: frameworks/av/media/libmedia/AudioRecord.cpp(400)-stop:this is syh
05-04 15:40:45.108 251 1462 D AudioSource: frameworks/av/media/libstagefright/AudioSource.cpp(251)-reset-0:this is syh
```
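Each trace line ends in `file(line)-label:this is syh`, where the label is the function name plus the author's optional suffix (`-0`, `-2`, ...). To turn a capture like these into a call sequence, a regex over that marker is enough. The sketch assumes exactly this marker format, uses hypothetical names, and needs C++17 for `std::optional`.

```cpp
#include <cassert>
#include <optional>
#include <regex>
#include <string>

struct TracePoint {
    std::string file;   // source path as printed, e.g. .../AudioSource.cpp
    int line = 0;       // line number in that file
    std::string label;  // function name plus the author's suffix
};

// Extracts "path(line)-label:this is syh" from one `logcat -v threadtime` line.
std::optional<TracePoint> parseTraceLine(const std::string& logLine) {
    static const std::regex kPattern(R"re(([^\s:]+)\((\d+)\)-([^:]+):this is syh)re");
    std::smatch m;
    if (!std::regex_search(logLine, m, kPattern)) return std::nullopt;
    TracePoint t;
    t.file = m[1].str();
    t.line = std::stoi(m[2].str());
    t.label = m[3].str();
    assert(!t.file.empty());  // guaranteed by the '+' in the first group
    return t;
}
```

Feeding each line of the first AMR capture through `parseTraceLine()` and printing the labels in order yields startAMRRecording, startRawAudioRecording, AudioSource, createAudioSource-2, addSource, start, and then the stop/reset calls.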
