Contents

- Build a Kafka Java client

- Understand the Kafka client types and how they differ

- Master the basic operations of the Kafka clients

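Kafka Client Operations

As the figure shows, Kafka provides five kinds of clients: admin, producer, consumer, connectors, and streams. We mainly study the producer and consumer clients. Of the two, the consumer API is the more complex to work with.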

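Kafka Client API Types

- AdminClient API: manages and inspects topics, brokers, and other Kafka objects

- Producer API: publishes messages to one or more topics

- Consumer API: subscribes to one or more topics and processes the messages produced to them

- Streams API: efficiently transforms input streams into output streams

- Connector API: pulls data from source systems or applications into Kafka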

AdminClient API

- pom dependency

<dependency>

<groupId>org.apache.kafka</groupId>

<artifactId>kafka-clients</artifactId>

<version>2.4.0</version>

</dependency>

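| API | Purpose |
|---|---|
| AdminClient | The AdminClient client object |
| NewTopic | Describes a topic to create |
| CreateTopicsResult | Result returned by topic creation |
| ListTopicsResult | Result of listing topics |
| ListTopicsOptions | Options for listing topics |
| DescribeTopicsResult | Result of describing topics |
| DescribeConfigsResult | Result of describing topic configuration |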

- Creating the AdminClient object

/**
 * Creating an AdminClient requires a Properties configuration object; a minimal configuration is used here.
 * @return the configured AdminClient
 */

public static AdminClient adminClient(){

Properties props = new Properties();

props.setProperty(AdminClientConfig.BOOTSTRAP_SERVERS_CONFIG,"192.163.124.3:9092");

AdminClient adminClient = AdminClient.create(props);

return adminClient;

}
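The broker address above is hardcoded, which is fine for study but awkward once the code runs against a different environment. The following is a minimal sketch, using only the JDK, of building the same `Properties` object from an overridable address. The class name `AdminClientProps`, the environment variable `KAFKA_BOOTSTRAP_SERVERS`, and the timeout value are assumptions made for illustration; the keys `bootstrap.servers` and `request.timeout.ms` are standard Kafka client settings.

```java
import java.util.Properties;

/** Sketch: builds AdminClient settings without hardcoding the broker address. */
final class AdminClientProps {

    // Hypothetical environment variable name, chosen for this example only.
    static final String ENV_BOOTSTRAP = "KAFKA_BOOTSTRAP_SERVERS";

    /** Returns the override if it is non-blank, otherwise the fallback address. */
    static String resolveBootstrap(String override, String fallback) {
        return (override == null || override.trim().isEmpty()) ? fallback : override.trim();
    }

    /** Builds the Properties object that would be handed to AdminClient.create(props). */
    static Properties build(String bootstrapServers) {
        Properties props = new Properties();
        // "bootstrap.servers" is the string behind AdminClientConfig.BOOTSTRAP_SERVERS_CONFIG.
        props.setProperty("bootstrap.servers", bootstrapServers);
        // "request.timeout.ms" is a standard client setting; 5000 is an arbitrary example value.
        props.setProperty("request.timeout.ms", "5000");
        return props;
    }

    public static void main(String[] args) {
        String servers = resolveBootstrap(System.getenv(ENV_BOOTSTRAP), "192.163.124.3:9092");
        System.out.println(build(servers));
    }
}
```

With the kafka-clients dependency on the classpath, the result of `build(...)` could be passed to `AdminClient.create(props)` in place of the inline configuration.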

- Creating, deleting, updating, and querying topics

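public static void createTopic() throws Exception {
    AdminClient adminClient = adminClient();
    // Replication factor; it must not exceed the number of brokers.
    short replicationFactor = 1;
    // Arguments: topic name, partition count, replication factor.
    NewTopic newTopic = new NewTopic(TOPIC_NAME, 1, replicationFactor);
    CreateTopicsResult topics = adminClient.createTopics(Arrays.asList(newTopic));
    // createTopics() is asynchronous; block until the broker confirms or rejects the request.
    topics.all().get();
    System.out.println("created topic: " + TOPIC_NAME);
}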

public static void delTopic() throws Exception{

AdminClient adminClient = adminClient();

DeleteTopicsResult deleteTopicsResult = adminClient.deleteTopics(Arrays.asList(TOPIC_NAME));

deleteTopicsResult.all().get();

}
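The `deleteTopicsResult.all().get()` call matters because the admin operations are asynchronous: the method returns immediately, and a failure only becomes visible once the returned future is awaited. The sketch below illustrates that behavior with the JDK's `CompletableFuture` as a stand-in for Kafka's own future type; it is not Kafka API code, and the class name `AwaitResultDemo` and the error message are invented for the example.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutionException;

/** Sketch: why an asynchronous result must be awaited before its outcome is known. */
final class AwaitResultDemo {

    /** Blocks on the future and reports success or the underlying failure cause. */
    static String awaitOutcome(CompletableFuture<Void> future) {
        try {
            future.get(); // plays the same blocking role as deleteTopicsResult.all().get()
            return "ok";
        } catch (ExecutionException e) {
            // The real failure is wrapped; getCause() exposes it.
            return "failed: " + e.getCause().getMessage();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // restore the interrupt flag
            return "interrupted";
        }
    }

    public static void main(String[] args) {
        // Stand-in for a request that succeeds.
        CompletableFuture<Void> accepted = CompletableFuture.completedFuture(null);

        // Stand-in for a request that is rejected.
        CompletableFuture<Void> rejected = new CompletableFuture<>();
        rejected.completeExceptionally(new IllegalStateException("topic does not exist"));

        System.out.println(awaitOutcome(accepted)); // prints "ok"
        System.out.println(awaitOutcome(rejected)); // prints "failed: topic does not exist"
    }
}
```

If `get()` is never called, the rejected case passes silently, which is why a method that only fires the request can appear to succeed even when nothing changed on the broker.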

/**

* Lists topics and prints their names

* @throws Exception

*/

public static void topicLists() throws Exception{

AdminClient adminClient = adminClient();

ListTopicsOptions listTopicsOptions = new ListTopicsOptions();

listTopicsOptions.listInternal(true);

ListTopicsResult listTopics = adminClient.listTopics(listTopicsOptions);

Set<String> strings = listTopics.names().get();

strings.forEach(System.out::println);

}

/**

* Describes a topic and prints the result; mainly useful for Kafka monitoring APIs

* name:kafka_study-topic,desc:(name=kafka_study-topic,

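* internal=false, (early 0.x versions of Kafka kept consumer offsets in ZooKeeper; syncing offsets through ZooKeeper was slow and hurt throughput, so later versions store them in Kafka itself, in the internal topic __consumer_offsets)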

* partitions=

* (partition=0,

* leader=192.163.124.3:9092 (id: 0 rack: null),

* replicas=192.163.124.3:9092 (id: 0 rack: null),

* isr=192.163.124.3:9092 (id: 0 rack: null)), (single-broker setup, so the leader is the only member of the replica and ISR sets)

* authorizedOperations=[])

*/

public static void describeTopics()throws Exception{

AdminClient adminClient = adminClient();

DescribeTopicsResult describeTopicsResult = adminClient.describeTopics(Arrays.asList(TOPIC_NAME));

Map<String, TopicDescription> stringTopicDescriptionMap = describeTopicsResult.all().get();

stringTopicDescriptionMap.forEach((k,v) ->{

System.out.println("name:"+k+","+"desc:"+v);

});

}

/**

* Shows the topic configuration; mainly useful for Kafka monitoring APIs

* ConfigResource:ConfigResource(type=TOPIC, name='kafka_study-topic')

* Config:Config(entries=[

* ConfigEntry(name=compression.type, value=producer, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]),

* ConfigEntry(name=leader.replication.throttled.replicas, value=, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]),

* ConfigEntry(name=message.downconversion.enable, value=true, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]),

* ConfigEntry(name=min.insync.replicas, value=1, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]),

* ConfigEntry(name=segment.jitter.ms, value=0, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]),

* ConfigEntry(name=cleanup.policy, value=delete, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]),

* ConfigEntry(name=flush.ms, value=9223372036854775807, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]),

* ConfigEntry(name=follower.replication.throttled.replicas, value=, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]),

* ConfigEntry(name=segment.bytes, value=1073741824, source=STATIC_BROKER_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]),

* ConfigEntry(name=retention.ms, value=604800000, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]),

* ConfigEntry(name=flush.messages, value=9223372036854775807, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]),

* ConfigEntry(name=message.format.version, value=2.4-IV1, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]),

* ConfigEntry(name=file.delete.delay.ms, value=60000, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]),

* ConfigEntry(name=max.compaction.lag.ms, value=9223372036854775807, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]),

* ConfigEntry(name=max.message.bytes, value=1000012, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]),

* ConfigEntry(name=min.compaction.lag.ms, value=0, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]),

* ConfigEntry(name=message.timestamp.type, value=CreateTime, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]),

* ConfigEntry(name=preallocate, value=false, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]),

* ConfigEntry(name=min.cleanable.dirty.ratio, value=0.5, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]),

* ConfigEntry(name=index.interval.bytes, value=4096, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]),

* ConfigEntry(name=unclean.leader.election.enable, value=false, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]),

* ConfigEntry(name=retention.bytes, value=-1, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]),

* ConfigEntry(name=delete.retention.ms, value=86400000, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]),

* ConfigEntry(name=segment.ms, value=604800000, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]),

* ConfigEntry(name=message.timestamp.difference.max.ms, value=9223372036854775807, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[]),

* ConfigEntry(name=segment.index.bytes, value=10485760, source=DEFAULT_CONFIG, isSensitive=false, isReadOnly=false, synonyms=[])])

* @throws Exception

*/

public static void describeConfig() throws Exception {

AdminClient adminClient = adminClient();

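// In a cluster, the BROKER resource type reads broker-level configuration; the resource name must then be a broker id rather than a topic name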

//ConfigResource configResource = new ConfigResource(ConfigResource.Type.BROKER, TOPIC_NAME);

ConfigResource configResource = new ConfigResource(ConfigResource.Type.TOPIC, TOPIC_NAME);

DescribeConfigsResult describeConfigsResult = adminClient.describeConfigs(Arrays.asList(configResource));

Map<ConfigResource, Config> configResourceConfigMap = describeConfigsResult.all().get();

configResourceConfigMap.forEach((k,v) -> {

System.out.println("ConfigResource:"+k+"Config:"+v);

});

}

/**

* Modifies the topic configuration

* @throws Exception

*/

public static void updateConfig() throws Exception {

AdminClient adminClient = adminClient();

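// alterConfigs() is deprecated in favor of incrementalAlterConfigs(), which is used below. Cluster support for the new API is reported to be poor, so the alterConfigs() variant is kept in the comment block as a temporary fallback.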

/*

Map<ConfigResource, Config> configMaps = new HashMap<>();

ConfigResource configResource = new ConfigResource(ConfigResource.Type.TOPIC, TOPIC_NAME);

Config config = new Config(Arrays.asList(new ConfigEntry("preallocate","true")));

configMaps.put(configResource,config);

AlterConfigsResult alterConfigsResult = adminClient.alterConfigs(configMaps);

alterConfigsResult.all().get();

*/

Map<ConfigResource, Collection<AlterConfigOp>> configMaps = new HashMap<>();

ConfigResource configResource = new ConfigResource(ConfigResource.Type.TOPIC, TOPIC_NAME);

AlterConfigOp alterConfigOp = new AlterConfigOp(new ConfigEntry("preallocate","true"),AlterConfigOp.OpType.SET);

configMaps.put(configResource,Arrays.asList(alterConfigOp));

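// The call is asynchronous; wait for it so that a rejected change surfaces as an exception.
adminClient.incrementalAlterConfigs(configMaps).all().get();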

}

/**

* In Kafka, the partition count of a topic can only be increased, never decreased

*/

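public static void incrPartitions(int partitionsNum) throws Exception {
    AdminClient adminClient = adminClient();
    Map<String, NewPartitions> partitionsMap = new HashMap<>();
    // increaseTo() takes the new total partition count, not the number of partitions to add.
    NewPartitions newPartitions = NewPartitions.increaseTo(partitionsNum);
    partitionsMap.put(TOPIC_NAME, newPartitions);
    // Wait for the result so that a rejected request surfaces as an exception.
    adminClient.createPartitions(partitionsMap).all().get();
}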
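Because `NewPartitions.increaseTo` takes the new total and partitions can only grow, it can help to validate the requested count on the client before sending the request. The following is a minimal sketch of such a guard using only the JDK. The class name `PartitionGuard` and its method are hypothetical, and the current count is passed in directly here, whereas in practice it would be read from the topic description.

```java
/** Sketch: client-side guard for the "partitions can only grow" rule. */
final class PartitionGuard {

    /**
     * Returns the requested total if it is a valid increase over the current count.
     * Throws IllegalArgumentException when the request would keep or shrink the count.
     */
    static int requireIncrease(int currentCount, int requestedCount) {
        if (requestedCount <= currentCount) {
            throw new IllegalArgumentException(
                "requested " + requestedCount + " partitions, but the topic already has " + currentCount);
        }
        return requestedCount;
    }

    public static void main(String[] args) {
        // Growing from 1 to 3 partitions is allowed.
        System.out.println(requireIncrease(1, 3));
        try {
            // Shrinking from 3 to 2 partitions is rejected by the guard.
            requireIncrease(3, 2);
        } catch (IllegalArgumentException e) {
            System.out.println("rejected: " + e.getMessage());
        }
    }
}
```

The value returned by `requireIncrease` could then be passed as `partitionsNum` to `incrPartitions`, which turns an invalid request into an immediate local error instead of a failed round trip to the broker.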

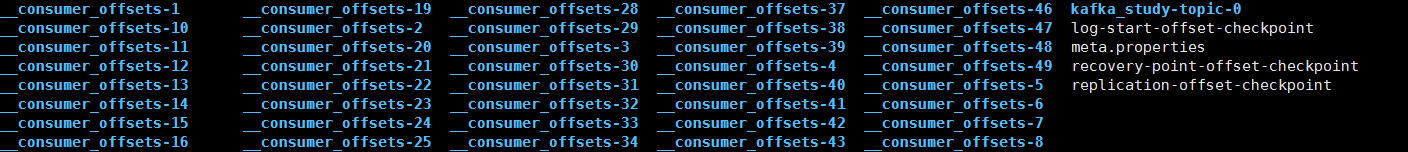

[root@localhost logs]# ls /tmp/kafka-logs/
