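I had never worked with Hadoop before this, and I still do not understand many of the details in depth, so treat this post purely as a record of the steps I took.

The environment is CentOS 7, with four VirtualBox virtual machines forming the cluster. Look up the network configuration yourself; for passwordless SSH between nodes, see my other article: SSH passwordless login and jump hosts.

In this layout the Hadoop cluster needs four nodes: master, slave1, slave2, and slave3. Two NameNodes (master as the active NameNode, slave1 as the standby NameNode) guard against a single point of failure, and slave1, slave2, and slave3 (every node except master) are the DataNodes.

HDFS relies on ZooKeeper for its high-availability (automatic failover) mechanism, so ZooKeeper has to be set up first.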

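PS: a ZooKeeper ensemble should have at least three servers, and an odd number is strongly recommended, because a majority of the servers must stay up for the ensemble to keep working.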
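To see why an odd server count is the usual recommendation, here is a small sketch of the majority-quorum arithmetic. It assumes the standard majority rule (a quorum is more than half of the ensemble); the function names are made up for illustration.

```shell
#!/bin/sh
# Majority quorum size for an ensemble of n servers: floor(n/2) + 1.
quorum_size() {
    echo $(( $1 / 2 + 1 ))
}

# Number of servers that may fail while a majority still remains.
tolerated_failures() {
    echo $(( $1 - ($1 / 2 + 1) ))
}

for n in 3 4 5; do
    echo "nodes=$n quorum=$(quorum_size "$n") tolerated_failures=$(tolerated_failures "$n")"
done
```

Four servers tolerate one failure, the same as three, so an even-sized ensemble adds a machine without adding fault tolerance.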

Note: NN (NameNode), JN (JournalNode), DN (DataNode). For the role of the JN, see: https://www.cnblogs.com/muhongxin/p/9436765.html

ZooKeeper setup:

1. Log in to the master host, download the ZooKeeper archive from the official site, and extract it to /opt/soft

2. Configure zookeeper/conf/zoo.cfg

# The number of milliseconds of each tick

tickTime=2000

# The number of ticks that the initial

# synchronization phase can take

initLimit=10

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

syncLimit=5

# the directory where the snapshot is stored.

# do not use /tmp for storage, /tmp here is just

# example sakes.

dataDir=/data/zookeeper

# the port at which the clients will connect

clientPort=2181

# the maximum number of client connections.

# increase this if you need to handle more clients

#maxClientCnxns=60

#

# Be sure to read the maintenance section of the

# administrator guide before turning on autopurge.

#

# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance

#

# The number of snapshots to retain in dataDir

#autopurge.snapRetainCount=3

# Purge task interval in hours

# Set to "0" to disable auto purge feature

#autopurge.purgeInterval=1

server.1=192.168.1.240:2888:3888

server.2=192.168.1.241:2888:3888

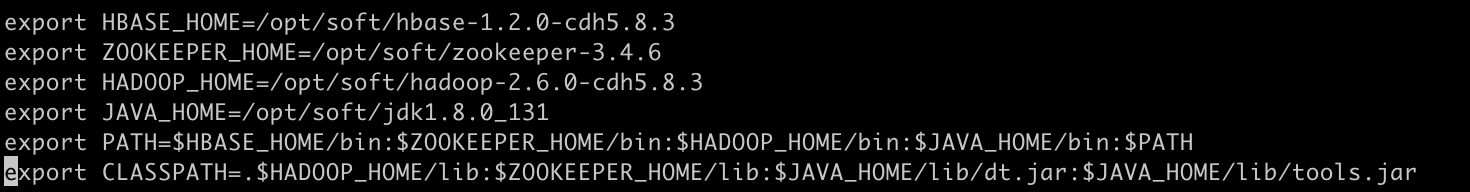

server.3=192.168.1.242:2888:38883、配置zookeeper/etc/profile环境变量(底部添加export)

vi /etc/profile

source /etc/profile
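The export lines themselves are not shown in this step. A minimal sketch, assuming the install path /opt/soft/zookeeper-3.4.6 used later in this post; the variable name ZOOKEEPER_HOME is a common convention and an assumption here, not something ZooKeeper requires.

```shell
# Lines to append at the bottom of /etc/profile (assumed name and path).
export ZOOKEEPER_HOME=/opt/soft/zookeeper-3.4.6
# Put zkServer.sh and zkCli.sh on the PATH.
export PATH="$PATH:$ZOOKEEPER_HOME/bin"
```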

4. Create the myid file inside the dataDir configured in zoo.cfg (each host in the cluster gets the number from its own server.N line)

echo 1 > /data/zookeeper/myid
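Because each host's myid has to equal the N of its own server.N line, the number can be looked up rather than typed by hand. The following is only a sketch, assuming the server entries from zoo.cfg in step 2; the helper name myid_for and the temporary file are illustrative.

```shell
#!/bin/sh
# Print the N of the "server.N=<host>:..." line whose host matches $2.
myid_for() {
    cfg=$1
    host=$2
    awk -F= -v h="$host" '
        /^server\.[0-9]+=/ {
            id = substr($1, 8)        # text after "server."
            split($2, parts, ":")     # parts[1] is the host or IP
            if (parts[1] == h) print id
        }' "$cfg"
}

# Demo against a throwaway copy of the server lines from zoo.cfg.
cfg=$(mktemp)
cat > "$cfg" <<'EOF'
server.1=192.168.1.240:2888:3888
server.2=192.168.1.241:2888:3888
server.3=192.168.1.242:2888:3888
EOF
myid_for "$cfg" 192.168.1.241
rm -f "$cfg"
```

On a real host the output would be redirected into the myid file under the dataDir from zoo.cfg.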

5. Sync the installation to every host in the cluster

scp -r /opt/soft/zookeeper-3.4.6/ slave1:/opt/soft/

scp -r /opt/soft/zookeeper-3.4.6/ slave2:/opt/soft/

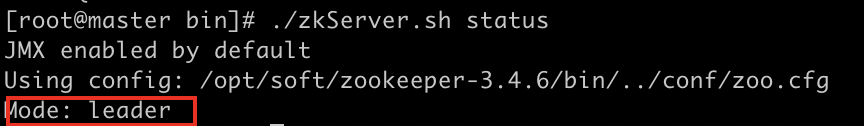

...

6. Start ZooKeeper on each host individually with bin/zkServer.sh, then check each host's status

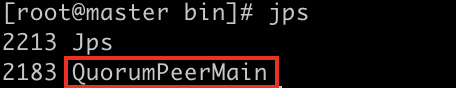
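Starting and checking every server by hand gets repetitive, so a loop over SSH is one option. This is a sketch only: it assumes the ensemble hosts are master, slave1, and slave2 (as in ha.zookeeper.quorum later in this post), that passwordless SSH works, and that JAVA_HOME is available to non-interactive shells. The run and zk_all helpers are hypothetical, and DRY_RUN defaults to printing the commands instead of running them.

```shell
#!/bin/sh
# Install path and hosts are taken from this post; adjust for your cluster.
ZK_HOME=/opt/soft/zookeeper-3.4.6
ZK_HOSTS="master slave1 slave2"

# With DRY_RUN=1 (the default here) commands are printed, not executed.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Run "zkServer.sh <action>" on every ensemble host.
zk_all() {
    action=$1   # "start" or "status"
    for h in $ZK_HOSTS; do
        run ssh "$h" "$ZK_HOME/bin/zkServer.sh $action"
    done
}

zk_all start
zk_all status
```

With DRY_RUN=0, the status call should report one host in leader mode and the others in follower mode once the ensemble has formed.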

7. Check the processes with jps (requires the JDK to be configured)

Hadoop setup:

1. Log in to the master host, download the Hadoop archive from the official site, and extract it to /opt/soft

2. Configure hadoop/etc/hadoop/core-site.xml and hadoop/etc/hadoop/hdfs-site.xml

core-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://mycluster</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/data/hadoop/tmp</value>

</property>

<property>

<name>dfs.name.dir</name>

<value>/data/hadoop/name</value>

</property>

<property>

<name>ha.zookeeper.quorum</name>

<value>slave1:2181,slave2:2181,master:2181</value>

</property>

</configuration>

hdfs-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:///data/hadoop/hdfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:///data/hadoop/hdfs/data</value>

</property>

<property>

<name>dfs.permissions.enabled</name>

<value>false</value>

</property>

<!-- Enable WebHDFS (the REST-based interface) -->

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

<!-- HDFS HA configuration starts here -->

<!-- Set the HDFS nameservice name to mycluster -->

<property>

<name>dfs.nameservices</name>

<value>mycluster</value>

</property>

<!-- IDs of the two NameNodes in mycluster: slave1 and master -->

<property>

<name>dfs.ha.namenodes.mycluster</name>

<value>slave1,master</value>

</property>

<property>

<name>dfs.namenode.rpc-address.mycluster.slave1</name>

<value>slave1:8020</value>

</property>

<property>

<name>dfs.namenode.rpc-address.mycluster.master</name>

<value>master:8020</value>

</property>

<property>

<name>dfs.namenode.http-address.mycluster.slave1</name>

<value>slave1:50070</value>

</property>

<property>

<name>dfs.namenode.http-address.mycluster.master</name>

<value>master:50070</value>

</property>

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://slave1:8485;slave2:8485;slave3:8485/mycluster</value>

</property>

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/data/hadoop/journal</value>

</property>

<!-- Java class that HDFS clients use to locate the active NameNode -->

<property>

<name>dfs.client.failover.proxy.provider.mycluster</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<!-- Use SSH as the fencing method -->

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence</value>

</property>

<!-- Location of the private key used for SSH fencing -->

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/root/.ssh/id_rsa</value>

</property>

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

</configuration>

3. Initialization

- Stop the HDFS service

- Start only the journal nodes (as they will need to be made aware of the formatting)

- On the first namenode (as user hdfs)

  - hdfs namenode -format

  - hdfs namenode -initializeSharedEdits -force (for the journal nodes)

  - hdfs zkfc -formatZK -force (to force ZooKeeper to reinitialise)

  - restart that first namenode

- On the second namenode

  - hdfs namenode -bootstrapStandby -force (force sync with the first namenode)

- On every datanode clear the data directory

- Restart the HDFS service
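The order of these steps matters, so it can help to write them out as concrete commands per host. The sketch below only prints a plan and executes nothing. It assumes master is the first NameNode and slave1 the second, takes the JournalNode hosts from dfs.namenode.shared.edits.dir, and uses sbin/hadoop-daemon.sh from the Hadoop 2.x layout; HADOOP_HOME, plan, and ha_init_plan are names chosen for illustration.

```shell
#!/bin/sh
# Prints the HA initialization sequence; nothing is executed.
# HADOOP_HOME is an assumed variable, defaulting to the path used in this post.
HADOOP_HOME=${HADOOP_HOME:-/opt/soft/hadoop-2.6.0-cdh5.8.3}
JOURNAL_NODES="slave1 slave2 slave3"
FIRST_NN=master     # assumed to be the first (active) NameNode
SECOND_NN=slave1    # assumed to be the second (standby) NameNode

# Print one step as "[host] command".
plan() {
    echo "[$1] $2"
}

ha_init_plan() {
    # JournalNodes must be running before the shared edits are initialized.
    for h in $JOURNAL_NODES; do
        plan "$h" "$HADOOP_HOME/sbin/hadoop-daemon.sh start journalnode"
    done
    # "hdfs namenode" is the non-deprecated form of "hadoop namenode".
    plan "$FIRST_NN" "hdfs namenode -format"
    plan "$FIRST_NN" "hdfs namenode -initializeSharedEdits -force"
    plan "$FIRST_NN" "hdfs zkfc -formatZK -force"
    plan "$FIRST_NN" "$HADOOP_HOME/sbin/hadoop-daemon.sh start namenode"
    # The standby copies its metadata from the running first NameNode.
    plan "$SECOND_NN" "hdfs namenode -bootstrapStandby -force"
}

ha_init_plan
```

Note that the format commands wipe existing metadata, which is why the plan is printed for review rather than run directly.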

4. Copy Hadoop to all hosts

scp -r /opt/soft/hadoop-2.6.0-cdh5.8.3 slave1:/opt/soft/

...

5. Start HDFS with sbin/start-dfs.sh

6. Check with jps that the NN, JN, and DN processes started correctly

7. Open the web UIs: master-ip:50070 should show active, and slave1-ip:50070 should show standby
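The same active/standby check can be done from the command line with hdfs haadmin. A small sketch that prints the commands for the two NameNode IDs configured in dfs.ha.namenodes.mycluster (slave1 and master); the function name is illustrative, and the commands are printed rather than executed so they can be reviewed first.

```shell
#!/bin/sh
# NameNode IDs come from dfs.ha.namenodes.mycluster in hdfs-site.xml.
NN_IDS="master slave1"

# Print one "hdfs haadmin -getServiceState" command per NameNode ID.
ha_state_commands() {
    for id in $NN_IDS; do
        echo "hdfs haadmin -getServiceState $id"
    done
}

ha_state_commands
```

Each command reports either active or standby for the given NameNode ID when run on a cluster host.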

HBase setup:

1. Copy hadoop/etc/hadoop/core-site.xml and hadoop/etc/hadoop/hdfs-site.xml to hbase/conf

2. Configure hbase-site.xml

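<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

/**

 *

 * Licensed to the Apache Software Foundation (ASF) under one

 * or more contributor license agreements.  See the NOTICE file

 * distributed with this work for additional information

 * regarding copyright ownership.  The ASF licenses this file

 * to you under the Apache License, Version 2.0 (the

 * "License"); you may not use this file except in compliance

 * with the License.  You may obtain a copy of the License at

 *

 *     http://www.apache.org/licenses/LICENSE-2.0

 *

 * Unless required by applicable law or agreed to in writing, software

 * distributed under the License is distributed on an "AS IS" BASIS,

 * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

 * See the License for the specific language governing permissions and

 * limitations under the License.

 */

-->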

<configuration>

<!-- Where HBase stores its data -->

<property>

<name>hbase.rootdir</name>

<value>hdfs://mycluster/HBase</value>

</property>

<!-- true enables distributed (cluster) mode -->

<property>

<name>hbase.cluster.distributed</name>

<value>true</value>

</property>

<!-- Changed after 0.98: earlier versions had no .port property, and the default port was 60000 -->

<property>

<name>hbase.master.port</name>

<value>16000</value>

</property>

<!-- ZooKeeper addresses -->

<property>

<name>hbase.zookeeper.quorum</name>

<value>slave1:2181,slave2:2181,master:2181</value>

</property>

<!-- Matches dataDir in ZooKeeper's zoo.cfg: where snapshots are stored -->

<property>

<name>hbase.zookeeper.property.dataDir</name>

<value>/data/zookeeper</value>

</property>

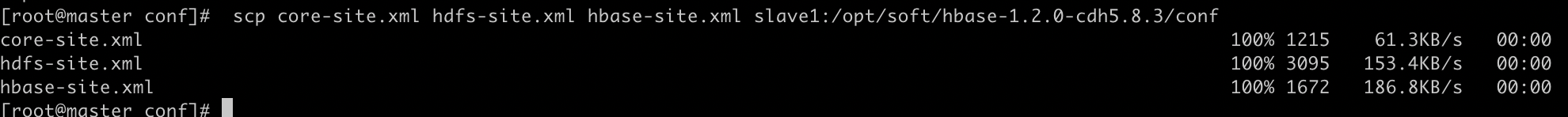

</configuration>3、同步配置文件至集群内所有主机

scp core-site.xml hdfs-site.xml hbase-site.xml slave1:/opt/soft/hbase-1.2.0-cdh5.8.3/conf

4. Start HBase, then open the web UI at master-ip:60010
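Because this cluster already runs its own ZooKeeper ensemble, HBase is normally told not to manage one itself. The sketch below shows the relevant line for conf/hbase-env.sh followed by the start command as a comment; the install path is taken from the scp command in this post, and whether the setting is needed depends on what your hbase-env.sh already contains.

```shell
# conf/hbase-env.sh: use the external ZooKeeper ensemble rather than one started by HBase.
export HBASE_MANAGES_ZK=false

# Install path assumed from the scp command in step 3.
HBASE_HOME=/opt/soft/hbase-1.2.0-cdh5.8.3

# Then, on the master host, start the cluster with:
#   "$HBASE_HOME/bin/start-hbase.sh"
```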
