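1. Set up three virtual machines

- Start three virtual machines running CentOS 7 with the network in NAT mode (bridged mode probably will not work on a campus network)

- Set the hostname on each virtual machine

# Set the hostnames in turn, one command per machine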

hostnamectl set-hostname k8s-master1

hostnamectl set-hostname k8s-worker1

hostnamectl set-hostname k8s-worker2

- Configure the host IP address

# View the ens33 configuration file

cat /etc/sysconfig/network-scripts/ifcfg-ens33

# Then edit it

vi /etc/sysconfig/network-scripts/ifcfg-ens33

# Change BOOTPROTO to

BOOTPROTO="static" or BOOTPROTO="none"

# Append to the end of the file

IPADDR="192.168.255.100"

PREFIX="24"

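GATEWAY="192.168.255.2"

DNS1="119.29.29.29" # any public DNS server works

The IP address must be in the same subnet as the virtual network adapter (the NAT network), and so must the gateway.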
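A quick way to catch a mistyped address before restarting the network is to compare the network parts of two addresses. The following is only a sketch: the helper names ip_to_int and same_subnet are made up for illustration and are not part of CentOS.

```shell
#!/bin/sh
# ip_to_int ADDR: convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1          # split the address into its four octets
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# same_subnet ADDR1 ADDR2 PREFIX: succeed when both addresses share
# the same network part for the given prefix length.
same_subnet() {
    mask=$(( (4294967295 << (32 - $3)) & 4294967295 ))
    a=$(ip_to_int "$1")
    b=$(ip_to_int "$2")
    [ $(( a & mask )) -eq $(( b & mask )) ]
}

# Usage: check the IPADDR/GATEWAY pair from ifcfg-ens33.
if same_subnet 192.168.255.100 192.168.255.2 24; then
    echo "gateway is in the same /24"
else
    echo "gateway is in a different subnet"
fi
```

With a prefix of 24 only the first three octets are compared, so a gateway such as 192.16.255.2 would be reported as being in a different subnet.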

- After saving and exiting the file, restart the network service so the change takes effect

systemctl restart network

# Check the IP address

ip a s

2. Pre-installation environment configuration

- Add hosts entries

cat >> /etc/hosts <<EOF

192.168.255.100 k8s-master1

192.168.255.101 k8s-worker1

192.168.255.102 k8s-worker2

EOF
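Running the cat >> /etc/hosts command twice appends the three entries twice. As a hedged alternative, the sketch below appends an entry only when the hostname is not present yet; add_host is a hypothetical helper name, and the optional third argument exists only so the function can be tried on a scratch file.

```shell
#!/bin/sh
# add_host IP NAME [FILE]: append "IP NAME" to FILE (default /etc/hosts)
# unless a line ending in NAME already exists.
add_host() {
    file=${3:-/etc/hosts}
    grep -q "[[:space:]]$2\$" "$file" 2>/dev/null ||
        printf '%s %s\n' "$1" "$2" >> "$file"
}

# Usage on each machine (as root):
#   add_host 192.168.255.100 k8s-master1
#   add_host 192.168.255.101 k8s-worker1
#   add_host 192.168.255.102 k8s-worker2
```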

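- Adjust system settings (run on all machines)

# Firewall policy: accept forwarded traffic so that cluster nodes can reach each other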

iptables -P FORWARD ACCEPT

# Turn swap off now and keep the swap partition from being mounted at boot

swapoff -a

sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

# Disable the firewall and SELinux

sed -ri 's#(SELINUX=).*#\1disabled#' /etc/selinux/config

setenforce 0

systemctl disable firewalld && systemctl stop firewalld

# Check the firewall status (it should show inactive (dead))

systemctl status firewalld

# Enable traffic forwarding in the kernel

cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward=1

vm.max_map_count=262144

EOF

# Load the br_netfilter kernel module and apply the settings written above

modprobe br_netfilter

sysctl -p /etc/sysctl.d/k8s.conf

# Configure the CentOS yum repository

curl -o /etc/yum.repos.d/Centos-7.repo http://mirrors.aliyun.com/repo/Centos-7.repo

# Configure the Docker yum repository mirror

curl -o /etc/yum.repos.d/docker-ce.repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# Configure the Kubernetes yum repository

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yumkey.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

# Build the yum cache

yum clean all && yum makecache

If the last command above runs without problems, it downloads the repository metadata (17 files in my case).
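Before moving on, it can help to confirm that the swap and sysctl changes from this section actually took effect. The sketch below is one possible check, not something kubeadm requires; swap_is_off and conf_value are hypothetical helper names, and the optional file arguments exist so the functions can be tried on sample files.

```shell
#!/bin/sh
# swap_is_off [FILE]: succeed when the swaps table (default /proc/swaps)
# contains only its header line, i.e. no active swap device.
swap_is_off() {
    [ "$(tail -n +2 "${1:-/proc/swaps}" | wc -l)" -eq 0 ]
}

# conf_value KEY FILE: print the value assigned to KEY in a sysctl-style
# file, ignoring spaces around the "=" sign.
conf_value() {
    awk -F= -v key="$1" '
        { k = $1; gsub(/[ \t]/, "", k) }
        k == key { v = $2; gsub(/[ \t]/, "", v); print v }
    ' "$2"
}

# Usage on a node:
#   swap_is_off && echo "swap is off"
#   conf_value net.ipv4.ip_forward /etc/sysctl.d/k8s.conf
```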

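3. Install Docker (on all machines)

# List all installable Docker versions

yum list docker-ce --showduplicates | sort -r

# Install Docker (use one of the two commands below)

yum install docker-ce -y # no version given, so the latest stable release is installed

yum install docker-ce-20.10.0 -y # install version 20.10.0 specifically; -y answers yes to every yes/no prompt

# Configure a Docker registry mirror

mkdir -p /etc/docker # create the directory

# Then create and open daemon.json

vi /etc/docker/daemon.json

# and write in the DaoCloud registry mirror address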

{

"registry-mirrors": ["http://f1361db2.m.daocloud.io"]

}

# Start Docker and enable it at boot

systemctl enable docker && systemctl start docker

Then run docker ps; a normal response means Docker is installed. docker version works as a check too: both the Client and Server sections should show the Docker version.

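4. Install Kubernetes

Install kubeadm, kubelet, and kubectl

- kubeadm: the command that initializes (installs) the cluster.

- kubelet: runs on every node in the cluster to start Pods and containers; it is the component that drives Docker.

- kubectl: the command-line tool for talking to the cluster, much like docker ps and docker images are for Docker.

4.1 Install kubeadm, kubelet, and kubectl (run on all nodes)

Target nodes: run this on every master and worker node

# Version 1.16.2 is used here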

yum install -y kubelet-1.16.2 kubeadm-1.16.2 kubectl-1.16.2 --disableexcludes=kubernetes

# Check the kubeadm version

kubeadm version

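# Enable kubelet at boot. kubelet manages containers: it pulls images and creates, starts, and stops containers. Once enabled, it starts with the machine and manages Pods (containers) automatically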

systemctl enable kubelet

4.2 Initialize the configuration file (run only on k8s-master1)

# Create a directory

mkdir ~/k8s-install && cd ~/k8s-install

# In the k8s-install directory, generate kubeadm.yaml with kubeadm config print init-defaults

kubeadm config print init-defaults > kubeadm.yaml

# Then open the file for editing with the next command

vi kubeadm.yaml
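The four edits this section makes to kubeadm.yaml (the advertise address, the image repository, the Kubernetes version, and the added podSubnet) can also be applied without opening vi. This is a minimal sketch, not the method used in this post: the function name patch_kubeadm_yaml is made up for illustration, and it assumes GNU sed (as shipped with CentOS 7) and the default file layout printed by kubeadm 1.16.

```shell
#!/bin/sh
# patch_kubeadm_yaml FILE MASTER_IP: rewrite the generated defaults in place.
patch_kubeadm_yaml() {
    sed -i \
        -e "s|^\( *advertiseAddress:\).*|\1 $2|" \
        -e 's|^imageRepository:.*|imageRepository: registry.aliyuncs.com/google_containers|' \
        -e 's|^kubernetesVersion:.*|kubernetesVersion: v1.16.2|' \
        -e '/^ *podSubnet:/d' \
        -e 's|^\( *\)dnsDomain:.*|&\n\1podSubnet: 10.244.0.0/16|' \
        "$1"
}

# Usage, from ~/k8s-install on k8s-master1:
#   patch_kubeadm_yaml kubeadm.yaml 192.168.255.100
```

Any existing podSubnet line is deleted before a new one is added after dnsDomain, so running the function twice leaves a single podSubnet entry.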

The default kubeadm.yaml, with the lines to change marked by comments

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 1.2.3.4 # change this to the internal IP of k8s-master1; mine is 192.168.255.100
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: k8s-master1
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: k8s.gcr.io # change this to the Aliyun mirror: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.16.0 # change this to your own Kubernetes version; mine is v1.16.2
networking:
  dnsDomain: cluster.local
  podSubnet: 10.244.0.0/16 # add this line: the Pod subnet, i.e. the network range used by containers
  serviceSubnet: 10.96.0.0/12

4.3 Pull the images in advance (run only on k8s-master1)

# List the Kubernetes images required according to kubeadm.yaml

kubeadm config images list --config kubeadm.yaml

Output:

registry.aliyuncs.com/google_containers/kube-apiserver:v1.16.2

registry.aliyuncs.com/google_containers/kube-controller-manager:v1.16.2

registry.aliyuncs.com/google_containers/kube-scheduler:v1.16.2

registry.aliyuncs.com/google_containers/kube-proxy:v1.16.2

registry.aliyuncs.com/google_containers/pause:3.1

registry.aliyuncs.com/google_containers/etcd:3.3.15-0

registry.aliyuncs.com/google_containers/coredns:1.6.2

# Pull the images in advance

kubeadm config images pull --config kubeadm.yaml

Output:

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-apiserver:v1.16.2

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-controller-manager:v1.16.2

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-scheduler:v1.16.2

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-proxy:v1.16.2

[config/images] Pulled registry.aliyuncs.com/google_containers/pause:3.1

[config/images] Pulled registry.aliyuncs.com/google_containers/etcd:3.3.15-0

[config/images] Pulled registry.aliyuncs.com/google_containers/coredns:1.6.2

# Check that the images are present

docker images

Output:

registry.aliyuncs.com/google_containers/kube-proxy v1.16.2 8454cbe08dc9 22 months ago 86.1MB

registry.aliyuncs.com/google_containers/kube-apiserver v1.16.2 c2c9a0406787 22 months ago 217MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.16.2 6e4bffa46d70 22 months ago 163MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.16.2 ebac1ae204a2 22 months ago 87.3MB

registry.aliyuncs.com/google_containers/etcd 3.3.15-0 b2756210eeab 24 months ago 247MB

registry.aliyuncs.com/google_containers/coredns 1.6.2 bf261d157914 2 years ago 44.1MB

registry.aliyuncs.com/google_containers/pause 3.1 da86e6ba6ca1 3 years ago 742kB

# Initialize the cluster

kubeadm init --config kubeadm.yaml

Output:

[init] Using Kubernetes version: v1.16.2

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 20.10.8. Latest validated version: 18.09

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Activating the kubelet service

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [k8s-master1 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.255.100]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [k8s-master1 localhost] and IPs [192.168.255.100 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [k8s-master1 localhost] and IPs [192.168.255.100 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[kubelet-check] Initial timeout of 40s passed.

[apiclient] All control plane components are healthy after 117.203432 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.16" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node k8s-master1 as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node k8s-master1 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: abcdef.0123456789abcdef

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully! # initialization succeeded

To start using your cluster, you need to run the following as a regular user: # next steps for using the cluster

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

# A Pod network must be deployed before your Pods can work properly

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

# Use the following command to join worker nodes to the cluster

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.255.100:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:fb88ee0d2039f7bbf5e9ae65ca5a86276587d3a452ae6ae20c9c5a8431ec0179

# Create the kubeconfig file as the output instructs

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Note the end of the output above: the command printed under "Then you can join any number of worker nodes by running the following on each as root:" is the one that joins the Kubernetes worker nodes to the cluster.
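The bootstrap token in kubeadm.yaml has a ttl of 24h0m0s, so the printed join command stops working a day after kubeadm init. If a worker is added later, a fresh join command can be generated on the master with kubeadm token create --print-join-command. The wrapper below is only a sketch; print_join_command is a hypothetical name, and the guard simply avoids a confusing error on machines without kubeadm.

```shell
#!/bin/sh
# print_join_command: create a new bootstrap token and print the matching
# "kubeadm join ..." line. Must be run as root on k8s-master1.
print_join_command() {
    if ! command -v kubeadm >/dev/null 2>&1; then
        echo "kubeadm not found; run this on k8s-master1" >&2
        return 1
    fi
    kubeadm token create --print-join-command
}

# Usage on k8s-master1:
#   print_join_command
```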

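4.4 Add the worker nodes (k8s-worker1 and k8s-worker2) to the cluster

On every worker node, run the join command printed by k8s-master1 above (it is different for every Kubernetes installation)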

kubeadm join 192.168.255.100:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:fb88ee0d2039f7bbf5e9ae65ca5a86276587d3a452ae6ae20c9c5a8431ec0179

# The following output on both worker nodes means they joined the cluster successfully

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

# On the master node, kubectl get nodes now lists all three nodes (STATUS is still NotReady because no network plugin is installed yet)

[root@k8s-master1 k8s-install]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master1 NotReady master 9h v1.16.2

k8s-worker1 NotReady <none> 7m1s v1.16.2

k8s-worker2 NotReady <none> 5m54s v1.16.2

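At this point I took a snapshot of all three virtual machines, in case something goes wrong while configuring the network plugin.

With the snapshots taken, the next step is to install the flannel plugin. Calico is worth trying when there is a chance; it seems to be well regarded.

4.5 Install a network plugin (run on k8s-master1)

4.5.1 Install the flannel network plugin (run on k8s-master1)

Network problems are likely here; retry a few times.

# Download kube-flannel.yml with wget. The file is hosted overseas, so the download may fail or hang; press Ctrl+C to abort and download again.

# (wget is a non-interactive network download tool. If you see -bash: wget: command not found, run: yum -y install wget)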

wget https://raw.githubusercontent.com/coreos/flannel/2140ac876ef134e0ed5af15c65e414cf26827915/Documentation/kube-flannel.yml

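# If wget reports that it cannot establish an SSL connection, changing https to http in the URL above worked for me.

Edit the manifest to specify the machine's network interface name, at around line 190

# Open the file

vi kube-flannel.yml

Find the following: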

args:

- --ip-masq

- --kube-subnet-mgr

- --iface=ens33 # add this line; my interface is ens33, on some machines it is eth0
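The same edit can be scripted instead of made by hand in vi. This is a hedged sketch that assumes GNU sed; add_flannel_iface is a made-up helper name. It adds the flag after every "- --kube-subnet-mgr" line it finds, so if the manifest defines several DaemonSets (one per CPU architecture) each of them receives the flag.

```shell
#!/bin/sh
# add_flannel_iface FILE IFACE: insert "- --iface=IFACE" after each
# "- --kube-subnet-mgr" argument, keeping the original indentation.
# Does nothing when the flag is already present.
add_flannel_iface() {
    grep -q -- "--iface=$2" "$1" && return 0
    sed -i "s|^\( *\)- --kube-subnet-mgr *\$|&\n\1- --iface=$2|" "$1"
}

# Usage:
#   add_flannel_iface kube-flannel.yml ens33
```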

Pull the flannel network plugin image

docker pull quay.io/coreos/flannel:v0.11.0-amd64

# Show detailed information for each node

kubectl get nodes -o wide

# Output:

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8s-master1 NotReady master 18h v1.16.2 192.168.255.100 <none> CentOS Linux 7 (Core) 3.10.0-957.el7.x86_64 docker://20.10.8

k8s-worker1 NotReady <none> 9h v1.16.2 192.168.255.101 <none> CentOS Linux 7 (Core) 3.10.0-957.el7.x86_64 docker://20.10.8

k8s-worker2 NotReady <none> 9h v1.16.2 192.168.255.102 <none> CentOS Linux 7 (Core) 3.10.0-957.el7.x86_64 docker://20.10.8

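# STATUS is still NotReady because the Pod network has not been set up on the nodes yet

P.S.: at this step docker pull quay.io/coreos/flannel:v0.11.0-amd64 kept failing with Error response from daemon: Get "https://quay.io/v2/": context deadline exceeded. I tried many fixes without success, and posts online blamed blocked access to overseas sites. In my case the real cause was too little memory, which made the connection time out; adding 4 GB of RAM fixed it.

Now install the flannel network plugin

# Run in the [root@k8s-master1 k8s-install] directory

kubectl create -f kube-flannel.yml

Check the status of every Pod in the cluster and make sure they are all healthy

kubectl get pods -A

# Output: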

kube-system coredns-58cc8c89f4-qwjrq 1/1 Running 0 18h

kube-system coredns-58cc8c89f4-tcjdm 1/1 Running 0 18h

kube-system etcd-k8s-master1 1/1 Running 10 18h

kube-system kube-apiserver-k8s-master1 1/1 Running 29 18h

kube-system kube-controller-manager-k8s-master1 1/1 Running 10 18h

kube-system kube-flannel-ds-amd64-fb9fx 1/1 Running 0 55s

kube-system kube-flannel-ds-amd64-m8h9n 1/1 Running 0 55s

kube-system kube-flannel-ds-amd64-qr92q 1/1 Running 0 55s

kube-system kube-proxy-8pqn2 1/1 Running 1 9h

kube-system kube-proxy-rvbb7 1/1 Running 1 9h

kube-system kube-proxy-w7679 1/1 Running 1 18h

kube-system kube-scheduler-k8s-master1 1/1 Running 12 18h

Check the cluster again

kubectl get nodes

# Output: every node is now Ready

NAME STATUS ROLES AGE VERSION

k8s-master1 Ready master 19h v1.16.2

k8s-worker1 Ready <none> 9h v1.16.2

k8s-worker2 Ready <none> 9h v1.16.2

At this point the Kubernetes network component is installed and the cluster setup is complete.
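Instead of rerunning kubectl get nodes by hand until every node turns Ready, the table can be filtered with awk. The helper below is a small sketch (not_ready_nodes is a hypothetical name); it reads the kubectl get nodes table on standard input and prints every node whose STATUS column is anything other than exactly Ready.

```shell
#!/bin/sh
# not_ready_nodes: read "kubectl get nodes" output on stdin and print the
# NAME of each node whose STATUS is not exactly "Ready".
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Usage on k8s-master1: poll until the list is empty.
#   while [ -n "$(kubectl get nodes | not_ready_nodes)" ]; do sleep 5; done
```

A status such as Ready,SchedulingDisabled is also reported, which is usually what you want to know about before deploying workloads.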

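4.5.2 Install the Calico network component (skipped)

5. Deploy a first application on Kubernetes

A first taste: deploy an nginx web service on Kubernetes

The kubectl run command in detail

kubectl run

- Creates and runs one or more container images.

- Creates a deployment or job to manage the containers.

Syntax

kubectl run NAME --image=image:tag [--env="key=value"] [--port=port] [--replicas=replicas] [--dry-run=bool] [--overrides=inline-json] [--command] -- [COMMAND] [args...]

Example 1: start an nginx instance

kubectl run nginx --image=nginx:alpine # start an nginx instance named nginx from the slim alpine image

Example 2: start a hazelcast instance and expose container port 5701 (with no tag given, the latest image is used)

kubectl run hazelcast --image=hazelcast --port=5701

Example 3: start a hazelcast instance and set the environment variables "DNS_DOMAIN=cluster" and "POD_NAMESPACE=default" in the container

kubectl run hazelcast --image=hazelcast --env="DNS_DOMAIN=cluster" --env="POD_NAMESPACE=default"

Run the command

kubectl run twz-nginx --image=nginx:alpine

# Kubernetes creates a Pod, schedules it onto one of the nodes, and runs the container on that machine.

# kubectl get pods -o wide shows that nginx was scheduled on k8s-worker2 with Pod IP 10.244.2.2. That address comes from the podSubnet: 10.244.0.0/16 range added to kubeadm.yaml above.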

[root@k8s-master1 k8s-install]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

twz-nginx-58bf898b8b-llq8x 1/1 Running 0 19m 10.244.2.2 k8s-worker2 <none> <none>

# So how do you reach this nginx application? All three machines can access the Pod IP directly

curl 10.244.2.2

# The CLUSTER-IP below comes from the serviceSubnet: 10.96.0.0/12 range.

[root@k8s-master1 k8s-install]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 20h

[root@k8s-master1 k8s-install]# kubectl get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

twz-nginx 1/1 1 1 23m

# Show the details of the deployment

kubectl describe deploy twz-nginx

# Show the details of the Pod

kubectl describe pods twz-nginx-58bf898b8b-llq8x

P.S.:

Run ifconfig to view the network configuration:

[root@k8s-worker2 ~]# ifconfig

cni0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 10.244.2.1 netmask 255.255.255.0 broadcast 10.244.2.255

inet6 fe80::b0e0:ceff:fe5f:3aa9 prefixlen 64 scopeid 0x20<link>

ether b2:e0:ce:5f:3a:a9 txqueuelen 1000 (Ethernet)

RX packets 19 bytes 3466 (3.3 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 29 bytes 2204 (2.1 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

inet 172.17.0.1 netmask 255.255.0.0 broadcast 172.17.255.255

ether 02:42:e6:e3:ac:36 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.255.102 netmask 255.255.255.0 broadcast 192.168.255.255

inet6 fe80::69cb:cab8:8466:92b0 prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:d4:07:4f txqueuelen 1000 (Ethernet)

RX packets 66270 bytes 49686525 (47.3 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 44053 bytes 4255676 (4.0 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet **10.244.2.0** netmask 255.255.255.255 broadcast 0.0.0.0

inet6 fe80::882f:c0ff:fe90:c02f prefixlen 64 scopeid 0x20<link>

ether 8a:2f:c0:90:c0:2f txqueuelen 0 (Ethernet)

RX packets 14 bytes 892 (892.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 10 bytes 2236 (2.1 KiB)

TX errors 0 dropped 8 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1000 (Local Loopback)

RX packets 72 bytes 4992 (4.8 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 72 bytes 4992 (4.8 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

veth80e2fb41: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet6 fe80::e04b:28ff:fe22:76a2 prefixlen 64 scopeid 0x20<link>

ether e2:4b:28:22:76:a2 txqueuelen 0 (Ethernet)

RX packets 19 bytes 3732 (3.6 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 34 bytes 2602 (2.5 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

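**Note that flannel.1 has the IP 10.244.2.0, which is in the same subnet as the twz-nginx Pod IP 10.244.2.2. This shows that Pod IPs are allocated from the private network managed by the flannel plugin.**

kubectl command completion: lets you complete command names and file names with the Tab key.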

yum install -y bash-completion

source /usr/share/bash-completion/bash_completion

source <(kubectl completion bash)

echo "source <(kubectl completion bash)" >> ~/.bashrc

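A recommended article

Kubernetes kubectl common commands guide, part 1: create and delete (sdfsdfytre's blog on CSDN)

Note: here is the catch. So far nginx was accessed from inside the cluster through the Pod IP. How can an application inside a Pod be reached from outside, for example from a browser?

6. Deploy nginx again with a Deployment

- That was a quick trial run: using Kubernetes to create a Pod that runs nginx and is reachable from inside the cluster.

- pod

- Kubernetes supports high availability for containers, implemented through Deployments

- if the application on node01 dies, it is restarted automatically

- scaling out and in is supported as well

- load balancing

Here we create a Deployment resource. It is central to deploying applications on Kubernetes; we skip the introduction for now and just look at what it does.

Once a Deployment is created, it tells Kubernetes how to create the application instances. The master schedules the application onto specific nodes, that is, it creates the Pods and the containers inside them.

After the application is created, the Deployment controller keeps monitoring these Pods. If a node fails, the controller has replacement instances created on another available node. This provides self-healing and handles server failures.

6.1 Two ways to create Kubernetes resources

- YAML configuration files, used in production.

- The command line, used for debugging.

Here we use a YAML file to create the resources

- write the YAML first

- apply the YAML file

Below is a Deployment YAML file. Create a file named tianwenzhao-nginx.yaml (in the [root@k8s-master1 k8s-install]# directory) and paste in the following content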

apiVersion: apps/v1
kind: Deployment
metadata:
  name: tianwenzhao-nginx
  labels:
    app: nginx
spec:
  # create two nginx replicas (Pods)
  replicas: 2 # number of replicas
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers: # container configuration
      - name: nginx
        image: nginx:alpine
        ports:
        - containerPort: 80

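Read the YAML file and create the resources.

kubectl apply -f tianwenzhao-nginx.yaml

# kubectl create -f tianwenzhao-nginx.yaml also works. The two commands differ: apply updates an existing resource (and creates it if it does not exist), while create only creates

# Check: the two new Pods are on worker1 and worker2 respectively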

[root@k8s-master1 k8s-install]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

tianwenzhao-nginx-5c559d5697-bfc7s 1/1 Running 0 46s 10.244.1.2 k8s-worker1 <none> <none>

tianwenzhao-nginx-5c559d5697-p4x84 1/1 Running 0 46s 10.244.2.4 k8s-worker2 <none> <none>

twz-nginx-58bf898b8b-llq8x 1/1 Running 1 16h 10.244.2.3 k8s-worker2 <none> <none>

[root@k8s-master1 k8s-install]# kubectl get deploy -o wide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

tianwenzhao-nginx 2/2 2 2 2m32s nginx nginx:alpine app=nginx

twz-nginx 1/1 1 1 16h twz-nginx nginx:alpine run=twz-nginx

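Service load balancing

Back to our question: now that a Deployment-managed application is running, how can this nginx be reached from a browser outside the cluster?

So far we have used a Deployment to create a group of Pods that provide a highly available service. Each Pod gets its own Pod IP, but two problems remain:

- A Pod IP is a virtual IP visible only inside the cluster; it cannot be reached from outside.

- A Pod IP disappears when its Pod is destroyed. When the ReplicaSet scales Pods up or down, Pod IPs can change at any moment, which makes the service hard to reach.

Accessing the service through Pod IPs is therefore impractical. The solution is a new resource, the Service, which provides load balancing.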

kubectl expose deployment tianwenzhao-nginx --port=80 --type=LoadBalancer

# Then list the services

[root@k8s-master1 k8s-install]# kubectl get svc -o wide

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 41h <none>

tianwenzhao-nginx LoadBalancer 10.96.24.89 <pending> 80:31975/TCP 3m15s app=nginx

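A new service named tianwenzhao-nginx now exists. It has its own cluster IP, internal port 80, and node port 31975. EXTERNAL-IP stays <pending> because this cluster has no cloud load balancer to assign one, but a LoadBalancer service also opens the node port on every node.

From a browser outside the cluster, the nginx page is therefore reachable at the IP of any of the three virtual machines plus the node port 31975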

192.168.255.100:31975

192.168.255.101:31975

192.168.255.102:31975
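The node port is assigned at random, so it will differ on another cluster. As a rough sketch, the helpers below pull the node port out of a PORT(S) value such as 80:31975/TCP and probe each node with curl. The names node_port and check_nodes are made up for illustration; kubectl can also print the port directly with -o jsonpath='{.spec.ports[0].nodePort}'.

```shell
#!/bin/sh
# node_port PORTS: print the node port from a "port:nodePort/PROTO" value.
# Prints nothing for values without a node port, such as "443/TCP".
node_port() {
    printf '%s\n' "$1" | sed -n 's|^[0-9]*:\([0-9]*\)/.*$|\1|p'
}

# check_nodes PORT IP...: report whether each node answers HTTP on PORT.
check_nodes() {
    port=$1
    shift
    for ip in "$@"; do
        if curl -s -o /dev/null --max-time 5 "http://$ip:$port/"; then
            echo "$ip:$port reachable"
        else
            echo "$ip:$port NOT reachable"
        fi
    done
}

# Usage:
#   check_nodes "$(node_port 80:31975/TCP)" 192.168.255.100 192.168.255.101 192.168.255.102
```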

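Explanation:

https://kubernetes.io/zh/docs/concepts/services-networking/service/

Service (listed with kubectl get services) is one of the Kubernetes resource types.

A Service is an abstraction over a group of Pods. It acts as a load balancer (LB) for that group and distributes requests to the matching Pods. The Service gives this LB an IP, usually called the cluster IP.

A Service object finds its Pods through label selection with a selector.

Tip: view a container's logs with docker logs -f <container ID>, and a Pod's logs with kubectl logs -f <pod-name>

6.2 Create a Pod from a YAML file (a short aside)

I saw this in an imooc video: create only a Pod and no Deployment, so the Pod is not managed by a Deployment.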

apiVersion: v1 # for a Deployment this would be apps/v1
kind: Pod # for a Deployment this would be Deployment
metadata:
  name: twz-nginx
spec:
  containers:
  - name: nginx
    image: nginx:1.7.9
    ports:
    - containerPort: 80

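7. Deploy the dashboard

Official site: https://github.com/kubernetes/dashboard

Kubernetes Dashboard is a general-purpose, web-based UI for Kubernetes clusters. It lets users manage and troubleshoot the applications running in the cluster, as well as manage the cluster itself.

# Download the dashboard YAML file

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-rc5/aio/deploy/recommended.yaml

# Edit the manifest: vi recommended.yaml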

spec:
  ports:
    - port: 443
      targetPort: 8443
  selector:
    k8s-app: kubernetes-dashboard
  type: NodePort # add this at around line 43; it exposes the dashboard on each host's IP so the page can be reached from outside the cluster

Create the resources and test access

[root@k8s-master1 k8s-install]# kubectl create -f recommended.yaml

# Check the status

[root@k8s-master1 k8s-install]# kubectl -n kubernetes-dashboard get pod

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-7b8b58dc8b-sf64h 1/1 Running 0 101s

kubernetes-dashboard-866f987876-5n9ph 0/1 ContainerCreating 0 101s

# List the Pods in all namespaces

[root@k8s-master1 k8s-install]# kubectl get po -o wide -A --watch

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

default tianwenzhao-nginx-5c559d5697-bfc7s 1/1 Running 0 6h55m 10.244.1.2 k8s-worker1 <none> <none>

default tianwenzhao-nginx-5c559d5697-p4x84 1/1 Running 0 6h55m 10.244.2.4 k8s-worker2 <none> <none>

default twz-nginx-58bf898b8b-llq8x 1/1 Running 1 23h 10.244.2.3 k8s-worker2 <none> <none>

kube-system coredns-58cc8c89f4-qwjrq 1/1 Running 1 43h 10.244.0.4 k8s-master1 <none> <none>

kube-system coredns-58cc8c89f4-tcjdm 1/1 Running 1 43h 10.244.0.5 k8s-master1 <none> <none>

kube-system etcd-k8s-master1 1/1 Running 11 43h 192.168.255.100 k8s-master1 <none> <none>

kube-system kube-apiserver-k8s-master1 1/1 Running 30 43h 192.168.255.100 k8s-master1 <none> <none>

kube-system kube-controller-manager-k8s-master1 1/1 Running 11 43h 192.168.255.100 k8s-master1 <none> <none>

kube-system kube-flannel-ds-amd64-fb9fx 1/1 Running 1 25h 192.168.255.102 k8s-worker2 <none> <none>

kube-system kube-flannel-ds-amd64-m8h9n 1/1 Running 1 25h 192.168.255.100 k8s-master1 <none> <none>

kube-system kube-flannel-ds-amd64-qr92q 1/1 Running 1 25h 192.168.255.101 k8s-worker1 <none> <none>

kube-system kube-proxy-8pqn2 1/1 Running 2 34h 192.168.255.102 k8s-worker2 <none> <none>

kube-system kube-proxy-rvbb7 1/1 Running 2 34h 192.168.255.101 k8s-worker1 <none> <none>

kube-system kube-proxy-w7679 1/1 Running 2 43h 192.168.255.100 k8s-master1 <none> <none>

kube-system kube-scheduler-k8s-master1 1/1 Running 13 43h 192.168.255.100 k8s-master1 <none> <none>

kubernetes-dashboard dashboard-metrics-scraper-7b8b58dc8b-sf64h 1/1 Running 0 5m17s 10.244.1.3 k8s-worker1 <none> <none>

kubernetes-dashboard kubernetes-dashboard-866f987876-5n9ph 1/1 Running 0 5m17s 10.244.1.4 k8s-worker1 <none> <none>

# Once the containers are running, test access; first look at the Service resources

[root@k8s-master1 k8s-install]# kubectl -n kubernetes-dashboard get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dashboard-metrics-scraper ClusterIP 10.104.253.65 <none> 8000/TCP 9m53s

kubernetes-dashboard NodePort 10.111.47.237 <none> 443:30497/TCP 9m55s

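# Node port 30497 maps to the Service port 443, which forwards to port 8443 in the Pod

# The dashboard can be reached at the IP of any host in the cluster plus port 30497

https://192.168.255.100:30497/

After reaching the dashboard login page, choose Token login

Create an account for dashboard access. This involves the Kubernetes authentication and authorization (RBAC) system; just follow the steps

[root@k8s-master1 k8s-install]# vi admin.yaml

# Paste in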

kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: admin
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io
subjects:
- kind: ServiceAccount
  name: admin
  namespace: kubernetes-dashboard
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin
  namespace: kubernetes-dashboard

# Create the resources

[root@k8s-master1 k8s-install]# kubectl create -f admin.yaml

# Get the token used to log in to the dashboard; this command finds the name of the secret

[root@k8s-master1 k8s-install]# kubectl -n kubernetes-dashboard get secret |grep admin-token

admin-token-g6r7r kubernetes.io/service-account-token 3 143m

The secret name is admin-token-g6r7r

# Get the token with the following command; remember to change the secret name to yours

kubectl -n kubernetes-dashboard get secret admin-token-g6r7r -o jsonpath={.data.token}|base64 -d

[root@k8s-master1 k8s-install]# kubectl -n kubernetes-dashboard get secret admin-token-g6r7r -o jsonpath={.data.token}|base64 -d

eyJhbGciOiJSUzI1NiIsImtpZCI6Im5QMENLeGtOczN2YTN6bDFja3RpUm1odkNTM1R4R210d3oxZ0R6R0ktZlEifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi10b2tlbi1nNnI3ciIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJhZG1pbiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6ImRiYWYzMTc0LTA3YzYtNDk1Yi04NmFkLTY1NzI5MDUwNmExYiIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDphZG1pbiJ9.kVhx_UVY2gie5LgS-BdqCyqsCM4M1MJyuMBxicLpIcNnQFXGBtPu6QidEL5cDWRk3_Y14a7hB5Ku2p6hEU-NhVQ86eSnK1FxOOAr0DM6Nfk-pR-X899fvZGZXx-khaPRR1R6P6UJIdUVcT5qtDV6s3GvtWTAT91v0NdUTxyKyXFylLZ0belmcV2SFcNiSy06kyc6f4D__bTgUFdlsPOpXC-gCNtFqYq6HolqpCv1-6dwFy2SWbFCwav7UbRJOUm1VZHonHP0N3-joe2BJYyMx6FZaFaJHH1sVT9-RjjKKg4nsnlUYvFwE2De8F4p8iBWaqvW1zFw1nHQi_xpyUt6VQ

Paste the token into the token field on the dashboard login page, **and the login succeeds!**
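The suffix of the secret name (g6r7r above) is random, so the two steps have to be repeated with a different name on every cluster. A small sketch of how they could be chained is shown below; admin_secret_name is a hypothetical helper that reads the kubectl get secret listing on standard input and prints the first name starting with admin-token-.

```shell
#!/bin/sh
# admin_secret_name: print the first secret whose name starts with
# "admin-token-" from a "kubectl get secret" listing read on stdin.
admin_secret_name() {
    awk '$1 ~ /^admin-token-/ { print $1; exit }'
}

# Usage on k8s-master1:
#   secret=$(kubectl -n kubernetes-dashboard get secret | admin_secret_name)
#   kubectl -n kubernetes-dashboard get secret "$secret" \
#       -o jsonpath='{.data.token}' | base64 -d
```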

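8. Deploy the lab's new tp system on Docker (any Spring Boot project will do)

Here I start only the k8s-worker1 virtual machine

8.1 Package the project in IDEA

Find the jar under the target directory

Using Xshell and Xftp, create a docker_files directory under root's home directory on the k8s-worker1 virtual machine (I named mine docker_files_twz) and upload the jar into it.

8.2 Write a Dockerfile in the same directory

Create and edit it

vi mis_dockerfile

# Paste in the following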

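FROM java:8

MAINTAINER TIANWENZHAO

# The jar is in the current directory, so the path needs no change.
# (A Dockerfile comment must start its own line; it cannot follow ADD on the same line.)

ADD mis-1.0-SNAPSHOT.jar app.jar

CMD java -jar app.jar

# My project uses a cloud database, so a Java base image is all it needs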

8.3 Build the image

The commands below are run at the [root@k8s-worker1 docker_files_twz]# prompt

# Run

docker build -f ./mis_dockerfile -t twz-mis:v1 .

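# -f specifies the path of the Dockerfile to build from.

./ means the current directory

./mis_dockerfile is the mis_dockerfile in the current directory

-t means tag

-t twz-mis:v1 names the image twz-mis with version v1

The trailing . in docker build specifies the build context directory; here it stands for the current directory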

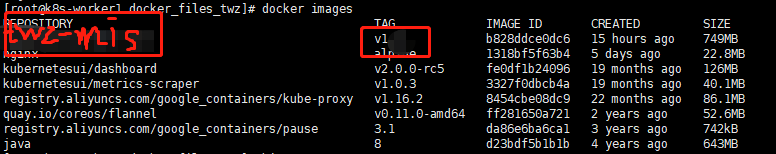

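Check the result with docker images

8.4 Start a container from this image and access it

# Start

docker run -id -p 8096:8096 twz-mis:v1

# -p 8096:8096 is the port mapping.

The first 8096 is the host port through which the container is reached from outside; it can be chosen freely.

The second 8096 is the port set as server.port in the Spring Boot project's application.yml.

The project is now running inside a Docker container.

To access it, use the k8s-worker1 IP address 192.168.255.101 plus the mapped port 8096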

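8.5 Push the image to Docker Hub

8.5.1 Log in to Docker Hub and create a repository (register an account first if you do not have one)

Then create a repository (I created a private one. Docker Hub allows only one free private repository; any further ones must be public, which makes them visible to everyone)

A repository name is prefixed with your account name by default. If you create a repository called springboot, its full name is <account>/springboot, and the same <account>/springboot name is used when tagging the image for upload.

Create a repository on Docker Hub

8.5.2 Log in to your Docker Hub account from Docker on the virtual machine

# Log in to Docker Hub

docker login

Enter your username and password when prompted

8.5.3 Push the image to the Docker Hub repository

Tag the image before pushing it

# Replace the names here with your own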

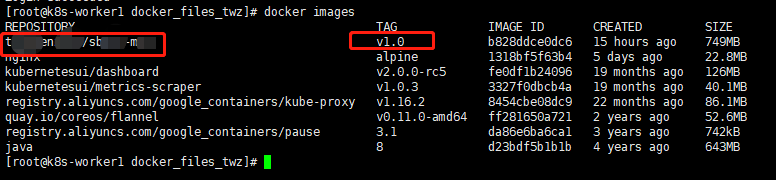

docker tag twz-mis:v1 t**w***/sb***:v1.0

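Check the result

The original twz-mis:v1 image still exists alongside the new tag. Both have the same image ID and differ only in the tag. It is missing from my screenshot because I deleted it and kept only the newest one

The tag name here must match the Docker Hub repository name, and the tag version is required as well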
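The naming rule can be spelled out in a couple of lines, followed by the docker push that actually uploads the image. This is a sketch under assumptions: myaccount and springboot are placeholder names (the real ones are masked in this post), and image_ref and tag_and_push are hypothetical helpers.

```shell
#!/bin/sh
# image_ref ACCOUNT REPO VERSION: build the full Docker Hub reference.
image_ref() {
    printf '%s/%s:%s\n' "$1" "$2" "$3"
}

# tag_and_push LOCAL_IMAGE REMOTE_REF: tag the local image with the
# Docker Hub reference, then upload it. Requires a prior "docker login".
tag_and_push() {
    docker tag "$1" "$2" && docker push "$2"
}

# Usage (placeholder account and repository names):
#   tag_and_push twz-mis:v1 "$(image_ref myaccount springboot v1.0)"
```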

Check the repository on Docker Hub

It worked!
