一.MySQL高可用简介

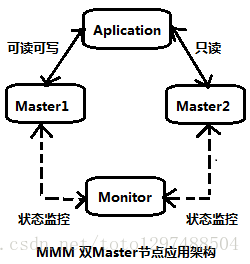

MMM(Master-Master Replication mananger for mysql),由一个管理端(monitor)和多个代理端(agent)构成。通过MMM可以实现监控和管理Mysql主主复制和服务状态,同时也可监控多个Slave节点的复制以及运行状态,并且可以做到任何节点发生故障时实现自动化切换的功能。

MMM套件三个主要脚本:

mmm_mond:监控进程,运行在管理节点,主要负责对所有数据库的监控工作,同时决定和处理所有节点的角色切换。

mmm_agent:代理进程,运行在每台Mysql服务器,完成监控的测试工作和执行远程服务设置。

mmm_control:管理脚本,查看和管理集群运行状态,同时管理mmm_mond进程。

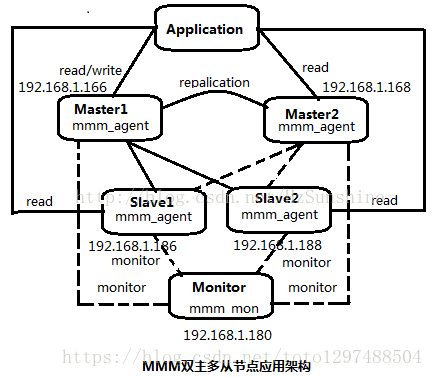

二.MMM典型应用架构

三.MMM双主多从Mysql架构配置

本文环境:

主库:CentOS6.7 x64 192.168.106.106 mysql-5.7

主库:CentOS6.7 x64 192.168.106.107 mysql-5.7

备库:CentOS6.7 x64 192.168.106.109 mysql-5.7

备库:CentOS6.7 x64 192.168.106.110 mysql-5.7

管理:CentOS6.7 x64 192.168.106.148MMM服务器角色对应关系:

node1(主1) 192.168.106.106 db1 1

node2(主2) 192.168.106.107 db2 2

node3(从1) 192.168.106.109 db3 11

node4(从1) 192.168.106.110 db4 12

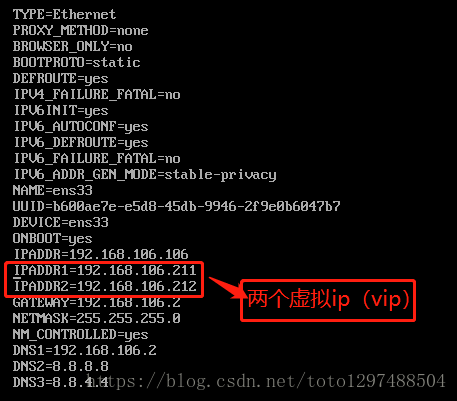

node5(监控) 192.168.106.148 mon -配置完成后,使用下面的VIP访问MySQL Cluster(下面是虚拟ip)。

192.168.106.211 writer

192.168.106.212 reader

192.168.106.213 reader

192.168.106.214 reader

192.168.106.215 reader修改:/etc/sysconfig/network-scripts/ifcfg-ens33中的内容如下:

四、双主环境,以及从服务器环境准备

1,node1(主1) /etc/my.cnf

[mysqld]

server-id = 1

binlog-format = ROW

log-bin = master-bin

log-bin-index = master-bin.index

log-slave-updates = true

auto_increment_offset = 1

auto_increment_increment = 2systemctl restart mysqld

[root@localhost ~]# mysql -uroot -p

mysql> show master status;

+-------------------+----------+--------------+------------------+-------------------+

| File | Position | Binlog_Do_DB | Binlog_Ignore_DB | Executed_Gtid_Set |

+-------------------+----------+--------------+------------------+-------------------+

| master-bin.000001 | 120 | | | |

+-------------------+----------+--------------+------------------+-------------------+

1 row in set (0.00 sec)

mysql>2. node1(主2) /etc/my.cnf

[mysqld]

server-id = 2

binlog-format = ROW

log-bin = master-bin

log-bin-index = master-bin.index

log-slave-updates = true

auto_increment_offset = 2

auto_increment_increment = 2

systemctl restart mysqld

[root@localhost ~]# mysql -uroot -p

mysql> show master status;

+-------------------+----------+--------------+------------------+-------------------+

| File | Position | Binlog_Do_DB | Binlog_Ignore_DB | Executed_Gtid_Set |

+-------------------+----------+--------------+------------------+-------------------+

| master-bin.000001 | 120 | | | |

+-------------------+----------+--------------+------------------+-------------------+

1 row in set (0.00 sec)

mysql> 3. node1,node2 双主服务器配置同步复制帐号

grant replication slave on *.* to 'repl'@'%' identified by '123456';

flush privileges;执行完成之后,发现再次执行show master status;发现有变化了。都变成了:

mysql> show master status;

+-------------------+----------+--------------+------------------+-------------------+

| File | Position | Binlog_Do_DB | Binlog_Ignore_DB | Executed_Gtid_Set |

+-------------------+----------+--------------+------------------+-------------------+

| master-bin.000001 | 407 | | | |

+-------------------+----------+--------------+------------------+-------------------+

1 row in set (0.00 sec)

mysql>4, node3(从)/etc/my.cnf

[mysqld]

binlog-format = ROW

log-bin = mysql-bin

relay-log = slave-relay-bin

relay-log-index = slave-relay-bin.index

log-slave-updates = true

server-id = 11

skip-name-resolve5, node4(从)/etc/my.cnf

[mysqld]

binlog-format = ROW

log-bin = mysql-bin

relay-log = slave-relay-bin

relay-log-index = slave-relay-bin.index

log-slave-updates = true

server-id = 12

skip-name-resolve6. node1从库配置同步过程

(1) 配置同步,手动执行同步参数,该配置会写入master.info文件中。

mysql >

CHANGE MASTER TO

MASTER_HOST='192.168.106.107',

MASTER_PORT=3306,

MASTER_USER='repl',

MASTER_PASSWORD='123456',

MASTER_LOG_FILE='master-bin.000001',

MASTER_LOG_POS= 407;

mysql> start slave;

mysql> show slave status\G;

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 192.168.106.107

Master_User: repl

Master_Port: 3306

Connect_Retry: 60

Master_Log_File: master-bin.000001

Read_Master_Log_Pos: 407

Relay_Log_File: node1-relay-bin.000002

Relay_Log_Pos: 571

Relay_Master_Log_File: master-bin.000001

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Replicate_Do_DB:7. node2从库配置同步过程

(1) 配置同步,手动执行同步参数,该配置会写入master.info文件中。

mysql >

CHANGE MASTER TO

MASTER_HOST='192.168.106.106',

MASTER_PORT=3306,

MASTER_USER='repl',

MASTER_PASSWORD='123456',

MASTER_LOG_FILE='master-bin.000001',

MASTER_LOG_POS= 407;

mysql> start slave;

mysql> show slave status\G;

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 192.168.106.106

Master_User: repl

Master_Port: 3306

Connect_Retry: 60

Master_Log_File: master-bin.000001

Read_Master_Log_Pos: 694

Relay_Log_File: node2-relay-bin.000002

Relay_Log_Pos: 571

Relay_Master_Log_File: master-bin.000001

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Replicate_Do_DB:8. 测试双主相互复制

(1) node1上创建数据库

mysql> create database mydb;

Query OK, 1 row affected (0.00 sec)

mysql> use mydb;

Database changed

create table teacher

(

id int(3) auto_increment not null primary key,

name char(10) not null,

address varchar(50) default '深圳',

year date

);

insert into teacher values(1,'allen','飞数科技1','2005-10-10');

insert into teacher values(2,'jack','飞数科技2','2005-12-23');

mysql> show tables;

+----------------+

| Tables_in_mydb |

+----------------+

| teacher |

+----------------+

1 row in set (0.00 sec)

mysql> select * from teacher;

+----+-------+---------------+------------+

| id | name | address | year |

+----+-------+---------------+------------+

| 1 | allen | 飞数科技1 | 2005-10-10 |

| 2 | jack | 飞数科技2 | 2005-12-23 |

+----+-------+---------------+------------+

2 rows in set (0.00 sec)(2) node2上的操作如下:

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| mydb |

| mysql |

| performance_schema |

+--------------------+

4 rows in set (0.00 sec)

mysql> use mydb;

Reading table information for completion of table and column names

You can turn off this feature to get a quicker startup with -A

Database changed

mysql> show tables;

+----------------+

| Tables_in_mydb |

+----------------+

| teacher |

+----------------+

1 row in set (0.00 sec)

mysql> select * from teacher;

+----+-------+---------------+------------+

| id | name | address | year |

+----+-------+---------------+------------+

| 1 | allen | 飞数科技1 | 2005-10-10 |

| 2 | jack | 飞数科技2 | 2005-12-23 |

+----+-------+---------------+------------+

2 rows in set (0.00 sec)

mysql>

Database changed

CREATE TABLE `user` (

`id` varchar(20) NOT NULL,

`username` varchar(20) NOT NULL,

`password` char(32) NOT NULL,

PRIMARY KEY (`id`)

);

INSERT INTO user VALUES ('1', 'koumm', '123456');五、配置node3, node4同步node1(主)

1,node1主库锁表,导数据库

(1) 主库锁表

mysql> flush tables with read lock;

Query OK, 0 rows affected (0.00 sec)

mysql> show master status;

+-------------------+----------+--------------+------------------+-------------------+

| File | Position | Binlog_Do_DB | Binlog_Ignore_DB | Executed_Gtid_Set |

+-------------------+----------+--------------+------------------+-------------------+

| master-bin.000001 | 1226 | | | |

+-------------------+----------+--------------+------------------+-------------------+

1 row in set (0.00 sec)(2) 主库备份

[root@master ~]# mysqldump -uroot -p -B mydb > mydb.sql说明:-B参数有建库语句。

(3) 主库解开锁表功能

mysql> unlock tables;

Query OK, 0 rows affected (0.00 sec)

mysql>2. node3,node4从库导入数据库

# mysql -uroot -p < mydb.sql3. node3,node4 从库配置同步过程

(1) 配置同步,手动执行同步参数,该配置会写入master.info文件中。

mysql >

CHANGE MASTER TO

MASTER_HOST='192.168.106.106',

MASTER_PORT=3306,

MASTER_USER='repl',

MASTER_PASSWORD='123456',

MASTER_LOG_FILE='master-bin.000015',

MASTER_LOG_POS= 342; ---》这个值为上面node1中查找出来的值

systemctl restart mysqld;

mysql> start slave;

Query OK, 0 rows affected (0.04 sec)

mysql> show slave status\G;

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 192.168.1.121

Master_User: repl

Master_Port: 3306

Connect_Retry: 60

Master_Log_File: master-bin.000005

Read_Master_Log_Pos: 730

Relay_Log_File: slave-relay-bin.000002

Relay_Log_Pos: 284

Relay_Master_Log_File: master-bin.000005

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Replicate_Do_DB:

Replicate_Ignore_DB:可以简单测试一下同步数据情况。

六、配置MMM过程

1, 安装mmm软件包

(1) node1,node2,node3,node4,node5节点

# wget http://download.fedoraproject.org/pub/epel/6/x86_64/epel-release-6-8.noarch.rpm

# rpm -ivh epel-release-6-8.noarch.rpm(2) node5(监控节点)

# yum install mysql-mmm mysql-mmm-agent mysql-mmm-tools mysql-mmm-monitor(3) node1,node2,node3,node4 (mysql节点)

# yum install mysql-mmm-agent2,所有的MySQL节点添加以下两个用户,这里可以在主库做该操作,会自动同步到其它节点。

mysql> grant replication client on *.* to 'mmm_monitor'@'192.168.106.%' identified by '123456';

mysql> grant super, replication client, process on *.* to 'mmm_agent'@'192.168.106.%' identified by '123456';

mysql> flush privileges;3,所有主机node1,node2,node3,node4,node5上创建如下文件

[root@node1 ~]# vi /etc/mysql-mmm/mmm_common.conf

active_master_role writer

<host default>

cluster_interface eth0

pid_path /var/run/mysql-mmm/mmm_agentd.pid

bin_path /usr/libexec/mysql-mmm/

replication_user repl

replication_password 123456

agent_user mmm_agent

agent_password 123456

</host>

<host db1>

ip 192.168.106.106

mode master

peer db2

</host>

<host db2>

ip 192.168.106.107

mode master

peer db1

</host>

<host db3>

ip 192.168.106.109

mode slave

</host>

<host db4>

ip 192.168.106.110

mode slave

</host>

<role writer>

hosts db1, db2

ips 192.168.106.211

mode exclusive

</role>

<role reader>

hosts db1, db2, db3, db4

ips 192.168.106.212,192.168.106.213,192.168.106.214,192.168.106.215

mode balanced

</role>复制该配置文件到所有节点上:

[root@node1 ~]# scp /etc/mysql-mmm/mmm_common.conf node2:/etc/mysql-mmm/

[root@node1 ~]# scp /etc/mysql-mmm/mmm_common.conf node3:/etc/mysql-mmm/

[root@node1 ~]# scp /etc/mysql-mmm/mmm_common.conf node4:/etc/mysql-mmm/

[root@node1 ~]# scp /etc/mysql-mmm/mmm_common.conf node5:/etc/mysql-mmm/4. 配置agent代理节点,所有mysql主从服务器均为代理节点

(1) node1,node2,node3,node4 的MySQL节点配置mmm_agent.conf

# vi /etc/mysql-mmm/mmm_agent.conf

include mmm_common.conf

this db1 #在四台mysql节点上设置对应的db,分别为db1、db2、db3、db4(2) 默认为启用,可以不用修改

# cat /etc/default/mysql-mmm-agent

# mysql-mmm-agent defaults

ENABLED=1(3) mysql节点启动服务

# chkconfig mysql-mmm-agent on

# /etc/init.d/mysql-mmm-agent start5,配置MMM管理监控节点node5

(1) 配置监控

[root@node5 ~]# vi /etc/mysql-mmm/mmm_mon.conf

include mmm_common.conf<monitor>

ip 127.0.0.1

pid_path /var/run/mysql-mmm/mmm_mond.pid

bin_path /usr/libexec/mysql-mmm

status_path /var/lib/mysql-mmm/mmm_mond.status

ping_ips 192.168.106.1,192.168.106.106,192.168.106.107,192.168.106.109,192.168.106.110

auto_set_online 8

# The kill_host_bin does not exist by default, though the monitor will

# throw a warning about it missing. See the section 5.10 "Kill Host

# Functionality" in the PDF documentation.

#

# kill_host_bin /usr/libexec/mysql-mmm/monitor/kill_host

#

</monitor>

<host default>

monitor_user mmm_monitor

monitor_password 123456

</host>

debug 0(2) mysql节点启动服务

# chkconfig mysql-mmm-monitor on

# /etc/init.d/mysql-mmm-monitor start6, 查看状态

(1) 管理服务器上查看状态

[root@node1 ~]# mmm_control show

db1(192.168.106.104) master/ONLINE. Roles: reader(192.168.106.215), writer(192.168.106.211)

db2(192.168.106.107) master/ONLINE. Roles: reader(192.168.106.213)

db3(192.168.106.109) slave/ONLINE. Roles: reader(192.168.106.212)

db4(192.168.106.110) slave/ONLINE. Roles: reader(192.168.106.214)

[root@node1 ~]# mmm_control checks all

db4 ping [last change: 2016/04/27 16:45:49] OK

db4 mysql [last change: 2016/04/27 16:45:49] OK

db4 rep_threads [last change: 2016/04/27 16:45:49] OK

db4 rep_backlog [last change: 2016/04/27 16:45:49] OK: Backlog is null

db2 ping [last change: 2016/04/27 16:45:49] OK

db2 mysql [last change: 2016/04/27 16:45:49] OK

db2 rep_threads [last change: 2016/04/27 16:45:49] OK

db2 rep_backlog [last change: 2016/04/27 16:45:49] OK: Backlog is null

db3 ping [last change: 2016/04/27 16:45:49] OK

db3 mysql [last change: 2016/04/27 16:45:49] OK

db3 rep_threads [last change: 2016/04/27 16:45:49] OK

db3 rep_backlog [last change: 2016/04/27 16:45:49] OK: Backlog is null

db1 ping [last change: 2016/04/27 16:45:49] OK

db1 mysql [last change: 2016/04/27 16:45:49] OK

db1 rep_threads [last change: 2016/04/27 16:45:49] OK

db1 rep_backlog [last change: 2016/04/27 16:45:49] OK: Backlog is null

[root@node1 ~]#

# Warning: agent on host db2 is not reachable1、 设置虚拟ip

2、 /usr/sbin/启动mmm_agentd

如果mysql> show slave status\G;

出现: Last_Error: Could not execute Write_rows event on table db1.tb_pos_trade10; Duplicate entry '20171208154721001265953059' for key 'PRIMARY', Error_code: 1062; handler error HA_ERR_FOUND_DUPP_KEY; the event's master log master-bin.000017, end_log_pos 6963242解决办法是:

mysql> stop slave;

mysql> set global sql_slave_skip_counter=1;

mysql> start slave;

mysql> show slave status;(2) 服务器的启动VIP地址

[root@node1 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:ff:33:6a brd ff:ff:ff:ff:ff:ff

inet 192.168.106.106/24 brd 192.168.106.255 scope global eth0

inet 192.168.106.215/32 scope global eth0

inet 192.168.106.211/32 scope global eth0

inet6 fe80::20c:29ff:feff:336a/64 scope link

valid_lft forever preferred_lft forever

[root@node1 ~]#

[root@node2 ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.106.0.1/8 scope host lo

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:0c:29:8c:60:58 brd ff:ff:ff:ff:ff:ff

inet 192.168.106.106/24 brd 192.168.106.255 scope global eth0

inet 192.168.106.213/32 scope global eth0

inet6 fe80::20c:29ff:fe8c:6058/64 scope link

valid_lft forever preferred_lft forever

[root@node2 ~]#服务器读写采有VIP地址进行读写,出现故障时VIP会漂移到其它节点,由其它节点提供服务。

七、MMM高可用测试

首先查看整个集群的状态,可以看到整个集群状态正常。

[root@node5 ~]# mmm_control show

db1(192.168.106.106) master/ONLINE. Roles: reader(192.168.106.212), writer(192.168.106.211)

db2(192.168.106.107) master/ONLINE. Roles: reader(192.168.106.213)

db3(192.168.106.109) slave/ONLINE. Roles: reader(192.168.106.215)

db4(192.168.106.110) slave/ONLINE. Roles: reader(192.168.106.214)

[root@192.168.106.30 ~]#1. 模拟node2宕机,手动停止mysql服务,观察monitor日志node2由关闭到启动的日志如下:

[root@192.168.106.30 ~]# tail -f /var/log/mysql-mmm/mmm_mond.log

2016/04/29 09:58:33 FATAL State of host 'db2' changed from ONLINE to HARD_OFFLINE (ping: OK, mysql: not OK)

2016/04/29 10:00:54 FATAL State of host 'db2' changed from HARD_OFFLINE to AWAITING_RECOVERY

2016/04/29 10:01:24 FATAL State of host 'db2' changed from AWAITING_RECOVERY to ONLINE because of auto_set_online(30 seconds). It was in state AWAITING_RECOVERY for 30

seconds重新查看集群的最新状态:

[root@node5 ~]# mmm_control show

db1(192.168.106.201) master/ONLINE. Roles: reader(192.168.106.212), writer(192.168.106.211)

db2(192.168.106.202) master/HARD_OFFLINE. Roles: #从日志发现db2的状态有ONLINE转换为HARD_OFFLINE

db3(192.168.106.203) slave/ONLINE. Roles: reader(192.168.106.213), reader(192.168.106.215)

db4(192.168.106.204) slave/ONLINE. Roles: reader(192.168.106.214)

[root@node5 ~]# mmm_control checks all

db4 ping [last change: 2016/04/29 09:01:08] OK

db4 mysql [last change: 2016/04/29 09:01:08] OK

db4 rep_threads [last change: 2016/04/29 09:01:08] OK

db4 rep_backlog [last change: 2016/04/29 09:01:08] OK: Backlog is null

db2 ping [last change: 2016/04/29 09:01:08] OK

db2 mysql [last change: 2016/04/29 09:58:33] ERROR: Connect error (host = 192.168.106.202:3306, user = mmm_monitor)! Lost connection to MySQL server at 'reading

initial communication packet', system error: 111 能ping通,说明只是服务死掉了。

db2 rep_threads [last change: 2016/04/29 09:01:08] OK

db2 rep_backlog [last change: 2016/04/29 09:01:08] OK: Backlog is null

db3 ping [last change: 2016/04/29 09:01:08] OK

db3 mysql [last change: 2016/04/29 09:01:08] OK

db3 rep_threads [last change: 2016/04/29 09:01:08] OK

db3 rep_backlog [last change: 2016/04/29 09:01:08] OK: Backlog is null

db1 ping [last change: 2016/04/29 09:01:08] OK

db1 mysql [last change: 2016/04/29 09:56:33] OK

db1 rep_threads [last change: 2016/04/29 09:01:08] OK

db1 rep_backlog [last change: 2016/04/29 09:01:08] OK: Backlog is null

[root@node5 ~]#启动node2,node2由HARD_OFFLINE转到AWAITING_RECOVERY状态。这里db2再次接管读请求。

[root@node5 ~]# mmm_control show

db1(192.168.106.201) master/ONLINE. Roles: reader(192.168.106.212), writer(192.168.106.211)

db2(192.168.106.202) master/ONLINE. Roles: reader(192.168.106.213)

db3(192.168.106.203) slave/ONLINE. Roles: reader(192.168.106.215)

db4(192.168.106.204) slave/ONLINE. Roles: reader(192.168.106.214)

[root@node5 ~]#2. 模拟node1主库宕机,查看集群状态。

[root@node5 ~]# mmm_control show

db1(192.168.106.201) master/HARD_OFFLINE. Roles:

db2(192.168.106.202) master/ONLINE. Roles: reader(192.168.106.213), writer(192.168.106.211)

db3(192.168.106.203) slave/ONLINE. Roles: reader(192.168.106.212), reader(192.168.106.215)

db4(192.168.106.204) slave/ONLINE. Roles: reader(192.168.106.214)node1主库再启动:

[root@node5 ~]# mmm_control show

db1(192.168.106.201) master/ONLINE. Roles: reader(192.168.106.212)

db2(192.168.106.202) master/ONLINE. Roles: reader(192.168.106.213), writer(192.168.106.211)

db3(192.168.106.203) slave/ONLINE. Roles: reader(192.168.106.215)

db4(192.168.106.204) slave/ONLINE. Roles: reader(192.168.106.214)可以看到主库启动用会自动转到读的角色,不会接管主,只到现有的主再次宕机。

[root@192.168.106.30 ~]# tail -f /var/log/mysql-mmm/mmm_mond.log

2016/04/29 10:03:25 FATAL State of host 'db1' changed from ONLINE to HARD_OFFLINE (ping: OK, mysql: not OK)

2016/04/29 10:06:20 FATAL State of host 'db1' changed from HARD_OFFLINE to AWAITING_RECOVERY

2016/04/29 10:06:51 FATAL State of host 'db1' changed from AWAITING_RECOVERY to ONLINE because of auto_set_online(30 seconds). It was in state AWAITING_RECOVERY for 31

seconds3. 测试小结:

(1)node2备选主节点宕机不影响集群的状态,就是移除了node2备选节点的读状态。

(2)node1主节点宕机,由node2备选主节点接管写角色,node3,node4指向新node2主库进行复制,node3,node4会自动change master到node2.

(3)问题来了:

如果node1主库宕机,node2复制应用又落后于node1时就变成了主可写状态,这进的数据主无法保证一致性。

如果node2,node3,node4延迟于node1主,这个时node1宕机,node3,node4将会等待数据追上db1后,再重新指向新的主node2进行复制操作,这时的数据也无法保证同步的一致性。

(4)如查采用MMM高可用架构,主,主备选节点机器配置一样,而且开启半同步进一步提高安全性或采用MariaDB进行多线程从复制,提高复制的性能。说明:MMM(Master-Master Replication Manager for MySQL)主主复制管理器, MMM集群套件具有良好的稳定性、高可用性和可扩展性。当活动的Master节点出现故障时,备用Master节点可以立即接管,而其他的Slave节点也能自动切换到备用Master节点继续进行同步复制,而无需人为干涉;MMM架构需要多个节点、多个IP,对服务器数量有要求,在读写非常繁忙的业务系统下表现不是很稳定,可能会出现复制延时、切换失效等问题。MMM方案并不太适应于对数据安全性要求很高,并且读、写繁忙的环境中。

===============================================

一 MMM 高可用mysql简介

https://blog.csdn.net/hzsunshine/article/details/67081917

MMM(Master-Master Replication mananger for mysql),由一个管理端(monitor)和多个代理端(agent)构成。通过MMM可以实现监控和管理Mysql主主复制和服务状态,同时也可监控多个Slave节点的复制以及运行状态,并且可以做到任何节点发生故障时实现自动化切换的功能。

MMM套件三个主要脚本:

mmm_mond:监控进程,运行在管理节点,主要负责对所有数据库的监控工作,同时决定和处理所有节点的角色切换。

mmm_agent:代理进程,运行在每台Mysql服务器,完成监控的测试工作和执行远程服务设置。

mmm_control:管理脚本,查看和管理集群运行状态,同时管理mmm_mond进程。

二 MMM典型应用架构

三 MMM双主多从Mysql架构配置

架构图如上图

双主双从应用架构读、写分离IP列表

角色 物理IP server_id 虚拟IP地址 IP角色 功能

Master1 192.168.106.106 1 192.168.106.200 writer IP 写入VIP,单点写入

192.168.106.201 reader IP 读查询VIP,每个节点一个读VIP,可通过负载均衡软件对读负载均衡

Master2 192.168.106.107 2 192.168.106.202

Slave1 192.168.106.109 3 192.168.106.203

Slave2 192.168.106.110 4 192.168.106.204

Monitor 192.168.106.148

0.配置前准备

校时操作

安装ntpdate工具

yum install ntpdate -y

使用ntpdate校时(后面的是ntp服务器)

ntpdate pool.ntp.org

关闭selinux

setenforce 0

sed -i ‘s/enforcing/disabled/g’ /etc/selinux/config

1 MMM的安装配置

1.MMM套件安装

1.在Monitor端安装所有MMM组件

yum install epel-release.noarch -y

yum install mysql-mmm mysql-mmm-agent mysql-mmm-tools mysql-mmm-monitor -y

2.在其他所有节点安装mysql-mmm-agent

yum install epel-release.noarch -y

yum install mysql-mmm-agent -y

2.Master1和Master2的主主配置和Masetr1和Slave1和Slave2的主从配置

(安装配置参考《Mysql主从复制配置》《Mysql+Keepalived双主互备高可用》的配置)

3.在所有MySQL节点的/etc/my.cnf中增加参数(要重启)

read_only=1

read_only是因为MMM对数据需严格的读写控制

此参数不影响replication;root用户依然可写。

4.所有MySQL节点创建monitor user(健康检测)和monitor agent(切换只读模式和同步Master信息)帐号(仅在mysql写入主节点,其他节点会自动复制)

grant replication client on . to ‘mmm_monitor’@’192.168.1.%’ identified by ‘monitorpasswd’;

grant super, replication client, process on . to ‘mmm_agent’@’192.168.1.%’ identified by ‘agentpasswd’;

5.在所有MMM节点配置mmm_common.conf (注意以下所有配置文件中不能以下注释,会报错 使用sed -i ‘/^#/d;s/#.*//g’ file 清除注释)

vim /etc/mysql-mmm/mmm_common.conf

当设置此参数,所有mysql节点都设置为”read_only=1”,MMM会根据Mysql角色来决定是否执行”set global read_only=0”.

active_master_role writer

cluster_interface eno16777736 #设置网络接口

pid_path /run/mysql-mmm-agent.pid #设置PID文件位置

bin_path /usr/libexec/mysql-mmm/ #设置MMM可执行文件路径

replication_user slave_cp #设置复制的用户名

replication_password pass #设置复制用户密码

agent_user mmm_agent #设置更改只读操作用户

agent_password agentpasswd #设置更改只读操作用户密码

#DB1配置信息

ip 192.168.106.106

mode master

peer db2 #与DB1对等主机

ip 192.168.106.107

mode master

peer db1

ip 192.168.106.109

mode slave

ip 192.168.106.110

mode slave

#设置可执行写用户

hosts db1, db2 #DB1和DB2都可执行

ips 192.168.1.160 #设置可写的VIP

mode exclusive #设置角色互斥模式,互斥角色只有一个IP,同一时间只能分配给一个用户

#设置刻度角色模式

hosts db1, db2, db3, db4 #设置可执行主机

ips 192.168.106.201, 192.168.106.202, 192.168.106.203, 192.168.106.204

mode balanced #设置角色模式为负载均衡,这些IP动态分配多个MySQL主机

6.在仅在MMM管理节点配置mmm_mom.conf

vim /etc/mysql-mmm/mmm_mon.conf

include mmm_common.conf

ip 127.0.0.1 #安全起见,只在本机监听,默认端口9988

pid_path /run/mysql-mmm-monitor.pid

bin_path /usr/libexec/mysql-mmm

status_path /var/lib/mysql-mmm/mmm_mond.status

#测试网络连通性,只要一个正常则网络正常

ping_ips 192.168.1.1, 192.168.106.106, 192.168.106.107, 192.168.106.109, 192.168.106.110

flap_duration 3600 #抖动时间范围

flap_count 3 #在抖动时间范围内最大抖动次数

auto_set_online 8 #是否自动上线,如果大于0,抖动的主机在抖动时间范围过后,则设置自动上线

# The kill_host_bin does not exist by default, though the monitor will

# throw a warning about it missing. See the section 5.10 "Kill Host

# Functionality" in the PDF documentation.

#

# kill_host_bin /usr/libexec/mysql-mmm/monitor/kill_host

#

monitor_user mmm_monitor

monitor_password monitorpasswd

debug 0 #MMM管理端运行模式 0 正常模式 1 debug模式

开启MMM管理端的9988端口

firewall-cmd –permanent –add-port=9988/tcp

firewall-cmd –reload

开启所有mysql节点的9989端口

firewall-cmd –permanent –add-port=9989/tcp

firewall-cmd –reload

7.在所有Mysql节点设置mmm_agent.conf

vim /etc/mysql-mmm/mmm_agent.conf

include mmm_common.conf

this db1 #在四台mysql节点上设置对应的db,分别为db1、db2、db3、db4

8.启动MMM服务

MMM管理端启动

systemctl restart mysql-mmm-monitor

systemctl enable mysql-mmm-monitor

Mysql节点启动

systemctl restart mysql-mmm-agent

systemctl enable mysql-mmm-agent

MMM管理端基本管理命令

mmm_control show

db1(192.168.106.106) master/AWAITING_RECOVERY. Roles:

db2(192.168.106.107) master/AWAITING_RECOVERY. Roles:

db3(192.168.106.109) slave/AWAITING_RECOVERY. Roles:

db4(192.168.106.110) slave/AWAITING_RECOVERY. Roles:

如果一直显示等待,可手动设置

mmm_control set_online db1

mmm_control set_online db2

mmm_control set_online db3

mmm_control set_online db4

mmm_control show

db1(192.168.106.106) master/ONLINE. Roles: reader(192.168.106.204), writer(192.168.106.200)

db2(192.168.106.107) master/ONLINE. Roles: reader(192.168.106.201)

db3(192.168.106.109) slave/ONLINE. Roles: reader(192.168.106.203)

db4(192.168.106.110) slave/ONLINE. Roles: reader(192.168.106.202)

查看各个节点运行状态

mmm_control checks all

db4 ping [last change: 2017/03/25 22:55:49] OK

db4 mysql [last change: 2017/03/25 22:55:49] OK

db4 rep_threads [last change: 2017/03/25 22:55:49] OK

db4 rep_backlog [last change: 2017/03/25 22:55:49] OK: Backlog is null

db2 ping [last change: 2017/03/25 22:55:49] OK

db2 mysql [last change: 2017/03/25 22:55:49] OK

db2 rep_threads [last change: 2017/03/25 22:55:49] OK

db2 rep_backlog [last change: 2017/03/25 22:55:49] OK: Backlog is null

db3 ping [last change: 2017/03/25 22:55:49] OK

db3 mysql [last change: 2017/03/25 22:55:49] OK

db3 rep_threads [last change: 2017/03/25 22:55:49] OK

db3 rep_backlog [last change: 2017/03/25 22:55:49] OK: Backlog is null

db1 ping [last change: 2017/03/25 22:55:49] OK

db1 mysql [last change: 2017/03/25 22:55:49] OK

db1 rep_threads [last change: 2017/03/25 22:55:49] OK

db1 rep_backlog [last change: 2017/03/25 22:55:49] OK: Backlog is null

查看mysql各个节点VIP绑定状态

ip a

9.测试

1.读写分离测试

创建测试用户

mysql -uroot -p

create database test;

create user test@”192.168.1.%” identified by ‘123’;

grant all on test.* to test@”192.168.1.%”;

exit

写VIP登录(创建表单,插入数据测试略)

mysql -utest -p -h192.168.1.160

use test;

create table mmm_test(id varchar(60));

insert into mmm_test (id) values (“masetr”);

exit

读VIP登录

mysql -utest -p -h192.168.106.201

select * from test.mmm_test;

+—————-+

| Tables_in_test |

+—————-+

| mmm_test |

+—————-+

mysql -utest -p -h192.168.106.202

select * from test.mmm_test;

2.故障测试

[root@monitor ~]# mmm_control show

db1(192.168.106.106) master/ONLINE. Roles: reader(192.168.106.202), writer(192.168.1.160)

db2(192.168.106.107) master/ONLINE. Roles: reader(192.168.106.201)

db3(192.168.106.109) slave/ONLINE. Roles: reader(192.168.106.204)

db4(192.168.106.110) slave/ONLINE. Roles: reader(192.168.106.203)

[root@DB1 ~]# systemctl stop mariadb

[root@www ~]# mmm_control show

db1(192.168.106.106) master/HARD_OFFLINE. Roles:

db2(192.168.106.107) master/ONLINE. Roles: reader(192.168.106.201)

db3(192.168.106.109) slave/ONLINE. Roles: reader(192.168.106.204)

db4(192.168.106.110) slave/ONLINE. Roles: reader(192.168.106.203)

[root@monitor ~]# mmm_control show

db1(192.168.106.106) master/HARD_OFFLINE. Roles:

db2(192.168.106.107) master/ONLINE. Roles: reader(192.168.106.201), writer(192.168.1.160)

db3(192.168.106.109) slave/ONLINE. Roles: reader(192.168.106.202), reader(192.168.106.204)

db4(192.168.106.110) slave/ONLINE. Roles: reader(192.168.106.203)

[root@DB1 ~]# systemctl restart mariadb

注意虽然DB1复活,但写VIP仍然在DB2不变

[root@monitor ~]# mmm_control show

db1(192.168.106.106) master/ONLINE. Roles: reader(192.168.106.202)

db2(192.168.106.107) master/ONLINE. Roles: reader(192.168.106.201), writer(192.168.1.160)

db3(192.168.106.109) slave/ONLINE. Roles: reader(192.168.106.204)

db4(192.168.106.110) slave/ONLINE. Roles: reader(192.168.106.203)

四 Amoeba优化MMM架构(服务器IP 192.168.1.199)

1.安装Amoeba开发环境Java

建立安装目录

mkdir /usr/java

cd /usr/java

官网下载地址http://download.oracle.com/otn-pub/java/jdk/8u92-b14/jdk-8u92-linux-x64.rpm

wget -c –no-check-certificate –no-cookie –header “Cookie: s_nr=1420682671945; s_cc=true; oraclelicense=accept-securebackup-cookie; gpw_e24=http%3A%2F%2Fwww.oracle.com%2Ftechnetwork%2Fjava%2Fjavase%2Fdownloads%2Fjdk7-downloads-1880260.html;s_sq=%5B%5BB%5D%5D” http://download.oracle.com/otn-pub/java/jdk/8u92-b14/jdk-8u92-linux-x64.rpm

chmod +x jdk-8u92-linux-x64.rpm

rpm -ivh jdk-8u92-linux-x64.rpm

vim /etc/profile

追加以下信息

export JAVA_HOME=/usr/java/jdk1.8.0_92

export CLASSPATH=.:

JAVAHOME/jre/lib/rt.jar:

J

A

V

A

H

O

M

E

/

j

r

e

/

l

i

b

/

r

t

.

j

a

r

:

JAVA_HOME/lib/dt.jar:

JAVAHOME/lib/tools.jarexportPATH=

J

A

V

A

H

O

M

E

/

l

i

b

/

t

o

o

l

s

.

j

a

r

e

x

p

o

r

t

P

A

T

H

=

PATH:$JAVA_HOME/bin

立即生效

source /etc/profile

查看版本信息

java -version

java version “1.8.0_92”

Java(TM) SE Runtime Environment (build 1.8.0_92-b14)

Java HotSpot(TM) 64-Bit Server VM (build 25.92-b14, mixed mode)

2.安装Amoeba

wget https://sourceforge.net/projects/amoeba/files/Amoeba%20for%20mysql/3.x/amoeba-mysql-3.0.5-RC-distribution.zip

unzip amoeba-mysql-3.0.5-RC-distribution.zip

mv amoeba-mysql-3.0.5-RC /usr/local/amoeba/

3.配置Amoeba

vim /usr/local/amoeba/conf/dbServers.xml

修改以下黑体信息

给所有权限(测试会用到)

grant all on . to amoeba@”192.168.1.199”;

flush privileges;

exit

5.开启amoeba防火墙

firewall-cmd –permanent –add-port=8066/tcp

firewall-cmd –reload

6.启动Amoeba

/usr/local/amoeba/bin/launcher &

netstat -tlunp | grep java

tcp6 0 0 :::8066 :::* LISTEN 2666/java

报错解决方法

vim /usr/local/amoeba/jvm.properties

将下面内容修改成最下面

JVM_OPTIONS=”-server -Xms256m -Xmx1024m -Xss196k -XX:PermSize=16m -XX:MaxPermSize=96m”

JVM_OPTIONS=”-server -Xms1024m -Xmx1024m -Xss256k -XX:PermSize=16m -XX:MaxPermSize=96m”

7.验证

在mysql进行如下操作

在slave1,2从库执行

insert into test.mmm_test (id) values (“slave”);

开始验证

mysql -uroot -p1234567890 -h192.168.1.199 -P8066

select * from test.mmm_test;

MySQL [(none)]> select * from test.mmm_test;

+——–+

| id |

+——–+

| masetr |

| slave |

+——–+

2 rows in set (0.07 sec)

MySQL [(none)]> select * from test.mmm_test;

+——–+

| id |

+——–+

| masetr |

| slave |

+——–+

2 rows in set (0.03 sec)

MySQL [(none)]> select * from test.mmm_test;

+——–+

| id |

+——–+

| masetr |

+——–+

1 row in set (0.04 sec)

MySQL [(none)]> select * from test.mmm_test;

+——–+

| id |

+——–+

| masetr |

+——–+

1 row in set (0.04 sec)

五 MySQL读写分离完整高可用集群架构

(电脑最多支持6台虚拟机,再多就翘翘了,就不测试)

527

527

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?