1. First, locate the JavaKafkaWordCount example in spark-1.6.1-bin-hadoop2.6.tgz

It is located at:

spark-1.6.1-bin-hadoop2.6\examples\src\main\java\org\apache\spark\examples\streaming\JavaKafkaWordCount

/*

* Licensed to the Apache Software Foundation (ASF) under one or more

* contributor license agreements. See the NOTICE file distributed with

* this work for additional information regarding copyright ownership.

* The ASF licenses this file to You under the Apache License, Version 2.0

* (the "License"); you may not use this file except in compliance with

* the License. You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

package org.apache.spark.examples.streaming;

import java.util.Map;

import java.util.HashMap;

import java.util.regex.Pattern;

import scala.Tuple2;

import com.google.common.collect.Lists;

import org.apache.spark.SparkConf;

import org.apache.spark.api.java.function.FlatMapFunction;

import org.apache.spark.api.java.function.Function;

import org.apache.spark.api.java.function.Function2;

import org.apache.spark.api.java.function.PairFunction;

import org.apache.spark.examples.streaming.StreamingExamples;

import org.apache.spark.streaming.Duration;

import org.apache.spark.streaming.api.java.JavaDStream;

import org.apache.spark.streaming.api.java.JavaPairDStream;

import org.apache.spark.streaming.api.java.JavaPairReceiverInputDStream;

import org.apache.spark.streaming.api.java.JavaStreamingContext;

import org.apache.spark.streaming.kafka.KafkaUtils;

/**

* Consumes messages from one or more topics in Kafka and does wordcount.

*

* Usage: JavaKafkaWordCount <zkQuorum> <group> <topics> <numThreads>

* <zkQuorum> is a list of one or more zookeeper servers that make quorum

* <group> is the name of kafka consumer group

* <topics> is a list of one or more kafka topics to consume from

* <numThreads> is the number of threads the kafka consumer should use

*

* To run this example:

* `$ bin/run-example org.apache.spark.examples.streaming.JavaKafkaWordCount zoo01,zoo02, \

* zoo03 my-consumer-group topic1,topic2 1`

*/

public final class JavaKafkaWordCount {

private static final Pattern SPACE = Pattern.compile(" ");

private JavaKafkaWordCount() {

}

public static void main(String[] args) {

if (args.length < 4) {

System.err.println("Usage: JavaKafkaWordCount <zkQuorum> <group> <topics> <numThreads>");

System.exit(1);

}

StreamingExamples.setStreamingLogLevels();

SparkConf sparkConf = new SparkConf().setAppName("JavaKafkaWordCount");

// Create the context with 2 seconds batch size

JavaStreamingContext jssc = new JavaStreamingContext(sparkConf, new Duration(2000));

int numThreads = Integer.parseInt(args[3]);

Map<String, Integer> topicMap = new HashMap<String, Integer>();

String[] topics = args[2].split(",");

for (String topic: topics) {

topicMap.put(topic, numThreads);

}

JavaPairReceiverInputDStream<String, String> messages =

KafkaUtils.createStream(jssc, args[0], args[1], topicMap);

JavaDStream<String> lines = messages.map(new Function<Tuple2<String, String>, String>() {

@Override

public String call(Tuple2<String, String> tuple2) {

return tuple2._2();

}

});

JavaDStream<String> words = lines.flatMap(new FlatMapFunction<String, String>() {

@Override

public Iterable<String> call(String x) {

return Lists.newArrayList(SPACE.split(x));

}

});

JavaPairDStream<String, Integer> wordCounts = words.mapToPair(

new PairFunction<String, String, Integer>() {

@Override

public Tuple2<String, Integer> call(String s) {

return new Tuple2<String, Integer>(s, 1);

}

}).reduceByKey(new Function2<Integer, Integer, Integer>() {

@Override

public Integer call(Integer i1, Integer i2) {

return i1 + i2;

}

});

wordCounts.print();

jssc.start();

jssc.awaitTermination();

}

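}

First, deploy and start the Spark platform. According to the comment at the top of the class, the example is launched like this: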

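bin/run-example org.apache.spark.examples.streaming.JavaKafkaWordCount zoo01,zoo02,zoo03 my-consumer-group topic1,topic2 1

The first argument is the ZooKeeper quorum (zk), a comma-separated list of ZooKeeper servers.

The second argument is the consumer group name; you can choose any name you like.

The third argument is the Kafka topic (or comma-separated list of topics) to consume.

The fourth argument is the number of threads the Kafka consumer uses for each topic, usually chosen according to the number of CPU cores.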
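To make the role of the third and fourth arguments concrete, here is a minimal plain-Java sketch of the loop in `JavaKafkaWordCount.main` that turns them into the topic map passed to `KafkaUtils.createStream`. The class name `TopicMapSketch` and the method `buildTopicMap` are hypothetical names introduced only for this illustration, and a `LinkedHashMap` is used instead of the original `HashMap` purely to keep the printed order stable.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper class, not part of the Spark examples.
class TopicMapSketch {

    // Mirrors the loop in JavaKafkaWordCount.main: every topic in the
    // comma-separated list is mapped to the same consumer thread count.
    static Map<String, Integer> buildTopicMap(String topicsArg, String numThreadsArg) {
        int numThreads = Integer.parseInt(numThreadsArg);
        Map<String, Integer> topicMap = new LinkedHashMap<>();
        for (String topic : topicsArg.split(",")) {
            topicMap.put(topic, numThreads);
        }
        return topicMap;
    }

    public static void main(String[] args) {
        // Same argument order as the run-example command:
        // zkQuorum, group, topics, numThreads.
        String[] sample = {"zoo01,zoo02,zoo03", "my-consumer-group", "topic1,topic2", "1"};
        System.out.println("zkQuorum = " + sample[0]);
        System.out.println("group    = " + sample[1]);
        System.out.println("topicMap = " + buildTopicMap(sample[2], sample[3]));
    }
}
```

With the sample arguments, both `topic1` and `topic2` end up mapped to a thread count of 1, which is the map the receiver is created with.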

My own test run looks like this. First change to the directory where Spark is deployed (cd /opt/spark-1.6.1-bin-custom-spark), then run the following command:

./bin/run-example org.apache.spark.examples.streaming.JavaKafkaWordCount 192.168.186.29:2181 test_java_kafka_word_count test-topic 4

Once it has started, write the Kafka producer:

package com.ultrapower.scala.kafka.producer;

import org.apache.kafka.clients.producer.KafkaProducer;

import org.apache.kafka.clients.producer.Producer;

import org.apache.kafka.clients.producer.ProducerRecord;

import java.util.Properties;

/**

*

* @author xiefg

*/

public class KafkaProducerExample {

public void run()

{

Properties props = getConfig();

Producer<String, String> producer = new KafkaProducer<String, String>(props);

String topic="test-topic",key,value;

for (int i = 0; i < 1000; i++) {

key = "key_"+i;

value="value_"+i;

System.out.println("TOPIC: test-topic; sending KEY: " + key + "; Value: " + value);

producer.send(new ProducerRecord<String, String>(topic, key,value));

try {

Thread.sleep(1000);

}

catch (InterruptedException e) {

e.printStackTrace();

}

}

producer.close();

}

// config

public Properties getConfig()

{

Properties props = new Properties();

// Kafka broker address

props.put("bootstrap.servers", "192.168.186.29:9092");

props.put("acks", "all");

props.put("retries", 0);

props.put("batch.size", 16384);

props.put("linger.ms", 1);

props.put("buffer.memory", 33554432);

props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");

props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");

return props;

}

public static void main(String[] args)

{

KafkaProducerExample example = new KafkaProducerExample();

example.run();

}

}
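The Spark job keeps only the value of each message (`tuple2._2()`), splits it on spaces, and counts the words in each 2-second batch. The sketch below is a plain-Java stand-in for that flatMap / mapToPair / reduceByKey chain, applied to the kind of values this producer sends. It does not use Spark or Kafka at all; the class name `BatchWordCountSketch` and the method `countWords` are hypothetical, and the batch contents are an assumption based on the producer sending one record per second.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.regex.Pattern;

// Hypothetical illustration class; the real job runs these steps on a DStream.
class BatchWordCountSketch {

    private static final Pattern SPACE = Pattern.compile(" ");

    // Counts words across the message values of a single batch,
    // the same result reduceByKey would produce for that batch.
    static Map<String, Integer> countWords(List<String> values) {
        Map<String, Integer> counts = new TreeMap<>();
        for (String value : values) {
            for (String word : SPACE.split(value)) {
                counts.merge(word, 1, Integer::sum);
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        // Assumed batch: one record per second and a 2-second batch
        // interval means a batch typically holds about two values.
        List<String> batch = new ArrayList<>();
        batch.add("value_0");
        batch.add("value_1");
        System.out.println(countWords(batch));
    }
}
```

Because the producer's values contain no spaces, each `value_i` is a single word, so every word in a batch is expected to appear with a count of 1.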

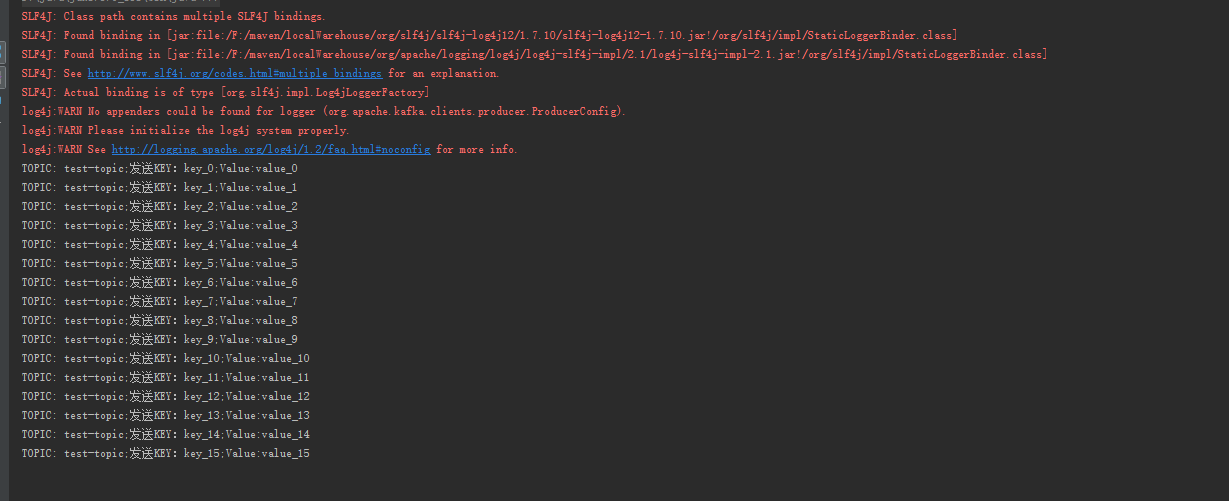

在idea运行起来如图:

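Next, look at the log that the running Spark job prints on the backend.

You can see that the producer is sending messages to the topic while the consumer processes them in real time. We can also use a monitoring tool to watch how consumption is going.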

It shows the number of partitions, how the offsets move, the log size, and so on.
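When reading such figures, a useful derived number is the consumer lag per partition: the log size minus the offset the consumer group has reached. The sketch below only illustrates that arithmetic; the class name `ConsumerLagSketch`, the method `lag`, and the sample numbers are all hypothetical and are not taken from the run described here.

```java
// Hypothetical illustration of the lag arithmetic; it does not query Kafka.
class ConsumerLagSketch {

    // Lag for one partition: messages already written to the log that the
    // consumer group has not yet consumed. Clamped at zero as a safeguard
    // against the two readings being taken at slightly different times.
    static long lag(long logSize, long consumerOffset) {
        return Math.max(0L, logSize - consumerOffset);
    }

    public static void main(String[] args) {
        // Made-up readings for a single partition.
        long logSize = 1000L;
        long consumerOffset = 990L;
        System.out.println("lag = " + lag(logSize, consumerOffset));
    }
}
```

A lag that stays near zero suggests the streaming job is keeping up with the producer, while a steadily growing lag suggests it is falling behind.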

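This example is about the simplest possible end-to-end flow from producer to consumer. Real business requirements will need their own specific handling.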
