1 Introduction

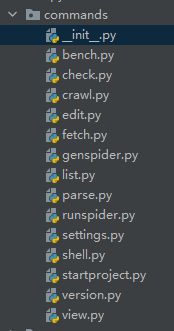

Source code screenshot

Scrapy has 14 commands in total, and each command is implemented in its own file under scrapy/commands/.

2 Settings priorities

```python
# scrapy/settings/__init__.py (excerpt)
# BaseSettings is defined further down in the same module.

SETTINGS_PRIORITIES = {
    'default': 0,
    'command': 10,
    'project': 20,
    'spider': 30,
    'cmdline': 40,
}


class SettingsAttribute:

    """Class for storing data related to settings attributes.

    This class is intended for internal usage, you should try Settings class
    for settings configuration, not this one.
    """

    def __init__(self, value, priority):
        self.value = value
        if isinstance(self.value, BaseSettings):
            self.priority = max(self.value.maxpriority(), priority)
        else:
            self.priority = priority

    def set(self, value, priority):
        """Sets value if priority is higher or equal than current priority."""
        if priority >= self.priority:
            if isinstance(self.value, BaseSettings):
                value = BaseSettings(value, priority=priority)
            self.value = value
            self.priority = priority

    def __str__(self):
        return f"<SettingsAttribute value={self.value!r} priority={self.priority}>"

    __repr__ = __str__
```

SettingsAttribute.set() replaces the stored value only when the new priority is greater than or equal to the current one. Together with the numbers in SETTINGS_PRIORITIES, this gives the order cmdline (40) > spider (30) > project (20) > command (10) > default (0). A value given on the command line, for example with -s, therefore overrides every other source.
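The sketch below makes the rule concrete with a minimal, hypothetical re-implementation. The names PrioritizedValue and resolve are invented for this illustration and are not part of Scrapy. The sketch keeps only the "overwrite when priority >= current" rule and ignores nested BaseSettings values:

```python
# Hypothetical re-implementation for illustration; not Scrapy's own classes.
SETTINGS_PRIORITIES = {
    "default": 0,
    "command": 10,
    "project": 20,
    "spider": 30,
    "cmdline": 40,
}


class PrioritizedValue:
    """Stores a value together with the priority it was set at."""

    def __init__(self, value, priority):
        self.value = value
        self.priority = priority

    def set(self, value, priority):
        # Same rule as SettingsAttribute.set: overwrite only when the new
        # priority is greater than or equal to the current one.
        if priority >= self.priority:
            self.value = value
            self.priority = priority


def resolve(assignments):
    """Apply (value, priority_name) pairs in the given order and return the winner."""
    attr = None
    for value, name in assignments:
        priority = SETTINGS_PRIORITIES[name]
        if attr is None:
            attr = PrioritizedValue(value, priority)
        else:
            attr.set(value, priority)
    return attr.value


# The order of application does not matter: cmdline wins.
print(resolve([("DEBUG", "default"), ("INFO", "cmdline"), ("WARNING", "project")]))  # INFO
```

Because the comparison is `>=`, a later assignment at the same priority also wins. For example, two -s options for the same name end with the last one.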

3 Base class source code

```python
# scrapy/commands/__init__.py (excerpt)
import os
from optparse import OptionGroup
from typing import Any, Dict

from twisted.python import failure

from scrapy.exceptions import UsageError
from scrapy.utils.conf import arglist_to_dict, feed_process_params_from_cli


class ScrapyCommand:

    requires_project = False
    crawler_process = None

    # default settings to be used for this command instead of global defaults
    default_settings: Dict[str, Any] = {}

    exitcode = 0

    def __init__(self):
        self.settings = None  # set in scrapy.cmdline

    def set_crawler(self, crawler):
        if hasattr(self, '_crawler'):
            raise RuntimeError("crawler already set")
        self._crawler = crawler

    def syntax(self):
        """
        Command syntax (preferably one-line). Do not include command name.
        """
        return ""

    def short_desc(self):
        """
        A short description of the command
        """
        return ""

    def long_desc(self):
        """A long description of the command. Return short description when not
        available. It cannot contain newlines since contents will be formatted
        by optparser which removes newlines and wraps text.
        """
        return self.short_desc()

    def help(self):
        """An extensive help for the command. It will be shown when using the
        "help" command. It can contain newlines since no post-formatting will
        be applied to its contents.
        """
        return self.long_desc()

    def add_options(self, parser):
        """
        Populate option parse with options available for this command
        """
        group = OptionGroup(parser, "Global Options")
        group.add_option("--logfile", metavar="FILE",
                         help="log file. if omitted stderr will be used")
        group.add_option("-L", "--loglevel", metavar="LEVEL", default=None,
                         help=f"log level (default: {self.settings['LOG_LEVEL']})")
        group.add_option("--nolog", action="store_true",
                         help="disable logging completely")
        group.add_option("--profile", metavar="FILE", default=None,
                         help="write python cProfile stats to FILE")
        group.add_option("--pidfile", metavar="FILE",
                         help="write process ID to FILE")
        group.add_option("-s", "--set", action="append", default=[], metavar="NAME=VALUE",
                         help="set/override setting (may be repeated)")
        group.add_option("--pdb", action="store_true", help="enable pdb on failure")

        parser.add_option_group(group)

    def process_options(self, args, opts):
        try:
            self.settings.setdict(arglist_to_dict(opts.set),
                                  priority='cmdline')
        except ValueError:
            raise UsageError("Invalid -s value, use -s NAME=VALUE", print_help=False)

        if opts.logfile:
            self.settings.set('LOG_ENABLED', True, priority='cmdline')
            self.settings.set('LOG_FILE', opts.logfile, priority='cmdline')

        if opts.loglevel:
            self.settings.set('LOG_ENABLED', True, priority='cmdline')
            self.settings.set('LOG_LEVEL', opts.loglevel, priority='cmdline')

        if opts.nolog:
            self.settings.set('LOG_ENABLED', False, priority='cmdline')

        if opts.pidfile:
            with open(opts.pidfile, "w") as f:
                f.write(str(os.getpid()) + os.linesep)

        if opts.pdb:
            failure.startDebugMode()

    def run(self, args, opts):
        """
        Entry point for running commands
        """
        raise NotImplementedError


class BaseRunSpiderCommand(ScrapyCommand):
    """
    Common class used to share functionality between the crawl, parse and runspider commands
    """
    def add_options(self, parser):
        ScrapyCommand.add_options(self, parser)
        parser.add_option("-a", dest="spargs", action="append", default=[], metavar="NAME=VALUE",
                          help="set spider argument (may be repeated)")
        parser.add_option("-o", "--output", metavar="FILE", action="append",
                          help="append scraped items to the end of FILE (use - for stdout)")
        parser.add_option("-O", "--overwrite-output", metavar="FILE", action="append",
                          help="dump scraped items into FILE, overwriting any existing file")
        parser.add_option("-t", "--output-format", metavar="FORMAT",
                          help="format to use for dumping items")

    def process_options(self, args, opts):
        ScrapyCommand.process_options(self, args, opts)
        try:
            opts.spargs = arglist_to_dict(opts.spargs)
        except ValueError:
            raise UsageError("Invalid -a value, use -a NAME=VALUE", print_help=False)
        if opts.output or opts.overwrite_output:
            feeds = feed_process_params_from_cli(
                self.settings,
                opts.output,
                opts.output_format,
                opts.overwrite_output,
            )
            self.settings.set('FEEDS', feeds, priority='cmdline')
```

ScrapyCommand defines the following global options:

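- --logfile: write the log to FILE (stderr is used if omitted)
- -L, --loglevel: set the log level (defaults to the LOG_LEVEL setting, which is DEBUG)
- --nolog: disable logging completely
- --profile: write Python cProfile stats to FILE
- --pidfile: write the process ID to FILE
- -s, --set: set or override a setting as NAME=VALUE (may be repeated)
- --pdb: start the pdb debugger on failure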
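The sketch below mimics, using plain optparse and a dict, how process_options turns some of these global options into settings at cmdline priority. It models only --logfile, -L, --nolog and -s. Here, arglist_to_dict is a local stand-in written for this example. Scrapy has a helper with the same name in scrapy.utils.conf, but this is not its source:

```python
from optparse import OptionParser


def arglist_to_dict(arglist):
    # Local stand-in: turns ["NAME=VALUE", ...] into {"NAME": "VALUE", ...}.
    # An item without "=" yields a 1-element list, so dict() raises ValueError.
    return dict(x.split("=", 1) for x in arglist)


def build_parser():
    # A fresh parser per call, so the shared default list for -s is never reused.
    parser = OptionParser()
    parser.add_option("--logfile", metavar="FILE")
    parser.add_option("-L", "--loglevel", metavar="LEVEL", default=None)
    parser.add_option("--nolog", action="store_true")
    parser.add_option("-s", "--set", action="append", default=[], metavar="NAME=VALUE")
    return parser


def cmdline_settings(argv):
    """Return the settings process_options would set at 'cmdline' priority, plus positional args."""
    opts, args = build_parser().parse_args(argv)
    settings = arglist_to_dict(opts.set)
    if opts.logfile:
        settings["LOG_ENABLED"] = True
        settings["LOG_FILE"] = opts.logfile
    if opts.loglevel:
        settings["LOG_ENABLED"] = True
        settings["LOG_LEVEL"] = opts.loglevel
    if opts.nolog:
        settings["LOG_ENABLED"] = False
    return settings, args


settings, args = cmdline_settings(["-s", "DOWNLOAD_DELAY=2", "-L", "INFO", "quotes"])
print(settings)  # {'DOWNLOAD_DELAY': '2', 'LOG_ENABLED': True, 'LOG_LEVEL': 'INFO'}
print(args)      # ['quotes']
```

Values set with -s stay strings (here '2'). Converting them to other types is left to the typed getters on the settings object.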

BaseRunSpiderCommand inherits from ScrapyCommand and adds these options:

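- -a: set a spider argument as NAME=VALUE (may be repeated)
- -o, --output: append scraped items to the end of FILE (use - for stdout)
- -O, --overwrite-output: write scraped items to FILE, overwriting any existing file
- -t, --output-format: format to use when dumping items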
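Here is a similar sketch for the spider-run options, again using only optparse. The feeds dictionary is a deliberately simplified, hypothetical stand-in for the FEEDS value that feed_process_params_from_cli builds. It records only whether each file should be overwritten:

```python
from optparse import OptionParser


def parse_spider_options(argv):
    """Parse -a/-o/-O/-t the way BaseRunSpiderCommand declares them (sketch)."""
    parser = OptionParser()
    parser.add_option("-a", dest="spargs", action="append", default=[], metavar="NAME=VALUE")
    parser.add_option("-o", "--output", metavar="FILE", action="append")
    parser.add_option("-O", "--overwrite-output", metavar="FILE", action="append")
    parser.add_option("-t", "--output-format", metavar="FORMAT")
    opts, args = parser.parse_args(argv)
    try:
        spargs = dict(x.split("=", 1) for x in opts.spargs)
    except ValueError:
        raise ValueError("Invalid -a value, use -a NAME=VALUE") from None
    # Hypothetical, simplified FEEDS-like mapping: file -> overwrite flag only.
    feeds = {}
    for path in opts.output or []:
        feeds[path] = {"overwrite": False}
    for path in opts.overwrite_output or []:
        feeds[path] = {"overwrite": True}
    return args, spargs, feeds


args, spargs, feeds = parse_spider_options(
    ["-a", "category=books", "-a", "page=2", "-O", "items.json", "quotes"]
)
print(args, spargs, feeds)
```

Spider arguments passed with -a always arrive as strings. A spider has to convert values such as page=2 itself.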

4 Command details

1) bench

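```python
# scrapy/commands/bench.py (excerpt)
# _BenchServer and _BenchSpider are defined in the same module.
from scrapy.commands import ScrapyCommand


class Command(ScrapyCommand):

    default_settings = {
        'LOG_LEVEL': 'INFO',
        'LOGSTATS_INTERVAL': 1,
        'CLOSESPIDER_TIMEOUT': 10,
    }

    def short_desc(self):
        return "Run quick benchmark test"

    def run(self, args, opts):
        with _BenchServer():
            self.crawler_process.crawl(_BenchSpider, total=100000)
            self.crawler_process.start()
```

Runs a quick benchmark of Scrapy's performance. It starts a local test server (_BenchServer) and crawls it with a built-in spider (_BenchSpider). It logs stats every second (LOGSTATS_INTERVAL = 1) and closes the spider after 10 seconds (CLOSESPIDER_TIMEOUT = 10).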
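bench relies on default_settings. scrapy.cmdline applies a command's default_settings at 'command' priority, so they beat the global defaults but lose to project settings and to the command line. The sketch below models that layering with plain dicts. The global default values are assumptions for illustration, and effective_settings is a hypothetical helper:

```python
# Priorities as in SETTINGS_PRIORITIES above.
PRIORITIES = {"default": 0, "command": 10, "project": 20, "spider": 30, "cmdline": 40}

# Assumed global defaults, for illustration only.
GLOBAL_DEFAULTS = {"LOG_LEVEL": "DEBUG", "LOGSTATS_INTERVAL": 60.0, "CLOSESPIDER_TIMEOUT": 0}
# bench's default_settings, copied from the command above.
BENCH_DEFAULTS = {"LOG_LEVEL": "INFO", "LOGSTATS_INTERVAL": 1, "CLOSESPIDER_TIMEOUT": 10}


def effective_settings(layers):
    """Merge (priority_name, mapping) layers; a key is overwritten only by an
    equal or higher priority, whatever order the layers are applied in."""
    values, priorities = {}, {}
    for name, mapping in layers:
        priority = PRIORITIES[name]
        for key, value in mapping.items():
            if priority >= priorities.get(key, -1):
                values[key] = value
                priorities[key] = priority
    return values


print(effective_settings([("default", GLOBAL_DEFAULTS), ("command", BENCH_DEFAULTS)]))
# {'LOG_LEVEL': 'INFO', 'LOGSTATS_INTERVAL': 1, 'CLOSESPIDER_TIMEOUT': 10}
```

Adding a ("cmdline", {"LOG_LEVEL": "WARNING"}) layer, as scrapy bench -L WARNING would, makes WARNING win, because cmdline (40) outranks command (10).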

2) check

```python
# scrapy/commands/check.py (excerpt)
# TextTestResult used below is a small subclass of unittest.TextTestResult
# defined in this same module; it adds printSummary().
import time
from collections import defaultdict
from unittest import TextTestRunner

from scrapy.commands import ScrapyCommand
from scrapy.contracts import ContractsManager
from scrapy.utils.conf import build_component_list
from scrapy.utils.misc import load_object, set_environ


class Command(ScrapyCommand):
    requires_project = True
    default_settings = {'LOG_ENABLED': False}

    def syntax(self):
        return "[options] <spider>"

    def short_desc(self):
        return "Check spider contracts"

    def add_options(self, parser):
        ScrapyCommand.add_options(self, parser)
        parser.add_option("-l", "--list", dest="list", action="store_true",
                          help="only list contracts, without checking them")
        parser.add_option("-v", "--verbose", dest="verbose", default=False, action='store_true',
                          help="print contract tests for all spiders")

    def run(self, args, opts):
        # load contracts
        contracts = build_component_list(self.settings.getwithbase('SPIDER_CONTRACTS'))
        conman = ContractsManager(load_object(c) for c in contracts)
        runner = TextTestRunner(verbosity=2 if opts.verbose else 1)
        result = TextTestResult(runner.stream, runner.descriptions, runner.verbosity)

        # contract requests
        contract_reqs = defaultdict(list)

        spider_loader = self.crawler_process.spider_loader

        with set_environ(SCRAPY_CHECK='true'):
            for spidername in args or spider_loader.list():
                spidercls = spider_loader.load(spidername)
                spidercls.start_requests = lambda s: conman.from_spider(s, result)

                tested_methods = conman.tested_methods_from_spidercls(spidercls)
                if opts.list:
                    for method in tested_methods:
                        contract_reqs[spidercls.name].append(method)
                elif tested_methods:
                    self.crawler_process.crawl(spidercls)

            # start checks
            if opts.list:
                for spider, methods in sorted(contract_reqs.items()):
                    if not methods and not opts.verbose:
                        continue
                    print(spider)
                    for method in sorted(methods):
                        print(f' * {method}')
            else:
                start = time.time()
                self.crawler_process.start()
                stop = time.time()

                result.printErrors()
                result.printSummary(start, stop)
                self.exitcode = int(not result.wasSuccessful())
```

It adds two options:

- -l, --list: list the methods that carry contracts without checking them.
- -v, --verbose: print contract tests for all spiders, and raise the test runner's verbosity to 2.

Without --list, the contracts are run, and the exit code is set to 1 if any check fails.
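The --list branch is plain bookkeeping. The hypothetical function below reproduces it on a {spider name: [methods]} input, so the output format is easy to see without a project. As in the original loop, a spider with no tested methods never gets an entry in contract_reqs:

```python
from collections import defaultdict


def format_contract_list(tested):
    """Return the lines `scrapy check --list` would print (sketch).

    tested: hypothetical mapping {spider name: [method names with contracts]};
    the real command derives it from ContractsManager.
    """
    contract_reqs = defaultdict(list)
    for spider, methods in tested.items():
        for method in methods:
            contract_reqs[spider].append(method)
    lines = []
    for spider, methods in sorted(contract_reqs.items()):
        lines.append(spider)
        for method in sorted(methods):
            lines.append(f" * {method}")
    return lines


for line in format_contract_list({"quotes": ["parse_author", "parse"], "books": ["parse"], "empty": []}):
    print(line)
```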

3) crawl

```python
# scrapy/commands/crawl.py (excerpt)
from scrapy.commands import BaseRunSpiderCommand
from scrapy.exceptions import UsageError


class Command(BaseRunSpiderCommand):

    requires_project = True

    def syntax(self):
        return "[options] <spider>"

    def short_desc(self):
        return "Run a spider"

    def run(self, args, opts):
        if len(args) < 1:
            raise UsageError()
        elif len(args) > 1:
            raise UsageError("running 'scrapy crawl' with more than one spider is not supported")
        spname = args[0]

        crawl_defer = self.crawler_process.crawl(spname, **opts.spargs)

        if getattr(crawl_defer, 'result', None) is not None and issubclass(crawl_defer.result.type, Exception):
            self.exitcode = 1
        else:
            self.crawler_process.start()

            if (
                self.crawler_process.bootstrap_failed
                or hasattr(self.crawler_process, 'has_exception') and self.crawler_process.has_exception
            ):
                self.exitcode = 1
```

Runs the Spider whose Spider.name matches the given argument.

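Example: scrapy crawl ***

The difference from runspider is that crawl looks the spider up by name inside a project. runspider instead takes a .py file and runs a Spider class defined in that file, without needing a project.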
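The following minimal sketch shows the argument handling in crawl's run(), with a plain dict standing in for the project's spider loader. UsageError, resolve_spider and the registry are local stand-ins invented for this example:

```python
class UsageError(Exception):
    """Local stand-in for scrapy.exceptions.UsageError (illustration only)."""


def resolve_spider(args, registry):
    """Validate `scrapy crawl` positional args and look the spider up by name.

    registry is a hypothetical dict {spider name: spider class} playing the
    role of the project's spider loader.
    """
    if len(args) < 1:
        raise UsageError("missing spider name")
    if len(args) > 1:
        raise UsageError("running 'scrapy crawl' with more than one spider is not supported")
    name = args[0]
    if name not in registry:
        raise KeyError(f"Spider not found: {name}")
    return registry[name]


class QuotesSpider:
    name = "quotes"


print(resolve_spider(["quotes"], {QuotesSpider.name: QuotesSpider}).__name__)  # QuotesSpider
```

Options such as -a have already been consumed by the option parser, so args holds only the positional spider name.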

4) edit

```python
# scrapy/commands/edit.py (excerpt)
import os
import sys

from scrapy.commands import ScrapyCommand
from scrapy.exceptions import UsageError


class Command(ScrapyCommand):

    requires_project = True
    default_settings = {'LOG_ENABLED': False}

    def syntax(self):
        return "<spider>"

    def short_desc(self):
        return "Edit spider"

    def long_desc(self):
        return ("Edit a spider using the editor defined in the EDITOR environment"
                " variable or else the EDITOR setting")

    def _err(self, msg):
        sys.stderr.write(msg + os.linesep)
        self.exitcode = 1

    def run(self, args, opts):
        if len(args) != 1:
            raise UsageError()

        editor = self.settings['EDITOR']
        try:
            spidercls = self.crawler_process.spider_loader.load(args[0])
        except KeyError:
            return self._err(f"Spider not found: {args[0]}")

        sfile = sys.modules[spidercls.__module__].__file__
        sfile = sfile.replace('.pyc', '.py')
        self.exitcode = os.system(f'{editor} "{sfile}"')
```

The default EDITOR setting (scrapy/settings/default_settings.py):

```python
EDITOR = 'vi'
if sys.platform == 'win32':
    EDITOR = '%s -m idlelib.idle'
```

On Linux, the spider file is therefore opened in vi by default. Because the command reads the EDITOR setting, another editor can be configured in settings.py or with -s EDITOR=....
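The command string that edit passes to os.system() can be reproduced in isolation. The minimal sketch below uses a hypothetical helper, edit_command. It calls shlex.split only to show how a POSIX shell would tokenize the result:

```python
import shlex


def edit_command(editor, module_file):
    """Build the shell command string the way `scrapy edit` does (sketch)."""
    # If the module was loaded from a .pyc, point the editor at the .py source.
    source = module_file.replace(".pyc", ".py")
    # Double quotes keep a path containing spaces together as one argument.
    return f'{editor} "{source}"'


cmd = edit_command("vi", "/home/me/my project/spiders/quotes.pyc")
print(cmd)               # vi "/home/me/my project/spiders/quotes.py"
print(shlex.split(cmd))  # ['vi', '/home/me/my project/spiders/quotes.py']
```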

5) fetch

```python
# scrapy/commands/fetch.py (excerpt)
import sys

from w3lib.url import is_url

from scrapy.commands import ScrapyCommand
from scrapy.exceptions import UsageError
from scrapy.http import Request
from scrapy.utils.datatypes import SequenceExclude
from scrapy.utils.spider import DefaultSpider, spidercls_for_request


class Command(ScrapyCommand):

    requires_project = False

    def syntax(self):
        return "[options] <url>"

    def short_desc(self):
        return "Fetch a URL using the Scrapy downloader"

    def long_desc(self):
        return (
            "Fetch a URL using the Scrapy downloader and print its content"
            " to stdout. You may want to use --nolog to disable logging"
        )

    def add_options(self, parser):
        ScrapyCommand.add_options(self, parser)
        parser.add_option("--spider", dest="spider", help="use this spider")
        parser.add_option("--headers", dest="headers", action="store_true",
                          help="print response HTTP headers instead of body")
        parser.add_option("--no-redirect", dest="no_redirect", action="store_true", default=False,
                          help="do not handle HTTP 3xx status codes and print response as-is")

    def _print_headers(self, headers, prefix):
        for key, values in headers.items():
            for value in values:
                self._print_bytes(prefix + b' ' + key + b': ' + value)

    def _print_response(self, response, opts):
        if opts.headers:
            self._print_headers(response.request.headers, b'>')
            print('>')
            self._print_headers(response.headers, b'<')
        else:
            self._print_bytes(response.body)

    def _print_bytes(self, bytes_):
        sys.stdout.buffer.write(bytes_ + b'\n')

    def run(self, args, opts):
        if len(args) != 1 or not is_url(args[0]):
            raise UsageError()
        request = Request(args[0], callback=self._print_response,
                          cb_kwargs={"opts": opts}, dont_filter=True)
        # by default, let the framework handle redirects,
        # i.e. command handles all codes except 3xx
        if not opts.no_redirect:
            request.meta['handle_httpstatus_list'] = SequenceExclude(range(300, 400))
        else:
            request.meta['handle_httpstatus_all'] = True

        spidercls = DefaultSpider
        spider_loader = self.crawler_process.spider_loader
        if opts.spider:
            spidercls = spider_loader.load(opts.spider)
        else:
            spidercls = spidercls_for_request(spider_loader, request, spidercls)
        self.crawler_process.crawl(spidercls, start_requests=lambda: [request])
        self.crawler_process.start()
```

Example: scrapy fetch https://www.ifeng.com/

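It adds three options:

- --spider: use the spider with this name (Spider.name) instead of the one matched to the URL
- --headers: print the request and response HTTP headers instead of the response body
- --no-redirect: do not follow HTTP redirects (status codes in the range [300, 400)) and print the response as-is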
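The redirect handling relies on a container that reports membership for everything except the given range. The small re-implementation below shows which status codes reach the fetch callback. It was written for illustration and is modelled on scrapy.utils.datatypes.SequenceExclude; fetch_meta is a hypothetical helper:

```python
class SequenceExclude:
    """Contains every item that is NOT in `seq` (re-implementation for illustration)."""

    def __init__(self, seq):
        self.seq = seq

    def __contains__(self, item):
        return item not in self.seq


def fetch_meta(no_redirect):
    """Request.meta entries that fetch sets, depending on --no-redirect (sketch)."""
    if not no_redirect:
        # The callback receives every status except 3xx, which stay with the
        # redirect middleware.
        return {"handle_httpstatus_list": SequenceExclude(range(300, 400))}
    # --no-redirect: the callback receives every status code, 3xx included.
    return {"handle_httpstatus_all": True}


allowed = fetch_meta(no_redirect=False)["handle_httpstatus_list"]
print(404 in allowed, 302 in allowed)  # True False
```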

6) genspider

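Source code omitted.

Example: scrapy genspider ***

It adds the following options:

- -l, --list: list the available templates; Scrapy ships four by default: basic.tmpl, crawl.tmpl, csvfeed.tmpl and xmlfeed.tmpl
- -e, --edit: open the spider in the editor after creating it
- -d, --dump: print the given template to stdout
- -t, --template: use the given template
- --force: overwrite the spider if it already exists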
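genspider fills a template with the spider name and domain. The minimal sketch below uses string.Template and assumes a simplified template with $classname, $name and $domain placeholders. The templates shipped with Scrapy differ in detail, and the class-name rule is an assumption:

```python
import string

# Simplified, hypothetical template; not the basic.tmpl shipped with Scrapy.
BASIC_TMPL = '''import scrapy


class $classname(scrapy.Spider):
    name = "$name"
    allowed_domains = ["$domain"]
    start_urls = ["https://$domain/"]

    def parse(self, response):
        pass
'''


def render_spider(template, name, domain):
    """Fill the placeholders; e.g. "news_site" -> class NewsSiteSpider (assumed rule)."""
    classname = "".join(part.capitalize() for part in name.split("_")) + "Spider"
    return string.Template(template).substitute(classname=classname, name=name, domain=domain)


print(render_spider(BASIC_TMPL, "ifeng", "www.ifeng.com"))
```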

7) list

```python
# scrapy/commands/list.py (excerpt)
from scrapy.commands import ScrapyCommand


class Command(ScrapyCommand):

    requires_project = True
    default_settings = {'LOG_ENABLED': False}

    def short_desc(self):
        return "List available spiders"

    def run(self, args, opts):
        for s in sorted(self.crawler_process.spider_loader.list()):
            print(s)
```

Example: scrapy list

Prints the Spider.name of every spider in the project, sorted alphabetically.

8) parse

Fetches a URL and parses its content with a spider.

9) runspider

See crawl above for details.

10) settings

Shows Scrapy settings values (the defaults when run outside a project).

Example: scrapy settings --getint LOGSTATS_INTERVAL
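Settings passed on the command line arrive as strings, which is why typed getters such as --getint exist. The simplified, hypothetical getter class below illustrates the conversion. It is not Scrapy's BaseSettings, and the accepted boolean spellings are an assumption:

```python
class SimpleSettings(dict):
    """Hypothetical, simplified settings getter (not Scrapy's BaseSettings)."""

    def getint(self, name, default=0):
        # "60" (e.g. from -s LOGSTATS_INTERVAL=60) becomes 60.
        return int(self.get(name, default))

    def getbool(self, name, default=False):
        value = self.get(name, default)
        try:
            # "0"/"1", 0/1 and real booleans go through int().
            return bool(int(value))
        except ValueError:
            if value in ("True", "true"):
                return True
            if value in ("False", "false"):
                return False
            raise ValueError(f"Unsupported value for boolean setting: {value!r}")


s = SimpleSettings({"LOGSTATS_INTERVAL": "60", "LOG_ENABLED": "false"})
print(s.getint("LOGSTATS_INTERVAL"))  # 60
print(s.getbool("LOG_ENABLED"))       # False
```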

11) shell

Opens an interactive shell for debugging the scraping of a URL.

Example: scrapy shell https://www.ifeng.com/

12) startproject

Creates a Scrapy project.

Example: scrapy startproject XXX

It essentially copies the project template from the templates directory of the scrapy package into the target folder.
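Here is a simplified sketch of that copy step. It assumes a template tree with top-level files, a placeholder package directory named module, and *.tmpl files containing $project_name placeholders. start_project is a hypothetical helper, and the real command does more, such as validating the project name:

```python
import shutil
import string
from pathlib import Path


def start_project(templates_dir, target_dir, project_name):
    """Copy a template tree and fill it in (simplified, hypothetical sketch).

    1. copy the whole template tree to target_dir (which must not exist yet);
    2. rename the placeholder package directory "module" to the project name;
    3. render every *.tmpl file with string.Template and drop the .tmpl suffix.
    """
    target = Path(target_dir)
    shutil.copytree(templates_dir, target)
    (target / "module").rename(target / project_name)
    for tmpl in list(target.rglob("*.tmpl")):
        text = string.Template(tmpl.read_text()).substitute(project_name=project_name)
        tmpl.with_suffix("").write_text(text)  # "settings.py.tmpl" -> "settings.py"
        tmpl.unlink()
    return target
```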

13) version

Example: scrapy version --verbose

Shows the version of Scrapy, together with Twisted, Python and platform information.
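Part of that report can be reproduced with the standard library alone. The minimal sketch below uses importlib.metadata and platform. version_info is a hypothetical helper, and it reports missing packages as "not installed":

```python
import platform
from importlib import metadata


def package_version(dist_name):
    # Installed distribution version, or a marker when it is missing.
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return "not installed"


def version_info():
    """Roughly the kind of table `scrapy version --verbose` prints (sketch)."""
    return {
        "Scrapy": package_version("Scrapy"),
        "Twisted": package_version("Twisted"),
        "Python": platform.python_version(),
        "Platform": platform.platform(),
    }


for name, value in version_info().items():
    print(f"{name:<10}: {value}")
```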

14) view

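```python
# scrapy/commands/view.py (excerpt)
from scrapy.commands import fetch
from scrapy.utils.response import open_in_browser


class Command(fetch.Command):

    def short_desc(self):
        return "Open URL in browser, as seen by Scrapy"

    def long_desc(self):
        return "Fetch a URL using the Scrapy downloader and show its contents in a browser"

    def add_options(self, parser):
        super().add_options(parser)
        parser.remove_option("--headers")

    def _print_response(self, response, opts):
        open_in_browser(response)
```

Fetches the URL with Scrapy's downloader and opens the response in a browser, showing the page as Scrapy sees it. Usage is the same as fetch, except that the --headers option is removed.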

Example: scrapy view https://www.ifeng.com/
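The idea behind open_in_browser can be sketched with tempfile and webbrowser. The body is written to a temporary .html file, with a <base> tag inserted so that relative links resolve against the original URL. open_html_in_browser is a hypothetical helper. Its opener parameter exists only so the browser call can be replaced in tests:

```python
import tempfile
import webbrowser


def open_html_in_browser(body: bytes, base_url: str, opener=webbrowser.open) -> str:
    """Write HTML to a temp file and open it (hypothetical sketch).

    A <base> tag is inserted right after <head> so that relative links and
    assets point at base_url instead of the local file.
    """
    if b"<base" not in body:
        body = body.replace(b"<head>", f'<head><base href="{base_url}">'.encode(), 1)
    with tempfile.NamedTemporaryFile(suffix=".html", delete=False) as f:
        f.write(body)
        path = f.name
    opener(f"file://{path}")
    return path
```

Called with the default opener, this launches the system browser. Passing a different callable (for example, list.append) records the URL instead.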
