I. Getting Started: Operating a Kubernetes Cluster

1. Deploy a Tomcat

kubectl create deployment tomcat6 --image=tomcat:6.0.53-jre8
kubectl get all

[root@k8s-node1 k8s]# kubectl get all
NAME                           READY   STATUS              RESTARTS   AGE
pod/tomcat6-5f7ccf4cb9-djp52   0/1     ContainerCreating   0          23s

NAME                 TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
service/kubernetes   ClusterIP   10.96.0.1    <none>        443/TCP   15m

NAME                      READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/tomcat6   0/1     1            0           23s

NAME                                 DESIRED   CURRENT   READY   AGE
replicaset.apps/tomcat6-5f7ccf4cb9   1         1         0       23s

kubectl get all -o wide

[root@k8s-node1 k8s]# kubectl get all -o wide
NAME                           READY   STATUS    RESTARTS   AGE    IP           NODE        NOMINATED NODE   READINESS GATES
pod/tomcat6-5f7ccf4cb9-djp52   1/1     Running   0          100s   10.244.1.2   k8s-node2   <none>           <none>

NAME                 TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE   SELECTOR
service/kubernetes   ClusterIP   10.96.0.1    <none>        443/TCP   16m   <none>

NAME                      READY   UP-TO-DATE   AVAILABLE   AGE    CONTAINERS   IMAGES               SELECTOR
deployment.apps/tomcat6   1/1     1            1           100s   tomcat       tomcat:6.0.53-jre8   app=tomcat6

NAME                                 DESIRED   CURRENT   READY   AGE    CONTAINERS   IMAGES               SELECTOR
replicaset.apps/tomcat6-5f7ccf4cb9   1         1         1       100s   tomcat       tomcat:6.0.53-jre8   app=tomcat6,pod-template-hash=5f7ccf4cb9

[root@k8s-node1 k8s]# docker images

REPOSITORY                                                                    TAG             IMAGE ID       CREATED       SIZE
registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy                v1.17.3         ae853e93800d   2 years ago   116MB
registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver            v1.17.3         90d27391b780   2 years ago   171MB
registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager   v1.17.3         b0f1517c1f4b   2 years ago   161MB
registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler            v1.17.3         d109c0821a2b   2 years ago   94.4MB
registry.cn-hangzhou.aliyuncs.com/google_containers/coredns                   1.6.5           70f311871ae1   2 years ago   41.6MB
registry.cn-hangzhou.aliyuncs.com/google_containers/etcd                      3.4.3-0         303ce5db0e90   2 years ago   288MB
quay.io/coreos/flannel                                                        v0.11.0-amd64   ff281650a721   3 years ago   52.6MB
registry.cn-hangzhou.aliyuncs.com/google_containers/pause                     3.1             da86e6ba6ca1   4 years ago   742kB

[root@k8s-node1 k8s]# kubectl get pods
NAME                       READY   STATUS    RESTARTS   AGE
tomcat6-5f7ccf4cb9-djp52   1/1     Running   0          4m42s

[root@k8s-node1 k8s]# kubectl get pods --all-namespaces
NAMESPACE     NAME                                READY   STATUS    RESTARTS   AGE
default       tomcat6-5f7ccf4cb9-djp52            1/1     Running   0          5m13s
kube-system   coredns-7f9c544f75-hgr2q            1/1     Running   0          20m
kube-system   coredns-7f9c544f75-l7bsw            1/1     Running   0          20m
kube-system   etcd-k8s-node1                      1/1     Running   0          20m
kube-system   kube-apiserver-k8s-node1            1/1     Running   0          20m
kube-system   kube-controller-manager-k8s-node1   1/1     Running   0          20m
kube-system   kube-flannel-ds-amd64-5xrfs         1/1     Running   0          17m
kube-system   kube-flannel-ds-amd64-hncrh         1/1     Running   0          19m
kube-system   kube-flannel-ds-amd64-n7rgv         1/1     Running   0          14m
kube-system   kube-proxy-2mkxq                    1/1     Running   0          14m
kube-system   kube-proxy-wvkq5                    1/1     Running   0          17m
kube-system   kube-proxy-xzdvj                    1/1     Running   0          20m
kube-system   kube-scheduler-k8s-node1            1/1     Running   0          20m

[root@k8s-node1 k8s]# kubectl get pods -o wide
NAME                       READY   STATUS    RESTARTS   AGE     IP           NODE        NOMINATED NODE   READINESS GATES
tomcat6-5f7ccf4cb9-djp52   1/1     Running   0          5m32s   10.244.1.2   k8s-node2   <none>           <none>

This Tomcat Pod is running on k8s-node2.

Switch to the k8s-node2 machine:

[root@k8s-node2 k8s]# docker images
REPOSITORY                                                       TAG             IMAGE ID       CREATED       SIZE
registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy   v1.17.3         ae853e93800d   2 years ago   116MB
quay.io/coreos/flannel                                           v0.11.0-amd64   ff281650a721   3 years ago   52.6MB
registry.cn-hangzhou.aliyuncs.com/google_containers/pause        3.1             da86e6ba6ca1   4 years ago   742kB
tomcat                                                           6.0.53-jre8     49ab0583115a   5 years ago   290MB

[root@k8s-node2 k8s]# docker ps
CONTAINER ID   IMAGE                                                            COMMAND                  CREATED          STATUS          PORTS   NAMES
e73d66dee3a3   tomcat                                                           "catalina.sh run"        3 minutes ago    Up 2 minutes            k8s_tomcat_tomcat6-5f7ccf4cb9-djp52_default_684c3cff-109b-4f9a-a4b7-6580dbdb1282_0
a1f42944d84f   registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1    "/pause"                 4 minutes ago    Up 3 minutes            k8s_POD_tomcat6-5f7ccf4cb9-djp52_default_684c3cff-109b-4f9a-a4b7-6580dbdb1282_0
37d712cfc4cb   ff281650a721                                                     "/opt/bin/flanneld -…"   14 minutes ago   Up 14 minutes           k8s_kube-flannel_kube-flannel-ds-amd64-5xrfs_kube-system_a193d438-95dd-469f-87d2-acd64929dd7c_0
6435f73b8c70   registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy   "/usr/local/bin/kube…"   15 minutes ago   Up 15 minutes           k8s_kube-proxy_kube-proxy-wvkq5_kube-system_fc4cb6f6-368b-49c9-bee1-1c14ac2f959d_0
3493aca1314a   registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1    "/pause"                 15 minutes ago   Up 15 minutes           k8s_POD_kube-flannel-ds-amd64-5xrfs_kube-system_a193d438-95dd-469f-87d2-acd64929dd7c_0
db0736bb5a92   registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1    "/pause"                 15 minutes ago   Up 15 minutes           k8s_POD_kube-proxy-wvkq5_kube-system_fc4cb6f6-368b-49c9-bee1-1c14ac2f959d_0

The tomcat image has been pulled to this node, and a container created from it is indeed running.

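2. Expose Tomcat for access

kubectl expose deployment tomcat6 --port=80 --target-port=8080 --type=NodePort

The Service listens on port 80 and forwards traffic to port 8080 of the container inside the Pod (the container here is a Docker container). Because the type is NodePort, the Service is also reachable through a port opened on every node.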

[root@k8s-node1 ~]# kubectl get svc
NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
kubernetes   ClusterIP   10.96.0.1       <none>        443/TCP        3d
tomcat6      NodePort    10.96.100.191   <none>        80:32253/TCP   3m23s
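In the PORT(S) column of the tomcat6 Service, 80:32253/TCP pairs the Service port (80) with the node port (32253) that Kubernetes assigned. Below is a minimal sketch of pulling the node port out of such a string. The function name node_port_from_ports is made up for illustration, and <node-ip> in the usage comment is a placeholder for the address of any cluster node.

```shell
# Hypothetical helper: extract the node port from a NodePort PORT(S) value
# such as "80:32253/TCP". Only meaningful for values that contain a colon.
node_port_from_ports() {
  local ports="$1"
  local after_colon="${ports#*:}"      # drop the Service port and the colon
  printf '%s\n' "${after_colon%%/*}"   # drop the "/TCP" protocol suffix
}

# Usage against a live cluster (not run here):
#   kubectl get svc tomcat6 -o jsonpath='{.spec.ports[0].nodePort}'
#   curl "http://<node-ip>:$(node_port_from_ports '80:32253/TCP')/"
```

Reading the value with the jsonpath query is usually the more robust option; the string helper is only handy when all you have is the table output.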

kubectl get deployment

3. Upgrade the application

kubectl set image (run it with --help to see the usage)
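A minimal sketch of a rolling upgrade with kubectl set image, assuming the container inside the tomcat6 Deployment is named tomcat (which is what the CONTAINERS column of kubectl get all -o wide reported earlier). The wrapper name upgrade_tomcat6 and the image tag passed to it are placeholders, not something taken from this walkthrough.

```shell
# Hypothetical wrapper: point the "tomcat" container at a new image,
# then wait for the rollout to finish.
upgrade_tomcat6() {
  local new_image="$1"
  # Syntax: kubectl set image deployment/<name> <container>=<image>
  kubectl set image deployment/tomcat6 "tomcat=${new_image}" || return 1
  # Blocks until the new ReplicaSet is fully rolled out (or the rollout fails).
  kubectl rollout status deployment/tomcat6
}

# Usage (placeholder tag):
#   upgrade_tomcat6 tomcat:<new-tag>
# Roll back if the new version misbehaves:
#   kubectl rollout undo deployment/tomcat6
```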

4. Scale out

kubectl scale --replicas=3 deployment tomcat6

With multiple replicas running, tomcat6 can be reached through the exposed port on any node.

[root@k8s-node1 ~]# kubectl scale --replicas=3 deployment tomcat6
deployment.apps/tomcat6 scaled

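5. Delete

The general pattern (nginx and nginx-service are example names; the session below deletes tomcat6):

kubectl get all
kubectl delete deploy/nginx
kubectl delete service/nginx-service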

[root@k8s-node1 ~]# kubectl get all
NAME                           READY   STATUS    RESTARTS   AGE
pod/tomcat6-5f7ccf4cb9-gjcbp   1/1     Running   0          2m1s

NAME                 TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
service/kubernetes   ClusterIP   10.96.0.1       <none>        443/TCP        3d
service/tomcat6      NodePort    10.96.100.191   <none>        80:32253/TCP   40m

NAME                      READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/tomcat6   1/1     1            1           3d

NAME                                 DESIRED   CURRENT   READY   AGE
replicaset.apps/tomcat6-5f7ccf4cb9   1         1         1       3d

[root@k8s-node1 ~]# kubectl delete deployment.apps/tomcat6
deployment.apps "tomcat6" deleted

[root@k8s-node1 ~]# kubectl get all
NAME                           READY   STATUS        RESTARTS   AGE
pod/tomcat6-5f7ccf4cb9-gjcbp   0/1     Terminating   0          2m47s

NAME                 TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
service/kubernetes   ClusterIP   10.96.0.1       <none>        443/TCP        3d
service/tomcat6      NodePort    10.96.100.191   <none>        80:32253/TCP   41m

[root@k8s-node1 ~]# kubectl get pods
No resources found in default namespace.

[root@k8s-node1 ~]# kubectl delete service/tomcat6
service "tomcat6" deleted

[root@k8s-node1 ~]# kubectl get all
NAME                 TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
service/kubernetes   ClusterIP   10.96.0.1    <none>        443/TCP   3d

YAML file:

kubectl create deployment tomcat6 --image=tomcat:6.0.53-jre8 --dry-run -o yaml > tomcat6.yaml

[root@k8s-node1 k8s]# cat tomcat6.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: tomcat6
  name: tomcat6
spec:
  replicas: 1
  selector:
    matchLabels:
      app: tomcat6
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: tomcat6
    spec:
      containers:
      - image: tomcat:6.0.53-jre8
        name: tomcat
        resources: {}
status: {}

After removing the unneeded fields (replicas is also changed to 3):

[root@k8s-node1 k8s]# cat tomcat6.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: tomcat6
  name: tomcat6
spec:
  replicas: 3
  selector:
    matchLabels:
      app: tomcat6
  template:
    metadata:
      labels:
        app: tomcat6
    spec:
      containers:
      - image: tomcat:6.0.53-jre8
        name: tomcat

Create the resources from the definition in tomcat6.yaml:

[root@k8s-node1 k8s]# kubectl apply -f tomcat6.yaml
deployment.apps/tomcat6 configured

[root@k8s-node1 k8s]# kubectl get pods
NAME                       READY   STATUS              RESTARTS   AGE
tomcat6-5f7ccf4cb9-452xr   1/1     Running             0          32s
tomcat6-5f7ccf4cb9-b599n   0/1     ContainerCreating   0          3s
tomcat6-5f7ccf4cb9-zv9bp   0/1     ContainerCreating   0          3s

[root@k8s-node1 k8s]# kubectl get pods
NAME                       READY   STATUS    RESTARTS   AGE
tomcat6-5f7ccf4cb9-452xr   1/1     Running   0          41s
tomcat6-5f7ccf4cb9-b599n   1/1     Running   0          12s
tomcat6-5f7ccf4cb9-zv9bp   1/1     Running   0          12s

View the image information of the running tomcat:

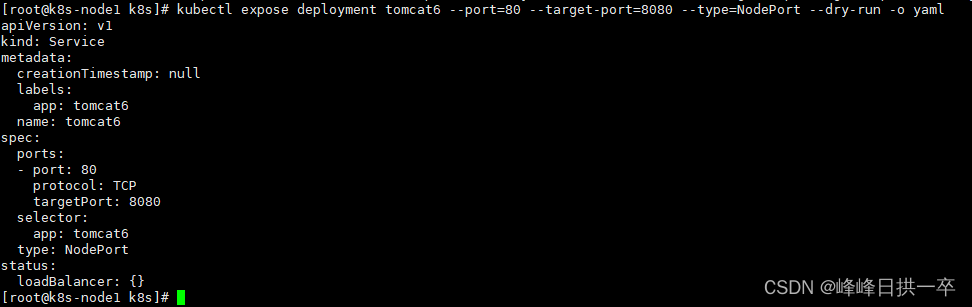

kubectl expose deployment tomcat6 --port=80 --target-port=8080 --type=NodePort --dry-run -o yaml
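With --dry-run -o yaml, kubectl prints the Service definition instead of creating it. Roughly, the manifest described by those flags looks like the sketch below. It is written by hand from the flags rather than copied from real output, and the helper name and output path are placeholders.

```shell
# Hypothetical helper: write a hand-written Service manifest that mirrors
# the flags of the "kubectl expose" command to the given path.
write_tomcat6_service() {
  cat > "$1" <<'EOF'
apiVersion: v1
kind: Service
metadata:
  labels:
    app: tomcat6
  name: tomcat6
spec:
  type: NodePort
  selector:
    app: tomcat6        # matches the Pod label set by the tomcat6 Deployment
  ports:
  - port: 80            # port of the Service inside the cluster
    targetPort: 8080    # port Tomcat listens on in the container
    protocol: TCP
EOF
}

# Usage:
#   write_tomcat6_service tomcat6-service.yaml
#   kubectl apply -f tomcat6-service.yaml
```

Keeping the Service in a file next to tomcat6.yaml lets both be recreated with kubectl apply after a delete.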

View a specific Pod:

kubectl get pods tomcat6-5f7ccf4cb9-5vw9d

View the YAML of a specific Pod:

kubectl get pods tomcat6-5f7ccf4cb9-5vw9d -o yaml

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: "2022-08-23T10:28:33Z"
  generateName: tomcat6-5f7ccf4cb9-
  labels:
    app: tomcat6
    pod-template-hash: 5f7ccf4cb9
  name: tomcat6-5f7ccf4cb9-5vw9d
  namespace: default
  ownerReferences:
  - apiVersion: apps/v1
    blockOwnerDeletion: true
    controller: true
    kind: ReplicaSet
    name: tomcat6-5f7ccf4cb9
    uid: d726b79a-e7e2-44a9-b30c-bc9f7eb62d17
  resourceVersion: "149218"
  selfLink: /api/v1/namespaces/default/pods/tomcat6-5f7ccf4cb9-5vw9d
  uid: 3d491668-fd7d-40db-8b26-2a7b00f85b2d
spec:
  containers:
  - image: tomcat:6.0.53-jre8
    imagePullPolicy: IfNotPresent
    name: tomcat
    resources: {}
    terminationMessagePath: /dev/termination-log
    terminationMessagePolicy: File
    volumeMounts:
    - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
      name: default-token-bkfsg
      readOnly: true
  dnsPolicy: ClusterFirst
  enableServiceLinks: true
  nodeName: k8s-node3
  priority: 0
  restartPolicy: Always
  schedulerName: default-scheduler
  securityContext: {}
  serviceAccount: default
  serviceAccountName: default
  terminationGracePeriodSeconds: 30
  tolerations:
  - effect: NoExecute
    key: node.kubernetes.io/not-ready
    operator: Exists
    tolerationSeconds: 300
  - effect: NoExecute
    key: node.kubernetes.io/unreachable
    operator: Exists
    tolerationSeconds: 300
  volumes:
  - name: default-token-bkfsg
    secret:
      defaultMode: 420
      secretName: default-token-bkfsg
status:
  conditions:
  - lastProbeTime: null
    lastTransitionTime: "2022-08-23T10:28:33Z"
    status: "True"
    type: Initialized
  - lastProbeTime: null
    lastTransitionTime: "2022-09-13T06:03:42Z"
    status: "True"
    type: Ready
  - lastProbeTime: null
    lastTransitionTime: "2022-09-13T06:03:42Z"
    status: "True"
    type: ContainersReady
  - lastProbeTime: null
    lastTransitionTime: "2022-08-23T10:28:33Z"
    status: "True"
    type: PodScheduled
  containerStatuses:
  - containerID: docker://d5c6a56c7ec4ca5420a80a1be376cc9c05f0a6fa1fcff502ffa961549d11f2a1
    image: tomcat:6.0.53-jre8
    imageID: docker-pullable://tomcat@sha256:8c643303012290f89c6f6852fa133b7c36ea6fbb8eb8b8c9588a432beb24dc5d
    lastState:
      terminated:
        containerID: docker://1da4b737b845c463547c8ed56af9ee0eaba884769b4467f04f5b4f4590321cb2
        exitCode: 143
        finishedAt: "2022-08-29T11:09:06Z"
        reason: Error
        startedAt: "2022-08-29T10:27:18Z"
    name: tomcat
    ready: true
    restartCount: 6
    started: true
    state:
      running:
        startedAt: "2022-09-13T06:03:39Z"
  hostIP: 10.0.2.5
  phase: Running
  podIP: 10.244.2.24
  podIPs:
  - ip: 10.244.2.24
  qosClass: BestEffort
  startTime: "2022-08-23T10:28:33Z"

Write the Deployment definition as YAML into a file of your own:

kubectl create deployment tomcat6 --image=tomcat:6.0.53-jre8 --dry-run -o yaml > tomcat6.yaml

Create the resources from the definition in tomcat6.yaml:

kubectl apply -f tomcat6.yaml

View the Ingress definition:

cat ingress-tomcat6.yml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: web
spec:
  rules:
  - host: tomcat6.atguigu.com
    http:
      paths:
      - backend:
          serviceName: tomcat6
          servicePort: 80
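The extensions/v1beta1 Ingress API matches the v1.17 cluster used in this post, but it was removed in Kubernetes 1.22. On a newer cluster the same rule would have to be expressed with networking.k8s.io/v1. A sketch under that assumption, with a made-up helper name and file path:

```shell
# Hypothetical helper: write the same Ingress rule in the networking.k8s.io/v1
# schema (assumes a cluster at v1.19 or later, not the v1.17 cluster above).
write_tomcat6_ingress_v1() {
  cat > "$1" <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: web
spec:
  rules:
  - host: tomcat6.atguigu.com
    http:
      paths:
      - path: /
        pathType: Prefix        # required in networking.k8s.io/v1
        backend:
          service:
            name: tomcat6       # replaces serviceName
            port:
              number: 80        # replaces servicePort
EOF
}

# Usage:
#   write_tomcat6_ingress_v1 ingress-tomcat6-v1.yml
#   kubectl apply -f ingress-tomcat6-v1.yml
```

Depending on the ingress controller installed, spec.ingressClassName may also need to be set; that detail is not covered by this walkthrough.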

Then use switch_hosts.exe to map the IP address to the domain name:

192.168.56.100 tomcat6.atguigu.com

Either of the following URLs reaches the corresponding Tomcat:

http://192.168.56.100:30846/

http://tomcat6.atguigu.com:30846/

II. Preparing the Environment for KubeSphere

Installing Helm 2

wget https://get.helm.sh/helm-v2.16.2-linux-amd64.tar.gz

# Extract:
tar -zxvf helm-v2.16.2-linux-amd64.tar.gz

# Move the helm binary from the extracted folder into /usr/local/bin/:
mv linux-amd64/helm /usr/local/bin/

# Check the helm version. As shown below, the client version is 2.16.2. The Helm server side (named Tiller) has not been deployed yet, so the command reports "could not find tiller":
[root@node1 ~]# helm version
Client: &version.Version{SemVer:"v2.16.2", GitCommit:"bbdfe5e7803a12bbdf97e94cd847859890cf4050", GitTreeState:"clean"}
Error: could not find tiller

Deploying Tiller

With the client installed, the next step is to create the ServiceAccount and the role binding.

Reference:

https://devopscube.com/kubernetes-api-access-service-account/

Step 1: Create service account in a namespace

We will create a service account in a custom namespace rather than the default namespace for demonstration purposes.

1. Create a 'devops-tools' namespace.

kubectl create namespace devops-tools

[root@k8s-node1 k8s]# kubectl create namespace devops-tools
namespace/devops-tools created

[root@k8s-node1 k8s]# kubectl get ns
NAME              STATUS   AGE
default           Active   9d
devops-tools      Active   3s
ingress-nginx     Active   5d19h
kube-node-lease   Active   9d
kube-public       Active   9d
kube-system       Active   9d

2. Create a service account named "api-service-account" in the devops-tools namespace.

kubectl create serviceaccount api-service-account -n devops-tools

[root@k8s-node1 k8s]# kubectl create serviceaccount api-service-account -n devops-tools
serviceaccount/api-service-account created

Step 2: Create a Cluster Role

Assuming that the service account needs access to resources across the entire cluster, we will create a cluster role with a list of allowed access.

Create a ClusterRole named api-cluster-role with the following manifest.

cat <<EOF | kubectl apply -f -
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: api-cluster-role
  namespace: devops-tools
rules:
  - apiGroups:
      - ""
      - apps
      - autoscaling
      - batch
      - extensions
      - policy
      - rbac.authorization.k8s.io
    resources:
      - pods
      - componentstatuses
      - configmaps
      - daemonsets
      - deployments
      - events
      - endpoints
      - horizontalpodautoscalers
      - ingresses    # RBAC rules use the plural resource name
      - jobs
      - limitranges
      - namespaces
      - nodes
      - persistentvolumes
      - persistentvolumeclaims
      - resourcequotas
      - replicasets
      - replicationcontrollers
      - serviceaccounts
      - services
    verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
EOF

Output:

clusterrole.rbac.authorization.k8s.io/api-cluster-role created

Step 3: Create a ClusterRole Binding

Now that we have the ClusterRole and the service account, they need to be mapped together.

Bind api-cluster-role to api-service-account using a ClusterRoleBinding.

cat <<EOF | kubectl apply -f -
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: api-cluster-role-binding
subjects:
- namespace: devops-tools
  kind: ServiceAccount
  name: api-service-account
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: api-cluster-role
EOF

Output:

clusterrolebinding.rbac.authorization.k8s.io/api-cluster-role-binding created

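Step 4: Validate service account access using kubectl

To validate the ClusterRole binding, we can use the can-i command to check API access on behalf of a service account in a specific namespace.

For example, the following command checks whether api-service-account in the devops-tools namespace can get pods.

kubectl auth can-i get pods --as=system:serviceaccount:devops-tools:api-service-account

Output:

yes

Here is another example, which checks whether the service account has permission to delete deployments.

kubectl auth can-i delete deployments --as=system:serviceaccount:devops-tools:api-service-account

Output:

yes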
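The two checks above can be repeated for several verbs in one go. A small sketch, assuming the same service account and namespace; the helper name check_sa_access is invented for illustration.

```shell
# Hypothetical helper: print the can-i answer for several verbs on one resource.
check_sa_access() {
  local sa="system:serviceaccount:devops-tools:api-service-account"
  local resource="$1"
  shift
  local verb answer
  for verb in "$@"; do
    # kubectl prints "yes" or "no"; a "no" may also come with a non-zero
    # exit code, which is deliberately ignored so the loop keeps going.
    answer="$(kubectl auth can-i "$verb" "$resource" --as="$sa" 2>/dev/null || true)"
    printf '%s %s: %s\n' "$verb" "$resource" "$answer"
  done
}

# Usage:
#   check_sa_access pods get list delete
#   check_sa_access deployments get delete
```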

Step 5: Validate service account access using API calls

To use a service account with HTTP calls, you need the token associated with the service account.

First, get the name of the secret associated with api-service-account:

kubectl get serviceaccount api-service-account -o=jsonpath='{.secrets[0].name}' -n devops-tools

Output:

api-service-account-token-hqgt2

Use the secret name to get the base64-decoded token. It will be used as the bearer token in API calls.

kubectl get secrets api-service-account-token-hqgt2 -o=jsonpath='{.data.token}' -n devops-tools | base64 -D
base64: invalid option -- 'D'
Try 'base64 --help' for more information.

[root@k8s-node1 k8s]# base64 --help
Usage: base64 [OPTION]... [FILE]
Base64 encode or decode FILE, or standard input, to standard output.

Mandatory arguments to long options are mandatory for short options too.
  -d, --decode          decode data
  -i, --ignore-garbage  when decoding, ignore non-alphabet characters
  -w, --wrap=COLS       wrap encoded lines after COLS character (default 76).
                          Use 0 to disable line wrapping

On this machine -D is rejected as an invalid option. base64 --help shows that the decode option here is -d (the -D spelling comes from the macOS version of base64). With -d the command works:

kubectl get secrets api-service-account-token-hqgt2 -o=jsonpath='{.data.token}' -n devops-tools | base64 -d

eyJhbGciOiJSUzI1NiIsImtpZCI6IjY5TE9GZGpwS2taSlpaNnlLbm9oSUp4U0sydTJfUWxmeEpqdlloSHg2TGsifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJkZXZvcHMtdG9vbHMiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlY3JldC5uYW1lIjoiYXBpLXNlcnZpY2UtYWNjb3VudC10b2tlbi1ocWd0MiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJhcGktc2VydmljZS1hY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiMDllYjkxNmQtYjQ3NS00MDZhLWI3YTktM2VkN2E4NTNmYjdlIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50OmRldm9wcy10b29sczphcGktc2VydmljZS1hY2NvdW50In0.Ywh5vIOD5KPGx9-rJKC4Jej6wz_Z6FsxbEsgCNVGY-gD29cgsPxIDleJiOzqvlV-jghB6ElPZLprYBoqs6yXtyMA7jOL_WBsYFq3uzMQnBp6mDb5-H18G2p8RJQfhEdvSuJqSp7SkcvL4G3XjMir7U3vRoNsBkas6cqrrMfRf7BIsCmK_0PqhXW8xANBzi1W4-Hbo3r7wInR4ClUq-J9-vYl48I2MhwwaOnzlKNqIbtCiBtRmVQasyqbWFksOlhXMIGdch5l5woaPhvZRWcPP11ZvN9Z-bRyXmShXJFNWxQyPOr5zLCvDRubcSC0ysvwE5EhXbVe2jxGX4BBINFX3w
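The decoded token can then be sent as a bearer token. The steps above describe that API call but do not show it, so here is a sketch with curl. The helper names are invented, the API server URL is read from the current kubeconfig, and -k skips TLS verification for brevity, which is an assumption suitable only for a lab cluster. The .secrets[0] lookup relies on the auto-created token Secret used in this post; Kubernetes 1.24 and later no longer create it automatically and offer kubectl create token instead.

```shell
# Hypothetical helper: fetch and decode the service account token.
sa_token() {
  local secret
  secret="$(kubectl get serviceaccount api-service-account -n devops-tools -o=jsonpath='{.secrets[0].name}')"
  # --decode is the long form of -d in GNU coreutils base64.
  kubectl get secret "$secret" -n devops-tools -o=jsonpath='{.data.token}' | base64 --decode
}

# Hypothetical helper: list pods in devops-tools through the REST API,
# authenticating as the service account.
list_pods_as_sa() {
  local server
  server="$(kubectl config view --minify -o jsonpath='{.clusters[0].cluster.server}')"
  curl -sk -H "Authorization: Bearer $(sa_token)" \
    "${server}/api/v1/namespaces/devops-tools/pods"
}

# Usage:
#   list_pods_as_sa
```

A token like the one printed above grants cluster-wide access through api-cluster-role, so it is better kept out of shell history and shared documents.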

For the following part, see: https://devopscube.com/install-configure-helm-kubernetes/

We will add the service account and the ClusterRoleBinding in a single YAML file.

Create a file named helm-rbac.yaml and copy the following content into it.

apiVersion: v1
kind: ServiceAccount
metadata:
  name: tiller
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: tiller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: tiller
    namespace: kube-system

[root@k8s-node1 helm]# kubectl apply -f helm-rbac.yaml
serviceaccount/tiller created
clusterrolebinding.rbac.authorization.k8s.io/tiller created

Initialize Helm: Deploy Tiller

The next step is to initialize helm. When helm is initialized, a deployment named tiller-deploy is created in the kube-system namespace.

Initialize helm with the following command.

helm init --service-account=tiller --history-max 300

You can check the tiller deployment in the kube-system namespace using kubectl.

[root@k8s-node1 k8s]# kubectl get deployment tiller-deploy -n kube-system
NAME            READY   UP-TO-DATE   AVAILABLE   AGE
tiller-deploy   1/1     1            1           46s

Deploy a Sample App Using Helm

Now let's deploy a sample nginx ingress using helm.

Run the helm install command to deploy an nginx ingress into the Kubernetes cluster. It downloads the nginx-ingress helm chart from the public GitHub helm chart repo. The install may fail with:

Error: unable to build kubernetes objects from release manifest: unable to recognize "": no matches for kind "Deployment" in version "extensions/v1beta1"

If you hit the error above, change the apiVersion in the Deployment files of the nginx-ingress chart:

grep -irl "extensions/v1beta1" nginx-ingress | grep deploy | xargs sed -i 's#extensions/v1beta1#apps/v1#g'
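Before an in-place sed runs across the chart, it can help to see which files are being touched. Below is a sketch of the same substitution as a small helper that reports each file it patches, assuming GNU grep and GNU sed as on the Linux hosts used here; the function name is invented. Note that apps/v1 Deployments also require spec.selector, so an older chart may need more than the apiVersion change.

```shell
# Hypothetical helper: switch Deployment templates under a chart directory
# from extensions/v1beta1 to apps/v1, reporting each file it patches.
fix_deployment_api_version() {
  local chart_dir="$1"
  # Same file selection as the one-liner above: files that mention the old
  # API version and whose path contains "deploy".
  grep -irl "extensions/v1beta1" "$chart_dir" | grep deploy | while IFS= read -r file; do
    echo "patching $file"
    sed -i 's#extensions/v1beta1#apps/v1#g' "$file"
  done
}

# Usage (with the chart unpacked into ./nginx-ingress):
#   fix_deployment_api_version nginx-ingress
```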
