一、 前言

本文简单介绍yarn安装,主要介绍spark1.5.2on yarn模式安装,仅供参考。

二、 yarn配置

1. yarne.xml

yarne.xml需要添加的配置如下:

| <property> <name>yarn.nodemanager.aux-services</name> <value>mapreduce_shuffle,spark_shuffle</value> </property> <property> <name>yarn.nodemanager.aux-services.spark_shuffle.class</name> <value>org.apache.spark.network.yarn.YarnShuffleService</value> </property> |

如果配置了yarn.log-aggregation-enable,一定要设置yarn.log.server.url,不然spark历史任务无法查看:

| <property> <name>yarn.log-aggregation-enable</name> <value>true</value> </property> <property> <name>yarn.log.server.url</name> <value>http://namenode1:19888/jobhistory/logs</value> </property> |

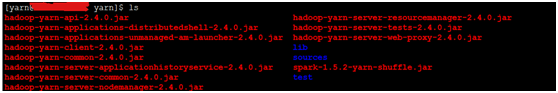

2. spark-1.5.2-yarn-shuffle.jar

将spark-1.5.2-yarn-shuffle.jar拷贝所有nodemanager的classpath下面:

3. 重启所有nodemanager

三、 Spark

1. 安装hadoop 客户端

添加如下内容到.bashrc

| JAVA_HOME=/home/spark/software/java HADOOP_HOME=/home/bigdata/software/hadoop SPARK_HOME=/home/spark/software/spark R_HOME=/home/spark/software/R PATH=$R_HOME/bin:$JAVA_HOME/bin:$HADOOP_HOME/bin:$SPARK_HOME/bin:$SPARK_HOME/sbin:$PATH export LANG JAVA_HOME HADOOP_HOME SPARK_HOME HIVE_HOME R_HOME PATH CLASSPATH

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop export YARN_CONF_DIR=/home/yarn/software/hadoop/etc/hadoop export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:$HADOOP_COMMON_LIB_NATIVE_DIR export PATH="/home/spark/software/anaconda/bin:$PATH" export HADOOP_COMMON_LIB_NATIVE_DIR=/home/bigdata/software/hadoop/lib/native/Linux-amd64-64 export HADOOP_CLIENT_OPTS="-Djava.library.path=$HADOOP_COMMON_LIB_NATIVE_DIR" export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:$HADOOP_COMMON_LIB_NATIVE_DIR |

2. 配置spark-defaults.conf

| # This is useful for setting default environmental settings. spark.serializer org.apache.spark.serializer.KryoSerializer spark.eventLog.enabled true #spark.eventLog.dir hdfs://SuningHadoop2/sparklogs/sparklogs1.4.0 spark.eventLog.dir hdfs:///sparklogs/sparklogshistorylogpre spark.driver.cores 1 spark.driver.memory 4096m # Tuning parameters spark.shuffle.consolidateFiles true spark.sql.shuffle.partitions 40 spark.default.parallelism 20 #spark.cores.max 2 spark.shuffle.consolidateFiles true

[spark@spark-pre1 conf]$ vim spark-defaults.conf # Default system properties included when running spark-submit. # This is useful for setting default environmental settings.

# Example: # spark.master spark://master:7077 # spark.eventLog.enabled true # spark.eventLog.dir hdfs://namenode:8021/directory spark.serializer org.apache.spark.serializer.KryoSerializer # spark.driver.memory 5g # spark.executor.extraJavaOptions -XX:+PrintGCDetails -Dkey=value -Dnumbers="one two three"

spark.eventLog.enabled true spark.eventLog.dir hdfs:///sparklogs/sparklogshistorylogpre spark.driver.cores 1 spark.driver.memory 4096m # Tuning parameters spark.shuffle.consolidateFiles true spark.sql.shuffle.partitions 40 spark.default.parallelism 20 spark.shuffle.consolidateFiles true |

3. 配置spark-env.sh

| export SPARK_LOCAL_DIRS=/data/spark/sparkLocalDir export HADOOP_CONF_DIR=/home/bigdata/software/hadoop/etc/hadoop export SPARK_LIBRARY_PATH=$SPARK_LIBRARY_PATH:/home/bigdata/software/hadoop/lib/native/Linux-amd64-64/ export SPARK_HISTORY_OPTS="-XX:+HeapDumpOnOutOfMemoryError -XX:HeapDumpPath=/home/spark/spark/logs/historyserver.hprof -verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps -Xloggc:/home/spark/spark/logs/historyserver.gc.log -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=5 -XX:GCLogFileSize=512M -Dcom.sun.management.jmxremote -Dcom.sun.management.jmxremote.port=19229 -Dcom.sun.management.jmxremote.ssl=false -Dcom.sun.management.jmxremote.authenticate=false -Dspark.history.fs.logDirectory=hdfs://SuningHadoop2/sparklogs/sparklogshistorylogpre -Dspark.history.ui.port=8078 |

4. 启动HistoryServer

start-history-server.sh

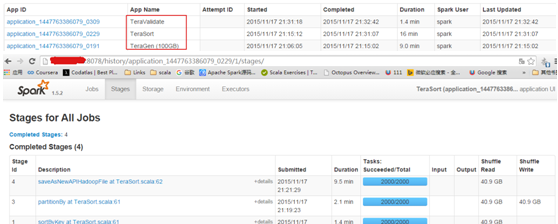

四、 测试脚本

| yarnmode=yarn-cluster mytime=`date '+%s'` datasizeG=100G inputfiles=/user/spark/TeraGen$mytime outputfiles=/user/spark/TeraSort$mytime Validatefiles=/user/spark/TeraValidate$mytime numexecutors=20 executormemory=8G export HADOOP_USER_NAME=spark sparkqueue=spark sparkconf="spark.default.parallelism=2000" classTeraGen=com.github.ehiggs.spark.terasort.TeraGen classTeraSort=com.github.ehiggs.spark.terasort.TeraSort classTeraValidate=com.github.ehiggs.spark.terasort.TeraValidate sourcejar=/home/spark/workspace/tersort/spark-terasort-1.0-SNAPSHOT-jar-with-dependencies.jar

spark-submit --master $yarnmode \ --supervise \ --num-executors $numexecutors \ --executor-memory $executormemory \ --queue $sparkqueue \ --conf $sparkconf \ --class $classTeraGen $sourcejar $datasizeG $inputfiles beginTime=`date '+%s'` spark-submit --master $yarnmode \ --supervise \ --queue $sparkqueue \ --executor-memory $executormemory \ --num-executors $numexecutors \ --conf $sparkconf --class $classTeraSort $sourcejar $inputfiles $outputfiles endTime=`date '+%s'` speadtime=$((endTime-beginTime)) spark-submit --master $yarnmode \ --supervise \ --executor-memory $executormemory \ --queue $sparkqueue \ --num-executors $numexecutors \ --conf $sparkconf \ --class $classTeraValidate $sourcejar $outputfiles $classTeraValidate echo times:$speadtime hadoop fs -rm -r $inputfiles hadoop fs -rm -r $outputfiles hadoop fs -rm -r $Validatefiles |

714

714

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?