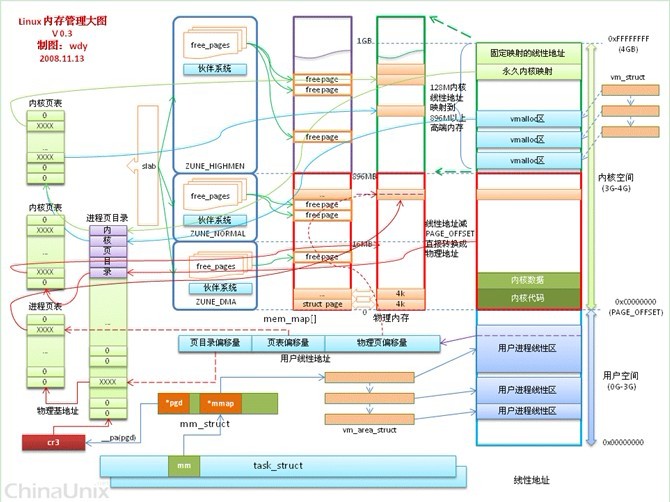

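Linux memory management uses segmentation and paging to translate logical addresses into physical addresses. Part of RAM is permanently assigned to the kernel to hold kernel code and static data; the rest is dynamic memory. Linux manages this memory with a number of techniques: page table management, high memory management (temporary mappings, fixed mappings, permanent mappings, and the noncontiguous memory area), the buddy system to reduce external fragmentation, the slab mechanism to reduce internal fragmentation, the page allocation scheme used before the buddy system is initialized, and emergency memory management.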

The figure at the top of this post is copied from http://bbs.chinaunix.net/thread-2018659-2-1.html
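The paging step mentioned in the overview can be illustrated with a small user-space sketch. It assumes classic 32-bit x86 paging without PAE (4 KiB pages, a 10-bit page directory index, a 10-bit page table index, and a 12-bit offset). The names `split_linear`, `frame_to_physical`, and the macros are illustrative only and are not kernel APIs.

```c
#include <assert.h>
#include <stdint.h>

#define TOY_PAGE_SHIFT   12u    /* assumed: 4 KiB pages */
#define TOY_PGDIR_SHIFT  22u    /* assumed: 10 table bits + 12 offset bits */
#define TOY_PTRS_PER_TBL 1024u  /* assumed: 2^10 entries per table */

/* The three fields of a 32-bit linear address under two-level paging. */
struct linear_parts {
    uint32_t dir_index;    /* index into the page directory */
    uint32_t table_index;  /* index into the page table */
    uint32_t offset;       /* byte offset inside the page frame */
};

static struct linear_parts split_linear(uint32_t addr)
{
    struct linear_parts p;

    p.dir_index   = addr >> TOY_PGDIR_SHIFT;
    p.table_index = (addr >> TOY_PAGE_SHIFT) & (TOY_PTRS_PER_TBL - 1u);
    p.offset      = addr & ((1u << TOY_PAGE_SHIFT) - 1u);
    return p;
}

/* Combine the page frame number found in the page table with the offset. */
static uint32_t frame_to_physical(uint32_t pfn, uint32_t offset)
{
    assert(offset < (1u << TOY_PAGE_SHIFT));
    return (pfn << TOY_PAGE_SHIFT) | offset;
}
```

For example, the linear address `0xC0101234` splits into directory index 768, table index 257, and offset `0x234`; if the page table entry pointed at frame `0x101`, the physical address would be `0x101234`.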

Page descriptor:

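A page descriptor records the current state of a page frame; its type is struct page. All page descriptors are stored in the mem_map array, and each descriptor is about 32 bytes long on 32-bit systems (the exact size depends on kernel version and configuration). Its definition in the kernel source: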

/*

* Each physical page in the system has a struct page associated with

* it to keep track of whatever it is we are using the page for at the

* moment. Note that we have no way to track which tasks are using

* a page, though if it is a pagecache page, rmap structures can tell us

* who is mapping it.

*

* The objects in struct page are organized in double word blocks in

* order to allows us to use atomic double word operations on portions

* of struct page. That is currently only used by slub but the arrangement

* allows the use of atomic double word operations on the flags/mapping

* and lru list pointers also.

*/

struct page {

/* First double word block: describes the state of the page frame */

unsigned long flags; /* Atomic flags, some possibly

* updated asynchronously */

struct address_space *mapping; /* If low bit clear, points to

* inode address_space, or NULL.

* If page mapped as anonymous

* memory, low bit is set, and

* it points to anon_vma object:

* see PAGE_MAPPING_ANON below.

*/

/* Second double word */

struct {

union {

pgoff_t index; /* Our offset within mapping. */

void *freelist; /* slub/slob first free object */

bool pfmemalloc; /* If set by the page allocator,

* ALLOC_NO_WATERMARKS was set

* and the low watermark was not

* met implying that the system

* is under some pressure. The

* caller should try ensure

* this page is only used to

* free other pages.

*/

};

union {

#if defined(CONFIG_HAVE_CMPXCHG_DOUBLE) && \

defined(CONFIG_HAVE_ALIGNED_STRUCT_PAGE)

/* Used for cmpxchg_double in slub */

unsigned long counters;

#else

/*

* Keep _count separate from slub cmpxchg_double data.

* As the rest of the double word is protected by

* slab_lock but _count is not.

*/

unsigned counters;

#endif

struct {

union {

/*

* Count of ptes mapped in

* mms, to show when page is

* mapped & limit reverse map

* searches.

*

* Used also for tail pages

* refcounting instead of

* _count. Tail pages cannot

* be mapped and keeping the

* tail page _count zero at

* all times guarantees

* get_page_unless_zero() will

* never succeed on tail

* pages.

*/

atomic_t _mapcount;

struct { /* SLUB */

unsigned inuse:16;

unsigned objects:15;

unsigned frozen:1;

};

int units; /* SLOB */

};

atomic_t _count; /* Usage count, see below. */

};

};

};

/* Third double word block */

union {

struct list_head lru; /* Pageout list, eg. active_list

* protected by zone->lru_lock !

*/

struct { /* slub per cpu partial pages */

struct page *next; /* Next partial slab */

#ifdef CONFIG_64BIT

int pages; /* Nr of partial slabs left */

int pobjects; /* Approximate # of objects */

#else

short int pages;

short int pobjects;

#endif

};

struct list_head list; /* slobs list of pages */

struct { /* slab fields */

struct kmem_cache *slab_cache;

struct slab *slab_page;

};

};

/* Remainder is not double word aligned */

union {

unsigned long private; /* Mapping-private opaque data:

* usually used for buffer_heads

* if PagePrivate set; used for

* swp_entry_t if PageSwapCache;

* indicates order in the buddy

* system if PG_buddy is set.

*/

#if USE_SPLIT_PTLOCKS

spinlock_t ptl;

#endif

struct kmem_cache *slab; /* SLUB: Pointer to slab */

struct page *first_page; /* Compound tail pages */

};

/*

* On machines where all RAM is mapped into kernel address space,

* we can simply calculate the virtual address. On machines with

* highmem some memory is mapped into kernel virtual memory

* dynamically, so we need a place to store that address.

* Note that this field could be 16 bits on x86 ... ;)

*

* Architectures with slow multiplication can define

* WANT_PAGE_VIRTUAL in asm/page.h

*/

#if defined(WANT_PAGE_VIRTUAL)

void *virtual; /* Kernel virtual address (NULL if

not kmapped, ie. highmem) */

#endif /* WANT_PAGE_VIRTUAL */

#ifdef CONFIG_WANT_PAGE_DEBUG_FLAGS

unsigned long debug_flags; /* Use atomic bitops on this */

#endif

#ifdef CONFIG_KMEMCHECK

/*

* kmemcheck wants to track the status of each byte in a page; this

* is a pointer to such a status block. NULL if not tracked.

*/

void *shadow;

#endif

};
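Two ideas from the structure above can be shown in a simplified user-space sketch: a page descriptor's position in `mem_map` gives its page frame number, and the low bit of `mapping` distinguishes anonymous pages from page cache pages. Everything prefixed with `toy_` or `TOY_` is hypothetical; the real kernel uses `pfn_to_page()`, `page_to_pfn()`, and `PageAnon()`, whose implementations depend on the memory model. The sketch assumes a flat memory model starting at frame 0.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define TOY_MAPPING_ANON 1u  /* mirrors the PAGE_MAPPING_ANON low bit */
#define TOY_NR_PAGES     8   /* hypothetical number of page frames */

/* Heavily reduced stand-in for struct page. */
struct toy_page {
    unsigned long flags;
    void *mapping;  /* low bit set: anonymous memory */
};

/* One descriptor per page frame, indexed by page frame number. */
static struct toy_page toy_mem_map[TOY_NR_PAGES];

static struct toy_page *toy_pfn_to_page(unsigned long pfn)
{
    assert(pfn < TOY_NR_PAGES);
    return &toy_mem_map[pfn];
}

static unsigned long toy_page_to_pfn(const struct toy_page *page)
{
    /* Pointer subtraction yields the array index, i.e. the frame number. */
    return (unsigned long)(page - toy_mem_map);
}

/* Tag the pointer's low bit; this relies on the object being aligned. */
static void toy_set_anon(struct toy_page *page, void *anon_vma)
{
    assert(((uintptr_t)anon_vma & TOY_MAPPING_ANON) == 0);
    page->mapping = (void *)((uintptr_t)anon_vma | TOY_MAPPING_ANON);
}

static int toy_page_is_anon(const struct toy_page *page)
{
    return ((uintptr_t)page->mapping & TOY_MAPPING_ANON) != 0;
}
```

Because the descriptors form an array, converting between a frame number and its descriptor is plain index arithmetic, which is why no per-page back-pointer is needed.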

typedef struct pglist_data {

struct zone node_zones[MAX_NR_ZONES]; /* array of zone descriptors for this node */

struct zonelist node_zonelists[MAX_ZONELISTS];

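/* zonelists used by the page allocator: the fallback zones for this node. When the node has no free memory, pages are allocated from the fallback zones. */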

int nr_zones; /* number of zones in this node */

#ifdef CONFIG_FLAT_NODE_MEM_MAP /* means !SPARSEMEM */

struct page *node_mem_map; /* array of page descriptors for this node */

#ifdef CONFIG_MEMCG

struct page_cgroup *node_page_cgroup;

#endif

#endif

#ifndef CONFIG_NO_BOOTMEM

struct bootmem_data *bdata; /* used during kernel initialization */

#endif

#ifdef CONFIG_MEMORY_HOTPLUG

/*

* Must be held any time you expect node_start_pfn, node_present_pages

* or node_spanned_pages stay constant. Holding this will also

* guarantee that any pfn_valid() stays that way.

*

* Nests above zone->lock and zone->size_seqlock.

*/

spinlock_t node_size_lock;

#endif

unsigned long node_start_pfn; /* index of the first page frame in this node */

unsigned long node_present_pages; /* total number of physical pages */

unsigned long node_spanned_pages; /* total size of physical page

range, including holes */

int node_id; /* node identifier */

wait_queue_head_t kswapd_wait; /* wait queue used by the kswapd page-out daemon */

wait_queue_head_t pfmemalloc_wait;

struct task_struct *kswapd; /* points to the kswapd kernel thread; protected by lock_memory_hotplug() */

int kswapd_max_order; /* log2 of the size of the free blocks that kswapd should create */

enum zone_type classzone_idx;

} pg_data_t;

The physical memory of each node is divided into three zones: ZONE_DMA, ZONE_NORMAL, and ZONE_HIGHMEM.
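The division of a node's memory into three zones, together with the fallback behaviour described for `node_zonelists`, can be sketched as follows. The boundaries are assumptions that match the conventional 32-bit x86 layout (ZONE_DMA below 16 MiB, ZONE_NORMAL up to 896 MiB, ZONE_HIGHMEM above that) with 4 KiB pages; other architectures differ. All `toy_` names are hypothetical, and the real allocator walks a zonelist and checks watermarks rather than a bare free-page count.

```c
#include <assert.h>

enum toy_zone_type {
    TOY_ZONE_DMA,
    TOY_ZONE_NORMAL,
    TOY_ZONE_HIGHMEM,
    TOY_NR_ZONES
};

#define TOY_PAGE_SHIFT     12                                /* assumed: 4 KiB pages */
#define TOY_DMA_END_PFN    ((16ul << 20) >> TOY_PAGE_SHIFT)  /* assumed: 16 MiB */
#define TOY_NORMAL_END_PFN ((896ul << 20) >> TOY_PAGE_SHIFT) /* assumed: 896 MiB */

struct toy_zone {
    unsigned long free_pages;
};

struct toy_node {
    struct toy_zone zones[TOY_NR_ZONES];
};

/* Map a page frame number to the zone that contains it. */
static enum toy_zone_type toy_zone_of_pfn(unsigned long pfn)
{
    if (pfn < TOY_DMA_END_PFN)
        return TOY_ZONE_DMA;
    if (pfn < TOY_NORMAL_END_PFN)
        return TOY_ZONE_NORMAL;
    return TOY_ZONE_HIGHMEM;
}

/*
 * Try the preferred zone first, then fall back to each lower zone.
 * Returns the index of the zone that satisfied the request, or -1.
 */
static int toy_alloc_pages(struct toy_node *node,
                           enum toy_zone_type preferred,
                           unsigned long nr_pages)
{
    assert(preferred < TOY_NR_ZONES);
    for (int z = (int)preferred; z >= 0; z--) {
        if (node->zones[z].free_pages >= nr_pages) {
            node->zones[z].free_pages -= nr_pages;
            return z;
        }
    }
    return -1;
}
```

The fallback only ever moves downwards (HIGHMEM to NORMAL to DMA): a request that needs DMA-capable memory cannot be satisfied from a higher zone, while a request that accepts high memory can use any zone.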

Fields of the zone descriptor:

struct zone {

/* Fields commonly accessed by the page allocator */

/* zone watermarks, access with *_wmark_pages(zone) macros */

/* The three watermarks of this zone: high (memory is fairly plentiful), low, and min. */

unsigned long watermark[NR_WMARK];

/*

* When free pages are below this point, additional steps are taken

* when reading the number of free pages to avoid per-cpu counter

* drift allowing watermarks to be breached

*/

unsigned long percpu_drift_mark;

/*

* We don't know if the memory that we're going to allocate will be freeable

* or/and it will be released eventually, so to avoid totally wasting several

* GB of ram we must reserve some of the lower zone memory (otherwise we risk

* to run OOM on the lower zones despite there's tons of freeable ram

* on the higher zones). This array is recalculated at runtime if the

* sysctl_lowmem_reserve_ratio sysctl changes.

*/

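/*

* When memory cannot be allocated from the highmem or normal zone, the allocation falls back to the normal or DMA zone.

* To keep the DMA zone from being exhausted, some extra memory must be reserved for drivers.

* This field is the number of pages to reserve when falling back from a higher zone to a lower one.

*/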

unsigned long lowmem_reserve[MAX_NR_ZONES];

/*

* This is a per-zone reserve of pages that should not be

* considered dirtyable memory.

*/

unsigned long dirty_balance_reserve;

#ifdef CONFIG_NUMA

int node; /* the NUMA node this zone belongs to */

/*

* zone reclaim becomes active if more unmapped pages exist.

*/ /* Page reclaim starts when the number of reclaimable pages exceeds this value. */

unsigned long min_unmapped_pages;

/* When the reclaimable slab pages in this zone exceed this value, cached pages in the slab are reclaimed. */

unsigned long min_slab_pages;

#endif

/*

* Per-CPU page cache.

* A single-page allocation is served from this cache first, which:

* - avoids taking the global lock;

* - avoids the same page being allocated repeatedly by different CPUs, which would invalidate cache lines;

* - avoids splitting the zone's large blocks into fragments.

*/

struct per_cpu_pageset __percpu *pageset;

/*

* free areas of different sizes

*/

spinlock_t lock;

int all_unreclaimable; /* All pages pinned */

#if defined CONFIG_COMPACTION || defined CONFIG_CMA

/* pfn where the last incremental compaction isolated free pages */

unsigned long compact_cached_free_pfn;

#endif

#ifdef CONFIG_MEMORY_HOTPLUG

/* see spanned/present_pages for more description */

/* span_seqlock protects zone_start_pfn, spanned_pages and present_pages. The buddy system data (free_area) is protected by zone->lock, declared above. */

seqlock_t span_seqlock;

#endif

#ifdef CONFIG_CMA

/*

* CMA needs to increase watermark levels during the allocation

* process to make sure that the system is not starved.

*/

unsigned long min_cma_pages;

#endif

/* The core of the buddy system. This array defines 11 lists (one per order below MAX_ORDER); list n holds free blocks of 2^n contiguous pages. */

struct free_area free_area[MAX_ORDER];

#ifndef CONFIG_SPARSEMEM

/*

* Flags for a pageblock_nr_pages block. See pageblock-flags.h.

* In SPARSEMEM, this map is stored in struct mem_section

*/ /* array of pageblock flags for this zone */

unsigned long *pageblock_flags;

#endif /* CONFIG_SPARSEMEM */

#ifdef CONFIG_COMPACTION

/*

* On compaction failure, 1<<compact_defer_shift compactions

* are skipped before trying again. The number attempted since

* last failure is tracked with compact_considered.

*/

unsigned int compact_considered;

unsigned int compact_defer_shift;

int compact_order_failed;

#endif

/* Unused padding that keeps the following fields cache-line aligned. */

ZONE_PADDING(_pad1_)

/*

* The LRU lists track which pages are active and which are inactive,

* and from that which pages should be written back to disk to free memory.

*/

/* Fields commonly accessed by the page reclaim scanner */

spinlock_t lru_lock;

struct lruvec lruvec;

unsigned long pages_scanned; /* since last reclaim */

unsigned long flags; /* zone flags, see below */

/* Zone statistics */

atomic_long_t vm_stat[NR_VM_ZONE_STAT_ITEMS];

/*

* The target ratio of ACTIVE_ANON to INACTIVE_ANON pages on

* this zone's LRU. Maintained by the pageout code.

*/

unsigned int inactive_ratio;

ZONE_PADDING(_pad2_)

/* Rarely used or read-mostly fields */

/*

* wait_table -- the array holding the hash table

* wait_table_hash_nr_entries -- the size of the hash table array

* wait_table_bits -- wait_table_size == (1 << wait_table_bits)

*

* The purpose of all these is to keep track of the people

* waiting for a page to become available and make them

* runnable again when possible. The trouble is that this

* consumes a lot of space, especially when so few things

* wait on pages at a given time. So instead of using

* per-page waitqueues, we use a waitqueue hash table.

*

* The bucket discipline is to sleep on the same queue when

* colliding and wake all in that wait queue when removing.

* When something wakes, it must check to be sure its page is

* truly available, a la thundering herd. The cost of a

* collision is great, but given the expected load of the

* table, they should be so rare as to be outweighed by the

* benefits from the saved space.

*

* __wait_on_page_locked() and unlock_page() in mm/filemap.c, are the

* primary users of these fields, and in mm/page_alloc.c

* free_area_init_core() performs the initialization of them.

*/

wait_queue_head_t * wait_table;

unsigned long wait_table_hash_nr_entries;

unsigned long wait_table_bits;

/*

* Discontig memory support fields.

*/ /* the node this zone belongs to */

struct pglist_data *zone_pgdat;

/* zone_start_pfn == zone_start_paddr >> PAGE_SHIFT */

unsigned long zone_start_pfn; /* offset of this zone's pages within mem_map */

/*

* zone_start_pfn, spanned_pages and present_pages are all

* protected by span_seqlock. It is a seqlock because it has

* to be read outside of zone->lock, and it is done in the main

* allocator path. But, it is written quite infrequently.

*

* The lock is declared along with zone->lock because it is

* frequently read in proximity to zone->lock. It's good to

* give them a chance of being in the same cacheline.

*/

unsigned long spanned_pages; /* total size, including holes */

unsigned long present_pages; /* amount of memory (excluding holes) */

/*

* rarely used fields:

*/

const char *name;

#ifdef CONFIG_MEMORY_ISOLATION

/*

* the number of MIGRATE_ISOLATE *pageblock*.

* We need this for free page counting. Look at zone_watermark_ok_safe.

* It's protected by zone->lock

*/

int nr_pageblock_isolate;

#endif

};
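Two of the annotated fields lend themselves to a short user-space sketch: how `watermark[]` and `lowmem_reserve[]` combine when deciding whether a zone may serve a request, and how the buddy system finds the partner of a free block in `free_area[]`. This is a simplified model. The real watermark check also adjusts for allocation flags and per-order free counts, which are omitted here. All `toy_` and `TOY_` names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>

enum { TOY_WMARK_MIN, TOY_WMARK_LOW, TOY_WMARK_HIGH, TOY_NR_WMARK };
enum { TOY_ZONE_DMA, TOY_ZONE_NORMAL, TOY_ZONE_HIGHMEM, TOY_MAX_NR_ZONES };

#define TOY_MAX_ORDER 11u  /* assumed: 11 free lists, orders 0..10 */

struct toy_zone {
    unsigned long watermark[TOY_NR_WMARK];
    unsigned long lowmem_reserve[TOY_MAX_NR_ZONES];
    unsigned long free_pages;
};

/*
 * May this zone serve a 2^order-page request that was originally aimed
 * at zone classzone_idx? The pages left afterwards must stay above the
 * chosen watermark plus the reserve held back against that higher zone.
 */
static bool toy_watermark_ok(const struct toy_zone *z, unsigned int order,
                             int mark, int classzone_idx)
{
    assert(order < TOY_MAX_ORDER);
    assert(mark >= 0 && mark < TOY_NR_WMARK);
    assert(classzone_idx >= 0 && classzone_idx < TOY_MAX_NR_ZONES);

    long free_after = (long)z->free_pages - (long)((1ul << order) - 1);
    long needed = (long)(z->watermark[mark] + z->lowmem_reserve[classzone_idx]);

    return free_after > needed;
}

/* Index of the buddy of the 2^order block that starts at page_idx. */
static unsigned long toy_buddy_index(unsigned long page_idx, unsigned int order)
{
    assert(order < TOY_MAX_ORDER);
    return page_idx ^ (1ul << order);
}

/* Start index of the 2^(order+1) block formed when both buddies are free. */
static unsigned long toy_merged_index(unsigned long page_idx, unsigned int order)
{
    assert(order < TOY_MAX_ORDER);
    return page_idx & ~(1ul << order);
}
```

With a DMA zone holding 300 free pages, a min watermark of 32, and a reserve of 256 pages against ZONE_NORMAL requests, a single-page request that fell back from ZONE_NORMAL passes (300 > 288), while an order-4 request fails (285 is not above 288). For the buddy helpers, the order-2 block at index 12 has its buddy at index 8, and the two merge into an order-3 block starting at index 8.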
