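Use MapReduce to count the words, and the number of times each word occurs, across all files in a specified HDFS directory, and write the result to another HDFS directory.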

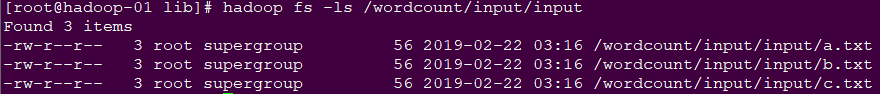

HDFS directory to be counted: /wordcount/input/input

Sample of the files to be counted:

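The MapReduce distributed computing framework

map phase: reads each line of the text file, runs the map method, and writes the resulting key-value pairs to the context.

reduce phase: aggregates each group of records that share the same key, runs the reduce method, and writes the result to the context.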
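The two phases can be pictured without a cluster. The following is a plain-Java sketch, not Hadoop code: the class and method names (WordCountSimulation, map, shuffle, reduce, wordCount) are made up for illustration, and the shuffle is modeled with an in-memory sorted map rather than Hadoop's sort and merge. It only shows how (word, 1) pairs are grouped by key before being summed.

```java
import java.util.AbstractMap;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical in-memory model of the map -> shuffle -> reduce flow.
class WordCountSimulation {

    // "map" step: turn one line into (word, 1) pairs.
    public static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> out = new ArrayList<>();
        for (String word : line.split(" ")) {
            if (!word.isEmpty()) {
                out.add(new AbstractMap.SimpleEntry<>(word, 1));
            }
        }
        return out;
    }

    // "shuffle" step: collect all values that share a key, with keys kept sorted.
    public static Map<String, List<Integer>> shuffle(List<Map.Entry<String, Integer>> pairs) {
        Map<String, List<Integer>> groups = new TreeMap<>();
        for (Map.Entry<String, Integer> pair : pairs) {
            groups.computeIfAbsent(pair.getKey(), k -> new ArrayList<>()).add(pair.getValue());
        }
        return groups;
    }

    // "reduce" step: sum the values of one key.
    public static int reduce(String key, Iterable<Integer> values) {
        int count = 0;
        for (int value : values) {
            count += value;
        }
        return count;
    }

    // Runs the three steps over all input lines.
    public static Map<String, Integer> wordCount(List<String> lines) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String line : lines) {
            pairs.addAll(map(line));
        }
        Map<String, Integer> result = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> group : shuffle(pairs).entrySet()) {
            result.put(group.getKey(), reduce(group.getKey(), group.getValue()));
        }
        return result;
    }

    public static void main(String[] args) {
        // prints {a=2, b=2, c=1}
        System.out.println(wordCount(Arrays.asList("a b a", "b c")));
    }
}
```

In a real job the framework performs the grouping step across machines; only the map and reduce methods are written by hand.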

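Data passed between the map and reduce phases has to be serialized and deserialized, so the key and value types must implement a specific interface, Writable.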
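To make the serialization requirement concrete, here is a minimal sketch of the write/readFields round trip. It deliberately avoids the Hadoop dependency: SimpleWritable is a hypothetical stand-in that mirrors the two methods of Hadoop's Writable (write(DataOutput) and readFields(DataInput)), and WordCountPair is an invented value type, not something the job in this post uses.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;

// Hypothetical stand-in with the same two methods as Hadoop's Writable.
interface SimpleWritable {
    void write(DataOutput out) throws IOException;
    void readFields(DataInput in) throws IOException;
}

// Invented value type: a word together with its count.
class WordCountPair implements SimpleWritable {
    String word = "";
    int count;

    // No-arg constructor so an empty instance can be created and then filled by readFields.
    WordCountPair() {}

    WordCountPair(String word, int count) {
        this.word = word;
        this.count = count;
    }

    @Override
    public void write(DataOutput out) throws IOException {
        out.writeUTF(word);
        out.writeInt(count);
    }

    @Override
    public void readFields(DataInput in) throws IOException {
        // Fields must be read in the same order they were written.
        word = in.readUTF();
        count = in.readInt();
    }
}

class WritableRoundTrip {

    // Serializes the object to bytes and reads it back into a fresh instance.
    public static WordCountPair roundTrip(WordCountPair original) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            original.write(new DataOutputStream(bytes));
            WordCountPair copy = new WordCountPair();
            copy.readFields(new DataInputStream(new ByteArrayInputStream(bytes.toByteArray())));
            return copy;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        WordCountPair copy = roundTrip(new WordCountPair("hello", 3));
        // prints hello 3
        System.out.println(copy.word + " " + copy.count);
    }
}
```

The built-in types used later (Text, IntWritable, LongWritable) already implement Writable, so the word-count job itself needs no custom type.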

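Create a new Maven project and add the dependency. Make sure the dependency jars are downloaded correctly; otherwise you will see all kinds of "jar not found" errors.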

    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-client</artifactId>
        <version>2.8.1</version>
    </dependency>

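Implement the Mapper by extending the Mapper class

The input key is the starting offset of each line: Long (LongWritable)

The input value is the content of each line: String (Text)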

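    import java.io.IOException;

    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    public class WordcountMapper extends Mapper<LongWritable, Text, Text, IntWritable> {

        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            // Split the line into words on single spaces
            String line = value.toString();
            String[] words = line.split(" ");
            // Emit (word, 1) for every word on the line
            for (String word : words) {
                context.write(new Text(word), new IntWritable(1));
            }
        }
    }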
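The (offset, line) pairs the mapper receives can be pictured with a small plain-Java sketch. It assumes UTF-8 text with "\n" line endings and a single unsplit file; LineOffsets and offsets are hypothetical names, and the real pairs are produced by Hadoop's input format (TextInputFormat by default), not by code like this.

```java
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper that mimics the (byte offset, line) records fed to map().
class LineOffsets {

    // Maps the byte offset at which each line starts to the line's content.
    public static Map<Long, String> offsets(String fileContent) {
        Map<Long, String> records = new LinkedHashMap<>();
        long offset = 0;
        for (String line : fileContent.split("\n")) {
            records.put(offset, line);
            // Advance by the line's byte length plus one byte for the '\n' terminator.
            offset += line.getBytes(StandardCharsets.UTF_8).length + 1;
        }
        return records;
    }

    public static void main(String[] args) {
        // prints {0=a b c, 6=d e, 10=f}
        System.out.println(offsets("a b c\nd e\nf\n"));
    }
}
```

The word-count mapper ignores the key entirely; the offset only matters for jobs that need to know where a line sits in its file.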

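Implement the Reducer by extending the Reducer class

The input key is the key shared by all records in a group

The input values is the collection of values belonging to that key, which can be traversed with an iterator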

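    import java.io.IOException;
    import java.util.Iterator;

    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Reducer;

    public class WordcountReducer extends Reducer<Text, IntWritable, Text, IntWritable> {

        @Override
        protected void reduce(Text key, Iterable<IntWritable> values, Context context)
                throws IOException, InterruptedException {
            // Sum all the 1s emitted for this word
            int count = 0;
            Iterator<IntWritable> iterator = values.iterator();
            while (iterator.hasNext()) {
                IntWritable value = iterator.next();
                count += value.get();
            }
            context.write(key, new IntWritable(count));
        }
    }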

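With the mapper and reducer written, the next step is the main class that submits the job. There are three ways to do this:

1. Submit the job to YARN from Windows

The submitter class below submits the job from Windows to YARN running on Linux.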

    import java.net.URI;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    public class JobSubmitter {

        public static void main(String[] args) throws Exception {
            // Set a JVM system property so the job knows which user identity to use for HDFS
            System.setProperty("HADOOP_USER_NAME", "root");

            Configuration conf = new Configuration();
            // 1. Default file system the job accesses at run time
            conf.set("fs.defaultFS", "hdfs://hadoop-01:9000");
            // 2. Where the job is submitted and run: yarn or local
            conf.set("mapreduce.framework.name", "yarn");
            conf.set("yarn.resourcemanager.hostname", "hadoop-01");
            // 3. Required when this client runs on Windows: enable cross-platform submission
            conf.set("mapreduce.app-submission.cross-platform", "true");

            Job job = Job.getInstance(conf);

            // 1. Location of the job jar
            job.setJar("F:/EclipseWorkSpace/mapreduce24/target/mapreduce24-0.0.1-SNAPSHOT.jar");
            //job.setJarByClass(JobSubmitter.class);

            // 2. Mapper and Reducer implementations used by this job
            job.setMapperClass(WordcountMapper.class);
            job.setReducerClass(WordcountReducer.class);

            // 3. Key and value types produced by the Mapper and the Reducer
            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(IntWritable.class);
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);

            // Output path: delete it if it already exists
            Path output = new Path("/wordcount/output");
            FileSystem fs = FileSystem.get(new URI("hdfs://hadoop-01:9000"), conf, "root");
            if (fs.exists(output)) {
                fs.delete(output, true);
            }

            // 4. Input data set path and final output path
            FileInputFormat.setInputPaths(job, new Path("/wordcount/input/input"));
            FileOutputFormat.setOutputPath(job, output); // Note: the output path must not exist

            // 5. Number of reduce tasks to launch
            job.setNumReduceTasks(2);

            // 6. Submit the job to YARN and wait for it to finish
            boolean res = job.waitForCompletion(true);
            System.exit(res ? 0 : -1);
        }
    }

Log output:

    [WARN ] 2019-02-22 17:26:30,653 method:org.apache.hadoop.util.NativeCodeLoader.<clinit>(NativeCodeLoader.java:62) Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    [INFO ] 2019-02-22 17:26:32,068 method:org.apache.hadoop.yarn.client.RMProxy.createRMProxy(RMProxy.java:123) Connecting to ResourceManager at hadoop-01/192.168.3.11:8032
    [WARN ] 2019-02-22 17:26:32,759 method:org.apache.hadoop.mapreduce.JobResourceUploader.uploadFiles(JobResourceUploader.java:64) Hadoop command-line option parsing not performed. Implement the Tool interface and execute your application with ToolRunner to remedy this.
    [INFO ] 2019-02-22 17:26:33,059 method:org.apache.hadoop.mapreduce.lib.input.FileInputFormat.listStatus(FileInputFormat.java:289) Total input files to process : 3
    [INFO ] 2019-02-22 17:26:33,170 method:org.apache.hadoop.mapreduce.JobSubmitter.submitJobInternal(JobSubmitter.java:200) number of splits:3
    [INFO ] 2019-02-22 17:26:33,293 method:org.apache.hadoop.mapreduce.JobSubmitter.printTokens(JobSubmitter.java:289) Submitting tokens for job: job_1550821370740_0002
    [INFO ] 2019-02-22 17:26:33,485 method:org.apache.hadoop.yarn.client.api.impl.YarnClientImpl.submitApplication(YarnClientImpl.java:296) Submitted application application_1550821370740_0002
    [INFO ] 2019-02-22 17:26:33,542 method:org.apache.hadoop.mapreduce.Job.submit(Job.java:1345) The url to track the job: http://hadoop-01:8088/proxy/application_1550821370740_0002/
    [INFO ] 2019-02-22 17:26:33,543 method:org.apache.hadoop.mapreduce.Job.monitorAndPrintJob(Job.java:1390) Running job: job_1550821370740_0002
    [INFO ] 2019-02-22 17:26:42,935 method:org.apache.hadoop.mapreduce.Job.monitorAndPrintJob(Job.java:1411) Job job_1550821370740_0002 running in uber mode : false
    [INFO ] 2019-02-22 17:26:42,938 method:org.apache.hadoop.mapreduce.Job.monitorAndPrintJob(Job.java:1418) map 0% reduce 0%
    [INFO ] 2019-02-22 17:27:03,215 method:org.apache.hadoop.mapreduce.Job.monitorAndPrintJob(Job.java:1418) map 67% reduce 0%
    [INFO ] 2019-02-22 17:27:08,250 method:org.apache.hadoop.mapreduce.Job.monitorAndPrintJob(Job.java:1418) map 100% reduce 0%
    [INFO ] 2019-02-22 17:27:10,263 method:org.apache.hadoop.mapreduce.Job.monitorAndPrintJob(Job.java:1418) map 100% reduce 50%
    [INFO ] 2019-02-22 17:27:13,292 method:org.apache.hadoop.mapreduce.Job.monitorAndPrintJob(Job.java:1418) map 100% reduce 100%
    [INFO ] 2019-02-22 17:27:13,314 method:org.apache.hadoop.mapreduce.Job.monitorAndPrintJob(Job.java:1429) Job job_1550821370740_0002 completed successfully
    [INFO ] 2019-02-22 17:27:13,620 method:org.apache.hadoop.mapreduce.Job.monitorAndPrintJob(Job.java:1436) Counters: 50
        File System Counters
            FILE: Number of bytes read=684
            FILE: Number of bytes written=788394
            FILE: Number of read operations=0
            FILE: Number of large read operations=0
            FILE: Number of write operations=0
            HDFS: Number of bytes read=510
            HDFS: Number of bytes written=51
            HDFS: Number of read operations=15
            HDFS: Number of large read operations=0
            HDFS: Number of write operations=4
        Job Counters
            Killed reduce tasks=1
            Launched map tasks=3
            Launched reduce tasks=2
            Data-local map tasks=3
            Total time spent by all maps in occupied slots (ms)=37460
            Total time spent by all reduces in occupied slots (ms)=8286
            Total time spent by all map tasks (ms)=37460
            Total time spent by all reduce tasks (ms)=8286
            Total vcore-milliseconds taken by all map tasks=37460
            Total vcore-milliseconds taken by all reduce tasks=8286
            Total megabyte-milliseconds taken by all map tasks=38359040
            Total megabyte-milliseconds taken by all reduce tasks=8484864
        Map-Reduce Framework
            Map input records=12
            Map output records=84
            Map output bytes=504
            Map output materialized bytes=708
            Input split bytes=342
            Combine input records=0
            Combine output records=0
            Reduce input groups=12
            Reduce shuffle bytes=708
            Reduce input records=84
            Reduce output records=12
            Spilled Records=168
            Shuffled Maps =6
            Failed Shuffles=0
            Merged Map outputs=6
            GC time elapsed (ms)=1178
            CPU time spent (ms)=23910
            Physical memory (bytes) snapshot=1213902848
            Virtual memory (bytes) snapshot=4550701056
            Total committed heap usage (bytes)=739770368
        Shuffle Errors
            BAD_ID=0
            CONNECTION=0
            IO_ERROR=0
            WRONG_LENGTH=0
            WRONG_MAP=0
            WRONG_REDUCE=0
        File Input Format Counters
            Bytes Read=168
        File Output Format Counters
            Bytes Written=51

Final result:

2. Run the jar on Linux to start the job

    import java.net.URI;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    public class JobSubmitterLinuxToYarn {

        public static void main(String[] args) throws Exception {
            Configuration conf = new Configuration();
            conf.set("fs.defaultFS", "hdfs://hadoop-01:9000");
            conf.set("fs.hdfs.impl", "org.apache.hadoop.hdfs.DistributedFileSystem");

            Job job = Job.getInstance(conf);
            job.setJarByClass(JobSubmitterLinuxToYarn.class);

            job.setMapperClass(WordcountMapper.class);
            job.setReducerClass(WordcountReducer.class);

            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(IntWritable.class);
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);

            // Delete the output path if it already exists
            Path output = new Path("/wordcount/output");
            FileSystem fs = FileSystem.get(new URI("hdfs://hadoop-01:9000"), conf, "root");
            if (fs.exists(output)) {
                fs.delete(output, true);
            }

            FileInputFormat.setInputPaths(job, new Path("/wordcount/input/input"));
            FileOutputFormat.setOutputPath(job, output);

            job.setNumReduceTasks(3);

            boolean res = job.waitForCompletion(true);
            System.exit(res ? 0 : 1);
        }
    }

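Run mvn install to build the jar, upload it to the Linux server, and run the launch command. Note that this class does not set mapreduce.framework.name; unless the configuration on the server sets it to yarn, the job runs in the local job runner, which is what the job_local... job ID and the LocalJobRunner entries in the log below indicate.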

hadoop jar xx.jar xx.xx.className

hadoop jar mapreduce24-0.0.1-SNAPSHOT.jar cn.edu360.mr.wc.JobSubmitterLinuxToYarn

    19/02/22 06:46:45 INFO Configuration.deprecation: session.id is deprecated. Instead, use dfs.metrics.session-id
    19/02/22 06:46:45 INFO jvm.JvmMetrics: Initializing JVM Metrics with processName=JobTracker, sessionId=
    19/02/22 06:46:58 WARN mapreduce.JobResourceUploader: Hadoop command-line option parsing not performed. Implement the Tool interface and execute your application with ToolRunner to remedy this.
    19/02/22 06:46:58 INFO input.FileInputFormat: Total input files to process : 3
    19/02/22 06:46:58 INFO mapreduce.JobSubmitter: number of splits:3
    19/02/22 06:47:00 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_local1465694668_0001
    19/02/22 06:47:00 INFO mapreduce.Job: The url to track the job: http://localhost:8080/
    19/02/22 06:47:00 INFO mapreduce.Job: Running job: job_local1465694668_0001
    19/02/22 06:47:00 INFO mapred.LocalJobRunner: OutputCommitter set in config null
    19/02/22 06:47:00 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 1
    19/02/22 06:47:00 INFO output.FileOutputCommitter: FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false
    19/02/22 06:47:00 INFO mapred.LocalJobRunner: OutputCommitter is org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter
    19/02/22 06:47:01 INFO mapreduce.Job: Job job_local1465694668_0001 running in uber mode : false
    19/02/22 06:47:02 INFO mapred.LocalJobRunner: Waiting for map tasks
    19/02/22 06:47:02 INFO mapred.LocalJobRunner: Starting task: attempt_local1465694668_0001_m_000000_0
    19/02/22 06:47:03 INFO mapreduce.Job: map 0% reduce 0%
    19/02/22 06:47:04 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 1
    19/02/22 06:47:04 INFO output.FileOutputCommitter: FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false
    19/02/22 06:47:04 INFO mapred.Task: Using ResourceCalculatorProcessTree : [ ]
    19/02/22 06:47:04 INFO mapred.MapTask: Processing split: hdfs://hadoop-01:9000/wordcount/input/input/a.txt:0+56
    19/02/22 06:47:05 INFO mapred.MapTask: (EQUATOR) 0 kvi 26214396(104857584)
    19/02/22 06:47:05 INFO mapred.MapTask: mapreduce.task.io.sort.mb: 100
    19/02/22 06:47:05 INFO mapred.MapTask: soft limit at 83886080
    19/02/22 06:47:05 INFO mapred.MapTask: bufstart = 0; bufvoid = 104857600
    19/02/22 06:47:05 INFO mapred.MapTask: kvstart = 26214396; length = 6553600
    19/02/22 06:47:05 INFO mapred.MapTask: Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer
    19/02/22 06:47:25 INFO mapred.LocalJobRunner:
    19/02/22 06:47:26 INFO mapred.MapTask: Starting flush of map output
    19/02/22 06:47:26 INFO mapred.MapTask: Spilling map output
    19/02/22 06:47:26 INFO mapred.MapTask: bufstart = 0; bufend = 168; bufvoid = 104857600
    19/02/22 06:47:26 INFO mapred.MapTask: kvstart = 26214396(104857584); kvend = 26214288(104857152); length = 109/6553600
    19/02/22 06:47:26 INFO mapred.MapTask: Finished spill 0
    19/02/22 06:47:26 INFO mapred.Task: Task:attempt_local1465694668_0001_m_000000_0 is done. And is in the process of committing
    19/02/22 06:47:26 INFO mapred.LocalJobRunner: map
    19/02/22 06:47:26 INFO mapred.Task: Task 'attempt_local1465694668_0001_m_000000_0' done.
    19/02/22 06:47:26 INFO mapred.Task: Final Counters for attempt_local1465694668_0001_m_000000_0: Counters: 22
        File System Counters
            FILE: Number of bytes read=8507
            FILE: Number of bytes written=377773
            FILE: Number of read operations=0
            FILE: Number of large read operations=0
            FILE: Number of write operations=0
            HDFS: Number of bytes read=56
            HDFS: Number of bytes written=0
            HDFS: Number of read operations=6
            HDFS: Number of large read operations=0
            HDFS: Number of write operations=2
        Map-Reduce Framework
            Map input records=4
            Map output records=28
            Map output bytes=168
            Map output materialized bytes=242
            Input split bytes=114
            Combine input records=0
            Spilled Records=28
            Failed Shuffles=0
            Merged Map outputs=0
            GC time elapsed (ms)=11102
            Total committed heap usage (bytes)=341835776
        File Input Format Counters
            Bytes Read=56
    ............
    19/02/22 06:47:32 INFO mapred.LocalJobRunner: Finishing task: attempt_local1465694668_0001_r_000002_0
    19/02/22 06:47:32 INFO mapred.LocalJobRunner: reduce task executor complete.
    19/02/22 06:47:33 INFO mapreduce.Job: map 100% reduce 100%
    19/02/22 06:47:33 INFO mapreduce.Job: Job job_local1465694668_0001 completed successfully
    19/02/22 06:47:33 INFO mapreduce.Job: Counters: 35
        File System Counters
            FILE: Number of bytes read=60627
            FILE: Number of bytes written=2270604
            FILE: Number of read operations=0
            FILE: Number of large read operations=0
            FILE: Number of write operations=0
            HDFS: Number of bytes read=840
            HDFS: Number of bytes written=96
            HDFS: Number of read operations=72
            HDFS: Number of large read operations=0
            HDFS: Number of write operations=24
        Map-Reduce Framework
            Map input records=12
            Map output records=84
            Map output bytes=504
            Map output materialized bytes=726
            Input split bytes=342
            Combine input records=0
            Combine output records=0
            Reduce input groups=12
            Reduce shuffle bytes=726
            Reduce input records=84
            Reduce output records=12
            Spilled Records=168
            Shuffled Maps =9
            Failed Shuffles=0
            Merged Map outputs=9
            GC time elapsed (ms)=11114
            Total committed heap usage (bytes)=2595225600
        Shuffle Errors
            BAD_ID=0
            CONNECTION=0
            IO_ERROR=0
            WRONG_LENGTH=0
            WRONG_MAP=0
            WRONG_REDUCE=0
        File Input Format Counters
            Bytes Read=168
        File Output Format Counters
            Bytes Written=51

Final result: the number of result files depends on the number of reduce tasks.
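Why the file count follows the reduce task count can be sketched as follows. Each key is assigned to exactly one reduce task, and each reduce task writes its own part-r-NNNNN file. The arithmetic below is the one used by Hadoop's default HashPartitioner, but the class PartitionSketch is hypothetical and String.hashCode() is only a stand-in: Hadoop's Text computes its hash differently, so the concrete assignments in a real job will not match this sketch.

```java
// Hypothetical illustration of how keys are spread over reduce tasks.
class PartitionSketch {

    // Non-negative hash modulo the number of reduce tasks.
    public static int partition(String key, int numReduceTasks) {
        return (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks;
    }

    // Name of the output file written by the reduce task with this partition number.
    public static String outputFile(int partition) {
        return String.format("part-r-%05d", partition);
    }

    public static void main(String[] args) {
        int numReduceTasks = 3; // same value as job.setNumReduceTasks(3)
        for (String word : new String[] {"a", "b", "c", "d"}) {
            int p = partition(word, numReduceTasks);
            System.out.println(word + " -> " + outputFile(p));
        }
    }
}
```

With three reduce tasks there are at most three partitions, hence three output files, and every occurrence of a given word ends up in the same file.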

3. Simulate running the MapReduce job locally on Windows

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    public class JobSubmitterWindowsLocal {

        public static void main(String[] args) throws Exception {
            Configuration conf = new Configuration();
            // Both settings below are the defaults, so they can stay commented out
            //conf.set("fs.defaultFS", "file:///");
            //conf.set("mapreduce.framework.name", "local");

            Job job = Job.getInstance(conf);
            job.setJarByClass(JobSubmitterWindowsLocal.class);

            job.setMapperClass(WordcountMapper.class);
            job.setReducerClass(WordcountReducer.class);

            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(IntWritable.class);
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);

            FileInputFormat.setInputPaths(job, new Path("F:/hadoop-2.8.1/data/wordcount/input"));
            FileOutputFormat.setOutputPath(job, new Path("F:/hadoop-2.8.1/data/wordcount/output2"));

            job.setNumReduceTasks(3);

            boolean res = job.waitForCompletion(true);
            System.exit(res ? 0 : 1);
        }
    }

Log output:

[WARN ] 2019-02-22 20:00:27,142 method:org.apache.hadoop.util.NativeCodeLoader.<clinit>(NativeCodeLoader.java:62)

Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[INFO ] 2019-02-22 20:00:27,653 method:org.apache.hadoop.conf.Configuration.warnOnceIfDeprecated(Configuration.java:1181)

session.id is deprecated. Instead, use dfs.metrics.session-id

[INFO ] 2019-02-22 20:00:27,654 method:org.apache.hadoop.metrics.jvm.JvmMetrics.init(JvmMetrics.java:79)

Initializing JVM Metrics with processName=JobTracker, sessionId=

[WARN ] 2019-02-22 20:00:28,921 method:org.apache.hadoop.mapreduce.JobResourceUploader.uploadFiles(JobResourceUploader.java:64)

Hadoop command-line option parsing not performed. Implement the Tool interface and execute your application with ToolRunner to remedy this.

[WARN ] 2019-02-22 20:00:29,003 method:org.apache.hadoop.mapreduce.JobResourceUploader.uploadFiles(JobResourceUploader.java:171)

No job jar file set. User classes may not be found. See Job or Job#setJar(String).

[INFO ] 2019-02-22 20:00:29,265 method:org.apache.hadoop.mapreduce.lib.input.FileInputFormat.listStatus(FileInputFormat.java:289)

Total input files to process : 3

[INFO ] 2019-02-22 20:00:30,257 method:org.apache.hadoop.mapreduce.JobSubmitter.submitJobInternal(JobSubmitter.java:200)

number of splits:3

[INFO ] 2019-02-22 20:00:30,983 method:org.apache.hadoop.mapreduce.JobSubmitter.printTokens(JobSubmitter.java:289)

Submitting tokens for job: job_local334746331_0001

[INFO ] 2019-02-22 20:00:34,056 method:org.apache.hadoop.mapreduce.JobSubmitter.submitJobInternal(JobSubmitter.java:251)

Cleaning up the staging area file:/tmp/hadoop/mapred/staging/chen334746331/.staging/job_local334746331_0001

Exception in thread "main" java.lang.UnsatisfiedLinkError: org.apache.hadoop.io.nativeio.NativeIO$Windows.access0(Ljava/lang/String;I)Z

at org.apache.hadoop.io.nativeio.NativeIO$Windows.access0(Native Method)

at org.apache.hadoop.io.nativeio.NativeIO$Windows.access(NativeIO.java:606)

at org.apache.hadoop.fs.FileUtil.canRead(FileUtil.java:958)

at org.apache.hadoop.util.DiskChecker.checkAccessByFileMethods(DiskChecker.java:203)

at org.apache.hadoop.util.DiskChecker.checkDirAccess(DiskChecker.java:190)

at org.apache.hadoop.util.DiskChecker.checkDir(DiskChecker.java:124)

at org.apache.hadoop.fs.LocalDirAllocator$AllocatorPerContext.confChanged(LocalDirAllocator.java:314)

at org.apache.hadoop.fs.LocalDirAllocator$AllocatorPerContext.getLocalPathForWrite(LocalDirAllocator.java:377)

at org.apache.hadoop.fs.LocalDirAllocator.getLocalPathForWrite(LocalDirAllocator.java:151)

at org.apache.hadoop.fs.LocalDirAllocator.getLocalPathForWrite(LocalDirAllocator.java:132)

at org.apache.hadoop.fs.LocalDirAllocator.getLocalPathForWrite(LocalDirAllocator.java:116)

at org.apache.hadoop.mapred.LocalDistributedCacheManager.setup(LocalDistributedCacheManager.java:125)

at org.apache.hadoop.mapred.LocalJobRunner$Job.<init>(LocalJobRunner.java:171)

at org.apache.hadoop.mapred.LocalJobRunner.submitJob(LocalJobRunner.java:758)

at org.apache.hadoop.mapreduce.JobSubmitter.submitJobInternal(JobSubmitter.java:242)

at org.apache.hadoop.mapreduce.Job$11.run(Job.java:1341)

at org.apache.hadoop.mapreduce.Job$11.run(Job.java:1338)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1807)

at org.apache.hadoop.mapreduce.Job.submit(Job.java:1338)

at org.apache.hadoop.mapreduce.Job.waitForCompletion(Job.java:1359)

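at cn.edu360.mr.wc.JobSubmitterWindowsLocal.main(JobSubmitterWindowsLocal.java:38)

Hadoop needs to access local files and does so through NativeIO. Install the Hadoop native libraries for Windows (typically hadoop.dll and winutils.exe), set the HADOOP_HOME environment variable, and add HADOOP_HOME/bin to Path.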
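Before rerunning, the environment can be sanity-checked with a small helper like the one below. This is only a sketch: HadoopHomeCheck is a hypothetical class, and the two file names it looks for (winutils.exe and hadoop.dll under HADOOP_HOME/bin) are an assumption based on how Windows native builds of Hadoop are commonly packaged, not something taken from the log above.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper that reports what is missing from a Windows Hadoop setup.
class HadoopHomeCheck {

    // Files assumed to be required under HADOOP_HOME/bin (see the note above).
    static final String[] EXPECTED = {"winutils.exe", "hadoop.dll"};

    // Returns a list of problems; an empty list means both files were found.
    public static List<String> missingFiles(String hadoopHome) {
        List<String> missing = new ArrayList<>();
        if (hadoopHome == null || hadoopHome.isEmpty()) {
            missing.add("HADOOP_HOME is not set");
            return missing;
        }
        File bin = new File(hadoopHome, "bin");
        for (String name : EXPECTED) {
            File candidate = new File(bin, name);
            if (!candidate.isFile()) {
                missing.add(candidate.getPath());
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        List<String> missing = missingFiles(System.getenv("HADOOP_HOME"));
        System.out.println(missing.isEmpty() ? "Native files found" : "Missing: " + missing);
    }
}
```

Environment variable changes usually only reach newly started processes, so the IDE or terminal may need to be restarted before the check passes.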

Run the main method again:

[INFO ] 2019-02-22 20:10:33,123 method:org.apache.hadoop.conf.Configuration.warnOnceIfDeprecated(Configuration.java:1181)

session.id is deprecated. Instead, use dfs.metrics.session-id

[INFO ] 2019-02-22 20:10:33,127 method:org.apache.hadoop.metrics.jvm.JvmMetrics.init(JvmMetrics.java:79)

Initializing JVM Metrics with processName=JobTracker, sessionId=

[WARN ] 2019-02-22 20:10:33,917 method:org.apache.hadoop.mapreduce.JobResourceUploader.uploadFiles(JobResourceUploader.java:64)

Hadoop command-line option parsing not performed. Implement the Tool interface and execute your application with ToolRunner to remedy this.

[WARN ] 2019-02-22 20:10:33,940 method:org.apache.hadoop.mapreduce.JobResourceUploader.uploadFiles(JobResourceUploader.java:171)

No job jar file set. User classes may not be found. See Job or Job#setJar(String).

[INFO ] 2019-02-22 20:10:34,193 method:org.apache.hadoop.mapreduce.lib.input.FileInputFormat.listStatus(FileInputFormat.java:289)

Total input files to process : 3

[INFO ] 2019-02-22 20:10:34,226 method:org.apache.hadoop.mapreduce.JobSubmitter.submitJobInternal(JobSubmitter.java:200)

number of splits:3

[INFO ] 2019-02-22 20:10:34,331 method:org.apache.hadoop.mapreduce.JobSubmitter.printTokens(JobSubmitter.java:289)

Submitting tokens for job: job_local91916295_0001

[INFO ] 2019-02-22 20:10:34,486 method:org.apache.hadoop.mapreduce.Job.submit(Job.java:1345)

The url to track the job: http://localhost:8080/

[INFO ] 2019-02-22 20:10:34,487 method:org.apache.hadoop.mapreduce.Job.monitorAndPrintJob(Job.java:1390)

Running job: job_local91916295_0001

[INFO ] 2019-02-22 20:10:34,504 method:org.apache.hadoop.mapred.LocalJobRunner$Job.createOutputCommitter(LocalJobRunner.java:498)

OutputCommitter set in config null

[INFO ] 2019-02-22 20:10:34,518 method:org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter.<init>(FileOutputCommitter.java:123)

File Output Committer Algorithm version is 1

[INFO ] 2019-02-22 20:10:34,518 method:org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter.<init>(FileOutputCommitter.java:138)

FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

[INFO ] 2019-02-22 20:10:34,519 method:org.apache.hadoop.mapred.LocalJobRunner$Job.createOutputCommitter(LocalJobRunner.java:516)

OutputCommitter is org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter

[INFO ] 2019-02-22 20:10:34,573 method:org.apache.hadoop.mapred.LocalJobRunner$Job.runTasks(LocalJobRunner.java:475)

Waiting for map tasks

[INFO ] 2019-02-22 20:10:34,573 method:org.apache.hadoop.mapred.LocalJobRunner$Job$MapTaskRunnable.run(LocalJobRunner.java:251)

Starting task: attempt_local91916295_0001_m_000000_0

[INFO ] 2019-02-22 20:10:34,599 method:org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter.<init>(FileOutputCommitter.java:123)

File Output Committer Algorithm version is 1

[INFO ] 2019-02-22 20:10:34,600 method:org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter.<init>(FileOutputCommitter.java:138)

FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

[INFO ] 2019-02-22 20:10:34,636 method:org.apache.hadoop.yarn.util.ProcfsBasedProcessTree.isAvailable(ProcfsBasedProcessTree.java:168)

ProcfsBasedProcessTree currently is supported only on Linux.

[INFO ] 2019-02-22 20:10:34,704 method:org.apache.hadoop.mapred.Task.initialize(Task.java:619)

Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@6d97a426

[INFO ] 2019-02-22 20:10:34,711 method:org.apache.hadoop.mapred.MapTask.runNewMapper(MapTask.java:756)

Processing split: file:/F:/hadoop-2.8.1/data/wordcount/input/a.txt:0+56

[INFO ] 2019-02-22 20:10:34,808 method:org.apache.hadoop.mapred.MapTask$MapOutputBuffer.setEquator(MapTask.java:1205)

(EQUATOR) 0 kvi 26214396(104857584)

[INFO ] 2019-02-22 20:10:34,808 method:org.apache.hadoop.mapred.MapTask$MapOutputBuffer.init(MapTask.java:998)

mapreduce.task.io.sort.mb: 100

[INFO ] 2019-02-22 20:10:34,809 method:org.apache.hadoop.mapred.MapTask$MapOutputBuffer.init(MapTask.java:999)

soft limit at 83886080

[INFO ] 2019-02-22 20:10:34,809 method:org.apache.hadoop.mapred.MapTask$MapOutputBuffer.init(MapTask.java:1000)

bufstart = 0; bufvoid = 104857600

[INFO ] 2019-02-22 20:10:34,809 method:org.apache.hadoop.mapred.MapTask$MapOutputBuffer.init(MapTask.java:1001)

kvstart = 26214396; length = 6553600

[INFO ] 2019-02-22 20:10:34,817 method:org.apache.hadoop.mapred.MapTask.createSortingCollector(MapTask.java:403)

Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

[INFO ] 2019-02-22 20:10:34,846 method:org.apache.hadoop.mapred.LocalJobRunner$Job.statusUpdate(LocalJobRunner.java:618)

[INFO ] 2019-02-22 20:10:34,847 method:org.apache.hadoop.mapred.MapTask$MapOutputBuffer.flush(MapTask.java:1462)

Starting flush of map output

[INFO ] 2019-02-22 20:10:34,847 method:org.apache.hadoop.mapred.MapTask$MapOutputBuffer.flush(MapTask.java:1484)

Spilling map output

[INFO ] 2019-02-22 20:10:34,847 method:org.apache.hadoop.mapred.MapTask$MapOutputBuffer.flush(MapTask.java:1485)

bufstart = 0; bufend = 168; bufvoid = 104857600

[INFO ] 2019-02-22 20:10:34,847 method:org.apache.hadoop.mapred.MapTask$MapOutputBuffer.flush(MapTask.java:1487)

kvstart = 26214396(104857584); kvend = 26214288(104857152); length = 109/6553600

[INFO ] 2019-02-22 20:10:34,924 method:org.apache.hadoop.mapred.MapTask$MapOutputBuffer.sortAndSpill(MapTask.java:1669)

Finished spill 0

[INFO ] 2019-02-22 20:10:34,946 method:org.apache.hadoop.mapred.Task.done(Task.java:1099)

Task:attempt_local91916295_0001_m_000000_0 is done. And is in the process of committing

[INFO ] 2019-02-22 20:10:34,955 method:org.apache.hadoop.mapred.LocalJobRunner$Job.statusUpdate(LocalJobRunner.java:618)

map

[INFO ] 2019-02-22 20:10:34,955 method:org.apache.hadoop.mapred.Task.sendDone(Task.java:1219)

Task 'attempt_local91916295_0001_m_000000_0' done.

[INFO ] 2019-02-22 20:10:34,955 method:org.apache.hadoop.mapred.LocalJobRunner$Job$MapTaskRunnable.run(LocalJobRunner.java:276)

Finishing task: attempt_local91916295_0001_m_000000_0

[INFO ] 2019-02-22 20:10:34,955 method:org.apache.hadoop.mapred.LocalJobRunner$Job$MapTaskRunnable.run(LocalJobRunner.java:251)

Starting task: attempt_local91916295_0001_m_000001_0

[INFO ] 2019-02-22 20:10:34,956 method:org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter.<init>(FileOutputCommitter.java:123)

File Output Committer Algorithm version is 1

[INFO ] 2019-02-22 20:10:34,956 method:org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter.<init>(FileOutputCommitter.java:138)

FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

[INFO ] 2019-02-22 20:10:34,957 method:org.apache.hadoop.yarn.util.ProcfsBasedProcessTree.isAvailable(ProcfsBasedProcessTree.java:168)

ProcfsBasedProcessTree currently is supported only on Linux.

[INFO ] 2019-02-22 20:10:35,020 method:org.apache.hadoop.mapred.Task.initialize(Task.java:619)

Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@6a2a0768

[INFO ] 2019-02-22 20:10:35,024 method:org.apache.hadoop.mapred.MapTask.runNewMapper(MapTask.java:756)

Processing split: file:/F:/hadoop-2.8.1/data/wordcount/input/b.txt:0+56

[INFO ] 2019-02-22 20:10:35,091 method:org.apache.hadoop.mapred.MapTask$MapOutputBuffer.setEquator(MapTask.java:1205)

(EQUATOR) 0 kvi 26214396(104857584)

[INFO ] 2019-02-22 20:10:35,092 method:org.apache.hadoop.mapred.MapTask$MapOutputBuffer.init(MapTask.java:998)

mapreduce.task.io.sort.mb: 100

[INFO ] 2019-02-22 20:10:35,092 method:org.apache.hadoop.mapred.MapTask$MapOutputBuffer.init(MapTask.java:999)

soft limit at 83886080

[INFO ] 2019-02-22 20:10:35,092 method:org.apache.hadoop.mapred.MapTask$MapOutputBuffer.init(MapTask.java:1000)

bufstart = 0; bufvoid = 104857600

[INFO ] 2019-02-22 20:10:35,092 method:org.apache.hadoop.mapred.MapTask$MapOutputBuffer.init(MapTask.java:1001)

kvstart = 26214396; length = 6553600

[INFO ] 2019-02-22 20:10:35,093 method:org.apache.hadoop.mapred.MapTask.createSortingCollector(MapTask.java:403)

Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

[INFO ] 2019-02-22 20:10:35,096 method:org.apache.hadoop.mapred.LocalJobRunner$Job.statusUpdate(LocalJobRunner.java:618)

[INFO ] 2019-02-22 20:10:35,097 method:org.apache.hadoop.mapred.MapTask$MapOutputBuffer.flush(MapTask.java:1462)

Starting flush of map output

[INFO ] 2019-02-22 20:10:35,097 method:org.apache.hadoop.mapred.MapTask$MapOutputBuffer.flush(MapTask.java:1484)

Spilling map output

[INFO ] 2019-02-22 20:10:35,097 method:org.apache.hadoop.mapred.MapTask$MapOutputBuffer.flush(MapTask.java:1485)

bufstart = 0; bufend = 168; bufvoid = 104857600

[INFO ] 2019-02-22 20:10:35,097 method:org.apache.hadoop.mapred.MapTask$MapOutputBuffer.flush(MapTask.java:1487)

kvstart = 26214396(104857584); kvend = 26214288(104857152); length = 109/6553600

[INFO ] 2019-02-22 20:10:35,125 method:org.apache.hadoop.mapred.MapTask$MapOutputBuffer.sortAndSpill(MapTask.java:1669)

Finished spill 0

[INFO ] 2019-02-22 20:10:35,131 method:org.apache.hadoop.mapred.Task.done(Task.java:1099)

Task:attempt_local91916295_0001_m_000001_0 is done. And is in the process of committing

[INFO ] 2019-02-22 20:10:35,135 method:org.apache.hadoop.mapred.LocalJobRunner$Job.statusUpdate(LocalJobRunner.java:618)

map

[INFO ] 2019-02-22 20:10:35,135 method:org.apache.hadoop.mapred.Task.sendDone(Task.java:1219)

Task 'attempt_local91916295_0001_m_000001_0' done.

[INFO ] 2019-02-22 20:10:35,135 method:org.apache.hadoop.mapred.LocalJobRunner$Job$MapTaskRunnable.run(LocalJobRunner.java:276)

Finishing task: attempt_local91916295_0001_m_000001_0

[INFO ] 2019-02-22 20:10:35,135 method:org.apache.hadoop.mapred.LocalJobRunner$Job$MapTaskRunnable.run(LocalJobRunner.java:251)

Starting task: attempt_local91916295_0001_m_000002_0

[INFO ] 2019-02-22 20:10:35,136 method:org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter.<init>(FileOutputCommitter.java:123)

File Output Committer Algorithm version is 1

[INFO ] 2019-02-22 20:10:35,137 method:org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter.<init>(FileOutputCommitter.java:138)

FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

[INFO ] 2019-02-22 20:10:35,137 method:org.apache.hadoop.yarn.util.ProcfsBasedProcessTree.isAvailable(ProcfsBasedProcessTree.java:168)

ProcfsBasedProcessTree currently is supported only on Linux.

[INFO ] 2019-02-22 20:10:35,218 method:org.apache.hadoop.mapred.Task.initialize(Task.java:619)

Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@510907be

[INFO ] 2019-02-22 20:10:35,221 method:org.apache.hadoop.mapred.MapTask.runNewMapper(MapTask.java:756)

Processing split: file:/F:/hadoop-2.8.1/data/wordcount/input/c.txt:0+56

[INFO ] 2019-02-22 20:10:35,283 method:org.apache.hadoop.mapred.MapTask$MapOutputBuffer.setEquator(MapTask.java:1205)

(EQUATOR) 0 kvi 26214396(104857584)

[INFO ] 2019-02-22 20:10:35,283 method:org.apache.hadoop.mapred.MapTask$MapOutputBuffer.init(MapTask.java:998)

mapreduce.task.io.sort.mb: 100

[INFO ] 2019-02-22 20:10:35,283 method:org.apache.hadoop.mapred.MapTask$MapOutputBuffer.init(MapTask.java:999)

soft limit at 83886080

[INFO ] 2019-02-22 20:10:35,283 method:org.apache.hadoop.mapred.MapTask$MapOutputBuffer.init(MapTask.java:1000)

bufstart = 0; bufvoid = 104857600

[INFO ] 2019-02-22 20:10:35,283 method:org.apache.hadoop.mapred.MapTask$MapOutputBuffer.init(MapTask.java:1001)

kvstart = 26214396; length = 6553600

[INFO ] 2019-02-22 20:10:35,284 method:org.apache.hadoop.mapred.MapTask.createSortingCollector(MapTask.java:403)

Map output collector class = org.apache.hadoop.mapred.MapTask$MapOutputBuffer

[INFO ] 2019-02-22 20:10:35,287 method:org.apache.hadoop.mapred.LocalJobRunner$Job.statusUpdate(LocalJobRunner.java:618)

[INFO ] 2019-02-22 20:10:35,287 method:org.apache.hadoop.mapred.MapTask$MapOutputBuffer.flush(MapTask.java:1462)

Starting flush of map output

[INFO ] 2019-02-22 20:10:35,288 method:org.apache.hadoop.mapred.MapTask$MapOutputBuffer.flush(MapTask.java:1484)

Spilling map output

[INFO ] 2019-02-22 20:10:35,288 method:org.apache.hadoop.mapred.MapTask$MapOutputBuffer.flush(MapTask.java:1485)

bufstart = 0; bufend = 168; bufvoid = 104857600

[INFO ] 2019-02-22 20:10:35,288 method:org.apache.hadoop.mapred.MapTask$MapOutputBuffer.flush(MapTask.java:1487)

kvstart = 26214396(104857584); kvend = 26214288(104857152); length = 109/6553600

[INFO ] 2019-02-22 20:10:35,301 method:org.apache.hadoop.mapred.MapTask$MapOutputBuffer.sortAndSpill(MapTask.java:1669)

Finished spill 0

[INFO ] 2019-02-22 20:10:35,307 method:org.apache.hadoop.mapred.Task.done(Task.java:1099)

Task:attempt_local91916295_0001_m_000002_0 is done. And is in the process of committing

[INFO ] 2019-02-22 20:10:35,309 method:org.apache.hadoop.mapred.LocalJobRunner$Job.statusUpdate(LocalJobRunner.java:618)

map

[INFO ] 2019-02-22 20:10:35,310 method:org.apache.hadoop.mapred.Task.sendDone(Task.java:1219)

Task 'attempt_local91916295_0001_m_000002_0' done.

[INFO ] 2019-02-22 20:10:35,310 method:org.apache.hadoop.mapred.LocalJobRunner$Job$MapTaskRunnable.run(LocalJobRunner.java:276)

Finishing task: attempt_local91916295_0001_m_000002_0

[INFO ] 2019-02-22 20:10:35,310 method:org.apache.hadoop.mapred.LocalJobRunner$Job.runTasks(LocalJobRunner.java:483)

map task executor complete.

[INFO ] 2019-02-22 20:10:35,338 method:org.apache.hadoop.mapred.LocalJobRunner$Job.runTasks(LocalJobRunner.java:475)

Waiting for reduce tasks

[INFO ] 2019-02-22 20:10:35,338 method:org.apache.hadoop.mapred.LocalJobRunner$Job$ReduceTaskRunnable.run(LocalJobRunner.java:329)

Starting task: attempt_local91916295_0001_r_000000_0

[INFO ] 2019-02-22 20:10:35,345 method:org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter.<init>(FileOutputCommitter.java:123)

File Output Committer Algorithm version is 1

[INFO ] 2019-02-22 20:10:35,345 method:org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter.<init>(FileOutputCommitter.java:138)

FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

[INFO ] 2019-02-22 20:10:35,346 method:org.apache.hadoop.yarn.util.ProcfsBasedProcessTree.isAvailable(ProcfsBasedProcessTree.java:168)

ProcfsBasedProcessTree currently is supported only on Linux.

[INFO ] 2019-02-22 20:10:35,407 method:org.apache.hadoop.mapred.Task.initialize(Task.java:619)

Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@4c57e581

[INFO ] 2019-02-22 20:10:35,426 method:org.apache.hadoop.mapred.ReduceTask.run(ReduceTask.java:362)

Using ShuffleConsumerPlugin: org.apache.hadoop.mapreduce.task.reduce.Shuffle@152baa1

[INFO ] 2019-02-22 20:10:35,440 method:org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl.<init>(MergeManagerImpl.java:206)

MergerManager: memoryLimit=1323407744, maxSingleShuffleLimit=330851936, mergeThreshold=873449152, ioSortFactor=10, memToMemMergeOutputsThreshold=10

[INFO ] 2019-02-22 20:10:35,442 method:org.apache.hadoop.mapreduce.task.reduce.EventFetcher.run(EventFetcher.java:61)

attempt_local91916295_0001_r_000000_0 Thread started: EventFetcher for fetching Map Completion Events

[INFO ] 2019-02-22 20:10:35,495 method:org.apache.hadoop.mapreduce.task.reduce.LocalFetcher.copyMapOutput(LocalFetcher.java:145)

localfetcher#1 about to shuffle output of map attempt_local91916295_0001_m_000001_0 decomp: 34 len: 38 to MEMORY

[INFO ] 2019-02-22 20:10:35,504 method:org.apache.hadoop.mapreduce.Job.monitorAndPrintJob(Job.java:1411)

Job job_local91916295_0001 running in uber mode : false

[INFO ] 2019-02-22 20:10:35,505 method:org.apache.hadoop.mapreduce.Job.monitorAndPrintJob(Job.java:1418)

map 100% reduce 0%

[INFO ] 2019-02-22 20:10:35,511 method:org.apache.hadoop.mapreduce.task.reduce.InMemoryMapOutput.doShuffle(InMemoryMapOutput.java:93)

Read 34 bytes from map-output for attempt_local91916295_0001_m_000001_0

[INFO ] 2019-02-22 20:10:35,512 method:org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl.closeInMemoryFile(MergeManagerImpl.java:321)

closeInMemoryFile -> map-output of size: 34, inMemoryMapOutputs.size() -> 1, commitMemory -> 0, usedMemory ->34

[INFO ] 2019-02-22 20:10:35,517 method:org.apache.hadoop.mapreduce.task.reduce.LocalFetcher.copyMapOutput(LocalFetcher.java:145)

localfetcher#1 about to shuffle output of map attempt_local91916295_0001_m_000000_0 decomp: 34 len: 38 to MEMORY

[INFO ] 2019-02-22 20:10:35,518 method:org.apache.hadoop.mapreduce.task.reduce.InMemoryMapOutput.doShuffle(InMemoryMapOutput.java:93)

Read 34 bytes from map-output for attempt_local91916295_0001_m_000000_0

[INFO ] 2019-02-22 20:10:35,518 method:org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl.closeInMemoryFile(MergeManagerImpl.java:321)

closeInMemoryFile -> map-output of size: 34, inMemoryMapOutputs.size() -> 2, commitMemory -> 34, usedMemory ->68

[INFO ] 2019-02-22 20:10:35,523 method:org.apache.hadoop.mapreduce.task.reduce.LocalFetcher.copyMapOutput(LocalFetcher.java:145)

localfetcher#1 about to shuffle output of map attempt_local91916295_0001_m_000002_0 decomp: 34 len: 38 to MEMORY

[INFO ] 2019-02-22 20:10:35,524 method:org.apache.hadoop.mapreduce.task.reduce.InMemoryMapOutput.doShuffle(InMemoryMapOutput.java:93)

Read 34 bytes from map-output for attempt_local91916295_0001_m_000002_0

[INFO ] 2019-02-22 20:10:35,524 method:org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl.closeInMemoryFile(MergeManagerImpl.java:321)

closeInMemoryFile -> map-output of size: 34, inMemoryMapOutputs.size() -> 3, commitMemory -> 68, usedMemory ->102

[INFO ] 2019-02-22 20:10:35,524 method:org.apache.hadoop.mapreduce.task.reduce.EventFetcher.run(EventFetcher.java:76)

EventFetcher is interrupted.. Returning

[INFO ] 2019-02-22 20:10:35,525 method:org.apache.hadoop.mapred.LocalJobRunner$Job.statusUpdate(LocalJobRunner.java:618)

3 / 3 copied.

[INFO ] 2019-02-22 20:10:35,525 method:org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl.finalMerge(MergeManagerImpl.java:693)

finalMerge called with 3 in-memory map-outputs and 0 on-disk map-outputs

[INFO ] 2019-02-22 20:10:35,887 method:org.apache.hadoop.mapred.Merger$MergeQueue.merge(Merger.java:606)

Merging 3 sorted segments

[INFO ] 2019-02-22 20:10:35,887 method:org.apache.hadoop.mapred.Merger$MergeQueue.merge(Merger.java:705)

Down to the last merge-pass, with 3 segments left of total size: 90 bytes

[INFO ] 2019-02-22 20:10:35,889 method:org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl.finalMerge(MergeManagerImpl.java:760)

Merged 3 segments, 102 bytes to disk to satisfy reduce memory limit

[INFO ] 2019-02-22 20:10:35,890 method:org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl.finalMerge(MergeManagerImpl.java:790)

Merging 1 files, 102 bytes from disk

[INFO ] 2019-02-22 20:10:35,890 method:org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl.finalMerge(MergeManagerImpl.java:805)

Merging 0 segments, 0 bytes from memory into reduce

[INFO ] 2019-02-22 20:10:35,891 method:org.apache.hadoop.mapred.Merger$MergeQueue.merge(Merger.java:606)

Merging 1 sorted segments

[INFO ] 2019-02-22 20:10:35,892 method:org.apache.hadoop.mapred.Merger$MergeQueue.merge(Merger.java:705)

Down to the last merge-pass, with 1 segments left of total size: 94 bytes

[INFO ] 2019-02-22 20:10:35,892 method:org.apache.hadoop.mapred.LocalJobRunner$Job.statusUpdate(LocalJobRunner.java:618)

3 / 3 copied.

[INFO ] 2019-02-22 20:10:36,022 method:org.apache.hadoop.conf.Configuration.warnOnceIfDeprecated(Configuration.java:1181)

mapred.skip.on is deprecated. Instead, use mapreduce.job.skiprecords

[INFO ] 2019-02-22 20:10:36,170 method:org.apache.hadoop.mapred.Task.done(Task.java:1099)

Task:attempt_local91916295_0001_r_000000_0 is done. And is in the process of committing

[INFO ] 2019-02-22 20:10:36,171 method:org.apache.hadoop.mapred.LocalJobRunner$Job.statusUpdate(LocalJobRunner.java:618)

3 / 3 copied.

[INFO ] 2019-02-22 20:10:36,171 method:org.apache.hadoop.mapred.Task.commit(Task.java:1260)

Task attempt_local91916295_0001_r_000000_0 is allowed to commit now

[INFO ] 2019-02-22 20:10:36,174 method:org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter.commitTask(FileOutputCommitter.java:582)

Saved output of task 'attempt_local91916295_0001_r_000000_0' to file:/F:/hadoop-2.8.1/data/wordcount/output2/_temporary/0/task_local91916295_0001_r_000000

[INFO ] 2019-02-22 20:10:36,175 method:org.apache.hadoop.mapred.LocalJobRunner$Job.statusUpdate(LocalJobRunner.java:618)

reduce > reduce

[INFO ] 2019-02-22 20:10:36,175 method:org.apache.hadoop.mapred.Task.sendDone(Task.java:1219)

Task 'attempt_local91916295_0001_r_000000_0' done.

[INFO ] 2019-02-22 20:10:36,176 method:org.apache.hadoop.mapred.LocalJobRunner$Job$ReduceTaskRunnable.run(LocalJobRunner.java:352)

Finishing task: attempt_local91916295_0001_r_000000_0

[INFO ] 2019-02-22 20:10:36,176 method:org.apache.hadoop.mapred.LocalJobRunner$Job$ReduceTaskRunnable.run(LocalJobRunner.java:329)

Starting task: attempt_local91916295_0001_r_000001_0

[INFO ] 2019-02-22 20:10:36,177 method:org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter.<init>(FileOutputCommitter.java:123)

File Output Committer Algorithm version is 1

[INFO ] 2019-02-22 20:10:36,177 method:org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter.<init>(FileOutputCommitter.java:138)

FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

[INFO ] 2019-02-22 20:10:36,177 method:org.apache.hadoop.yarn.util.ProcfsBasedProcessTree.isAvailable(ProcfsBasedProcessTree.java:168)

ProcfsBasedProcessTree currently is supported only on Linux.

[INFO ] 2019-02-22 20:10:36,249 method:org.apache.hadoop.mapred.Task.initialize(Task.java:619)

Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@5ba8acc4

[INFO ] 2019-02-22 20:10:36,250 method:org.apache.hadoop.mapred.ReduceTask.run(ReduceTask.java:362)

Using ShuffleConsumerPlugin: org.apache.hadoop.mapreduce.task.reduce.Shuffle@2e910e30

[INFO ] 2019-02-22 20:10:36,251 method:org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl.<init>(MergeManagerImpl.java:206)

MergerManager: memoryLimit=1323407744, maxSingleShuffleLimit=330851936, mergeThreshold=873449152, ioSortFactor=10, memToMemMergeOutputsThreshold=10

[INFO ] 2019-02-22 20:10:36,251 method:org.apache.hadoop.mapreduce.task.reduce.EventFetcher.run(EventFetcher.java:61)

attempt_local91916295_0001_r_000001_0 Thread started: EventFetcher for fetching Map Completion Events

[INFO ] 2019-02-22 20:10:36,259 method:org.apache.hadoop.mapreduce.task.reduce.LocalFetcher.copyMapOutput(LocalFetcher.java:145)

localfetcher#2 about to shuffle output of map attempt_local91916295_0001_m_000001_0 decomp: 58 len: 62 to MEMORY

[INFO ] 2019-02-22 20:10:36,261 method:org.apache.hadoop.mapreduce.task.reduce.InMemoryMapOutput.doShuffle(InMemoryMapOutput.java:93)

Read 58 bytes from map-output for attempt_local91916295_0001_m_000001_0

[INFO ] 2019-02-22 20:10:36,261 method:org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl.closeInMemoryFile(MergeManagerImpl.java:321)

closeInMemoryFile -> map-output of size: 58, inMemoryMapOutputs.size() -> 1, commitMemory -> 0, usedMemory ->58

[INFO ] 2019-02-22 20:10:36,266 method:org.apache.hadoop.mapreduce.task.reduce.LocalFetcher.copyMapOutput(LocalFetcher.java:145)

localfetcher#2 about to shuffle output of map attempt_local91916295_0001_m_000000_0 decomp: 58 len: 62 to MEMORY

[INFO ] 2019-02-22 20:10:36,267 method:org.apache.hadoop.mapreduce.task.reduce.InMemoryMapOutput.doShuffle(InMemoryMapOutput.java:93)

Read 58 bytes from map-output for attempt_local91916295_0001_m_000000_0

[INFO ] 2019-02-22 20:10:36,267 method:org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl.closeInMemoryFile(MergeManagerImpl.java:321)

closeInMemoryFile -> map-output of size: 58, inMemoryMapOutputs.size() -> 2, commitMemory -> 58, usedMemory ->116

[INFO ] 2019-02-22 20:10:36,273 method:org.apache.hadoop.mapreduce.task.reduce.LocalFetcher.copyMapOutput(LocalFetcher.java:145)

localfetcher#2 about to shuffle output of map attempt_local91916295_0001_m_000002_0 decomp: 58 len: 62 to MEMORY

[INFO ] 2019-02-22 20:10:36,274 method:org.apache.hadoop.mapreduce.task.reduce.InMemoryMapOutput.doShuffle(InMemoryMapOutput.java:93)

Read 58 bytes from map-output for attempt_local91916295_0001_m_000002_0

[INFO ] 2019-02-22 20:10:36,274 method:org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl.closeInMemoryFile(MergeManagerImpl.java:321)

closeInMemoryFile -> map-output of size: 58, inMemoryMapOutputs.size() -> 3, commitMemory -> 116, usedMemory ->174

[INFO ] 2019-02-22 20:10:36,274 method:org.apache.hadoop.mapreduce.task.reduce.EventFetcher.run(EventFetcher.java:76)

EventFetcher is interrupted.. Returning

[INFO ] 2019-02-22 20:10:36,275 method:org.apache.hadoop.mapred.LocalJobRunner$Job.statusUpdate(LocalJobRunner.java:618)

3 / 3 copied.

[INFO ] 2019-02-22 20:10:36,275 method:org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl.finalMerge(MergeManagerImpl.java:693)

finalMerge called with 3 in-memory map-outputs and 0 on-disk map-outputs

[INFO ] 2019-02-22 20:10:36,510 method:org.apache.hadoop.mapreduce.Job.monitorAndPrintJob(Job.java:1418)

map 100% reduce 33%

[INFO ] 2019-02-22 20:10:36,514 method:org.apache.hadoop.mapred.Merger$MergeQueue.merge(Merger.java:606)

Merging 3 sorted segments

[INFO ] 2019-02-22 20:10:36,515 method:org.apache.hadoop.mapred.Merger$MergeQueue.merge(Merger.java:705)

Down to the last merge-pass, with 3 segments left of total size: 162 bytes

[INFO ] 2019-02-22 20:10:36,516 method:org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl.finalMerge(MergeManagerImpl.java:760)

Merged 3 segments, 174 bytes to disk to satisfy reduce memory limit

[INFO ] 2019-02-22 20:10:36,517 method:org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl.finalMerge(MergeManagerImpl.java:790)

Merging 1 files, 174 bytes from disk

[INFO ] 2019-02-22 20:10:36,518 method:org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl.finalMerge(MergeManagerImpl.java:805)

Merging 0 segments, 0 bytes from memory into reduce

[INFO ] 2019-02-22 20:10:36,518 method:org.apache.hadoop.mapred.Merger$MergeQueue.merge(Merger.java:606)

Merging 1 sorted segments

[INFO ] 2019-02-22 20:10:36,518 method:org.apache.hadoop.mapred.Merger$MergeQueue.merge(Merger.java:705)

Down to the last merge-pass, with 1 segments left of total size: 166 bytes

[INFO ] 2019-02-22 20:10:36,519 method:org.apache.hadoop.mapred.LocalJobRunner$Job.statusUpdate(LocalJobRunner.java:618)

3 / 3 copied.

[INFO ] 2019-02-22 20:10:36,524 method:org.apache.hadoop.mapred.Task.done(Task.java:1099)

Task:attempt_local91916295_0001_r_000001_0 is done. And is in the process of committing

[INFO ] 2019-02-22 20:10:36,525 method:org.apache.hadoop.mapred.LocalJobRunner$Job.statusUpdate(LocalJobRunner.java:618)

3 / 3 copied.

[INFO ] 2019-02-22 20:10:36,525 method:org.apache.hadoop.mapred.Task.commit(Task.java:1260)

Task attempt_local91916295_0001_r_000001_0 is allowed to commit now

[INFO ] 2019-02-22 20:10:36,527 method:org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter.commitTask(FileOutputCommitter.java:582)

Saved output of task 'attempt_local91916295_0001_r_000001_0' to file:/F:/hadoop-2.8.1/data/wordcount/output2/_temporary/0/task_local91916295_0001_r_000001

[INFO ] 2019-02-22 20:10:36,528 method:org.apache.hadoop.mapred.LocalJobRunner$Job.statusUpdate(LocalJobRunner.java:618)

reduce > reduce

[INFO ] 2019-02-22 20:10:36,528 method:org.apache.hadoop.mapred.Task.sendDone(Task.java:1219)

Task 'attempt_local91916295_0001_r_000001_0' done.

[INFO ] 2019-02-22 20:10:36,528 method:org.apache.hadoop.mapred.LocalJobRunner$Job$ReduceTaskRunnable.run(LocalJobRunner.java:352)

Finishing task: attempt_local91916295_0001_r_000001_0

[INFO ] 2019-02-22 20:10:36,528 method:org.apache.hadoop.mapred.LocalJobRunner$Job$ReduceTaskRunnable.run(LocalJobRunner.java:329)

Starting task: attempt_local91916295_0001_r_000002_0

[INFO ] 2019-02-22 20:10:36,528 method:org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter.<init>(FileOutputCommitter.java:123)

File Output Committer Algorithm version is 1

[INFO ] 2019-02-22 20:10:36,529 method:org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter.<init>(FileOutputCommitter.java:138)

FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

[INFO ] 2019-02-22 20:10:36,529 method:org.apache.hadoop.yarn.util.ProcfsBasedProcessTree.isAvailable(ProcfsBasedProcessTree.java:168)

ProcfsBasedProcessTree currently is supported only on Linux.

[INFO ] 2019-02-22 20:10:36,587 method:org.apache.hadoop.mapred.Task.initialize(Task.java:619)

Using ResourceCalculatorProcessTree : org.apache.hadoop.yarn.util.WindowsBasedProcessTree@4698f8ef

[INFO ] 2019-02-22 20:10:36,588 method:org.apache.hadoop.mapred.ReduceTask.run(ReduceTask.java:362)

Using ShuffleConsumerPlugin: org.apache.hadoop.mapreduce.task.reduce.Shuffle@5848b274

[INFO ] 2019-02-22 20:10:36,588 method:org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl.<init>(MergeManagerImpl.java:206)

MergerManager: memoryLimit=1323407744, maxSingleShuffleLimit=330851936, mergeThreshold=873449152, ioSortFactor=10, memToMemMergeOutputsThreshold=10

[INFO ] 2019-02-22 20:10:36,589 method:org.apache.hadoop.mapreduce.task.reduce.EventFetcher.run(EventFetcher.java:61)

attempt_local91916295_0001_r_000002_0 Thread started: EventFetcher for fetching Map Completion Events

[INFO ] 2019-02-22 20:10:36,594 method:org.apache.hadoop.mapreduce.task.reduce.LocalFetcher.copyMapOutput(LocalFetcher.java:145)

localfetcher#3 about to shuffle output of map attempt_local91916295_0001_m_000001_0 decomp: 138 len: 142 to MEMORY

[INFO ] 2019-02-22 20:10:36,595 method:org.apache.hadoop.mapreduce.task.reduce.InMemoryMapOutput.doShuffle(InMemoryMapOutput.java:93)

Read 138 bytes from map-output for attempt_local91916295_0001_m_000001_0

[INFO ] 2019-02-22 20:10:36,595 method:org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl.closeInMemoryFile(MergeManagerImpl.java:321)

closeInMemoryFile -> map-output of size: 138, inMemoryMapOutputs.size() -> 1, commitMemory -> 0, usedMemory ->138

[INFO ] 2019-02-22 20:10:36,599 method:org.apache.hadoop.mapreduce.task.reduce.LocalFetcher.copyMapOutput(LocalFetcher.java:145)

localfetcher#3 about to shuffle output of map attempt_local91916295_0001_m_000000_0 decomp: 138 len: 142 to MEMORY

[INFO ] 2019-02-22 20:10:36,601 method:org.apache.hadoop.mapreduce.task.reduce.InMemoryMapOutput.doShuffle(InMemoryMapOutput.java:93)

Read 138 bytes from map-output for attempt_local91916295_0001_m_000000_0

[INFO ] 2019-02-22 20:10:36,601 method:org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl.closeInMemoryFile(MergeManagerImpl.java:321)

closeInMemoryFile -> map-output of size: 138, inMemoryMapOutputs.size() -> 2, commitMemory -> 138, usedMemory ->276

[INFO ] 2019-02-22 20:10:36,606 method:org.apache.hadoop.mapreduce.task.reduce.LocalFetcher.copyMapOutput(LocalFetcher.java:145)

localfetcher#3 about to shuffle output of map attempt_local91916295_0001_m_000002_0 decomp: 138 len: 142 to MEMORY

[INFO ] 2019-02-22 20:10:36,607 method:org.apache.hadoop.mapreduce.task.reduce.InMemoryMapOutput.doShuffle(InMemoryMapOutput.java:93)

Read 138 bytes from map-output for attempt_local91916295_0001_m_000002_0

[INFO ] 2019-02-22 20:10:36,607 method:org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl.closeInMemoryFile(MergeManagerImpl.java:321)

closeInMemoryFile -> map-output of size: 138, inMemoryMapOutputs.size() -> 3, commitMemory -> 276, usedMemory ->414

[INFO ] 2019-02-22 20:10:36,607 method:org.apache.hadoop.mapreduce.task.reduce.EventFetcher.run(EventFetcher.java:76)

EventFetcher is interrupted.. Returning

[INFO ] 2019-02-22 20:10:36,608 method:org.apache.hadoop.mapred.LocalJobRunner$Job.statusUpdate(LocalJobRunner.java:618)

3 / 3 copied.

[INFO ] 2019-02-22 20:10:36,608 method:org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl.finalMerge(MergeManagerImpl.java:693)

finalMerge called with 3 in-memory map-outputs and 0 on-disk map-outputs

[INFO ] 2019-02-22 20:10:36,618 method:org.apache.hadoop.mapred.Merger$MergeQueue.merge(Merger.java:606)

Merging 3 sorted segments

[INFO ] 2019-02-22 20:10:36,618 method:org.apache.hadoop.mapred.Merger$MergeQueue.merge(Merger.java:705)

Down to the last merge-pass, with 3 segments left of total size: 402 bytes

[INFO ] 2019-02-22 20:10:36,620 method:org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl.finalMerge(MergeManagerImpl.java:760)

Merged 3 segments, 414 bytes to disk to satisfy reduce memory limit

[INFO ] 2019-02-22 20:10:36,621 method:org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl.finalMerge(MergeManagerImpl.java:790)

Merging 1 files, 414 bytes from disk

[INFO ] 2019-02-22 20:10:36,621 method:org.apache.hadoop.mapreduce.task.reduce.MergeManagerImpl.finalMerge(MergeManagerImpl.java:805)

Merging 0 segments, 0 bytes from memory into reduce

[INFO ] 2019-02-22 20:10:36,621 method:org.apache.hadoop.mapred.Merger$MergeQueue.merge(Merger.java:606)

Merging 1 sorted segments

[INFO ] 2019-02-22 20:10:36,622 method:org.apache.hadoop.mapred.Merger$MergeQueue.merge(Merger.java:705)

Down to the last merge-pass, with 1 segments left of total size: 406 bytes

[INFO ] 2019-02-22 20:10:36,622 method:org.apache.hadoop.mapred.LocalJobRunner$Job.statusUpdate(LocalJobRunner.java:618)

3 / 3 copied.

[INFO ] 2019-02-22 20:10:36,749 method:org.apache.hadoop.mapred.Task.done(Task.java:1099)

Task:attempt_local91916295_0001_r_000002_0 is done. And is in the process of committing

[INFO ] 2019-02-22 20:10:36,752 method:org.apache.hadoop.mapred.LocalJobRunner$Job.statusUpdate(LocalJobRunner.java:618)

3 / 3 copied.

[INFO ] 2019-02-22 20:10:36,752 method:org.apache.hadoop.mapred.Task.commit(Task.java:1260)

Task attempt_local91916295_0001_r_000002_0 is allowed to commit now

[INFO ] 2019-02-22 20:10:36,759 method:org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter.commitTask(FileOutputCommitter.java:582)

Saved output of task 'attempt_local91916295_0001_r_000002_0' to file:/F:/hadoop-2.8.1/data/wordcount/output2/_temporary/0/task_local91916295_0001_r_000002

[INFO ] 2019-02-22 20:10:36,761 method:org.apache.hadoop.mapred.LocalJobRunner$Job.statusUpdate(LocalJobRunner.java:618)

reduce > reduce

[INFO ] 2019-02-22 20:10:36,761 method:org.apache.hadoop.mapred.Task.sendDone(Task.java:1219)

Task 'attempt_local91916295_0001_r_000002_0' done.

[INFO ] 2019-02-22 20:10:36,761 method:org.apache.hadoop.mapred.LocalJobRunner$Job$ReduceTaskRunnable.run(LocalJobRunner.java:352)

Finishing task: attempt_local91916295_0001_r_000002_0

[INFO ] 2019-02-22 20:10:36,761 method:org.apache.hadoop.mapred.LocalJobRunner$Job.runTasks(LocalJobRunner.java:483)

reduce task executor complete.

[INFO ] 2019-02-22 20:10:37,510 method:org.apache.hadoop.mapreduce.Job.monitorAndPrintJob(Job.java:1418)

map 100% reduce 100%

[INFO ] 2019-02-22 20:10:37,511 method:org.apache.hadoop.mapreduce.Job.monitorAndPrintJob(Job.java:1429)

Job job_local91916295_0001 completed successfully

[INFO ] 2019-02-22 20:10:37,869 method:org.apache.hadoop.mapreduce.Job.monitorAndPrintJob(Job.java:1436)

Counters: 30

File System Counters

FILE: Number of bytes read=12918

FILE: Number of bytes written=1907700

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

Map-Reduce Framework

Map input records=12

Map output records=84

Map output bytes=504

Map output materialized bytes=726

Input split bytes=339

Combine input records=0

Combine output records=0

Reduce input groups=12

Reduce shuffle bytes=726

Reduce input records=84

Reduce output records=12

Spilled Records=168

Shuffled Maps =9

Failed Shuffles=0

Merged Map outputs=9

GC time elapsed (ms)=5

Total committed heap usage (bytes)=2354577408

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=168

File Output Format Counters

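Bytes Written=87

All three ways of submitting the job are driven mainly by parameters set on the Configuration object; the other point to watch is how paths are written for the file system in use. For debugging, running the MapReduce program locally is the convenient choice, because each run starts and finishes faster.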
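As a quick sanity check, a few of the counters printed at the end of the local run can be reproduced from other numbers in the same log. The sketch below only does arithmetic on values copied from that log; the class and method names are hypothetical and not part of the original project.

```java
/** Cross-checks a few counters from the local-run log using plain arithmetic. */
class CounterCheckSketch {
    // Values copied from the log output of the local run.
    static final int MAP_TASKS = 3;            // attempts m_000000 .. m_000002
    static final int REDUCE_TASKS = 3;         // attempts r_000000 .. r_000002
    static final int MAP_INPUT_RECORDS = 12;
    static final int MAP_OUTPUT_RECORDS = 84;
    static final int MAP_OUTPUT_BYTES = 504;
    // "len" of the segment every map task handed to reducer 0, 1 and 2.
    static final int[] SEGMENT_LENGTHS = {38, 62, 142};

    /** Every reducer fetches one segment from every map task. */
    static int shuffledMaps() {
        return MAP_TASKS * REDUCE_TASKS;
    }

    /** Sum of all shuffle segment lengths over all map tasks. */
    static int materializedBytes() {
        int perMap = 0;
        for (int len : SEGMENT_LENGTHS) {
            perMap += len;
        }
        return perMap * MAP_TASKS;
    }

    public static void main(String[] args) {
        System.out.println("average words per line = " + MAP_OUTPUT_RECORDS / MAP_INPUT_RECORDS);
        System.out.println("bytes per map record   = " + MAP_OUTPUT_BYTES / MAP_OUTPUT_RECORDS);
        System.out.println("Shuffled Maps          = " + shuffledMaps());
        System.out.println("materialized bytes     = " + materializedBytes());
    }
}
```

The last two values match the `Shuffled Maps =9` and `Map output materialized bytes=726` counters. The 6 bytes per map record would be consistent with a one-character word serialized as Text plus a 4-byte IntWritable, although the log itself does not state this.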

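Analysis of the computation

Original files: a.txt, b.txt, c.txt

The map phase is carried out by MapTask and the reduce phase by ReduceTask. The number of MapTasks is determined by the input files and the split size: for each file, the number of MapTasks it needs is computed from the file size and the split size. Because every file here is very small, each file fits in a single split, so three MapTasks are started.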
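The relationship between file size, split size and MapTask count can be sketched as follows. This is a simplified model of what FileInputFormat does, not its actual code: the 128 MB block size, the 56-byte file sizes and all names are assumptions for illustration, and empty files are not modeled.

```java
/** Simplified model of how many input splits (and thus MapTasks) a set of files needs. */
class SplitCountSketch {
    // Slack factor: a remainder up to 10% larger than the split size stays in one split.
    static final double SPLIT_SLOP = 1.1;

    /** splitSize = max(minSize, min(maxSize, blockSize)). */
    static long computeSplitSize(long blockSize, long minSize, long maxSize) {
        return Math.max(minSize, Math.min(maxSize, blockSize));
    }

    /** Number of splits for one file; splits never span several files. */
    static int splitsForFile(long fileLength, long splitSize) {
        int splits = 0;
        long remaining = fileLength;
        while ((double) remaining / splitSize > SPLIT_SLOP) {
            splits++;
            remaining -= splitSize;
        }
        if (remaining != 0) {
            splits++; // the last (or only) split holds whatever is left
        }
        return splits;
    }

    /** Total number of splits over all input files. */
    static int totalSplits(long[] fileLengths, long splitSize) {
        int total = 0;
        for (long length : fileLengths) {
            total += splitsForFile(length, splitSize);
        }
        return total;
    }

    public static void main(String[] args) {
        long splitSize = computeSplitSize(128L * 1024 * 1024, 1L, Long.MAX_VALUE);
        long[] smallFiles = {56L, 56L, 56L}; // hypothetical sizes for a.txt, b.txt, c.txt
        System.out.println("MapTasks for three small files: " + totalSplits(smallFiles, splitSize));
    }
}
```

With three files far smaller than the split size, the model yields one split per file, which agrees with the three map attempts seen in the log.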

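Take the first line of a.txt as an example: a c v b n h f

After the map method runs, the key-value pairs produced are (a, 1) (c, 1) (v, 1) (b, 1) (n, 1) (h, 1) (f, 1)

Every line produces one key-value pair per word, so the map phase as a whole emits a large number of pairs.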
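The map step for a single line can be imitated without Hadoop. In the minimal sketch below, plain String and Integer stand in for Text and IntWritable, and the returned list stands in for the writes to context; the class name is hypothetical.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

/** Hadoop-free imitation of WordcountMapper.map for one input line. */
class MapStepSketch {
    /** Splits the line on single spaces and emits (word, 1) for every token. */
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> output = new ArrayList<>();
        for (String word : line.split(" ")) {
            output.add(new SimpleEntry<>(word, 1));
        }
        return output;
    }

    public static void main(String[] args) {
        // First line of a.txt from the example.
        for (Map.Entry<String, Integer> pair : map("a c v b n h f")) {
            System.out.println("(" + pair.getKey() + ", " + pair.getValue() + ")");
        }
    }
}
```

Note that the map step does no counting of its own: a word that appears twice on a line is simply emitted twice with the value 1.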

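The number of ReduceTasks is specified by the client, for example job.setNumReduceTasks(2) (the local run logged above used three, r_000000 to r_000002).

The keys produced in the map phase are partitioned by hash, and each partition is assigned to a specific ReduceTask, which then runs the reduce method on it.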
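The hash assignment can be sketched with the formula used by Hadoop's default HashPartitioner, `(hashCode & Integer.MAX_VALUE) % numReduceTasks`. One assumption to keep in mind: the sketch uses String.hashCode as a stand-in for Text.hashCode, so the reducer numbers it prints may differ from those of a real job. The class name is hypothetical.

```java
import java.util.Arrays;
import java.util.List;

/** Imitation of hash partitioning: the same key always lands on the same reducer. */
class HashPartitionSketch {
    /** Masks off the sign bit so the result is never negative, then takes the modulus. */
    static int partition(String key, int numReduceTasks) {
        return (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks;
    }

    public static void main(String[] args) {
        List<String> words = Arrays.asList("a", "c", "v", "b", "n", "h", "f");
        int numReduceTasks = 2; // same value as job.setNumReduceTasks(2)
        for (String word : words) {
            System.out.println(word + " -> reducer " + partition(word, numReduceTasks));
        }
    }
}
```

Because the partition depends only on the key, every (word, 1) pair for a given word reaches the same ReduceTask, no matter which MapTask emitted it.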

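Each ReduceTask handles multiple groups. Within a group all keys are identical, and the corresponding values are exposed as an iterable collection; the reduce method is called once per group.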

int count = 0;
Iterator<IntWritable> iterator = values.iterator();
while (iterator.hasNext()) {
    IntWritable value = iterator.next();
    count += value.get();
}

Summing the values of a group therefore yields the size of that group, which is the number of times the word occurs.
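Grouping and reducing can be imitated end to end in plain Java. This is a single-process sketch, not how Hadoop implements the shuffle: a TreeMap stands in for the sort-and-group step, Integer stands in for IntWritable, and all names are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Single-process imitation of map, group-by-key and reduce for word count. */
class ReduceStepSketch {
    /** Same logic as WordcountReducer.reduce: add up all values of one group. */
    static int reduce(String key, Iterable<Integer> values) {
        int count = 0;
        for (int value : values) {
            count += value;
        }
        return count;
    }

    static Map<String, Integer> wordCount(List<String> lines) {
        // Map phase plus grouping: collect a list of 1s per distinct word, sorted by key.
        Map<String, List<Integer>> groups = new TreeMap<>();
        for (String line : lines) {
            for (String word : line.split(" ")) {
                groups.computeIfAbsent(word, k -> new ArrayList<>()).add(1);
            }
        }
        // Reduce phase: one reduce call per group.
        Map<String, Integer> result = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> group : groups.entrySet()) {
            result.put(group.getKey(), reduce(group.getKey(), group.getValue()));
        }
        return result;
    }

    public static void main(String[] args) {
        // Hypothetical two-line input; the first line is the example line from a.txt.
        System.out.println(wordCount(Arrays.asList("a c v b n h f", "a b")));
    }
}
```

In a real job the groups are spread over several ReduceTasks, and each task writes its own output file, but the per-group logic is the same.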
