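This article analyzes the IDT initialization flow.

Introduction to the IDT

The IDT (Interrupt Descriptor Table) describes the interrupt and exception vectors; each entry in the table corresponds to one vector.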

IDT entry:


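Each entry is 8 bytes, with the following key fields:

Bits 16~31: code segment selector

Bits 0~15 and 48~63: offset within the segment (the selector and the offset together give the address of the interrupt handler)

Type: distinguishes interrupt gates, trap gates, task gates, and so on

DPL: Descriptor Privilege Level, the privilege level required to access the gate

P: present flag, whether the segment is present in memory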
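To make the bit layout above concrete, here is a minimal sketch that packs and unpacks a 32-bit gate by hand. The names gate32, gate_pack, gate_offset and gate_selector are hypothetical and exist only for this illustration; the kernel uses its own pack_gate helper, which is not reproduced here.

```c
#include <stdint.h>

/* Hypothetical mirror of one 8-byte IDT gate, split into two 32-bit words. */
struct gate32 {
    uint32_t lo;   /* bits 0..31:  offset[15:0], selector in bits 16..31 */
    uint32_t hi;   /* bits 32..63: type/DPL/P in bits 40..47, offset[31:16] in bits 48..63 */
};

/* Build a gate from handler address, selector, type (0xE = interrupt gate), DPL and P. */
static struct gate32 gate_pack(uint32_t offset, uint16_t selector,
                               unsigned type, unsigned dpl, unsigned present)
{
    struct gate32 g;

    g.lo = ((uint32_t)selector << 16) | (offset & 0xFFFFu);
    g.hi = (offset & 0xFFFF0000u)
         | ((uint32_t)(present & 1u) << 15)
         | ((uint32_t)(dpl & 3u) << 13)
         | ((uint32_t)(type & 0xFu) << 8);
    return g;
}

/* Reassemble the handler address from the two offset halves. */
static uint32_t gate_offset(struct gate32 g)
{
    return (g.hi & 0xFFFF0000u) | (g.lo & 0xFFFFu);
}

static uint16_t gate_selector(struct gate32 g)
{
    return (uint16_t)(g.lo >> 16);
}
```

With type 0xE, DPL 0 and P set, the flag half-word comes out as 0x8E00, the same constant the early assembly setup writes for an interrupt gate.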

desc_struct:

The kernel data structure that describes an IDT entry is:

typedef struct desc_struct gate_desc;

/*
 * FIXME: Accessing the desc_struct through its fields is more elegant,
 * and should be the one valid thing to do. However, a lot of open code
 * still touches the a and b accessors, and doing this allow us to do it
 * incrementally. We keep the signature as a struct, rather than an union,
 * so we can get rid of it transparently in the future -- glommer
 */
/* 8 byte segment descriptor */
struct desc_struct {
    union {
        struct {
            unsigned int a;
            unsigned int b;
        };
        struct {
            u16 limit0;
            u16 base0;
            unsigned base1: 8, type: 4, s: 1, dpl: 2, p: 1;
            unsigned limit: 4, avl: 1, l: 1, d: 1, g: 1, base2: 8;
        };
    };
} __attribute__((packed));


idt_table:

The kernel represents the IDT itself as:

/* Must be page-aligned because the real IDT is used in a fixmap. */
gate_desc idt_table[NR_VECTORS] __page_aligned_bss;


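Initializing the IDT

setup_once:

The first initialization is done in assembly. It fills the first NUM_EXCEPTION_VECTORS entries with interrupt gates (DPL 0, present, selector __KERNEL_CS) that point at the stubs in early_idt_handler_array, and fills the remaining entries with interrupt gates that point at ignore_int. I will not dig further into the assembly here.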

/*
 * setup_once
 *
 * The setup work we only want to run on the BSP.
 *
 * Warning: %esi is live across this function.
 */
__INIT
setup_once:
    /*
     * Set up a idt with 256 interrupt gates that push zero if there
     * is no error code and then jump to early_idt_handler_common.
     * It doesn't actually load the idt - that needs to be done on
     * each CPU. Interrupts are enabled elsewhere, when we can be
     * relatively sure everything is ok.
     */

    movl $idt_table,%edi
    movl $early_idt_handler_array,%eax
    movl $NUM_EXCEPTION_VECTORS,%ecx
1:
    movl %eax,(%edi)
    movl %eax,4(%edi)
    /* interrupt gate, dpl=0, present */
    movl $(0x8E000000 + __KERNEL_CS),2(%edi)
    addl $EARLY_IDT_HANDLER_SIZE,%eax
    addl $8,%edi
    loop 1b

    movl $256 - NUM_EXCEPTION_VECTORS,%ecx
    movl $ignore_int,%edx
    movl $(__KERNEL_CS << 16),%eax
    movw %dx,%ax        /* selector = 0x0010 = cs */
    movw $0x8E00,%dx    /* interrupt gate - dpl=0, present */
2:
    movl %eax,(%edi)
    movl %edx,4(%edi)
    addl $8,%edi
    loop 2b


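early_trap_init:

The second initialization is done by early_trap_init and trap_init, which fill in the vectors the kernel reserves in the IDT, such as the first 32 vectors and the system call vector (0x80). The reserved vectors and helpers such as set_intr_gate are covered in the appendix.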

/* Set of traps needed for early debugging. */
void __init early_trap_init(void)
{
    set_intr_gate_ist(X86_TRAP_DB, &debug, DEBUG_STACK);
    /* int3 can be called from all */
    set_system_intr_gate_ist(X86_TRAP_BP, &int3, DEBUG_STACK);
#ifdef CONFIG_X86_32
    set_intr_gate(X86_TRAP_PF, page_fault);
#endif
    load_idt(&idt_descr);
}

void __init trap_init(void)
{
    int i;

#ifdef CONFIG_EISA
    void __iomem *p = early_ioremap(0x0FFFD9, 4);

    if (readl(p) == 'E' + ('I'<<8) + ('S'<<16) + ('A'<<24))
        EISA_bus = 1;
    early_iounmap(p, 4);
#endif

    set_intr_gate(X86_TRAP_DE, divide_error);
    set_intr_gate_ist(X86_TRAP_NMI, &nmi, NMI_STACK); // interrupt gate
    /* int4 can be called from all */
    set_system_intr_gate(X86_TRAP_OF, &overflow);
    set_intr_gate(X86_TRAP_BR, bounds);
    set_intr_gate(X86_TRAP_UD, invalid_op);
    set_intr_gate(X86_TRAP_NM, device_not_available);
#ifdef CONFIG_X86_32
    set_task_gate(X86_TRAP_DF, GDT_ENTRY_DOUBLEFAULT_TSS); // task gate
#else
    set_intr_gate_ist(X86_TRAP_DF, &double_fault, DOUBLEFAULT_STACK);
#endif
    set_intr_gate(X86_TRAP_OLD_MF, coprocessor_segment_overrun);
    set_intr_gate(X86_TRAP_TS, invalid_TSS);
    set_intr_gate(X86_TRAP_NP, segment_not_present);
    set_intr_gate(X86_TRAP_SS, stack_segment);
    set_intr_gate(X86_TRAP_GP, general_protection);
    set_intr_gate(X86_TRAP_SPURIOUS, spurious_interrupt_bug);
    set_intr_gate(X86_TRAP_MF, coprocessor_error);
    set_intr_gate(X86_TRAP_AC, alignment_check);
#ifdef CONFIG_X86_MCE
    set_intr_gate_ist(X86_TRAP_MC, &machine_check, MCE_STACK);
#endif
    set_intr_gate(X86_TRAP_XF, simd_coprocessor_error);

    /* Reserve all the builtin and the syscall vector: */
    for (i = 0; i < FIRST_EXTERNAL_VECTOR; i++) // FIRST_EXTERNAL_VECTOR is 32: the first 32 interrupts/exceptions are reserved by the system, and their bits in used_vectors are set to 1
        set_bit(i, used_vectors);

#ifdef CONFIG_IA32_EMULATION
    set_system_intr_gate(IA32_SYSCALL_VECTOR, ia32_syscall);
    set_bit(IA32_SYSCALL_VECTOR, used_vectors);
#endif

#ifdef CONFIG_X86_32
    set_system_trap_gate(SYSCALL_VECTOR, &system_call); // the syscall vector is 0x80, set up as a trap gate
    set_bit(SYSCALL_VECTOR, used_vectors);
#endif

    /*
     * Set the IDT descriptor to a fixed read-only location, so that the
     * "sidt" instruction will not leak the location of the kernel, and
     * to defend the IDT against arbitrary memory write vulnerabilities.
     * It will be reloaded in cpu_init() */
    __set_fixmap(FIX_RO_IDT, __pa_symbol(idt_table), PAGE_KERNEL_RO); // map the IDT through a fixed mapping (fixmap)
    idt_descr.address = fix_to_virt(FIX_RO_IDT); // convert to a virtual address

    /*
     * Should be a barrier for any external CPU state:
     */
    cpu_init(); // calls load_current_idt

    x86_init.irqs.trap_init(); // defaults to x86_init_noop, which does nothing

#ifdef CONFIG_X86_64
    memcpy(&debug_idt_table, &idt_table, IDT_ENTRIES * 16);
    set_nmi_gate(X86_TRAP_DB, &debug);
    set_nmi_gate(X86_TRAP_BP, &int3);
#endif
}


Before init_IRQ is called there is also early_irq_init, but it does no IDT-related initialization, so it is skipped here and analyzed together with irq_desc.

init_IRQ

The third initialization:

void __init init_IRQ(void)
{
    int i;

    /*
     * We probably need a better place for this, but it works for
     * now ...
     */
    x86_add_irq_domains(); // empty on the CHT platform

    /*
     * On cpu 0, Assign IRQ0_VECTOR..IRQ15_VECTOR's to IRQ 0..15.
     * If these IRQ's are handled by legacy interrupt-controllers like PIC,
     * then this configuration will likely be static after the boot. If
     * these IRQ's are handled by more mordern controllers like IO-APIC,
     * then this vector space can be freed and re-used dynamically as the
     * irq's migrate etc.
     */
    for (i = 0; i < legacy_pic->nr_legacy_irqs; i++) // set entries 48-63 of CPU 0's vector_irq array to the legacy irqs
        per_cpu(vector_irq, 0)[IRQ0_VECTOR + i] = i; // every CPU has a per-CPU array named vector_irq that maps vector numbers to irq numbers; it has 256 entries, is indexed by vector number, and each value is an irq number

    x86_init.irqs.intr_init(); // intr_init = native_init_IRQ
}
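The vector-to-irq table filled in by the loop above can be sketched in plain C as follows. This is only an illustration: the single global array stands in for the per-CPU variable, IRQ0_VECTOR = 0x30 is taken from the "48-63" remark in the comment, the value -1 for VECTOR_UNDEFINED is an assumption, and vector_irq_init and vector_to_irq are hypothetical helper names.

```c
#include <assert.h>

#define NR_VECTORS        256
#define IRQ0_VECTOR       0x30   /* assumption: 48, per the "48-63" remark */
#define NR_LEGACY_IRQS    16
#define VECTOR_UNDEFINED  (-1)   /* assumption: marker for "no irq bound" */

/* One such table exists per CPU in the kernel; a single global stands in here. */
static int vector_irq[NR_VECTORS];

/* Mark every vector unbound, then bind the 16 legacy irqs to vectors 48..63. */
static void vector_irq_init(void)
{
    for (int v = 0; v < NR_VECTORS; v++)
        vector_irq[v] = VECTOR_UNDEFINED;
    for (int i = 0; i < NR_LEGACY_IRQS; i++)
        vector_irq[IRQ0_VECTOR + i] = i;
}

/* Look up the irq number bound to a vector, as do_IRQ does later. */
static int vector_to_irq(unsigned vector)
{
    assert(vector < NR_VECTORS);
    return vector_irq[vector];
}
```

Indexing by vector keeps the lookup in the interrupt path to a single array read.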

native_init_IRQ initializes all remaining entries in the IDT.

void __init native_init_IRQ(void)
{
    int i;

    /* Execute any quirks before the call gates are initialised: */
    x86_init.irqs.pre_vector_init(); // calls init_ISA_irqs, which fills in the irq_desc of each legacy irq in the radix tree: desc->irq_data.chip, desc->handle_irq, desc->name, and so on

    apic_intr_init(); // initializes the APIC and some system vectors: fills in the matching IDT entries and marks them in used_vectors

    /*
     * Cover the whole vector space, no vector can escape
     * us. (some of these will be overridden and become
     * 'special' SMP interrupts)
     */
    i = FIRST_EXTERNAL_VECTOR;
    for_each_clear_bit_from(i, used_vectors, NR_VECTORS) { // there are 256 vectors and FIRST_EXTERNAL_VECTOR is 0x20; initialize the remaining IDT entries (some of them are reserved as well, such as the system call vector, and are skipped because their bits are already set)
        /* IA32_SYSCALL_VECTOR could be used in trap_init already. */
        set_intr_gate(i, interrupt[i - FIRST_EXTERNAL_VECTOR]); // set_intr_gate turns every still-uninitialized vector into an interrupt gate whose handler is the matching element of the interrupt array
    } // for how the interrupt array is built, see the appendix

    if (!acpi_ioapic && !of_ioapic)
        setup_irq(2, &irq2);

#ifdef CONFIG_X86_32
    irq_ctx_init(smp_processor_id());
#endif
}
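The interplay between used_vectors and the for_each_clear_bit_from loop can be sketched with a small hand-rolled bitmap. This is a simplified model, not kernel code: set_bit_sketch, test_bit_sketch and count_unclaimed_vectors are hypothetical names, and the sketch reserves only the first 32 vectors plus 0x80, ignoring whatever apic_intr_init additionally claims.

```c
#include <limits.h>

#define NR_VECTORS             256
#define FIRST_EXTERNAL_VECTOR  0x20
#define SYSCALL_VECTOR         0x80
#define BITS_PER_WORD          (sizeof(unsigned long) * CHAR_BIT)

static unsigned long used_vectors[(NR_VECTORS + BITS_PER_WORD - 1) / BITS_PER_WORD];

static void set_bit_sketch(unsigned nr, unsigned long *map)
{
    map[nr / BITS_PER_WORD] |= 1UL << (nr % BITS_PER_WORD);
}

static int test_bit_sketch(unsigned nr, const unsigned long *map)
{
    return (int)((map[nr / BITS_PER_WORD] >> (nr % BITS_PER_WORD)) & 1UL);
}

/*
 * Reserve vectors the way trap_init() does on 32-bit, then count the vectors
 * that the native_init_IRQ() loop would still have to fill.
 */
static unsigned count_unclaimed_vectors(void)
{
    unsigned filled = 0;

    for (unsigned i = 0; i < sizeof(used_vectors) / sizeof(used_vectors[0]); i++)
        used_vectors[i] = 0;
    for (unsigned i = 0; i < FIRST_EXTERNAL_VECTOR; i++)
        set_bit_sketch(i, used_vectors);
    set_bit_sketch(SYSCALL_VECTOR, used_vectors);

    /* Equivalent of for_each_clear_bit_from(i, used_vectors, NR_VECTORS). */
    for (unsigned v = FIRST_EXTERNAL_VECTOR; v < NR_VECTORS; v++)
        if (!test_bit_sketch(v, used_vectors))
            filled++;   /* kernel: set_intr_gate(v, interrupt[v - FIRST_EXTERNAL_VECTOR]) */
    return filled;
}
```

Under these assumptions 223 of the 224 external vectors get a generic stub, and vector 0x80 keeps the gate installed by trap_init.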


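At this point the IDT is fully initialized. When an interrupt arrives, the CPU uses the IDT to find the handler pointer for that vector in the interrupt array; that pointer does not point directly at the ISR of the irq. This is a little convoluted, but it becomes clear after the irq_desc analysis.

Appendix:

In x86, the first 32 interrupts and exceptions are reserved by the system.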

/* Interrupts/Exceptions */
enum {
    X86_TRAP_DE = 0,     /*  0, Divide-by-zero */
    X86_TRAP_DB,         /*  1, Debug */
    X86_TRAP_NMI,        /*  2, Non-maskable Interrupt */
    X86_TRAP_BP,         /*  3, Breakpoint */
    X86_TRAP_OF,         /*  4, Overflow */
    X86_TRAP_BR,         /*  5, Bound Range Exceeded */
    X86_TRAP_UD,         /*  6, Invalid Opcode */
    X86_TRAP_NM,         /*  7, Device Not Available */
    X86_TRAP_DF,         /*  8, Double Fault */
    X86_TRAP_OLD_MF,     /*  9, Coprocessor Segment Overrun */
    X86_TRAP_TS,         /* 10, Invalid TSS */
    X86_TRAP_NP,         /* 11, Segment Not Present */
    X86_TRAP_SS,         /* 12, Stack Segment Fault */
    X86_TRAP_GP,         /* 13, General Protection Fault */
    X86_TRAP_PF,         /* 14, Page Fault */
    X86_TRAP_SPURIOUS,   /* 15, Spurious Interrupt */
    X86_TRAP_MF,         /* 16, x87 Floating-Point Exception */
    X86_TRAP_AC,         /* 17, Alignment Check */
    X86_TRAP_MC,         /* 18, Machine Check */
    X86_TRAP_XF,         /* 19, SIMD Floating-Point Exception */
    X86_TRAP_IRET = 32,  /* 32, IRET Exception */
};

set_intr_gate_ist


Whether for an interrupt or an exception, the interface that is finally called and the overall flow are similar.

static inline void set_intr_gate_ist(int n, void *addr, unsigned ist) // X86_TRAP_DB, &debug, 0
{
    BUG_ON((unsigned)n > 0xFF); // the vector number must not exceed 0xFF
    _set_gate(n, GATE_INTERRUPT, addr, 0, ist, __KERNEL_CS);
}

_set_gate calls pack_gate to assemble a gate descriptor, then calls write_idt_entry to write it into the corresponding slot of the IDT.

static inline void _set_gate(int gate, unsigned type, void *addr, // X86_TRAP_DB, GATE_INTERRUPT, &debug
                             unsigned dpl, unsigned ist, unsigned seg) // 0, 0, __KERNEL_CS: the vector is X86_TRAP_DB, the gate type is GATE_INTERRUPT, DPL 0 means only kernel mode can access it, and __KERNEL_CS is the segment selector
{
    gate_desc s;

    pack_gate(&s, type, (unsigned long)addr, dpl, ist, seg);
    /*
     * does not need to be atomic because it is only done once at
     * setup time
     */
    write_idt_entry(idt_table, gate, &s);
    write_trace_idt_entry(gate, &s);
}


The interrupt array

/*
 * Build the entry stubs and pointer table with some assembler magic.
 * We pack 7 stubs into a single 32-byte chunk, which will fit in a
 * single cache line on all modern x86 implementations.
 */
.section .init.rodata,"a"   // define a section; .init.rodata holds read-only data used during init, and "a" marks the section as allocatable
ENTRY(interrupt)            // the entry point of this data section is named interrupt
.section .entry.text, "ax"  // x marks the section as executable code
    .p2align 5              // align to 32 bytes
    .p2align CONFIG_X86_L1_CACHE_SHIFT
ENTRY(irq_entries_start)    // the entry point of the code section is named irq_entries_start
    RING0_INT_FRAME
vector=FIRST_EXTERNAL_VECTOR    // vectors 0-31 are internal; external vectors start at 0x20
.rept (NR_VECTORS-FIRST_EXTERNAL_VECTOR+6)/7    // (256-32+6) / 7 = 32, so the outer loop runs 32 times
    .balign 32              // align to 32 bytes
  .rept 7                   // the inner loop runs 7 times, 32*7 = 224 iterations in total
    .if vector < NR_VECTORS
      .if vector <> FIRST_EXTERNAL_VECTOR
    CFI_ADJUST_CFA_OFFSET -4
      .endif
1:  pushl_cfi $(~vector+0x80)   /* Note: always in signed byte range */ // push ~vector+0x80
      .if ((vector-FIRST_EXTERNAL_VECTOR)%7) <> 6   // the first six stubs of each chunk jump to label 2; the seventh (vectors 38, 45, 52, 59, 66, 73, 80, ...) needs no jmp because it falls straight through into label 2
    jmp 2f                  // label 2 jumps to common_interrupt
      .endif
      .previous
    .long 1b                // store the address of label 1 (the push plus the jump toward common_interrupt) in the interrupt array
      .section .entry.text, "ax"
vector=vector+1             // increment vector and move on to the next one
    .endif
  .endr
2:  jmp common_interrupt
.endr
END(irq_entries_start)

.previous
END(interrupt)
.previous


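For each interrupt, the code above mainly does three things:

1. Push ~vector+0x80 onto the stack

2. Jump to common_interrupt

3. Store the address of label 1 (which covers the code for steps 1 and 2) in the matching slot of the interrupt array (the memory at the interrupt symbol)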
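The "7 stubs in a 32-byte chunk" arithmetic from the assembly comment can be checked with a short sketch. The byte counts are the standard x86 encodings (push with an 8-bit immediate, short jump, near jump); the helper names stub_chunks and chunk_bytes are hypothetical. In each chunk the first six stubs are a push followed by a short jump to the chunk's shared label, and the seventh is a push that falls through into the shared jmp common_interrupt.

```c
#define NR_VECTORS             256
#define FIRST_EXTERNAL_VECTOR  0x20
#define STUBS_PER_CHUNK        7

enum {
    PUSH_IMM8_BYTES = 2,  /* pushl $imm8: opcode 0x6a + 1-byte immediate */
    JMP_REL8_BYTES  = 2,  /* jmp 2f: short jump, opcode 0xeb + 1-byte displacement */
    JMP_REL32_BYTES = 5   /* jmp common_interrupt: opcode 0xe9 + 4-byte displacement */
};

/* Number of 32-byte chunks emitted by the outer .rept. */
static unsigned stub_chunks(void)
{
    return (NR_VECTORS - FIRST_EXTERNAL_VECTOR + STUBS_PER_CHUNK - 1) / STUBS_PER_CHUNK;
}

/* Bytes of code in one full chunk: six push+jmp stubs, one push, one shared jmp. */
static unsigned chunk_bytes(void)
{
    return (STUBS_PER_CHUNK - 1) * (PUSH_IMM8_BYTES + JMP_REL8_BYTES)
         + PUSH_IMM8_BYTES + JMP_REL32_BYTES;
}
```

This gives 32 chunks of 31 bytes each, which is why the pushed value must stay within a signed byte: a larger immediate would need the 5-byte push encoding and the chunk would no longer fit in 32 bytes.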

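Once the interrupt array is built, memory holds the address of each vector's stub, roughly as follows:

interrupt[0] in the data section points here

pushl $(~0x20+0x80)

jmp 2f (label 2 is jmp common_interrupt)

interrupt[1] in the data section points here

pushl $(~0x21+0x80)

jmp 2f

...

The layout of the code section and the data section after compilation is as follows: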

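Introduction

The irq_desc data structure describes everything associated with one irq, mainly:

irq_data: the irq number, irq chip, irq domain, CPU affinity, and so on

handle_irq: the high-level irq-events handler, that is, the flow handler

action: a linked list of struct irqaction; each element holds an interrupt handler for this irq and related information

depth: the disable depth, counting nested irq_disable() calls

name: the name shown by cat /proc/interrupts

and so on

Each irq has one irq_desc. The kernel manages irq_descs in one of two ways:

1. If CONFIG_SPARSE_IRQ is defined, all irq_descs are managed in a radix tree

2. Otherwise all irq_descs live in a global array, some of whose members are statically initialized, as follows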

struct irq_desc irq_desc[NR_IRQS] __cacheline_aligned_in_smp = {
    [0 ... NR_IRQS-1] = {
        .handle_irq = handle_bad_irq,
        .depth      = 1,
        .lock       = __RAW_SPIN_LOCK_UNLOCKED(irq_desc->lock),
    }
};
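The flat-array scheme can be sketched in portable C as follows. This is a simplified model under stated assumptions: the struct is cut down to three fields, NR_IRQS_SKETCH = 32 is an arbitrary size, bad_count is a counter invented for the sketch, and all names ending in _sketch are hypothetical. The kernel uses the GNU range initializer shown above rather than a loop.

```c
#include <stddef.h>

#define NR_IRQS_SKETCH 32   /* hypothetical size; the kernel uses NR_IRQS */

struct irq_desc_sketch;
typedef void (*flow_handler_t)(unsigned irq, struct irq_desc_sketch *desc);

struct irq_desc_sketch {
    flow_handler_t handle_irq;  /* flow handler */
    unsigned depth;             /* disable depth; 1 means "disabled" */
    unsigned bad_count;         /* hypothetical counter used only by this sketch */
};

/* Default flow handler: just records that an unconfigured irq fired. */
static void handle_bad_irq_sketch(unsigned irq, struct irq_desc_sketch *desc)
{
    (void)irq;
    desc->bad_count++;
}

static struct irq_desc_sketch irq_desc_table[NR_IRQS_SKETCH];

/* Give every descriptor the same defaults as the static initializer. */
static void irq_desc_table_init(void)
{
    for (unsigned i = 0; i < NR_IRQS_SKETCH; i++) {
        irq_desc_table[i].handle_irq = handle_bad_irq_sketch;
        irq_desc_table[i].depth = 1;
        irq_desc_table[i].bad_count = 0;
    }
}

/* Array flavour of irq_to_desc(): a bounds check plus an index. */
static struct irq_desc_sketch *irq_to_desc_sketch(unsigned irq)
{
    return irq < NR_IRQS_SKETCH ? &irq_desc_table[irq] : NULL;
}
```

Every irq starts out disabled (depth 1) and routed to the bad-irq handler until a driver or the platform code installs a real chip and flow handler.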


Initializing irq_descs at boot

1. early_irq_init

1.1 Radix tree form

Only the 16 legacy interrupts get their irq_desc initialized here.

int __init early_irq_init(void)
{
    int i, initcnt, node = first_online_node;
    struct irq_desc *desc;

    init_irq_default_affinity(); // the default affinity is all CPUs; for how to bind an irq to a particular CPU, see http://blog.csdn.net/kingmax26/article/details/5788732

    /* Let arch update nr_irqs and return the nr of preallocated irqs */
    initcnt = arch_probe_nr_irqs(); // the number of preallocated irqs differs per architecture; on x86 it is 16
    printk(KERN_ERR "yin_test NR_IRQS:%d nr_irqs:%d initcnt %d\n",
           NR_IRQS, nr_irqs, initcnt); // debug print modified by the author

    if (WARN_ON(nr_irqs > IRQ_BITMAP_BITS))
        nr_irqs = IRQ_BITMAP_BITS;

    if (WARN_ON(initcnt > IRQ_BITMAP_BITS))
        initcnt = IRQ_BITMAP_BITS;

    if (initcnt > nr_irqs)
        nr_irqs = initcnt;

    for (i = 0; i < initcnt; i++) { // initialize the irq_desc of each of these 16 irqs
        desc = alloc_desc(i, node, NULL); // allocate an irq_desc and initialize some of its members
        set_bit(i, allocated_irqs); // set bit in allocated_irqs
        irq_insert_desc(i, desc); // insert into the radix tree
    }
    return arch_early_irq_init(); // set the chip_data (a void pointer) of these 16 legacy irqs
}


1.2 Global array

struct irq_desc irq_desc[NR_IRQS] __cacheline_aligned_in_smp = {
    [0 ... NR_IRQS-1] = {
        .handle_irq = handle_bad_irq,
        .depth      = 1,
        .lock       = __RAW_SPIN_LOCK_UNLOCKED(irq_desc->lock),
    }
};

int __init early_irq_init(void)
{
    int count, i, node = first_online_node;
    struct irq_desc *desc;

    init_irq_default_affinity();

    printk(KERN_INFO "NR_IRQS:%d\n", NR_IRQS);

    desc = irq_desc;
    count = ARRAY_SIZE(irq_desc);

    for (i = 0; i < count; i++) { // walk the array and initialize each element
        desc[i].kstat_irqs = alloc_percpu(unsigned int);
        alloc_masks(&desc[i], GFP_KERNEL, node);
        raw_spin_lock_init(&desc[i].lock);
        lockdep_set_class(&desc[i].lock, &irq_desc_lock_class);
        desc_set_defaults(i, &desc[i], node, NULL);
    }
    return arch_early_irq_init();
}


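As you can see, this approach is simple and blunt: the array is defined statically and its elements are then initialized one by one.

2. init_IRQ

init_IRQ initializes not only the IDT but also the irq_descs.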

void __init init_IRQ(void)
{
    int i;

    /*
     * We probably need a better place for this, but it works for
     * now ...
     */
    x86_add_irq_domains(); // empty on x86

    // the legacy PIC (8259) is used by default; with an APIC these vectors are later freed and dynamically reused
    /*
     * On cpu 0, Assign IRQ0_VECTOR..IRQ15_VECTOR's to IRQ 0..15.
     * If these IRQ's are handled by legacy interrupt-controllers like PIC,
     * then this configuration will likely be static after the boot. If
     * these IRQ's are handled by more mordern controllers like IO-APIC,
     * then this vector space can be freed and re-used dynamically as the
     * irq's migrate etc.
     */
    for (i = 0; i < legacy_pic->nr_legacy_irqs; i++) // set entries 48-63 of CPU 0's vector_irq array to legacy irqs 0~15
        per_cpu(vector_irq, 0)[IRQ0_VECTOR + i] = i; // every CPU has a per-CPU array named vector_irq that maps vector numbers to irq numbers; it has 256 entries, is indexed by vector number, and each value is an irq number

    x86_init.irqs.intr_init(); // intr_init = native_init_IRQ
}


2.1 native_init_IRQ

void __init native_init_IRQ(void)
{
    int i;

    /* Execute any quirks before the call gates are initialised: */
    x86_init.irqs.pre_vector_init(); // calls init_ISA_irqs

    apic_intr_init();

    /*
     * Cover the whole vector space, no vector can escape
     * us. (some of these will be overridden and become
     * 'special' SMP interrupts)
     */
    i = FIRST_EXTERNAL_VECTOR;
    for_each_clear_bit_from(i, used_vectors, NR_VECTORS) {
        /* IA32_SYSCALL_VECTOR could be used in trap_init already. */
        set_intr_gate(i, interrupt[i - FIRST_EXTERNAL_VECTOR]);
    }

    if (!acpi_ioapic && !of_ioapic)
        setup_irq(2, &irq2);

#ifdef CONFIG_X86_32
    irq_ctx_init(smp_processor_id());
#endif
}


void __init init_ISA_irqs(void)
{
    struct irq_chip *chip = legacy_pic->chip;
    const char *name = chip->name;
    int i;

    pr_err("yin_test, %s, %d, chip->name %s\n",
           __func__, __LINE__, chip->name); // debug print added by the author
#if defined(CONFIG_X86_64) || defined(CONFIG_X86_LOCAL_APIC)
    init_bsp_APIC();
#endif
    legacy_pic->init(0);

    pr_err("yin_test, %s, %d\n",
           __func__, __LINE__); // debug print added by the author
    for (i = 0; i < legacy_pic->nr_legacy_irqs; i++)
        irq_set_chip_and_handler_name(i, chip, handle_level_irq, name); // set the chip, handler and name of the irq_desc; x86 defaults to the legacy 8259 irq chip, although our platform actually uses the APIC. This function is analyzed in detail below.
}


2.2 irq_set_chip_and_handler_name

void
irq_set_chip_and_handler_name(unsigned int irq, struct irq_chip *chip,
                              irq_flow_handler_t handle, const char *name)
{
    irq_set_chip(irq, chip);
    __irq_set_handler(irq, handle, 0, name);
}
EXPORT_SYMBOL_GPL(irq_set_chip_and_handler_name);


/**
 * irq_set_chip - set the irq chip for an irq
 * @irq: irq number
 * @chip: pointer to irq chip description structure
 */
int irq_set_chip(unsigned int irq, struct irq_chip *chip)
{
    unsigned long flags;
    struct irq_desc *desc = irq_get_desc_lock(irq, &flags, 0);

    if (!desc)
        return -EINVAL;

    if (!chip)
        chip = &no_irq_chip;

    desc->irq_data.chip = chip; // set the PIC chip for this irq
    irq_put_desc_unlock(desc, flags);
    /*
     * For !CONFIG_SPARSE_IRQ make the irq show up in
     * allocated_irqs. For the CONFIG_SPARSE_IRQ case, it is
     * already marked, and this call is harmless.
     */
    irq_reserve_irq(irq);
    return 0;
}
EXPORT_SYMBOL(irq_set_chip);


void
__irq_set_handler(unsigned int irq, irq_flow_handler_t handle, int is_chained,
                  const char *name)
{
    unsigned long flags;
    struct irq_desc *desc = irq_get_desc_buslock(irq, &flags, 0); // look up the irq in the table to get its irq_desc

    if (!desc)
        return;

    if (!handle) {
        handle = handle_bad_irq;
    } else {
        if (WARN_ON(desc->irq_data.chip == &no_irq_chip))
            goto out;
    }

    /* Uninstall? */
    if (handle == handle_bad_irq) {
        if (desc->irq_data.chip != &no_irq_chip)
            mask_ack_irq(desc);
        irq_state_set_disabled(desc);
        desc->depth = 1;
    }
    desc->handle_irq = handle; // set handle_irq
    desc->name = name;

    if (handle != handle_bad_irq && is_chained) {
        irq_settings_set_noprobe(desc);
        irq_settings_set_norequest(desc);
        irq_settings_set_nothread(desc);
        irq_settings_set_chained(desc);
        irq_startup(desc, true);
    }
out:
    irq_put_desc_busunlock(desc, flags);
}
EXPORT_SYMBOL_GPL(__irq_set_handler);
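The decision logic of __irq_set_handler (fall back to the bad-irq handler, refuse a real handler when no chip is set, reset depth on uninstall) can be modelled in a few lines. This is a reduced sketch, not the kernel function: locking, masking and the chained case are left out, the has_chip flag stands in for the comparison against no_irq_chip, and every name ending in _sk is hypothetical.

```c
#include <stddef.h>

struct desc_sk;
typedef void (*flow_fn)(unsigned irq, struct desc_sk *d);

struct desc_sk {
    flow_fn handle_irq;
    const char *name;
    unsigned depth;
    int has_chip;     /* stands in for "chip != &no_irq_chip" */
    int disabled;
};

/* Placeholder flow handlers; only their addresses matter in this sketch. */
static void bad_irq_sk(unsigned irq, struct desc_sk *d)   { (void)irq; (void)d; }
static void level_irq_sk(unsigned irq, struct desc_sk *d) { (void)irq; (void)d; }

/* Returns 0 on success, -1 if a real handler is installed without a chip. */
static int set_handler_sk(struct desc_sk *d, flow_fn handle, const char *name)
{
    if (!handle)
        handle = bad_irq_sk;
    else if (!d->has_chip)
        return -1;                 /* kernel: WARN_ON(...) then goto out */

    if (handle == bad_irq_sk) {    /* uninstall path */
        d->disabled = 1;
        d->depth = 1;
    }
    d->handle_irq = handle;
    d->name = name;
    return 0;
}
```

Passing a NULL handler is therefore the way to uninstall: the descriptor goes back to the disabled state with depth 1.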


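The code logic is fairly simple. What interests me more is what desc->handle_irq is for, and how it differs from the handler and thread_fn in irqaction. The next chapter explains.

Appendix:

irq_desc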

/**
 * struct irq_desc - interrupt descriptor
 * @irq_data:           per irq and chip data passed down to chip functions
 * @kstat_irqs:         irq stats per cpu
 * @handle_irq:         highlevel irq-events handler
 * @preflow_handler:    handler called before the flow handler (currently used by sparc)
 * @action:             the irq action chain
 * @status:             status information
 * @core_internal_state__do_not_mess_with_it: core internal status information
 * @depth:              disable-depth, for nested irq_disable() calls
 * @wake_depth:         enable depth, for multiple irq_set_irq_wake() callers
 * @irq_count:          stats field to detect stalled irqs
 * @last_unhandled:     aging timer for unhandled count
 * @irqs_unhandled:     stats field for spurious unhandled interrupts
 * @threads_handled:    stats field for deferred spurious detection of threaded handlers
 * @threads_handled_last: comparator field for deferred spurious detection of theraded handlers
 * @lock:               locking for SMP
 * @affinity_hint:      hint to user space for preferred irq affinity
 * @affinity_notify:    context for notification of affinity changes
 * @pending_mask:       pending rebalanced interrupts
 * @threads_oneshot:    bitfield to handle shared oneshot threads
 * @threads_active:     number of irqaction threads currently running
 * @wait_for_threads:   wait queue for sync_irq to wait for threaded handlers
 * @dir:                /proc/irq/ procfs entry
 * @name:               flow handler name for /proc/interrupts output
 */
struct irq_desc {
    struct irq_data         irq_data;
    unsigned int __percpu   *kstat_irqs;
    irq_flow_handler_t      handle_irq;
#ifdef CONFIG_IRQ_PREFLOW_FASTEOI
    irq_preflow_handler_t   preflow_handler;
#endif
    struct irqaction        *action;        /* IRQ action list */
    unsigned int            status_use_accessors;
    unsigned int            core_internal_state__do_not_mess_with_it;
    unsigned int            depth;          /* nested irq disables */
    unsigned int            wake_depth;     /* nested wake enables */
    unsigned int            irq_count;      /* For detecting broken IRQs */
    unsigned long           last_unhandled; /* Aging timer for unhandled count */
    unsigned int            irqs_unhandled;
    atomic_t                threads_handled;
    int                     threads_handled_last;
    raw_spinlock_t          lock;
    struct cpumask          *percpu_enabled;
#ifdef CONFIG_SMP
    const struct cpumask    *affinity_hint;
    struct irq_affinity_notify *affinity_notify;
#ifdef CONFIG_GENERIC_PENDING_IRQ
    cpumask_var_t           pending_mask;
#endif
#endif
    unsigned long           threads_oneshot;
    atomic_t                threads_active;
    wait_queue_head_t       wait_for_threads;
#ifdef CONFIG_PROC_FS
    struct proc_dir_entry   *dir;
#endif
    int                     parent_irq;
    struct module           *owner;
    const char              *name;
} ____cacheline_internodealigned_in_smp;


irq_data

/**
 * struct irq_data - per irq and irq chip data passed down to chip functions
 * @mask:           precomputed bitmask for accessing the chip registers
 * @irq:            interrupt number
 * @hwirq:          hardware interrupt number, local to the interrupt domain
 * @node:           node index useful for balancing
 * @state_use_accessors: status information for irq chip functions.
 *                  Use accessor functions to deal with it
 * @chip:           low level interrupt hardware access
 * @domain:         Interrupt translation domain; responsible for mapping
 *                  between hwirq number and linux irq number.
 * @handler_data:   per-IRQ data for the irq_chip methods
 * @chip_data:      platform-specific per-chip private data for the chip
 *                  methods, to allow shared chip implementations
 * @msi_desc:       MSI descriptor
 * @affinity:       IRQ affinity on SMP
 *
 * The fields here need to overlay the ones in irq_desc until we
 * cleaned up the direct references and switched everything over to
 * irq_data.
 */
struct irq_data {
    u32                 mask;
    unsigned int        irq;
    unsigned long       hwirq;
    unsigned int        node;
    unsigned int        state_use_accessors;
    struct irq_chip     *chip;
    struct irq_domain   *domain;
    void                *handler_data;
    void                *chip_data;
    struct msi_desc     *msi_desc;
    cpumask_var_t       affinity;
};


irq_chip

/**
 * struct irq_chip - hardware interrupt chip descriptor
 *
 * @name:               name for /proc/interrupts
 * @irq_startup:        start up the interrupt (defaults to ->enable if NULL)
 * @irq_shutdown:       shut down the interrupt (defaults to ->disable if NULL)
 * @irq_enable:         enable the interrupt (defaults to chip->unmask if NULL)
 * @irq_disable:        disable the interrupt
 * @irq_ack:            start of a new interrupt
 * @irq_mask:           mask an interrupt source
 * @irq_mask_ack:       ack and mask an interrupt source
 * @irq_unmask:         unmask an interrupt source
 * @irq_eoi:            end of interrupt
 * @irq_set_affinity:   set the CPU affinity on SMP machines
 * @irq_retrigger:      resend an IRQ to the CPU
 * @irq_set_type:       set the flow type (IRQ_TYPE_LEVEL/etc.) of an IRQ
 * @irq_set_wake:       enable/disable power-management wake-on of an IRQ
 * @irq_bus_lock:       function to lock access to slow bus (i2c) chips
 * @irq_bus_sync_unlock: function to sync and unlock slow bus (i2c) chips
 * @irq_cpu_online:     configure an interrupt source for a secondary CPU
 * @irq_cpu_offline:    un-configure an interrupt source for a secondary CPU
 * @irq_suspend:        function called from core code on suspend once per chip
 * @irq_resume:         function called from core code on resume once per chip
 * @irq_pm_shutdown:    function called from core code on shutdown once per chip
 * @irq_calc_mask:      Optional function to set irq_data.mask for special cases
 * @irq_print_chip:     optional to print special chip info in show_interrupts
 * @flags:              chip specific flags
 */
struct irq_chip {
    const char      *name;
    unsigned int    (*irq_startup)(struct irq_data *data);
    void            (*irq_shutdown)(struct irq_data *data);
    void            (*irq_enable)(struct irq_data *data);
    void            (*irq_disable)(struct irq_data *data);

    void            (*irq_ack)(struct irq_data *data);
    void            (*irq_mask)(struct irq_data *data);
    void            (*irq_mask_ack)(struct irq_data *data);
    void            (*irq_unmask)(struct irq_data *data);
    void            (*irq_eoi)(struct irq_data *data);

    int             (*irq_set_affinity)(struct irq_data *data, const struct cpumask *dest, bool force);
    int             (*irq_retrigger)(struct irq_data *data);
    int             (*irq_set_type)(struct irq_data *data, unsigned int flow_type);
    int             (*irq_set_wake)(struct irq_data *data, unsigned int on);

    void            (*irq_bus_lock)(struct irq_data *data);
    void            (*irq_bus_sync_unlock)(struct irq_data *data);

    void            (*irq_cpu_online)(struct irq_data *data);
    void            (*irq_cpu_offline)(struct irq_data *data);

    void            (*irq_suspend)(struct irq_data *data);
    void            (*irq_resume)(struct irq_data *data);
    void            (*irq_pm_shutdown)(struct irq_data *data);

    void            (*irq_calc_mask)(struct irq_data *data);

    void            (*irq_print_chip)(struct irq_data *data, struct seq_file *p);

    unsigned long   flags;
};

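x86 kernel interrupt analysis, part 3: the interrupt handling flow

The CPU detects the interrupt

At each instruction boundary, before executing the next instruction, the CPU checks whether an interrupt has arrived, that is, whether the interrupt controller has raised an interrupt signal.

Looking up the IDT

The CPU uses the interrupt vector to read the corresponding descriptor from the IDT, then uses the segment selector and offset in it to determine the address of the interrupt service routine; see appendix 2.

The interrupt array

In part one we saw that the handler addresses placed in the IDT come from the interrupt array, so step 2 jumps to the corresponding entry of the interrupt array. The contents of each entry were also analyzed there.

push vector num and jmp to common_interrupt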

/*
 * the CPU automatically disables interrupts when executing an IRQ vector,
 * so IRQ-flags tracing has to follow that:
 */
    .p2align CONFIG_X86_L1_CACHE_SHIFT
common_interrupt:
    ASM_CLAC
    addl $-0x80,(%esp)  /* Adjust vector into the [-256,-1] range */
    SAVE_ALL
    TRACE_IRQS_OFF
    movl %esp,%eax
    call do_IRQ
    jmp ret_from_intr
ENDPROC(common_interrupt)
    CFI_ENDPROC


addl $-0x80,(%esp)

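As analyzed in part one, the top of the stack now holds (~vector + 0x80). Subtracting 0x80 here leaves the bitwise complement of the vector number, which lies in [-256, -1]. This distinguishes interrupts from system calls: a positive value is a system call number, a negative value is an interrupt vector.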
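The encoding round trip (stub push, adjustment in common_interrupt, complement in do_IRQ) can be verified with a small sketch. The helper names are hypothetical; the arithmetic uses the identity ~v == -v - 1 so that it stays within well-defined signed integer operations.

```c
#include <stdint.h>

/* Entry stub: pushl $(~vector + 0x80). ~v equals -v - 1 in two's complement. */
static int32_t stub_pushed_value(unsigned vector)
{
    return -(int32_t)vector - 1 + 0x80;
}

/* common_interrupt: addl $-0x80,(%esp) */
static int32_t adjust_orig_ax(int32_t pushed)
{
    return pushed - 0x80;
}

/* do_IRQ: vector = ~regs->orig_ax */
static unsigned recover_vector(int32_t orig_ax)
{
    return (unsigned)(-orig_ax - 1);
}

/* Returns 1 if every external vector survives the round trip and stays in range. */
static int vector_roundtrip_ok(void)
{
    for (unsigned v = 0x20; v <= 0xFF; v++) {
        int32_t pushed = stub_pushed_value(v);
        int32_t adjusted = adjust_orig_ax(pushed);

        if (pushed < -128 || pushed > 127)      /* must fit a signed byte */
            return 0;
        if (adjusted < -256 || adjusted > -1)   /* always negative */
            return 0;
        if (recover_vector(adjusted) != v)
            return 0;
    }
    return 1;
}
```

Adding 0x80 before the push is what keeps the immediate inside a signed byte for all external vectors, so the stub can use the 2-byte push encoding.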

SAVE_ALL

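Save the context by pushing the register values onto the stack (eflags, cs and eip are saved automatically by the CPU, together with ss and esp when the privilege level changes).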

.macro SAVE_ALL
    cld
    PUSH_GS
    pushl_cfi %fs
    /*CFI_REL_OFFSET fs, 0;*/
    pushl_cfi %es
    /*CFI_REL_OFFSET es, 0;*/
    pushl_cfi %ds
    /*CFI_REL_OFFSET ds, 0;*/
    pushl_cfi %eax
    CFI_REL_OFFSET eax, 0
    pushl_cfi %ebp
    CFI_REL_OFFSET ebp, 0
    pushl_cfi %edi
    CFI_REL_OFFSET edi, 0
    pushl_cfi %esi
    CFI_REL_OFFSET esi, 0
    pushl_cfi %edx
    CFI_REL_OFFSET edx, 0
    pushl_cfi %ecx
    CFI_REL_OFFSET ecx, 0
    pushl_cfi %ebx
    CFI_REL_OFFSET ebx, 0
    movl $(__USER_DS), %edx
    movl %edx, %ds
    movl %edx, %es
    movl $(__KERNEL_PERCPU), %edx
    movl %edx, %fs
    SET_KERNEL_GS %edx
.endm


movl %esp,%eax

Copy esp into eax, which carries the first argument of do_IRQ. esp points at the registers pushed above, so they are passed to do_IRQ as a struct pt_regs.
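Because the stack grows downward, the last register pushed by SAVE_ALL sits at the lowest address, so the frame seen through the pointer in eax reads in reverse push order. The sketch below models that frame for 32-bit with fixed-width fields; the struct name pt_regs32 and the helper vector_from_regs are hypothetical, while the field order follows the push order of SAVE_ALL, the entry stub, and the CPU.

```c
#include <stddef.h>
#include <stdint.h>

/* 32-bit register frame as seen by do_IRQ(): lowest address first. */
struct pt_regs32 {
    uint32_t bx, cx, dx, si, di, bp, ax;   /* pushed by SAVE_ALL (ebx pushed last) */
    uint32_t ds, es, fs, gs;               /* pushed by SAVE_ALL (gs pushed first) */
    uint32_t orig_ax;                      /* pushed by the entry stub, then adjusted */
    uint32_t ip, cs, flags;                /* pushed by the CPU */
    uint32_t sp, ss;                       /* pushed by the CPU only on a privilege change */
};

/* Same operation as "vector = ~regs->orig_ax" in do_IRQ(). */
static unsigned vector_from_regs(const struct pt_regs32 *regs)
{
    return ~regs->orig_ax;
}
```

In this model orig_ax lands 44 bytes above the stack pointer, right after the eleven registers saved by SAVE_ALL.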

call do_IRQ

/*
 * do_IRQ handles all normal device IRQ's (the special
 * SMP cross-CPU interrupts have their own specific
 * handlers).
 */
__visible unsigned int __irq_entry do_IRQ(struct pt_regs *regs)
{
    struct pt_regs *old_regs = set_irq_regs(regs);

    /* high bit used in ret_from_ code */
    unsigned vector = ~regs->orig_ax; // get the vector number; this complement undoes the earlier one and yields the positive vector number
    unsigned irq;

    irq_enter();
    exit_idle();

    irq = __this_cpu_read(vector_irq[vector]); // map the vector number to the irq number

    if (!handle_irq(irq, regs)) {
        ack_APIC_irq();

        if (irq != VECTOR_RETRIGGERED) {
            pr_emerg_ratelimited("%s: %d.%d No irq handler for vector (irq %d)\n",
                                 __func__, smp_processor_id(),
                                 vector, irq);
        } else {
            __this_cpu_write(vector_irq[vector], VECTOR_UNDEFINED);
        }
    }

    irq_exit();

    set_irq_regs(old_regs);
    return 1;
}


irq_enter

/*
 * Enter an interrupt context.
 */
// enters interrupt context; hard interrupts are handled first, so irq_enter can be seen as the start of hard-interrupt processing
void irq_enter(void)
{
    rcu_irq_enter(); // inform RCU that current CPU is entering irq away from idle
    if (is_idle_task(current) && !in_interrupt()) { // if the current task is the idle task (pid == 0) and we are not already in interrupt context
        /*
         * Prevent raise_softirq from needlessly waking up ksoftirqd
         * here, as softirq will be serviced on return from interrupt.
         */
        local_bh_disable();
        tick_irq_enter(); // the idle task can be interrupted or preempted by other tasks; during interrupt handling, irq_enter->tick_irq_enter() restores the periodic tick so that jiffies is correct (note taken from http://blog.chinaunix.net/uid-29675110-id-4365095.html)
        _local_bh_enable();
    }

    __irq_enter();
}


__irq_enter

28 /*

29 * It is safe to do non-atomic ops on ->hardirq_context,

30 * because NMI handlers may not preempt and the ops are

31 * always balanced, so the interrupted value of ->hardirq_context

32 * will always be restored.

33 */

34 #define __irq_enter() \

35 do { \

36 account_irq_enter_time(current); \

37 preempt_count_add(HARDIRQ_OFFSET); /* HARDIRQ_OFFSET is 1 << 16, so this adds 1 to the hardirq count field that starts at bit 16 of preempt_count; see Appendix 2 for the layout */ \

38 trace_hardirq_enter(); \

39 } while (0)

exit_idle

If the system is currently idle, leave the idle state.

258 /* Called from interrupts to signify idle end */

259 void exit_idle(void)

260 {

261 /* idle loop has pid 0 */ // if the current task is not pid 0, return immediately; there is no idle state to leave

262 if (current->pid)

263 return;

264 __exit_idle(); // for the idle task, __exit_idle runs the chain of idle notifications

265 }

handle_irq

165 bool handle_irq(unsigned irq, struct pt_regs *regs)

166 {

167 struct irq_desc *desc;

168 int overflow;

169

170 overflow = check_stack_overflow(); // on x86, if less than about 1 KB of stack remains below the stack pointer, a stack overflow is considered likely

171

172 desc = irq_to_desc(irq); // look up the descriptor: vector number --> IRQ number --> irq_desc

173 if (unlikely(!desc))

174 return false;

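175 // if the interrupt arrived while the CPU was running user-space code, the CPU has just switched to the kernel stack, which is empty at that point, so there is no need to switch again to the interrupt stack

176 if (user_mode_vm(regs) || !execute_on_irq_stack(overflow, desc, irq)) { // execute_on_irq_stack runs the handler on this CPU's IRQ stack; otherwise the handler runs on the current stack through the desc->handle_irq call below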

177 if (unlikely(overflow))

178 print_stack_overflow();

179 desc->handle_irq(irq, desc);

180 }

181

182 return true;

183 }

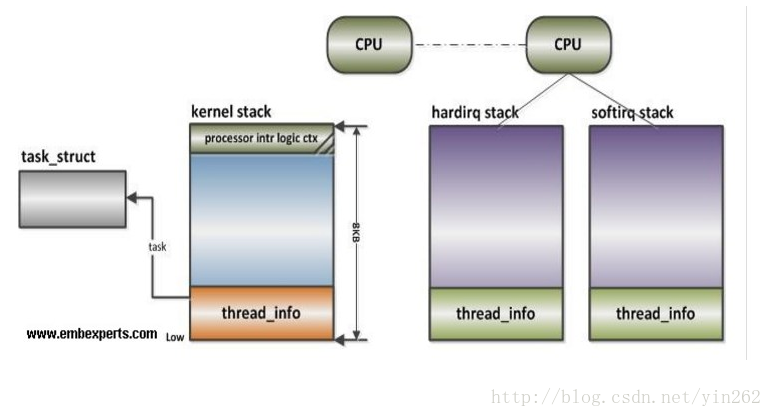
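The overflow check mentioned in handle_irq can be illustrated with plain integer arithmetic. This is a sketch under stated assumptions rather than the kernel's implementation: it assumes 8 KiB THREAD_SIZE-aligned stacks that grow down toward a thread_info at the base, a warning margin of THREAD_SIZE/8 (the 1 KB in the annotation), and a made-up `THREAD_INFO_SIZE`. The function name `stack_nearly_full` is hypothetical.

```c
#include <assert.h>
#include <stdint.h>

#define THREAD_SIZE      8192u              /* assumption: 8 KiB kernel stacks */
#define STACK_WARN       (THREAD_SIZE / 8)  /* assumption: 1 KiB warning margin */
#define THREAD_INFO_SIZE 64u                /* hypothetical sizeof(struct thread_info) */

/*
 * The stack is THREAD_SIZE-aligned, so masking the stack pointer gives
 * its offset above the stack base. A small offset means little room is
 * left before the stack runs into thread_info.
 */
static int stack_nearly_full(uintptr_t sp)
{
    uintptr_t offset = sp & (THREAD_SIZE - 1);

    return offset < THREAD_INFO_SIZE + STACK_WARN;
}
```

With a base address of 0x10000, a stack pointer 512 bytes above the base is flagged, while one 4096 bytes above the base is not.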

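Definition and initialization of the interrupt stack

In the current kernel design, interrupts have their own stack on which interrupt service routines run, so that nested interrupts cannot corrupt the process kernel stack they would otherwise share.

The interrupt stack is defined below. Its layout is the same as that of a process context: thread_info + stack.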

58 /*

59 * per-CPU IRQ handling contexts (thread information and stack)

60 */

61 union irq_ctx {

62 struct thread_info tinfo;

63 u32 stack[THREAD_SIZE/sizeof(u32)];

64 } __attribute__((aligned(THREAD_SIZE)));

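Initialization of the interrupt stack:

The per-CPU variables hardirq_ctx and softirq_ctx are pointers to union irq_ctx, so each CPU has its own separate stacks for hard and soft interrupts.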

66 static DEFINE_PER_CPU(union irq_ctx *, hardirq_ctx);

67 static DEFINE_PER_CPU(union irq_ctx *, softirq_ctx);


native_init_IRQ->irq_ctx_init

hardirq_ctx and softirq_ctx are initialized in the same way, as follows:

116 /*

117 * allocate per-cpu stacks for hardirq and for softirq processing

118 */

119 void irq_ctx_init(int cpu)

120 {

121 union irq_ctx *irqctx;

122

123 if (per_cpu(hardirq_ctx, cpu))

124 return;

125

126 irqctx = page_address(alloc_pages_node(cpu_to_node(cpu), // allocate 2 pages (order THREAD_SIZE_ORDER)

127 THREADINFO_GFP,

128 THREAD_SIZE_ORDER));

129 memset(&irqctx->tinfo, 0, sizeof(struct thread_info)); // initialize some of the thread_info members

130 irqctx->tinfo.cpu = cpu;

131 irqctx->tinfo.addr_limit = MAKE_MM_SEG(0);

132

133 per_cpu(hardirq_ctx, cpu) = irqctx; // store it in this CPU's hardirq_ctx

134

135 irqctx = page_address(alloc_pages_node(cpu_to_node(cpu),

136 THREADINFO_GFP,

137 THREAD_SIZE_ORDER));

138 memset(&irqctx->tinfo, 0, sizeof(struct thread_info));

139 irqctx->tinfo.cpu = cpu;

140 irqctx->tinfo.addr_limit = MAKE_MM_SEG(0);

141

142 per_cpu(softirq_ctx, cpu) = irqctx;

143

144 printk(KERN_DEBUG "CPU %u irqstacks, hard=%p soft=%p\n",

145 cpu, per_cpu(hardirq_ctx, cpu), per_cpu(softirq_ctx, cpu));

146 }

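[Figure: diagram of the interrupt stack layout, found online]

Switching to the interrupt stack

When an interrupt occurs, execution has to switch from the current process stack to the interrupt stack.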

80 static inline int

81 execute_on_irq_stack(int overflow, struct irq_desc *desc, int irq)

82 {

83 union irq_ctx *curctx, *irqctx;

84 u32 *isp, arg1, arg2;

85

86 curctx = (union irq_ctx *) current_thread_info(); // the current context, i.e. the base address of the stack we are running on

87 irqctx = __this_cpu_read(hardirq_ctx); // this CPU's hardirq context, i.e. the base address of the hardirq stack

88

89 /*

90 * this is where we switch to the IRQ stack. However, if we are

91 * already using the IRQ stack (because we interrupted a hardirq

92 * handler) we can't do that and just have to keep using the

93 * current stack (which is the irq stack already after all)

94 */

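95 if (unlikely(curctx == irqctx)) // the current stack is already the IRQ stack, so this is a nested interrupt: the interrupted code was itself an interrupt service routine

96 return 0; // no stack switch is possible in this case; keep running on the current stack by returning 0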

97

98 /* build the stack frame on the IRQ stack */

99 isp = (u32 *) ((char *)irqctx + sizeof(*irqctx)); // isp points to the top of the IRQ stack

100 irqctx->tinfo.task = curctx->tinfo.task; // record the current task and its stack pointer in the IRQ context

101 irqctx->tinfo.previous_esp = current_stack_pointer;

102

103 if (unlikely(overflow))

104 call_on_stack(print_stack_overflow, isp);

105

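106 asm volatile("xchgl %%ebx,%%esp \n" // the actual stack switch happens in this assembly: swap %esp with isp (held in %ebx), call the handler, then restore the saved %esp; the assembly is not examined further here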

107 "call *%%edi \n"

108 "movl %%ebx,%%esp \n"

109 : "=a" (arg1), "=d" (arg2), "=b" (isp)

110 : "0" (irq), "1" (desc), "2" (isp),

111 "D" (desc->handle_irq) // whether on the shared stack or the separate IRQ stack, the irq_desc's handle_irq is what finally gets called

112 : "memory", "cc", "ecx");

113 return 1;

114 }
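The pointer arithmetic in execute_on_irq_stack can be reproduced in user space. The sketch below is illustrative only: `union irq_ctx_sim`, `fake_thread_info` and the helper names are hypothetical, and it assumes 8 KiB stacks aligned to their own size, which is what makes masking a stack pointer yield the context base.

```c
#include <assert.h>
#include <stdint.h>

#define THREAD_SIZE 8192u   /* assumption: 8 KiB stacks */

struct fake_thread_info {   /* hypothetical subset of thread_info */
    int cpu;
    void *task;
};

union irq_ctx_sim {
    struct fake_thread_info tinfo;                   /* lives at the base */
    uint32_t stack[THREAD_SIZE / sizeof(uint32_t)];  /* grows down from the top */
};

/* A THREAD_SIZE-aligned context, like the page-allocated kernel one. */
static _Alignas(THREAD_SIZE) union irq_ctx_sim demo_hardirq_ctx;

/* Same computation as isp: one past the end of the context. */
static uint32_t *irq_stack_top(union irq_ctx_sim *ctx)
{
    return (uint32_t *)((char *)ctx + sizeof(*ctx));
}

/* Recover the context base from any stack pointer inside it. */
static union irq_ctx_sim *ctx_from_sp(uintptr_t sp)
{
    return (union irq_ctx_sim *)(sp & ~(uintptr_t)(THREAD_SIZE - 1));
}

/* Switch only if we are not already running on the IRQ stack. */
static int must_switch_stacks(uintptr_t sp, union irq_ctx_sim *irqctx)
{
    return ctx_from_sp(sp) != irqctx;
}
```

A stack pointer a few bytes below `irq_stack_top(&demo_hardirq_ctx)` maps back to `&demo_hardirq_ctx`, which is the nested-interrupt case where the function returns 0 without switching.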

handle_level_irq

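The kernel provides a set of interrupt flow handlers:

handle_simple_irq: simple flow handling;

handle_level_irq: flow handling for level-triggered interrupts;

handle_edge_irq: flow handling for edge-triggered interrupts;

handle_fasteoi_irq: for interrupt controllers that need an EOI;

handle_percpu_irq: for interrupts that are serviced on a single CPU only;

handle_nested_irq: for nested interrupts handled in threads;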

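In the second part of this series, init_ISA_irqs set the flow handler of every legacy IRQ to handle_level_irq, so it is used as the example here:

// A level-type interrupt keeps requesting service for as long as the hardware line is at its active level, so the line has to be masked while the interrupt is handled and unmasked after the hardware has been serviced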

387 /**

388 * handle_level_irq - Level type irq handler // handler for level-triggered interrupts

389 * @irq: the interrupt number

390 * @desc: the interrupt description structure for this irq

391 *

392 * Level type interrupts are active as long as the hardware line has

393 * the active level. This may require to mask the interrupt and unmask

394 * it after the associated handler has acknowledged the device, so the

395 * interrupt line is back to inactive.

396 */

397 void

398 handle_level_irq(unsigned int irq, struct irq_desc *desc)

399 {

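400 raw_spin_lock(&desc->lock); // take the descriptor lock

401 mask_ack_irq(desc); // mask this interrupt; otherwise the still-active interrupt line would keep signalling it

402

403 if (unlikely(irqd_irq_inprogress(&desc->irq_data))) // the interrupt is already being handled on another CPU

404 if (!irq_check_poll(desc)) // I do not fully understand this yet: what does IRQS_POLL_INPROGRESS (polling in progress) mean for an IRQ? That will have to wait until the macro shows up in later code. If the IRQ is in that state, the CPU relaxes and waits for the poll to finish

405 goto out_unlock; // unlock and return

406 // clear the IRQS_REPLAY and IRQS_WAITING state bits

407 desc->istate &= ~(IRQS_REPLAY | IRQS_WAITING);

408 kstat_incr_irqs_this_cpu(irq, desc); // increment this IRQ's count on this CPU and the overall interrupt count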

409

410 /*

411 * If its disabled or no action available

412 * keep it masked and get out of here

413 */

414 if (unlikely(!desc->action || irqd_irq_disabled(&desc->irq_data))) {

415 desc->istate |= IRQS_PENDING; // mark the interrupt as pending

416 goto out_unlock;

417 }

418

419 handle_irq_event(desc); // the core function

420

421 cond_unmask_irq(desc); // re-enable the interrupt line if conditions allow

422

423 out_unlock:

424 raw_spin_unlock(&desc->lock);

425 }

426 EXPORT_SYMBOL_GPL(handle_level_irq);
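The ordering that handle_level_irq enforces (mask first, run the handler, unmask last) can be modelled with a small state machine. This is a simplified user-space sketch: `struct sim_desc` and all function names are hypothetical, locking and the poll check are left out, and the unmask condition is reduced to "not disabled".

```c
#include <assert.h>
#include <string.h>

struct sim_desc {
    int masked;           /* line masked at the controller */
    int in_progress;      /* models IRQD_IRQ_INPROGRESS */
    int disabled;         /* models irqd_irq_disabled() */
    int pending;          /* models IRQS_PENDING */
    int (*action)(void);  /* single handler; NULL means none registered */
    char trace[64];       /* event log so the ordering can be inspected */
};

static void log_event(struct sim_desc *d, const char *ev)
{
    strncat(d->trace, ev, sizeof(d->trace) - strlen(d->trace) - 1);
}

static void sim_handle_level_irq(struct sim_desc *d)
{
    d->masked = 1;                   /* mask_ack_irq(): silence the active line */
    log_event(d, "mask;");

    if (d->in_progress)              /* already being handled elsewhere */
        return;

    if (!d->action || d->disabled) { /* keep it masked and remember it */
        d->pending = 1;
        log_event(d, "pending;");
        return;
    }

    d->in_progress = 1;              /* handle_irq_event() */
    d->action();
    log_event(d, "handler;");
    d->in_progress = 0;

    if (!d->disabled) {              /* simplified cond_unmask_irq() */
        d->masked = 0;
        log_event(d, "unmask;");
    }
}

static int demo_action(void)
{
    return 1;                        /* pretend the device was serviced */
}
```

With `demo_action` installed, one call leaves the trace `mask;handler;unmask;`. With no action registered, the line stays masked and is only marked pending.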

handle_irq_event

182 irqreturn_t handle_irq_event(struct irq_desc *desc)

183 {

184 struct irqaction *action = desc->action; // head of the irqaction list

185 irqreturn_t ret;

186

187 desc->istate &= ~IRQS_PENDING; // handling starts for real: clear the irq_desc pending bit

188 irqd_set(&desc->irq_data, IRQD_IRQ_INPROGRESS); // set IRQD_IRQ_INPROGRESS before handling the interrupt

189 raw_spin_unlock(&desc->lock);

190

191 ret = handle_irq_event_percpu(desc, action);

192

193 raw_spin_lock(&desc->lock);

194 irqd_clear(&desc->irq_data, IRQD_IRQ_INPROGRESS); // clear IRQD_IRQ_INPROGRESS once handling is done

195 return ret;

196 }

handle_irq_event_percpu

132 irqreturn_t

133 handle_irq_event_percpu(struct irq_desc *desc, struct irqaction *action)

134 {

135 irqreturn_t retval = IRQ_NONE;

136 unsigned int flags = 0, irq = desc->irq_data.irq;

137

138 do {

139 irqreturn_t res;

140

141 trace_irq_handler_entry(irq, action);

142 res = action->handler(irq, action->dev_id); // call the hardirq (primary) handler

143 trace_irq_handler_exit(irq, action, res);

144

145 if (WARN_ONCE(!irqs_disabled(),"irq %u handler %pF enabled interrupts\n",

146 irq, action->handler))

147 local_irq_disable();

148

149 switch (res) {

150 case IRQ_WAKE_THREAD: // the primary handler of a threaded interrupt usually just acknowledges the hardware and returns IRQ_WAKE_THREAD to wake the interrupt thread

151 /*

152 * Catch drivers which return WAKE_THREAD but

153 * did not set up a thread function

154 */

155 if (unlikely(!action->thread_fn)) {

156 warn_no_thread(irq, action);

157 break;

158 }

159

160 irq_wake_thread(desc, action); // wake the interrupt thread

161

162 /* Fall through to add to randomness */

163 case IRQ_HANDLED: // the interrupt was fully handled in the primary handler

164 flags |= action->flags;

165 break;

166

167 default:

168 break;

169 }

170

171 retval |= res;

172 action = action->next; // for a shared interrupt, all irqactions hang off the same desc

173 } while (action);

174

175 add_interrupt_randomness(irq, flags); // not really part of the interrupt flow: users and peripherals serve as a noise source that feeds the kernel entropy pool (http://jingpin.jikexueyuan.com/article/23923.html)

176

177 if (!noirqdebug)

178 note_interrupt(irq, desc, retval);

179 return retval;

180 }
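The loop in handle_irq_event_percpu walks every irqaction attached to a shared line and ORs the results together. A minimal user-space model follows. The `sim_` types and handlers are hypothetical; the numeric return codes mirror the usual IRQ_NONE / IRQ_HANDLED / IRQ_WAKE_THREAD bit values, which is an assumption since the excerpt does not show them.

```c
#include <assert.h>
#include <stddef.h>

enum sim_irqreturn {
    SIM_IRQ_NONE        = 0,  /* not my device */
    SIM_IRQ_HANDLED     = 1,  /* fully handled in the primary handler */
    SIM_IRQ_WAKE_THREAD = 2   /* ask for the handler thread to be woken */
};

struct sim_action {
    enum sim_irqreturn (*handler)(int irq, void *dev_id);
    void *dev_id;
    int thread_woken;          /* stands in for irq_wake_thread() */
    struct sim_action *next;   /* next action sharing the line */
};

/* Call every handler on the line and accumulate their return values. */
static unsigned sim_handle_irq_event_percpu(int irq, struct sim_action *action)
{
    unsigned retval = SIM_IRQ_NONE;

    for (; action; action = action->next) {
        enum sim_irqreturn res = action->handler(irq, action->dev_id);

        if (res == SIM_IRQ_WAKE_THREAD)
            action->thread_woken = 1;
        retval |= res;
    }
    return retval;
}

static enum sim_irqreturn sim_not_mine(int irq, void *dev_id)
{
    (void)irq; (void)dev_id;
    return SIM_IRQ_NONE;
}

static enum sim_irqreturn sim_handled(int irq, void *dev_id)
{
    (void)irq; (void)dev_id;
    return SIM_IRQ_HANDLED;
}

static enum sim_irqreturn sim_wake(int irq, void *dev_id)
{
    (void)irq; (void)dev_id;
    return SIM_IRQ_WAKE_THREAD;
}
```

Chaining three actions that use `sim_not_mine`, `sim_handled` and `sim_wake` gives a combined result with both the handled and wake bits set, and only the third action is marked as woken.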

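That concludes this brief analysis of the interrupt handling flow. One question remains: where do the action's handler and the threaded handler come from? See the next part.

Appendix 1:

The CPU takes the segment selector of the interrupt service routine found in the IDT and uses it to fetch the matching segment descriptor from the GDT. The segment descriptor holds the segment base address and attributes of the interrupt service routine, which gives the CPU the routine's start address. At this point the CPU compares the CPL in the cs register with the DPL of the GDT segment descriptor to make sure the interrupt service routine is at least as privileged as the current program. If the interrupt is a programmed exception (for example the int 80h system call), the CPU also compares the CPL with the DPL of the gate descriptor in the IDT to make sure the current program is allowed to use the routine; this keeps user applications from reaching special trap gates and interrupt gates [3].

The figure below shows how an interrupt vector is resolved, via the GDT, to the start of the corresponding interrupt service routine: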

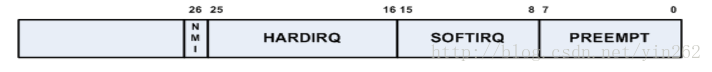
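The lookup described in this appendix relies on the gate layout given at the start of the series: the selector sits in bits 16-31 of the first word, and the handler offset is split across the low 16 bits of the first word and the high 16 bits of the second. The sketch below packs and unpacks a 32-bit gate in user space. `struct sim_gate` and the helper names are hypothetical, and the selector and address used in the usage note are made-up values.

```c
#include <assert.h>
#include <stdint.h>

struct sim_gate {
    uint32_t a;   /* selector (bits 16-31) | offset bits 0-15 */
    uint32_t b;   /* offset bits 16-31 | P | DPL | type */
};

static struct sim_gate pack_gate(uint32_t handler, uint16_t selector,
                                 uint8_t type, uint8_t dpl)
{
    struct sim_gate g;

    g.a = ((uint32_t)selector << 16) | (handler & 0xffffu);
    g.b = (handler & 0xffff0000u)
        | 0x8000u                          /* P: descriptor present */
        | ((uint32_t)(dpl & 3u) << 13)     /* DPL */
        | ((uint32_t)(type & 0xfu) << 8);  /* gate type */
    return g;
}

/* Reassemble the handler offset from its two halves. */
static uint32_t gate_offset(struct sim_gate g)
{
    return (g.b & 0xffff0000u) | (g.a & 0xffffu);
}

static uint16_t gate_selector(struct sim_gate g)
{
    return (uint16_t)(g.a >> 16);
}

static unsigned gate_dpl(struct sim_gate g)
{
    return (g.b >> 13) & 3u;
}

/* Software interrupts (int n) are allowed only if CPL <= gate DPL. */
static int soft_int_allowed(unsigned cpl, struct sim_gate g)
{
    return cpl <= gate_dpl(g);
}
```

For example, `pack_gate(0xc0123456u, 0x60, 0xE, 0)` builds an interrupt gate with DPL 0. `gate_offset` returns the original address, and `soft_int_allowed(3, g)` is false, which is the check that stops user code from invoking such a gate with `int`.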

Appendix 2: preempt_count

44 #define HARDIRQ_OFFSET (1UL << HARDIRQ_SHIFT) // 1 shifted left by 16 bits

32 #define HARDIRQ_SHIFT (SOFTIRQ_SHIFT + SOFTIRQ_BITS) // 8 + 8 = 16

31 #define SOFTIRQ_SHIFT (PREEMPT_SHIFT + PREEMPT_BITS) // 0 + 8 = 8

30 #define PREEMPT_SHIFT 0

25 #define PREEMPT_BITS 8

26 #define SOFTIRQ_BITS 8

2500 void __kprobes preempt_count_add(int val)

2501 {

2502 #ifdef CONFIG_DEBUG_PREEMPT

2503 /*

2504 * Underflow?

2505 */

2506 if (DEBUG_LOCKS_WARN_ON((preempt_count() < 0)))

2507 return;

2508 #endif

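2509 __preempt_count_add(val); // debug code aside, this is the only line that matters; with val = HARDIRQ_OFFSET it adds 1 to the hardirq count field that starts at bit 16 of preempt_count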

2510 #ifdef CONFIG_DEBUG_PREEMPT

2511 /*

2512 * Spinlock count overflowing soon?

2513 */

2514 DEBUG_LOCKS_WARN_ON((preempt_count() & PREEMPT_MASK) >=

2515 PREEMPT_MASK - 10);

2516 #endif

2517 if (preempt_count() == val)

2518 trace_preempt_off(CALLER_ADDR0, get_parent_ip(CALLER_ADDR1));

2519 }

2520 EXPORT_SYMBOL(preempt_count_add);

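The layout of preempt_count follows from the definitions above: bits 0-7 hold the preemption count, bits 8-15 hold the softirq count, and the hardirq count starts at bit 16.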
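The shift and mask arithmetic from the macros above can be checked in user space. This is a sketch and not the kernel header: `HARDIRQ_BITS` is not shown in the excerpt, so its value of 4 here is an assumption, and the `sim_` names are hypothetical. The real in_interrupt() also considers an NMI field, which is left out.

```c
#include <assert.h>

#define PREEMPT_BITS   8
#define SOFTIRQ_BITS   8
#define HARDIRQ_BITS   4    /* assumption: not shown in the excerpt */

#define PREEMPT_SHIFT  0
#define SOFTIRQ_SHIFT  (PREEMPT_SHIFT + PREEMPT_BITS)   /* 0 + 8 = 8  */
#define HARDIRQ_SHIFT  (SOFTIRQ_SHIFT + SOFTIRQ_BITS)   /* 8 + 8 = 16 */

#define FIELD_MASK(bits) ((1UL << (bits)) - 1)
#define PREEMPT_MASK   (FIELD_MASK(PREEMPT_BITS) << PREEMPT_SHIFT)
#define SOFTIRQ_MASK   (FIELD_MASK(SOFTIRQ_BITS) << SOFTIRQ_SHIFT)
#define HARDIRQ_MASK   (FIELD_MASK(HARDIRQ_BITS) << HARDIRQ_SHIFT)
#define HARDIRQ_OFFSET (1UL << HARDIRQ_SHIFT)

/* Hypothetical stand-in for the per-task preempt_count. */
static unsigned long sim_preempt_count;

/* What __irq_enter() does to the counter. */
static void sim_irq_enter(void)
{
    sim_preempt_count += HARDIRQ_OFFSET;
}

/* The balancing decrement on the way out of the interrupt. */
static void sim_irq_exit(void)
{
    assert(sim_preempt_count & HARDIRQ_MASK);
    sim_preempt_count -= HARDIRQ_OFFSET;
}

/* Current hardirq nesting depth. */
static unsigned long sim_hardirq_depth(void)
{
    return (sim_preempt_count & HARDIRQ_MASK) >> HARDIRQ_SHIFT;
}

/* Simplified in_interrupt(): any hardirq or softirq bits set. */
static int sim_in_interrupt(void)
{
    return (sim_preempt_count & (HARDIRQ_MASK | SOFTIRQ_MASK)) != 0;
}
```

After one `sim_irq_enter()` the counter equals 0x10000 and `sim_hardirq_depth()` is 1. A matching `sim_irq_exit()` brings it back to zero, which is why the add and subtract must always be balanced.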

Kernel interrupt analysis, part 4: requesting interrupts [1 of 2]

showstopper_x

Published 2017-01-04 22:43:13

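Preface

As part 3 showed, interrupt handling ultimately calls the irqaction's handler (interrupt context) and, when necessary, wakes the interrupt handler thread to call thread_fn (process context).

The corresponding interrupt service routines are registered during driver initialization: ordinary interrupts through setup_irq, request_irq or request_threaded_irq, and per-CPU interrupts through request_percpu_irq or setup_percpu_irq. This part analyzes these interfaces; __setup_irq is analyzed in part 4 [2 of 2].

request_threaded_irq

For the motivation behind threaded interrupts and their effect on system performance, see the article Moving interrupts to threads (translation).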

1349 /**

1350 * request_threaded_irq - allocate an interrupt line

1351 * @irq: Interrupt line to allocate

1352 * @handler: Function to be called when the IRQ occurs.

1353 * Primary handler for threaded interrupts

1354 * If NULL and thread_fn != NULL the default

1355 * primary handler is installed

1356 * @thread_fn: Function called from the irq handler thread

1357 * If NULL, no irq thread is created

1358 * @irqflags: Interrupt type flags

1359 * @devname: An ascii name for the claiming device

1360 * @dev_id: A cookie passed back to the handler function

1361 *

1362 * This call allocates interrupt resources and enables the

1363 * interrupt line and IRQ handling. From the point this

1364 * call is made your handler function may be invoked. Since

1365 * your handler function must clear any interrupt the board

1366 * raises, you must take care both to initialise your hardware

1367 * and to set up the interrupt handler in the right order.

1368 *

1369 * If you want to set up a threaded irq handler for your device

1370 * then you need to supply @handler and @thread_fn. @handler is

1371 * still called in hard interrupt context and has to check

1372 * whether the interrupt originates from the device. If yes it

1373 * needs to disable the interrupt on the device and return

1374 * IRQ_WAKE_THREAD which will wake up the handler thread and run

1375 * @thread_fn. This split handler design is necessary to support

1376 * shared interrupts.

1377 *

1378 * Dev_id must be globally unique. Normally the address of the

1379 * device data structure is used as the cookie. Since the handler

1380 * receives this value it makes sense to use it.

1381 *

1382 * If your interrupt is shared you must pass a non NULL dev_id

1383 * as this is required when freeing the interrupt.

1384 *

1385 * Flags:

1386 *

1387 * IRQF_SHARED Interrupt is shared

1388 * IRQF_TRIGGER_* Specify active edge(s) or level

1389 *

1390 */


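Comments this long are rare in the kernel. The purpose of this API is to allocate an interrupt line. Its parameters are:

irq: the interrupt number, identifying an interrupt line;

handler: called the primary handler in the comment; it runs in interrupt context. If handler is NULL and thread_fn is not NULL, the kernel installs a default handler in its place, whose role is described later;

thread_fn: the kernel creates a thread, the irq handler thread (the thread member of irqaction), which calls thread_fn, so that the bottom half runs in a thread;

irqflags: flags describing the interrupt type;

devname: the name of this irqaction;

dev_id: passed to handler as an argument; it must not be NULL if the interrupt is shared.

Lines 1370-1376 of the comment:

@handler still runs in hard interrupt context and must check whether the interrupt came from its device. If it did, the handler disables that interrupt on the device and returns IRQ_WAKE_THREAD. Based on that return value (see part 3), the kernel wakes the interrupt thread, which calls @thread_fn.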

The source of request_threaded_irq is as follows:

1391 int request_threaded_irq(unsigned int irq, irq_handler_t handler,

1392 irq_handler_t thread_fn, unsigned long irqflags,

1393 const char *devname, void *dev_id)

1394 {

1395 struct irqaction *action;

1396 struct irq_desc *desc;

1397 int retval;

1398

1399 /*

1400 * Sanity-check: shared interrupts must pass in a real dev-ID,

1401 * otherwise we'll have trouble later trying to figure out

1402 * which interrupt is which (messes up the interrupt freeing

1403 * logic etc).

1404 */

1405 if ((irqflags & IRQF_SHARED) && !dev_id) // a shared interrupt must supply a dev_id

1406 return -EINVAL;

1407

1408 desc = irq_to_desc(irq); // look up the irq_desc

1409 if (!desc)

1410 return -EINVAL;

1411

1412 if (!irq_settings_can_request(desc) || // some interrupts, such as those reserved by the system, are marked IRQ_NOREQUEST and cannot be requested

1413 WARN_ON(irq_settings_is_per_cpu_devid(desc))) // requesting a per-CPU interrupt (a timer, for example) through this API also fails

1414 return -EINVAL;

1415

1416 if (!handler) { // if handler is NULL but thread_fn is not, handler becomes irq_default_primary_handler

1417 if (!thread_fn) // handler and thread_fn must not both be NULL

1418 return -EINVAL;

1419 handler = irq_default_primary_handler; // this handler simply returns IRQ_WAKE_THREAD

1420 }

1421 // allocate and initialize a new irqaction

1422 action = kzalloc(sizeof(struct irqaction), GFP_KERNEL);

1423 if (!action)

1424 return -ENOMEM;

1425

1426 action->handler = handler;

1427 action->thread_fn = thread_fn;

1428 action->flags = irqflags;

1429 action->name = devname;

1430 action->dev_id = dev_id;

1431

1432 chip_bus_lock(desc);

1433 retval = __setup_irq(irq, desc, action); // most of the work is done in __setup_irq

1434 chip_bus_sync_unlock(desc);

1435

1436 if (retval)

1437 kfree(action);

1438

1439 #ifdef CONFIG_DEBUG_SHIRQ_FIXME

1440 if (!retval && (irqflags & IRQF_SHARED)) {

1441 /*

1442 * It's a shared IRQ -- the driver ought to be prepared for it

1443 * to happen immediately, so let's make sure....

1444 * We disable the irq to make sure that a 'real' IRQ doesn't

1445 * run in parallel with our fake.

1446 */

1447 unsigned long flags;

1448

1449 disable_irq(irq);

1450 local_irq_save(flags);

1451

1452 handler(irq, dev_id);

1453

1454 local_irq_restore(flags);

1455 enable_irq(irq);

1456 }

1457 #endif

1458 return retval;

1459 }

EXPORT_SYMBOL(request_threaded_irq);

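The argument checks at the top of request_threaded_irq are easy to exercise outside the kernel. The following sketch copies only that validation logic. The `sim_` names, the flag value and the simplified handler signature are hypothetical, and the irq_desc lookup and the call into __setup_irq are left out.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>

#define SIM_IRQF_SHARED     0x80u  /* illustrative flag value */
#define SIM_IRQ_WAKE_THREAD 2

typedef int (*sim_handler_t)(int irq, void *dev_id);

struct sim_irqaction {
    sim_handler_t handler;
    sim_handler_t thread_fn;
    unsigned flags;
    const char *name;
    void *dev_id;
};

/* Models irq_default_primary_handler: just ask for the thread. */
static int sim_default_primary_handler(int irq, void *dev_id)
{
    (void)irq; (void)dev_id;
    return SIM_IRQ_WAKE_THREAD;
}

static int sim_request_threaded_irq(struct sim_irqaction *out,
                                    sim_handler_t handler,
                                    sim_handler_t thread_fn,
                                    unsigned flags, const char *name,
                                    void *dev_id)
{
    /* A shared line needs a dev_id to tell the actions apart. */
    if ((flags & SIM_IRQF_SHARED) && !dev_id)
        return -EINVAL;

    if (!handler) {
        if (!thread_fn)            /* nothing to call at all */
            return -EINVAL;
        handler = sim_default_primary_handler;
    }

    out->handler = handler;
    out->thread_fn = thread_fn;
    out->flags = flags;
    out->name = name;
    out->dev_id = dev_id;
    return 0;
}

/* Hypothetical driver handler used in the usage example. */
static int sim_demo_handler(int irq, void *dev_id)
{
    (void)irq; (void)dev_id;
    return 1;
}
```

Passing NULL for both handlers, or requesting a shared line without a dev_id, yields -EINVAL. Passing only a thread function succeeds and leaves `sim_default_primary_handler` installed as the primary handler.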

request_irq

127 static inline int __must_check

128 request_irq(unsigned int irq, irq_handler_t handler, unsigned long flags,

129 const char *name, void *dev)

130 {

131 return request_threaded_irq(irq, handler, NULL, flags, name, dev);

132 }


request_irq is a wrapper around request_threaded_irq with thread_fn set to NULL, so no interrupt threading is involved.

setup_irq

1196 /**

1197 * setup_irq - setup an interrupt

1198 * @irq: Interrupt line to setup

1199 * @act: irqaction for the interrupt

1200 *

1201 * Used to statically setup interrupts in the early boot process.

1202 */

1203 int setup_irq(unsigned int irq, struct irqaction *act)

1204 {

1205 int retval;

1206 struct irq_desc *desc = irq_to_desc(irq);

1207

1208 if (WARN_ON(irq_settings_is_per_cpu_devid(desc)))

1209 return -EINVAL;

1210 chip_bus_lock(desc);

1211 retval = __setup_irq(irq, desc, act);

1212 chip_bus_sync_unlock(desc);

1213

1214 return retval;

1215 }

1216 EXPORT_SYMBOL_GPL(setup_irq);


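setup_irq is generally called early in boot (for the system timer, for example) with a statically defined irqaction as its argument. The reason is that the slab allocator may not be initialized yet at that stage, so an irqaction cannot be allocated dynamically the way request_threaded_irq does. The irqaction passed to setup_irq also has no thread_fn (in every caller I have seen), which means no threading is involved. The flow is simple: take the lock and call __setup_irq directly.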

request_percpu_irq

1642 /**

1643 * request_percpu_irq - allocate a percpu interrupt line

1644 * @irq: Interrupt line to allocate

1645 * @handler: Function to be called when the IRQ occurs.

1646 * @devname: An ascii name for the claiming device

1647 * @dev_id: A percpu cookie passed back to the handler function

1648 *

1649 * This call allocates interrupt resources, but doesn't

1650 * automatically enable the interrupt. It has to be done on each

1651 * CPU using enable_percpu_irq().

1652 *

1653 * Dev_id must be globally unique. It is a per-cpu variable, and

1654 * the handler gets called with the interrupted CPU's instance of

1655 * that variable.

1656 */

1657 int request_percpu_irq(unsigned int irq, irq_handler_t handler,

1658 const char *devname, void __percpu *dev_id)

1659 {

1660 struct irqaction *action;

1661 struct irq_desc *desc;

1662 int retval;

1663

1664 if (!dev_id)

1665 return -EINVAL;

1666

1667 desc = irq_to_desc(irq);

1668 if (!desc || !irq_settings_can_request(desc) ||

1669 !irq_settings_is_per_cpu_devid(desc))

1670 return -EINVAL;

1671

1672 action = kzalloc(sizeof(struct irqaction), GFP_KERNEL);

1673 if (!action)

1674 return -ENOMEM;

1675

1676 action->handler = handler;

1677 action->flags = IRQF_PERCPU | IRQF_NO_SUSPEND;

1678 action->name = devname;

1679 action->percpu_dev_id = dev_id;

1680

1681 chip_bus_lock(desc);

1682 retval = __setup_irq(irq, desc, action);

1683 chip_bus_sync_unlock(desc);

1684

1685 if (retval)

1686 kfree(action);

1687

1688 return retval;

1689 }


This requests a per-CPU IRQ. The irqaction is allocated dynamically and thread_fn is not initialized, so no threading is involved.

setup_percpu_irq

1621 /**

1622 * setup_percpu_irq - setup a per-cpu interrupt

1623 * @irq: Interrupt line to setup

1624 * @act: irqaction for the interrupt

1625 *

1626 * Used to statically setup per-cpu interrupts in the early boot process.

1627 */

1628 int setup_percpu_irq(unsigned int irq, struct irqaction *act)

1629 {

1630 struct irq_desc *desc = irq_to_desc(irq);

1631 int retval;

1632

1633 if (!desc || !irq_settings_is_per_cpu_devid(desc))

1634 return -EINVAL;

1635 chip_bus_lock(desc);

1636 retval = __setup_irq(irq, desc, act);

1637 chip_bus_sync_unlock(desc);

1638

1639 return retval;

1640 }


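This sets up a per-CPU IRQ. It is called early in boot and does not involve interrupt threading.

Preface

In part 4 [1 of 2] of this kernel interrupt analysis, request_irq, request_threaded_irq, setup_irq, setup_percpu_irq and request_percpu_irq all ended up calling __setup_irq. This part analyzes that function; because the code is long, it is analyzed in sections.

Note that places where I was unsure during the analysis are marked in bold. Corrections are welcome if I have misunderstood anything.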

__setup_irq: threading setup

895 /*

896 * Internal function to register an irqaction - typically used to

897 * allocate special interrupts that are part of the architecture.

898 */

899 static int

900 __setup_irq(unsigned int irq, struct irq_desc *desc, struct irqaction *new)

901 {

902 struct irqaction *old, **old_ptr;

903 unsigned long flags, thread_mask = 0;

904 int ret, nested, shared = 0;

905 cpumask_var_t mask;

906

907 if (!desc)

908 return -EINVAL;

909

910 if (desc->irq_data.chip == &no_irq_chip)

911 return -ENOSYS;

912 if (!try_module_get(desc->owner))

913 return -ENODEV;

914

915 /*

916 * Check whether the interrupt nests into another interrupt

917 * thread.

918 */

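919 nested = irq_settings_is_nested_thread(desc); // check whether this is a nested interrupt thread; nested interrupt handling is analyzed later

920 if (nested) {

921 if (!new->thread_fn) { // a nested interrupt does not need a handler, but it must have a thread_fn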

922 ret = -EINVAL;

923 goto out_mput;

924 }

925 /*

926 * Replace the primary handler which was provided from

927 * the driver for non nested interrupt handling by the

928 * dummy function which warns when called.

929 */

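930 new->handler = irq_nested_primary_handler; // this dummy handler only emits a warning; a nested IRQ is invoked from the parent interrupt's handler, not from here

931 } else {

932 if (irq_settings_can_thread(desc)) // some interrupts must not be threaded and are marked IRQ_NOTHREAD

933 irq_setup_forced_threading(new); // force threading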

934 }


irq_setup_forced_threading

879 static void irq_setup_forced_threading(struct irqaction *new)

880 {

881 if (!force_irqthreads) // global flag: whether forced interrupt threading is enabled on this system

882 return;

883 if (new->flags & (IRQF_NO_THREAD | IRQF_PERCPU | IRQF_ONESHOT)) // return immediately for IRQF_NO_THREAD, per-CPU interrupts, or interrupts that are already oneshot (already threaded)

884 return;

885

886 new->flags |= IRQF_ONESHOT; // a threaded interrupt needs the IRQF_ONESHOT flag

887 // if thread_fn is NULL (so handler cannot be NULL), force threading by moving handler into thread_fn and setting handler to irq_default_primary_handler

888 if (!new->thread_fn) {

889 set_bit(IRQTF_FORCED_THREAD, &new->thread_flags); // IRQTF_FORCED_THREAD in thread_flags marks the action as force-threaded

890 new->thread_fn = new->handler;

891 new->handler = irq_default_primary_handler;

892 }

893 }

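The handler swap performed by irq_setup_forced_threading can be modelled in user space. In this sketch the flag values, the `sim_` names and the `forced_thread` field (standing in for the IRQTF_FORCED_THREAD bit) are hypothetical, and force_irqthreads is passed as a parameter instead of being a global.

```c
#include <assert.h>
#include <stddef.h>

#define SIM_IRQF_PERCPU    0x00400u   /* illustrative flag values */
#define SIM_IRQF_ONESHOT   0x02000u
#define SIM_IRQF_NO_THREAD 0x10000u

typedef int (*sim_handler_t)(int irq, void *dev_id);

struct sim_irqaction {
    sim_handler_t handler;
    sim_handler_t thread_fn;
    unsigned flags;
    int forced_thread;      /* models the IRQTF_FORCED_THREAD bit */
};

/* Models irq_default_primary_handler: only wakes the thread. */
static int sim_default_primary_handler(int irq, void *dev_id)
{
    (void)irq; (void)dev_id;
    return 2;               /* stands for IRQ_WAKE_THREAD */
}

static void sim_setup_forced_threading(int force_irqthreads,
                                       struct sim_irqaction *new)
{
    if (!force_irqthreads)
        return;
    if (new->flags & (SIM_IRQF_NO_THREAD | SIM_IRQF_PERCPU | SIM_IRQF_ONESHOT))
        return;

    new->flags |= SIM_IRQF_ONESHOT;

    if (!new->thread_fn) {
        /* The driver's handler now runs in the thread instead. */
        new->forced_thread = 1;
        new->thread_fn = new->handler;
        new->handler = sim_default_primary_handler;
    }
}

/* Hypothetical driver handler used in the usage example. */
static int sim_driver_handler(int irq, void *dev_id)
{
    (void)irq; (void)dev_id;
    return 1;
}
```

With forcing disabled the action is untouched. With forcing enabled, an action that only had `sim_driver_handler` ends up with that function as its thread function and the default primary handler in front of it, unless it carries one of the three exempting flags.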

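My understanding of the code above, which may not be correct: when force_irqthreads is set, the kernel force-threads every IRQ that is neither nested nor marked IRQ_NOTHREAD, unless its action carries IRQF_NO_THREAD, IRQF_PERCPU or IRQF_ONESHOT.

The comment for IRQF_ONESHOT reads: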

52 * IRQF_ONESHOT - Interrupt is not reenabled after the hardirq handler finished.

53 * Used by threaded interrupts which need to keep the

54 * irq line disabled until the threaded handler has been run.


The upstream commit message for IRQF_ONESHOT is quoted below:

For threaded interrupt handlers we expect the hard interrupt handler

part to mask the interrupt on the originating device. The interrupt

line itself is reenabled after the hard interrupt handler has

executed.

This requires access to the originating device from hard interrupt

context which is not always possible. There are devices which can only

be accessed via a bus (i2c, spi, ...). The bus access requires thread

context. For such devices we need to keep the interrupt line masked

until the threaded handler has executed.

Add a new flag IRQF_ONESHOT which allows drivers to request that the

interrupt is not unmasked after the hard interrupt context handler has

been executed and the thread has been woken. The interrupt line is

unmasked after the thread handler function has been executed.

Note that for now IRQF_ONESHOT cannot be used with IRQF_SHARED to

avoid complex accounting mechanisms.

For oneshot interrupts the primary handler simply returns

IRQ_WAKE_THREAD and does nothing else. A generic implementation

irq_oneshot_primary_handler() is provided to avoid useless copies all

over the place.


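So:

1. For a threaded interrupt handler, the hardirq part running in interrupt context masks the interrupt on the originating device; once the hard interrupt handler has run, the interrupt line itself is re-enabled;

2. However, not every device can be accessed from interrupt context. Some devices can only be reached over a bus such as i2c or spi, and bus access requires process context (I do not fully understand why; such transfers can sleep, which is not allowed in interrupt context). In other words, the code that services the interrupt has to run in process context (my understanding), so for these devices the interrupt must stay masked while that code runs;

3. IRQF_ONESHOT was introduced for this case: the flag keeps the interrupt line masked for as long as the interrupt thread runs, and unmasks it when the thread finishes.

With IRQF_ONESHOT set, the interrupt line stays disabled after the hard interrupt handler completes and is only re-enabled once the threaded handler has finished.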
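The oneshot rule (the line stays masked until every woken thread has finished) can be sketched as a bitmask. This is a user-space model, not kernel code: `struct sim_line`, its `oneshot_pending` field and the function names are hypothetical, with one bit per threaded action on the line.

```c
#include <assert.h>

struct sim_line {
    int masked;                     /* line masked at the controller */
    unsigned long oneshot_pending;  /* one bit per woken, unfinished thread */
};

/* Hard interrupt part: mask the line and note which thread was woken. */
static void sim_hardirq(struct sim_line *l, unsigned long thread_mask)
{
    l->masked = 1;
    l->oneshot_pending |= thread_mask;

    /* Unmask right away only if no oneshot thread is outstanding. */
    if (!l->oneshot_pending)
        l->masked = 0;
}

/* Thread part: clear our bit; the last thread to finish unmasks. */
static void sim_thread_done(struct sim_line *l, unsigned long thread_mask)
{
    l->oneshot_pending &= ~thread_mask;
    if (!l->oneshot_pending)
        l->masked = 0;
}
```

If two threads with masks `1UL << 0` and `1UL << 1` are woken, the line remains masked after the first one finishes and is unmasked only when the second one is done. Passing a mask of 0 models a non-oneshot action, for which the line is unmasked immediately.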

__setup_irq: creating the irq handler thread

936 /*

937 * Create a handler thread when a thread function is supplied

938 * and the interrupt does not nest into another interrupt

939 * thread.

940 */

941 if (new->thread_fn && !nested) {

942 struct task_struct *t;

943 static const struct sched_param param = {

944 .sched_priority = MAX_USER_RT_PRIO/2, // priority of the thread

945 };

946 // create a thread named irq/<irq>-<name> that runs irq_thread with the new irqaction as its argument; the thread is only created here, not woken

947 t = kthread_create(irq_thread, new, "irq/%d-%s", irq,

948 new->name);

949 if (IS_ERR(t)) {

950 ret = PTR_ERR(t);

951 goto out_mput;

952 }

953

954 sched_setscheduler_nocheck(t, SCHED_FIFO, &param); // set the scheduling policy and priority

955

956 /*

957 * We keep the reference to the task struct even if

958 * the thread dies to avoid that the interrupt code

959 * references an already freed task_struct.

960 */

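961 get_task_struct(t); // take a reference on the thread's task_struct so that it is not freed if the thread dies while the interrupt code still refers to it

962 new->thread = t;

963 /* // I did not follow this comment: why does setting the affinity matter for shared interrupts? irq_thread checks the affinity, so I will look at what it does there.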

964 * Tell the thread to set its affinity. This is

965 * important for shared interrupt handlers as we do

966 * not invoke setup_affinity() for the secondary

967 * handlers as everything is already set up. Even for

968 * interrupts marked with IRQF_NO_BALANCE this is

969 * correct as we want the thread to move to the cpu(s)

970 * on which the requesting code placed the interrupt.

971 */

972 set_bit(IRQTF_AFFINITY, &new->thread_flags);

973 }


__setup_irq: adding the new irqaction

974

975 if (!alloc_cpumask_var(&mask, GFP_KERNEL)) {

976 ret = -ENOMEM;

977 goto out_thread;

978 }

979 // some interrupt controllers already set IRQCHIP_ONESHOT_SAFE when they are registered; in that case the driver author does not need IRQF_ONESHOT

980 /*

981 * Drivers are often written to work w/o knowledge about the

982 * underlying irq chip implementation, so a request for a

983 * threaded irq without a primary hard irq context handler

984 * requires the ONESHOT flag to be set. Some irq chips like

985 * MSI based interrupts are per se one shot safe. Check the

986 * chip flags, so we can avoid the unmask dance at the end of

987 * the threaded handler for those.

988 */

989 if (desc->irq_data.chip->flags & IRQCHIP_ONESHOT_SAFE)

990 new->flags &= ~IRQF_ONESHOT;

991

992 /*

993 * The following block of code has to be executed atomically

994 */

995 raw_spin_lock_irqsave(&desc->lock, flags); // the irqaction list must be modified under the lock

996 old_ptr = &desc->action;

997 old = *old_ptr;

998 if (old) { // the irqaction list is not empty, so the interrupt is being shared

999 /*

1000 * Can't share interrupts unless both agree to and are

1001 * the same type (level, edge, polarity). So both flag

1002 * fields must have IRQF_SHARED set and the bits which

1003 * set the trigger type must match. Also all must

1004 * agree on ONESHOT.

1005 */

1006 if (!((old->flags & new->flags) & IRQF_SHARED) ||

1007 ((old->flags ^ new->flags) & IRQF_TRIGGER_MASK) ||

1008 ((old->flags ^ new->flags) & IRQF_ONESHOT))

1009 goto mismatch;

1010 // shared interrupts must have matching attributes (trigger type, oneshot, per-CPU, and so on)

1011 /* All handlers must agree on per-cpuness */

1012 if ((old->flags & IRQF_PERCPU) !=

1013 (new->flags & IRQF_PERCPU))

1014 goto mismatch;

1015 // this loop only walks the irqaction list to collect thread_mask, one bit per irqaction; the actual insertion happens later

1016 /* add new interrupt at end of irq queue */

1017 do {

1018 /*

1019 * Or all existing action->thread_mask bits,

1020 * so we can find the next zero bit for this

1021 * new action.

1022 */

1023 thread_mask |= old->thread_mask; // when the loop ends, the bits set in thread_mask cover every irqaction already attached to this shared interrupt

1024 old_ptr = &old->next;

1025 old = *old_ptr;

1026 } while (old);

1027 shared = 1; // the interrupt is shared

1028 }

1029

1030 /*

1031 * Setup the thread mask for this irqaction for ONESHOT. For

1032 * !ONESHOT irqs the thread mask is 0 so we can avoid a

1033 * conditional in irq_wake_thread().

1034 */

1035 if (new->flags & IRQF_ONESHOT) { // oneshot (threaded) handler

1036 /*

1037 * Unlikely to have 32 resp 64 irqs sharing one line,

1038 * but who knows.

1039 */

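1040 if (thread_mask == ~0UL) { // every bit is taken, so the maximum number of shared actions has been exceeded; bail out. I think a warning should be added here, otherwise developers may not know what happened.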

1041 ret = -EBUSY;

1042 goto out_mask;

1043 }

1044 /*

1045 * The thread_mask for the action is or'ed to

1046 * desc->thread_active to indicate that the

1047 * IRQF_ONESHOT thread handler has been woken, but not

1048 * yet finished. The bit is cleared when a thread

1049 * completes. When all threads of a shared interrupt

1050 * line have completed desc->threads_active becomes

1051 * zero and the interrupt line is unmasked. See

1052 * handle.c:irq_wake_thread() for further information.

1053 *

1054 * If no thread is woken by primary (hard irq context)

1055 * interrupt handlers, then desc->threads_active is

1056 * also checked for zero to unmask the irq line in the

1057 * affected hard irq flow handlers

1058 * (handle_[fasteoi|level]_irq).

1059 *

1060 * The new action gets the first zero bit of

1061 * thread_mask assigned. See the loop above which or's

1062 * all existing action->thread_mask bits.

1063 */

1064 new->thread_mask = 1 << ffz(thread_mask); // use the first zero bit as the new irqaction's bit

1065

1066 } else if (new->handler == irq_default_primary_handler &&

1067 !(desc->irq_data.chip->flags & IRQCHIP_ONESHOT_SAFE)) {

1068 /*

1069 * The interrupt was requested with handler = NULL, so

1070 * we use the default primary handler for it. But it

1071 * does not have the oneshot flag set. In combination

1072 * with level interrupts this is deadly, because the

1073 * default primary handler just wakes the thread, then

1074 * the irq lines is reenabled, but the device still

1075 * has the level irq asserted. Rinse and repeat....

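1076 // it is possible for handler to be NULL without the ONESHOT flag having been requested; after the thread is woken, the interrupt line would then not stay disabled, and the interrupt could fire over and over. This also means that when handler is NULL the driver author must specify ONESHOT, otherwise the request will not work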

1077 * While this works for edge type interrupts, we play

1078 * it safe and reject unconditionally because we can't

1079 * say for sure which type this interrupt really

1080 * has. The type flags are unreliable as the

1081 * underlying chip implementation can override them.

1082 */

1083 pr_err("Threaded irq requested with handler=NULL and !ONESHOT for irq %d\n",

1084 irq);

1085 ret = -EINVAL;

1086 goto out_mask;

1087 }

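Two pieces of this section are plain bit arithmetic and can be tested on their own: the flag compatibility check for sharing a line, and picking the first free bit for the new action's thread_mask. The sketch below mirrors both. The flag values and `sim_` names are illustrative assumptions, and `sim_ffz` is a simple loop standing in for the kernel's ffz().

```c
#include <assert.h>

#define SIM_IRQF_TRIGGER_MASK 0x000fu   /* illustrative flag values */
#define SIM_IRQF_SHARED       0x0080u
#define SIM_IRQF_PERCPU       0x0400u
#define SIM_IRQF_ONESHOT      0x2000u

/* Both actions must agree on sharing, trigger type, oneshot and per-CPU. */
static int sim_can_share(unsigned old_flags, unsigned new_flags)
{
    if (!((old_flags & new_flags) & SIM_IRQF_SHARED))
        return 0;
    if ((old_flags ^ new_flags) & SIM_IRQF_TRIGGER_MASK)
        return 0;
    if ((old_flags ^ new_flags) & SIM_IRQF_ONESHOT)
        return 0;
    if ((old_flags & SIM_IRQF_PERCPU) != (new_flags & SIM_IRQF_PERCPU))
        return 0;
    return 1;
}

/* Index of the first zero bit; the caller must ensure mask != ~0UL. */
static unsigned sim_ffz(unsigned long mask)
{
    unsigned i = 0;

    assert(mask != ~0UL);
    while (mask & 1UL) {
        mask >>= 1;
        i++;
    }
    return i;
}

/* Bit for the new action, or 0 if every bit is already taken. */
static unsigned long sim_next_thread_mask(unsigned long used)
{
    if (used == ~0UL)
        return 0;
    return 1UL << sim_ffz(used);
}
```

For a line whose existing actions hold bits 0 to 2 (`0x7`), the new action gets `0x8`. If the used mask has a gap, as in `0x5`, the gap is reused and the result is `0x2`.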