Minimum configuration: 4 GB of RAM and 2 CPUs with 2 cores each.

Setting up Kubernetes

Installing Docker

Downloading Docker

---
- name: Install Docker
  hosts: 172.25.250.99
  tasks:
    - name: Install prerequisite packages
      yum:
        name: "{{ item.package }}"
        state: present
      with_items:
        - {package: "yum-utils"}
        - {package: "device-mapper-persistent-data"}
        - {package: "lvm2"}
    - name: Fetch the docker-ce repo file
      get_url:
        url: https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
        dest: /etc/yum.repos.d/docker-ce.repo
    - name: Install Docker
      yum:
        name: docker-ce
        state: present
    # - name: Install python3
    #   package:
    #     name: python3
    #     state: present
    # - name: Install the Docker Python package
    #   pip:
    #     name: docker
    - name: Start Docker and enable it at boot
      service:
        name: docker
        state: started
        enabled: yes
    - name: Stop and disable the firewall
      service:
        name: firewalld
        state: stopped
        enabled: no
    - name: Disable SELinux
      selinux:
        state: disabled
    - name: Create the /etc/docker directory
      file:
        path: /etc/docker
        state: directory
    - name: Create /etc/docker/daemon.json
      file:
        path: /etc/docker/daemon.json
        state: touch
    - name: Configure the Docker registry mirror
      shell: |
        echo -e '{\n\t"registry-mirrors": [\n\t\t"https://registry.docker-cn.com"\n\t]\n}' > /etc/docker/daemon.json
    - name: Reload the systemd unit files
      shell: "systemctl daemon-reload"
    - name: Restart the Docker service
      service:
        name: docker
        state: restarted
    - name: Create the web directory
      file:
        path: /root/wordpress
        state: directory
    - name: Install rsync
      yum:
        name: rsync
        state: present
    - name: Copy the site files
      ansible.posix.synchronize:
        src: /root/wordpress/
        dest: /root/wordpress
        recursive: yes
    - name: Pull the httpd image
      docker_image:
        name: httpd
        source: pull
    - name: Create and start the httpd container
      docker_container:
        name: web02
        image: httpd
        state: started
        detach: yes
        interactive: yes
        ports:
          - "8080:80"
        volumes:
          - /root/wordpress/:/var/www/html/

wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

# Install
yum install docker-ce-20.10.0
# Check the version
docker --version

Start Docker

systemctl enable docker.service
systemctl start docker.service

Configure a Docker registry mirror

# Set the Docker registry mirror:
vim /etc/docker/daemon.json
{
  "registry-mirrors": ["https://frz7i079.mirror.aliyuncs.com"]
}
systemctl restart docker.service

Let iptables see bridged traffic and enable IP forwarding (optional)

For iptables on a Linux node to see bridged traffic correctly, make sure net.bridge.bridge-nf-call-iptables is set to 1 in the sysctl configuration. For example:

# Load the kernel modules that let iptables inspect bridged traffic
cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
overlay
br_netfilter
EOF
sudo modprobe overlay
sudo modprobe br_netfilter
# Set the required sysctl parameters; they persist across reboots
cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
EOF
# Apply the sysctl parameters without rebooting
sysctl --system
# Or: sysctl -p /etc/sysctl.d/k8s.conf

Installing k8s

Prepare the environment:

# Edit hosts
vim /etc/hosts
172.25.250.10 k8s cluster-endpoint
172.25.250.11 k8s-master
172.25.250.99 k8s-node1
# Disable SELinux
vim /etc/selinux/config
SELINUX=disabled
# swapoff turns off the system swap area; the sed line removes the swap entry so it stays off after a reboot
swapoff -a
sed -i '/swap/d' /etc/fstab
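Deleting every fstab line that mentions swap cannot be undone easily. A more cautious variant, sketched below, comments the entries out and keeps a backup. The helper name `comment_out_swap` and the throwaway demo file are assumptions made for illustration; on a real node the target would be /etc/fstab, and `swapoff -a` is still needed for the running system.

```shell
# Sketch: comment out swap entries in an fstab-style file instead of deleting them.
# comment_out_swap is a hypothetical helper name, not a system tool.
comment_out_swap() {
    local fstab="$1"
    cp "$fstab" "$fstab.bak"    # keep a backup next to the file
    # Prefix with '#' any non-comment line that has a whitespace-delimited "swap" field.
    sed -i -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}

# Demo on a throwaway file rather than the real /etc/fstab
demo_fstab="$(mktemp)"
cat > "$demo_fstab" <<'EOF'
/dev/mapper/centos-root /     xfs  defaults 0 0
/dev/mapper/centos-swap swap  swap defaults 0 0
EOF
comment_out_swap "$demo_fstab"
cat "$demo_fstab"
```

Restoring swap later then only requires removing the leading `#` or copying the `.bak` file back.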

# Stop and disable the firewall
systemctl stop firewalld
systemctl disable firewalld

Configure the k8s yum repository:

vim /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

Load the repository and install the kubectl, kubelet and kubeadm components:

mount /dev/cdrom /mnt
yum repolist
# yum install -y kubectl kubelet kubeadm
yum --showduplicates list kubelet kubectl kubeadm
yum install -y kubelet-1.21.14-0 kubectl-1.21.14-0 kubeadm-1.21.14-0

kubectl: the Kubernetes command-line tool, the usual way people interact with Kubernetes.

kubelet: an agent component that runs on every node of the cluster.

kubeadm: a tool that provides kubeadm init and kubeadm join as a best-practice "fast path" for creating Kubernetes clusters.

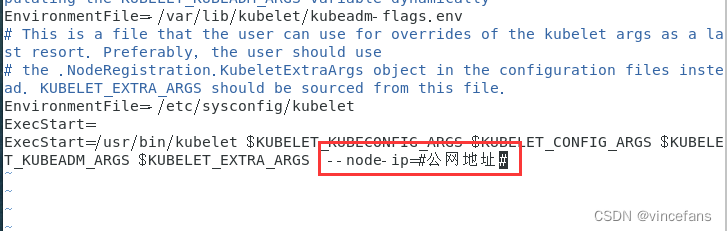

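To build a cluster over a public network (registering the cluster with a public address; otherwise the private address is used by default):

1. Add a second IP address to the network interface: ifconfig eth0:1 <public IP>

The matching removal command: ip addr del <public IP> dev eth0

2. Edit the 10-kubeadm.conf file

vim /usr/lib/systemd/system/kubelet.service.d/10-kubeadm.conf

This problem is caused by Docker's Cgroup Driver differing from the kubelet's Cgroup Driver; here Docker's driver is changed to match the kubelet's. Check Docker's current setting: docker info | grep Cgroup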

# The driver shown is cgroupfs; it needs to be changed to systemd. Edit the unit file
docker info | grep Cgroup
# vim /usr/lib/systemd/system/docker.service
# Add --exec-opt native.cgroupdriver=systemd to the ExecStart command
vim /usr/lib/systemd/system/docker.service
ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --exec-opt native.cgroupdriver=systemd
# Restart Docker
systemctl daemon-reload
systemctl restart docker.service
docker info | grep Cgroup

Start kubelet and enable it at boot:

systemctl enable kubelet
systemctl start kubelet

Initialize the cluster with kubeadm init:

kubeadm init --apiserver-advertise-address=172.25.250.10 \

--image-repository registry.aliyuncs.com/google_containers \

--control-plane-endpoint=cluster-endpoint \

--kubernetes-version=v1.21.14 \

--service-cidr=10.1.0.0/16 \

--pod-network-cidr=10.244.0.0/16 \

--v=5

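Parameter details:

--apiserver-advertise-address: the LAN address (of this host) that the cluster advertises. Using a public address takes a little more work; see the note below.

--image-repository: the default image registry k8s.gcr.io is not reachable from mainland China, so the Alibaba Cloud mirror registry is specified here.

--kubernetes-version: the k8s version; check it with kubelet --version.

--service-cidr: the cluster-internal virtual network used for Service addresses, the unified entry point to Pods (optional; default 10.96.0.0/12).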

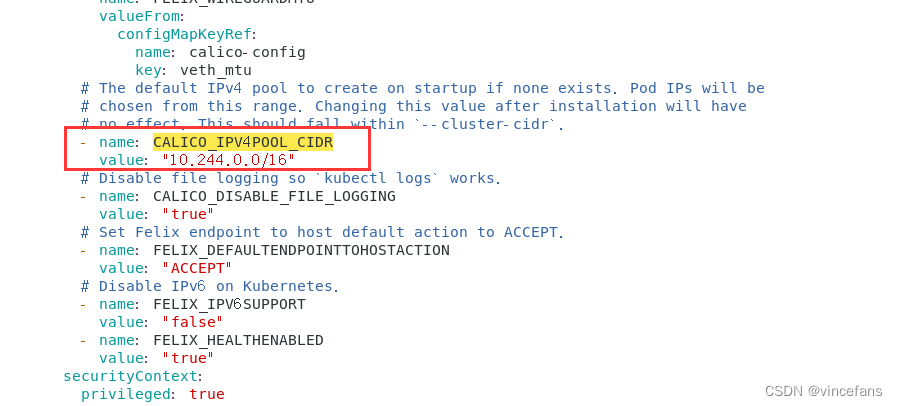

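--pod-network-cidr: the Pod network; it must match the value in the CNI add-on YAML deployed below (required).

--control-plane-endpoint: cluster-endpoint is a custom DNS name mapped to the control-plane IP, configured here through the hosts entry 172.25.250.10 cluster-endpoint. This lets you pass --control-plane-endpoint=cluster-endpoint to kubeadm init and the same DNS name to kubeadm join. Later you can repoint cluster-endpoint at the address of the load balancer in a high-availability setup.

--ignore-preflight-errors: ignores the listed preflight check errors, for example IsPrivilegedUser or Swap.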
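The same settings can also be kept in a file rather than on the command line. The sketch below writes a kubeadm configuration that mirrors the flags described above, assuming the `kubeadm.k8s.io/v1beta2` config API that ships with the v1.21 series. `CONFIG_FILE` is an illustrative variable name, and the script only writes the file; it does not run kubeadm.

```shell
# Sketch: a kubeadm config file equivalent to the command-line flags above.
# CONFIG_FILE is an illustrative name; change the path as needed.
CONFIG_FILE="${CONFIG_FILE:-kubeadm-config.yaml}"

cat > "$CONFIG_FILE" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 172.25.250.10    # --apiserver-advertise-address
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.21.14                                # --kubernetes-version
controlPlaneEndpoint: cluster-endpoint                     # --control-plane-endpoint
imageRepository: registry.aliyuncs.com/google_containers   # --image-repository
networking:
  serviceSubnet: 10.1.0.0/16     # --service-cidr
  podSubnet: 10.244.0.0/16       # --pod-network-cidr
EOF

echo "Wrote $CONFIG_FILE; it could then be used as: kubeadm init --config $CONFIG_FILE --v=5"
```

Keeping the file under version control makes it easier to see later which CIDRs and endpoint the cluster was created with.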

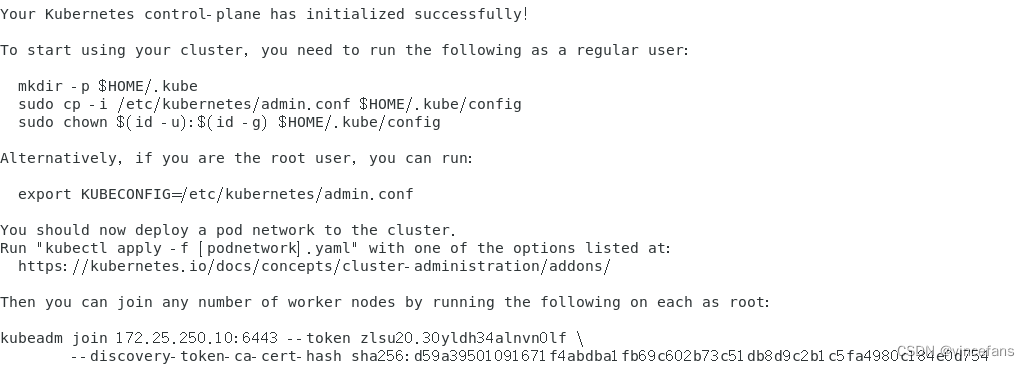

# 根据提示创建目录和配置文件

mkdir -p $HOME/.kube

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

# 修改文件权限

chown $(id -u):$(id -g) $HOME/.kube/config

ls -ld .kube/config

# 永久生效

echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile

source ~/.bash_profile

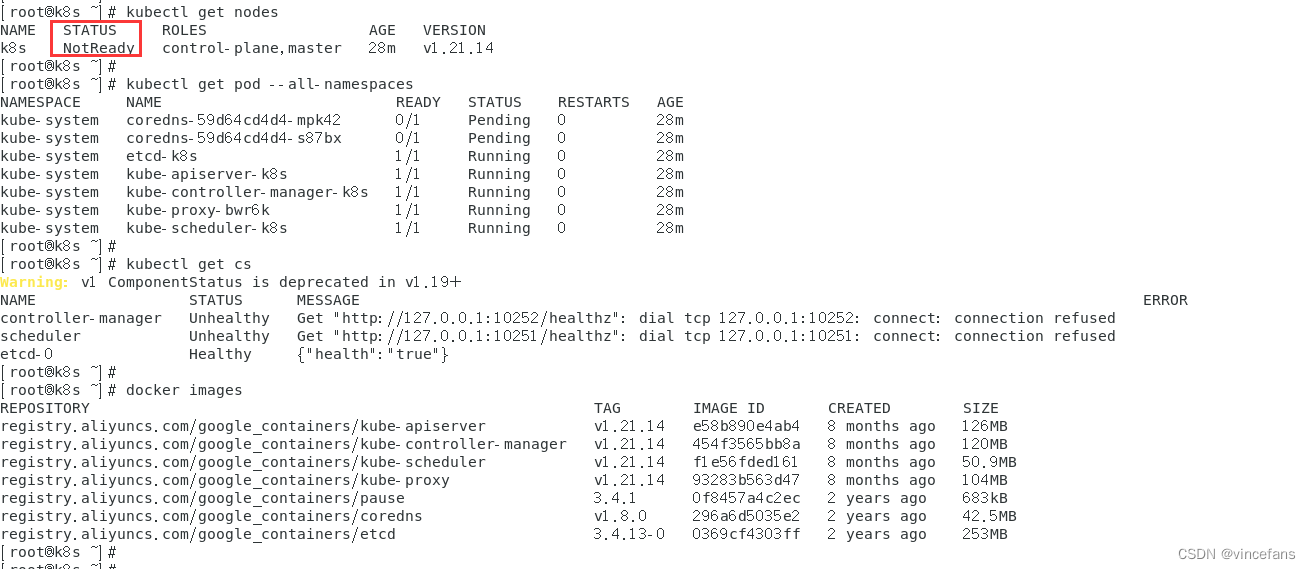

# 现在已经可以看到master节点了

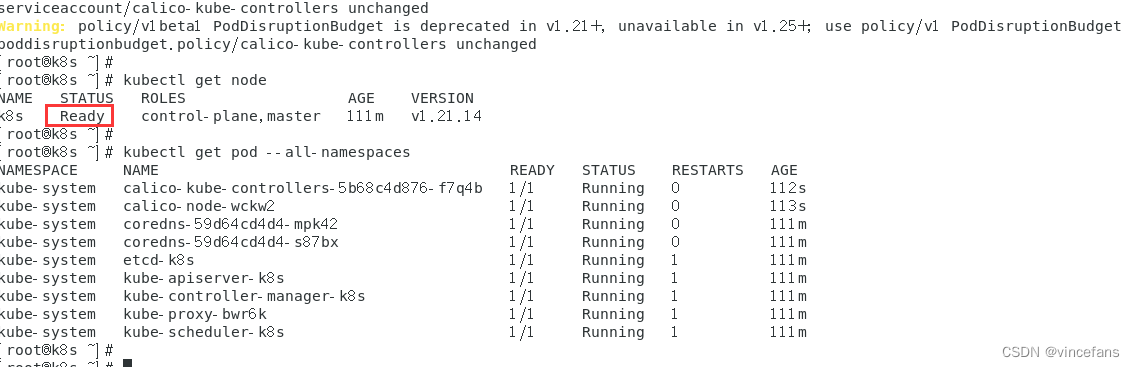

kubectl get node

kubectl get pod --all-namespaces

kubectl get cs

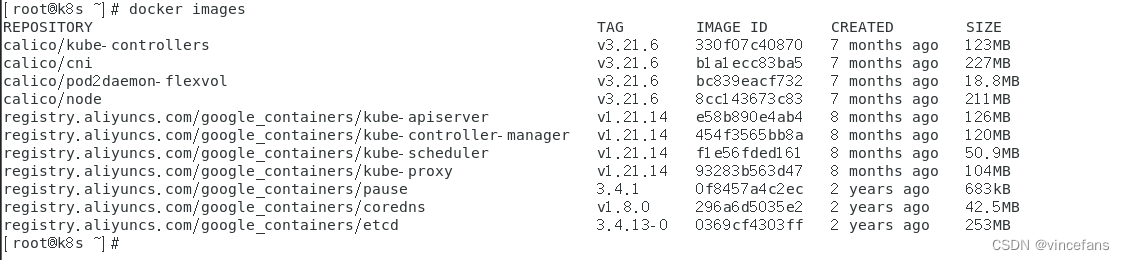

docker images

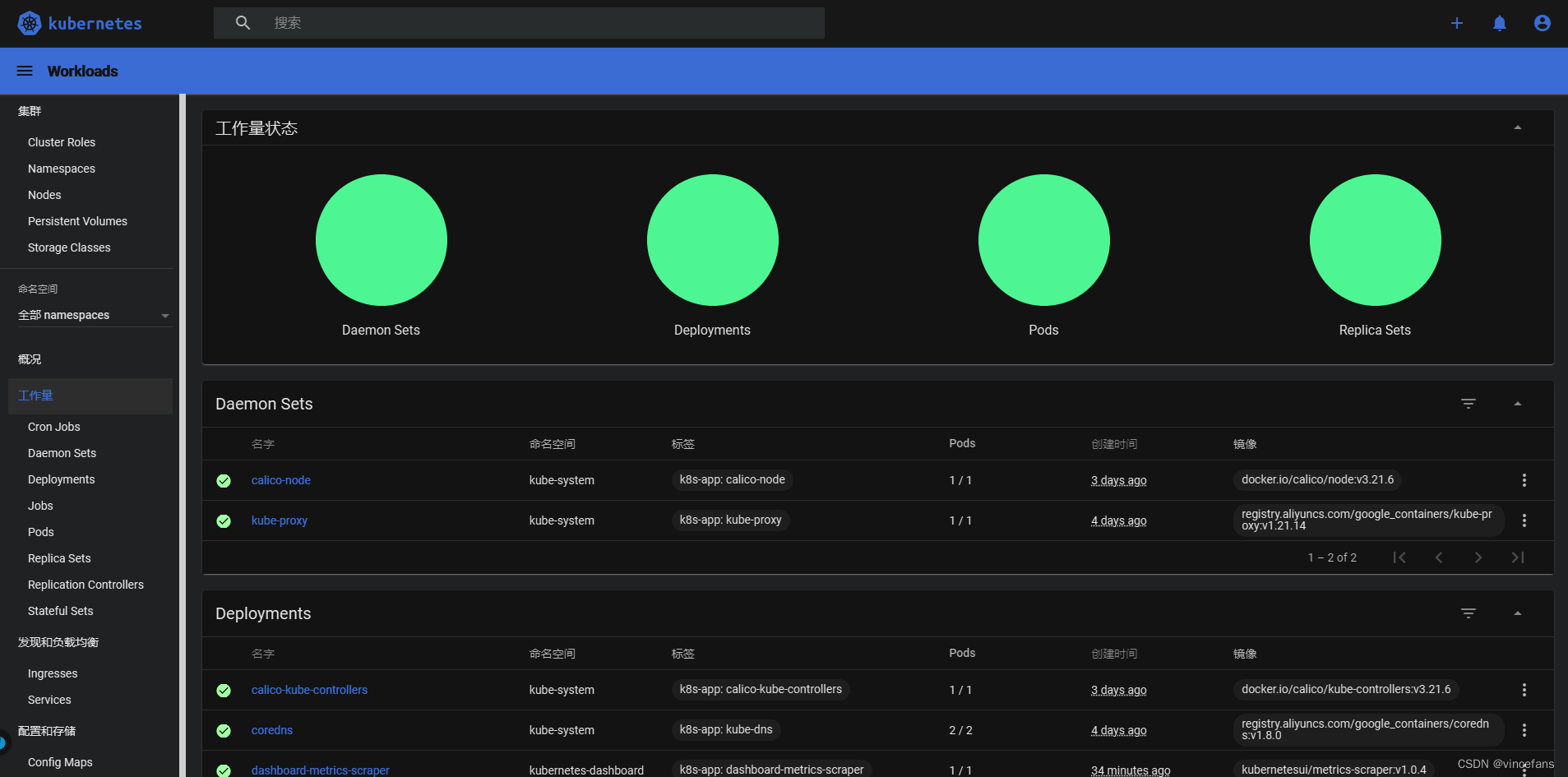

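Installing a Pod network add-on (CNI: Container Network Interface) (master)

Install the Calico network (or flannel)

The node is NotReady because the coredns pods cannot start until a network add-on is deployed.

curl https://docs.projectcalico.org/archive/v3.21/manifests/calico.yaml -O
# List the Calico images to download; these four images must be pulled on every node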

grep image calico.yaml

image: docker.io/calico/cni:v3.21.6

image: docker.io/calico/cni:v3.21.6

image: docker.io/calico/pod2daemon-flexvol:v3.21.6

image: docker.io/calico/node:v3.21.6

image: docker.io/calico/kube-controllers:v3.21.6

docker pull docker.io/calico/cni:v3.21.6

docker pull docker.io/calico/pod2daemon-flexvol:v3.21.6

docker pull docker.io/calico/node:v3.21.6

docker pull docker.io/calico/kube-controllers:v3.21.6

# Check that the pool CIDR in the manifest matches --pod-network-cidr
grep -E 'CALICO_IPV4POOL_CIDR|10\.244' calico.yaml
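If the manifest still carries the pool CIDR as a commented-out entry, it has to be enabled and set to the value passed to --pod-network-cidr. The following is a minimal sketch of doing that with sed, assuming the entry appears in its usual two-line commented form; `set_calico_pool_cidr` is a hypothetical helper name, and the demo works on a small made-up fragment rather than the real calico.yaml.

```shell
# Sketch: enable CALICO_IPV4POOL_CIDR in a Calico manifest and set its value.
# set_calico_pool_cidr is a hypothetical helper name.
set_calico_pool_cidr() {
    local manifest="$1" cidr="$2"
    sed -i -E \
        -e 's|^([[:space:]]*)# (- name: CALICO_IPV4POOL_CIDR)|\1\2|' \
        -e '/- name: CALICO_IPV4POOL_CIDR/{n;s|^([[:space:]]*)(# )?( *value: ).*|\1\3"'"$cidr"'"|;}' \
        "$manifest"
}

# Demo on a fragment that imitates the relevant part of the manifest
demo_manifest="$(mktemp)"
cat > "$demo_manifest" <<'EOF'
            # - name: CALICO_IPV4POOL_CIDR
            #   value: "192.168.0.0/16"
            - name: CALICO_DISABLE_FILE_LOGGING
              value: "true"
EOF
set_calico_pool_cidr "$demo_manifest" "10.244.0.0/16"
cat "$demo_manifest"
```

The first expression uncomments the name line; the second moves to the following line and rewrites its value, whether or not that line was still commented.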

Apply the calico.yaml file

kubectl apply -f calico.yaml

kubectl get node

kubectl get pod --all-namespaces

# If the CNI pods suddenly fail to come up, pin the interface used for IP autodetection
kubectl set env daemonset/calico-node -n kube-system 'IP_AUTODETECTION_METHOD=interface=en.*'

Configuring tab completion for kubectl

# Look up the bash completion entry in the kubectl help
kubectl --help | grep bash
# Add "source <(kubectl completion bash)" to /etc/profile, then reload it
vim /etc/profile
# /etc/profile
source <(kubectl completion bash)
# Reload the environment
source /etc/profile
# Install the bash-completion package
yum install -y bash-completion

Deploying the Dashboard

1. Create the Dashboard YAML file

GitHub: https://github.com/kubernetes/dashboard

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.4.0/aio/deploy/recommended.yaml
vim recommended.yaml

# Copyright 2017 The Kubernetes Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.
apiVersion: v1
kind: Namespace
metadata:
  name: kubernetes-dashboard
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30001
  selector:
    k8s-app: kubernetes-dashboard
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kubernetes-dashboard
type: Opaque
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-csrf
  namespace: kubernetes-dashboard
type: Opaque
data:
  csrf: ""
---
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-key-holder
  namespace: kubernetes-dashboard
type: Opaque
---
kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-settings
  namespace: kubernetes-dashboard
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
rules:
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]
    verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["kubernetes-dashboard-settings"]
    verbs: ["get", "update"]
  # Allow Dashboard to get metrics.
  - apiGroups: [""]
    resources: ["services"]
    resourceNames: ["heapster", "dashboard-metrics-scraper"]
    verbs: ["proxy"]
  - apiGroups: [""]
    resources: ["services/proxy"]
    resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]
    verbs: ["get"]
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
rules:
  # Allow Metrics Scraper to get metrics from the Metrics server
  - apiGroups: ["metrics.k8s.io"]
    resources: ["pods", "nodes"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kubernetes-dashboard
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
        - name: kubernetes-dashboard
          image: kubernetesui/dashboard:v2.4.0
          imagePullPolicy: Always
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kubernetes-dashboard
            # Uncomment the following line to manually specify Kubernetes API server Host
            # If not specified, Dashboard will attempt to auto discover the API server and connect
            # to it. Uncomment only if the default does not work.
            # - --apiserver-host=http://my-address:port
          volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
              # Create on-disk volume to store exec logs
            - mountPath: /tmp
              name: tmp-volume
          livenessProbe:
            httpGet:
              scheme: HTTPS
              path: /
              port: 8443
            initialDelaySeconds: 30
            timeoutSeconds: 30
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      volumes:
        - name: kubernetes-dashboard-certs
          secret:
            secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
---
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  ports:
    - port: 8000
      targetPort: 8000
  selector:
    k8s-app: dashboard-metrics-scraper
---
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: dashboard-metrics-scraper
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: dashboard-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
    spec:
      securityContext:
        seccompProfile:
          type: RuntimeDefault
      containers:
        - name: dashboard-metrics-scraper
          image: kubernetesui/metrics-scraper:v1.0.7
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8000
            initialDelaySeconds: 30
            timeoutSeconds: 30
          volumeMounts:
            - mountPath: /tmp
              name: tmp-volume
          securityContext:
            allowPrivilegeEscalation: false
            readOnlyRootFilesystem: true
            runAsUser: 1001
            runAsGroup: 2001
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
        - key: node-role.kubernetes.io/master
          effect: NoSchedule
      volumes:
        - name: tmp-volume
          emptyDir: {}

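The upstream manifest does not use a NodePort, so the kubernetes-dashboard Service in the file has to be edited to add two lines (the listing above already includes them):

type: NodePort
nodePort: 30001

Deploy the service:

kubectl apply -f recommended.yaml
# To redeploy, delete the resources first:
# kubectl delete -f recommended.yaml
# Check the svc and pod information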

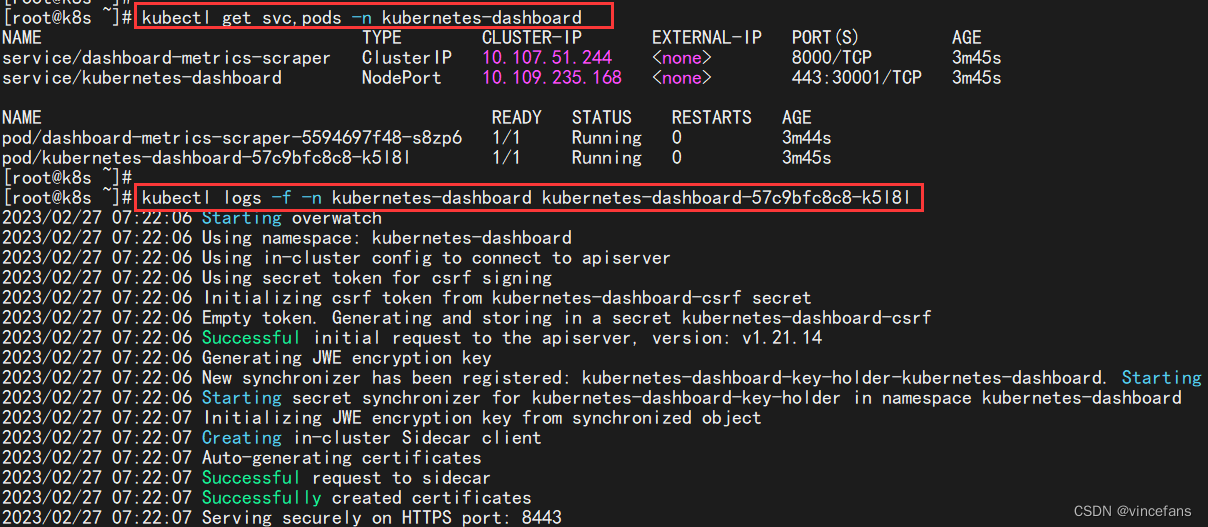

kubectl get pods -n kubernetes-dashboard -o wide

kubectl get svc,pods -n kubernetes-dashboard

# View logs
# kubectl logs -f -n kubernetes-dashboard <pod-name>
kubectl logs -f -n kubernetes-dashboard kubernetes-dashboard-57c9bfc8c8-k5l8l
kubectl logs -f -n kubernetes-dashboard service/kubernetes-dashboard
# View details
# kubectl describe pod -n kubernetes-dashboard <pod-name>
kubectl describe pod -n kubernetes-dashboard dashboard-metrics-scraper-5594697f48-s8zp

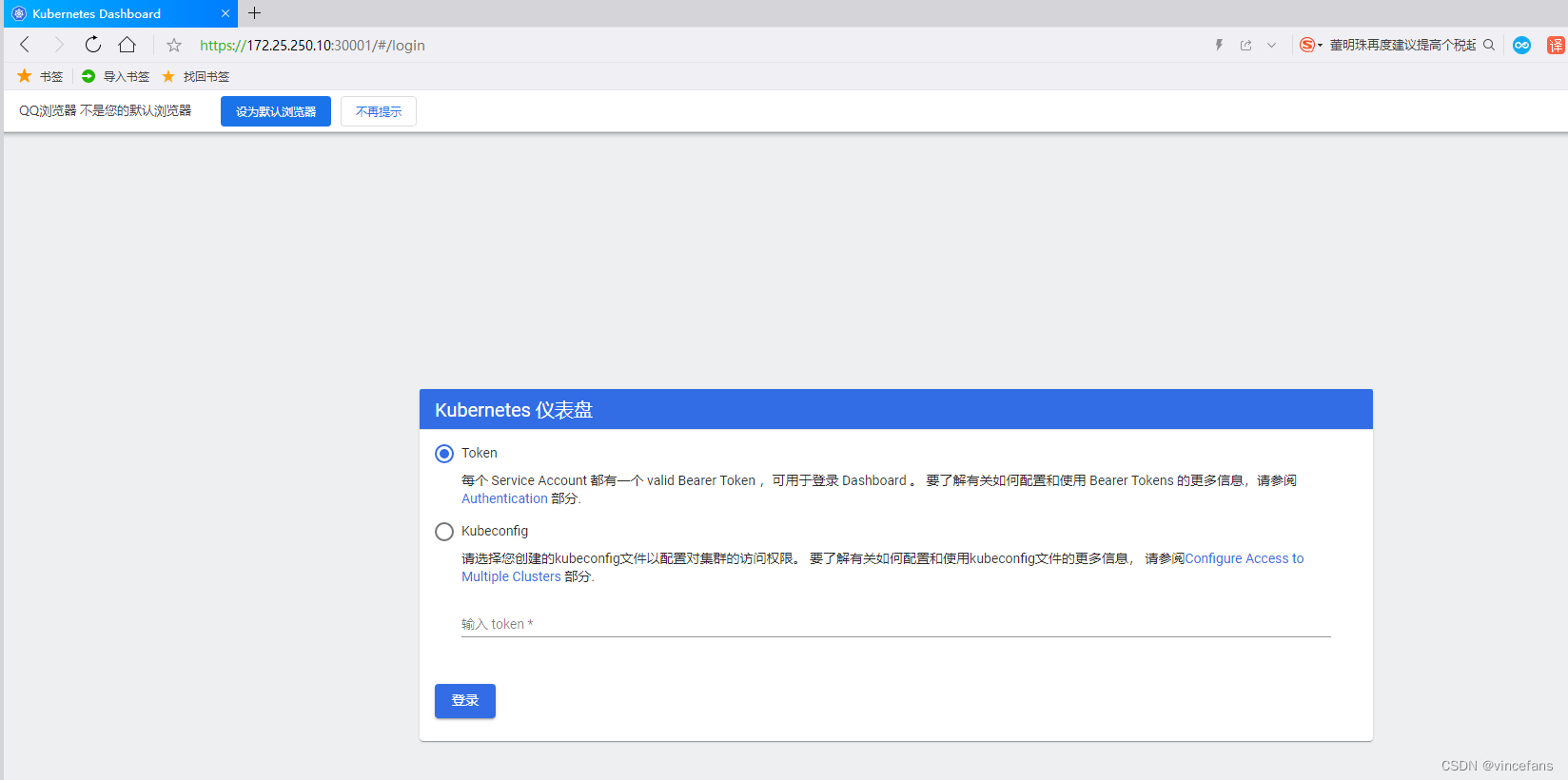

https://172.25.250.10:30001

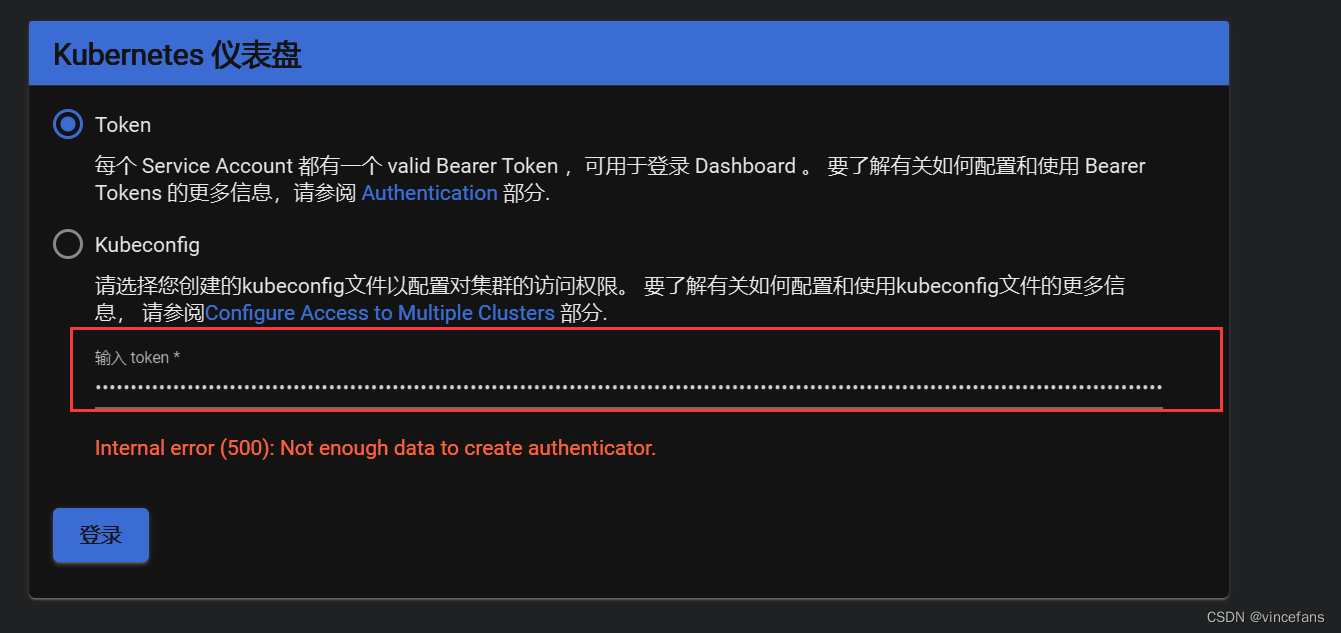

Logging in to the Dashboard

1. Create the service account that owns the token:

kubectl create serviceaccount dashboard-admin -n kubernetes-dashboard

To delete the account:

kubectl delete serviceaccount dashboard-admin -n kubernetes-dashboard

2. Grant the account access:

kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kubernetes-dashboard:dashboard-admin

To delete the binding:

kubectl delete clusterrolebinding dashboard-admin

3. Get the token:

kubectl describe secrets -n kubernetes-dashboard $(kubectl -n kubernetes-dashboard get secret | awk '/dashboard-admin/{print $1}')

Or:

ADMIN_SECRET=$(kubectl get secrets -n kubernetes-dashboard | grep dashboard-admin | awk '{print $1}')

DASHBOARD_LOGIN_TOKEN=$(kubectl describe secret -n kubernetes-dashboard ${ADMIN_SECRET} | grep -E '^token' | awk '{print $2}')

echo ${DASHBOARD_LOGIN_TOKEN}
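The grep and awk steps above can be folded into one small filter. The sketch below shows the idea on a made-up sample of `kubectl describe secret` output, so it runs without a cluster; `extract_token` is a hypothetical helper name and the token value is a placeholder.

```shell
# Sketch: pull the token field out of `kubectl describe secret` output.
# extract_token is a hypothetical helper; it reads the describe output on stdin.
extract_token() {
    awk '$1 == "token:" { print $2 }'
}

# Demo with a made-up describe output (the token is a placeholder, not a real credential)
sample_output='Name:         dashboard-admin-token-abcde
Namespace:    kubernetes-dashboard
Type:  kubernetes.io/service-account-token

Data
====
ca.crt:     1066 bytes
namespace:  20 bytes
token:      PLACEHOLDER.TOKEN.VALUE'

token="$(printf '%s\n' "$sample_output" | extract_token)"
echo "$token"

# On a live cluster (not run here) the helper would be fed like this:
# kubectl describe secret -n kubernetes-dashboard "$ADMIN_SECRET" | extract_token
```

Matching on the exact first field avoids picking up other lines that merely contain the word token, such as the secret's name.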

Deploying the cluster:

| Host | IP |
| --- | --- |
| k8s (master) | 172.25.250.10 |
| k8s-master (master) | 172.25.250.11 |
| k8s-node1 | 172.25.250.99 |

Prepare the environment:

# Edit the hosts file
cat >> /etc/hosts<<EOF
172.25.250.10 k8s cluster-endpoint
172.25.250.11 k8s-master
172.25.250.99 k8s-node1
EOF
# Disable SELinux
vim /etc/selinux/config
SELINUX=disabled
# swapoff turns off the system swap area; the sed line removes the swap entry so it stays off after a reboot
swapoff -a
sed -i '/swap/d' /etc/fstab
# Time synchronization
yum install chrony -y
systemctl start chronyd
systemctl enable chronyd
chronyc sources
# Stop and disable the firewall
systemctl stop firewalld
systemctl disable firewalld

Let iptables see bridged traffic and enable IP forwarding (optional)

For iptables on a Linux node to see bridged traffic correctly, make sure net.bridge.bridge-nf-call-iptables is set to 1 in the sysctl configuration. For example:

# Load the kernel modules that let iptables inspect bridged traffic
cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
overlay
br_netfilter
EOF
sudo modprobe overlay
sudo modprobe br_netfilter
# Set the required sysctl parameters; they persist across reboots
cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
EOF
# Apply the sysctl parameters without rebooting
sysctl --system
# Or: sysctl -p /etc/sysctl.d/k8s.conf

Install the Docker container runtime (all nodes):

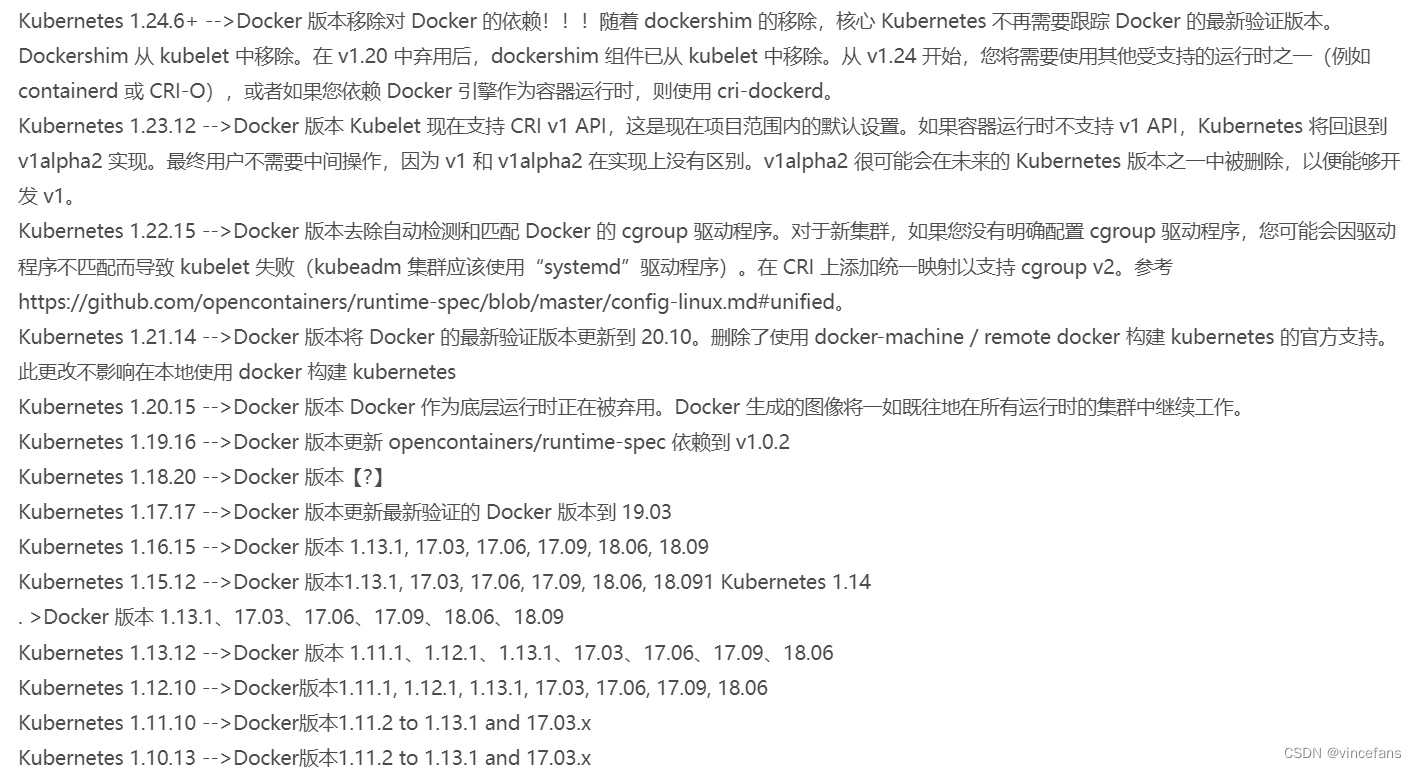

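Note: Kubernetes versions before v1.24 included a direct integration with Docker Engine through a component named dockershim. That direct integration is no longer part of Kubernetes (the removal was announced as part of the v1.20 release). You can read "Check whether dockershim removal affects you" to understand how the removal might affect you. To learn how to migrate away from dockershim, see "Migrating from dockershim".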

# Configure the yum repositories
cd /etc/yum.repos.d ; mkdir bak; mv CentOS-Linux-* bak/
# CentOS 7
wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo
# CentOS 8
# wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-8.repo
# Install the yum-config-manager tool
yum -y install yum-utils
# Add the Docker repository
yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
# Install docker-ce
yum install docker-ce-20.10.0
# Start Docker and enable it at boot
systemctl start docker
systemctl enable docker
# Check the version
docker --version
# Show detailed version information
docker version
# Docker registry mirror
# Edit /etc/docker/daemon.json (create it if it does not exist),
# add the following content, then reload the Docker service:
cat >/etc/docker/daemon.json<<EOF
{
  "registry-mirrors": ["http://hub-mirror.c.163.com"]
}
EOF
# Reload
systemctl reload docker
# Check the status
systemctl status docker containerd

Configure the k8s yum repository (all nodes):

cat > /etc/yum.repos.d/kubernetes.repo << EOF

[k8s]

name=k8s

enabled=1

gpgcheck=0

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

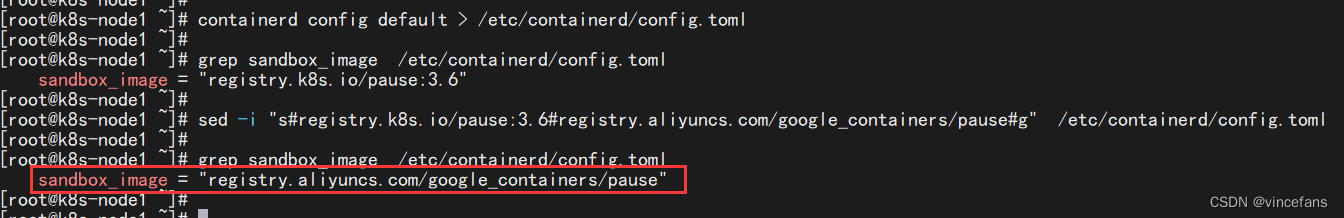

EOF将 sandbox_image 镜像源设置为阿里云 google_containers 镜像源(所有节点)

# Export the default configuration; config.toml does not exist by default
containerd config default > /etc/containerd/config.toml
grep sandbox_image /etc/containerd/config.toml
sed -i "s#registry.k8s.io/pause:3.6#registry.aliyuncs.com/google_containers/pause#g" /etc/containerd/config.toml
grep sandbox_image /etc/containerd/config.toml

Configure the containerd cgroup driver as systemd (all nodes)

Since v1.24.0, Kubernetes no longer uses dockershim and uses containerd as the container runtime endpoint instead. containerd therefore has to be installed; it was installed automatically together with Docker above. Docker here acts only as a client, while the container engine is containerd.

# The driver shown is cgroupfs; it needs to be changed to systemd. Edit the unit file
docker info | grep Cgroup
# vim /usr/lib/systemd/system/docker.service
# Add --exec-opt native.cgroupdriver=systemd to the ExecStart command
vim /usr/lib/systemd/system/docker.service
ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --exec-opt native.cgroupdriver=systemd
# Restart Docker
systemctl daemon-reload
systemctl restart docker.service
docker info | grep Cgroup

6) Install kubeadm, kubelet and kubectl (master nodes)

# Without an explicit version, the latest version is installed
yum install -y kubelet-1.21.14 kubeadm-1.21.14 kubectl-1.21.14
systemctl start kubelet.service
systemctl enable kubelet.service
# Check the version
kubectl version
yum info kubeadm

Adding a worker node:

master: generate the token and the --discovery-token-ca-cert-hash value

# Check the cluster information
kubectl cluster-info
kubectl get nodes -o wide
kubectl get pods -A -o wide
# List the existing tokens
kubeadm token list
# If the token has expired, create a new one
kubeadm token create
kubeadm token list
# If the --discovery-token-ca-cert-hash value is not at hand, compute it on the master with openssl

openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'
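To see what that pipeline produces without touching a real control plane, it can be wrapped in a function and run against a throwaway certificate. This is only a sketch: `ca_cert_hash` is a hypothetical helper name, the self-signed demo certificate stands in for /etc/kubernetes/pki/ca.crt, and an RSA key is assumed, as in the original command.

```shell
# Sketch: compute the sha256 hash of a CA certificate's public key,
# in the form kubeadm expects after the "sha256:" prefix.
# ca_cert_hash is a hypothetical helper name wrapping the pipeline above.
ca_cert_hash() {
    openssl x509 -pubkey -noout -in "$1" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'
}

# Demo: a throwaway self-signed certificate stands in for the cluster CA.
workdir="$(mktemp -d)"
openssl req -x509 -newkey rsa:2048 -nodes -days 1 -subj "/CN=demo-ca" \
    -keyout "$workdir/ca.key" -out "$workdir/ca.crt" 2>/dev/null

hash="$(ca_cert_hash "$workdir/ca.crt")"
echo "sha256:$hash"
```

On a master node the call would be `ca_cert_hash /etc/kubernetes/pki/ca.crt`, and the result is passed to kubeadm join as `--discovery-token-ca-cert-hash sha256:<hash>`.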

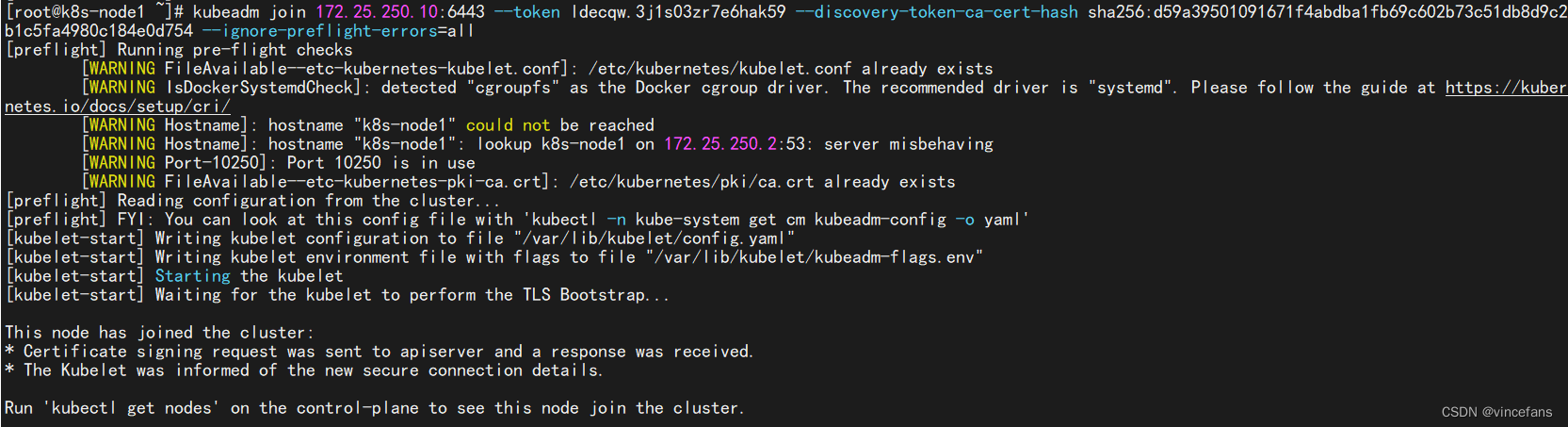

node: join the cluster using the token and the --discovery-token-ca-cert-hash value generated on the master

# To add worker nodes that run workloads, run the join command printed at the end of a successful cluster init on each remaining node:
kubeadm join 172.25.250.10:6443 --token ldecqw.3j1s03zr7e6hak59 \
    --discovery-token-ca-cert-hash sha256:d59a39501091671f4abdba1fb69c602b73c51db8d9c2b1c5fa4980c184e0d754 \
    --ignore-preflight-errors=all
# Check the nodes
[root@k8s-master ~]# kubectl get node
The connection to the server localhost:8080 was refused - did you specify the right host or port?
# If this error appears:
# echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> /etc/profile
# source /etc/profile

Adding a master node:

# k8s: generate the token and the certificate key

[root@k8s ~]# kubeadm token create --print-join-command

kubeadm join 172.25.250.10:6443 --token 8joe59.i7og7wbu7ekh7amr --discovery-token-ca-cert-hash sha256:d59a39501091671f4abdba1fb69c602b73c51db8d9c2b1c5fa4980c184e0d754

[root@k8s ~]#

[root@k8s ~]# kubeadm init phase upload-certs --upload-certs

I0308 15:04:41.534044 76027 version.go:254] remote version is much newer: v1.26.2; falling back to: stable-1.21

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

150ea5096386bffaa2aa6f027eb2043c554572420b10b13f079f94803cb5a38b

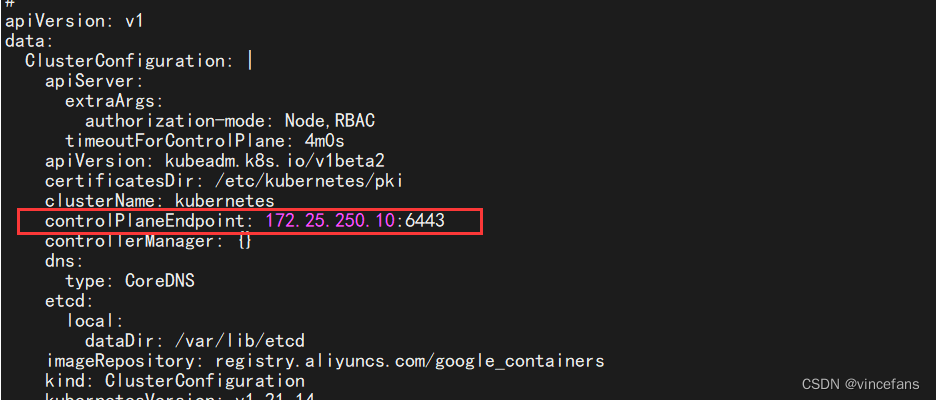

# 添加controlPlaneEndpoint配置

kubectl describe cm kubeadm-config -n kube-system

kubectl describe cm kubeadm-config -n kube-system | grep controlPlaneEndpoint

# 发现没有controlPlaneEndpoint

# 自行添加

kubectl -n kube-system edit cm kubeadm-config

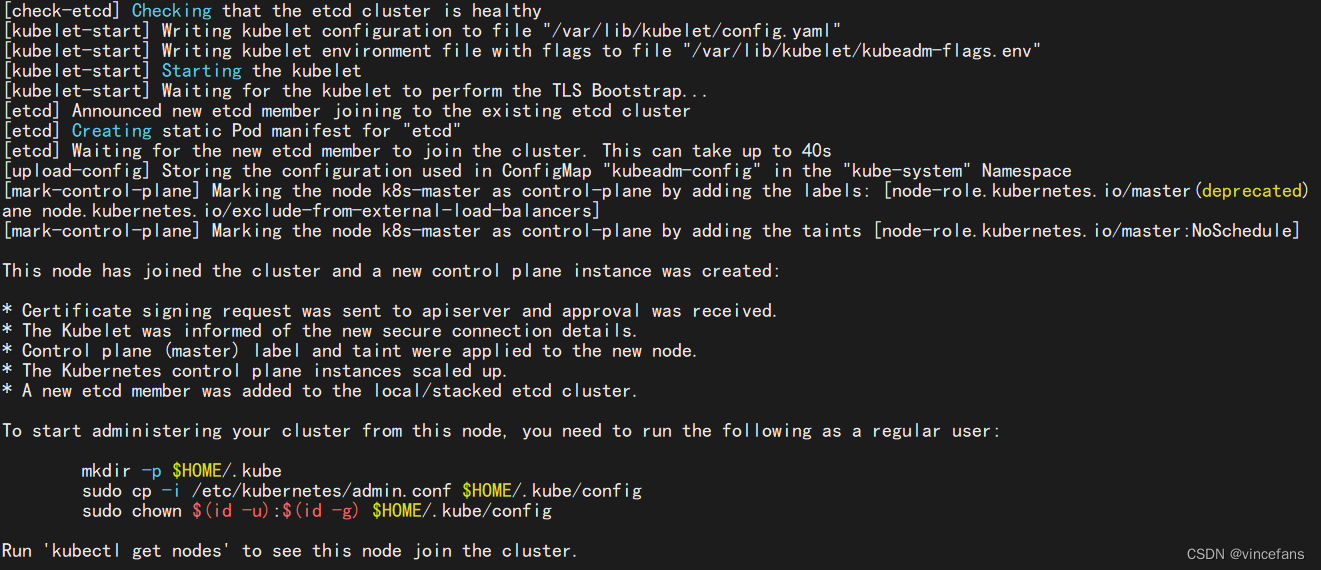

# k8s-master:加入集群

[root@k8s-master ~]# kubeadm join 172.25.250.10:6443 \

--token 8joe59.i7og7wbu7ekh7amr \

--discovery-token-ca-cert-hash sha256:d59a39501091671f4abdba1fb69c602b73c51db8d9c2b1c5fa4980c184e0d754 \

--control-plane --certificate-key 150ea5096386bffaa2aa6f027eb2043c554572420b10b13f079f94803cb5a38b \

--ignore-preflight-errors=all

# Run the following commands as the join output instructs:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

[root@k8s-master ~]# kubectl get node

The connection to the server localhost:8080 was refused - did you specify the right host or port?

# If the error above appears:
# echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> /etc/profile
# source /etc/profile

kubeadm join cluster-endpoint:16443 \

--token itpixm.4n93xaw8pzuwnms0 \

--discovery-token-ca-cert-hash sha256:244a72de4646ec7d43a2134eb4e3ee9d80c4ca95c57d576aeb9b9e138d41a3f5 \

--control-plane --certificate-key e52da0a81a92dd2b6b99bf3d7d241b7d41b4427d994793ceddf92cc67d5aacfb \

--ignore-preflight-errors=all

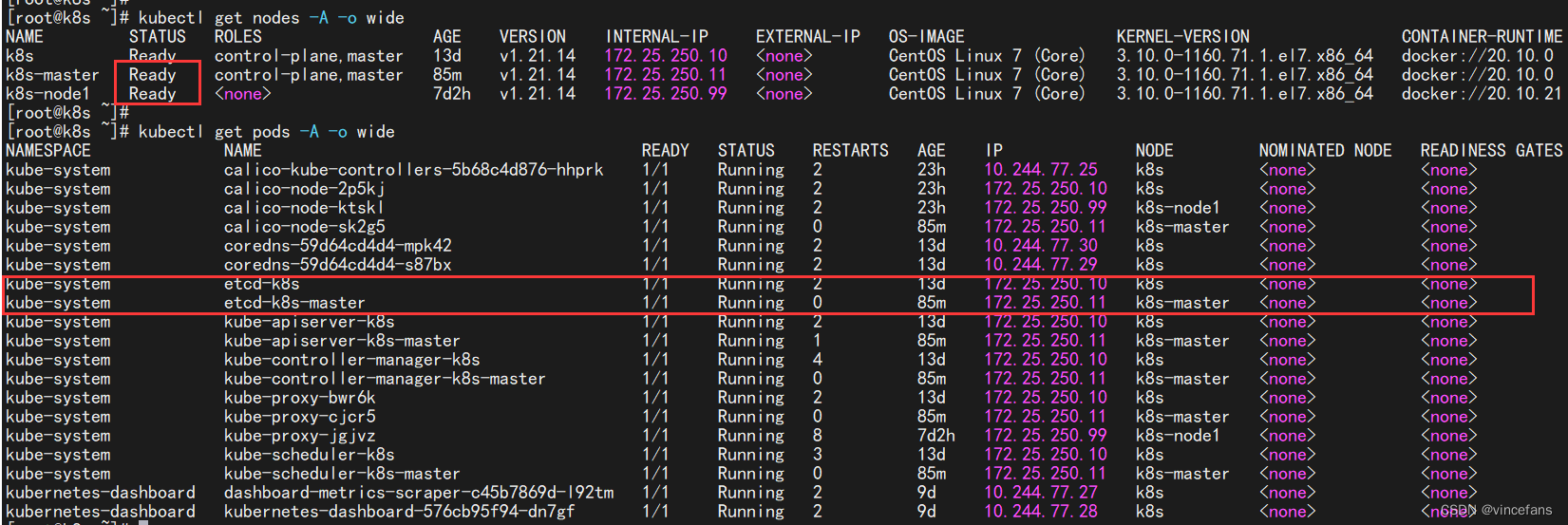

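master: check the cluster

# kubectl is not yet bound to the cluster on this host because that was not set up during cluster init; setting the environment variable on this host solves the problem
echo "export KUBECONFIG=/etc/kubernetes/kubelet.conf" >> /etc/profile
source /etc/profile
kubectl get nodes
kubectl get pods -A

Because the CNI is already installed on the master, the status is Ready.

There are now two masters, but clients can still use only one entry point, so a load balancer is needed: if one master goes down, traffic switches automatically to the other master.

Deploying a keepalived + haproxy (or nginx) high-availability load balancer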

yum install -y keepalived haproxy

[root@k8s ~]# cat /etc/haproxy/haproxy.cfg

#---------------------------------------------------------------------

# Example configuration for a possible web application. See the

# full configuration options online.

#

# http://haproxy.1wt.eu/download/1.4/doc/configuration.txt

#

#---------------------------------------------------------------------

#---------------------------------------------------------------------

# Global settings

#---------------------------------------------------------------------

global

# to have these messages end up in /var/log/haproxy.log you will

# need to:

#

# 1) configure syslog to accept network log events. This is done

# by adding the '-r' option to the SYSLOGD_OPTIONS in

# /etc/sysconfig/syslog

#

# 2) configure local2 events to go to the /var/log/haproxy.log

# file. A line like the following can be added to

# /etc/sysconfig/syslog

#

# local2.* /var/log/haproxy.log

#

log 127.0.0.1 local2

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 4000

user haproxy

group haproxy

daemon

# turn on stats unix socket

stats socket /var/lib/haproxy/stats

#---------------------------------------------------------------------

# common defaults that all the 'listen' and 'backend' sections will

# use if not designated in their block

#---------------------------------------------------------------------

defaults

mode http

log global

option httplog

option dontlognull

option http-server-close

option forwardfor except 127.0.0.0/8

option redispatch

retries 3

timeout http-request 10s

timeout queue 1m

timeout connect 10s

timeout client 1m

timeout server 1m

timeout http-keep-alive 10s

timeout check 10s

maxconn 3000

#---------------------------------------------------------------------

# main frontend which proxys to the backends

#---------------------------------------------------------------------

frontend kube-apiserver

bind *:6443

mode tcp

option tcplog

default_backend kube-apiserver

#---------------------------------------------------------------------

# static backend for serving up images, stylesheets and such

#---------------------------------------------------------------------

listen stats

bind *:1080

stats auth admin:awesomePassword

stats refresh 5s

stats realm HAProxy\ Statistics

stats uri /admin?stats

#---------------------------------------------------------------------

# round robin balancing between the various backends

#---------------------------------------------------------------------

backend kube-apiserver

mode tcp

balance roundrobin

server k8s 172.25.250.10:6443 check

server k8s-master 172.25.250.11:6443 check

cat > /etc/keepalived/keepalived.conf << EOF
global_defs {
    notification_email {
        acassen@firewall.loc
        failover@firewall.loc
        sysadmin@firewall.loc
    }
    notification_email_from fage@qq.com
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id NGINX_MASTER
}
vrrp_script check_haproxy {
    script "/etc/keepalived/check_haproxy.sh"
}
vrrp_instance VI_1 {
    state MASTER
    interface ens33
    virtual_router_id 51 # VRRP router ID; unique per instance
    priority 100 # priority; use 90 on the backup server
    advert_int 1 # VRRP advertisement interval in seconds (default 1)
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    # Virtual IP
    virtual_ipaddress {
        172.25.250.20/24
    }
    track_script {
        check_haproxy
    }
}
EOF

cat > /etc/keepalived/check_haproxy.sh << "EOF"

#!/bin/bash

count=$(ps -ef |grep haproxy |egrep -cv "grep|$$")

if [ "$count" -eq 0 ];then

exit 1

else

exit 0

fi

EOF

chmod +x /etc/keepalived/check_haproxy.sh
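Counting `ps | grep` lines works, but it can be fooled by unrelated processes whose command line merely contains the word haproxy. As an alternative sketch, `pgrep -x` matches the exact process name. `is_running` is a hypothetical helper name; because haproxy may not be installed where this is tried, the demo checks the current shell and a name that should not exist.

```shell
# Sketch: exact-name process check that a keepalived health script could use.
# is_running is a hypothetical helper; pgrep -x matches the whole process name.
is_running() {
    pgrep -x "$1" >/dev/null 2>&1
}

# Demo: the current shell is certainly running; "nosuchdaemon" should not be.
current_shell="$(ps -o comm= -p $$)"
if is_running "$current_shell"; then
    echo "$current_shell: running"
fi
if ! is_running nosuchdaemon; then
    echo "nosuchdaemon: not running"
fi

# In check_haproxy.sh the body would reduce to:
#   is_running haproxy || exit 1
```

keepalived treats a non-zero exit status from the script as a failed check, which is the same contract the original script follows.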

# Keepalived configuration (backup)
cat > /etc/keepalived/keepalived.conf << EOF
global_defs {
    notification_email {
        acassen@firewall.loc
        failover@firewall.loc
        sysadmin@firewall.loc
    }
    notification_email_from fage@qq.com
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id NGINX_BACKUP
}
vrrp_script check_haproxy {
    script "/etc/keepalived/check_haproxy.sh"
}
vrrp_instance VI_1 {
    state BACKUP
    interface ens33
    virtual_router_id 51 # VRRP router ID; unique per instance
    priority 90
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        172.25.250.20/24
    }
    track_script {
        check_haproxy
    }
}
EOF

cat > /etc/keepalived/check_haproxy.sh << "EOF"

#!/bin/bash

count=$(ps -ef |grep haproxy |egrep -cv "grep|$$")

if [ "$count" -eq 0 ];then

exit 1

else

exit 0

fi

EOF

chmod +x /etc/keepalived/check_haproxy.sh

# Start the services and enable them at boot
systemctl daemon-reload
systemctl restart haproxy keepalived
systemctl enable haproxy keepalived
systemctl status haproxy keepalived
# ping 172.25.250.20
# If the VIP does not answer ping, comment out vrrp_strict in the global section
ip address show ens33

Check whether the VIP fails over:

# vip-master: the VIP is on k8s; now stop keepalived there

[root@k8s ~]# ip address show ens33

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:5b:8e:91 brd ff:ff:ff:ff:ff:ff

inet 172.25.250.10/24 brd 172.25.250.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 172.25.250.20/24 scope global secondary ens33

valid_lft forever preferred_lft forever

inet6 fe80::c63e:cad8:3484:7e77/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@k8s ~]#

[root@k8s ~]# systemctl stop keepalived.service

# vip-backup: the VIP has moved to k8s-master

[root@k8s-master ~]# ip address show ens33

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:f5:ea:85 brd ff:ff:ff:ff:ff:ff

inet 172.25.250.11/24 brd 172.25.250.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 172.25.250.20/24 scope global secondary ens33

valid_lft forever preferred_lft forever

inet6 fe80::1f87:2e52:5e00:1f1a/64 scope link noprefixroute

valid_lft forever preferred_lft forever

# vip-master: start keepalived on k8s again; the VIP moves back to k8s because its priority is higher

[root@k8s ~]# systemctl start keepalived.service

[root@k8s ~]#

[root@k8s ~]# ip address show ens33

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:5b:8e:91 brd ff:ff:ff:ff:ff:ff

inet 172.25.250.10/24 brd 172.25.250.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet 172.25.250.20/24 scope global secondary ens33

valid_lft forever preferred_lft forever

inet6 fe80::c63e:cad8:3484:7e77/64 scope link noprefixroute

valid_lft forever preferred_lft forever

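Persistent storage with NFS

1) Install NFS on every node

yum -y install nfs-utils rpcbind

2) On the master, create the /nfs/data/k8s directory and open up permissions on /nfs/data

mkdir -p /nfs/data/k8s
chmod -R 777 /nfs/data

3) Edit /etc/exports, apply the configuration, and check it

vim /etc/exports
/nfs/data *(rw,no_root_squash,sync)
# Apply the configuration
exportfs -f
exportfs

4) Start the rpcbind and nfs services and check the rpcbind registrations

systemctl start rpcbind
systemctl enable rpcbind
systemctl start nfs
systemctl enable nfs
# Check the registrations
rpcinfo -p localhost

5) Define a PV (nginx-pv.yaml)

# PV stands for Persistent Volume. It describes, or defines, a storage volume and is usually written by operations or storage engineers.
vim nginx-pv.yaml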

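apiVersion: v1
kind: PersistentVolume
metadata:
  name: nginx-pv # name of the PV
spec:
  accessModes: # access modes
    - ReadWriteMany # the PV can be mounted read-write by many nodes
  capacity: # capacity
    storage: 500Mi # usable size of the PV
  persistentVolumeReclaimPolicy: Retain # reclaim policy
  storageClassName: nginx
  nfs:
    path: /nfs/data/ # exported NFS path
    server: 172.25.250.10 # NFS server address

accessModes supports three types:

ReadWriteMany: multi-node read-write; the volume can be mounted read-write by many Nodes in the cluster.

ReadWriteOnce: single-node read-write; the volume can be mounted read-write by only one Node.

ReadOnlyMany: multi-node read-only; the volume can be mounted by many Nodes, read-only.

persistentVolumeReclaimPolicy supports these policies:

Retain: the administrator reclaims the volume by hand.

Recycle: wipes the data in the PV, equivalent to running rm -rf /thevolume/*.

Delete: deletes the backing storage resource on the storage provider, for example AWS EBS, GCE PD, Azure Disk or an OpenStack Cinder volume.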

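6) Define a PVC

A new namespace is needed first:

Note 1: namespace is abbreviated as ns.

Note 2: do not use the kube- prefix when naming a namespace; it is reserved for Kubernetes system namespaces.

# Create the namespace vctest
kubectl get ns
kubectl create ns vctest
# Or with YAML:
apiVersion: v1
kind: Namespace
metadata:
  name: vctest
# To delete the namespace: kubectl delete ns vctest

Points to note when deleting a namespace:

1. Deleting a namespace automatically deletes every resource that belongs to it.

2. The default and kube-system namespaces cannot be deleted.

3. PersistentVolumes do not belong to any namespace, but a PersistentVolumeClaim belongs to one specific namespace.

Whether an Event belongs to a namespace depends on the object that produced it.

# PVC stands for Persistent Volume Claim.
# A PVC describes what kind of storage is wanted, or which conditions it must meet; developers use it to state what storage a container needs.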

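vim nginx-pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim # resource type
metadata:
  name: nginx-pvc # name of the PVC
  namespace: vctest # namespace
spec:
  accessModes: # access modes
    - ReadWriteMany # the PVC is mounted read-write by many nodes
  resources:
    requests:
      storage: 500Mi # size requested by the PVC
  storageClassName: nginx

The key spec fields of the PV and the PVC must be compatible; for example, the PV's capacity must cover the PVC's storage request.

The storageClassName field must be identical in the PV and the PVC.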
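A mismatch in storageClassName leaves the claim Pending, so it can be worth comparing the two files before applying them. The sketch below does that with plain text matching rather than a YAML parser; `field_value` is a hypothetical helper name, and the two demo files are cut-down stand-ins for nginx-pv.yaml and nginx-pvc.yaml.

```shell
# Sketch: compare storageClassName between a PV file and a PVC file.
# field_value is a hypothetical helper: it prints the value of the first
# "key: value" line for the given key, ignoring indentation and trailing comments.
field_value() {
    local key="$1" file="$2"
    sed -n -E "s/^[[:space:]]*${key}:[[:space:]]*([^#[:space:]]+).*/\1/p" "$file" | head -n 1
}

# Demo files: cut-down stand-ins for the manifests above
workdir="$(mktemp -d)"
cat > "$workdir/nginx-pv.yaml" <<'EOF'
kind: PersistentVolume
spec:
  storageClassName: nginx
EOF
cat > "$workdir/nginx-pvc.yaml" <<'EOF'
kind: PersistentVolumeClaim
spec:
  storageClassName: nginx # must equal the PV's class
EOF

pv_class="$(field_value storageClassName "$workdir/nginx-pv.yaml")"
pvc_class="$(field_value storageClassName "$workdir/nginx-pvc.yaml")"
if [ "$pv_class" = "$pvc_class" ]; then
    echo "storageClassName matches: $pv_class"
else
    echo "storageClassName mismatch: PV=$pv_class PVC=$pvc_class" >&2
fi
```

The same helper could be pointed at other scalar fields, such as namespace, to catch a claim and a workload that were written for different namespaces.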

7) Define a pod that uses the PVC

vim nginx-pod.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-pvc
  namespace: vctest # must be the same namespace as the PVC, otherwise the PVC cannot be used
spec:
  selector:
    matchLabels:
      app: nginx-pvc
  template:
    metadata:
      labels:
        app: nginx-pvc
    spec:
      containers:
        - name: nginx-test
          image: nginx:1.20.0
          imagePullPolicy: IfNotPresent
          ports:
            - name: web-port
              containerPort: 80
              protocol: TCP
          volumeMounts:
            - name: nginx-persistent-storage # any name; must match the name under volumes below
              mountPath: /usr/share/nginx/html # path inside the container
      volumes:
        - name: nginx-persistent-storage
          persistentVolumeClaim:
            claimName: nginx-pvc # refers to the PVC declared earlier

As with an ordinary Volume, persistentVolumeClaim under volumes selects the Volume claimed by nginx-pvc.

8)master启动yaml文件

kubectl apply -f nginx-pv.yaml

kubectl apply -f nginx-pvc.yaml

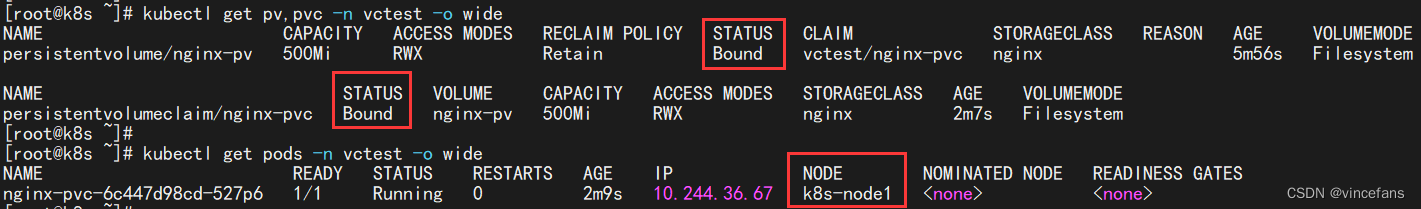

kubectl apply -f nginx-pod.yaml9)查看pv、pvc状态,查看pod,发现pod挂载到k8s-node1节点

kubectl get pv,pvc -n vctest -o wide

kubectl get pods -n vctest -o wide

10) Verify the PV -> PVC -> NFS chain

On node k8s-node1, find the container:

docker ps -a | grep nginx-test

7b58f0b43a37 nginx "/docker-entrypoint.…" 25 minutes ago Up 25 minutes k8s_nginx-test_nginx-pvc-6c447d98cd-527p6_vctest_77c36afb-d9c8-4336-8157-51e676b2ec62_0

# Enter the container:

docker exec -it 7b58f0b43a37 /bin/bash

# Inside the container, create an index.html file in the mounted directory; here the mount path is /usr/share/nginx/html

echo " hello This is pv->pvc->pod test! ---k8s-node1" >> /usr/share/nginx/html/index.html

Back on the NFS server (master), check the file:

[root@k8s ~]# cat /nfs/data/index.html

hello This is pv->pvc->pod test! ---k8s-node1

# In the shared directory, create a new file named master.html

echo "Hello file to /nfs/data path! ---k8s(master) " >> /nfs/data/master.html

Switch to node k8s-node1 and verify from inside the container:

root@nginx-pvc-6c447d98cd-527p6:/# ls /usr/share/nginx/html/

index.html k8s master.html

root@nginx-pvc-6c447d98cd-527p6:/# cat /usr/share/nginx/html/master.html

Hello file to /nfs/data path! ---k8s(master)

11) Delete the pod, then check the files on the NFS server (master)

On the master, delete the pod; Kubernetes automatically creates a replacement pod:

[root@k8s ~]# kubectl get pod -n vctest -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-pvc-6c447d98cd-527p6 1/1 Running 0 71m 10.244.36.67 k8s-node1 <none> <none>

[root@k8s ~]#

[root@k8s ~]# kubectl delete pod nginx-pvc-6c447d98cd-527p6 -n vctest

pod "nginx-pvc-6c447d98cd-527p6" deleted

[root@k8s ~]#

[root@k8s ~]# kubectl get pod -n vctest -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-pvc-6c447d98cd-bmvtn 1/1 Running 0 16s 10.244.36.68 k8s-node1 <none> <none>

Switch to node k8s-node1 and confirm that both pages are still served by the new pod:

[root@k8s-node1 ~]# curl 10.244.36.68/index.html

hello This is pv->pvc->pod test! ---k8s-node1

[root@k8s-node1 ~]#

[root@k8s-node1 ~]# curl 10.244.36.68/master.html

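Hello file to /nfs/data path! ---k8s(master)

12) Reclaim the PV

When the PV is no longer needed, it can be reclaimed by deleting the PVC. Run these commands on the master.

# First delete the Deployment, which removes its pod:
kubectl delete deploy/nginx-pvc -n vctest

# Then delete the PVC:
kubectl delete pvc/nginx-pvc -n vctest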

[root@k8s ~]# kubectl get pod -n vctest -o wide

No resources found in vctest namespace.

[root@k8s ~]# ll /nfs/data/

总用量 8

-rw-r--r-- 1 root root 48 3月 3 14:57 index.html

drwxrwxrwx 2 root root 6 3月 3 13:44 k8s

-rw-r--r-- 1 root root 49 3月 3 15:00 master.html

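With the "Retain" policy, deleting the PersistentVolumeClaim does not delete the corresponding PersistentVolume. Instead, the PV moves to the Released state, which means all of its data is still there and can be recovered manually.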
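The effect of each reclaim policy on a bound PV can be summarised as a small lookup. The Python sketch below is a simplified model of what happens after the PVC is deleted; the function name is hypothetical, and Recycle is included only because it appears in the policy list of this tutorial.

```python
from typing import Optional


def pv_phase_after_claim_deleted(reclaim_policy: str) -> Optional[str]:
    """Phase of a bound PV once its PVC is deleted; None means the PV object is removed."""
    if reclaim_policy == "Retain":
        return "Released"   # data is kept; an administrator cleans up by hand
    if reclaim_policy == "Recycle":
        return "Available"  # after the volume contents have been scrubbed
    if reclaim_policy == "Delete":
        return None         # the PV and its backing storage are deleted
    raise ValueError("unknown reclaim policy: " + reclaim_policy)


print(pv_phase_after_claim_deleted("Retain"))
```

This matches the listing above: with Retain, the files under /nfs/data/ are still present after the PVC is gone.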

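Kubernetes offers three kinds of storage for this purpose: emptyDir, hostPath, and PV/PVC. Note that an emptyDir volume is created together with its pod and deleted together with it, so the files in it are lost as well. Choose whichever fits your requirements.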

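Dynamic provisioning of PVs

In the previous example we created the PV in advance, then claimed it through a PVC and used it in a pod. This approach is called static provisioning.

Its counterpart is dynamic provisioning: if no existing PV satisfies the PVC, a PV is created on demand. Compared with static provisioning, dynamic provisioning has a clear advantage: PVs do not have to be created ahead of time, which reduces the administrator's workload.

NFS-based dynamic PV provisioning (StorageClass)

Static: pod --> pvc --> pv

Dynamic: pod --> pvc --> storageclass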
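The difference between the two paths can be modelled in a few lines. The Python sketch below is a toy binder, not the real controller: it first looks for an unbound PV of the right class and size (static path) and otherwise asks the provisioner named by the StorageClass to create one (dynamic path). All dictionary keys, the pv-for- naming, and the capacities are assumptions for illustration; the class and provisioner names are the ones used later in this section.

```python
def bind(claim, volumes, storage_classes):
    """Return the PV bound to the claim, provisioning a new one if none fits."""
    # Static path: reuse an unbound PV of the same class that is large enough.
    for pv in volumes:
        if (pv["boundTo"] is None
                and pv["storageClassName"] == claim["storageClassName"]
                and pv["capacityMi"] >= claim["requestMi"]):
            pv["boundTo"] = claim["name"]
            return pv
    # Dynamic path: the StorageClass names the provisioner that creates the PV.
    provisioner = storage_classes.get(claim["storageClassName"])
    if provisioner is None:
        raise LookupError("no matching PV and no StorageClass: the claim stays Pending")
    pv = {
        "name": "pv-for-" + claim["name"],  # hypothetical naming scheme
        "storageClassName": claim["storageClassName"],
        "capacityMi": claim["requestMi"],
        "boundTo": claim["name"],
        "provisionedBy": provisioner,
    }
    volumes.append(pv)
    return pv


# Example data (hypothetical capacity for the pre-created PV).
volumes = [{"name": "nginx-pv", "storageClassName": "nginx",
            "capacityMi": 1024, "boundTo": None}]
classes = {"managed-nfs-storage": "fuseim.pri/ifs"}

static_pv = bind({"name": "nginx-pvc", "storageClassName": "nginx",
                  "requestMi": 500}, volumes, classes)
dynamic_pv = bind({"name": "www-web-0", "storageClassName": "managed-nfs-storage",
                   "requestMi": 300}, volumes, classes)
print(static_pv["name"], dynamic_pv["name"])
```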

Download three files from the project repository: https://github.com/kubernetes-incubator/external-storage/tree/master/nfs-client/deploy

To download them in one go with a script:

for file in class.yaml deployment.yaml rbac.yaml; do wget https://raw.githubusercontent.com/kubernetes-incubator/external-storage/master/nfs-client/deploy/$file ; done

[root@k8s ~]# cat rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io

[root@k8s ~]# cat class.yaml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
provisioner: fuseim.pri/ifs # or choose another name, must match deployment's env PROVISIONER_NAME
parameters:
  archiveOnDelete: "false"

[root@k8s ~]# vim deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: quay.io/external_storage/nfs-client-provisioner # this image may fail to pull
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: fuseim.pri/ifs
            - name: NFS_SERVER
              value: 172.25.250.10 # change to your NFS server address
            - name: NFS_PATH
              value: /nfs/data # change to your NFS export path
      volumes:
        - name: nfs-client-root
          nfs:
            server: 172.25.250.10 # change to your NFS server address
            path: /nfs/data # change to your NFS export path
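As the comment in class.yaml says, the provisioner field of the StorageClass has to match the PROVISIONER_NAME environment variable of the Deployment; otherwise no provisioner picks up the claims. A small Python sketch of that consistency check follows, working on manifests already loaded as dictionaries. The function names are hypothetical, and the example data contains only the fields the check reads.

```python
def provisioner_name(deployment):
    """Read PROVISIONER_NAME from the first container of the provisioner Deployment."""
    container = deployment["spec"]["template"]["spec"]["containers"][0]
    for var in container.get("env", []):
        if var["name"] == "PROVISIONER_NAME":
            return var["value"]
    raise KeyError("PROVISIONER_NAME is not set")


def class_is_served(storage_class, deployment):
    """True if the StorageClass points at the provisioner this Deployment runs."""
    return storage_class["provisioner"] == provisioner_name(deployment)


storage_class = {"metadata": {"name": "managed-nfs-storage"},
                 "provisioner": "fuseim.pri/ifs"}
deployment = {"spec": {"template": {"spec": {"containers": [
    {"name": "nfs-client-provisioner",
     "env": [{"name": "PROVISIONER_NAME", "value": "fuseim.pri/ifs"}]},
]}}}}

print(class_is_served(storage_class, deployment))
```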

Then apply the three files one by one:

[root@k8s ~]# kubectl create -f rbac.yaml

serviceaccount/nfs-client-provisioner created

clusterrole.rbac.authorization.k8s.io/nfs-client-provisioner-runner created

clusterrolebinding.rbac.authorization.k8s.io/run-nfs-client-provisioner created

role.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created

rolebinding.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created

[root@k8s ~]# kubectl create -f class.yaml

storageclass.storage.k8s.io/managed-nfs-storage created

[root@k8s ~]# kubectl create -f deployment.yaml

deployment.apps/nfs-client-provisioner created

Create pods to test

[root@k8s ~]# cat nginx.yaml

apiVersion: v1
kind: Service
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  type: NodePort
  ports:
    - port: 80
      nodePort: 30012
      name: web
  selector:
    app: nginx
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  selector:
    matchLabels:
      app: nginx
  serviceName: "nginx"
  replicas: 3
  template:
    metadata:
      labels:
        app: nginx
    spec:
      terminationGracePeriodSeconds: 10
      containers:
        - name: nginx
          image: wangyanglinux/myapp:v1
          ports:
            - containerPort: 80
              name: web
          volumeMounts:
            - name: www
              mountPath: /usr/share/nginx/html
  volumeClaimTemplates:
    - metadata:
        name: www
      spec:
        accessModes: [ "ReadWriteMany" ]
        storageClassName: "managed-nfs-storage"
        resources:
          requests:
            storage: 300Mi

kubectl apply -f nginx.yaml
kubectl get pod -o wide

# Check the PVs and PVCs
kubectl get pv -o wide
kubectl get pvc -o wide
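When reading the output of these commands, it helps to know which claims to expect. A StatefulSet creates one PVC per replica, named after the volumeClaimTemplate, the StatefulSet, and the pod ordinal. The Python sketch below derives those names for the manifest above. The second helper reflects the directory naming that, as far as I know, the nfs-client provisioner uses under the export path (namespace, PVC name, PV name joined by dashes); treat that part as an assumption and compare it with what appears in /nfs/data. The PV name in the example is a placeholder.

```python
def statefulset_claims(statefulset_name, template_name, replicas):
    """PVC names a StatefulSet creates from one volumeClaimTemplate."""
    return [f"{template_name}-{statefulset_name}-{ordinal}"
            for ordinal in range(replicas)]


def nfs_subdirectory(namespace, pvc_name, pv_name):
    """Directory assumed to be created under the NFS export for one claim."""
    return f"{namespace}-{pvc_name}-{pv_name}"


claims = statefulset_claims("web", "www", 3)
print(claims)
# "pvc-example" stands in for the generated PV name shown by kubectl get pv.
print(nfs_subdirectory("default", claims[0], "pvc-example"))
```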
