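The previous section covered Linux network namespaces: we created a veth pair and used it to connect two network namespaces. Docker sets up the same kind of plumbing automatically. After Docker creates a container, the container can reach the external network, and containers on the same host can reach each other.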

1. A container can reach the external network

Create a container, open a shell inside it, and ping www.baidu.com. The ping succeeds:

[root@vol ~]# docker run -d --name test1 busybox /bin/sh -c "while true; do sleep 3600;done"

dfe2c0f67d68db7d2b8498ab4ff9a787cde8da9c87f705b0bd685d33b0fab9e5

[root@vol ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

dfe2c0f67d68 busybox "/bin/sh -c 'while t…" 35 seconds ago Up 33 seconds test1

[root@vol ~]# docker exec -it dfe2c0f67d68 /bin/sh

/ # ping www.baidu.com

PING www.baidu.com (14.215.177.38): 56 data bytes

64 bytes from 14.215.177.38: seq=0 ttl=53 time=5.337 ms

64 bytes from 14.215.177.38: seq=1 ttl=53 time=9.697 ms

64 bytes from 14.215.177.38: seq=2 ttl=53 time=5.318 ms

^C

--- www.baidu.com ping statistics ---

3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 5.318/6.784/9.697 ms

/ #

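2. Create a second container (test2, started the same way as test1); the two containers can reach each other

## Check the container IPs: test1 has 172.17.0.2 and test2 has 172.17.0.3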

[root@vol ~]# docker exec test1 ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

4: eth0@if5: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

[root@vol ~]# docker exec test2 ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

6: eth0@if7: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:ac:11:00:03 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.3/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

## Enter the test1 container and ping test2's IP; the ping succeeds

[root@vol ~]# docker exec -it test1 /bin/sh

/ # ping 172.17.0.3

PING 172.17.0.3 (172.17.0.3): 56 data bytes

64 bytes from 172.17.0.3: seq=0 ttl=64 time=0.187 ms

64 bytes from 172.17.0.3: seq=1 ttl=64 time=0.118 ms

64 bytes from 172.17.0.3: seq=2 ttl=64 time=0.133 ms

^C

--- 172.17.0.3 ping statistics ---

3 packets transmitted, 3 packets received, 0% packet loss

round-trip min/avg/max = 0.118/0.146/0.187 ms

/ # exit

Entering test2 and pinging test1's IP works the same way, so the two containers can reach each other in both directions.

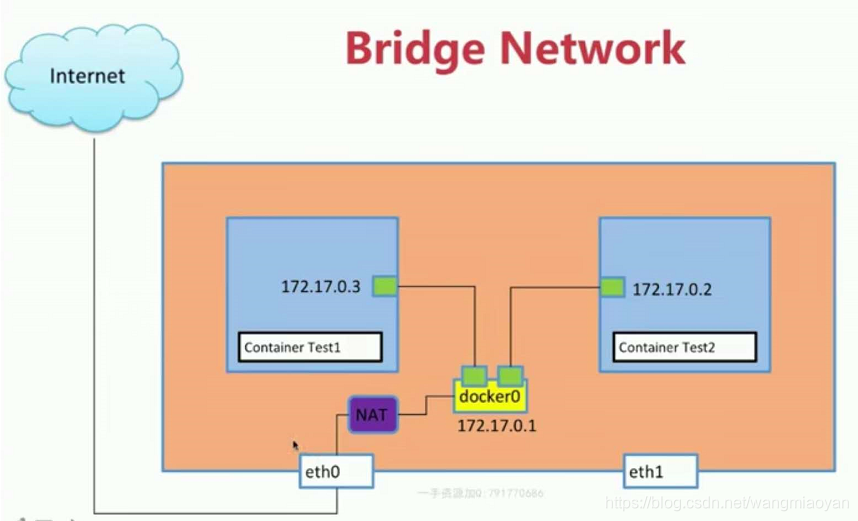

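How it works

The mechanism is much the same as in the previous section: Docker creates a veth pair for each container, and that pair is what connects the networks.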

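1. A container can reach the external network because a veth pair connects it to the host: one end sits in the container and the other end is attached to the host's docker0 bridge. Through docker0 the container's traffic reaches the host's network stack. When the container accesses an external address, the host performs NAT on the packet, and this NAT is implemented with iptables.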

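2. Creating another container produces another veth pair, again with one end in the container and the other end attached to docker0. Since both containers are attached to docker0, they can talk to each other. Think of the containers as computers at home and docker0 as the router (more precisely, the switch built into it): to put two computers on the same LAN, you run a cable from each one to the same router, and then they can communicate.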

This is the layout shown in the figure.
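The wiring described in the two points above can be sketched by hand with iproute2. The script below is a dry run: it only prints the commands and executes nothing. The bridge name br-demo, the namespace names demo1 and demo2, the interface names, and the 10.200.0.0/24 addresses are all made up for illustration, and Docker itself does not create named namespaces this way. It also assumes the bridge already exists and carries the gateway address.

```shell
#!/bin/sh
# Dry run: print (do not execute) the iproute2 commands that would attach a
# network namespace to a bridge through a veth pair, as the text describes.
# Every name and address here is hypothetical.
BRIDGE=br-demo          # stands in for docker0
GATEWAY=10.200.0.1      # address assumed to be configured on $BRIDGE

plan_link() {
    # $1 = namespace name, $2 = address/prefix for the namespace end
    ns=$1
    addr=$2
    echo "ip netns add $ns"
    echo "ip link add veth-$ns type veth peer name ceth-$ns"
    echo "ip link set veth-$ns master $BRIDGE"
    echo "ip link set veth-$ns up"
    echo "ip link set ceth-$ns netns $ns"
    echo "ip netns exec $ns ip addr add $addr dev ceth-$ns"
    echo "ip netns exec $ns ip link set ceth-$ns up"
    echo "ip netns exec $ns ip route add default via $GATEWAY"
}

plan_link demo1 10.200.0.2/24
plan_link demo2 10.200.0.3/24
```

Each call prints one veth pair being created, its host end being enslaved to the bridge, and its other end being moved into the namespace and given an address and a default route. Running the printed commands would need root and an existing bridge.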

Verification

1. Install the brctl tool

2. Trace the network links

Install brctl

[root@vol ~]# yum install bridge-utils

Loaded plugins: fastestmirror

Determining fastest mirrors

docker-ce-stable | 3.5 kB 00:00

epel | 5.3 kB 00:00

extras | 2.9 kB 00:00

kubernetes/signature | 454 B 00:00

kubernetes/signature | 1.4 kB 00:00 !!!

... (output omitted) ...

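Inspect the host's bridge and the Docker networks

Two containers are running. ip addr on the host shows that, besides lo, ens160 and docker0, there are two extra veth interfaces. brctl show reports two veth interfaces attached to the docker0 bridge, and they are exactly those two extra host interfaces. So the host-side ends of the veth pairs are attached to docker0. Where do the other ends go?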

[root@vol ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

da0dd80d5418 busybox "/bin/sh -c 'while t…" 17 minutes ago Up 17 minutes test2

dfe2c0f67d68 busybox "/bin/sh -c 'while t…" 4 hours ago Up 4 hours test1

[root@vol ~]# ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens160: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:50:56:87:bd:a7 brd ff:ff:ff:ff:ff:ff

inet 172.31.17.54/16 brd 172.31.255.255 scope global noprefixroute ens160

valid_lft forever preferred_lft forever

inet6 fe80::33db:6382:9c3a:12e8/64 scope link tentative dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::a780:a19:68f2:9347/64 scope link tentative dadfailed

valid_lft forever preferred_lft forever

inet6 fe80::a62f:dd94:b9a2:3027/64 scope link tentative dadfailed

valid_lft forever preferred_lft forever

3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:9d:e3:47:69 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:9dff:fee3:4769/64 scope link

valid_lft forever preferred_lft forever

5: veth9d0b56c@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether 52:9f:51:35:0c:b8 brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::509f:51ff:fe35:cb8/64 scope link

valid_lft forever preferred_lft forever

7: vethe9de44d@if6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default

link/ether f2:b4:4a:9c:41:0a brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet6 fe80::f0b4:4aff:fe9c:410a/64 scope link

valid_lft forever preferred_lft forever

[root@vol ~]# brctl show

bridge name bridge id STP enabled interfaces

docker0 8000.02429de34769 no veth9d0b56c

vethe9de44d

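veth9d0b56c is the host's interface 5 (veth9d0b56c@if4) and vethe9de44d is the host's interface 7 (vethe9de44d@if6). The @if4 and @if6 suffixes give the interface index of each peer. So where are those peers?

Run docker inspect bridge to see the details of the bridge network. Its Containers section lists test1 and test2, which means both containers are attached to the bridge network. Create another container and it shows up there as well.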

[root@vol ~]# docker network list

NETWORK ID NAME DRIVER SCOPE

0ee165ccab6f bridge bridge local

baa1cdd2d1e4 host host local

2cb2a0e5dad5 none null local

[root@vol ~]# docker inspect bridge

[

{

"Name": "bridge",

"Id": "0ee165ccab6fa3c171708329bd3ab692376fc46ea4eb04fce93f2e0b3269d640",

"Created": "2020-01-19T16:23:36.392297087+08:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": false,

"IPAM": {

"Driver": "default",

"Options": null,

"Config": [

{

"Subnet": "172.17.0.0/16",

"Gateway": "172.17.0.1"

}

]

},

"Internal": false,

"Attachable": false,

"Ingress": false,

"ConfigFrom": {

"Network": ""

},

"ConfigOnly": false,

"Containers": {

"da0dd80d5418f7138d1d41fea43e06006d9d3dfe175e68502ba8ba6a809d1f83": {

"Name": "test2",

"EndpointID": "187509eca88c77ba6b1a7bb63f485d6a9e610038142983817d353031b63afba6",

"MacAddress": "02:42:ac:11:00:03",

"IPv4Address": "172.17.0.3/16",

"IPv6Address": ""

},

"dfe2c0f67d68db7d2b8498ab4ff9a787cde8da9c87f705b0bd685d33b0fab9e5": {

"Name": "test1",

"EndpointID": "bc27d2081aa69cebf0ee609aa42f00aa4a3cd73e72ff8b6c13eefb4b0b2fab67",

"MacAddress": "02:42:ac:11:00:02",

"IPv4Address": "172.17.0.2/16",

"IPv6Address": ""

}

},

"Options": {

"com.docker.network.bridge.default_bridge": "true",

"com.docker.network.bridge.enable_icc": "true",

"com.docker.network.bridge.enable_ip_masquerade": "true",

"com.docker.network.bridge.host_binding_ipv4": "0.0.0.0",

"com.docker.network.bridge.name": "docker0",

"com.docker.network.driver.mtu": "1500"

},

"Labels": {}

}

]

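The output shows that the driver is bridge and, under Options, that the underlying bridge device is docker0. The IPs listed under Containers tell us that the bridge network holds the other half of each veth pair: as shown below, the IP on the veth end inside each container (eth0) matches the IP recorded for that container on the bridge network.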

[root@vol ~]# docker exec test1 ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

4: eth0@if5: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

[root@vol ~]# docker exec test2 ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

6: eth0@if7: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue

link/ether 02:42:ac:11:00:03 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.3/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

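In short, every new Docker container gets a new veth pair, with one end attached to the host's docker0 and the other end inside the container. This is what lets containers reach each other and lets containers and the host reach each other.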
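The pairing can also be confirmed directly from the interface indexes rather than from the IPs: eth0@if5 inside test1 says its peer has index 5 on the host, and host interface 5 is veth9d0b56c. A minimal sketch of that lookup follows. The function names peer_index and host_iface_by_index are hypothetical helpers, and the sample lines are copied from the output shown earlier; on a live system you would pipe in docker exec test1 ip addr and the host's ip addr instead.

```shell
#!/bin/sh
# peer_index: read `ip addr` output on stdin and print the peer interface
# index of the named interface (the number after "@if").
peer_index() {
    # $1 = interface name inside the container, e.g. eth0
    sed -n "s/^[0-9][0-9]*: $1@if\([0-9][0-9]*\):.*/\1/p"
}

# host_iface_by_index: read the host's `ip addr` output on stdin and print
# the name of the interface whose index equals $1.
host_iface_by_index() {
    sed -n "s/^$1: \([^@:]*\).*/\1/p"
}

# Sample lines copied from the output shown in this article.
container_out='4: eth0@if5: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue'
host_out='5: veth9d0b56c@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default
7: vethe9de44d@if6: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default'

idx=$(printf '%s\n' "$container_out" | peer_index eth0)
printf '%s\n' "$host_out" | host_iface_by_index "$idx"
```

With the sample data this resolves test1's eth0 to veth9d0b56c, one of the two interfaces that brctl show lists under docker0.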

PS:

Look at the iptables rules

[root@vol ~]# iptables -t nat -vnL

Chain PREROUTING (policy ACCEPT 35048 packets, 5716K bytes)

pkts bytes target prot opt in out source destination

1763K 120M DOCKER all -- * * 0.0.0.0/0 0.0.0.0/0 ADDRTYPE match dst-type LOCAL

Chain INPUT (policy ACCEPT 31450 packets, 5320K bytes)

pkts bytes target prot opt in out source destination

Chain OUTPUT (policy ACCEPT 289K packets, 17M bytes)

pkts bytes target prot opt in out source destination

0 0 DOCKER all -- * * 0.0.0.0/0 !127.0.0.0/8 ADDRTYPE match dst-type LOCAL

Chain POSTROUTING (policy ACCEPT 289K packets, 17M bytes)

pkts bytes target prot opt in out source destination

7 406 MASQUERADE all -- * !docker0 172.17.0.0/16 0.0.0.0/0

Chain DOCKER (2 references)

pkts bytes target prot opt in out source destination

0 0 RETURN all -- docker0 * 0.0.0.0/0 0.0.0.0/0

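The MASQUERADE rule in the POSTROUTING chain applies source NAT to packets whose source address is in 172.17.0.0/16 (that is, packets originating from Docker containers) and whose outgoing interface is not docker0, rewriting the source to the address of the host interface they leave through.

An example makes this concrete. Suppose the host has a NIC eth0 with IP 10.10.101.105/24 and gateway 10.10.101.254, and a container with IP 172.17.0.2/16 pings Baidu (180.76.3.151). The packet first goes from the container to its default gateway, docker0 (172.17.0.1), and at that point it has arrived on the host. The host then consults its routing table and finds that the packet should leave through eth0 towards the host's gateway, 10.10.101.254. The packet is forwarded to eth0 and sent out (this requires ip_forward to be enabled on the host). On the way out, the iptables rule above takes effect and performs SNAT, replacing the source address with eth0's address. To the outside world the packet appears to come from 10.10.101.105, and the Docker container itself is not visible externally.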
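The two match conditions of that rule (source in 172.17.0.0/16, outgoing interface other than docker0) can be mirrored in a few lines of shell. This is only an illustration of the rule's logic under the addresses used in this article. The real matching happens inside netfilter, and the function names ip_to_int, in_subnet, would_masquerade and check are hypothetical.

```shell
#!/bin/sh
# Sketch of the match conditions of the MASQUERADE rule shown above:
#   source in 172.17.0.0/16  AND  outgoing interface != docker0

ip_to_int() {
    # Convert a dotted-quad IPv4 address to an integer.
    old_ifs=$IFS
    IFS=.
    set -- $1
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

in_subnet() {
    # $1 = address, $2 = network address, $3 = prefix length
    mask=$(( (4294967295 << (32 - $3)) & 4294967295 ))
    addr=$(ip_to_int "$1")
    net=$(ip_to_int "$2")
    [ $(( addr & mask )) -eq $(( net & mask )) ]
}

would_masquerade() {
    # $1 = source IP, $2 = outgoing interface name
    in_subnet "$1" 172.17.0.0 16 && [ "$2" != "docker0" ]
}

check() {
    if would_masquerade "$1" "$2"; then
        echo "$1 via $2: SNAT to host address"
    else
        echo "$1 via $2: source left unchanged"
    fi
}

check 172.17.0.2 ens160    # container -> outside world
check 172.17.0.2 docker0   # container -> another container on docker0
check 172.31.17.54 ens160  # the host's own traffic
```

Only the first case satisfies both conditions. Traffic between containers stays on docker0 and keeps its 172.17.x.x source address, which is why test1 and test2 see each other's real container IPs.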
