Docker (including docker-compose), Kubernetes basics, Calico networking (including calicoctl), NFS storage, Kubernetes dashboard, Helm, Harbor

1. Deploy Docker [including docker-compose]

1.1 Docker

Docker version: 20.10.8

Docker deployment

1.2 docker-compose deployment:

sudo curl -L "https://github.com/docker/compose/releases/download/1.29.2/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose

chmod +x /usr/local/bin/docker-compose

1.3 Open port 2375

vim /usr/lib/systemd/system/docker.service

Change:

ExecStart=/usr/bin/dockerd -H tcp://0.0.0.0:2375 -H fd:// --containerd=/run/containerd/containerd.sock

Restart the docker service

systemctl daemon-reload

systemctl restart docker

Note:

For security reasons, the docker port on 192.168.1.2 has been changed to 2275.

1.4 Change the docker storage path

Reference: https://blog.csdn.net/u014589856/article/details/120280686

2. Deploy Kubernetes

2.2 Deploying the etcd service and the k8s services manually

step 1. Deploy the etcd service

Reference: https://www.cnblogs.com/shenjianping/p/14398093.html

cd /usr/local/etcd

nohup ./etcd --config-file /usr/local/etcd/conf.yml &

[root@master etcd]# ./etcd --version

etcd Version: 3.4.18

Git SHA: 72d3e382e

Go Version: go1.12.17

Go OS/Arch: linux/amd64

etcd data backup script:

#!/bin/bash
# etcd data backup script
ETCDCTL_PATH='/usr/local/etcd/etcdctl'
ENDPOINTS='127.0.0.1:2379'
BACKUP_DIR='/etcdbackup'
DATE=$(date +%Y%m%d-%H%M%S)
[ ! -d "$BACKUP_DIR" ] && mkdir -p "$BACKUP_DIR"
# Save a snapshot through the v3 API
export ETCDCTL_API=3; $ETCDCTL_PATH --endpoints=$ENDPOINTS snapshot save "$BACKUP_DIR/snapshot-$DATE.db"
# Keep the 10 newest snapshots: line 1 of `ls -l` is the "total" line, and the file name is field 9
cd "$BACKUP_DIR"; ls -lt "$BACKUP_DIR" | awk '{if(NR>11){print "rm -rf "$9}}' | sh
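The pruning step at the end of the script can also be written without parsing `ls -l` columns. A minimal sketch, assuming the snapshot files are named `snapshot-*.db` as above and contain no whitespace in their names; the function name `prune_snapshots` and its default of 10 are illustrative and not part of the original script:

```shell
#!/bin/bash
# prune_snapshots DIR [KEEP]: delete all but the KEEP newest snapshot-*.db files in DIR.
# Hypothetical helper for illustration; assumes file names without whitespace.
prune_snapshots() {
    local dir="$1" keep="${2:-10}"
    # ls -1t lists newest first; tail -n +N starts printing at line N,
    # so only the entries after the first $keep are passed to rm.
    (cd "$dir" && ls -1t snapshot-*.db 2>/dev/null | tail -n +"$((keep + 1))" | xargs -r rm -f --)
}

# Example: keep the 10 newest snapshots in the backup directory used above.
# prune_snapshots /etcdbackup 10
```

Restricting the listing to `snapshot-*.db` means unrelated files in the backup directory are never candidates for deletion.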

etcd data restore, using 192.168.10.2 as an example:

/usr/local/etcd/etcdctl snapshot restore /etcdbackup/snapshot-20220713-010001.db --endpoints=127.0.0.1:2379

step 2. Deploy the Kubernetes services

First install the base packages:

yum install -y kubelet-1.21.5

yum install -y kubeadm-1.21.5

yum install -y kubectl-1.21.5

Then follow https://www.pingface.com/2021/01/etcd-ipvs-kubeadm.html, starting from its section 8, "初始化Master" (Initialize the Master)

cd /usr/local/src/k8s # this path was created in advance

kubeadm init --config k8s_init_config.yaml

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

kubectl taint nodes --all node-role.kubernetes.io/master-

Contents of k8s_init_config.yaml:

apiVersion: kubeadm.k8s.io/v1beta1
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.1.2
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: master
  #taints:
  #- effect: NoSchedule
  #  key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta1
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: "192.168.1.2:6443"
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  external:
    endpoints:
    - http://127.0.0.1:2379
imageRepository: gotok8s
kind: ClusterConfiguration
kubernetesVersion: v1.21.3
networking:
  dnsDomain: cluster.local
  podSubnet: "10.244.0.0/16"
  serviceSubnet: 10.96.0.0/12
scheduler: {}
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
ipvs:
  scheduler: nq

Notes:

- The gotok8s repository did not have v1.21.5 images, so init used the v1.21.3 version shown above.

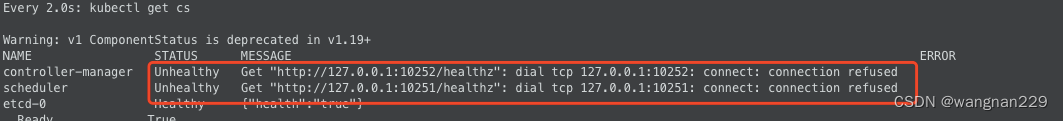

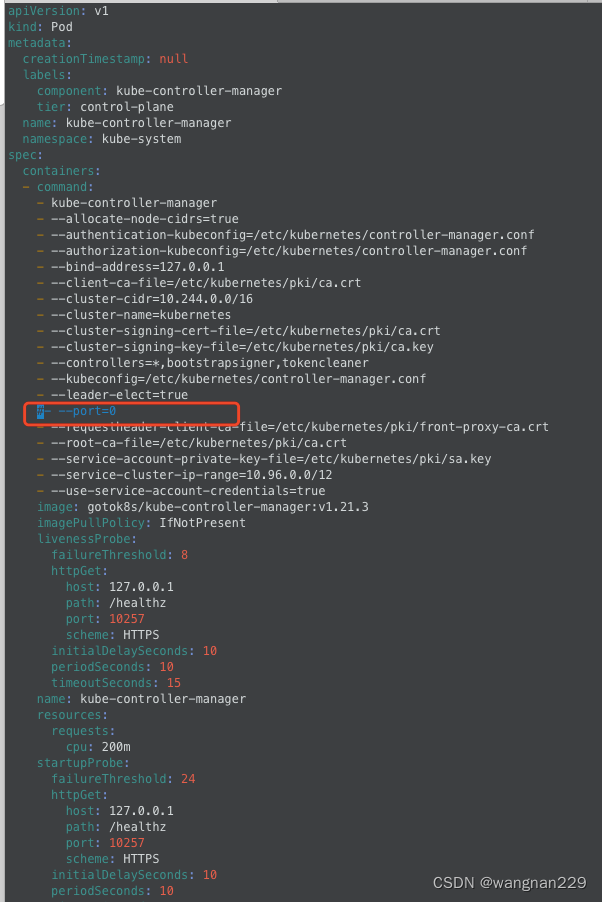

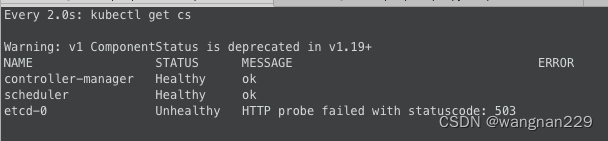

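- After the cluster had been running for a few months, kube-controller-manager and kube-scheduler turned out to have restarted thousands of times. Checking the events showed the message:

A search showed the cause: the k8s installation disables the insecure port, so one startup argument has to be removed: - --port=0

Edit kube-controller-manager.yaml and kube-scheduler.yaml under /etc/kubernetes/manifests and comment that argument out. Once kubelet has restarted the pods automatically, they return to normal.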
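The manual edit can also be scripted. A minimal sketch, assuming the flag sits on its own line as `- --port=0` in each manifest; the function name `disable_port_flag` is illustrative. No backup file is written next to the manifest here, on the assumption that kubelet may try to load any extra file it finds in the manifests directory:

```shell
#!/bin/bash
# disable_port_flag FILE: comment out the "- --port=0" argument in a static pod manifest.
# Hypothetical helper; copy the file elsewhere first if a backup is wanted.
disable_port_flag() {
    # \1 is the leading indentation, \2 the flag itself; "# " is inserted between them.
    sed -i -E 's/^([[:space:]]*)(- --port=0[[:space:]]*)$/\1# \2/' "$1"
}

# Example (paths from the text above):
# disable_port_flag /etc/kubernetes/manifests/kube-controller-manager.yaml
# disable_port_flag /etc/kubernetes/manifests/kube-scheduler.yaml
```

The pattern is anchored to the whole line, so other arguments such as `--secure-port` are left untouched.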

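3. Deploy Kubernetes components: networking with Calico [including calicoctl], storage with NFS

3.1 Networking: Calico (including calicoctl)

3.1.1 Installing calico:

Version: 3.20.1

Reference: https://www.cnblogs.com/leozhanggg/p/12930006.html. Installing strictly by the official guide runs into an IP detection error.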

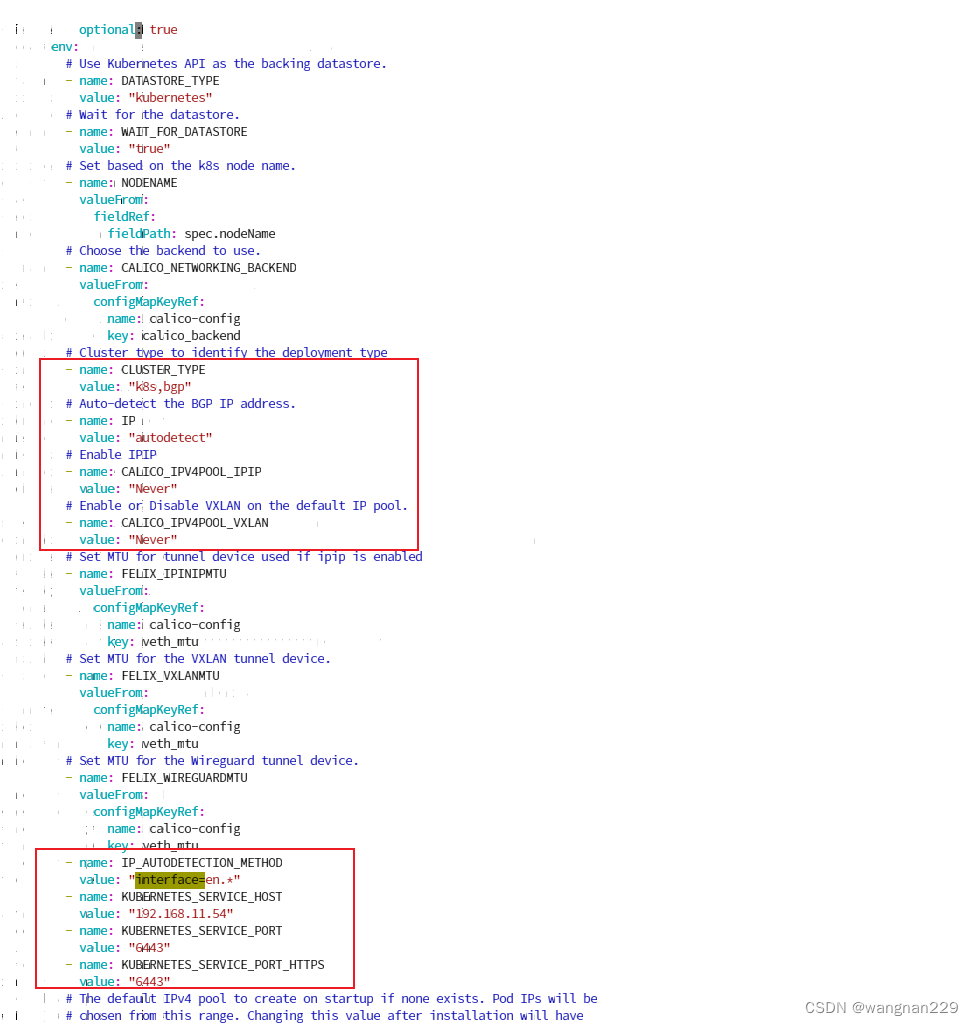

wget https://docs.projectcalico.org/manifests/calico.yaml

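Edit calico.yaml, paying attention to the places marked with red boxes. IP_AUTODETECTION_METHOD is a regular expression that selects the host network interface. On our CentOS 7 installs the default interface is usually named ens33, so "en.*" is used as the filter. 192.168.1.2, however, has two interfaces, enp1s0f0 and enp1s0f1, and the IP we need is the one on the internal interface enp1s0f0, so "interface=enp1s0f0*" can be written directly. In addition, KUBERNETES_SERVICE_HOST specifies the IP of the cluster api-server.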
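To see which interface names a given pattern would select, it can be tried against the names locally. A rough sketch using `grep -E` as a stand-in, assuming the `interface=` value is applied as an unanchored regular expression (an assumption about Calico's matching, not something stated in this article); the helper name `match_ifaces` is illustrative:

```shell
#!/bin/bash
# match_ifaces PATTERN NAME...: print the interface names that PATTERN matches.
# grep -E stands in for the matcher here; it is not Calico's own code.
match_ifaces() {
    local pattern="$1"; shift
    printf '%s\n' "$@" | grep -E -- "$pattern"
}

# "en.*" selects both en* interfaces on the two-NIC host, but not docker0:
match_ifaces 'en.*' enp1s0f0 enp1s0f1 docker0
# Under the unanchored assumption, the trailing "*" makes the last "0" optional,
# so "enp1s0f0*" would also select enp1s0f1, while the plain name would not:
match_ifaces 'enp1s0f0*' enp1s0f0 enp1s0f1
match_ifaces 'enp1s0f0' enp1s0f0 enp1s0f1
```

If the goal is to pin exactly one interface, the exact name is the less ambiguous choice under that assumption.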

vim calico.yaml

Then create the calico resources

kubectl apply -f /usr/local/src/yamls/calico.yaml

Then wait until all the related pods are running normally.

watch kubectl get pods -n kube-system

Problem:

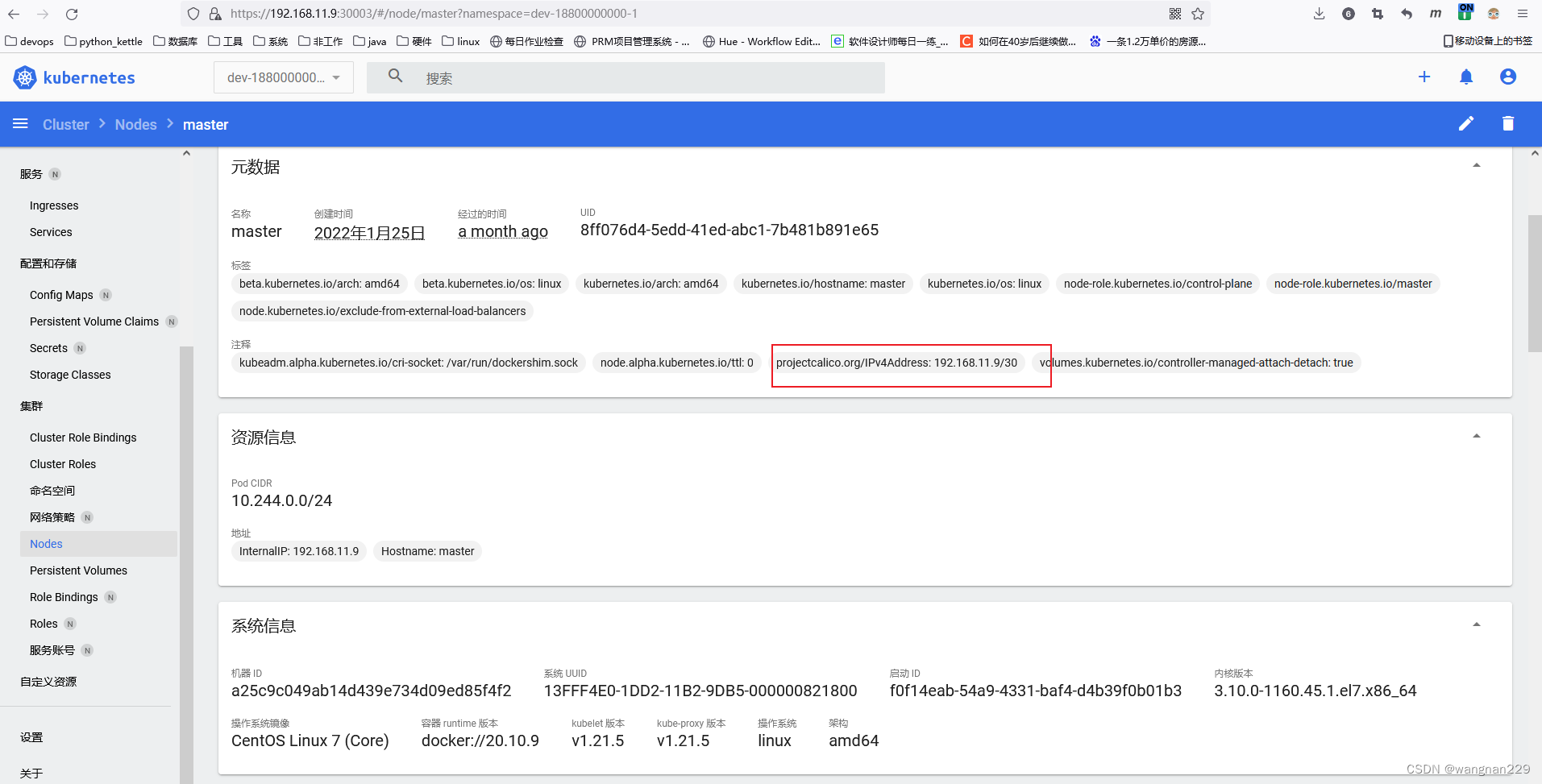

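If a created calico pod is unhealthy, first check this annotation on each node: projectcalico.org/IPv4Address: 192.168.1.2/30, and confirm that the IP is correct. On a machine with several IPs the wrong one may be matched, in which case the annotation has to be edited by hand.

Generate the command for joining new nodes:

kubeadm token create --print-join-command

Run the generated command on every worker node to join it to the cluster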

kubeadm join 192.168.1.2:6443 --token p8xpcg.ow5lximej2gwpq8o --discovery-token-ca-cert-hash sha256:104d0a66442c6dc14f4a6b006d98359ad49db5e8b3c18ab9059176675fff434b

3.1.2 Installing calicoctl:

# Version: 3.20.2

curl -o /usr/local/bin/calicoctl -O -L "https://github.com/projectcalico/calicoctl/releases/download/v3.20.2/calicoctl"

chmod +x /usr/local/bin/calicoctl

3.2 Storage: NFS installation and configuration:

3.2.1 Preparation:

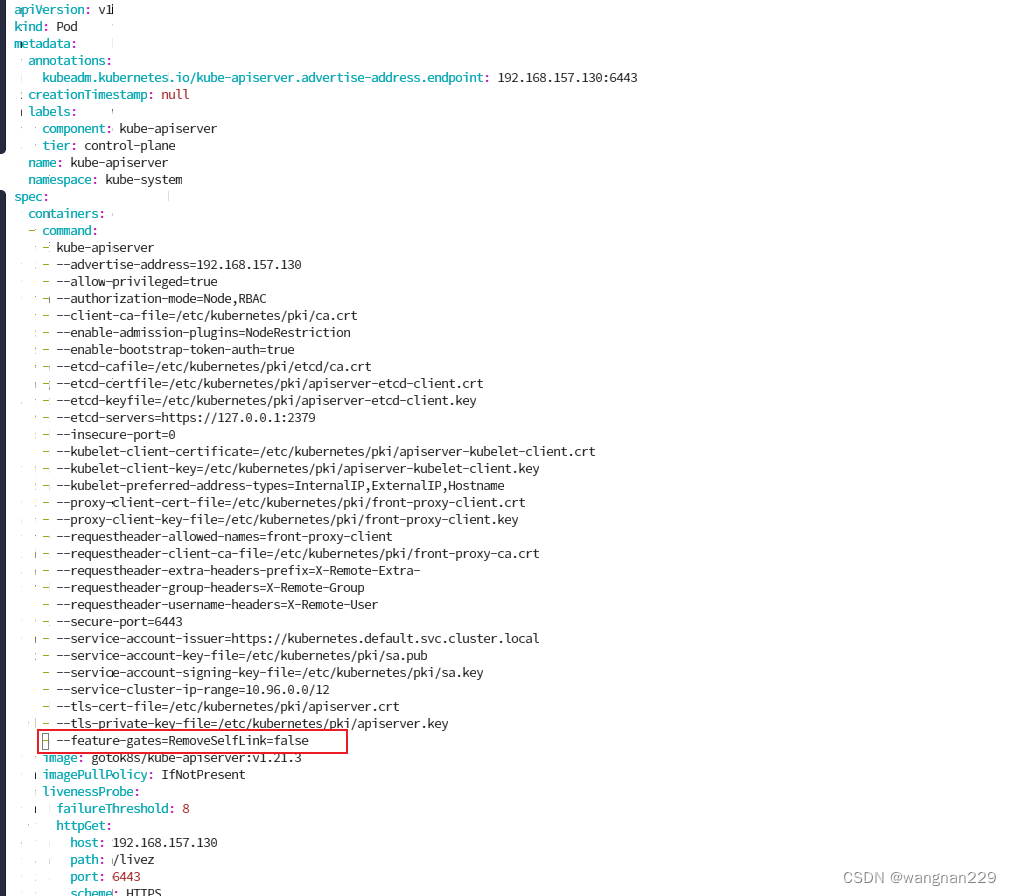

① Edit /etc/kubernetes/manifests/kube-apiserver.yaml and add - --feature-gates=RemoveSelfLink=false

② Install and start the nfs service on all nodes

yum install nfs-utils rpcbind -y

systemctl start rpcbind && systemctl enable rpcbind

systemctl start nfs && systemctl enable nfs

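Note: clients also need nfs-utils and rpcbind installed and started. rpcbind must be started first, otherwise an error is reported (also check the firewall and similar settings).

3.2.2 Create the nfs directory first

mkdir -p /var/nfs

vim /etc/exports

Add one line, where no_root_squash means the directory is accessed with root privileges:

/var/nfs 192.168.1.0/24(rw,async,no_root_squash)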
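Adding the export line can be made repeatable, so that rerunning the setup does not duplicate it. A minimal sketch; the helper name `add_export` is illustrative, and the `exportfs -ra` reload shown in the usage comment is an addition that the steps above do not mention:

```shell
#!/bin/bash
# add_export FILE LINE: append LINE to FILE unless an identical line already exists.
# Hypothetical helper for illustration.
add_export() {
    local file="$1" line="$2"
    touch "$file"
    # -x: match whole lines only, -F: treat LINE as a fixed string, -q: no output
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Example with the export from the text, followed by a reload of the export table:
# add_export /etc/exports '/var/nfs 192.168.1.0/24(rw,async,no_root_squash)'
# exportfs -ra
```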

3.2.3 Deploy the yaml resources

File contents:

- class.yaml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
  annotations:
    storageclass.kubernetes.io/is-default-class: 'true'
provisioner: fuseim.pri/ifs # or choose another name, must match deployment's env PROVISIONER_NAME
parameters:
  archiveOnDelete: 'false'
reclaimPolicy: Retain

- deployment.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: jmgao1983/nfs-client-provisioner:latest
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: fuseim.pri/ifs
            - name: NFS_SERVER
              value: 192.168.1.2
            - name: NFS_PATH
              value: /var/nfs
          resources:
            requests:
              memory: "100Mi"
              cpu: "250m"
            limits:
              memory: "256Mi"
              cpu: "500m"
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.1.2
            path: /var/nfs

- rbac.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: default
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io

Apply:

cd /usr/local/src/yamls/storageclassdeploy

kubectl apply -f class.yaml -f deployment.yaml -f rbac.yaml

Check the created Pod and wait for its status to become Running:

[root@master storageclassdeploy]# kubectl get pod -n default

NAME READY STATUS RESTARTS AGE

nfs-client-provisioner-74ffbf57c4-fhplh 1/1 Running 1 19h

Check that the managed-nfs-storage default storage class was created:

[root@master storageclassdeploy]# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

managed-nfs-storage (default) fuseim.pri/ifs Delete Immediate false 19h
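To confirm that the default storage class actually provisions volumes, a small claim can be applied. This is only a sketch: the file name `test-claim.yaml`, the claim name `test-claim`, and the 1Mi size are illustrative and not part of the original setup:

```shell
#!/bin/bash
# Write a minimal PersistentVolumeClaim. It sets no storage class of its own,
# so it should be served by the default class (managed-nfs-storage).
cat > test-claim.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: test-claim
  namespace: default
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Mi
EOF

# Then, on the master:
# kubectl apply -f test-claim.yaml
# kubectl get pvc test-claim        # STATUS should become Bound
# kubectl delete -f test-claim.yaml
```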

4. Deploy Helm

Version: 3.6.3

wget https://get.helm.sh/helm-v3.6.3-linux-amd64.tar.gz

tar -xf helm-v3.6.3-linux-amd64.tar.gz

cp linux-amd64/helm /usr/local/bin

5. Deploy the dashboard: Kubernetes-dashboard

5.1 Install with Helm

Version: 5.0.2

helm repo add kubernetes-dashboard https://kubernetes.github.io/dashboard/

# helm pull kubernetes-dashboard/kubernetes-dashboard

# --version selects the chart version; metrics-server is enabled

helm repo update

kubectl create namespace dashboard

helm install dashboard -n dashboard kubernetes-dashboard/kubernetes-dashboard --set metricsScraper.enabled=true --set metrics-server.enabled=true --version=5.0.2

Note: if creation fails, delete the dashboard namespace and install again.

5.2 Generate a new secret:

mkdir -p /etc/kubernetes/token && cd /etc/kubernetes/token

openssl genrsa -out dashboard.key 2048

openssl req -new -out dashboard.csr -key dashboard.key -subj '/CN=192.168.1.2' # the IP is the master's address

openssl x509 -req -in dashboard.csr -signkey dashboard.key -out dashboard.crt

openssl x509 -in dashboard.crt -text -noout

kubectl delete secret dashboard-kubernetes-dashboard-certs -n dashboard # replace dashboard-kubernetes-dashboard-certs with the actual secret name, and adjust the namespace to match as well

kubectl create secret generic dashboard-kubernetes-dashboard-certs --from-file=/etc/kubernetes/token/dashboard.key --from-file=/etc/kubernetes/token/dashboard.crt -n dashboard

kubectl get pod -n dashboard

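kubectl delete pod <pod-name> -n dashboard # delete the old Pod so that it is recreated automatically; use the name printed by the previous command, since kubectl does not expand the * wildcard

5.3 Create and bind a user: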

[root@master dashboard]# cat admin-user.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: dashboard

[root@master dashboard]# cat admin-user-role-binding.yaml

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: dashboard

5.4 Get the access token for admin-user

kubectl get secret -n dashboard

kubectl describe secret/admin-user-token-6tdwr -n dashboard

5.5 Change the service nodePort

Set nodePort to 30003

[root@master storageclassdeploy]# kubectl edit svc/dashboard-kubernetes-dashboard -n dashboard

[root@master storageclassdeploy]# kubectl get svc -n dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dashboard-kubernetes-dashboard NodePort 10.105.129.57 <none> 443:30003/TCP 18h

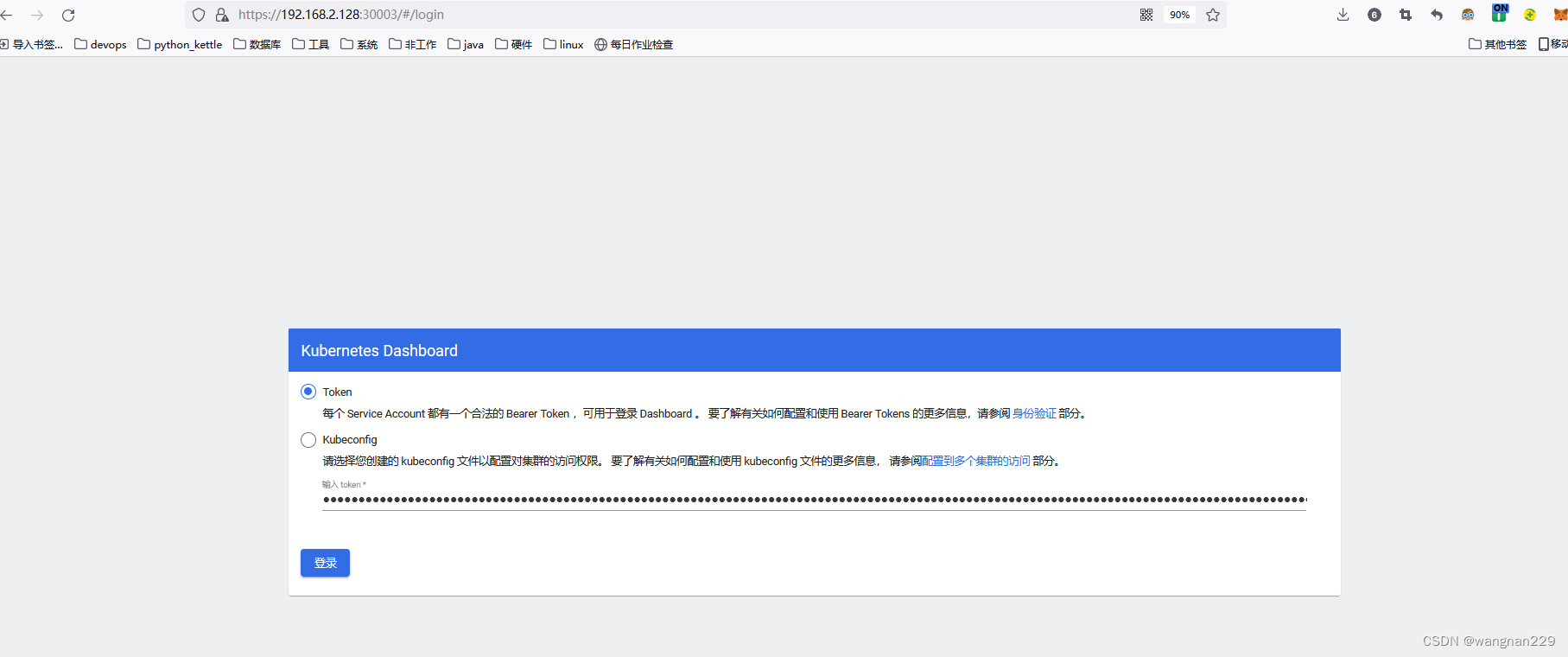

5.5 Access

Open the dashboard and enter the token obtained in step [5.4]:

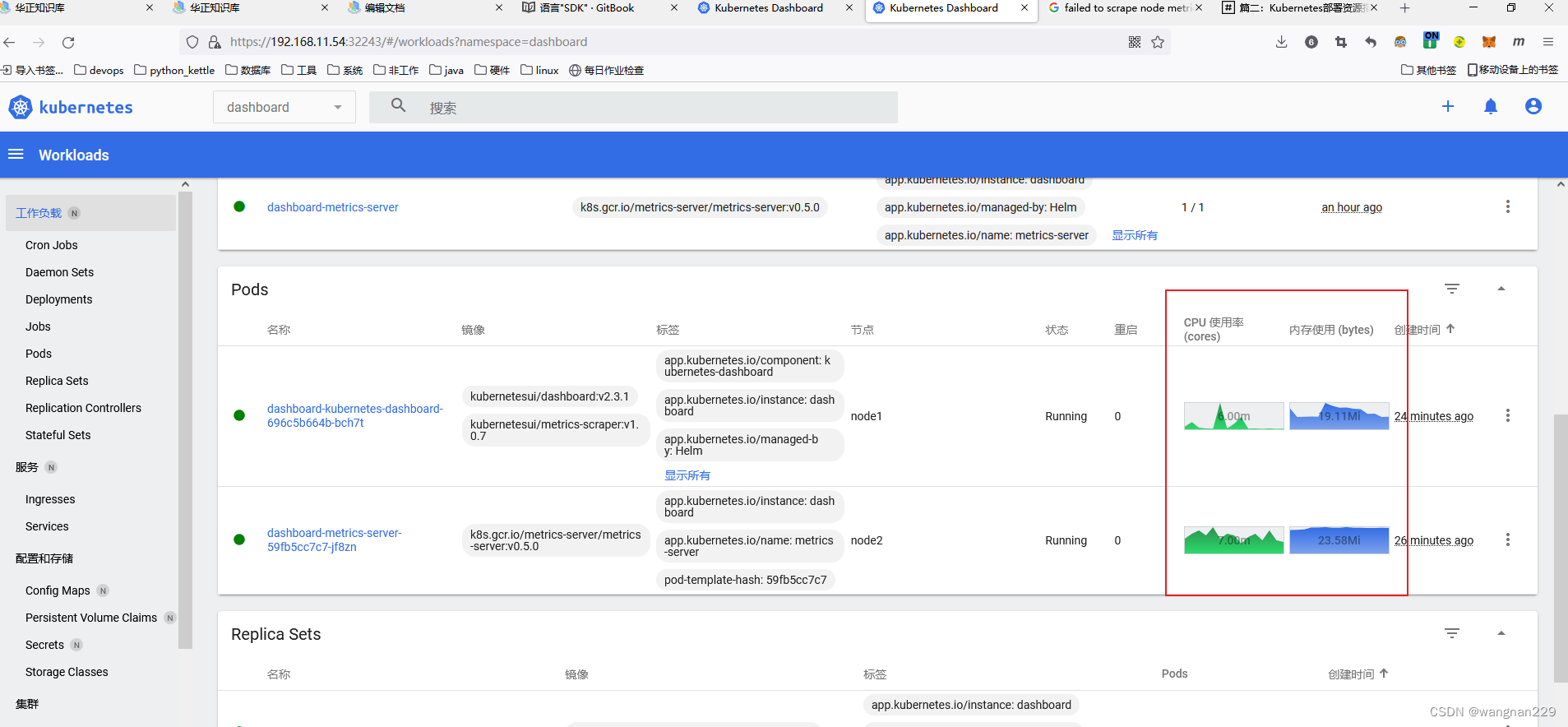

5.6 Configure metrics-server

5.6.1 Configure the image source:

The metrics-server deployment yaml defaults to the image k8s.gcr.io/metrics-server/metrics-server:v0.5.0, which is hard to reach from mainland China. Instead, pull zhangdiandong/metrics-server:v0.5.0 and then retag it with docker tag as k8s.gcr.io/metrics-server/metrics-server:v0.5.0. This can be done on every node, or only on the node where the metrics-server pod is scheduled.

[root@node1 ~]# docker pull zhangdiandong/metrics-server:v0.5.0

v0.5.0: Pulling from zhangdiandong/metrics-server

5dea5ec2316d: Pull complete

2ca785997557: Pull complete

Digest: sha256:05bf9f4bf8d9de19da59d3e1543fd5c140a8d42a5e1b92421e36e5c2d74395eb

Status: Downloaded newer image for zhangdiandong/metrics-server:v0.5.0

docker.io/zhangdiandong/metrics-server:v0.5.0

[root@node1 ~]# docker tag zhangdiandong/metrics-server:v0.5.0 k8s.gcr.io/metrics-server/metrics-server:v0.5.0

5.6.2 配置 deployment:

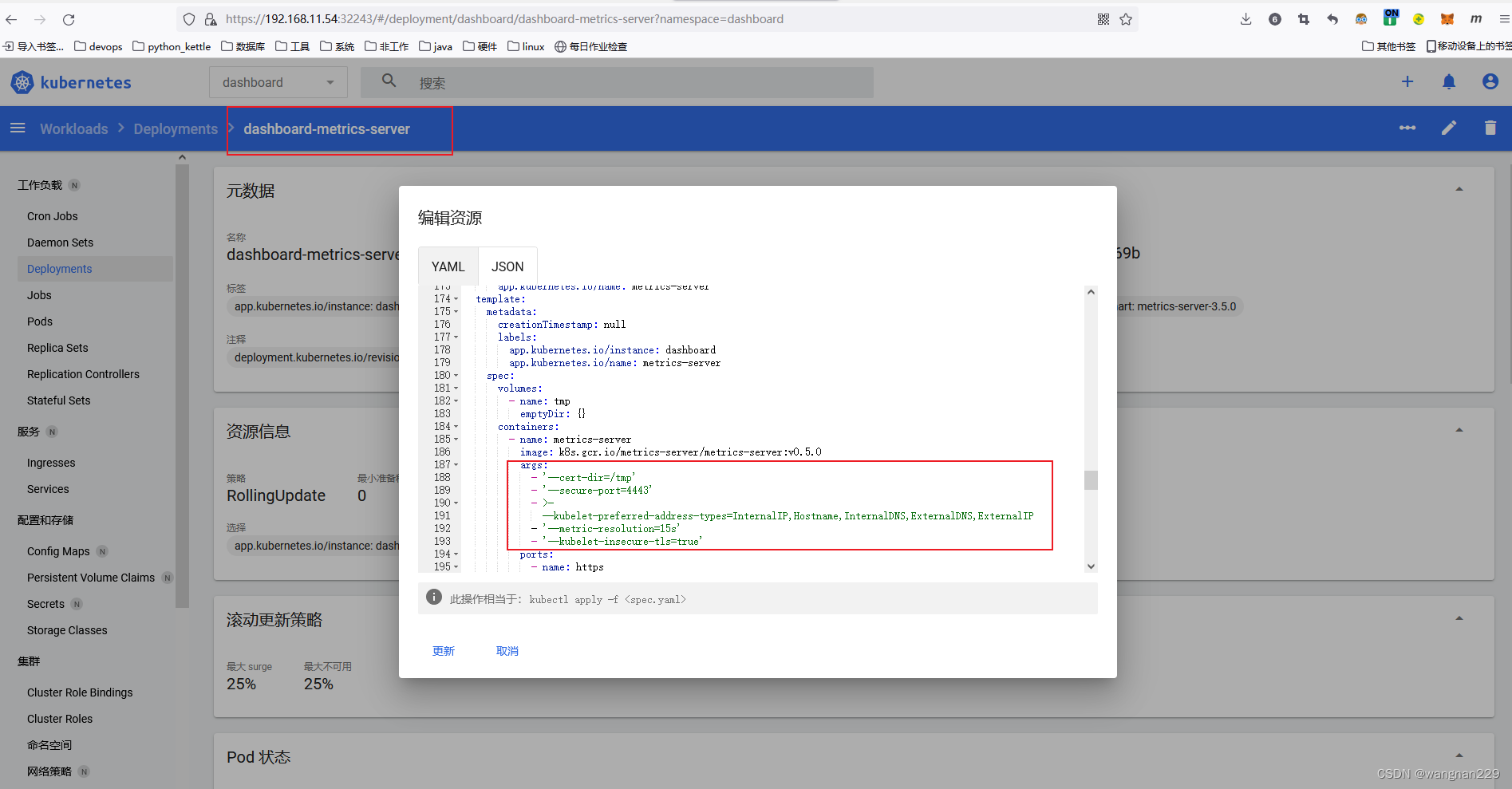

先修改deployment的启动命令参数,按下图配置:

主要就是新增 - ‘—kubelet-insecure-tls=true’然后删除 dashboard、metrics-server的pod,等待自动重建完成后,登录后查看,便有了统计功能。

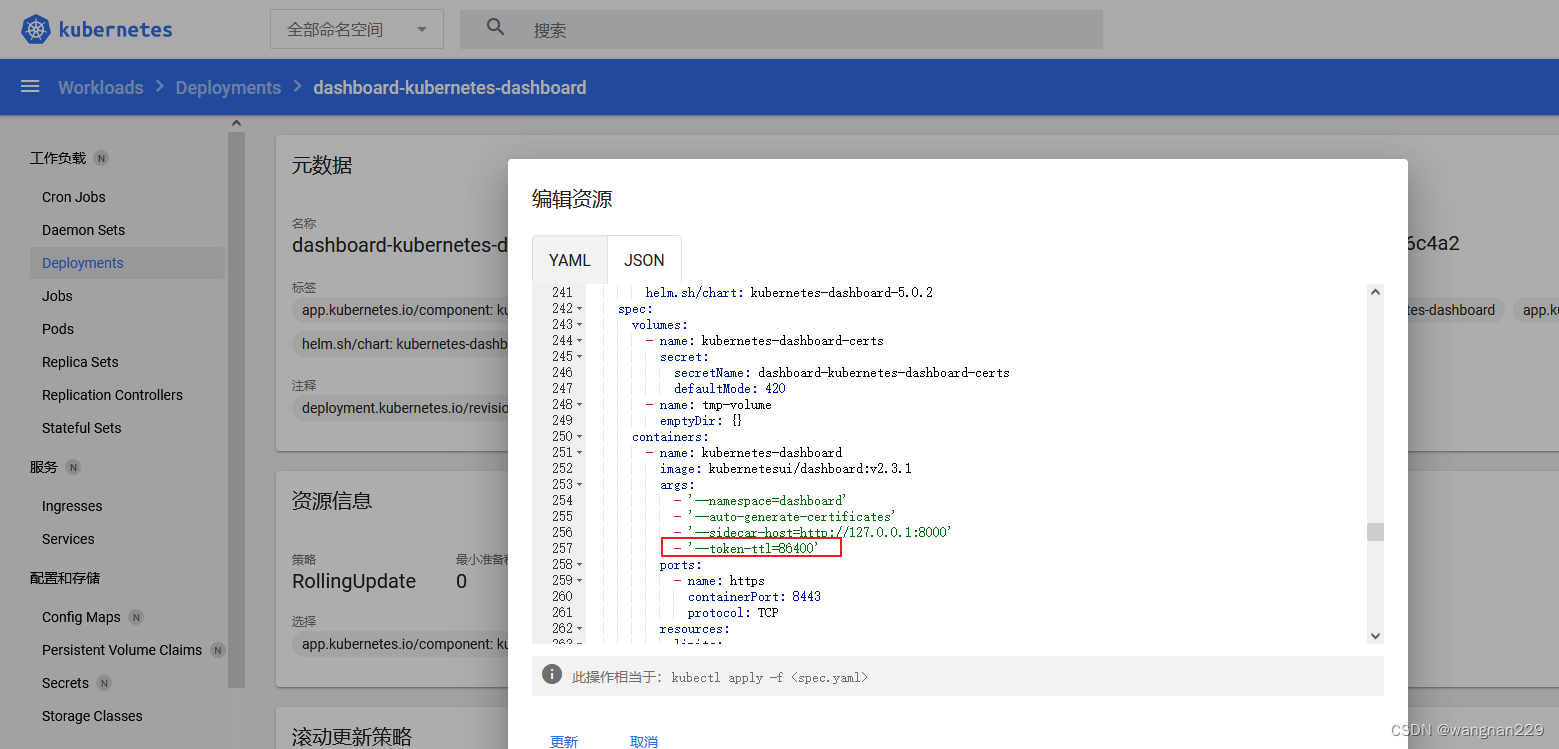

5.7 配置超时时间

默认超时时间15分钟,调试不方便,修改为1天。修改deployment,增加 - ‘—token-ttl=86400’ 参数:

6. Deploy Harbor [via Helm Chart] and usage notes

6.1 Deployment

Chart version: 1.7.3

kubectl create namespace harbor

helm repo add goharbor https://helm.goharbor.io

helm repo update

# list the available versions of the harbor chart

helm search repo harbor -l

# --version selects the chart version

helm install harbor goharbor/harbor -n harbor --set persistence.enabled=true --set expose.type=nodePort --set expose.tls.enabled=false --set externalURL=http://192.168.1.2:30002 --set expose.nodePort.ports.http.nodePort=30002 --version=1.7.3 # the nodePort must match the port in externalURL

kubectl get svc -n harbor

To push images successfully, the Docker settings have to be changed on every node:

$ vim /etc/docker/daemon.json

Add:

{"insecure-registries" : ["192.168.1.2:30002"]}

Restart the docker service

systemctl restart docker

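6.2 Usage notes

6.2.1 Create a user

First log in as admin with the default password Harbor12345

6.2.2 Create a registry

Target URL: enter the externalURL used when deploying Harbor. Access ID: enter the user created above. Then test the connection.

6.2.3 Create a project

If Public is selected, pulling images does not require docker login. Usually choose non-public.

6.2.4 Push an image to the harbor registry

[root@master images]# docker tag 192.168.1.2:6999/images/admin:1.3.1 192.168.1.2:30002/project/admin:1.3.1 # tag the image

[root@master images]# docker login 192.168.1.2:30002 -u password # log in to the registry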

Password:

WARNING! Your password will be stored unencrypted in /root/.docker/config.json.

Configure a credential helper to remove this warning. See

https://docs.docker.com/engine/reference/commandline/login/#credentials-store

Login Succeeded

[root@master images]# docker push 192.168.1.2:30002/project/admin:1.3.1 # push the local image to the registry

The push refers to repository [192.168.1.2:30002/project/admin]

c996c1cf2a54: Pushed

111dbad519c1: Pushed

e64fbc0b83ec: Pushed

a52fcbff5465: Pushed

767f936afb51: Pushed

1.3.1: digest: sha256:a50f7c03ec99a1f37c5fba11fdc9385d2ee7f5ef313bb3488928fc47f22a128b size: 1366

7 Deploy Ingress

7.1 Label the node bound to the public IP and add a taint that prevents scheduling onto it:

kubectl label nodes node0 ingress-selector=true

kubectl taint nodes node0 key=value:NoExecute

7.2 Deploy the Ingress Controller

cd /usr/local/src/ingress/ingress-service

kubectl apply -f ingress-service.yaml -f mandatory.yaml

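Afterwards, the Ingress Controller pods should be created only on the designated node.

8 Install a pod shell tool

Reference: connecting to the command-line terminal of a given pod from the web in kubernetes (alkssh, an open-source component of an ops management platform)

docker run -d -v /root/.kube/config:/root/kubernetes.yaml -p 3578:3578 --add-host apiserver.cluster.local:192.168.1.2 --name podshell czl1041484348/alkssh:v1

Example URL:

http://192.168.1.2:3578/index/dev-18800000000-1/pom-pom-vue-769d85f5b-wmxzv

Removing the cluster

Rough steps: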

kubeadm reset

iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X

systemctl stop kubelet

systemctl stop docker

rm -rf /var/lib/cni/*

rm -rf /var/lib/kubelet/*

rm -rf /etc/kubernetes/

rm -rf $HOME/.kube

rm -rf /etc/cni/*

ifconfig cni0 down

ifconfig flannel.1 down

ifconfig docker0 down

ip link delete cni0

ip link delete flannel.1

systemctl start docker
