1. Install Elasticsearch

mkdir -p /home/qiang/data/elasticsearch/data

mkdir -p /home/qiang/data/elasticsearch/logs

chmod 777 /home/qiang/data/elasticsearch/data /home/qiang/data/elasticsearch/logs

# If the data directory was migrated from elsewhere, reset its ownership

chown -R 1000:root /home/qiang/data/elasticsearch/data/

version: '3'
services:
  elasticsearch:
    image: elasticsearch:7.12.1
    container_name: elasticsearch
    restart: always
    ports:
      - "9200:9200"
    volumes:
      - /home/qiang/data/elasticsearch/data:/usr/share/elasticsearch/data
      - /home/qiang/data/elasticsearch/logs:/usr/share/elasticsearch/logs
    environment:
      - "ES_JAVA_OPTS=-Xms256m -Xmx256m"
      - "discovery.type=single-node"

# Copy the configuration file out of the running container

mkdir -p /home/qiang/data/elasticsearch/conf

docker cp elasticsearch:/usr/share/elasticsearch/config/elasticsearch.yml /home/qiang/data/elasticsearch/conf

elasticsearch.yml

# Cluster name
cluster.name: my-application
# Node name
node.name: node-1
# Whether this node is eligible to be elected master
node.master: true
# Whether this node stores data (enabled by default)
node.data: true
# Network bind address
network.host: 0.0.0.0
# HTTP port exposed to clients
http.port: 9200
cluster.initial_master_nodes: ["node-1"]
# Allow cross-origin (CORS) requests
http.cors.enabled: true
http.cors.allow-origin: "*"
# Security (authentication)
xpack.security.enabled: false
#http.cors.allow-headers: "Authorization"

version: "3"
services:
  elasticsearch:
    container_name: elasticsearch
    hostname: elasticsearch
    image: elasticsearch:7.12.1
    restart: always
    ports:
      - "9200:9200"
    volumes:
      - /home/qiang/data/elasticsearch/data:/usr/share/elasticsearch/data
      - /home/qiang/data/elasticsearch/logs:/usr/share/elasticsearch/logs
      - /home/qiang/data/elasticsearch/conf/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml
    environment:
      - "ES_JAVA_OPTS=-Xms256m -Xmx256m"
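Once the container is up, a quick way to confirm that Elasticsearch answers on the mapped port is to query its cluster health endpoint. The following is a minimal sketch, not part of the original setup: the function names are hypothetical, and the host `192.168.1.51` is assumed to be the Docker host used elsewhere in this article.

```python
import json
import urllib.request


def cluster_health_url(host: str, port: int = 9200) -> str:
    """Build the URL of Elasticsearch's cluster health endpoint."""
    return f"http://{host}:{port}/_cluster/health"


def fetch_cluster_status(host: str, port: int = 9200, timeout: float = 5.0) -> str:
    """Return the cluster status string reported by Elasticsearch.

    Raises urllib.error.URLError if the node is not reachable.
    """
    with urllib.request.urlopen(cluster_health_url(host, port), timeout=timeout) as resp:
        return json.load(resp)["status"]
```

Calling `fetch_cluster_status("192.168.1.51")` should return `green`, `yellow`, or `red`. On a single-node setup, `yellow` is common once indices with replicas exist, because the replicas have no second node to be allocated to.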

2. Install Logstash

version: '3'
services:
  logstash:
    image: logstash:7.12.1
    container_name: logstash
    restart: always
    ports:
      - "5044:5044"
      - "9600:9600"
    environment:
      - "LS_JAVA_OPTS=-Xms256m -Xmx256m"

# Create the directory

mkdir -p /home/qiang/data/logstash/config/

# Copy the configuration files out of the running container

docker cp logstash:/usr/share/logstash/config/logstash.yml /home/qiang/data/logstash/config/

docker cp logstash:/usr/share/logstash/config/logstash-sample.conf /home/qiang/data/logstash/config/logstash.conf

logstash.yml

http.host: "0.0.0.0"

xpack.monitoring.elasticsearch.hosts: [ "http://192.168.1.51:9200" ]

logstash.conf

input {
  tcp {
    port => 5044
    codec => json_lines
  }
}
output {
  # Print events to the console
  stdout {
    codec => rubydebug
  }
  elasticsearch {
    hosts => ["http://192.168.1.51:9200"]
    index => "logstash_%{+YYYY.MM.dd}"
  }
}
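The `tcp` input with the `json_lines` codec expects one JSON document per line, each terminated by a newline. The pipeline can therefore be exercised without any Java application by writing a single line to port 5044. This is a rough sketch under stated assumptions: the function names are hypothetical, and the default host is the `192.168.1.51` address used in this article.

```python
import json
import socket


def encode_event(event: dict) -> bytes:
    """Serialize one event as a single newline-terminated JSON line."""
    # json.dumps escapes embedded newlines, so the only raw "\n" is the terminator.
    return (json.dumps(event, ensure_ascii=False) + "\n").encode("utf-8")


def send_event(event: dict, host: str = "192.168.1.51", port: int = 5044,
               timeout: float = 5.0) -> None:
    """Open a TCP connection to the Logstash tcp input and send one event."""
    with socket.create_connection((host, port), timeout=timeout) as conn:
        conn.sendall(encode_event(event))
```

For example, `send_event({"level": "INFO", "log": "hello"})` should make the event appear in the Logstash container's console output (via the `stdout` output) and in the Elasticsearch index for the current day.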

With volumes

version: '3'
services:
  logstash:
    image: logstash:7.12.1
    container_name: logstash
    restart: always
    ports:
      - "5044:5044"
      - "9600:9600"
    volumes:
      - /home/qiang/data/logstash/config/logstash.yml:/usr/share/logstash/config/logstash.yml
      - /home/qiang/data/logstash/config/logstash.conf:/usr/share/logstash/pipeline/logstash.conf
    environment:
      - "LS_JAVA_OPTS=-Xms256m -Xmx256m"

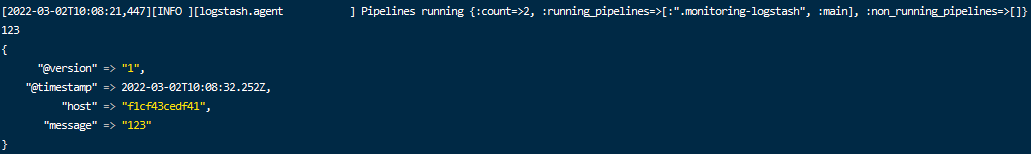

Test commands

# Enter the container
docker exec -it logstash bash

# Output to the console
/usr/share/logstash/bin/logstash --path.data /usr/share/logstash/test -e 'input { stdin{} } output { stdout{ codec => rubydebug }}'

# Output to Elasticsearch
/usr/share/logstash/bin/logstash --path.data /usr/share/logstash/test -e 'input { stdin{} } output { elasticsearch {hosts => ["192.168.1.51:9200"] index => "logstash_%{+YYYY.MM.dd}" }}'

# Output to a file
/usr/share/logstash/bin/logstash --path.data /usr/share/logstash/test -e 'input { stdin{} } output { file { path => "/tmp/logstash_%{+YYYY.MM.dd}.log"}}'
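The `%{+YYYY.MM.dd}` part of the index and file names is filled in from each event's `@timestamp`, which Logstash keeps in UTC. An event logged shortly after midnight local time can therefore land in the previous day's index. The sketch below only illustrates that naming rule; `index_for` is a hypothetical helper, not a Logstash API, and it expects a timezone-aware `datetime`.

```python
from datetime import datetime, timedelta, timezone


def index_for(timestamp: datetime, prefix: str = "logstash_") -> str:
    """Return the daily index name for a timezone-aware event timestamp."""
    # Convert to UTC first, because the date in the index name is a UTC date.
    utc = timestamp.astimezone(timezone.utc)
    return prefix + utc.strftime("%Y.%m.%d")


# 07:30 on 2024-01-01 in UTC+8 is still 2023-12-31 in UTC.
beijing = timezone(timedelta(hours=8))
print(index_for(datetime(2024, 1, 1, 7, 30, tzinfo=beijing)))
```

This matters when looking for a specific day's logs: the index to inspect is determined by the UTC date of the event, not the local date of the server.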

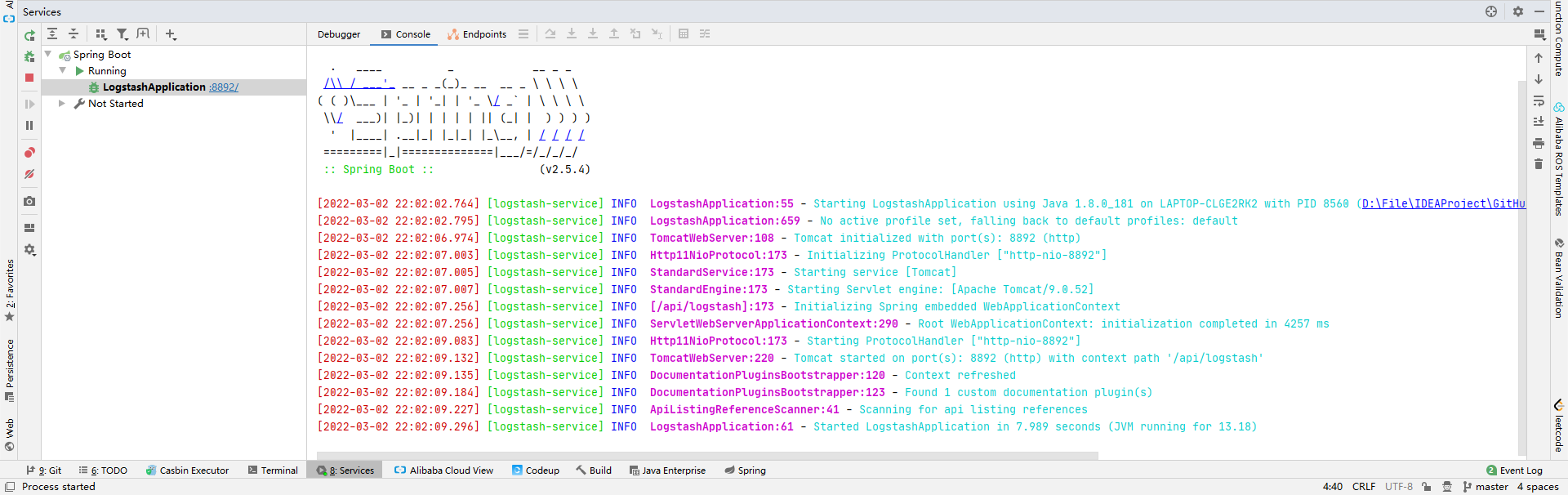

Integrating Spring Boot with Logstash

pom.xml

<dependency>
    <groupId>net.logstash.logback</groupId>
    <artifactId>logstash-logback-encoder</artifactId>
    <version>7.0.1</version>
</dependency>

logback.xml

<?xml version="1.0" encoding="UTF-8"?>
<!--
    debug: when set to true, logback prints its internal status messages so its runtime state can be observed. Defaults to false.
    scan: when set to true, the configuration file is reloaded whenever it changes. Defaults to false.
    scanPeriod: interval at which the configuration file is checked for changes; if no unit is given, milliseconds are assumed. Takes effect only when scan is true. The default interval is 1 minute.
-->
<configuration debug="false" scan="true" scanPeriod="30 seconds">
    <!-- Usage: only the value of the service name needs to be changed -->
    <!-- Service name -->
    <property name="serviceName" value="logstash-service"/>
    <!-- Expose the service name as the context name -->
    <contextName>${serviceName}</contextName>

    <!-- Console output format -->
    <appender name="console" class="ch.qos.logback.core.ConsoleAppender">
        <encoder class="ch.qos.logback.classic.encoder.PatternLayoutEncoder">
            <!--
                %d{HH:mm:ss.SSS}: date output format
                %contextName: service name
                [%thread]: thread name
                %-5level: log level, left-justified and padded to a width of 5 characters
                %logger{36}: logger (class) name, abbreviated to at most 36 characters
                %msg: log message
                %n: line break
                %tradeId: trace ID (not a built-in conversion word and not used in the pattern below)
            -->
            <pattern>%red([%d{yyyy-MM-dd HH:mm:ss.SSS}]) %green([%contextName]) %highlight(%-5level) %boldMagenta(%logger{0}:%line) - %cyan(%msg%n)</pattern>
        </encoder>
        <!-- Only log INFO and above to the console -->
        <filter class="ch.qos.logback.classic.filter.ThresholdFilter">
            <level>INFO</level>
        </filter>
    </appender>

    <!-- Connect to Logstash over TCP -->
    <appender name="logstash" class="net.logstash.logback.appender.LogstashTcpSocketAppender">
        <destination>192.168.1.51:5044</destination>
        <encoder class="net.logstash.logback.encoder.LoggingEventCompositeJsonEncoder">
            <providers>
                <timestamp>
                    <timeZone>UTC</timeZone>
                </timestamp>
                <pattern>
                    <pattern>
                        {
                        "date":"%d",
                        "serviceName":"%contextName",
                        "level":"%level",
                        "thread":"%thread",
                        "logger":"%logger{0}:%line",
                        "log": "%msg"
                        }
                    </pattern>
                </pattern>
            </providers>
        </encoder>
        <filter class="ch.qos.logback.classic.filter.ThresholdFilter">
            <level>INFO</level>
        </filter>
    </appender>

    <!-- Root log level -->
    <root level="info">
        <!-- Output to the console -->
        <appender-ref ref="console"/>
        <!-- Output to Logstash -->
        <appender-ref ref="logstash"/>
    </root>
</configuration>

Start the service

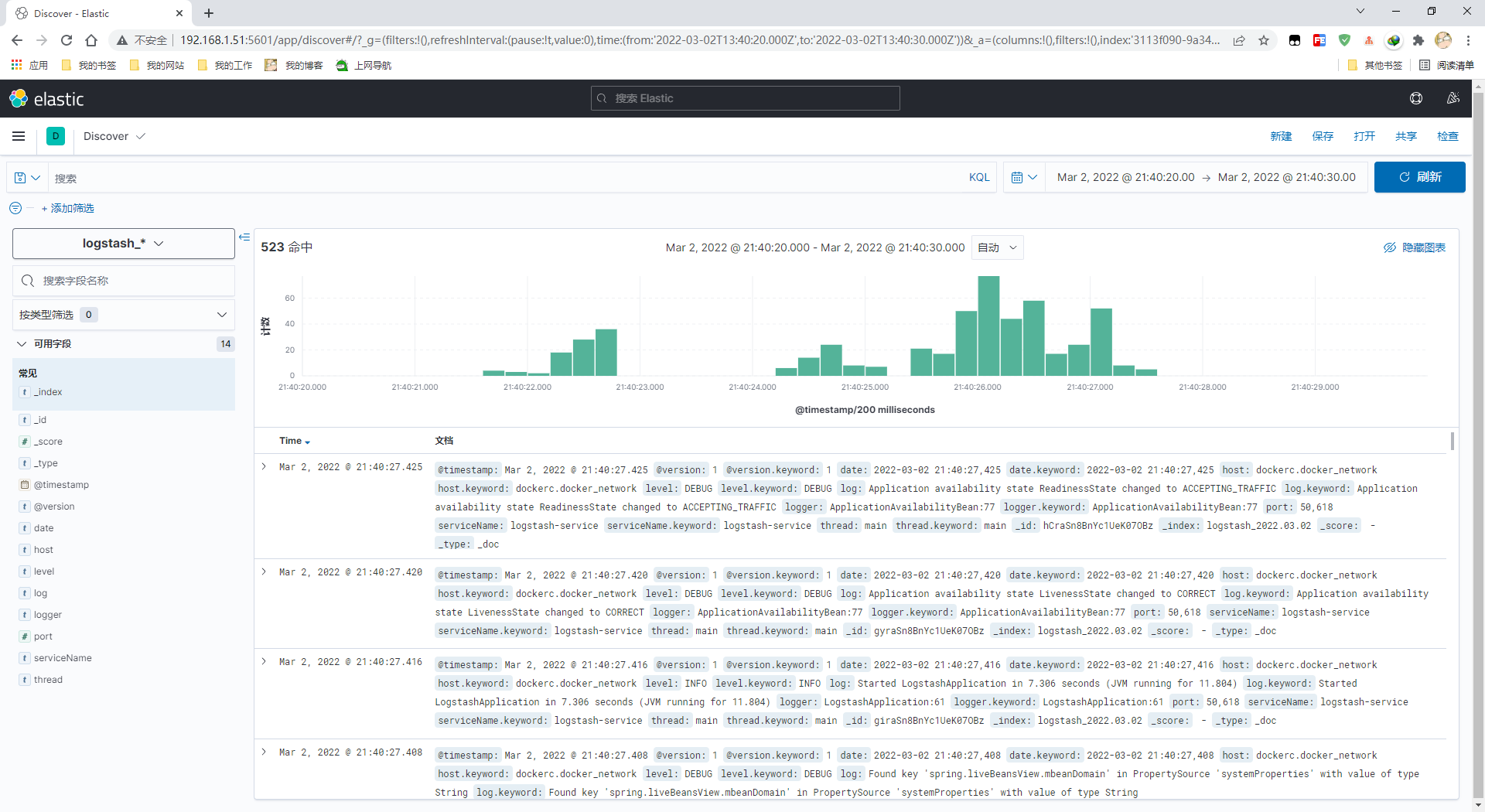

Logs are now collected along the path Spring Boot -> Logstash -> Elasticsearch.
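To confirm that application logs really reached Elasticsearch, the `logstash_*` indices can be searched for the fields defined in the logback JSON pattern (`serviceName`, `level`). The following is a sketch only: `build_search` and `search_logs` are hypothetical helpers, and the query assumes the default dynamic mapping and the `@timestamp` field that Logstash adds to each event.

```python
import json
import urllib.request


def build_search(service_name: str, level: str = "INFO", size: int = 10) -> dict:
    """Build a search body for the newest log events of one service."""
    return {
        "size": size,
        "sort": [{"@timestamp": {"order": "desc"}}],
        "query": {
            "bool": {
                "must": [
                    {"match": {"serviceName": service_name}},
                    {"match": {"level": level}},
                ]
            }
        },
    }


def search_logs(host: str, service_name: str, level: str = "INFO",
                port: int = 9200, timeout: float = 5.0) -> list:
    """POST the search to all logstash_* indices and return the matching documents."""
    request = urllib.request.Request(
        f"http://{host}:{port}/logstash_*/_search",
        data=json.dumps(build_search(service_name, level)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return [hit["_source"] for hit in json.load(resp)["hits"]["hits"]]
```

With the configuration above, `search_logs("192.168.1.51", "logstash-service")` should return the most recent INFO events written by the Spring Boot service, each containing the `date`, `thread`, `logger`, and `log` fields from the pattern.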

3. Install Kibana

Without volumes

version: '3'
services:
  kibana:
    image: kibana:7.12.1
    container_name: kibana
    restart: always
    privileged: true
    ports:
      - "5601:5601"
    environment:
      - "ELASTICSEARCH_HOSTS=http://192.168.1.51:9200"
      - "I18N_LOCALE=zh-CN"

Access URL

# Copy the configuration file out of the running container

mkdir -p /home/qiang/data/kibana/

docker cp kibana:/usr/share/kibana/config/kibana.yml /home/qiang/data/kibana/

kibana.yml

#

# ** THIS IS AN AUTO-GENERATED FILE **

#

# Default Kibana configuration for docker target

server.name: kibana

server.host: "0"

server.basePath: "/kibana"

elasticsearch.hosts: [ "http://elasticsearch:9200" ]

monitoring.ui.container.elasticsearch.enabled: true

docker-compose.yaml

version: '3'
services:
  kibana:
    image: kibana:7.12.1
    container_name: kibana
    restart: always
    privileged: true
    ports:
      - "5601:5601"
    environment:
      - "ELASTICSEARCH_HOSTS=http://192.168.1.51:9200"
      - "I18N_LOCALE=zh-CN"
    volumes:
      - /home/qiang/data/kibana/kibana.yml:/usr/share/kibana/config/kibana.yml

nginx proxy

location /kibana/ {
    proxy_pass http://192.168.1.51:5601;
    rewrite ^/kibana/(.*)$ /$1 break;
    proxy_set_header X-Real-IP $remote_addr;
    proxy_set_header Host $host:$server_port;
    proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    proxy_http_version 1.1;
}
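Because `kibana.yml` sets `server.basePath: "/kibana"`, the proxy is expected to strip that prefix before forwarding the request, so the `rewrite` pattern has to match the same prefix as the `location`. The effect of such a rewrite can be checked outside nginx. This is a sketch with a hypothetical helper; it assumes Python's `re` module behaves like nginx's PCRE for a pattern this simple.

```python
import re


def strip_prefix(path: str, prefix: str = "kibana") -> str:
    """Mimic `rewrite ^/<prefix>/(.*)$ /$1 break;` on a request path."""
    pattern = rf"^/{re.escape(prefix)}/(.*)$"
    # If the pattern does not match, the path is returned unchanged,
    # which is also what nginx does when a rewrite rule does not apply.
    return re.sub(pattern, r"/\1", path)


print(strip_prefix("/kibana/app/home"))                # prefix removed
print(strip_prefix("/kibana/app/home", prefix="elk"))  # no match, path unchanged
```

A pattern written for a different prefix (for example `^/elk/`) never matches requests under `/kibana/`, so Kibana would receive the path with the prefix still attached.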

Author: 小强崽

Source: https://www.wuduoqiang.com/archives/Docker搭建ELK

License: Attribution-NonCommercial-ShareAlike 4.0 International (CC BY-NC-SA 4.0)

Copyright: For commercial use, please contact the author for authorization. For non-commercial use, please indicate the source.
