1. Set the hostnames

# On the master node
hostnamectl set-hostname k8s-master

# On the worker node
hostnamectl set-hostname k8s-node01

2. Edit the hosts file

Run on every machine:

cat >> /etc/hosts << EOF
172.16.17.203 k8s-master
172.16.17.204 k8s-node01
EOF
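Running the `cat >>` command above twice appends the entries twice. A minimal sketch of an idempotent alternative, in the same shell the guide uses; `add_host_entry` and its optional file argument are hypothetical names introduced here, not part of any tool:

```shell
# add_host_entry IP NAME [FILE]
# Appends "IP NAME" to FILE (default /etc/hosts) unless NAME is already mapped
# on a non-comment line, so this step can be re-run without duplicating entries.
add_host_entry() {
    local ip=$1 name=$2 file=${3:-/etc/hosts}
    # awk exits 0 only if NAME appears as a hostname field (field 2 onwards).
    if [ -f "$file" ] && awk -v n="$name" '
        !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file"; then
        echo "skip: $name already present in $file"
    else
        printf '%s %s\n' "$ip" "$name" >> "$file"
        echo "added: $ip $name"
    fi
}
```

Run it as root on each machine, for example `add_host_entry 172.16.17.203 k8s-master` and `add_host_entry 172.16.17.204 k8s-node01`.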

3. Disable the firewall

Run on every machine:

systemctl stop firewalld.service

systemctl disable firewalld.service

systemctl status firewalld.service

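4. Disable SELinux

Run on every machine:

# Switch to permissive mode immediately (until the next reboot)

setenforce 0

# Disable SELinux permanently (takes effect after a reboot)

sed -ri 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

# Check the status

sestatus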

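5. Install ipset and ipvsadm

# On a machine with internet access, download the packages without installing them

yum -y install --downloadonly --downloaddir /data/software/ipset_ipvsadm ipset ipvsadm

# Copy the directory to every machine and install from inside it

rpm -ivh ipvsadm-1.27-8.el7.x86_64.rpm --nodeps --force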

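6. Disable swap

# Turn swap off now (until the next reboot)

swapoff -a

# Turn swap off permanently by commenting out every swap line in /etc/fstab

sed -ri 's/.*swap.*/#&/' /etc/fstab

# Verify

grep swap /etc/fstab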
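Before moving on, it can help to confirm that the SELinux and swap changes actually landed in the config files. A small read-only sketch; the function names are made up for this example, and it assumes the standard `/etc/selinux/config` and `/etc/fstab` layouts:

```shell
# selinux_mode [FILE]: print the SELINUX= value configured in FILE
# (default /etc/selinux/config), e.g. "disabled". SELINUXTYPE= is not matched.
selinux_mode() {
    sed -n 's/^SELINUX=//p' "${1:-/etc/selinux/config}"
}

# active_swap_entries [FILE]: print fstab lines (default /etc/fstab) that are
# not commented out and whose filesystem type (third field) is swap.
active_swap_entries() {
    awk '!/^[[:space:]]*#/ && $3 == "swap"' "${1:-/etc/fstab}"
}
```

`selinux_mode` should print `disabled` and `active_swap_entries` should print nothing. `getenforce` and `free -m` report the live state.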

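7. Configure passwordless SSH

On the master, run ssh-keygen and press Enter at every prompt:

ssh-keygen

Authorize the new public key by copying id_rsa.pub to authorized_keys:

cd /root/.ssh

cp id_rsa.pub authorized_keys

On each of the other machines, create the /root/.ssh directory:

mkdir -p /root/.ssh

Copy the contents of /root/.ssh to the other machines (all nodes then share the same key pair):

scp -r /root/.ssh/* 172.16.17.204:/root/.ssh/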
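With passwordless SSH in place, the "run on every machine" steps can be driven from the master. A sketch under the assumption that all nodes are reachable as root; `NODES`, `SSH` and `run_on_nodes` are hypothetical names for this example:

```shell
# Hypothetical list of the other machines, taken from the hosts file above.
NODES=${NODES:-"172.16.17.204"}
# Override with SSH=echo for a dry run that only prints what would be executed.
SSH=${SSH:-ssh}

# run_on_nodes CMD...: run the same command on every node in $NODES,
# stopping at the first node where it fails.
run_on_nodes() {
    local node
    for node in $NODES; do
        echo "==> $node"
        $SSH "root@$node" "$@" || { echo "failed on $node" >&2; return 1; }
    done
}
```

For example `run_on_nodes setenforce 0`, or `SSH=echo run_on_nodes swapoff -a` to preview the commands.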

8. Install docker-ce and cri-dockerd

On a machine with internet access, download the packages (using the Aliyun mirror):

cd /etc/yum.repos.d/

# Back up the default repo files

mkdir bak && mv *.repo bak

# Download the Aliyun yum repo file

curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

# Clean and rebuild the yum cache

yum clean all && yum makecache

If an older Docker is installed, remove it:

yum remove docker docker-client docker-client-latest docker-common docker-latest docker-latest-logrotate docker-logrotate docker-engine

Reinstalling the EPEL repository is recommended:

rpm -qa | grep epel

yum remove epel-release

yum -y install epel-release

Install yum-utils:

yum install -y yum-utils

Add the Docker CE repository:

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

Refresh the package index:

yum makecache fast

Download the RPM packages:

# List the available docker-ce versions; 25.0.5 is used here

yum list docker-ce --showduplicates | sort -r

# List the available containerd.io versions; 1.6.31 is used here

yum list containerd.io --showduplicates | sort -r

# Download the packages (without installing); they are saved to /data/docker

mkdir -p /data/docker

yum install -y docker-ce-25.0.5 docker-ce-cli-25.0.5 containerd.io-1.6.31 --downloadonly --downloaddir=/data/docker

Install on every machine:

Upload the downloaded packages to each VM, then run the following in that directory:

rpm -ivh *.rpm --nodeps --force

Start Docker

# Reload the systemd unit files

systemctl daemon-reload

# Start Docker

systemctl start docker

# Enable Docker at boot

systemctl enable docker.service

2 Install cri-dockerd

Download the package on a machine with internet access.

Download page: https://github.com/Mirantis/cri-dockerd/releases

Pick the package for your architecture and version; this guide uses: https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.8/cri-dockerd-0.3.8-3.el7.x86_64.rpm

# Install

rpm -ivh cri-dockerd-0.3.8-3.el7.x86_64.rpm --nodeps --force

# In /usr/lib/systemd/system/cri-docker.service, edit the ExecStart line to specify the Pod infrastructure (pause) container image:

ExecStart=/usr/bin/cri-dockerd --pod-infra-container-image=registry.k8s.io/pause:3.9 --container-runtime-endpoint fd://
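Editing the packaged unit file works, but an RPM upgrade of cri-dockerd may overwrite it. A sketch of the same change as a systemd drop-in instead; the function name and the default drop-in path are assumptions for this example, and the `ExecStart` value is the one shown above:

```shell
# write_cri_docker_dropin PAUSE_IMAGE [DIR]
# Writes DIR/override.conf (default DIR: /etc/systemd/system/cri-docker.service.d).
# The empty "ExecStart=" clears the packaged command before the new one is set,
# which systemd requires when overriding ExecStart in a drop-in.
write_cri_docker_dropin() {
    local image=$1
    local dir=${2:-/etc/systemd/system/cri-docker.service.d}
    mkdir -p "$dir"
    cat > "$dir/override.conf" <<EOF
[Service]
ExecStart=
ExecStart=/usr/bin/cri-dockerd --pod-infra-container-image=$image --container-runtime-endpoint fd://
EOF
}
```

After writing it, run `systemctl daemon-reload` and `systemctl restart cri-docker`; `systemctl cat cri-docker` shows the unit together with the override. If you go this way, make the later pause-image changes in the drop-in rather than in /usr/lib/systemd/system/cri-docker.service.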

Start cri-dockerd:

systemctl enable --now cri-docker

systemctl status cri-docker

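Install Kubernetes

1 Download kubelet, kubeadm and kubectl

Run on a machine with internet access:

Configure the package repository

Kubernetes community yum repository (the repository URL is tied to a minor version, v1.30 here):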

cat <<EOF | tee /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://pkgs.k8s.io/core:/stable:/v1.30/rpm/
enabled=1
gpgcheck=1
gpgkey=https://pkgs.k8s.io/core:/stable:/v1.30/rpm/repodata/repomd.xml.key
EOF

# List the installable versions and pick one; 1.30.0-150500.1.1 is used here

yum list kubeadm.x86_64 --showduplicates |sort -r

yum list kubelet.x86_64 --showduplicates |sort -r

yum list kubectl.x86_64 --showduplicates |sort -r

# Download with yum (without installing)

yum -y install --downloadonly --downloaddir=/opt/software/k8s-package kubeadm-1.30.0-150500.1.1 kubelet-1.30.0-150500.1.1 kubectl-1.30.0-150500.1.1

2 Install kubelet, kubeadm and kubectl

Run on every machine:

Upload the packages to each machine.

Install:

rpm -ivh *.rpm --nodeps --force

Set Docker's cgroup driver to systemd:

vi /etc/docker/daemon.json

Add or modify the following:

{

"exec-opts": ["native.cgroupdriver=systemd"]

}

# Restart Docker

systemctl daemon-reload

systemctl restart docker

systemctl status docker

Configure kubelet to use the same cgroup driver as Docker:

# Back up the original file

cp /etc/sysconfig/kubelet{,.bak}

# Edit the kubelet file

vi /etc/sysconfig/kubelet

# Set the content to:

KUBELET_EXTRA_ARGS="--cgroup-driver=systemd"

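Enable kubelet at boot (until kubeadm init or kubeadm join has run, kubelet keeps restarting; this is expected):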

systemctl enable kubelet

3 Install bash tab completion

yum install -y --downloadonly --downloaddir=/data/software/command-tab/ bash-completion

rpm -ivh bash-completion-2.1-8.el7.noarch.rpm --nodeps --force

source /usr/share/bash-completion/bash_completion

echo "source <(kubectl completion bash)" >> ~/.bashrc

source ~/.bashrc

4 Download the images Kubernetes needs (on the machine with internet access)

docker pull registry.aliyuncs.com/google_containers/kube-apiserver:v1.30.0

docker pull registry.aliyuncs.com/google_containers/kube-controller-manager:v1.30.0

docker pull registry.aliyuncs.com/google_containers/kube-scheduler:v1.30.0

docker pull registry.aliyuncs.com/google_containers/kube-proxy:v1.30.0

docker pull registry.aliyuncs.com/google_containers/coredns:v1.11.1

docker pull registry.aliyuncs.com/google_containers/pause:3.9

docker pull registry.aliyuncs.com/google_containers/etcd:3.5.12-0

Save the images as tar archives for offline use:

docker save -o kube-apiserver-v1.30.0.tar registry.aliyuncs.com/google_containers/kube-apiserver:v1.30.0

docker save -o kube-controller-manager-v1.30.0.tar registry.aliyuncs.com/google_containers/kube-controller-manager:v1.30.0

docker save -o kube-scheduler-v1.30.0.tar registry.aliyuncs.com/google_containers/kube-scheduler:v1.30.0

docker save -o kube-proxy-v1.30.0.tar registry.aliyuncs.com/google_containers/kube-proxy:v1.30.0

docker save -o coredns-1.11.1.tar registry.aliyuncs.com/google_containers/coredns:v1.11.1

docker save -o pause-3.9.tar registry.aliyuncs.com/google_containers/pause:3.9

docker save -o etcd-3.5.12-0.tar registry.aliyuncs.com/google_containers/etcd:3.5.12-0

Then, on the offline machines, load the archives (they are pushed to the private registry later):

docker load -i kube-apiserver-v1.30.0.tar

docker load -i kube-controller-manager-v1.30.0.tar

docker load -i kube-scheduler-v1.30.0.tar

docker load -i kube-proxy-v1.30.0.tar

docker load -i coredns-1.11.1.tar

docker load -i pause-3.9.tar

docker load -i etcd-3.5.12-0.tar
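The seven pull, save and load commands above follow one pattern, so a loop can replace them. A minimal sketch; `IMAGES`, `DOCKER`, `tar_name`, `save_images` and `load_images` are names introduced here, and the coredns tag is written as `v1.11.1` on the assumption that the mirror keeps the same `v`-prefixed tag as upstream:

```shell
# Images for kubeadm v1.30.0 (copied from the commands above). Compare with the
# output of: kubeadm config images list --kubernetes-version v1.30.0 \
#   --image-repository registry.aliyuncs.com/google_containers
IMAGES="
registry.aliyuncs.com/google_containers/kube-apiserver:v1.30.0
registry.aliyuncs.com/google_containers/kube-controller-manager:v1.30.0
registry.aliyuncs.com/google_containers/kube-scheduler:v1.30.0
registry.aliyuncs.com/google_containers/kube-proxy:v1.30.0
registry.aliyuncs.com/google_containers/coredns:v1.11.1
registry.aliyuncs.com/google_containers/pause:3.9
registry.aliyuncs.com/google_containers/etcd:3.5.12-0
"
# Set DOCKER=echo to print the commands instead of running them.
DOCKER=${DOCKER:-docker}

# tar_name IMAGE: ".../kube-apiserver:v1.30.0" -> "kube-apiserver-v1.30.0.tar"
tar_name() {
    printf '%s.tar\n' "$(printf '%s' "${1##*/}" | tr ':' '-')"
}

# On the internet-connected machine: pull and save every image.
save_images() {
    local img
    for img in $IMAGES; do
        $DOCKER pull "$img" && $DOCKER save -o "$(tar_name "$img")" "$img" || return 1
    done
}

# On each offline machine: load every .tar archive in DIR (default: current dir).
load_images() {
    local f
    for f in "${1:-.}"/*.tar; do
        [ -e "$f" ] || continue   # no archives: the glob stays unexpanded
        $DOCKER load -i "$f" || return 1
    done
}
```

Run `save_images` on the online machine, copy the `.tar` files over, then run `load_images /path/to/archives` on each offline machine.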

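On every machine, edit /etc/docker/daemon.json to add the Docker data directory and the private registry. The registry serves plain HTTP, so it must be listed under insecure-registries. If you change data-root, do it before loading the images above: images stored under the old data root (/var/lib/docker by default) are not visible after the change.

vi /etc/docker/daemon.json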

{

"data-root": "/data/docker",

"exec-opts": ["native.cgroupdriver=systemd"],

"insecure-registries":["172.16.17.203:81", "quay.io", "k8s.gcr.io", "gcr.io"]

}
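Because every node pulls from the plain-HTTP registry, the same daemon.json is needed on each machine. A sketch that writes it non-interactively and keeps a backup; `write_daemon_json` is a hypothetical helper, and only the private registry is listed under insecure-registries (add the other entries from the file above if you need them):

```shell
# write_daemon_json REGISTRY [FILE]
# Writes the Docker daemon config (default /etc/docker/daemon.json) with the
# data root, systemd cgroup driver and REGISTRY as an insecure registry.
write_daemon_json() {
    local registry=$1 file=${2:-/etc/docker/daemon.json}
    # Keep a timestamped copy of any existing file before overwriting it.
    [ -f "$file" ] && cp -p "$file" "$file.bak-$(date +%Y%m%d%H%M%S)"
    mkdir -p "$(dirname "$file")"
    cat > "$file" <<EOF
{
  "data-root": "/data/docker",
  "exec-opts": ["native.cgroupdriver=systemd"],
  "insecure-registries": ["$registry"]
}
EOF
}
```

For example `write_daemon_json 172.16.17.203:81`. After `systemctl restart docker`, `docker info --format '{{.CgroupDriver}}'` should print `systemd`.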

systemctl daemon-reload

systemctl restart docker

Change cri-dockerd's pause image to your own:

#vi /usr/lib/systemd/system/cri-docker.service

#Change --pod-infra-container-image=registry.k8s.io/pause:3.9 to --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.9

#Restart cri-docker

systemctl daemon-reload

systemctl restart cri-docker
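The `vi` edit can also be done with `sed`, which is easier to repeat on every node. A sketch, assuming the ExecStart line already contains a `--pod-infra-container-image=` flag as set earlier; `set_pause_image` is a name introduced here:

```shell
# set_pause_image IMAGE [UNIT_FILE]
# Replaces the value of --pod-infra-container-image on the ExecStart line,
# whatever it currently is (default unit: the packaged cri-docker.service).
set_pause_image() {
    local image=$1 unit=${2:-/usr/lib/systemd/system/cri-docker.service}
    sed -i "s|--pod-infra-container-image=[^ ]*|--pod-infra-container-image=$image|" "$unit"
    # Fail if the flag was not present, since sed silently changes nothing then.
    grep -qF -- "--pod-infra-container-image=$image" "$unit"
}
```

For example `set_pause_image registry.aliyuncs.com/google_containers/pause:3.9`, followed by `systemctl daemon-reload` and `systemctl restart cri-docker`.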

6.1 Install the master node (k8s-master)

Run on the master node k8s-master:

kubeadm init --kubernetes-version=v1.30.0 --pod-network-cidr=10.244.0.0/16 --apiserver-advertise-address=172.16.17.203 --cri-socket unix:///var/run/cri-dockerd.sock --image-repository registry.aliyuncs.com/google_containers --upload-certs

Set up kubectl access for the current user, then check the node:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

kubectl get node
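The same init options can be kept in a kubeadm configuration file, which also sets kubelet's cgroup driver in one place. A sketch using the `kubeadm.k8s.io/v1beta3` API that kubeadm 1.30 reads; the values are copied from the command above, and `write_kubeadm_config` is a hypothetical helper:

```shell
# write_kubeadm_config [FILE]: write a kubeadm config matching the init flags
# used above (advertise address, CRI socket, version, mirror, pod CIDR).
write_kubeadm_config() {
    local file=${1:-kubeadm-config.yaml}
    cat > "$file" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 172.16.17.203
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///var/run/cri-dockerd.sock
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: v1.30.0
imageRepository: registry.aliyuncs.com/google_containers
networking:
  podSubnet: 10.244.0.0/16
---
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
cgroupDriver: systemd
EOF
}
```

Then run `kubeadm init --config kubeadm-config.yaml --upload-certs`. Use it instead of the flag form, not in addition to it: kubeadm refuses to mix most of those flags with `--config`.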

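On the other nodes, run the join command printed at the end of kubeadm init, with --cri-socket added (the token and hash below are from the author's cluster; use your own):

kubeadm join 172.16.17.203:6443 --token 2ml2ro.adrzs4t9zq1yc51e --discovery-token-ca-cert-hash sha256:984cba7d5ba30dedf3672db51cecc7b21eec744ac87edf706becb7ffe6d41c21 --cri-socket unix:///var/run/cri-dockerd.sock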
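Join tokens expire (after 24 hours by default), and the join command kubeadm prints does not include the cri-dockerd socket. A sketch that regenerates the command on the master and appends `--cri-socket`; `KUBEADM`, `CRI_SOCKET` and `print_join_command` are names introduced for this example:

```shell
# Overridable so the function can be exercised without a real cluster.
KUBEADM=${KUBEADM:-kubeadm}
CRI_SOCKET=unix:///var/run/cri-dockerd.sock

# print_join_command: create a new token and print the worker join command
# with the cri-dockerd socket appended.
print_join_command() {
    local cmd
    cmd=$($KUBEADM token create --print-join-command) || return 1
    printf '%s --cri-socket %s\n' "$cmd" "$CRI_SOCKET"
}
```

Run `print_join_command` on the master and paste its output on the worker node.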

Install the Calico network plugin

docker pull docker.io/calico/node:v3.27.3

docker pull docker.io/calico/kube-controllers:v3.27.3

docker pull docker.io/calico/cni:v3.27.3

docker save -o calico-node.tar docker.io/calico/node:v3.27.3

docker save -o calico-kube-controllers.tar docker.io/calico/kube-controllers:v3.27.3

docker save -o calico-cni.tar docker.io/calico/cni:v3.27.3

docker load -i calico-cni.tar

docker load -i calico-kube-controllers.tar

docker load -i calico-node.tar

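# If pulling these images is difficult, download release-v3.27.3.tgz from https://github.com/projectcalico/calico/releases/tag/v3.27.3, extract it, and find the three images in its image directory

Download calico.yaml: https://github.com/projectcalico/calico/blob/v3.27.3/manifests/calico.yaml (use GitHub's Raw view to get the file itself rather than the HTML page)

Upload calico.yaml to the master node.

Because docker.io is hard to reach from mainland China, replace every image reference of the form image: docker.io/calico/xxxxx with your own registry address.

Point the images at the three copies in the 172.16.17.203:81 registry: `172.16.17.203:81/calico/node:v3.27.3,172.16.17.203:81/calico/kube-controllers:v3.27.3,172.16.17.203:81/calico/cni:v3.27.3`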

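Set the pod network CIDR to the same value as --pod-network-cidr in kubeadm init (in the stock calico.yaml these two lines are commented out; uncomment them). It must not overlap the host network 172.16.17.0/24:

- name: CALICO_IPV4POOL_CIDR

value: "10.244.0.0/16"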
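Both calico.yaml edits can be scripted. A sketch that assumes the stock v3.27.3 manifest, where image lines start with `image: docker.io/calico/` and the CIDR lines are commented out as `# - name: CALICO_IPV4POOL_CIDR` and `#   value: "192.168.0.0/16"`; check your copy before relying on these exact patterns. `patch_calico` is a hypothetical helper:

```shell
# patch_calico FILE REGISTRY CIDR
# 1) points docker.io/calico images at REGISTRY/calico
# 2) uncomments CALICO_IPV4POOL_CIDR and sets it to CIDR, keeping indentation
patch_calico() {
    local file=$1 registry=$2 cidr=$3
    sed -i \
        -e "s|image: docker.io/calico/|image: $registry/calico/|g" \
        -e 's|# - name: CALICO_IPV4POOL_CIDR|- name: CALICO_IPV4POOL_CIDR|' \
        -e "s|#   value: \"192.168.0.0/16\"|  value: \"$cidr\"|" \
        "$file"
}
```

For example `patch_calico calico.yaml 172.16.17.203:81 10.244.0.0/16`, then review the `image:` and `CALICO_IPV4POOL_CIDR` lines before applying.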

This guide uses the registry image as its private image registry:

docker pull registry.aliyuncs.com/google_containers/registry

It can also be saved to a tar archive and carried to the offline network:

docker save -o registry.tar registry.aliyuncs.com/google_containers/registry:latest

Then load it and start the registry on port 81:

docker load -i registry.tar

docker run -d --name registry --restart=always -v /data/registry-data:/var/lib/registry -p 81:5000 registry.aliyuncs.com/google_containers/registry

Edit /usr/lib/systemd/system/cri-docker.service and change --pod-infra-container-image=registry.k8s.io/pause:3.9 to --pod-infra-container-image=172.16.17.xxx:81/pause:3.9

docker tag calico/node:v3.27.3 172.16.17.203:81/calico/node:v3.27.3

docker tag calico/kube-controllers:v3.27.3 172.16.17.203:81/calico/kube-controllers:v3.27.3

docker tag docker.io/calico/cni:v3.27.3 172.16.17.203:81/calico/cni:v3.27.3

docker tag registry.aliyuncs.com/google_containers/kube-apiserver:v1.30.0 172.16.17.203:81/kube-apiserver:v1.30.0

docker tag registry.aliyuncs.com/google_containers/kube-controller-manager:v1.30.0 172.16.17.203:81/kube-controller-manager:v1.30.0

docker tag registry.aliyuncs.com/google_containers/kube-scheduler:v1.30.0 172.16.17.203:81/kube-scheduler:v1.30.0

docker tag registry.aliyuncs.com/google_containers/kube-proxy:v1.30.0 172.16.17.203:81/kube-proxy:v1.30.0

docker tag registry.aliyuncs.com/google_containers/coredns:v1.11.1 172.16.17.203:81/coredns:v1.11.1

docker tag registry.aliyuncs.com/google_containers/pause:3.9 172.16.17.203:81/pause:3.9

docker tag registry.aliyuncs.com/google_containers/etcd:3.5.12-0 172.16.17.203:81/etcd:3.5.12-0

docker push 172.16.17.203:81/calico/node:v3.27.3

docker push 172.16.17.203:81/calico/kube-controllers:v3.27.3

docker push 172.16.17.203:81/calico/cni:v3.27.3

docker push 172.16.17.203:81/kube-apiserver:v1.30.0

docker push 172.16.17.203:81/kube-controller-manager:v1.30.0

docker push 172.16.17.203:81/kube-scheduler:v1.30.0

docker push 172.16.17.203:81/kube-proxy:v1.30.0

docker push 172.16.17.203:81/coredns:v1.11.1

docker push 172.16.17.203:81/pause:3.9

docker push 172.16.17.203:81/etcd:3.5.12-0
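The tag and push commands map each public name onto the private registry in a fixed way. A sketch of that mapping as a loop; `REGISTRY`, `private_ref` and `push_images` are names introduced here, and the stripped prefixes are the two used in this guide:

```shell
REGISTRY=${REGISTRY:-172.16.17.203:81}
# Set DOCKER=echo to print the commands instead of running them.
DOCKER=${DOCKER:-docker}

# private_ref IMAGE: map a public image name to its name in $REGISTRY,
# e.g. docker.io/calico/node:v3.27.3 -> 172.16.17.203:81/calico/node:v3.27.3
private_ref() {
    local ref=$1
    ref=${ref#registry.aliyuncs.com/google_containers/}
    ref=${ref#docker.io/}
    printf '%s/%s\n' "$REGISTRY" "$ref"
}

# push_images IMAGE...: tag each image with its private name and push it.
push_images() {
    local img target
    for img in "$@"; do
        target=$(private_ref "$img")
        $DOCKER tag "$img" "$target" && $DOCKER push "$target" || return 1
    done
}
```

For example `push_images docker.io/calico/node:v3.27.3 registry.aliyuncs.com/google_containers/pause:3.9`. Afterwards, `curl http://172.16.17.203:81/v2/_catalog` lists the repositories the registry holds.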

Pull on the worker nodes:

docker pull 172.16.17.203:81/kube-apiserver:v1.30.0

docker pull 172.16.17.203:81/kube-controller-manager:v1.30.0

docker pull 172.16.17.203:81/kube-scheduler:v1.30.0

docker pull 172.16.17.203:81/kube-proxy:v1.30.0

docker pull 172.16.17.203:81/coredns:v1.11.1

docker pull 172.16.17.203:81/pause:3.9

docker pull 172.16.17.203:81/etcd:3.5.12-0

docker pull 172.16.17.203:81/calico/node:v3.27.3

docker pull 172.16.17.203:81/calico/kube-controllers:v3.27.3

docker pull 172.16.17.203:81/calico/cni:v3.27.3

Finally, apply Calico:

kubectl apply -f calico.yaml

Restart cri-docker:

systemctl daemon-reload

systemctl restart cri-docker

Check the nodes (they become Ready once Calico is running):

kubectl get node

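Extend the certificate validity period

First check the current expiry dates. Certificates issued by kubeadm are valid for one year, while the CA certificates are valid for ten: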

openssl x509 -in /etc/kubernetes/pki/apiserver.crt -noout -text | grep Not

Not Before: Apr 22 14:09:36 2024 GMT

Not After : Apr 22 14:14:36 2025 GMT

openssl x509 -in /etc/kubernetes/pki/apiserver-etcd-client.crt -noout -text |grep Not

Not Before: Apr 22 14:09:36 2024 GMT

Not After : Apr 22 14:14:36 2025 GMT

openssl x509 -in /etc/kubernetes/pki/front-proxy-ca.crt -noout -text |grep Not

Not Before: Apr 22 14:09:36 2024 GMT

Not After : Apr 20 14:14:36 2034 GMT
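Rather than reading the dates by eye, `openssl x509 -checkend` reports whether a certificate expires within a given number of seconds. A sketch that flags kubeadm certificates expiring within N days; the function names are hypothetical, and on the master `kubeadm certs check-expiration` gives a similar report:

```shell
# expires_within CERT DAYS: succeed if CERT expires within DAYS days.
# openssl x509 -checkend N exits non-zero when the cert WILL expire within N seconds.
expires_within() {
    [ -r "$1" ] || return 2
    ! openssl x509 -checkend "$(( $2 * 86400 ))" -noout -in "$1" >/dev/null
}

# check_kube_certs [PKI_DIR] [DAYS]: list certificates under PKI_DIR
# (default /etc/kubernetes/pki, including etcd/) that expire within DAYS (default 30).
check_kube_certs() {
    local dir=${1:-/etc/kubernetes/pki} days=${2:-30} crt
    for crt in "$dir"/*.crt "$dir"/etcd/*.crt; do
        [ -e "$crt" ] || continue
        if expires_within "$crt" "$days"; then
            echo "EXPIRING: $crt $(openssl x509 -enddate -noout -in "$crt")"
        fi
    done
}
```

Before the renewal below, `check_kube_certs /etc/kubernetes/pki 400` should list the one-year certificates; afterwards it should print nothing.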

Upload update-kubeadm-cert.sh (listed below) to the master node and run it:

chmod +x update-kubeadm-cert.sh

./update-kubeadm-cert.sh all

#!/bin/bash
set -o errexit
set -o pipefail
# set -o xtrace

log::err() {
  printf "[$(date +'%Y-%m-%dT%H:%M:%S.%N%z')]: \033[31mERROR: \033[0m$@\n"
}

log::info() {
  printf "[$(date +'%Y-%m-%dT%H:%M:%S.%N%z')]: \033[32mINFO: \033[0m$@\n"
}

log::warning() {
  printf "[$(date +'%Y-%m-%dT%H:%M:%S.%N%z')]: \033[33mWARNING: \033[0m$@\n"
}

check_file() {
  if [[ ! -r ${1} ]]; then
    log::err "cannot find ${1}"
    exit 1
  fi
}

# get x509v3 subject alternative name from the old certificate
cert::get_subject_alt_name() {
  local cert=${1}.crt
  check_file "${cert}"
  local alt_name=$(openssl x509 -text -noout -in ${cert} | grep -A1 'Alternative' | tail -n1 | sed 's/[[:space:]]*Address//g')
  printf "${alt_name}\n"
}

# get subject from the old certificate
cert::get_subj() {
  local cert=${1}.crt
  check_file "${cert}"
  local subj=$(openssl x509 -text -noout -in ${cert} | grep "Subject:" | sed 's/Subject:/\//g;s/\,/\//;s/[[:space:]]//g')
  printf "${subj}\n"
}

cert::backup_file() {
  local file=${1}
  if [[ ! -e ${file}.old-$(date +%Y%m%d) ]]; then
    cp -rp ${file} ${file}.old-$(date +%Y%m%d)
    log::info "backup ${file} to ${file}.old-$(date +%Y%m%d)"
  else
    log::warning "skip backup, ${file}.old-$(date +%Y%m%d) already exists"
  fi
}

# generate certificate with client, server or peer
# Args:
#   $1 (the name of certificate)
#   $2 (the type of certificate, must be one of client, server, peer)
#   $3 (the subject of certificates)
#   $4 (the validity of certificates) (days)
#   $5 (the x509v3 subject alternative name of certificate when the type of certificate is server or peer)
cert::gen_cert() {
  local cert_name=${1}
  local cert_type=${2}
  local subj=${3}
  local cert_days=${4}
  local alt_name=${5}
  local cert=${cert_name}.crt
  local key=${cert_name}.key
  local csr=${cert_name}.csr
  local csr_conf="distinguished_name = dn\n[dn]\n[v3_ext]\nkeyUsage = critical, digitalSignature, keyEncipherment\n"

  check_file "${key}"
  check_file "${cert}"

  # backup certificate when certificate not in ${kubeconf_arr[@]}
  # kubeconf_arr=("controller-manager.crt" "scheduler.crt" "admin.crt" "kubelet.crt")
  # if [[ ! "${kubeconf_arr[@]}" =~ "${cert##*/}" ]]; then
  #   cert::backup_file "${cert}"
  # fi

  # Each openssl command below continues onto the next line with "\",
  # so there must be no blank line between the two halves.
  case "${cert_type}" in
    client)
      openssl req -new -key ${key} -subj "${subj}" -reqexts v3_ext \
        -config <(printf "${csr_conf} extendedKeyUsage = clientAuth\n") -out ${csr}
      openssl x509 -in ${csr} -req -CA ${CA_CERT} -CAkey ${CA_KEY} -CAcreateserial -extensions v3_ext \
        -extfile <(printf "${csr_conf} extendedKeyUsage = clientAuth\n") -days ${cert_days} -out ${cert}
      log::info "generated ${cert}"
      ;;
    server)
      openssl req -new -key ${key} -subj "${subj}" -reqexts v3_ext \
        -config <(printf "${csr_conf} extendedKeyUsage = serverAuth\nsubjectAltName = ${alt_name}\n") -out ${csr}
      openssl x509 -in ${csr} -req -CA ${CA_CERT} -CAkey ${CA_KEY} -CAcreateserial -extensions v3_ext \
        -extfile <(printf "${csr_conf} extendedKeyUsage = serverAuth\nsubjectAltName = ${alt_name}\n") -days ${cert_days} -out ${cert}
      log::info "generated ${cert}"
      ;;
    peer)
      openssl req -new -key ${key} -subj "${subj}" -reqexts v3_ext \
        -config <(printf "${csr_conf} extendedKeyUsage = serverAuth, clientAuth\nsubjectAltName = ${alt_name}\n") -out ${csr}
      openssl x509 -in ${csr} -req -CA ${CA_CERT} -CAkey ${CA_KEY} -CAcreateserial -extensions v3_ext \
        -extfile <(printf "${csr_conf} extendedKeyUsage = serverAuth, clientAuth\nsubjectAltName = ${alt_name}\n") -days ${cert_days} -out ${cert}
      log::info "generated ${cert}"
      ;;
    *)
      log::err "unknown, unsupported certs type: ${cert_type}, supported type: client, server, peer"
      exit 1
      ;;
  esac

  rm -f ${csr}
}

cert::update_kubeconf() {
  local cert_name=${1}
  local kubeconf_file=${cert_name}.conf
  local cert=${cert_name}.crt
  local key=${cert_name}.key

  # generate certificate
  check_file ${kubeconf_file}
  # get the key from the old kubeconf
  grep "client-key-data" ${kubeconf_file} | awk '{print $2}' | base64 -d > ${key}
  # get the old certificate from the old kubeconf
  grep "client-certificate-data" ${kubeconf_file} | awk '{print $2}' | base64 -d > ${cert}
  # get subject from the old certificate
  local subj=$(cert::get_subj ${cert_name})
  cert::gen_cert "${cert_name}" "client" "${subj}" "${CAER_DAYS}"
  # get certificate base64 code
  local cert_base64=$(base64 -w 0 ${cert})

  # backup kubeconf
  # cert::backup_file "${kubeconf_file}"

  # set certificate base64 code to kubeconf
  sed -i 's/client-certificate-data:.*/client-certificate-data: '${cert_base64}'/g' ${kubeconf_file}
  log::info "generated new ${kubeconf_file}"
  rm -f ${cert}
  rm -f ${key}

  # set config for kubectl
  if [[ ${cert_name##*/} == "admin" ]]; then
    mkdir -p ~/.kube
    cp -fp ${kubeconf_file} ~/.kube/config
    log::info "copy the admin.conf to ~/.kube/config for kubectl"
  fi
}

cert::update_etcd_cert() {
  PKI_PATH=${KUBE_PATH}/pki/etcd
  CA_CERT=${PKI_PATH}/ca.crt
  CA_KEY=${PKI_PATH}/ca.key
  check_file "${CA_CERT}"
  check_file "${CA_KEY}"

  # generate etcd server certificate
  # /etc/kubernetes/pki/etcd/server
  CART_NAME=${PKI_PATH}/server
  subject_alt_name=$(cert::get_subject_alt_name ${CART_NAME})
  cert::gen_cert "${CART_NAME}" "peer" "/CN=etcd-server" "${CAER_DAYS}" "${subject_alt_name}"

  # generate etcd peer certificate
  # /etc/kubernetes/pki/etcd/peer
  CART_NAME=${PKI_PATH}/peer
  subject_alt_name=$(cert::get_subject_alt_name ${CART_NAME})
  cert::gen_cert "${CART_NAME}" "peer" "/CN=etcd-peer" "${CAER_DAYS}" "${subject_alt_name}"

  # generate etcd healthcheck-client certificate
  # /etc/kubernetes/pki/etcd/healthcheck-client
  CART_NAME=${PKI_PATH}/healthcheck-client
  cert::gen_cert "${CART_NAME}" "client" "/O=system:masters/CN=kube-etcd-healthcheck-client" "${CAER_DAYS}"

  # generate apiserver-etcd-client certificate (still signed by the etcd CA)
  # /etc/kubernetes/pki/apiserver-etcd-client
  check_file "${CA_CERT}"
  check_file "${CA_KEY}"
  PKI_PATH=${KUBE_PATH}/pki
  CART_NAME=${PKI_PATH}/apiserver-etcd-client
  cert::gen_cert "${CART_NAME}" "client" "/O=system:masters/CN=kube-apiserver-etcd-client" "${CAER_DAYS}"

  # restart etcd
  docker ps | awk '/k8s_etcd/{print$1}' | xargs -r -I '{}' docker restart {} || true
  log::info "restarted etcd"
}

cert::update_master_cert() {
  PKI_PATH=${KUBE_PATH}/pki
  CA_CERT=${PKI_PATH}/ca.crt
  CA_KEY=${PKI_PATH}/ca.key
  check_file "${CA_CERT}"
  check_file "${CA_KEY}"

  # generate apiserver server certificate
  # /etc/kubernetes/pki/apiserver
  CART_NAME=${PKI_PATH}/apiserver
  subject_alt_name=$(cert::get_subject_alt_name ${CART_NAME})
  cert::gen_cert "${CART_NAME}" "server" "/CN=kube-apiserver" "${CAER_DAYS}" "${subject_alt_name}"

  # generate apiserver-kubelet-client certificate
  # /etc/kubernetes/pki/apiserver-kubelet-client
  CART_NAME=${PKI_PATH}/apiserver-kubelet-client
  cert::gen_cert "${CART_NAME}" "client" "/O=system:masters/CN=kube-apiserver-kubelet-client" "${CAER_DAYS}"

  # generate kubeconf for controller-manager, scheduler, kubectl and kubelet
  # /etc/kubernetes/controller-manager,scheduler,admin,kubelet.conf
  cert::update_kubeconf "${KUBE_PATH}/controller-manager"
  cert::update_kubeconf "${KUBE_PATH}/scheduler"
  cert::update_kubeconf "${KUBE_PATH}/admin"

  # check kubelet.conf
  # https://github.com/kubernetes/kubeadm/issues/1753
  set +e
  grep kubelet-client-current.pem /etc/kubernetes/kubelet.conf > /dev/null 2>&1
  kubelet_cert_auto_update=$?
  set -e
  if [[ "$kubelet_cert_auto_update" == "0" ]]; then
    log::warning "does not need to update kubelet.conf"
  else
    cert::update_kubeconf "${KUBE_PATH}/kubelet"
  fi

  # generate front-proxy-client certificate
  # use front-proxy-client ca
  CA_CERT=${PKI_PATH}/front-proxy-ca.crt
  CA_KEY=${PKI_PATH}/front-proxy-ca.key
  check_file "${CA_CERT}"
  check_file "${CA_KEY}"
  CART_NAME=${PKI_PATH}/front-proxy-client
  cert::gen_cert "${CART_NAME}" "client" "/CN=front-proxy-client" "${CAER_DAYS}"

  # restart apiserver, controller-manager, scheduler and kubelet
  docker ps | awk '/k8s_kube-apiserver/{print$1}' | xargs -r -I '{}' docker restart {} || true
  log::info "restarted kube-apiserver"
  docker ps | awk '/k8s_kube-controller-manager/{print$1}' | xargs -r -I '{}' docker restart {} || true
  log::info "restarted kube-controller-manager"
  docker ps | awk '/k8s_kube-scheduler/{print$1}' | xargs -r -I '{}' docker restart {} || true
  log::info "restarted kube-scheduler"
  systemctl restart kubelet
  log::info "restarted kubelet"
}

main() {
  local node_type=$1
  KUBE_PATH=/etc/kubernetes
  CAER_DAYS=3650

  # backup $KUBE_PATH to $KUBE_PATH.old-$(date +%Y%m%d)
  cert::backup_file "${KUBE_PATH}"

  case ${node_type} in
    etcd)
      # update etcd certificates
      cert::update_etcd_cert
      ;;
    master)
      # update master certificates and kubeconf
      cert::update_master_cert
      ;;
    all)
      # update etcd certificates
      cert::update_etcd_cert
      # update master certificates and kubeconf
      cert::update_master_cert
      ;;
    *)
      log::err "unknown, unsupported certs type: ${node_type}, supported type: all, etcd, master"
      printf "Documentation: https://github.com/yuyicai/update-kube-cert
  example:
    '\033[32m./update-kubeadm-cert.sh all\033[0m' update all etcd certificates, master certificates and kubeconf
      /etc/kubernetes
      ├── admin.conf
      ├── controller-manager.conf
      ├── scheduler.conf
      ├── kubelet.conf
      └── pki
          ├── apiserver.crt
          ├── apiserver-etcd-client.crt
          ├── apiserver-kubelet-client.crt
          ├── front-proxy-client.crt
          └── etcd
              ├── healthcheck-client.crt
              ├── peer.crt
              └── server.crt

    '\033[32m./update-kubeadm-cert.sh etcd\033[0m' update only etcd certificates
      /etc/kubernetes
      └── pki
          ├── apiserver-etcd-client.crt
          └── etcd
              ├── healthcheck-client.crt
              ├── peer.crt
              └── server.crt

    '\033[32m./update-kubeadm-cert.sh master\033[0m' update only master certificates and kubeconf
      /etc/kubernetes
      ├── admin.conf
      ├── controller-manager.conf
      ├── scheduler.conf
      ├── kubelet.conf
      └── pki
          ├── apiserver.crt
          ├── apiserver-kubelet-client.crt
          └── front-proxy-client.crt
"
      exit 1
      ;;
  esac
}

main "$@"

On the master node, check the certificate validity again:

openssl x509 -in /etc/kubernetes/pki/apiserver.crt -noout -text | grep Not

Not Before: Apr 22 15:05:11 2024 GMT

Not After : Apr 20 15:05:11 2034 GMT

openssl x509 -in /etc/kubernetes/pki/apiserver-etcd-client.crt -noout -text |grep Not

Not Before: Apr 22 15:05:11 2024 GMT

Not After : Apr 20 15:05:11 2034 GMT

openssl x509 -in /etc/kubernetes/pki/front-proxy-ca.crt -noout -text |grep Not

Not Before: Apr 22 14:09:36 2024 GMT

Not After : Apr 20 14:14:36 2034 GMT

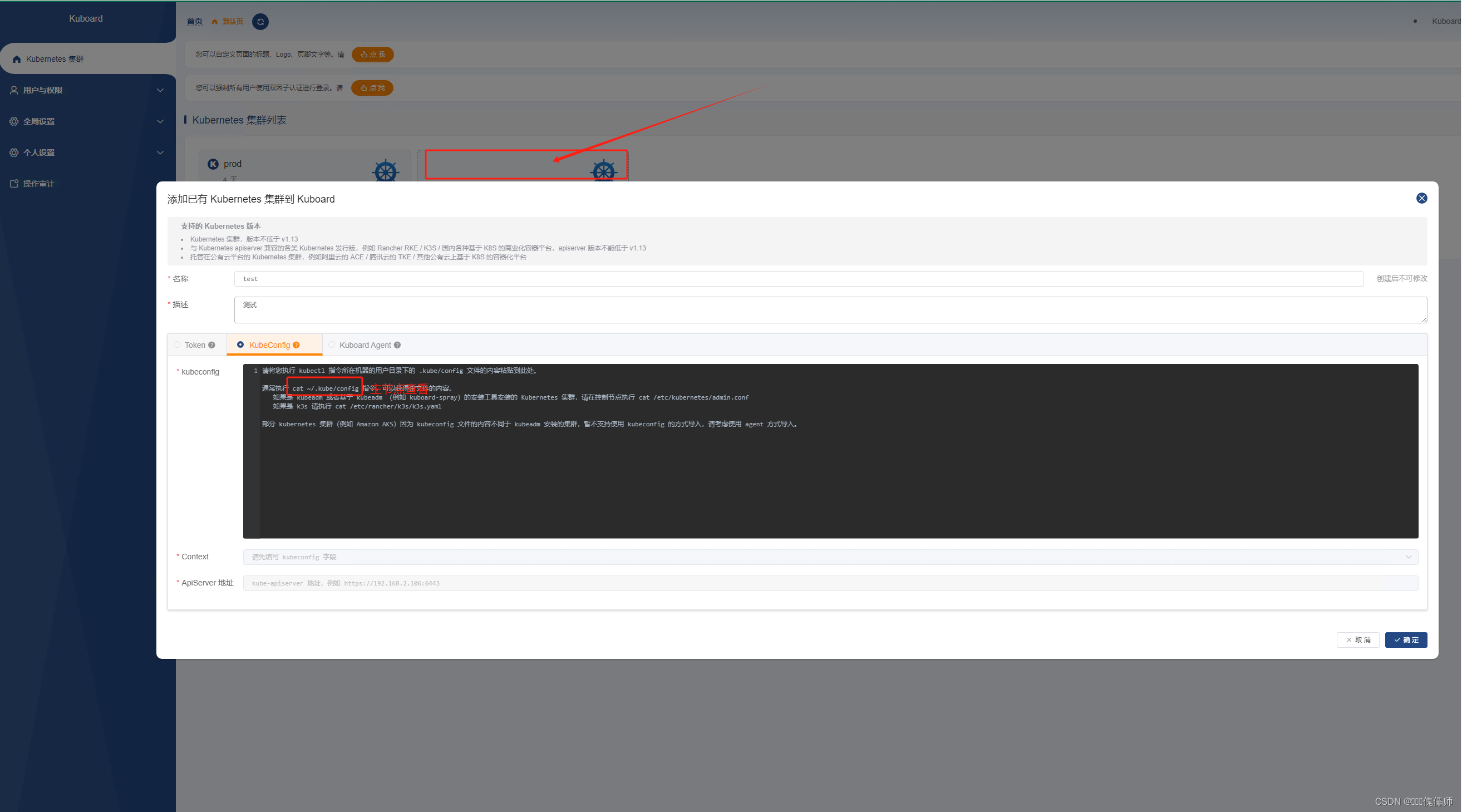

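Install Kuboard v3

docker pull registry.aliyuncs.com/google_containers/eipwork/kuboard:v3

Run Kuboard. The image name in docker run must match an image present locally, so tag the pulled image first:

docker tag registry.aliyuncs.com/google_containers/eipwork/kuboard:v3 eipwork/kuboard:v3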

sudo docker run -d --restart=unless-stopped --name=kuboard -p 31000:80/tcp -p 10081:10081/tcp -e KUBOARD_ENDPOINT="http://172.16.17.xxx:31000" -e KUBOARD_AGENT_SERVER_TCP_PORT="10081" -v /root/kuboard-data:/data eipwork/kuboard:v3

Copy, paste, and you are done.
