openai-gpt

重点 (Top highlight)

Copycat Letter-String Analogies

模仿字母字符串类比

In the early 1980s, Douglas Hofstadter introduced the “Copycat” letter-string domain for analogy-making. Here are some sample analogy problems:

在1980年代初期,道格拉斯·霍夫斯塔特(Douglas Hofstadter)引入了“类比”字母字符串域进行类比。 以下是一些类比问题示例:

If the string abc changes to the string abd, what does the string pqr change to?

如果字符串abc更改为字符串abd ,则字符串pqr更改为什么?

If the string abc changes to the string abd, what does the string ppqqrr change to?

如果字符串abc更改为字符串abd ,则字符串ppqqrr更改为什么?

If the string abc changes to the string abd, what does the string mrrjjj change to?

如果字符串abc更改为字符串abd ,则字符串mrrjjj更改为什么?

If the string abc changes to the string abd, what does the string xyz change to?

如果字符串abc更改为字符串abd ,则字符串xyz更改为什么?

If the string axbxcx changes to the string abc, what does the string xpxqxr change to?

如果字符串axbxcx更改为字符串abc ,则字符串xpxqxr更改为什么?

The purpose of this “microworld” (as it was called back then) is to model the kinds of analogies humans make in general. Each string in an analogy problem represents a miniature “situation”, with objects, attributes, relationships, groupings, and actions. Figuring out answers to these problems, it was claimed, involves at least some of the mechanisms of more general analogy-making, such as perceiving abstract roles and correspondences between roles, ignoring irrelevant aspects, and mapping the gist of one situation to a different situation. In Chapter 24 of his book Metamagical Themas, Hofstadter wrote a long, incisive analysis of human analogy and how the Copycat domains captures some key aspects of it. The letter-string domain is deceptively simple — these problems can capture a large range of complex issues in recognizing abstract similarity. Hofstadter and his students (myself among them) came up with thousands of different letter string analogies, some of them extraordinarily subtle. A small collection of examples is given at this link.

这个“微观世界”(当时称为“微观世界”)的目的是模拟人类通常做出的类比。 类比问题中的每个字符串都代表一个微型“情况”,其中包含对象,属性,关系,分组和动作。 据称,找出这些问题的答案至少涉及一些更一般类比的机制,例如感知抽象角色和角色之间的对应关系,忽略不相关的方面以及将一种情况的要旨映射到另一种情况。 霍夫施塔特(Hofstadter)在他的著作《 超魔术的主题 》( Metamagical Themas)的第24章中,对人类类比以及山寨领域如何捕捉其中的一些关键方面进行了详尽而深入的分析。 字母字符串域看似简单-这些问题在识别抽象相似性时可以捕获大量复杂问题。 霍夫施塔特和他的学生们(包括我本人)想到了成千上万种不同的字符串类比,其中有些极其微妙。 此链接提供了一些示例。

The Copycat Program

模仿程序

I started working as a research assistant for Douglas Hofstadter at MIT in 1983. In 1984 I followed him to the University of Michigan and started graduate school there. My PhD project was to implement a program (called Copycat, naturally) that could solve letter-string analogy problems in a cognitively plausible manner. The goal wasn’t to build a letter-string-analogy-solver, per se, but to develop an architecture that implemented some of the general mechanisms of analogy-making, and to test it on letter-string problems. I won’t describe the details of this program here. I wrote a whole book about it, and also Hofstadter and I wrote a long article detailing the architecture, its connections to theories about human perception and analogy-making, and the results we obtained. In the end, the program was able to solve a wide array of letter-string analogies (though it was far from what humans could do in this domain). I also did extensive surveys asking people to solve these problems, and found that the program’s preferences for answers largely matched those of the people I surveyed. Later, Jim Marshall (another student of Hofstadter) extended my program to create Metacat, which could solve an even wider array of letter-string problems, and was able to observe its own problem-solving (hence the “meta” in its name). Other projects from Hofstadter’s research group, using related architectures, are described in Hofstadter’s book Fluid Concepts and Creative Analogies.

我从1983年开始在MIT担任Douglas Hofstadter的研究助理。1984年,我跟随他来到密歇根大学,并在那里开始了研究生院。 我的博士项目是实施一个程序(自然而然地称为Copycat ),该程序可以以认知上合理的方式解决字母串类比问题。 目的不是要本质上构建字母字符串模拟解决方案,而是要开发一种实现一些类比生成通用机制的体系结构,并在字母字符串问题上进行测试。 我将不在这里描述该程序的详细信息。 我写了一本关于它的整本书 ,还写了一篇很长的文章,详细介绍了体系结构,体系结构与人类感知和类比理论之间的联系以及获得的结果。 最终,该程序能够解决各种各样的字母字符串类比(尽管与人类在该领域的能力相去甚远)。 我还进行了广泛的调查,要求人们解决这些问题,发现该程序对答案的偏好在很大程度上与我所调查的人们相匹配。 后来,吉姆·马歇尔(Hofstadter的另一个学生)扩展了我的程序,以创建Metacat ,该Metacat可以解决更广泛的字母字符串问题,并且能够观察到自己的解决问题的能力(因此以“ meta”命名) 。 Hofstadter的研究小组使用相关架构的其他项目在Hofstadter的《 流体概念和创造力类比》一书中进行了描述。

GPT-3

GPT-3

GPT-3 (“Generative Pre-Trained Transformer 3) is a language model — a program that is, given an input text, trained to predict the next word or words. GPT-3 is one of the largest such models, having been trained on about 45 terabytes of text data, taken from thousands of web sites such as Wikipedia, plus online books and many other sources. GPT-3 uses a deep-neural-network “transformer” architecture, described in a technical paper written by its creators at the company OpenAI. (I wrote a non-technical essay about language models that focused on GPT-2, which was GPT-3’s predecessor.)

GPT-3(“生成式预训练变压器3”)是一种语言模型 -一种程序,在输入文本的情况下,经过训练可以预测下一个或多个单词。 GPT-3是此类模型中最大的模型之一,它接受了大约45 TB的文本数据的培训,这些数据来自Wikipedia等数千个网站,以及在线书籍和许多其他来源。 GPT-3使用深层神经网络“变压器”架构,该架构在其OpenAI公司的创建者撰写的技术论文中进行了描述 。 (我写了一篇非技术文章,内容是关于GPT-2的语言模型,这是GPT-3的前身。)

To interact with GPT-3, you give it a “prompt” — that is, some text — and it generates a continuation of your prompt. For example, I gave it the following prompt:

要与GPT-3进行交互,您可以给它一个“提示”(即一些文本),它会生成提示的延续。 例如,我给它以下提示:

Q: What is the best horror movie ever made?

问:有史以来最好的恐怖电影是什么?

GPT-3 answered my question, and then asked and answered two more of its own questions (I put its generated text in italics):

GPT-3回答了我的问题,然后又问又回答了两个自己的问题(我将其生成的文本用斜体表示):

A: The Exorcist.

答:驱魔人。

Q: Who is the best horror actor?

问:谁是最恐怖的演员?

A: Boris Karloff.

答:鲍里斯·卡洛夫(Boris Karloff)。

Q: Who is the best horror actress?

问:谁是最恐怖的女演员?

A: Fay Wray

答:费雷

Many articles and social media posts have given examples of GPT-3’s extraordinarily human-like text, its seemingly endless knowledge of (mostly Western) culture, and even its ability to create computer programs just by being given a few input-output examples. My purpose in this article is not to review the success, hype, or counter-hype on GPT-3. Instead, I want to explore its ability to make Copycat letter-string analogies.

许多文章和社交媒体帖子都给出了GPT-3的非凡的类人文字示例,对(大多数是西方的)文化似乎无穷无尽的知识,甚至仅给出了一些输入输出示例就可以创建计算机程序。 我在本文中的目的不是要回顾GPT-3的成功,炒作或反炒作。 相反,我想探索其做出模仿山猫字母字符串的能力。

GPT-3 and Letter-String Analogies

GPT-3和字母字符串类比

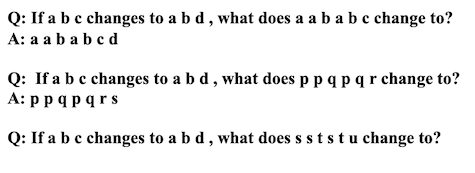

OpenAI generously granted me access to the GPT-3 API (i.e., web interface), and I immediately tried it on a few Copycat analogy problems. I did this by giving the program this kind of prompt:

OpenAI慷慨地授予我访问GPT-3 API (即Web界面)的权限,我立即在一些Copycat类比问题上尝试了它。 我通过给程序一个这样的提示来做到这一点:

I tried GPT-3 on several problems like this, with varying numbers of examples, and found that it performed abysmally. I gleefully posted the results on Twitter. Several people informed me that I needed to put in spaces between the letters in the strings, because of the way GPT-3 interprets its input. I tried this:

我尝试了GPT-3这样的几个问题,并使用了不同数量的示例,结果发现GPT-3表现不佳。 我高兴地将结果发布在Twitter上。 有几个人告诉我,由于GPT-3解释其输入的方式,我需要在字符串中的字母之间放置空格。 我尝试了这个:

Suddenly, GPT-3 was giving the right answers! I tweeted these results, with the joking aside, “Copycat is toast.”

突然,GPT-3给出了正确的答案! 我在推特上发布了这些结果,开了一个玩笑,“模仿者就是吐司。”

To my surprise, several prominent Twitter users cited my informal experiments as showing that GPT-3 had human-like cognitive abilities. One person commented “@MelMitchell1's copycat experiments gave me significant pause. Those appear to be cases where the machine is developing concepts on the fly.” Another person stated, “GPT-3 solves Copycat out of the box.”

令我惊讶的是,几个杰出的Twitter用户引用了我的非正式实验,表明GPT-3具有类似于人的认知能力。 有人评论说: “ @ MelMitchell1的模仿实验给了我很大的停顿。 这些似乎是机器在动态开发概念的情况。” 另一个人说 :“ GPT-3开箱即用地解决了模仿问题。”

Such conclusions weren’t at all justified by the scattershot tests I posted on Twitter, so I decided to give GPT-3 a more systematic test.

我在Twitter上发布的散点测试根本无法证明这样的结论,因此我决定对GPT-3进行更系统的测试。

Methodology

方法

Below I’ll give the results of the more extensive and systematic experiments I did. GPT-3 is stochastic; given a particular prompt, it doesn’t always give the same response. To account for that stochasticity, I gave it each prompt five separate times (“trials”) and recorded the response. Before each trial I refreshed the website to clear out any memory of prior trials the system might be using.

下面我将给出我所做的更广泛和系统的实验的结果。 GPT-3是随机的; 在给出特定提示时,它并不总是给出相同的响应。 为了解决这种随机性,我给了每个提示五个单独的时间(“试用”),并记录了响应。 在每次试用之前,我都刷新了网站,以清除系统可能正在使用的先前试用的所有内存。

GPT-3’s API has some parameters that need to be set: a temperature, which influences how much randomness the system uses in generating its replies (I used the default value of 0.7) and the response length, which gives approximately the number of “words” the system will generate in response to a prompt (I typically used 16, but I don’t think this parameter really affects the responses to my analogy questions.) I only recorded the first line of the response (which was usually of the form “A: [letter string]) ; I ignored any other lines it generated. For example, here is a screenshot of a typical experiment (prompt in boldface, GPT-3 response in Roman face):

GPT-3的API具有一些需要设置的参数:温度,该温度会影响系统在生成其回复时使用的随机性(我使用默认值0.7)和响应长度(大约为“字数”) ”系统将根据提示生成响应(我通常使用16,但我认为此参数并不会真正影响对类比问题的响应。)我仅记录了响应的第一行(通常采用以下形式: “ A:[字母字符串]); 我忽略了它生成的任何其他行。 例如,下面是一个典型实验的屏幕截图(黑体字提示,罗马字母GPT-3响应):

For this I would record answer i j l and ignore the rest of the generated text (which was most often GPT-3 generating the beginning of an additional analogy question to follow the pattern of the prompt).

为此,我将记录答案ijl,而忽略其余生成的文本(这通常是GPT-3生成的另一个类推问题的开头,以遵循提示的模式)。

The next sections give all the results of my numerous experiments. Since these results are rather long, you might want to skim them and skip to the Conclusions section at the end. On the other hand, if you are an analogy nerd like me, you might find the details rather interesting.

下一部分给出了我大量实验的所有结果。 由于这些结果相当长,因此您可能需要略过它们,最后跳到“结论”部分。 另一方面,如果您是像我这样的类比书呆子,您可能会发现细节很有趣。

Experiment 1: Simple alphabetic sequences

实验1:简单字母序列

- First, I tried a simple “zero-shot” experiment — that is, no “training examples”. 首先,我尝试了一个简单的“零镜头”实验-即没有“训练示例”。

Prompt:

提示:

People’s preferred answer: p q s

人们的首选答案: pqs

GPT-3’s answers (it sometimes didn’t give a letter string answer; I ignored these).

GPT-3的答案(有时没有给出字符串答案;我忽略了这些)。

In general, I found that GPT-3 cannot perform zero-shot analogy-making in this domain.

总的来说,我发现GPT-3在该领域无法进行零击类比。

2. Next, I gave it one “training example” — that is, one solved analogy problem.

2.接下来,我给它提供了一个“培训示例”,即解决了一个类比问题。

Prompt:

提示:

GPT-3 answered i j l on every trial! It looks like one-shot learning works for this problem.

GPT-3在每次审判中都回答了ijl ! 似乎一次性学习可解决此问题。

3. Let’s try to see if GPT-3 can generalize to strings of different lengths.

3.让我们尝试看看GPT-3是否可以推广到不同长度的字符串。

Prompt:

提示:

Humans will easily generalize, and answer i j k l n.

人类将很容易概括并回答ijkl n。

GPT-3’s answers:

GPT-3的答案:

So, with only one training example, GPT-3 cannot generalize to the longer string.

因此,仅以一个训练示例为例,GPT-3不能推广到更长的字符串。

4. Okay, let’s give it two training examples of different lengths.

4.好的,让我们举两个不同长度的训练示例。

Prompt:

提示:

Humans, if they can remember the alphabet, will answer r s t u v x.

如果人们能记住字母,他们将回答rstuv x。

GPT-3 never got this answer; here are the answers from its five trials:

GPT-3从未得到这个答案; 以下是五项试验的答案:

Even with two training examples, GPT-3 cannot generalize to the longer string.

即使有两个训练示例,GPT-3也不能推广到更长的字符串。

5. Let’s be generous and give it three training examples of different lengths.

5.让我们大方,给它三个不同长度的训练示例。

Prompt:

提示:

We’re looking for answer e f g h i j l.

我们正在寻找答案efghij l。

And indeed, now GPT-3 gives answer e f g h i j l on all five trials!

实际上,现在GPT-3在所有五个试验中都给出了答案efghijl !

Experiment 2: Alphabetic sequences with grouping

实验2:按字母顺序排列的分组

1. Let’s try a zero shot experiment that requires grouping letters:

1.让我们尝试一项需要对字母进行分组的零击实验:

Prompt:

提示:

Humans will almost always say i i j j l l.

人类几乎总是会说艾杰尔 。

In the five trials of GPT-3, the answer was never a letter string sequence. In one trial, GPT-3 amusingly replied “It’s a trick question.” Indeed.

在GPT-3的五次试验中,答案绝不是字母序列。 在一项试验中,GPT-3有趣地回答“这是一个技巧问题”。 确实。

2. Let’s try giving GPT-3 an example.

2.让我们尝试举一个GPT-3为例。

Prompt:

提示:

This was enough: GPT-3 returns m m n n p p on each trial.

这就足够了:GPT-3在每次试验中都返回mmnnpp 。

3. But what if we ask GPT-3 to generalize to a string of a different length?

3.但是,如果我们要求GPT-3泛化为不同长度的字符串怎么办?

Prompt:

提示:

GPT-3’s answers:

GPT-3的答案:

Not very reliable; it gets the human-preferred answer q q r r s s u u on two out of five trials.

不是很可靠; 在五分之二的试验中,它得到了人类偏爱的答案qqrrssuu 。

4. Let’s try with two examples.

4.让我们尝试两个示例。

Prompt:

提示:

GPT-3’s answers:

GPT-3的答案:

Again, not reliable; the human-preferred answer, e e f f g g h h j j was returned only once in five trials.

再次,不可靠; 在五次试验中,只有人为首选的答案eeffgghhjj被返回了一次。

5. What about giving GPT-3 three examples?

5.给GPT-3三个例子怎么样?

Prompt:

提示:

GPT-3’s answers:

GPT-3的答案:

Not once did it return the human-preferred answer of r r r r s s s s u u u u . It definitely has trouble with generalization here.

它没有一次返回人们喜欢的rrrrssssuuuuu答案。 这里肯定存在泛化的麻烦。

Experiment 3: “Cleaning up” a String

实验3:“清理”字符串

Another abstract concept in the letter-string domain is the notion of “cleaning up” a string.

字母字符串领域中的另一个抽象概念是“清理”字符串的概念。

1. Here I gave GPT-3 one example.

1.在这里,我举了一个GPT-3示例。

Prompt:

提示:

GPT-3 got this one correct on three out of five trials. Here are its answers:

GPT-3在五分之三的试验中都得出了正确的结论。 这是它的答案:

2. Let’s try this with two examples.

2.让我们通过两个示例进行尝试。

Prompt:

提示:

GPT-3 nailed this one, answering x y z on all five trials.

GPT-3提出了这一要求,在所有五项试验中均回答了xyz 。

3. Now, a trickier version of “cleaning up a string”. We’ll start by giving GPT-3 two examples.

3.现在,使用了一个更复杂的“清理字符串”版本。 我们先举两个GPT-3示例。

Prompt:

提示:

Most humans would answer m n o p.

大多数人都会回答mnop 。

GPT-3 returned that answer once in five trials. Here are its answers:

GPT-3在五次试验中一次返回了该答案。 这是它的答案:

4. Let’s try this again with three examples.

4.让我们用三个例子再试一次。

Prompt:

提示:

GPT-3 did better this time, getting the “correct” answer j k l m n on four out of five trials (on one trial it answered j l m n ).

GPT-3这次表现更好,在五分之四的试验中得到了“正确”的答案jklmn (在一项试验中它回答了jlmn )。

5. Finally, I tried an example where the character to remove (here, “x”), is at the beginning of the target string.

5.最后,我尝试了一个示例,其中要删除的字符(此处为“ x”)位于目标字符串的开头。

Prompt:

提示:

GPT-3 did not get this one at all; it answered x i j k on all five trials.

GPT-3根本没有得到这一点; 它在所有五项审判中都回答了xijk 。

Experiment 4: Analogies involving abstract examples of “successorship”

实验4:类推涉及“继承”的抽象示例

In these experiments, we look at several analogies involving various abstract notions of successorship.

在这些实验中,我们看了几个涉及继承的各种抽象概念的类比。

1. First we see if GPT-3 can generalize from letter-successor to abstract “number” successor.

1.首先,我们看一下GPT-3是否可以从字母继承者推广到抽象的“数字”继承者。

Prompt:

提示:

While this is sometimes hard for people to discover, once it’s pointed out, people tend to prefer the answer j y y q q q q , that is, the number sequence 1–2–3 changes to 1–2–4.

尽管有时人们很难发现这一点,但一旦指出,人们往往更喜欢答案jyyqqqq ,即数字序列1–2–3变为1–2–4。

GPT-3 never gets this answer. Here are its responses.

GPT-3从未得到这个答案。 这是它的回应。

This generalization seems beyond GPT-3’s abilities.

这种概括似乎超出了GPT-3的能力。

2. What about creating an abstract numerical sequence?

2.如何创建抽象的数字序列?

Prompt:

提示:

Here we’re looking for b o o c c c v v v v (1–2–3–4)

在这里我们正在寻找boocccvvvv (1-2–3–4)

GPT-3’s answers:

GPT-3的答案:

GPT-3 doesn’t seem to get this concept. I tried this also with one additional example, but GPT-3 still never responded with the kind of “number sequence” displayed in the analogy.

GPT-3似乎没有这个概念。 我也用另一个示例进行了尝试,但是GPT-3仍然从不对类比中显示的“数字序列”进行响应。

3. Let’s try a different kind of abstract “successorship”.

3.让我们尝试另一种抽象的“继承”。

Prompt:

提示:

Here the idea is to parse the target string as s — s t — s t u. The “successor” of the rightmost sequence is s t u v, so we’re looking for answer s s t s t u v (“replace rightmost element with its successor”).

这里的想法是将目标字符串解析为s — st — stu 。 最右边序列的“后继”是stuv,因此我们正在寻找答案sststuv (“用后继替换最右边的元素”)。

GPT-3 got this one: it answered s s t s t u v on each trial.

GPT-3 做到了这一点:每次试验都回答了sststuv 。

4. But did it really get the concept we have in mind? Let’s test this by seeing if it can generalize to a different-length target string.

4.但这真的使我们想到了这个概念吗? 让我们测试一下是否可以推广到不同长度的目标字符串。

Prompt:

提示:

The answer we’re looking for is e e f e f g e f g h i.

我们正在寻找的答案是eefefgefghi 。

GPT-3 got this answer on four out of five trials (on one trial it responded e e f e f g h i ). Pretty good!

GPT-3在五分之四的试验中得到了这个答案(在一项试验中,它对eefefghi做出了回应)。 非常好!

Experiment 5: A letter with no successor

实验5:无后继者的来信

As a final experiment, let’s look at problems in which we try to take the successor of “z”.

作为最后的实验,让我们看一下尝试采用“ z”后继者的问题。

1. A deceptively simple problem.

1.一个看似简单的问题。

Prompt:

提示:

Most people will say x y a. This answer wasn’t available to the original Copycat program (it didn’t have the concept of a “circular” alphabet), and it would give answers like x y y; w y z; or x y y. Fortunately, Copycat was able to explain its reasoning to some extent (see references above for more details).

大多数人会说xya 。 这个答案不适用于原始的Copycat程序(它没有“圆形”字母的概念),并且会给出xyy之类的答案; 威兹 ; 或xyy 。 幸运的是,Copycat能够在某种程度上解释其原因(有关更多详细信息,请参见上面的参考资料)。

On this one, GPT-3 is all over the map.

在这一点上,GPT-3遍布整个地图。

GPT-3’s answers:

GPT-3的答案:

2. Let’s look at one more.

2.让我们再看一看。

Prompt:

提示:

GPT-3’s answered the reasonable x y z a on four out of five trials (on one trial it answered x y z a a).

GPT-3在五项试验中的四项中回答了合理的xyza (在一项试验中,它回答了xyzaa )。

Conclusions

结论

I tested GPT-3 on a set of letter-string analogy problems that focus on different kinds of abstract similarity. The program’s performance was mixed. GPT-3 was not designed to make analogies per se, and it is surprising that it is able to do reasonably well on some of these problems, although in many cases it is not able to generalize well. Moreover, when it does succeed, it does so only after being shown some number of “training examples”. To my mind, this defeats the purpose of analogy-making, which is perhaps the only “zero-shot learning” mechanism in human cognition — that is, you adapt the knowledge you have about one situation to a new situation. You (a human, I assume) do not learn to make analogies by studying examples of analogies; you just make them. All the time. Most of the time you are not even aware that you are making analogies. (If you’re not convinced of this, I recommend reading the wonderful book by Douglas Hofstadter and Emmanuel Sander, Surfaces and Essences.)

我针对一组字母类比问题测试了GPT-3,这些问题关注于不同种类的抽象相似性。 该程序的性能参差不齐。 GPT-3本身并不是为了进行类比而设计的,令人惊讶的是,尽管许多情况下它不能很好地概括,但它能够在其中一些问题上做得相当好。 而且,只有在显示了一些“培训实例”之后,它才能成功。 在我看来,这违背了类比制作的目的,类比制作可能是人类认知中唯一的“零镜头学习”机制-也就是说,您将对一种情况的了解适应于一种新情况。 您(我想是一个人)不会通过研究类比实例来学习类比; 你只是让他们。 每时每刻。 大多数时候,您甚至都不知道自己在做类比。 (如果您不相信这一点,我建议您阅读道格拉斯·霍夫施塔特和伊曼纽尔·桑德的著作《 表面与精华》的精彩著作。)

One of the problems with having to provide training examples like theones I described above is that it’s hard to tell if GPT-3 is actuallymaking an analogy, or if it is copying patterns in the target strings(i.e. ignoring the “If string1 changes to string2” parts of theprompt). I didn’t test that, but it would be interesting to do so.

必须提供像我上面所描述的那样的训练示例的问题之一是,很难判断GPT-3是否确实在进行类比,或者是否在目标字符串中复制模式(即忽略“如果string1更改为string2” ”部分的提示)。 我没有测试过,但是这样做很有趣。

All in all, GPT-3’s performance is often impressive and surprising, but it isalso similar to a lot of what we see in today’s state-of-the-art AIsystems: impressive, intelligent-seeming performance interspersed withunhumanlike errors, plus no transparency as to why it performs wellor makes certain errors. And it is often hard to tell if the system hasactually learned the concept we are trying to teach it.

总而言之,GPT-3的性能通常令人印象深刻且令人惊讶,但它也与我们在当今最先进的AI系统中看到的很多相似:令人印象深刻,智能的性能散布着不人道的错误,而且没有透明性至 为什么 它表现良好或犯某些错误。 通常很难说系统是否已经实际掌握了我们正在尝试教授的概念。

I don’t have a definitive answer for the question in the title — canGPT-3 make analogies? In some cases, it appears to make analogies,but I don’t think it is doing anything like human analogy-making orconceptual abstraction. My intuition is that to achieve robusthumanlike performance on analogy, something quite different is neededthan the kind of statistical language-model approach taken by GPT-3.

对于标题中的问题,我没有明确的答案-GPT-3可以类推吗? 在某些情况下,它似乎可以进行类比,但我认为它没有像人类进行类比或概念抽象那样。 我的直觉是,要在类比上获得强大的类人性能,需要采取与GPT-3采用的统计语言模型方法完全不同的方法。

As usual in such essays, I’ll end by asking the question to GPT-3 itself:

像在此类论文中一样,我将最后向GPT-3本身提问:

Prompt: Can a language model like GPT-3 make analogies?

提示: 像GPT-3这样的语言模型可以类推吗?

GPT-3: The news that Google had cracked the code to making a language model that can make analogies and do so quite well has been met with mixed reactions. On the one hand, it is a major breakthrough in natural language processing and artificial intelligence. On the other, it’s hard to see how this has anything to do with language.

GPT-3: 有关Google破解了代码以创建可以进行类比并且做得很好的语言模型的消息,引起了不同的反响。 一方面,这是自然语言处理和人工智能领域的重大突破。 另一方面,很难看出这与语言有什么关系。

Touché!

碰!

Note: I have written a brief follow-up to this article with the answers to some commonly asked questions.

注意:我已经对本文进行了简短的后续介绍,并回答了一些常见问题。

翻译自: https://medium.com/@melaniemitchell.me/can-gpt-3-make-analogies-16436605c446

openai-gpt

3533

3533

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?