Tang Guoan MOOC experiment data

In this world of the Internet, the amount of data surrounding you is like a vast ocean, whatever your field of research or personal interest. To harvest this data effectively, you need to develop web scraping skills. With web scraping, we can build our own dataset to match our requirements for further analysis. Many datasets, such as Amazon ratings, Netflix reviews, and IMDb ratings, are prepared and analyzed using this technique.


I recently learned web scraping, and it suddenly gave me an exciting idea: could I apply it to Medium's data to get the list of publications and analyze it further?


Also, there’s a key point to remember here:


What we learn from examples or toy implementations always turns out differently on real-world cases.


Let’s start without any delay.


This post walks through the code for scraping multiple web pages with Beautiful Soup. The website I scraped to build the dataset for this analysis is https://toppub.xyz/publications. To implement it, I used the Python libraries requests and Beautiful Soup, the latter widely considered one of the most powerful tools for this job.

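The following is a minimal sketch of the fetch-and-parse step with these two libraries. The function downloads one page and hands its HTML to Beautiful Soup. The function name `fetch_soup`, the `User-Agent` string, and the 10-second timeout are hypothetical choices for illustration; they are not taken from the original code. The site itself may also have changed since this post was written.

```python
import requests
from bs4 import BeautifulSoup

# Hypothetical User-Agent: identify your scraper and give a way to contact you.
HEADERS = {"User-Agent": "example-research-scraper/0.1 (contact@example.com)"}


def fetch_soup(url: str, timeout: float = 10.0) -> BeautifulSoup:
    """Download one page with requests and parse its HTML with Beautiful Soup."""
    response = requests.get(url, headers=HEADERS, timeout=timeout)
    # Raise on 4xx/5xx responses instead of silently parsing an error page.
    response.raise_for_status()
    # "html.parser" is Python's built-in parser, so no extra parser package is needed.
    return BeautifulSoup(response.text, "html.parser")
```

For example, `soup = fetch_soup("https://toppub.xyz/publications")` followed by `soup.title` would give the page's `<title>` tag, provided the site is still reachable.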

Prerequisites: you need a good grasp of HTML tags and of how web pages are divided into sections. The tutorial at https://www.dataquest.io/blog/web-scraping-beautifulsoup/ makes these easy to pick up. This is the most challenging part of web scraping, because we have to understand code someone else wrote and then adapt our own code to it to get the result we expect.

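Here is a small, invented page that makes "HTML tags and divisions" concrete, along with the Beautiful Soup calls that walk it:

- `find` returns the first matching tag.
- `get_text()` returns the text inside a tag.
- Indexing a tag like a dictionary reads one of its attributes.

The markup is made up for illustration only.

```python
from bs4 import BeautifulSoup

# Invented markup: a <div> "division" containing a heading, a paragraph and a link.
html = """
<html>
  <body>
    <div id="main">
      <h1>Publications</h1>
      <p class="intro"> Top publications on Medium. </p>
      <a href="https://example.com/matter">Matter</a>
    </div>
  </body>
</html>
"""

soup = BeautifulSoup(html, "html.parser")

# Narrow the search to one division first, then look inside it.
main = soup.find("div", id="main")
heading = main.find("h1").get_text()
# class_ (with a trailing underscore) filters on the HTML class attribute.
intro = main.find("p", class_="intro").get_text(strip=True)

link = main.find("a")
link_text = link.get_text()  # the text between <a> and </a>
link_url = link["href"]      # the value of the href attribute

print(heading, "|", intro, "|", link_text, "->", link_url)
```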

Web scraping:

Web scraping is the collection of information from the Internet. Even copy-pasting the lyrics of your favorite song is a form of web scraping! In practice, though, the term usually means doing this automatically. Before you start, always check that you are not violating any Terms of Service: some websites don't like automated scrapers gathering their data, while others don't mind.

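Terms of Service have to be read by a person. Many sites also publish a machine-readable robots.txt file that states which paths automated clients may fetch. Checking it does not replace reading the Terms, but it is a cheap first step. Below is a minimal sketch using Python's standard-library `urllib.robotparser`. The rules, user-agent name, and URLs are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt content. For a real site you would instead call
# parser.set_url("https://<site>/robots.txt") followed by parser.read().
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt rules let user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


print(is_allowed(ROBOTS_TXT, "example-research-scraper", "https://example.com/publications"))
print(is_allowed(ROBOTS_TXT, "example-research-scraper", "https://example.com/private/data"))
```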

If you're scraping a page respectfully for educational and research purposes, you are usually in a safe zone. Automated web scraping speeds up data collection: we write the code once, and it fetches the information we want as many times as needed, from as many pages as needed.

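Getting information "from many pages" usually means looping over page URLs, and scraping "respectfully" includes pausing between requests. The sketch below makes two assumptions:

- The listing is paginated with a `?page=N` query parameter. This URL pattern is hypothetical, so check how the real site paginates.
- The page-fetching function is passed in as a parameter, so the loop can be tried without a network.

```python
import time
from typing import Callable, List


def page_urls(base_url: str, n_pages: int) -> List[str]:
    """Build one URL per page, assuming a hypothetical ?page=N pagination scheme."""
    return [f"{base_url}?page={n}" for n in range(1, n_pages + 1)]


def crawl(urls: List[str], fetch: Callable[[str], str], delay: float = 1.0) -> List[str]:
    """Fetch every URL in order, sleeping `delay` seconds between consecutive requests."""
    pages = []
    for i, url in enumerate(urls):
        if i > 0:
            time.sleep(delay)  # be polite: don't fire requests back to back
        pages.append(fetch(url))
    return pages


# Offline demo: a stand-in fetch function instead of a real HTTP request.
demo = crawl(page_urls("https://example.com/list", 3), fetch=lambda url: f"<html>{url}</html>", delay=0.0)
print(len(demo))
```

For a real run, `fetch` could be something like `lambda url: requests.get(url, timeout=10).text`. For the 792-page listing scraped later in this post, a one-second delay adds 791 seconds (about 13 minutes) of waiting in total.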

Points to consider:

Every website is different, and this variety is the most important point to consider. Although some general structures tend to repeat, each website is unique and needs its own treatment if you want to extract the information relevant to you.


Websites are constantly being developed, which raises the question of durability. Your script may work flawlessly at first. Yet when you run the same script only a short while later, you may hit a discouraging, lengthy stack of tracebacks. Keep updating your code as your needs and project requirements change.

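One way to make a script fail more gracefully when the markup changes is to treat every lookup as possibly missing. Chaining `soup.find(...).get_text()` raises `AttributeError: 'NoneType' object has no attribute 'get_text'` as soon as a tag disappears. The hypothetical helper `safe_text` below returns a default instead, so a layout change shows up as empty fields you can detect rather than as a traceback. It is a sketch, not the author's code.

```python
from typing import Optional

from bs4 import BeautifulSoup
from bs4.element import Tag


def safe_text(parent: Tag, selector: str, default: Optional[str] = None) -> Optional[str]:
    """Stripped text of the first element matching a CSS selector, or `default` if none matches."""
    element = parent.select_one(selector)
    if element is None:
        return default  # the markup changed (or never had this element)
    return element.get_text(strip=True)


soup = BeautifulSoup('<div class="card"><h2 class="name"> Matter </h2></div>', "html.parser")
name = safe_text(soup, "h2.name")                          # element present
followers = safe_text(soup, "span.followers", default="")  # element missing: no exception
print(repr(name), repr(followers))
```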

Scraping:

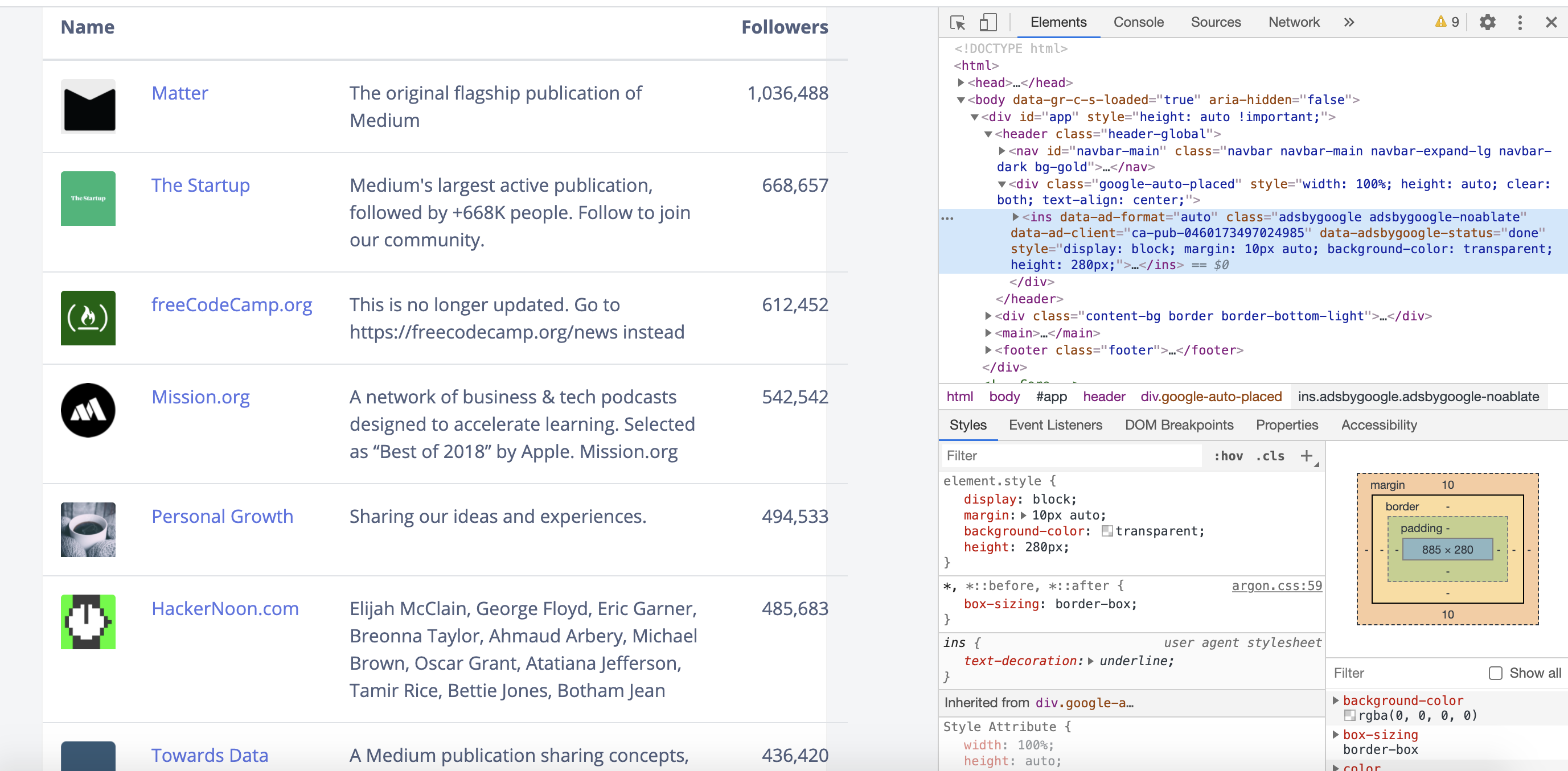

My intention is to build the dataset with Name of Publication, Description, and No.of followers for it. It has a total of 792 pages to scrape. The website looks like this which provided the necessary information as per my expectation:


The next step is to inspect the site and identify the classes and tags we want to scrape.

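Once the inspector has shown which tags and classes hold the data, Beautiful Soup can collect every element that shares a class. The minimal sketch below uses invented markup; the class names `pub` and `pub-name` are hypothetical, not the real site's. It shows `find_all` with `class_` and the equivalent CSS selector via `select`.

```python
from bs4 import BeautifulSoup

# Invented markup standing in for what the browser's inspector might show.
html = """
<ul>
  <li class="pub"><span class="pub-name">Matter</span></li>
  <li class="pub"><span class="pub-name">Startup</span></li>
  <li class="ad"><span class="pub-name">Sponsored</span></li>
</ul>
"""
soup = BeautifulSoup(html, "html.parser")

# find_all with class_ keeps only <li> tags whose class list contains "pub".
names = [li.find("span", class_="pub-name").get_text() for li in soup.find_all("li", class_="pub")]

# The same selection written as a CSS selector.
names_css = [span.get_text() for span in soup.select("li.pub span.pub-name")]

print(names, names_css)
```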

The information I needed is laid out as a table, so my code extracts it and stores it in CSV format (GitHub link).

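Here is a minimal sketch of the table-to-CSV step, assuming the data sits in a plain HTML `<table>` with one `<tr>` per publication. The sample table, its column names, and the helper names are hypothetical; the author's actual code is only available via the GitHub link.

```python
import csv
import io
from typing import List, TextIO

from bs4 import BeautifulSoup


def table_to_rows(html: str) -> List[List[str]]:
    """Turn each <tr> of the first <table> into a list of cell texts (header row included)."""
    soup = BeautifulSoup(html, "html.parser")
    table = soup.find("table")
    if table is None:
        return []  # no table on this page
    rows = []
    for tr in table.find_all("tr"):
        cells = tr.find_all(["th", "td"])
        rows.append([cell.get_text(strip=True) for cell in cells])
    return rows


def write_csv(rows: List[List[str]], out: TextIO) -> None:
    """Write rows as CSV; the csv module quotes fields that contain commas."""
    csv.writer(out).writerows(rows)


# Invented table in the shape described above: name, description, followers.
SAMPLE = """
<table>
  <tr><th>Name</th><th>Description</th><th>Followers</th></tr>
  <tr><td>Example Pub</td><td>A made-up description</td><td>1,200</td></tr>
</table>
"""

rows = table_to_rows(SAMPLE)
buffer = io.StringIO()  # stands in for open("publications.csv", "w", newline="", encoding="utf-8")
write_csv(rows, buffer)
print(buffer.getvalue())
```

When scraping many pages, you would keep the header row from the first page only and append just the data rows (`table_to_rows(html)[1:]`) from the rest.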

After scraping, the analysis is as follows:

1. Medium has a total of 11,865 publications.


2. The top publication is Matter, which is owned by Medium, with 1,036,488 followers. It is followed by Startup with 668,651 followers.


3. There are 646 publications with ≤ 0 followers, i.e. no followers at all.


4. There are 45 publications with more than 100,000 (1 lakh) followers.

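Figures like these can be computed from the CSV with a few lines of standard-library Python. The minimal sketch below makes two assumptions:

- The CSV has `Name` and `Followers` columns. These names are hypothetical.
- Follower counts were saved as text with digit-grouping commas. Stripping the commas handles both Indian grouping (10,36,488) and international grouping (1,036,488).

The sample rows are invented and are not the real dataset.

```python
import csv
import io
from typing import Dict, TextIO


def parse_followers(text: str) -> int:
    """'1,036,488' or '10,36,488' -> 1036488; an empty cell counts as 0 (an assumption)."""
    digits = text.replace(",", "").strip()
    return int(digits) if digits else 0


def summarize(csv_file: TextIO) -> Dict[str, object]:
    """Compute the kinds of statistics listed above from a publications CSV."""
    pubs = [(row["Name"], parse_followers(row["Followers"])) for row in csv.DictReader(csv_file)]
    ranked = sorted(pubs, key=lambda pub: pub[1], reverse=True)
    return {
        "total": len(pubs),                                   # number of publications
        "top_two": ranked[:2],                                # largest follower counts
        "no_followers": sum(1 for _, n in pubs if n <= 0),    # <= 0 followers
        "over_100k": sum(1 for _, n in pubs if n > 100_000),  # more than 1 lakh
    }


# Invented sample data, not the real dataset.
SAMPLE_CSV = """Name,Description,Followers
Pub A,x,"1,200"
Pub B,y,0
Pub C,z,"150,000"
Pub D,w,"10,36,488"
"""

print(summarize(io.StringIO(SAMPLE_CSV)))
```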

Enjoy scraping!


Translated from: https://medium.com/swlh/build-your-own-dataset-with-beautiful-soup-583717e3dad7
