iOS Frameworks

There were quite a few interesting announcements during WWDC 2020. Without a doubt, the enhancements in SwiftUI 2.0 and Apple’s bold decision to move the Mac away from Intel in favor of its in-house Apple silicon chips were the major talking points.

But that didn’t stop Apple from showcasing its computer vision ambitions once again this year. The Vision framework has been enhanced with some exciting new updates for iOS 14.

Through iOS 13, Apple expanded the breadth of the Vision framework by introducing a variety of requests ranging from text recognition to built-in image classification and improvements in face recognition and detection.

In iOS 14, Apple has focused many of its efforts on increasing the depth of its Vision framework.

Primarily, the new Vision requests introduced in iOS 14 enable new ways to perform action classification and analysis, which will help developers build immersive gameplay experiences, fitness apps, and sports-coaching applications (a category that has seen huge demand in recent times).

Let’s dig through the new Vision requests and utilities that were announced at WWDC 2020.

Vision Contour Detection Request

Contour detection finds outlines of shapes in an image. It looks to join all the continuous points that have the same color or intensity. This request finds good use in scenarios like coin detection and finding other objects in an image and grouping them by size, aspect ratio, etc.

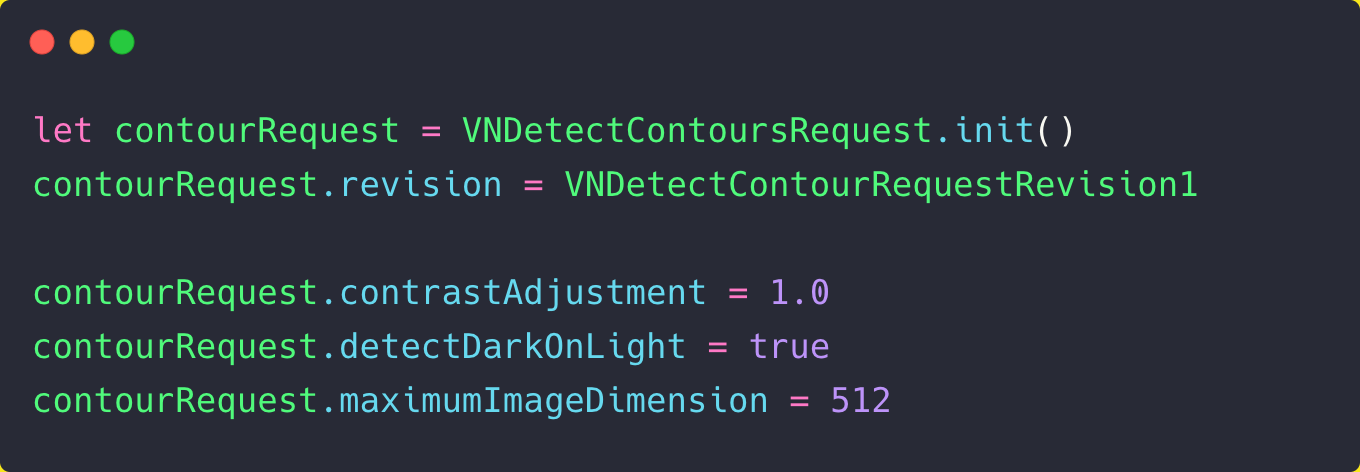

Here’s the sample code used to initiate a Vision contour detection request:
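
A minimal sketch of such a request, assuming a CGImage input. The function name detectContours and the tuning values are illustrative assumptions, not the article’s own listing:

```swift
import Vision
import CoreGraphics

// Hypothetical helper: runs contour detection on a still image.
func detectContours(in cgImage: CGImage) throws -> VNContoursObservation? {
    let request = VNDetectContoursRequest()
    request.contrastAdjustment = 1.5      // illustrative value; adjusts contrast before detection
    request.maximumImageDimension = 512   // downscale large images to keep detection fast

    // The handler wraps the image; perform(_:) runs synchronously.
    let handler = VNImageRequestHandler(cgImage: cgImage, options: [:])
    try handler.perform([request])

    // Contour detection yields a single observation describing all contours.
    return request.results?.first as? VNContoursObservation
}
```

The returned observation exposes contourCount, topLevelContours, and a normalizedPath that can be scaled to the image size and drawn.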

Like every Vision request, the idea is simple: pass the request to a VNImageRequestHandler (the handler used for still images) and read the results back as VNObservation instances.

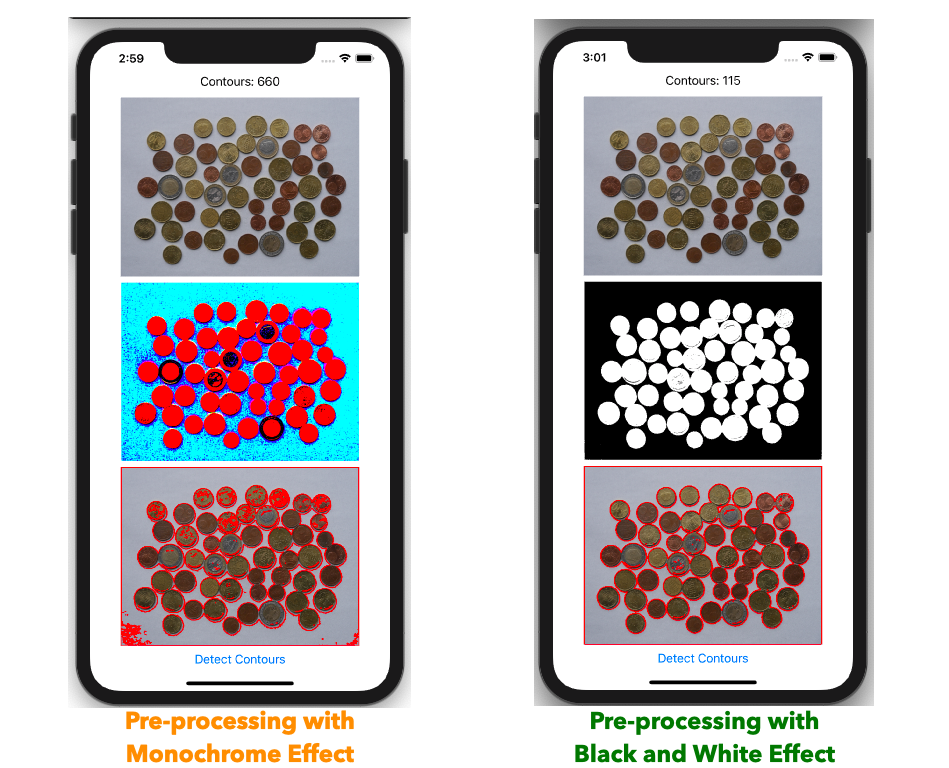

Core Image is a really handy framework for pre-processing images for contour detection requests. It helps smooth textures, which in turn lets us simplify contours and mask parts that aren’t in the region of interest.
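
As a rough illustration of this kind of pre-processing, the sketch below blurs away fine texture and then drops color before the image is handed to Vision. The helper name preprocessedForContours, the choice of filters, and the default radius are assumptions made for this example; the right filters depend on the image.

```swift
import CoreImage
import CoreImage.CIFilterBuiltins

// Hypothetical helper: smooth textures, then convert to high-contrast monochrome.
func preprocessedForContours(_ image: CIImage, blurRadius: Float = 3) -> CIImage {
    let blur = CIFilter.gaussianBlur()
    blur.inputImage = image
    blur.radius = blurRadius

    let noir = CIFilter.photoEffectNoir()
    noir.inputImage = blur.outputImage

    // Gaussian blur grows the image extent, so crop back to the original bounds.
    return (noir.outputImage ?? image).cropped(to: image.extent)
}
```

The result can be passed to VNImageRequestHandler(ciImage:options:) in place of the original image.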

The screenshot below shows what happens when you use the wrong filter for pre-processing: in the left image, it adds more contours instead of reducing them.

VNGeometryUtils is also a handy utility class introduced this year for analyzing contour shapes, bounding circles, area, and diameter.
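
A small sketch of how these utilities might be combined for a single contour. The helper name describe is hypothetical, and the returned values are in Vision’s normalized coordinate space, not in pixels:

```swift
import Vision

// Hypothetical helper: summarizes one detected contour.
func describe(_ contour: VNContour) throws -> (area: Double, diameter: Double) {
    // calculateArea writes its result through an in-out pointer.
    var area = 0.0
    try VNGeometryUtils.calculateArea(&area, for: contour, orientedArea: false)

    // The bounding circle is the smallest circle enclosing the contour.
    let circle = try VNGeometryUtils.boundingCircle(for: contour)
    return (area, circle.diameter)
}
```

Comparing these values across contours is one way to group detected objects, such as coins, by size.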

Optical Flow

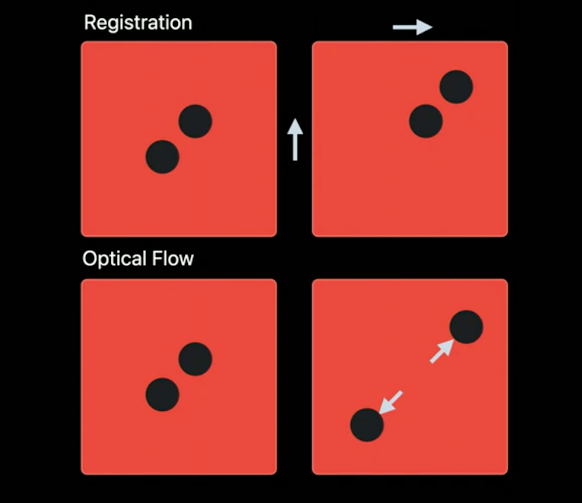

VNGenerateOpticalFlowRequest deals with the directional flow of individual pixels across frames, and is used in motion estimation, surveillance tracking, and video processing.

Unlike image registration, which tells you the alignment of the whole image with respect to another image, optical flow helps in analyzing only regions that have changed based on the pixel shifts.
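
To make the per-pixel idea concrete, here is a hedged sketch that computes flow between two consecutive frames, assuming both are available as CIImage values of the same size. The function name opticalFlow and the accuracy setting are illustrative choices:

```swift
import Vision
import CoreImage

// Hypothetical helper: computes optical flow between two same-sized frames.
func opticalFlow(from previousImage: CIImage, to currentImage: CIImage) throws -> CVPixelBuffer? {
    // The request targets one frame; the handler is created with the other.
    let request = VNGenerateOpticalFlowRequest(targetedCIImage: previousImage, options: [:])
    request.computationAccuracy = .medium   // trade accuracy against speed

    let handler = VNImageRequestHandler(ciImage: currentImage, options: [:])
    try handler.perform([request])

    // The result is a pixel buffer holding a two-component motion vector per pixel.
    return (request.results?.first as? VNPixelBufferObservation)?.pixelBuffer
}
```

Regions whose vectors are close to zero did not move between the two frames, which is what lets you restrict further analysis to the parts that changed.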

Optical flow is one of the trickiest computer vision algorithms to master, primarily because it’s highly sensitive to noise (even a shadow can significantly change the final results).

iOS 14 also introduced VNStatefulRequest, a subclass of VNImageBasedRequest that takes previous Vision results into consideration. It is handy for the optical flow and trajectory detection requests, as it helps build evidence over time.

let visionRequest = VNGenerateOpticalFlowRequest(targetedCIImage: previousImage, options: [:])

Hand and Body Pose Estimation

After bolstering its face recognition technology with more refined detection and face capture quality requests last year, Apple has now introduced two new requests: hand pose estimation and body pose estimation.

The new VNDetectHumanHandPoseRequest opens up possibilities for building touchless, gesture-based applications from the 21 landmark points returned in each VNHumanHandPoseObservation (a subclass of VNRecognizedPointsObservation).

Drawing with hand gestures is now literally possible by tracking the thumb and index finger points. Among other things, you can detect and track a given number of hands and also analyze the kinds of instruments a person is interacting with or playing (for example, guitars and keyboards).
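
The thumb and index tracking described above can be sketched as a simple pinch detector. The helper names isPinching and detectPinch, the 0.05 distance threshold, and the 0.3 confidence cutoff are all assumptions chosen for illustration:

```swift
import Vision
import CoreGraphics

// Pure geometry: two normalized points count as a pinch when they are close together.
func isPinching(thumbTip: CGPoint, indexTip: CGPoint, threshold: CGFloat = 0.05) -> Bool {
    let dx = thumbTip.x - indexTip.x
    let dy = thumbTip.y - indexTip.y
    return (dx * dx + dy * dy).squareRoot() < threshold
}

// Hypothetical helper: looks for a pinch gesture in a single camera frame.
func detectPinch(in pixelBuffer: CVPixelBuffer) throws -> Bool {
    let request = VNDetectHumanHandPoseRequest()
    request.maximumHandCount = 1   // only track one hand in this sketch

    let handler = VNImageRequestHandler(cvPixelBuffer: pixelBuffer, orientation: .up, options: [:])
    try handler.perform([request])

    guard let hand = request.results?.first as? VNHumanHandPoseObservation else { return false }
    let thumb = try hand.recognizedPoint(.thumbTip)
    let index = try hand.recognizedPoint(.indexTip)

    // Ignore low-confidence landmarks to avoid false positives.
    guard thumb.confidence > 0.3, index.confidence > 0.3 else { return false }
    return isPinching(thumbTip: thumb.location, indexTip: index.location)
}
```

A drawing app could, for instance, add the midpoint of the two tips to the current stroke while the pinch holds and end the stroke when it is released.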

Another exciting use case of hand pose is in auto-capturing selfies in your custom camera-based applications.

Human body pose estimation is another exciting addition to the Vision framework this year. By leveraging the VNDetectHumanBodyPoseRequest, you can identify different body poses of multiple people in a given frame.
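
A minimal sketch of reading the joints for every person in a frame, assuming a CGImage input. The function name bodyJoints and the default confidence cutoff are hypothetical:

```swift
import Vision
import CoreGraphics

// Hypothetical helper: returns one joint dictionary per detected person.
func bodyJoints(
    in cgImage: CGImage,
    minimumConfidence: Float = 0.3
) throws -> [[VNHumanBodyPoseObservation.JointName: CGPoint]] {
    let request = VNDetectHumanBodyPoseRequest()
    try VNImageRequestHandler(cgImage: cgImage, options: [:]).perform([request])

    // Each observation corresponds to one person in the frame.
    let bodies = (request.results ?? []).compactMap { $0 as? VNHumanBodyPoseObservation }
    return try bodies.map { body in
        try body.recognizedPoints(.all)
            .filter { $0.value.confidence > minimumConfidence }   // drop uncertain joints
            .mapValues { $0.location }                            // normalized image coordinates
    }
}
```

Joint locations such as .leftWrist or .rightKnee can then be compared across frames, for example to check exercise form.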

From tracking if your exercise form is correct to determining the perfect action shot in a video to creating stromotion effects (by using different body poses of a person across frames and blending them), the possibilities of using body pose in camera-based applications are endless.

Trajectory Detection

VNDetectTrajectoriesRequest is used for identifying and analyzing trajectories across multiple frames of videos and live photos. It’s a stateful request, which implies previous Vision results are required to build evidence over time.

Trajectory detection requests would be extremely useful for analyzing the performances of athletes and building insights from them.

From soccer to golf to cricket, visualizing trajectories of balls would be entertaining for the end-user, in addition to providing important analytics.

VNDetectTrajectoriesRequest requires setting frameAnalysisSpacing for intervals at which the Vision request should run, along with trajectoryLength, to determine the number of points you’re looking to analyze in the trajectory.

Additionally, you can set minimumObjectSize and maximumObjectSize to filter out noise. The results returned as VNTrajectoryObservation instances (there can be multiple trajectories) provide us with detected and projected points. Projected points are extremely handy for estimating where the target object is in real-world space. Just imagine: building a Hawk-Eye-style projection is now possible on-device using Vision in iOS 14.
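
The configuration described above might be wired up roughly as follows. This is a sketch under stated assumptions: the class name TrajectoryDetector is hypothetical, frames are assumed to arrive as CMSampleBuffer values from a capture session or asset reader, and the size filters are left at their defaults.

```swift
import Vision
import CoreMedia

// Hypothetical wrapper: keeps one stateful request alive across frames.
final class TrajectoryDetector {
    private let request = VNDetectTrajectoriesRequest(
        frameAnalysisSpacing: .zero,   // analyze every frame
        trajectoryLength: 10,          // points needed before a trajectory is reported
        completionHandler: { finished, _ in
            let trajectories = (finished.results ?? []).compactMap { $0 as? VNTrajectoryObservation }
            for trajectory in trajectories {
                print("detected:", trajectory.detectedPoints.count,
                      "projected:", trajectory.projectedPoints.count)
            }
        })

    // Call once per frame, always with the same request instance,
    // so that Vision can build evidence over time.
    func process(_ sampleBuffer: CMSampleBuffer) throws {
        let handler = VNImageRequestHandler(cmSampleBuffer: sampleBuffer, orientation: .up, options: [:])
        try handler.perform([request])
    }
}
```

Reusing a single request is the important design choice here: creating a new request per frame would discard the state that trajectory detection depends on.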

A Utility For Offline Vision Video Processing

Last but not least is the new utility class VNVideoProcessor, which lets you perform Vision requests on videos offline. VNVideoProcessor lets you pass a video asset URL, set the time range over which you want to run the Vision requests, and add or remove requests easily.
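
A hedged sketch of that workflow, assuming a local video file and an already configured Vision request. The function name analyzeVideo is hypothetical, and default processing options are used:

```swift
import Vision
import CoreMedia
import Foundation

// Hypothetical helper: runs one Vision request over part of a video file.
func analyzeVideo(at url: URL, timeRange: CMTimeRange, with request: VNRequest) throws {
    let processor = VNVideoProcessor(url: url)

    // Default options; the cadence can be tuned to skip frames.
    let options = VNVideoProcessor.RequestProcessingOptions()
    try processor.addRequest(request, processingOptions: options)

    // Blocks until the time range has been processed; the request's
    // completion handler receives the observations as frames are analyzed.
    try processor.analyze(timeRange)
}
```

Because analyze blocks the calling thread, it is usually run on a background queue.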

Now, this might not look fancy, but it’s the most underrated introduction in the Vision framework this year.

From detecting anomalies in videos to analyzing if the content is NSFW or not before playing, the possibilities of this utility class are truly limitless. Moreover, trajectory detection and optical flow requests require a steady camera, preferably with a tripod, which makes the introduction of the offline video processor a great addition.

As a side note, with the introduction of a native VideoPlayer in SwiftUI 2.0 this year, VNVideoProcessor will only boost pure SwiftUI computer vision applications that do more than just image processing. The SwiftUI Video Player and VNVideoProcessor are a match made in heaven.

Conclusion

Apple’s Vision framework has been regularly receiving interesting updates over the years, and WWDC 2020 has only pushed the envelope further.

From the look of it, the collection of Vision requests introduced this year (namely hand and body pose, trajectory detection, and optical flow) are key players for augmented reality and might eventually play a huge role in how users interact with Apple’s much-anticipated AR glasses.

By introducing these exciting Vision requests this year, the Cupertino tech giant has just showcased the possibilities and new use cases of computer vision in mobile applications.

Editor’s Note: Heartbeat is a contributor-driven online publication and community dedicated to exploring the emerging intersection of mobile app development and machine learning. We’re committed to supporting and inspiring developers and engineers from all walks of life.

Editorially independent, Heartbeat is sponsored and published by Fritz AI, the machine learning platform that helps developers teach devices to see, hear, sense, and think. We pay our contributors, and we don’t sell ads.

Original article: https://heartbeat.fritz.ai/whats-new-in-the-vision-framework-in-ios-14-73d22a942ba5
