急速安装和编译安装

TLDR: Learn how to use RAPIDS, HuggingFace, and Dask for high-performance NLP. See how to build end-to-end NLP pipelines in a fast and scalable way on GPUs. This covers feature engineering, deep learning inference, and post-inference processing.介绍 (Introduction)

Modern natural language processing (NLP) mixes modeling, feature engineering, and general text processing. Deep learning NLP models can provide fantastic performance for tasks like named-entity recognition (NER), sentiment classification, and text summarization. However, end-to-end workflow pipelines with these models often struggle with performance at scale, especially when the pipelines involve extensive pre- and post-inference processing.

现代自然语言处理(NLP)将建模,功能工程和常规文本处理混合在一起。 深度学习NLP模型可以为诸如命名实体识别(NER),情感分类和文本摘要之类的任务提供出色的性能。 但是,具有这些模型的端到端工作流管道通常难以实现大规模性能,尤其是当管道涉及大量的推理前和推理后处理时。

In our previous blog post, we covered how RAPIDS accelerates string processing and feature engineering. This post explains how to leverage RAPIDS for feature engineering and string processing, HuggingFace for deep learning inference, and Dask for scaling out for end-to-end acceleration on GPUs.

在之前的博客文章中 ,我们介绍了RAPIDS如何加速字符串处理和功能工程。 这篇文章解释了如何利用RAPIDS进行功能工程和字符串处理,如何利用HuggingFace进行深度学习推理,以及如何利用Dask进行扩展以实现GPU上的端到端加速。

NLP管道通常涉及以下步骤: (An NLP pipeline often involves the following steps:)

Pre-processing

前处理

Tokenization

代币化

Inference

推理

Post Inference Processing

后推断处理

预处理: (Pre-Processing:)

Pre-Processing for NLP pipelines involves general data ingestion, filtration, and general reformatting. With the RAPIDS ecosystem, each piece of the workflow is accelerated on GPUs. Check out our recent blog where we showcased these capabilities in more detail.

NLP管道的预处理涉及常规数据提取,过滤和常规重新格式化。 借助RAPIDS生态系统,可在GPU上加速工作流程的每一部分。 查看我们最近的博客 ,我们在其中更详细地展示了这些功能。

Once we have pre-processed our data, we need to tokenize it so that the appropriate machine learning model can ingest it.

预处理数据后,我们需要对它进行标记化,以便适当的机器学习模型可以吸收它。

子词标记化: (Subword Tokenization:)

Tokenization is the process of breaking down the text into standard units that a machine can understand. It is a fundamental step across NLP methods from traditional like CountVectorizer to advanced deep learning methods like Transformers.

标记化是将文本分解成机器可以理解的标准单位的过程。 这是从传统的CountVectorizer等NLP方法到高级的深度学习方法(如Transformers )的基础性步骤。

One approach to tokenization is breaking a sentence into words. For example, the sentence, “I love apples” can be broken down into, “I,” “love,” “apples”. But this delimiter based tokenization runs into problems like:

标记化的一种方法是将句子分解为单词。 例如,句子“我爱苹果”可以分解为“我”,“爱”,“苹果”。 但是,这种基于定界符的令牌化会遇到类似以下问题:

- Needing a large vocabulary as you will need to store all words in the dictionary. 需要大量词汇,因为您需要将所有单词存储在字典中。

- Uncertainty of combined words like “check-in,” i.e., what exactly constitutes a word, is often ambiguous. 诸如“值机”之类的组合词的不确定性(即确切构成一个词)通常是不确定的。

- Some languages don’t segment by spaces. 某些语言不按空格分割。

To solve these problems, we use a subword tokenization. Subword tokenization is a recent strategy from machine translation that breaks into subword units, strings of characters like “ing”, “any”, “place”. For example, the word “anyplace” can be broken down into “any” and “place” so you don’t need an entry for each word in your vocabulary.

为了解决这些问题,我们使用子词标记化。 子词标记化是机器翻译中的一种最新策略,可分为子词单元,即“ ing”,“ any”,“ place”之类的字符串。 例如,单词“任何地方”可以分解为“任意”和“地方”,因此您不需要为词汇表中的每个单词都输入一个条目。

When BERT(Bidirectional Encoder Representations from Transformers) was released in 2018, it included a new subword algorithm called WordPiece. This tokenization is used to create input for NLP DL models like BERT, Electra, DistilBert, and more.

BERT (《变形金刚的双向编码器表示法》)于2018年发布时,它包含了一个名为WordPiece的新子词算法。 此标记化用于为NLP DL模型(例如BERT,Electra,DistilBert等)创建输入。

GPU子字词分词 (GPU Subword Tokenization)

We first introduced the GPU BERT subword tokenizer in a previous blog as part of CLX for cybersecurity applications. Since then, we migrated the implementation into RAPIDS cuDF and exposed it as a string function, subword tokenization, making it easier to use in typical DataFrame workflows.

我们在之前的博客中首先介绍了GPU BERT子词令牌生成器,作为网络安全应用程序CLX的一部分。 从那时起,我们将实现迁移到RAPIDS cuDF中,并将其作为字符串函数( subword tokenization公开,从而使其更易于在典型的DataFrame工作流程中使用。

This tokenizer takes a series of strings and returns tokenized cupyarrays:

该标记器采用一系列字符串并返回标记化的cupy数组:

def tokenize_text_series(text_ser, seq_len, stride, vocab_hash_file):

"""

This function tokenizes a text series using the bert subword_tokenizer and vocab-hash

Parameters

__________

text_ser: Text Series to tokenize

seq_len: Sequence Length to use (We add to special tokens for ner classification job)

stride : Stride for the tokenizer

vocab_hash_file: vocab_hash_file to use (Created using `perfect_hash.py` with compact flag)

Returns

_______

A dictionary with these keys {'token_ar':,'attention_ar':,'metadata':}

"""

if len(text_ser) == 0:

return {"token_ar": None, "attention_ar": None, "metadata": None}

max_rows_tensor = len(text_ser) * 2

max_length = seq_len - 2

tokens, attention_masks, metadata = text_ser.str.subword_tokenize(

vocab_hash_file,

do_lower=False,

max_rows_tensor=max_rows_tensor,

stride=stride,

max_length=max_length,

do_truncate=False,

)

### reshape metadata into a matrix

metadata = metadata.reshape(-1, 3)

tokens = tokens.reshape(-1, max_length)

output_rows = tokens.shape[0]

padded_tokens = cp.zeros(shape=(output_rows, seq_len), dtype=cp.uint32)

# Mark sequence start with [CLS] token to mark start of sequence

padded_tokens[:, 1:-1] = tokens

padded_tokens[:, 0] = 101

# Mark end of sequence [SEP]

seq_end_col = padded_tokens.shape[1] - (padded_tokens[:, ::-1] != 0).argmax(1)

padded_tokens[cp.arange(padded_tokens.shape[0]), seq_end_col] = 102

del tokens

## Attention mask

attention_masks = attention_masks.reshape(-1, max_length)

padded_attention_mask = cp.zeros(shape=(output_rows, seq_len), dtype=cp.uint32)

padded_attention_mask[:, 1:-1] = attention_masks

# Mark sequence start with 1

padded_attention_mask[:, 0] = 1

# Mark sequence end with 1

padded_attention_mask[cp.arange(padded_attention_mask.shape[0]), seq_end_col] = 1

return {

"token_ar": padded_tokens,

"attention_ar": padded_attention_mask,

"metadata": metadata,

}

example_data = cudf.Series(['First sequence',

'Second sequence',

'unary'

])

### wget https://raw.githubusercontent.com/rapidsai/clx/267c6d30805c9dcbf80840f222bf31c5c4b7068a/python/clx/analytics/perfect_hash.py

### Created using python3 perfect_hash.py --vocab 'vocab.txt' --output 'vocab-hash.txt' --compact

d = tokenize_text_series(example_data,5,2,'./vocab-hash.txt')

vocab2int,int2vocab = create_vocab_table('./vocab.txt')

print(d['token_ar'])

print(vocab2int[d['token_ar'].get()])cuDF的GPU子词Tokenizer的优势: (Advantages of cuDF’s GPU subword Tokenizer:)

The advantages of using cudf.str.subword_tokenize include:

使用cudf.str.subword_tokeniz e的优点包括:

The tokenizer itself is up to 483x faster than HuggingFace’s Fast RUST tokenizer

BertTokeizerFast.batch_encode_plus.标记生成器本身是高达483x速度比HuggingFace的快速锈菌标记者

BertTokeizerFast.batch_encode_plus。Tokens are extracted and kept in GPU memory and then used in subsequent tensors, all without leaving GPUs and avoiding expensive CPU copies.

令牌被提取并保存在GPU内存中,然后用于后续的张量中,所有这些都无需离开GPU并避免昂贵的CPU复制 。

Once our inputs are tokenized using the subword tokenizer, they can be fed into NLP DL models like BERT for inference.

使用子词标记器对输入进行标记后,就可以将它们输入到BERT等NLP DL模型中进行推断。

HuggingFace概述: (HuggingFace Overview:)

HuggingFace provides access to several pre-trained transformer model architectures ( BERT, GPT-2, RoBERTa, XLM, DistilBert, XLNet…) for Natural Language Understanding (NLU) and Natural Language Generation (NLG) with over 32+ pre-trained models in 100+ languages.

HuggingFace提供了用于自然语言理解(NLU)和自然语言生成(NLG)的多种预训练的变压器模型架构( BERT , GPT-2 , RoBERTa , XLM, DistilBert , XLNet …),并具有32种以上的预训练模型超过100种语言。

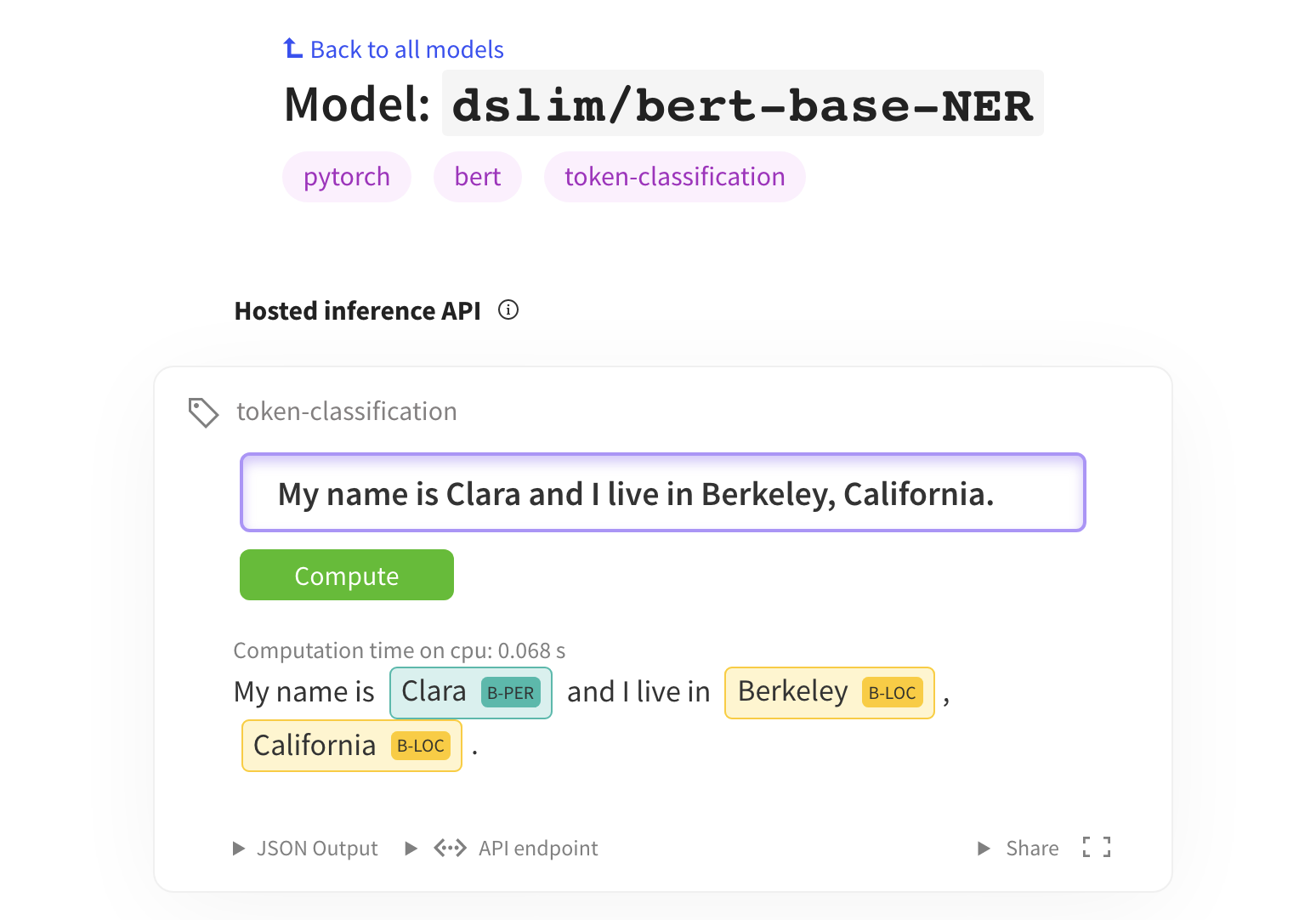

In our workflow, we used BERT and DISTIILBERT from HuggingFace to do named entity recognition.

在我们的工作流程中,我们使用了DISTIILBERT BERT和DISTIILBERT进行命名实体识别。

结合RAPIDS,HuggingFace和Dask: (Combining RAPIDS, HuggingFace, and Dask:)

This section covers how we put RAPIDS, HuggingFace, and Dask together to achieve 5x better performance than the leading Apache Spark and OpenNLP for TPCx-BB query 27 equivalent pipeline at the 10TB scale factor with 136 V100 GPUs while using a near state of the art NER model. We expect to see even better results with A100 as A100's BERT inference speed is up to 6x faster than V100's.

本节介绍了我们如何将RAPIDS,HuggingFace和Dask组合在一起,以实现比其他产品高5倍的性能。 领先的Apache Spark和OpenNLP for TPCx-BB使用136个V100 GPU以10TB的比例因子查询了27条等效管道,同时使用了最新的NER模型。 我们期望A100会获得更好的结果,因为A100的BERT推理速度比V100快6倍 。

In this workflow, we are given 26 Million synthetic reviews, and the task is to find the competitor company names in the product reviews for a given product. We then return the review id, product id, competitor company name, and the related sentence from the online review. To get a competitor’s name, we need to do NER on the reviews and find all the tokens in the review labeled as an organization.

在此工作流程中,我们获得了2600万条综合评论,而任务是在给定产品的产品评论中找到竞争对手的公司名称。 然后,我们从在线评论中返回评论ID,产品ID,竞争对手的公司名称以及相关的句子。 为了获得竞争对手的名字,我们需要对评论进行NER,并在评论中找到标记为组织的所有标记。

Our previous implementation relied on spaCy for NER but, spaCy currently needs your inputs on CPU and thus was slow as it required a copy to CPU memory and back to GPU memory. With the new cudf.str.subword_tokenize, we can go from cudf.string.series to subword tensors without leaving the GPU unlocking many new SOTA language models.

我们以前的实现依赖于NER的spaCy,但是spaCy当前需要您在CPU上的输入,因此速度很慢,因为它需要复制到CPU内存并返回到GPU内存。 使用新的cudf.str.subword_tokeniz e ,我们可以从 cudf.string.series 为子词张量,而无需离开GPU来解锁许多新的SOTA语言模型。

In this task, we experimented with two of HuggingFace’s models for NER fine-tuned on CoNLL 2003(English) :

在此任务中,我们针对CoNLL 2003(English)对HuggingFace的两个NER模型进行了实验:

Bert-base-model: This model gets anf1of91.95and achieves a speedup of 1.7 x over spaCy.Bert-base-model:此模型的f1为91.95, 在spaCy上实现了1.7倍的加速。Distil-bert-cased model: This model gets anf1of89.6(97% of the accuracy of BERT ) and achieves a speedup of 2.5x over spaCyDistil-bert-cased model:该模型的f1为89.6( 是BERT精度的97% ),并且在空间上的速度提高了2.5倍

Research by Zhu, Mengdi et al. (2019) showcased that BERT-based model architectures achieve near state art performance, significantly improving the performance on existing public-NER toolkits like spaCy, NLTK, and StanfordNER.

朱梦迪等人的研究。 (2019)展示了基于BERT的模型架构实现了近乎艺术状态的性能,极大地提高了spaCy,NLTK和StanfordNER等现有public-NER工具包的性能。

For example, the

bert-basemodel on average across datasets achieves a 13.63% better F1 than spaCy, so not only did we get faster but also reached near state of the art performance.例如,基于

bert-base数据集上的模型平均F1比spaCy高13.63%,因此我们不仅获得了更快的速度,而且达到了近乎一流的性能 。

Check out the workflow code here.

结论 : (Conclusion:)

This workflow is just one example of leveraging GPUs to do end to end accelerating natural language processing. With cudf.str.subword_tokenize now, most of the NLP tasks such as question answering, text-classification, summarization, translation, token classification are all within reach for an end to end acceleration leveraging RAPIDS and HuggingFace.

该工作流程只是利用GPU端到端加速自然语言处理的一个示例。 现在有了cudf.str.subword_tokeniz e ,就可以利用RAPIDS和HuggingFace达到端到端加速的大部分NLP任务,例如问题解答,文本分类,摘要,翻译,令牌分类。

Stay tuned for more examples and in, the meantime, try out RAPIDS in your NLP work on Google Colab or blazingsql notebooks, see our documentation docs page, and if you see something missing, we welcome feature requests on GitHub!

请继续关注更多示例,同时,在NLP在Google Colab或blazingsql笔记本上的NLP工作中试用RAPIDS,请参阅我们的文档docs页面 ,如果您发现缺少的内容,我们欢迎 GitHub上的功能要求 !

急速安装和编译安装

304

304

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?