Python Parallel Processing

The idea of writing a practical, example-driven guide to parallel processing in Python came to me only recently. Parallelism is not usually among the first topics people learn in Python. But when it is time to work with big data, waiting on a single core quickly becomes impractical, and it is no secret that big tasks call for new tools.

This article will help you understand:

Why do we need parallel processing, and what is it?

Which function is used, and how many processors can be used?

What should I know before starting parallelization?

How can any function be parallelized?

How can a Pandas DataFrame be parallelized?

1. Why and What?

Why do I need parallel processing?

- A single process covers a separately executable piece of code.

- Some sections of code can run simultaneously and therefore, in principle, allow parallelization.

- Using the features of modern processors and operating systems, we can shorten the total execution time of a program, for example by using every core of a processor.

- You may also need it to reduce the complexity of your program and hand pieces of work to specialized workers that run as sub-processes.

What is parallel processing?

- Parallel processing is a mode of operation in which instructions are executed simultaneously on multiple processors of the same computer to reduce the overall processing time.

- It lets you take advantage of multiple processors in one machine. Each process runs in its own, completely separate memory space.

2. Which Function Is Used?

Using the standard multiprocessing module, we can efficiently parallelize simple tasks by creating child processes. The API is the same whether you are on Windows or Unix. A basic example looks like this:

    from multiprocessing import Pool

    def f(x):
        return x*x

    if __name__ == '__main__':
        with Pool(5) as p:
            print(p.map(f, [1, 2, 3]))

output: [1, 4, 9]

If you wonder why the if __name__ == '__main__' part is necessary: the "entry point" of the program must be protected, so that a new Python interpreter can safely import the main module without side effects such as starting another process (https://docs.python.org/3.8/library/multiprocessing.html#multiprocessing-programming).

How many parallel processes can I run at most?

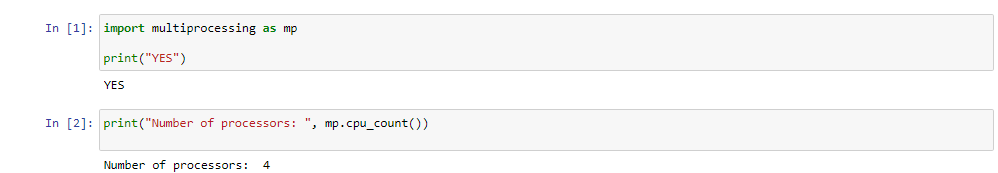

The number of processes that can actually run at the same time is limited by the number of processors in your computer. If you don't know how many processors you have, you can query it with multiprocessing.cpu_count().
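The query can be sketched as follows. multiprocessing.cpu_count() is part of the standard library; the helper default_pool_size and its reserve parameter are hypothetical names introduced here for illustration, not something the article prescribes.

```python
import multiprocessing as mp


def default_pool_size(reserve=0):
    """Hypothetical helper: logical CPU count minus `reserve`, never below 1."""
    return max(1, mp.cpu_count() - reserve)


if __name__ == "__main__":
    # Number of logical processors reported by the operating system.
    print("Number of processors:", mp.cpu_count())
    # Leaving one core free for the rest of the system is an assumption, not a rule.
    print("Suggested pool size:", default_pool_size(reserve=1))
```

The printed numbers depend on the machine the script runs on.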

3. What should I know before starting parallelization?

Why does multiprocessing work in the IPython console but not in Jupyter Notebook?

It does not work inside Jupyter because the code needs to be run directly as a script. In short, the subprocesses do not know that they are subprocesses and attempt to run the main script recursively. Multiprocessing is known to be fragile in interactive interpreters on Windows.

The library was originally developed under Windows and Python 2, and there were no problems with multiprocessing before. However, multiprocessing works perfectly if you run your script from the command line.

4. How to parallelize any function?

Process

By subclassing multiprocessing.Process, you can create a process that runs independently. By extending the __init__ method you can initialize resources, and by implementing the Process.run() method you can write the code for the subprocess.

    import multiprocessing
    import time

    class Process(multiprocessing.Process):
        def __init__(self, id):
            super(Process, self).__init__()
            self.id = id

        def run(self):
            time.sleep(1)
            print("This is the process with id: {}".format(self.id))

We need to create our Process object and call its Process.start() method. Process.start() creates a new process, which in turn invokes the Process.run() method.

    if __name__ == '__main__':
        p = Process(0)
        p.start()

Any code after p.start() is executed immediately, before process p has finished its task. To wait for the task to complete, use Process.join():

    if __name__ == '__main__':
        p = Process(0)
        p.start()
        p.join()
        p = Process(1)
        p.start()
        p.join()

The output should look like this:

output:

This is the process with id: 0

This is the process with id: 1

Pool Class

You can initialize a Pool with n processes and pass the function you want to parallelize to one of the Pool's parallelization methods.

The multiprocessing.Pool() class spawns a set of processes called workers, to which you can submit tasks using the methods apply()/apply_async() and map()/map_async().
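These four methods differ mainly in whether they block and in how many inputs they take. A minimal side-by-side sketch, assuming a hypothetical worker function square and a pool of two workers (both chosen here only for illustration):

```python
import multiprocessing as mp


def square(x):
    """Hypothetical worker function used for every call below."""
    return x * x


def run_demo(workers=2):
    with mp.Pool(processes=workers) as pool:
        # apply(): one call, blocks until the result is available.
        blocking = pool.apply(square, (3,))
        # apply_async(): one call, returns an AsyncResult right away.
        handle = pool.apply_async(square, (4,))
        async_value = handle.get(timeout=10)
        # map(): one call per item, blocks until all results are ready.
        mapped = pool.map(square, range(5))
        # map_async(): like map(), but returns an AsyncResult right away.
        map_handle = pool.map_async(square, range(5))
        async_mapped = map_handle.get(timeout=10)
    return blocking, async_value, mapped, async_mapped


if __name__ == "__main__":
    print(run_demo())
    # (9, 16, [0, 1, 4, 9, 16], [0, 1, 4, 9, 16])
```

With the asynchronous variants, the main program can keep working between submitting the task and calling get() on the returned AsyncResult.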

Let's first show Pool using map():

    import multiprocessing

    def square(x):
        return x * x

    if __name__ == '__main__':
        pool = multiprocessing.Pool(processes=4)
        inputs = [0, 2, 4, 6, 8]
        outputs = pool.map(square, inputs)
        print("Input: {}".format(inputs))
        print("Output: {}".format(outputs))

So the output for map() should look like this:

Input: [0, 2, 4, 6, 8]

Output: [0, 4, 16, 36, 64]

With map_async(), an AsyncResult object is returned immediately without blocking the main program, and the task is carried out in the background.

    import multiprocessing

    def square(x):
        return x * x

    if __name__ == '__main__':
        pool = multiprocessing.Pool()
        inputs = [0, 1, 2, 3, 4]
        outputs_async = pool.map_async(square, inputs)
        outputs = outputs_async.get()
        print("Output: {}".format(outputs))

The output for map_async() should look like this:

Output: [0, 1, 4, 9, 16]

Now let's look at an example of Pool.apply_async. Pool.apply_async assigns a task consisting of a single function call to one of the workers. It takes the function and its arguments and returns an AsyncResult object. For example:

    import multiprocessing

    def square(x):
        return x * x

    if __name__ == '__main__':
        pool = multiprocessing.Pool()
        result_async = [pool.apply_async(square, args=(i,)) for i in range(5)]
        results = [r.get() for r in result_async]
        print("Output: {}".format(results))

And the output for Pool.apply_async:

Output: [0, 1, 4, 9, 16]

5. How to parallelize a Pandas DataFrame?

When it comes to parallelizing a DataFrame, the input passed to the function being parallelized can be a row, a column, or the whole dataframe.

One thing to know: to parallelize over an entire dataframe, you will use the pathos package, which uses dill for serialization internally.
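The pathos variant is not reproduced here. As a swapped-in alternative, the sketch below splits a dataframe into row chunks and maps them over the standard multiprocessing.Pool, which works because DataFrames can be pickled. The helpers split_rows, row_norms and parallel_row_norms, as well as the sample data, are hypothetical and exist only for this illustration.

```python
import multiprocessing as mp

import pandas as pd


def split_rows(df, n_chunks):
    """Split df into at most n_chunks consecutive row blocks."""
    size = max(1, -(-len(df) // n_chunks))  # ceiling division
    return [df.iloc[start:start + size] for start in range(0, len(df), size)]


def row_norms(chunk):
    """Work done per chunk: Euclidean norm of every row."""
    return (chunk ** 2).sum(axis=1) ** 0.5


def parallel_row_norms(df, workers=2):
    """Process the chunks in a pool and stitch the partial results together."""
    chunks = split_rows(df, workers)
    with mp.Pool(processes=workers) as pool:
        parts = pool.map(row_norms, chunks)
    return pd.concat(parts)


if __name__ == "__main__":
    df = pd.DataFrame({"a": [3, 6, 5], "b": [4, 8, 12]})
    print(parallel_row_norms(df))
```

Since pool.map returns results in input order, pd.concat restores the original row order. Sending whole chunks instead of single rows keeps the serialization overhead per task small.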

As an example, let's create a sample dataframe and see how to do row-wise and column-wise parallelization, starting with the rows.

    import numpy as np
    import pandas as pd
    import multiprocessing as mp

    df = pd.DataFrame(np.random.randint(3, 10, size=[5, 2]))
    print(df.head())
    #>    0  1
    #> 0  8  5
    #> 1  5  3
    #> 2  3  4
    #> 3  4  4
    #> 4  7  9

Now let's apply the hypotenuse function to each row. Since we want to run 4 processes at a time, we iterate with df.itertuples(name=False); setting name=False passes each row to hypotenuse as a plain tuple.

    # Row wise Operation
    def hypotenuse(row):
        # row[0] is the index; row[1] and row[2] are the two column values
        return round(row[1]**2 + row[2]**2, 2)**0.5

    if __name__ == '__main__':
        with mp.Pool(4) as pool:
            result = pool.imap(hypotenuse, df.itertuples(name=False), chunksize=10)
            output = [round(x, 2) for x in result]

        print(output)
        #> [9.43, 5.83, 5.0, 5.66, 11.4]

Now let's parallelize column by column. For this I use df.items() (called df.iteritems() before pandas 2.0) to pass each entire column as a Series to the sum_of_squares function.

    # Column wise Operation
    def sum_of_squares(column):
        # column is a (label, Series) pair; column[1] holds the values
        return sum([i**2 for i in column[1]])

    if __name__ == '__main__':
        with mp.Pool(2) as pool:
            # df.items() replaces df.iteritems(), which was removed in pandas 2.0
            result = pool.imap(sum_of_squares, df.items(), chunksize=10)
            output = [x for x in result]

        print(output)
        #> [163, 147]

Here is an interesting notebook with which you can compare processing times for multiprocessing. Enjoy reading it.
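For a quick feel of such a comparison without the notebook, a rough sketch might time the same workload serially and in a pool. The function slow_square with its 0.1 second sleep is a hypothetical stand-in for real work, and timed, run_serial and run_parallel are illustrative helper names.

```python
import multiprocessing as mp
import time


def slow_square(x):
    """Hypothetical task: sleep briefly to simulate work, then square."""
    time.sleep(0.1)
    return x * x


def timed(fn, *args):
    """Call fn(*args) and return (result, elapsed seconds)."""
    start = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - start


def run_serial(values):
    return [slow_square(v) for v in values]


def run_parallel(values, workers=4):
    with mp.Pool(processes=workers) as pool:
        return pool.map(slow_square, values)


if __name__ == "__main__":
    values = list(range(8))
    serial, t_serial = timed(run_serial, values)
    parallel, t_parallel = timed(run_parallel, values)
    print("same results:", serial == parallel)
    print("serial:   {:.2f} s".format(t_serial))
    print("parallel: {:.2f} s".format(t_parallel))
```

The measured times vary from machine to machine. For very small tasks, the cost of starting processes and pickling data can outweigh the gain, so it is worth measuring before parallelizing.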

Conclusion

In this article, we have seen several ways to implement parallel processing using the multiprocessing module. The approach is the same for a machine of any size. When the steps above are followed, your project can gain speed, depending on the number of processors available. I hope such a basic start will be useful for you.

If your job requires speed, who wouldn't want that!

Translated from: https://medium.com/@kurt.celsius/5-step-guide-to-parallel-processing-in-python-ac0ecdfcea09
