傅里叶变换 直观

Many of us have heard, read, or even performed an A/B Test before, which means we have conducted a statistical test at some point. Most of the time, we have worked with data from first or third-party sources and performed these tests with ease by either using tools ranging from Excel to Statistical Software and even more automated solutions such as Google Optimize.

我们当中许多人以前都听过,读过甚至进行过A / B测试,这意味着我们在某个时候进行了统计测试。 在大多数情况下,我们使用第一方或第三方来源的数据,并使用Excel到Statistics Software等工具以及更自动化的解决方案(例如Google Optimize)轻松地执行了这些测试。

If you are like me, you might be curious about how these types of tests work and how concepts such as Type I and Type II Error, Confidence Intervals, Effect Magnitude, Statistical Power, and others interact with each other.

如果您像我一样,可能会对这些类型的测试如何工作以及类型I和类型II错误 , 置信区间 , 影响幅度 , 统计 功效以及其他概念之间的交互方式感到好奇。

In this post, I would like to invite you to take a different approach for one specific type of A/B test, which makes use of a particular statistic called Chi-Squared. In particular, I will try to explore and walk through this type of test by taking the great but long road of simulations, avoiding libraries and tables, hopefully managing to explore and build some of the intuition behind it.

在本文中,我想邀请您对一种特定类型的A / B测试采用不同的方法,该方法利用称为Chi-Squared的特定统计量。 特别是,我将尝试通过漫长而漫长的模拟之路,避免使用库和表,希望设法探索并建立其背后的一些直觉 ,从而探索并完成此类测试。

开始之前 (Before we start)

Even though we could use data from our past experiments or even third-party sources such as Kaggle, it would be more convenient for this post to generate our data. It will allow us to compare our conclusions with a known ground truth; otherwise, it will be most likely unknown.

即使我们可以使用过去实验中的数据,甚至可以使用第三方来源(例如Kaggle)中的数据,对于本帖子来说,生成我们的数据也会更加方便。 它可以使我们将结论与已知的事实相比较; 否则,很可能未知。

For this example, we will generate a dummy dataset that will represent six different versions of a signup form and the number of leads we observed on each. For this dummy set to be random and have a winner version that will serve us as ground truth, we will generate this table by simulating some biased dice’s throws.

对于此示例,我们将生成一个虚拟数据集,该数据集将表示六个不同版本的注册表单以及我们在每个表单上观察到的潜在客户数量。 为了使这个虚拟集是随机的,并且有一个获胜者版本将用作 基础事实,我们将通过模拟一些有偏向的骰子投掷来生成此表。

For this, we have generated an R function that simulates a biased dice in which we have a 20% probability of lading in 6 while a 16% chance of landing in any other number.

为此,我们生成了一个R函数,该函数模拟了一个有偏见的骰子,在该骰子中,我们有20%的概率在6中提货,而在其他数字中有16%的机会着陆。

# Biased Dice Rolling Function

DiceRolling <- function(N) {

Dices <- data.frame()

for (i in 1:6) {

if(i==6) {

Observed <- data.frame(Version=as.character(LETTERS[i]),Signup=rbinom(N/6,1,0.2))

} else {

Observed <- data.frame(Version=as.character(LETTERS[i]),Signup=rbinom(N/6,1,0.16))

}

Dices <- rbind(Dices,Observed)

}

return(Dices)

}# Let's roll some dices

set.seed(123) # this is for result replication 86

Dices <- DiceRolling(1800)Think of each Dice number as a representation of a different landing version (1–6 or A-F). For each version, we will throw our Dice 300 times, and we will write down its results as follows:

将每个骰子编号视为不同着陆版本(1-6或AF)的表示。 对于每个版本,我们将掷骰子300次,并将其结果记录如下:

- If we are on version A (1) and throw the Dice and it lands on 1, we consider it to be Signup; otherwise, just a visit. 如果我们使用版本A(1)并将骰子扔到1,则认为它是Signup; 否则,只是一次访问。

- We repeat 300 times for each version. 每个版本重复300次。

样本数据 (Sample Data)

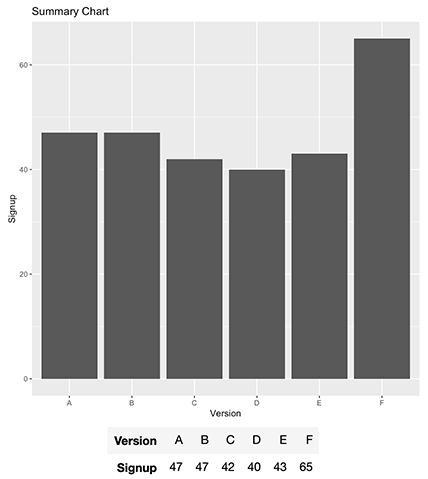

As commented earlier, this is what we got:

如前所述,这是我们得到的:

# We shuffle our results

set.seed(25)

rows <- sample(nrow(Dices))

t(Dices[head(rows,10),])

We can observe from our first ten results that we got one Signup for F, D, and A. In aggregated terms, our table looks like this:

我们可以从前十个结果中观察到,我们为F , D和A获得了一个Signup 。总的来说,我们的表如下所示:

library(ggplot2)

ggplot(Result, aes(x=Version, y=Signup)) + geom_bar(stat="identity", position="dodge") + ggtitle("Summary Chart")

Result <- aggregate(Signup ~ Version, Dices, sum)

t(Result)

From now own, think of this table as Dice throws, eCommerce conversions, surveys, or a Landing Page Signup Conversion as we will use here, it does not matter, use whatever is more intuitive for you.

从现在开始,将此表视为Dice投掷,电子商务转换,调查或着陆页注册转换,就像我们将在此处使用的那样,这没关系,可以使用对您而言更直观的方式。

For us, it will be signups, so we should produce this report:

对于我们来说,这将是注册,因此我们应该生成此报告:

观察频率 (Observed Frequencies)

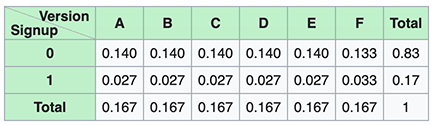

We will now aggregate our results, including both our Signup (1) and Did not Signup (0) results, which will allow us to understand better how these differ from our expected values or frequencies; this is also called a Cross Tabulation or Contingency Table.

现在,我们将汇总我们的结果,包括“ 注册”(1)和“未注册”(0)结果,这将使我们能够更好地了解这些结果与预期值或频率之间的差异; 这也称为交叉表或列联表 。

# We generate our contigency table

Observed <- table(Dices)

t(Observed)

In summary:

综上所述:

预期频率 (Expected Frequencies)

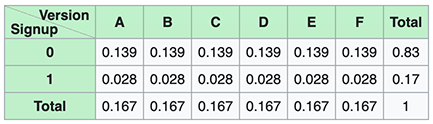

Since we know how our Cross Tabulation looks, we can now generate a table simulating how we should expect our results to be like considering the same performance of all versions. It is equivalent to say that each version had the same Signup Conversion or probability in the case of our example or the expected result of a non-biased dice if you prefer.

由于我们知道交叉制表的外观,因此我们现在可以生成一个表,该表模拟我们如何期望我们的结果像考虑所有版本的相同性能一样。 可以说,在我们的示例中,每个版本都有相同的注册转换或概率,或者,如果您愿意,可以使用无偏向骰子的预期结果。

# We generate our expected frequencies table

Expected <- Observed

Expected[,1] <- (sum(Observed[,1])/nrow(Observed))

Expected[,2] <- sum(Observed[,2])/nrow(Observed)

t(Expected)

In summary:

综上所述:

假设检验 (Hypothesis Testing)

We know our test had a higher-performing version not only by visually inspecting the results but because we purposely designed it to be that way.

我们知道我们的测试具有更高性能的版本,不仅是通过目视检查结果,还因为我们有目的地将其设计为这种方式。

This is the moment we have waited for: is it possible for us to prove this solely based on the results we got?.

这是我们等待的时刻: 是否有可能仅根据获得的结果来证明这一点? 。

The answer is yes, and the first step is to define our Null and Alternative Hypothesis, which we will later try to accept or reject.

答案是肯定的,第一步是定义零假设和替代假设,我们稍后将尝试接受或拒绝。

Our alternative hypothesis (H1) is what we want to prove correct, which states that there is, in fact, a relationship between the landing version and the result we observed. In contrast, our null hypothesis states that there is no relationship meaning there is no significant difference between our observed and expected frequencies.

我们要证明的另一种假设(H1)是正确的,它指出着陆版本与我们观察到的结果之间实际上存在某种关系。 相反,我们的零假设指出没有关系,这意味着我们的观测频率与预期频率之间没有显着差异。

统计 (Statistic)

Our goal is to find how often our observed data is located in a universe where our null hypothesis is correct, meaning, where our observed and expected signup frequencies have no significant difference.

我们的目标是找到我们的观测数据位于原假设正确的宇宙中的频率,即我们的观测和预期签约频率无显着差异。

A useful statistic that’s able to sum up all these values; six columns (one for each version) and two rows (one for each signup state) into a single value is Chi-Square, which is calculated as follows:

有用的统计信息,能够汇总所有这些值; 六个值(每个版本一个)和两行(每个注册状态一个)组成一个值是Chi-Square,其计算方式如下:

We will not get into details of how this formula can be found neither of its assumptions or requirements (such as Yates Correction), because it is not the subject of this post. On the contrary, we would like to perform a numerical approach through simulations, which should shed some light on these types of hypothesis tests.

我们不会详细介绍如何从公式的任何假设或要求(例如Yates Correction)中都找不到该公式,因为它不是本文的主题。 相反,我们想通过仿真执行数值方法,这应该为这些类型的假设检验提供一些启发。

Resuming, if we compute this formula with our data, we get:

继续,如果我们使用我们的数据计算此公式,则会得到:

# We calculate our X^2 score

Chi <- sum((Expected-Observed)^2/Expected)

Chi

空分布模拟 (Null Distribution Simulation)

We need to obtain the probability of finding a statistic as extreme as the one we observed, which in this case, is represented by Chi-Square equal to 10.368. This, in terms of probability, is also known as our P-Value.

我们需要获得找到与我们观察到的统计数据一样极端的统计数据的概率,在本例中,该统计数据由卡方表示为10.368。 就概率而言,这也称为我们的P值 。

For this, we will simulate a Null Distribution as a benchmark. What this means is that we need to generate a scenario in which our Null Distribution is correct, suggesting a situation where there is no relationship between the landing version and the observed signup results (frequencies) we got.

为此,我们将模拟空分布作为基准。 这意味着我们需要生成一个空分布正确的方案,这表明着陆版本与我们观察到的注册结果(频率)之间没有关系 。

A solution that rapidly comes to mind is to repeat our experiment from scratch, either by re-collecting results many times or, as in the context of this post, using an unbiased dice to compare how our observed results behave in contrast to these tests. Even though this might seem intuitive at first, in real-world scenarios, this solution might not be the most efficient one since it would require extreme use of resources such as time and budget to repeat this A/B test many times.

Swift想到的解决方案是从头开始重复我们的实验,方法是多次重新收集结果,或者如本文所述,使用无偏小骰子来比较观察到的结果与这些测试相比的表现。 尽管起初看起来似乎很直观,但在实际情况下,此解决方案可能并不是最有效的解决方案,因为它需要大量使用资源(例如时间和预算)才能多次重复进行此A / B测试。

重采样 (Resampling)

An excellent solution to the problem discussed above is called resampling. What resampling does is make one variable independent of the other by shuffling one of them randomly. If there were an initial relationship between them, this relation would be lost due to the random sampling method.

解决上述问题的一种极好的解决方案称为重采样。 重采样的作用是通过随机地对其中一个变量进行改组,使一个变量与另一个变量无关。 如果它们之间存在初始关系,则由于随机抽样方法,该关系将丢失。

In particular, we need to use the original (unaggregated) samples for this scenario. We will later permutate one of the columns several times, which will be Signup status in this case.

特别是,在这种情况下,我们需要使用原始(未汇总)样本。 稍后,我们将对其中一列进行多次排列,在本例中为“注册”状态。

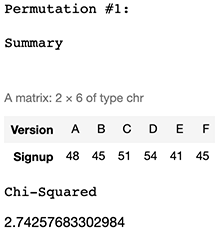

In particular, let us see an example of 2 shuffles for the first “10 samples” shown earlier:

特别是,让我们看一下前面显示的第一个“ 10个样本”的2个随机播放的示例:

Let us try it now with the complete (1800) sample set:

现在让我们尝试使用完整的样本集(1800):

Permutation #1

排列#1

Perm1 <- Dices

set.seed(45)

Perm1$Signup <- sample(Dices$Signup)

ResultPerm1 <- aggregate(Signup ~ Version, Perm1, sum)

cat("Permutation #1:\n\n")

cat("Summary\n\n")

t(ResultPerm1)

cat("Chi-Squared")

Perm1Observed <- table(Perm1)

sum((Expected-Perm1Observed)^2/Expected)

Permutation #2

排列#2

Perm1 <- Dices

set.seed(22)

Perm1$Signup <- sample(Dices$Signup)

ResultPerm1 <- aggregate(Signup ~ Version, Perm1, sum)

cat("Permutation #2:\n\n")

cat("Summary\n\n")

t(ResultPerm1)

cat("Chi-Squared")

Perm1Observed <- table(Perm1)

sum((Expected-Perm1Observed)^2/Expected)

As seen in both permutations of our data, we got utterly different summaries and Chi-Squared values. We will repeat this process a bunch of times to explore what we can obtain at a massive scale.

从我们的数据的两个排列中可以看出,我们得到了完全不同的汇总和Chi-Squared值。 我们将重复此过程很多次,以探索我们可以大规模获得的东西。

模拟 (Simulation)

Let us simulate 15k permutations of our data.

让我们模拟数据的15k排列。

# Simulation Function

Simulation <- function(Dices,k) {

dice_perm <- data.frame()

i <- 0

while(i < k) {

i <- i + 1;# We permutate our Results

permutation$Signup <- sample(Dices$Signup)# We generate our contigency table

ObservedPerm <- table(permutation)# We generate our expected frequencies table

ExpectedPerm <- ObservedPerm

ExpectedPerm[,1] <- (sum(ObservedPerm[,1])/nrow(ObservedPerm))

ExpectedPerm[,2] <- sum(ObservedPerm[,2])/nrow(ObservedPerm)# We calculate X^2 test for our permutation

ChiPerm <- sum((ExpectedPerm-ObservedPerm)^2/ExpectedPerm)# We add our test value to a new dataframe

dice_perm <- rbind(dice_perm,data.frame(Permutation=i,ChiSq=ChiPerm))

}

return(dice_perm)

}# Lest's resample our data 15.000 times

start_time <- Sys.time()

permutation <- Dicesset.seed(12)

permutation <- Simulation(Dices,15000)

end_time <- Sys.time()

end_time - start_time

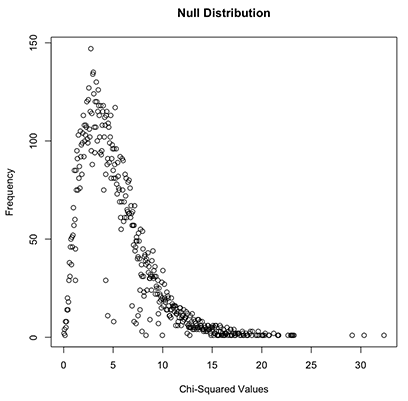

重采样分布 (Resample Distribution)

As we can observe below, our 15k permutations look like it is distributed with a distinct shape and resembles, as expected, a Chi-Square distribution. With this information, we can now calculate how many of the 15k iterations, we observed a Chi-Squared value as extreme as our initial 10.36 calculation.

正如我们在下面可以看到的,我们的15k排列看起来像是分布有不同的形状,并且与预期的卡方分布相似。 有了这些信息,我们现在可以计算出15k迭代中有多少次,我们观察到的Chi-Squared值与我们最初的10.36计算一样极端。

totals <- as.data.frame(table(permutation$ChiSq))

totals$Var1 <- as.numeric(as.character(totals$Var1))

plot( totals$Freq ~ totals$Var1, ylab=”Frequency”, xlab=”Chi-Squared Values”,main=”Null Distribution”)

P值 (P-Value)

Let us calculate how many times we obtained a Chi-Square value equal to or higher than 10.368 (our calculated score).

让我们计算获得等于或高于10.368(我们的计算得分)的卡方值的次数。

Higher <- nrow(permutation[which(permutation$ChiSq >= Chi),])

Total <- nrow(permutation)

prob <- Higher/Total

cat(paste("Total Number of Permutations:",Total,"\n"))

cat(paste(" - Total Number of Chi-Squared Values equal to or higher than",round(Chi,2),":",Higher,"\n"))

cat(paste(" - Percentage of times it was equal to or higher (",Higher,"/",Total,"): ",round(prob*100,3),"% (P-Value)",sep=""))

决策极限 (Decision Limits)

We now have our P-Value, which means that if the Null Hypothesis is correct, saying there is no relationship between Version and Signups, we should encounter a Chi-Square as extreme only a small 6.5% of the time. If we think of this as only dice results, we should expect “results as biased as ours” even by throwing an unbias dice at most 6.5% of the time.

现在,我们有了P值,这意味着如果零假设是正确的,也就是说版本和注册之间没有关系,那么我们应该仅在很小的6.5%的时间内遇到卡方。 如果我们认为这只是骰子的结果,那么即使最多最多掷6.5%的时间来获得无偏见的骰子,我们也应该期望“结果像我们一样有偏见”。

Now we need to define our decision limits on which we accept or reject our null hypothesis.

现在,我们需要定义我们接受或拒绝原假设的决策极限。

We calculated our decision limits for 90%, 95%, and 99% confidence intervals, meaning which Chi-Squared values we should expect as a limit on those odds.

我们计算了90%,95%和99%置信区间的决策极限,这意味着我们应该期望将Chi-Squared值作为这些几率的极限。

# Decition Limits

totals <- as.data.frame(table(permutation$ChiSq))

totals$Var1 <- as.numeric(as.character(totals$Var1))

totals$Prob <- cumsum(totals$Freq)/sum(totals$Freq)

Interval90 <- totals$Var1[min(which(totals$Prob >= 0.90))]

Interval95 <- totals$Var1[min(which(totals$Prob >= 0.95))]

Interval975 <- totals$Var1[min(which(totals$Prob >= 0.975))]

Interval99 <- totals$Var1[min(which(totals$Prob >= 0.99))]cat(paste("Chi-Squared Limit for 90%:",round(Interval90,2),"\n"))

cat(paste("Chi-Squared Limit for 95%:",round(Interval95,2),"\n"))

cat(paste("Chi-Squared Limit for 99%:",round(Interval99,2),"\n"))

Fact Check

事实检查

As observed by the classical “Chi-Square Distribution Table”, we can find very similar values from the ones we obtained from our simulation, which means our confidence intervals and P-Values should be very accurate.

正如经典“卡方分布表”所观察到的,我们可以从模拟中获得非常相似的值,这意味着我们的置信区间和P值应该非常准确。

假设检验 (Hypothesis Testing)

As we expected, we can reject the Null Hypothesis and claim that there is a significant relationship between versions and signups. Still, there is a small caveat, and this is our level of confidence. As observed in the calculations above, we can see that our P-Value (6.5%) is just between 90% and 95% confidence intervals, which means, even though we can reject our Null Hypothesis with 90% confidence, we cannot reject it at 95% or any superior confidence level.

如我们所料,我们可以拒绝零假设,并声称版本和注册之间存在重要关系。 仍然有一点需要注意,这就是我们的信心水平 。 从上面的计算中可以看出,我们可以看到P值(6.5%)介于90%和95%的置信区间之间,这意味着,即使我们可以90%的置信度拒绝零假设,我们也不能拒绝它95%或更高的置信度。

If we claim to have 90% confidence, then we are also claiming there is a 10% chance of wrongly rejecting our null hypothesis (also called Type I Error, False Positive, or Alpha). Note, in reality, such standard arbitrary values (90%,95%, 99%) are used, but we could easily claim we are 93.5% certain since we calculated a 6.5% probability of a Type I Error.

如果我们声称拥有90%的置信度,那么我们还声称有10%的机会错误地拒绝了我们的零假设(也称为I型错误 , 误报或Alpha )。 注意,实际上,使用了此类标准任意值(90%,95%,99%),但由于我们计算出I型错误的概率为6.5% ,因此我们可以很容易地断言我们具有93.5%的确定性。

Interestingly, even though we know for sure there is a relationship between version and signups, we cannot prove this by mere observation, simulations, and neither by doing this hypothesis test with a standard 95% confidence level. This concept of failing to reject our Null Hypothesis even though we know it is wrong is called a false negative or Type II Error (Beta), which is dependent on the Statistical Power of this test, which measures the probability that this does not happen.

有趣的是,即使我们确定知道版本和注册之间存在关联,我们也不能仅仅通过观察,模拟以及通过以标准的95%置信度进行假设检验来证明这一点。 即使我们知道错误假设也不会拒绝零假设的概念称为假阴性或II型错误 ( Beta ),这取决于此测试的统计功效 ,该度量衡量了这种情况不会发生的可能性。

统计功效 (Statistical Power)

In our hypothesis test, we saw we were unable to reject our Null Hypothesis even at standard values intervals such as 95% confidence or more. This is due to the Statistical Power (or Power) of the test we randomly designed, which is particularly sensitive to our statistical significance criterion discussed above (alpha or Type I error) and both effect magnitude and sample sizes.

在我们的假设检验中,我们看到即使在标准值间隔(例如95%置信度或更高)下也无法拒绝零假设。 这是由于我们随机设计的测试的统计 功效 (或功效 ),这对我们上面讨论的统计显着性标准(alpha或I型误差)以及影响幅度和样本量特别敏感。

Power is calculated as follows:

功率计算如下:

In particular, we can calculate our current statistical Power by answering the following question:

特别是,我们可以通过回答以下问题来计算当前的统计功效:

- If we were to repeat our experiment X amount of times and calculate our P-Value on each experiment, which percent of the times, we should expect a P-Value as extreme as 5%? 如果我们要重复实验X次并在每个实验中计算出我们的P值(占百分比的百分比),那么我们应该期望P值达到5%的极限吗?

Let us try answering this question:

让我们尝试回答这个问题:

MultipleDiceRolling <- function(k,N) {

pValues <- NULL

for (i in 1:k) {

Dices <- DiceRolling(N)

Observed <- table(Dices)

pValues <- cbind(pValues,chisq.test(Observed)$p.value)

i <- i +1

}

return(pValues)

}# Lets replicate our experiment (1800 throws of a biased dice) 10k times

start_time <- Sys.time()

Rolls <- MultipleDiceRolling(10000,1800)

end_time <- Sys.time()

end_time - start_timeHow many times did we observe P-Values as extreme as 5%?

我们观察过多少次P值高达5%?

cat(paste(length(which(Rolls <= 0.05)),"Times"))

Which percent of the times did we observe this scenario?

我们观察到这种情况的百分比是多少?

Power <- length(which(Rolls <= 0.05))/length(Rolls)

cat(paste(round(Power*100,2),"% of the times (",length(which(Rolls <= 0.05)),"/",length(Rolls),")",sep=""))

As calculated above, we observe a Power equivalent to 21.91% (0.219), which is quite low since the gold standard is around 0.8 or even 0.9 (90%). In other words, this means we have a 78.09% (1 — Power) probability of making a Type II Error or, equivalently, a 78% chance of failing to reject our Null Hypothesis at a 95% confidence interval even though it is false, which is what happened here.

根据上面的计算,我们观察到的功效等于21.91%(0.219),这是非常低的,因为金标准约为0.8甚至0.9(90%)。 换句话说,这意味着我们有78.09%(1- Power)发生II型错误的概率,或者等效地, 即使它是假的 , 也有78%的机会未能在95%的置信区间内拒绝零假设 ,这就是这里发生的事情。

As mentioned, Power is a function of:

如前所述,Power是以下功能之一:

Our significance criterion: this is our Type I Error or Alpha, which we decided to be 5% (95% confidence).

我们的显着性标准 :这是我们的I类错误或Alpha,我们决定为5%(置信度为95%)。

Effect Magnitude or Size: This represents the difference between our observed and expected values in terms of the standardized statistic of use. In this case, since we used Chi-Square statistic, this effect (named w) is calculated as the squared root of the normalized Chi-Square value and is usually categorized as Small (0.1), Medium (0.3), and Large (0.5) (Ref: Cohen, J. (1988).)

影响幅度或大小 :这表示我们的观察值与期望值之间的差异(使用标准化的使用统计数据)。 在这种情况下,由于我们使用的是卡方统计量,因此将此效果(称为w )计算为归一化卡方值的平方根,通常分为小(0.1),中(0.3)和大(0.5)。 )(参考资料: Cohen,J.(1988)。 )

Sample size: This represents the total amount of samples (in our case, 1800).

样本数量 :代表样本总数(在我们的示例中为1800)。

效果幅度 (Effect Magnitude)

We designed an experiment with a relatively small effect magnitude since our Dice was only biased in one face (6) with only a slight additional chance of landing in its favor.

我们设计的实验的效果等级相对较小,因为我们的骰子仅偏向一张脸(6),只有很少的其他机会落在其脸上。

In simple words, our effect magnitude (w) is calculated as follows:

简而言之,我们的影响幅度(w)计算如下:

1) Where our Observed Proportions are calculated as follow:

1)我们的观察比例计算如下:

Probabilities of our alternative hypothesis

我们的替代假设的概率

2) And our Expected Proportions:

2)和我们的预期比例 :

Probabilities of our null hypothesis

原假设的概率

Finally, we can obtain our effect size as follows:

最后,我们可以获得如下效果大小:

样本量 (Sample Size)

Similarly to our effect size, our sample sizes, even though it seems of enough magnitude (1800), is not big enough to spot relationship (or bias) at 95% confidence since our effect size, as we calculated, was very small. We can expect an inverse relationship between sample sizes and effect magnitude. The more significant the effect, the lower the sample size needed to prove it at a given significance level.

与我们的效应量相似,我们的样本量即使看起来足够大(1800),也不足以在95%的置信度上发现关系(或偏差),因为我们计算出的效应量很小。 我们可以预期样本量与效应量之间存在反比关系。 效果越显着,在给定的显着性水平下证明该结果所需的样本量越少。

At this time, it might be more comfortable to think sample sizes of our A/B test as dice or even coin throws. It is somewhat intuitive that with one dice/coin throw, we will be unable to spot a biased dice/coin, but if 1800 throws are not high enough to detect this small effect at a 95% confidence level, this leads us to the following question: how many throws do we need?

目前,将A / B测试的样本大小视为掷骰子甚至投掷硬币可能会更舒服。 从一个直观的角度来看,掷一枚骰子/硬币,我们将无法发现有偏见的骰子/硬币,但是如果1800枚硬币的高度不足以在95%的置信度水平上检测到这种小影响,这将导致我们得出以下结论:问题:我们需要多少次掷球?

The same principle applies to the sample size of our A/B test. The lesser the effect, such as small variations in conversion from small changes in each version (colors, fonts, buttons), the bigger the sample and, therefore, the time we need to collect the data required to accept or reject our hypothesis. A common problem in many A/B tests concerning website conversion in eCommerce is that tools such as Google Optimize can take many days, if not weeks, and most of the time, we do not encounter a conclusive answer.

同样的原则适用于我们的A / B测试的样本量。 效果越小(例如,每个版本(颜色,字体,按钮)的微小变化带来的转换变化很小),样本就越大,因此,我们需要收集接受或拒绝我们的假设所需的数据的时间也越大。 在许多与电子商务中的网站转换有关的A / B测试中,一个普遍的问题是,诸如Google Optimize之类的工具可能要花费很多天(如果不是几周的话),并且在大多数情况下,我们没有得到最终的答案。

To solve this, first, we need to define the Statistical Power we want. Next, we will try answering this question by iterating different values of N until we minimize the difference between our Expected Power and the Observed Power.

为了解决这个问题,首先,我们需要定义所需的统计功效。 接下来,我们将尝试通过迭代N的不同值来回答这个问题,直到将期望功率和观测功率之间的差异最小化为止。

# Basic example on how to obtain a given N based on a target Power.# Playing with initialization variables might be needed for different scenarios.

CostFunction <- function(n,w,p) {

value <- pchisq(qchisq(0.05, df = 5, lower = FALSE), df = 5, ncp = (w^2)*n, lower = FALSE)

Error <- (p-value)^2

return(Error)

}

SampleSize <- function(w,n,p) {

# Initialize variables

N <- n

i <- 0

h <- 0.000000001

LearningRate <- 40000000

HardStop <- 20000

power <- 0

# Iteration loop

for (i in 1:HardStop) {

dNdError <- (CostFunction(N + h,w,p) - CostFunction(N,w,p)) / h

N <- N - dNdError*LearningRate

ChiLimit <- qchisq(0.05, df = 5, lower = FALSE)

new_power <- pchisq(ChiLimit, df = 5, ncp = (w^2)*N, lower = FALSE)

if(round(power,6) >= round(new_power,6)) {

cat(paste0("Found in ",i," Iterations\n"))

cat(paste0(" Power: ",round(power,2),"\n"))

cat(paste0(" N: ",round(N)))

break();

}

power <- new_power

i <- i +1

}

}

set.seed(22)

SampleSize(0.04,1800,0.8)

SampleSize(0.04,1800,0.9)

As seen above, after different iterations of N, we obtained a recommended sample of 8.017 and 10.293 for 0.8 and 0.9 Power values, respectively.

如上所示,在N的不同迭代之后,我们分别针对0.8和0.9的Power值获得了推荐的样本8.017和10.293。

Let us repeat the experiment from scratch and see which results we get for these new sample size of 8.017 suggested by aiming a commonly used Power of 0.8.

让我们从头开始重复该实验,并查看针对这些新的8.017样本大小(通过将常用功效设定为0.8)所获得的结果。

start_time <- Sys.time()# Let's roll some dices

set.seed(11) # this is for result replication

Dices <- DiceRolling(8017) # We expect 80% Power

t(table(Dices))# We generate our contigency table

Observed <- table(Dices)# We generate our expected frequencies table

Expected <- Observed

Expected[,1] <- (sum(Observed[,1])/nrow(Observed))

Expected[,2] <- sum(Observed[,2])/nrow(Observed)# We calculate our X^2 score

Chi <- sum((Expected-Observed)^2/Expected)

cat("Chi-Square Score:",Chi,"\n\n")# Lest's resample our data 15.000 times

permutation <- Dices

set.seed(20)

permutation <- Simulation(Dices,15000)Higher <- nrow(permutation[which(permutation$ChiSq >= Chi),])

Total <- nrow(permutation)

prob <- Higher/Total

cat(paste("Total Number of Permutations:",Total,"\n"))

cat(paste(" - Total Number of Chi-Squared Values equal to or higher than",round(Chi,2),":",Higher,"\n"))

cat(paste(" - Percentage of times it was equal to or higher (",Higher,"/",Total,"): ",round(prob*100,3),"% (P-Value)\n\n",sep=""))# Lets replicate this new experiment (8017 throws of a biased dice) 20k times

set.seed(20)

Rolls <- MultipleDiceRolling(10000,8017)

Power <- length(which(Rolls <= 0.05))/length(Rolls)

cat(paste(round(Power*100,3),"% of the times (",length(which(Rolls <= 0.05)),"/",length(Rolls),")",sep=""))end_time <- Sys.time()

end_time - start_time

最后的想法 (Final Thoughts)

As expected by our new experiment design of sample size equal to 8017, we were able to reduce our P-Value to 1.9%.

正如我们新的样本量等于8017的实验设计所预期的那样,我们能够将P值降低到1.9%。

Additionally, we observe a Statistical Power equivalent to 0.79 (very near our goal), which implies we were able to reduce our Type II Error (non-rejection of our false null hypothesis) to just 21%!

此外,我们观察到的统计功效等于0.79(非常接近我们的目标),这意味着我们能够将II型错误(不拒绝错误的虚假假设)降低到21%!

This allows us to conclude with 95% confidence (in reality 98.1%) that there is, as we always knew, a statistically significant relationship between Landing Version and Signups. Now we need to test, with a given confidence level, which version was the higher performer; this will be covered in a similar future post.

这使我们能够以95%的信心(实际上是98.1%)得出结论,正如我们一直知道的那样,着陆版本和注册之间存在统计上显着的关系。 现在我们需要在给定的置信度下测试哪个版本的性能更高; 这将在以后的类似文章中介绍。

If you have any questions or comments, do not hesitate to post them below.

如果您有任何问题或意见,请随时在下面发布。

翻译自: https://towardsdatascience.com/intuitive-simulation-of-a-b-testing-191698575235

傅里叶变换 直观

2743

2743

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?