To many people’s dismay, there is still a huge wealth of paper documents floating around out there in the world. Tucked into corner drawers, stashed in filing cabinets, overflowing from cubicle shelves: these are a headache to keep track of, keep updated, and simply store. What if there were a system that could scan these documents, generate plain text files from their contents, and automatically categorize them into high-level topics? Well, the technology to do all of this exists; it’s simply a matter of stitching the pieces together and getting them to work as a cohesive system, which is what we’ll go through in this article. The main technologies used will be OCR (Optical Character Recognition) and topic modeling. Let’s get started!

Collecting Data

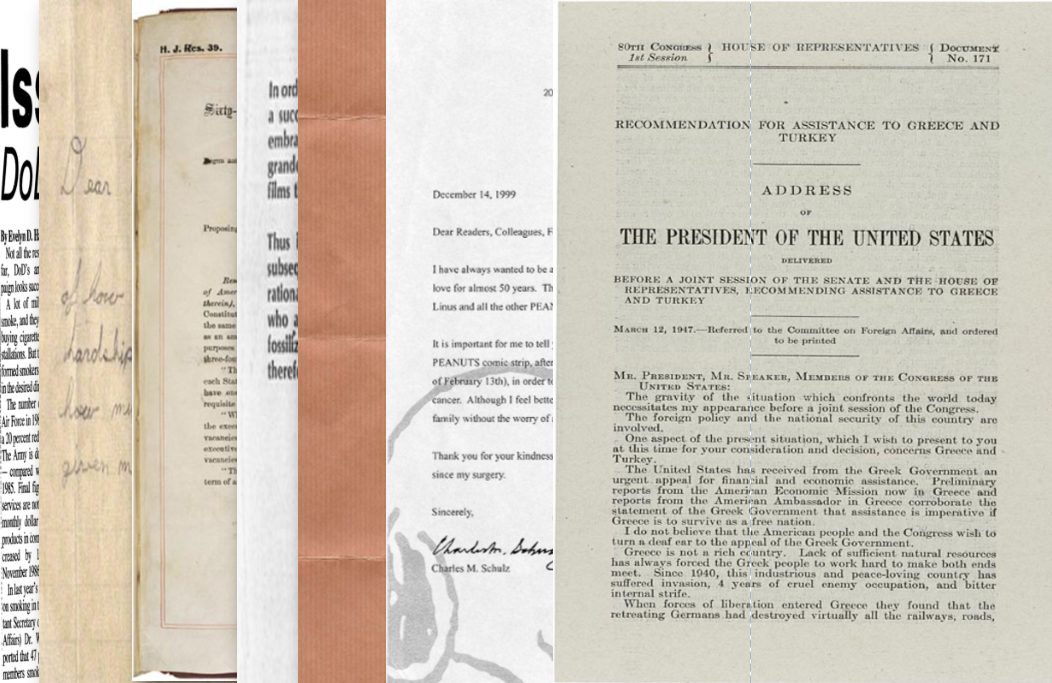

The first thing we’re going to do is create a simple dataset so that we can test each portion of our workflow and make sure it’s doing what it’s supposed to. Ideally, our dataset would contain scanned documents spanning a range of legibility levels and time periods, each labeled with the high-level topic it belongs to. I couldn’t locate a dataset with these exact specifications, so I got to work building my own. The high-level topics I decided on were government, letters, smoking, and patents. Random? Well, these were mainly chosen because a good variety of scanned documents was available for each of these areas. The wonderful sources below were used to extract the scanned documents for each topic:

Government/Historical: OurDocuments

Letters: LettersofNote

Patents: The Portal to Texas History (University of North Texas)

Smoking: Tobacco 800 Dataset

From each of these sources I picked 20 or so documents that were a good size and legible to me, and put them into individual folders named after their topic.

After almost a full day of searching for and cataloging all the images, I resized them all to 600x800 and converted them into .PNG format. The finished dataset is available for download here.

The resizing and conversion step needs only a simple script.
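
Here is a minimal sketch of such a script. It assumes Pillow is installed and that the images sit directly inside one folder. The function and constant names (`resize_and_convert`, `find_images`, `png_target`, `IMAGE_SUFFIXES`) are hypothetical and not taken from the original post. Note that a plain `resize` to 600x800 does not preserve the aspect ratio.

```python
from __future__ import annotations

from pathlib import Path

# Extensions treated as scanned images (an assumption; extend as needed).
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".tif", ".tiff", ".bmp", ".gif"}


def png_target(src: Path, out_dir: Path) -> Path:
    """Map an input image path to a .png path in out_dir with the same stem."""
    return out_dir / (src.stem + ".png")


def find_images(in_dir: Path) -> list[Path]:
    """Return the image files directly inside in_dir, sorted for stable output."""
    return sorted(
        p for p in in_dir.iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )


def resize_and_convert(in_dir: str, out_dir: str, size=(600, 800)) -> list[Path]:
    """Resize every image in in_dir to size (width, height) and save it as PNG."""
    # Pillow is imported here so the path helpers above work without it.
    from PIL import Image

    dst_dir = Path(out_dir)
    dst_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for src in find_images(Path(in_dir)):
        target = png_target(src, dst_dir)
        with Image.open(src) as img:
            # RGB avoids PNG-save problems with CMYK scans; resize ignores aspect ratio.
            img.convert("RGB").resize(size).save(target, format="PNG")
        written.append(target)
    return written
```

For example, `resize_and_convert("raw/patents", "dataset/patents")` would write one 600x800 PNG per source image into the output folder.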

Building the OCR Pipeline

Optical Character Recognition is the process of extracting written text from images. This is usually done with machine learning models, most often through pipelines incorporating convolutional neural networks. While we could train a custom OCR model for our application, doing so would require far more training data and computational resources. Instead, we will use the fantastic Microsoft Computer Vision API, which includes a module specifically for OCR. You will need to register for a free-tier account (sufficient for document scanning). The API call consumes an image (we pass it in as a PIL image) and returns several pieces of information, including the location and orientation of the text in the image as well as the text itself. A function for this step takes in a list of PIL images and outputs an equal-sized list of extracted texts.
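
Here is a minimal sketch of that function. It calls the service's REST interface using only the standard library. The endpoint, the key, and the `v3.2/ocr` path are placeholders or assumptions to check against your own Azure resource. The JSON layout assumed here (`regions`, then `lines`, then `words`, each word carrying a `text` field) is that of the legacy OCR operation. All function names are hypothetical.

```python
import io
import json
import urllib.request

# Hypothetical placeholders: substitute your own resource endpoint and key.
ENDPOINT = "https://<your-resource>.cognitiveservices.azure.com"
SUBSCRIPTION_KEY = "<your-key>"
# Assumption: the legacy v3.2 OCR operation; check what your resource supports.
OCR_URL = ENDPOINT + "/vision/v3.2/ocr?language=unk&detectOrientation=true"


def ocr_result_to_text(result: dict) -> str:
    """Flatten the OCR JSON (regions -> lines -> words) into plain text."""
    lines = []
    for region in result.get("regions", []):
        for line in region.get("lines", []):
            lines.append(" ".join(word["text"] for word in line.get("words", [])))
    return "\n".join(lines)


def ocr_image(image) -> str:
    """Send one PIL image to the OCR endpoint and return its text."""
    buf = io.BytesIO()
    image.save(buf, format="PNG")  # serialize the PIL image to PNG bytes
    request = urllib.request.Request(
        OCR_URL,
        data=buf.getvalue(),
        headers={
            "Ocp-Apim-Subscription-Key": SUBSCRIPTION_KEY,
            "Content-Type": "application/octet-stream",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return ocr_result_to_text(json.load(response))


def extract_texts(images) -> list:
    """Return one extracted string per input image, in the same order."""
    return [ocr_image(img) for img in images]
```

Keeping the JSON parsing in its own function makes it easy to test without network access. It also leaves room to later swap the one-request-per-image loop for batched calls.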

Post-processing

In some cases we might want to end the workflow here. So instead of just holding the extracted text in memory as one giant list, we can also write each extracted text out to its own .txt file with the same name as the original input file. While Microsoft’s OCR technology is good, it occasionally makes mistakes. We can mitigate some of these using the SpellChecker module. The script for this step does the following:

- accepts an input and an output folder;
- reads in all the scanned documents in the input folder and runs them through our OCR function;
- spell-checks the result and corrects misspelled words;
- writes the raw .txt files into the output folder.
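
Here is a sketch of the spell-check and write-out steps; the OCR call is left out. It assumes the checker object behaves like `SpellChecker` from the pyspellchecker package, exposing `unknown()` and `correction()`. The names `spell_correct` and `write_texts` are hypothetical. Only ASCII letter runs are checked, which is a simplifying assumption. A dictionary-based checker will also "correct" legitimate proper nouns it does not know.

```python
import re
from pathlib import Path

# Only runs of ASCII letters are spell-checked (a simplifying assumption).
WORD_RE = re.compile(r"[A-Za-z]+")


def spell_correct(text, checker):
    """Replace words the checker doesn't recognize with its best correction."""
    def fix(match):
        word = match.group(0)
        if not checker.unknown([word]):
            return word                      # known word: leave it alone
        guess = checker.correction(word)
        if not guess:                        # correction() may return None
            return word
        # Corrections come back lowercase; restore a leading capital.
        return guess[0].upper() + guess[1:] if word[0].isupper() else guess
    return WORD_RE.sub(fix, text)


def write_texts(names, texts, out_dir):
    """Write each text to out_dir/<stem>.txt, mirroring the input file names."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    paths = []
    for name, text in zip(names, texts):
        path = out / (Path(name).stem + ".txt")
        path.write_text(text, encoding="utf-8")
        paths.append(path)
    return paths
```

With pyspellchecker installed, this would be called as `spell_correct(text, SpellChecker())` after `from spellchecker import SpellChecker`.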

Preparing Text for Topic Modeling

If our set of scanned documents is large enough, writing them all into one big folder can make them hard to sort through. The documents likely already have some kind of implicit grouping, especially if they came from something like a filing cabinet. If we have a rough idea of how many different “types” or topics of documents we have, we can use topic modeling to identify these groups automatically. This gives us the infrastructure to split the text identified by OCR into individual folders based on document content. The topic model we will use is LDA, short for Latent Dirichlet Allocation, and there’s a great introduction to this type of model here. Running this model requires a bit more pre-processing and organizing of our data. To keep our scripts from getting too long and congested, we will assume the scanned documents have already been read and converted to .txt files using the workflow above. The topic model will then read in these .txt files, classify them into however many topics we specify, and place them into the appropriate folders.
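
For reference, this is the standard generative story behind LDA. It uses $K$ topics (the count we specify), $D$ documents, and $N_d$ words in document $d$. Each topic $k$ is a distribution $\phi_k$ over words, and each document mixes topics in proportions $\theta_d$:

$$
\begin{aligned}
\phi_k &\sim \operatorname{Dirichlet}(\beta), && k = 1,\dots,K \\
\theta_d &\sim \operatorname{Dirichlet}(\alpha), && d = 1,\dots,D \\
z_{d,n} &\sim \operatorname{Categorical}(\theta_d), && n = 1,\dots,N_d \\
w_{d,n} &\sim \operatorname{Categorical}(\phi_{z_{d,n}})
\end{aligned}
$$

One simple way to categorize a document is then to pick the topic with the largest share in its inferred $\theta_d$.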

We’ll start off with a simple function that reads all the .txt files in our output folder into a list of (filename, text) tuples. This helps us keep track of the original filenames after we categorize the documents into topics.
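
Here is a minimal sketch. It assumes the .txt files sit directly in one folder and are UTF-8 encoded; undecodable bytes are replaced rather than raising an error. `read_text_files` is a hypothetical name.

```python
from pathlib import Path


def read_text_files(folder):
    """Return (filename, text) tuples for every .txt file in folder, sorted by name."""
    return [
        # errors="replace" keeps one badly encoded file from stopping the whole run.
        (path.name, path.read_text(encoding="utf-8", errors="replace"))
        for path in sorted(Path(folder).glob("*.txt"))
    ]
```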

Next, we need to make sure that all useless words (ones that don’t help us distinguish the topic of a particular document) are removed. We will do this using three different methods:

- Remove stopwords

- Strip tags, punctuation, numbers, and multiple whitespaces

- TF-IDF filtering
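
To make the three steps concrete, here is a small standard-library illustration of what they do; it is not how Gensim implements them. The stopword list is a toy assumption. The TF-IDF weight is the textbook tf * log(N / df), so a word that appears in every document gets weight 0 and is dropped. Gensim’s `TfidfModel` uses different defaults (it normalizes document vectors, for one), so a threshold tuned here will not carry over.

```python
import math
import re
from collections import Counter

# A toy stopword list for illustration; real pipelines use a full list.
STOPWORDS = {"a", "an", "and", "the", "of", "to", "in", "is", "was", "for", "on", "we"}


def clean(text):
    """Strip tags, punctuation and digits, collapse whitespace, drop stopwords."""
    text = re.sub(r"<[^>]+>", " ", text)        # strip HTML/XML-style tags
    text = re.sub(r"[^\w\s]|\d|_", " ", text)    # strip punctuation and numbers
    tokens = text.lower().split()                # split() also collapses whitespace
    return [t for t in tokens if t not in STOPWORDS]


def tfidf_filter(docs, threshold):
    """Keep only tokens whose TF-IDF weight in their document reaches threshold."""
    n_docs = len(docs)
    df = Counter(term for doc in docs for term in set(doc))  # document frequency
    filtered = []
    for doc in docs:
        tf = Counter(doc)
        weights = {t: (c / len(doc)) * math.log(n_docs / df[t]) for t, c in tf.items()}
        filtered.append([t for t in doc if weights[t] >= threshold])
    return filtered
```

For instance, `clean("<p>The Treaty of 1783, signed!</p>")` yields `["treaty", "signed"]`.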

To achieve all of this (and our topic model), we will use the Gensim package. A script built on Gensim runs the necessary pre-processing steps on the list of texts (the output of the function above) and trains an LDA model.
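
Here is a sketch of that script, assuming a recent Gensim release. The TF-IDF threshold, the `filter_extremes` bounds, the pass count, and the seed are illustrative guesses rather than values from the original post. `train_lda` is a hypothetical name. Gensim is imported inside the function so the module still loads where Gensim isn’t installed. The function also returns its tokenizer, because new documents must be cleaned exactly the way the training documents were.

```python
def train_lda(docs, num_topics, tfidf_threshold=0.03, passes=10, seed=42):
    """Train LDA on (filename, text) tuples; return (lda, dictionary, tokenize)."""
    # Imported here so the module loads even where Gensim isn't installed.
    from gensim import corpora, models
    from gensim.parsing.preprocessing import (
        preprocess_string, remove_stopwords, strip_multiple_whitespaces,
        strip_numeric, strip_punctuation, strip_tags,
    )

    # Lowercase, strip tags/punctuation/numbers/extra whitespace, drop stopwords.
    filters = [lambda s: s.lower(), strip_tags, strip_punctuation,
               strip_numeric, strip_multiple_whitespaces, remove_stopwords]

    def tokenize(text):
        return preprocess_string(text, filters)

    token_lists = [tokenize(text) for _, text in docs]
    dictionary = corpora.Dictionary(token_lists)
    # Drop words found in fewer than 2 documents or in more than half of them.
    dictionary.filter_extremes(no_below=2, no_above=0.5)
    corpus = [dictionary.doc2bow(tokens) for tokens in token_lists]

    # TF-IDF filtering: keep only terms whose weight in a document reaches the
    # threshold. Zero-weight terms are absent from tfidf[bow], so they drop too.
    tfidf = models.TfidfModel(corpus)
    filtered = []
    for bow in corpus:
        keep = {term_id for term_id, weight in tfidf[bow] if weight >= tfidf_threshold}
        filtered.append([(term_id, count) for term_id, count in bow if term_id in keep])

    lda = models.LdaModel(corpus=filtered, id2word=dictionary,
                          num_topics=num_topics, passes=passes, random_state=seed)
    return lda, dictionary, tokenize
```

A fixed `random_state` makes runs repeatable, which helps when comparing topic counts on the same folder of documents.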

Using the Topic Model to Categorize Documents

Once we have our LDA model trained, we can use it to categorize our set of training documents (and future documents that might come in) into topics and then place them into the appropriate folders.

Applying the trained LDA model to a new text string requires some fiddling (in fact, I needed some help figuring it out myself; thank god for Stack Overflow), but all of the complication can be contained in a single function.
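
Here is a minimal sketch of such a function. It relies on only two Gensim methods, `Dictionary.doc2bow` and `LdaModel.get_document_topics`, and takes the model, dictionary, and tokenizer as arguments. `classify_text` and its parameters are hypothetical. The key detail is that the new text must be tokenized with the same filters used during training. Otherwise its words won’t map onto the model’s vocabulary.

```python
def classify_text(text, lda, dictionary, tokenize):
    """Return (topic_index, probability) for the most likely topic of text.

    lda and dictionary are a trained gensim LdaModel and Dictionary; tokenize
    must apply the same cleaning that was used during training.
    """
    bow = dictionary.doc2bow(tokenize(text))
    # minimum_probability=0.0 requests the full distribution rather than only
    # the topics above Gensim's default cutoff.
    topics = lda.get_document_topics(bow, minimum_probability=0.0)
    if not topics:
        raise ValueError("model returned no topic distribution")
    return max(topics, key=lambda pair: pair[1])
```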

Finally, we’ll need another method to get a readable name for a topic from its topic index.
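
LDA topics don’t come with names. A common workaround, sketched here as an assumption rather than the original method, is to label each topic by its highest-weighted words via `LdaModel.show_topic`. `topic_name` is a hypothetical helper. The resulting label can double as a folder name.

```python
def topic_name(lda, topic_index, topn=3):
    """Label a topic by its top words, e.g. 'patent_invention_claim'."""
    # show_topic returns (word, weight) pairs sorted by weight, highest first.
    words = [word for word, _ in lda.show_topic(topic_index, topn=topn)]
    return "_".join(words)
```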

Putting it All Together

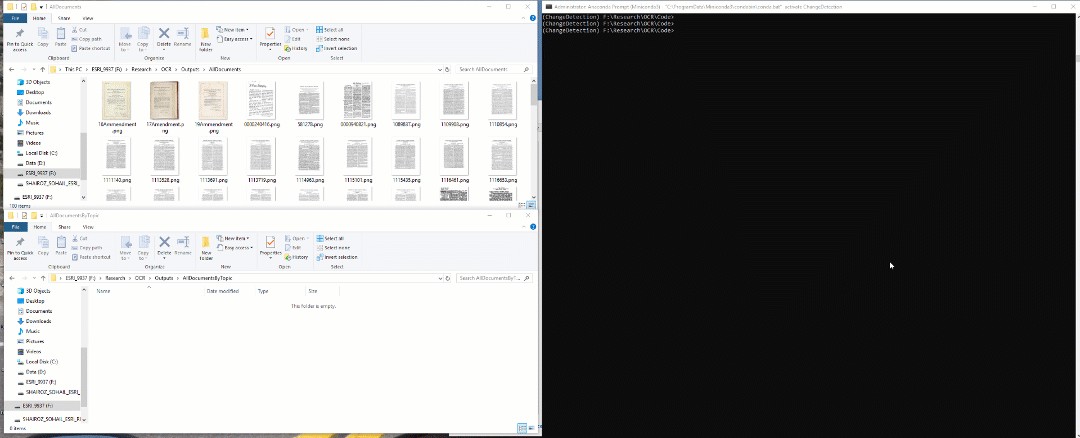

Now, we can stick all of the functions we wrote above into a single script that accepts an input folder, output folder, and topic count. The script will read all the scanned document images in the input folder, write them into .txt files, build an LDA model to find high level topics in the documents, and organize the outputted .txt files into folders based on document topic.
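
The OCR and topic-modeling stages are covered in the previous sections. This sketch therefore shows only the two pieces specific to the combined script:

- reading the three command-line inputs;
- moving each .txt file into a folder named after its topic.

The argument names and `organize_by_topic` are hypothetical.

```python
import argparse
import shutil
from pathlib import Path


def parse_args(argv=None):
    """Parse the input folder, output folder, and topic count."""
    parser = argparse.ArgumentParser(
        description="OCR scanned documents and sort the text files by topic.")
    parser.add_argument("input_dir", help="folder of scanned document images")
    parser.add_argument("output_dir", help="folder for the .txt files and topic folders")
    parser.add_argument("num_topics", type=int, help="number of LDA topics to fit")
    return parser.parse_args(argv)


def organize_by_topic(assignments, text_dir, output_dir):
    """Move each .txt file into output_dir/<topic name>/.

    assignments maps a .txt filename to the topic name chosen for it.
    """
    moved = []
    for filename, topic in assignments.items():
        target_dir = Path(output_dir) / topic
        target_dir.mkdir(parents=True, exist_ok=True)
        target = target_dir / filename
        shutil.move(str(Path(text_dir) / filename), str(target))
        moved.append(target)
    return moved
```

A `main()` would then call `parse_args()`, run the OCR and topic-model stages, build a `{filename: topic_name}` mapping, and finish with `organize_by_topic(...)`.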

Demo

To prove all of the above wasn’t just long-winded gibberish, here’s a video demo of the system. There are many things that could be improved, most notably:

- keeping track of line breaks from the scanned documents;
- handling special characters and languages other than English;
- sending requests to the Computer Vision API in batches instead of one by one.

Still, we have a solid foundation to build improvements on. For more information, check out the associated GitHub repo.

Thanks for reading!
