Before October 31, 2017, the date eager execution was introduced to TensorFlow (TF), TF was fast. Yes, TF used to be faster. Hear me out: TF revelled in the speed of lazy execution, in which a computational graph is created whose nodes are operations and whose edges are tensors. Nodes are computed only when required: a node is executed only when that node, or another node depending on it, is invoked for execution [1]. Traditionally, lazy execution was known as dataflow programming, and it is used particularly in parallel computing. TF leveraged this by running computational graphs within a TF session, which can distribute execution of the graph across various compute resources.
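To make the "computed only when required" idea concrete, here is a toy sketch in plain Python. It is not how TensorFlow implements graphs; the `Node` class, its `run` method and the `executed` log are hypothetical names invented for this illustration.

```python
class Node:
    """One operation in a toy dataflow graph; its inputs are other nodes."""

    def __init__(self, name, op, *inputs):
        self.name, self.op, self.inputs = name, op, inputs

    def run(self, executed):
        # Evaluate the dependencies first, then this node, logging what ran.
        args = [node.run(executed) for node in self.inputs]
        executed.append(self.name)
        return self.op(*args)


# Building the graph computes nothing yet.
a = Node("a", lambda: 2)
b = Node("b", lambda: 3)
total = Node("total", lambda x, y: x + y, a, b)
unused = Node("unused", lambda x: x * 100, a)  # defined but never requested

executed = []
result = total.run(executed)
print(result)    # 5
print(executed)  # ['a', 'b', 'total']
```

Only the nodes that `total` depends on are executed; `unused` is part of the graph but never runs because nothing asks for its value.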

In TF’s pursuit of being more intuitive and more research-friendly, eager execution was introduced to support fast debugging with immediate runtime errors. For example, the Python debugger, pdb, can be used to print and change the values of tensors.

We will now jump into the implementation of eager execution provided by the TensorFlow guide, which may give you a greater appreciation for it despite the speed drawback.

First, import the necessary libraries and ensure that eager mode is enabled:

    import os
    import tensorflow as tf
    import cProfile

    tf.executing_eagerly()  # returns True when eager mode is on (the TF2 default)

A key component of machine learning is computing gradients for backpropagation. With eager execution in TF2, tf.GradientTape can be used to trace operations so that gradients can be computed later. GradientTape records the forward operations onto a tape and then runs the tape backwards to compute the gradient. In the training example below, let us classify the standard MNIST handwritten digits:

    # Dataset setup
    (mnist_images, mnist_labels), _ = tf.keras.datasets.mnist.load_data()

    dataset = tf.data.Dataset.from_tensor_slices(
        (tf.cast(mnist_images[..., tf.newaxis] / 255, tf.float32),
         tf.cast(mnist_labels, tf.int64)))
    dataset = dataset.shuffle(1000).batch(32)

Now, we will create a simple neural network:

    mnist_model = tf.keras.Sequential([
        tf.keras.layers.Conv2D(16, [3, 3], activation='relu',
                               input_shape=(None, None, 1)),
        tf.keras.layers.Conv2D(16, [3, 3], activation='relu'),
        tf.keras.layers.GlobalAveragePooling2D(),
        tf.keras.layers.Dense(10)
    ])

Next, define the optimizer and loss function:

    optimizer = tf.keras.optimizers.Adam()
    loss_object = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True)

    loss_history = []

We define a function to carry out a single training step. In the main training loop, we can execute this function within the inner batch loop.

    def train_step(images, labels):
        with tf.GradientTape() as tape:
            logits = mnist_model(images, training=True)
            # Check the shape of the output.
            tf.debugging.assert_equal(logits.shape, (32, 10))
            loss_value = loss_object(labels, logits)

        loss_history.append(loss_value.numpy().mean())
        grads = tape.gradient(loss_value, mnist_model.trainable_variables)
        optimizer.apply_gradients(zip(grads, mnist_model.trainable_variables))

The main training loop:

    def train(epochs):
        for epoch in range(epochs):
            for (batch, (images, labels)) in enumerate(dataset):
                train_step(images, labels)
            print('Epoch {} finished'.format(epoch))

This is a simple example of how eager execution comes into play during the training process.
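The tape that train_step relies on can be pictured with a toy sketch in plain Python. This is only an illustration of "record forward, replay backwards" and is not TensorFlow's implementation; the `Tape` and `Var` classes are hypothetical names, and only addition and multiplication are supported.

```python
class Tape:
    """Toy gradient tape: records each forward op, then walks them backwards."""

    def __init__(self):
        # Each record: (output Var, [(input Var, local derivative), ...])
        self.records = []

    def gradient(self, target, sources):
        grads = {id(target): 1.0}
        # Run the tape backwards, applying the chain rule at every record.
        for output, inputs in reversed(self.records):
            upstream = grads.get(id(output), 0.0)
            for var, local in inputs:
                grads[id(var)] = grads.get(id(var), 0.0) + upstream * local
        return [grads.get(id(s), 0.0) for s in sources]


class Var:
    """A scalar value whose operations are written onto a tape."""

    def __init__(self, value, tape):
        self.value = value
        self.tape = tape

    def __mul__(self, other):
        out = Var(self.value * other.value, self.tape)
        self.tape.records.append((out, [(self, other.value), (other, self.value)]))
        return out

    def __add__(self, other):
        out = Var(self.value + other.value, self.tape)
        self.tape.records.append((out, [(self, 1.0), (other, 1.0)]))
        return out


tape = Tape()
w = Var(3.0, tape)
x = Var(2.0, tape)
loss = w * w + w * x  # forward pass, recorded op by op
dw, dx = tape.gradient(loss, [w, x])
print(dw, dx)  # 8.0 3.0
```

Here the loss is w² + wx, so the gradient with respect to w is 2w + x = 8 and with respect to x is w = 3, which matches what the backward walk over the tape produces.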

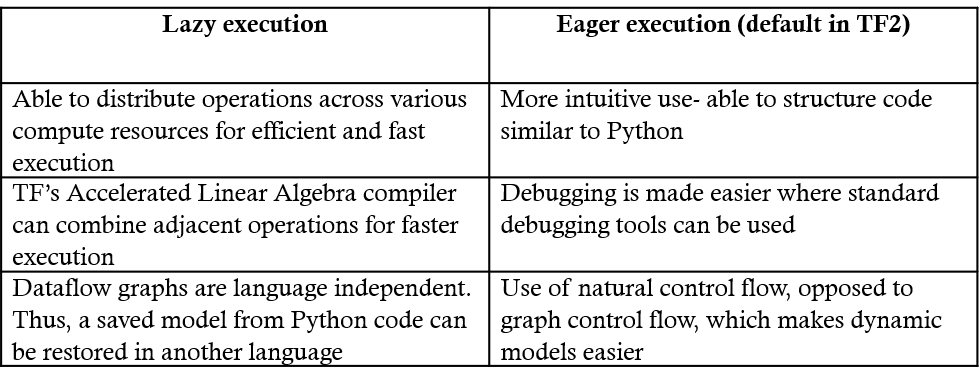

Both lazy and eager execution have their own set of advantages as summarised below:

So, can we get the best of both modes? TF2 introduced the tf.function API, which marries the strengths of lazy and eager execution.

Lazy execution is made possible in TF2 via AutoGraph, a library that tf.function uses by default. In TF1, the norm was to instantiate a graph object and execute it in a session. TF2 replaces both with tf.function.

To define a computation graph in TF2, you simply decorate a function with @tf.function. The conversion of the function to a graph is taken care of by AutoGraph. Let’s say that we want to create a graph for the training step:

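    @tf.function
    def train_step(images, labels):
        with tf.GradientTape() as tape:
            logits = mnist_model(images, training=True)
            # Check the shape of the output.
            tf.debugging.assert_equal(logits.shape, (32, 10))
            loss_value = loss_object(labels, logits)

        grads = tape.gradient(loss_value, mnist_model.trainable_variables)
        optimizer.apply_gradients(zip(grads, mnist_model.trainable_variables))
        # .numpy() is unavailable on symbolic tensors inside a graph, so return
        # the loss and let the caller append it to loss_history.
        return loss_value

This means that train_step has been wrapped in a python.eager.def_function.Function object. The graph is built the first time train_step is called. Note that the loss is returned here rather than appended inside the function: loss_value.numpy() cannot be called while the graph is being traced, so the training loop should record it instead, for example with loss_history.append(train_step(images, labels).numpy().mean()).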
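The "trace once, then reuse the graph" behaviour can be sketched with a toy decorator in plain Python. This is a deliberately simplified stand-in and not how tf.function or AutoGraph work internally; `Sym` and `toy_function` are hypothetical names, and the toy ignores input signatures and supports only `+` and `*`.

```python
class Sym:
    """Symbolic value: remembers how to compute itself from the call arguments."""

    def __init__(self, compute):
        self.compute = compute  # callable: tuple of concrete args -> value

    @staticmethod
    def lift(value):
        # Wrap plain Python numbers so they can take part in the graph.
        return value if isinstance(value, Sym) else Sym(lambda args: value)

    def __add__(self, other):
        other = Sym.lift(other)
        return Sym(lambda args: self.compute(args) + other.compute(args))

    def __mul__(self, other):
        other = Sym.lift(other)
        return Sym(lambda args: self.compute(args) * other.compute(args))


def toy_function(py_func):
    """Toy stand-in for a graph-building decorator: trace once, then replay."""
    graph = None

    def wrapper(*args):
        nonlocal graph
        if graph is None:
            # Trace: run the Python body once with symbolic placeholders.
            placeholders = [Sym(lambda a, i=i: a[i]) for i in range(len(args))]
            graph = py_func(*placeholders)
        # Replay: evaluate the recorded graph; the Python body is not re-run.
        return graph.compute(args)

    return wrapper


trace_log = []


@toy_function
def affine(x, y):
    trace_log.append("traced")  # Python side effect: happens only while tracing
    return x * 2 + y


results = [affine(1, 10), affine(3, 4)]
print(results)    # [12, 10]
print(trace_log)  # ['traced']
```

The function body runs only during the first call, so the Python-side `append` happens once even though the function is called twice. The same reasoning is why Python-side bookkeeping such as appending to a list is usually kept outside a graph-compiled training step.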

TensorFlow’s eager mode can be seen as a step towards getting more people involved in using TensorFlow, and thereby in deep learning. The more intuitive, Pythonic way of implementing things in eager mode should encourage many more coders to explore deep learning. Alex Passos, a software engineer on the TensorFlow eager execution team, has shared that he sees eager execution as a core part of the TensorFlow experience. Having used TF2’s eager mode in my work as a machine learning engineer at Datature, I definitely appreciate that eager mode makes more Pythonic sense and makes debugging a more enjoyable process.

In a nutshell, we learnt about the benefits of both lazy and eager execution. We also explored the use of tf.function in TF2, which brings together the best of both execution modes. I have shared more resources below for understanding eager execution and AutoGraph in TF2 further.

I would like to acknowledge the following resources which have inspired me to write this article:

TF guide on Eager mode: https://www.tensorflow.org/guide/eager

Code from https://github.com/tensorflow/docs/blob/master/site/en/guide/eager.ipynb

tf.function: https://www.tensorflow.org/guide/function

Eager mode in TF1: https://ai.googleblog.com/2017/10/eager-execution-imperative-define-by.html

Accelerated Linear Algebra: https://www.tensorflow.org/xla

Translated from: https://medium.com/@arun_raja/eager-execution-in-tensorflow-2-0-ad82dbd5b8b1
