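The problem this time came from not being familiar enough with a function, but with an analysis tool it was quick to track down (I recommend Fiddler, an excellent packet capture tool).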

The main text follows:

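While scraping data from an app (all of the app's data is fetched over HTTP), I analyzed the request in Fiddler and copied the request headers into my Python program to request the data.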

The code is as follows:

import requests

url = 'http://xxx?startDate=2017-10-19&endDate=2017-10-19&pageIndex=1&limit=50&sort=datetime&order=desc'
# Request headers copied from the request captured in Fiddler
headers = {
    "Host": "xxx.com",
    "Connection": "keep-alive",
    "Accept": "application/json, text/javascript, */*; q=0.01",
    "User-Agent": "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/29.0.1547.59 Safari/537.36",
    "X-Requested-With": "XMLHttpRequest",
    "Referer": "http://app.jg.eastmoney.com/html_Report/index.html",
    "Accept-Encoding": "gzip,deflate",
    "Accept-Language": "en-us,en",
    "Cookie": "xxx",
}

r = requests.get(url, headers)

print(r.text)

The request succeeded, but what came back was

{"Id":"6202c187-2fad-46e8-b4c6-b72ac8de0142","ReturnMsg":"加载失败!"}

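In other words, the request was detected as not being a normal one and was blocked (the ReturnMsg value means "Loading failed!").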

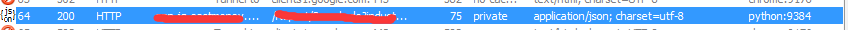

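Then I looked in Fiddler at the record of the request Python had just sent. (The two masked parts in the screenshot are the Host and the URL.)

When I opened the request details, the request headers had not been applied at all, and User-Agent read python-requests! (When the UA in the request headers identifies a Java or Python program, any site or app with even a little anti-scraping awareness will block it.)

The header details are as follows: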

GET http://xxx?istartDate=2017-10-19&endDate=2017-10-19&pageIndex=1&limit=50&sort=datetime&order=desc
&Host=xxx.com
&Connection=keep-alive
&Accept=application%2Fjson%2C+text%2Fjavascript%2C+%2A%2F%2A%3B+q%3D0.01
&User-Agent=Mozilla%2F5.0+%28Windows+NT+6.1%3B+WOW64%29+AppleWebKit%2F537.36+%28KHTML%2C+like+Gecko%29+Chrome%2F29.0.1547.59+Safari%2F537.36
&X-Requested-With=XMLHttpRequest
&Referer=xxx
&Accept-Encoding=gzip%2Cdeflate
&Accept-Language=en-us%2Cen
&Cookie=xxx
HTTP/1.1
Host: xxx.com
User-Agent: python-requests/2.18.4
Accept-Encoding: gzip, deflate
Accept: */*
Connection: keep-alive

HTTP/1.1 200 OK
Server: nginx/1.2.2
Date: Sat, 21 Oct 2017 06:07:21 GMT
Content-Type: application/json; charset=utf-8
Content-Length: 75
Connection: keep-alive
Cache-Control: private
X-AspNetMvc-Version: 5.2
X-AspNet-Version: 4.0.30319
X-Powered-By: ASP.NET

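At first I did not notice anything. Only after reading the request URL all the way through did I realize that the program had put my request headers into the URL as query parameters.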
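The percent-encoded values in the captured URL are what you get when a dict is encoded as a query string. A minimal sketch using only the standard library's urllib.parse.urlencode reproduces them. The dict is a subset of the headers above, the shortened URL is a placeholder, and joining with & is an assumption that matches what the capture shows rather than a statement about the library's internals:

```python
from urllib.parse import urlencode

# A subset of the header dict from the code above.
headers = {
    "Connection": "keep-alive",
    "Accept": "application/json, text/javascript, */*; q=0.01",
    "Accept-Encoding": "gzip,deflate",
}

# Encoding the dict as query parameters percent-escapes '/', ',', '*', ';'
# and '=', and turns spaces into '+', as seen in the captured URL.
query = urlencode(headers)

# Placeholder URL that already has a query string, like the real one.
url = "http://xxx?pageIndex=1&limit=50"
polluted_url = url + "&" + query
print(polluted_url)
```

Seeing %2F, %2C or + in places where a header value would normally appear is a quick hint that a dict went into the query string instead of the headers.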

So I had passed the header information to the wrong parameter of the requests function.

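I went back over how the headers parameter of the Requests library is used and found that one line of my code was wrong: when calling requests.get(), the keyword "headers=" has to be written out. The signature is requests.get(url, params=None, **kwargs), so a dict passed as the second positional argument is treated as params, that is, as URL query parameters.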
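To make the argument binding concrete, here is a small sketch that needs no network. The function get below is a hypothetical stand-in that only copies the parameter layout of requests.get (url, params=None, **kwargs); it is not the library's implementation:

```python
def get(url, params=None, **kwargs):
    """Stand-in with the same parameter layout as requests.get (no network)."""
    return {"url": url, "params": params, "kwargs": kwargs}


headers = {"User-Agent": "Mozilla/5.0"}

# Positional: the dict binds to `params`, so it would become query parameters.
positional = get("http://xxx", headers)

# Keyword: the dict travels in **kwargs under the name `headers`.
keyword = get("http://xxx", headers=headers)

print(positional["params"])
print(keyword["kwargs"])
```

Writing the keyword out every time, even for params, is a cheap habit that avoids this kind of silent mix-up.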

The change is as follows:

import requests

url = 'http://xxx?startDate=2017-10-19&endDate=2017-10-19&pageIndex=1&limit=50&sort=datetime&order=desc'
headers = {
    "Host": "xxx.com",
    "Connection": "keep-alive",
    "Accept": "application/json, text/javascript, */*; q=0.01",
    "User-Agent": "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/29.0.1547.59 Safari/537.36",
    "X-Requested-With": "XMLHttpRequest",
    "Referer": "http://app.jg.eastmoney.com/html_Report/index.html",
    "Accept-Encoding": "gzip,deflate",
    "Accept-Language": "en-us,en",
    "Cookie": "xxx",
}

# Pass the dict by keyword so it is sent as request headers, not as URL parameters
r = requests.get(url, headers=headers)

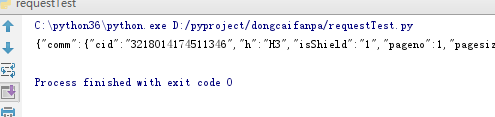

Then I checked the request in Fiddler again.

This time the request headers sent from Python were normal. The request details are as follows:

GET http://xxx?startDate=2017-10-19&endDate=2017-10-19&pageIndex=1&limit=50&sort=datetime&order=desc HTTP/1.1
User-Agent: Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/29.0.1547.59 Safari/537.36
Accept-Encoding: gzip,deflate
Accept: application/json, text/javascript, */*; q=0.01
Connection: keep-alive
Host: xxx.com
X-Requested-With: XMLHttpRequest
Referer: http://xxx
Accept-Language: en-us,en
Cookie: xxx

HTTP/1.1 200 OK
Server: nginx/1.2.2
Date: Sat, 21 Oct 2017 06:42:21 GMT
Content-Type: application/json; charset=utf-8
Content-Length: 75
Connection: keep-alive
Cache-Control: private
X-AspNetMvc-Version: 5.2
X-AspNet-Version: 4.0.30319
X-Powered-By: ASP.NET
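The same comparison can also be made locally, before anything goes over the wire, by building a prepared request and inspecting it. This is only a sketch: the URL and the shortened header dict are made up for illustration, and it assumes the requests package is installed.

```python
import requests

# Hypothetical URL and a shortened header dict, for illustration only.
url = "http://example.com/api?pageIndex=1&limit=50"
headers = {"User-Agent": "Mozilla/5.0", "X-Requested-With": "XMLHttpRequest"}

# Build the requests without sending them.
wrong = requests.Request("GET", url, params=headers).prepare()
right = requests.Request("GET", url, headers=headers).prepare()

print(wrong.url)            # header names show up in the query string
print(dict(wrong.headers))  # and the custom headers are missing
print(right.url)
print(dict(right.headers))
```

A bare Request(...).prepare() does not merge in the session defaults, so the default python-requests User-Agent that Fiddler showed will not appear here; the point is only where the dict ends up.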

Then I ran the Python program once more. The request went through, but the response was still

{"Id":"6202c187-2fad-46e8-b4c6-b72ac8de0142","ReturnMsg":"加载失败!"}

Cookies generally expire after a short time, so I updated the cookie, and then the request returned the data successfully.
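Both rejected responses came back with HTTP 200, so the status code alone does not tell you whether the data was actually returned. A minimal sketch of a body check follows. The helper name looks_blocked is hypothetical, and it assumes that successful responses do not carry this ReturnMsg value, which the capture above does not confirm:

```python
import json

# ReturnMsg value seen in the rejected responses above.
BLOCKED_MSG = "加载失败!"


def looks_blocked(body: str) -> bool:
    """Return True if the JSON body carries the failure message."""
    try:
        data = json.loads(body)
    except ValueError:
        return False  # not JSON at all; judge it some other way
    return isinstance(data, dict) and data.get("ReturnMsg") == BLOCKED_MSG


sample = '{"Id":"6202c187-2fad-46e8-b4c6-b72ac8de0142","ReturnMsg":"加载失败!"}'
print(looks_blocked(sample))
```

In the scraper this could be called on r.text, with a blocked result used as the cue to check the headers and refresh the cookie.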

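The thing to remember is that a scraper must have its headers set correctly. If this app's anti-scraping measures had included banning IPs, things could have become troublesome.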
