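I have recently been learning ELK, mainly for log collection, and read a lot of material along the way. This post records what I ended up with.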

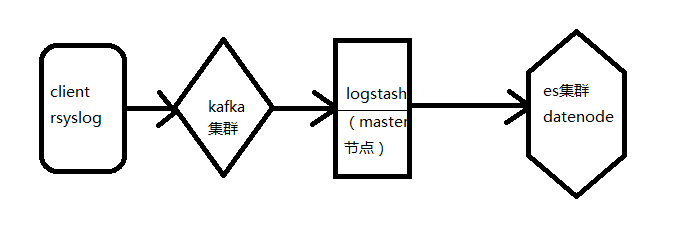

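Basic idea: rsyslog ships logs to a Kafka cluster, then Logstash pulls the logs out of Kafka and sends them to the data nodes of the ES cluster. (Note: Logstash runs on the ES master nodes.)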

1. Software versions used in this post:

rsyslog 8.14.0, kafka_2.11-0.9.0.1, elasticsearch 2.2.1, logstash 2.2.2, kibana 4.4.2

2. Installing rsyslog

rsyslog can send to Kafka only from v8.7.0 onward, so the stock rsyslog has to be upgraded. This example upgrades to v8.14.0.
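The install script below reads the current version into `old_rsyslog_ver` but never acts on it. As a rough sketch of how that value could gate the upgrade, the helper below compares version strings with GNU `sort -V`. The function names are my own, and stripping a trailing comma assumes the older `rsyslogd -version` output format (`rsyslogd 5.8.10, compiled with:`).

```shell
#!/usr/bin/env bash
# version_ge A B: succeeds when version A >= version B.
# Uses GNU sort -V (version sort), available in coreutils on CentOS/RHEL.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# needs_upgrade CURRENT: prints "yes" when CURRENT is older than 8.7.0,
# the first version the post names as supporting Kafka output.
needs_upgrade() {
    local current="${1%,}"   # drop a trailing comma if present
    if version_ge "$current" "8.7.0"; then
        echo "no"
    else
        echo "yes"
    fi
}

needs_upgrade "5.8.10,"   # prints yes
needs_upgrade "8.14.0"    # prints no
```

In the script this could be called as `needs_upgrade "$old_rsyslog_ver"` right after the version is read, skipping the build when the answer is "no".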

Install script: rsyslog_install.sh

WORK_DIR=$(pwd)

cd /etc/yum.repos.d

wget http://rpms.adiscon.com/v8-stable/rsyslog.repo -O rsyslog.repo

cd $WORK_DIR

mkdir rsyslog-install

cd rsyslog-install

# check rsyslog version

# rsyslog supports kafka from v8.7.0

#old_rsyslog_ver=$(syslogd -version |head -n 1 | awk '{print $2}')

old_rsyslog_ver=$(rsyslogd -version |head -n 1 | awk '{print $2}')

## install rsyslog dependency: libestr

yum install -y libestr-devel

## install rsyslog dependency: libee

yum install -y libee-devel

## install rsyslog dependency: json-c

yum install -y json-c-devel

## install rsyslog dependency: uuid

yum install -y libuuid-devel

## install rsyslog dependency: liblogging-stdlog

yum install -y liblogging-devel

## install rsyslog dependency: rst2man

yum install -y python-docutils

## install libcurl for omelasticsearch

yum install -y libcurl-devel

##install libgcrypt-devel

yum install -y libgcrypt-devel

##install libestr

yum install -y libestr

## install librdkafka for omkafka

wget https://github.com/edenhill/librdkafka/archive/0.8.5.tar.gz -O librdkafka-0.8.5.tar.gz

tar zxvf librdkafka-0.8.5.tar.gz

cd librdkafka-0.8.5

./configure

make

make install

cd ..

## install rsyslog

wget http://www.rsyslog.com/files/download/rsyslog/rsyslog-8.14.0.tar.gz -O rsyslog-8.14.0.tar.gz

tar zxvf rsyslog-8.14.0.tar.gz

export PKG_CONFIG_PATH=/usr/lib64/pkgconfig:/lib64/pkgconfig/

#old_executable_path=$(which syslogd)

old_executable_path=$(which rsyslogd)

executable_dir=$(dirname "$old_executable_path")

cd rsyslog-8.14.0

./configure --sbindir=$executable_dir --libdir=/usr/lib64 --enable-omkafka --enable-elasticsearch --enable-imfile --enable-impstats

make

make install

cp /usr/local/lib/librdkafka* /usr/lib64/

sed -i '/^*.emerg/d' /etc/rsyslog.conf

sed -i '/emerg/a*.emerg :omusrmsg:*' /etc/rsyslog.conf

sed -i '/^SYSLOGD_OPTIONS/d' /etc/sysconfig/rsyslog

echo 'SYSLOGD_OPTIONS=" "' >>/etc/sysconfig/rsyslog

[ ! -d /var/lib/rsyslog ] && mkdir /var/lib/rsyslog

After the upgrade, go to /etc/rsyslog.d/ and create the configuration file that sends logs to Kafka, rsyslog_kafka.conf:

## rsyslog v8 configuration file

module(load="imfile")

module(load="omkafka")

module(load="omelasticsearch")

#module(load="impstats" interval="600" severity="6" log.file="/var/lib/rsyslog/impstats.log" log.syslog="off" format="cee" resetCounters="on")

module(load="impstats" interval="600" severity="7" log.syslog="on" format="cee" resetCounters="on")

global (

maxMessageSize="32m"

workDirectory="/var/lib/rsyslog"

preserveFQDN="on"

action.reportSuspension="on"

)

main_queue (

queue.filename="mainQ"

queue.type="linkedlist"

queue.size="500000"

queue.timeoutenqueue="1000"

queue.maxfilesize="512M"

queue.maxdiskspace="10g"

queue.dequeuebatchsize="2000"

queue.workerthreads="4"

queue.workerthreadminimummessages="100000"

queue.discardseverity="3"

queue.checkpointinterval="10"

queue.timeoutshutdown="10"

queue.timeoutactioncompletion="10"

queue.saveonshutdown="on"

)

### INPUT FILE

input(type="imfile" File="/usr/local/nginx/logs/access1.log" Tag="nginx-log1" facility="local0" severity="info" persiststateinterval="5" readmode="2" addmetadata="on")

input(type="imfile" File="/usr/local/nginx/logs/access2.log" Tag="nginx-log2" facility="local0" severity="info" persiststateinterval="5" readmode="2" addmetadata="on")

## TEMPLATE

template(name="json_lines" type="list") {

constant(value="{")

constant(value="\\\\\"") property(name="hostname")

constant(value="\\\\\"") property(name="syslogtag")

constant(value="\\\\\"") property(name="msg")

constant(value="\\\\\"}")}

template( name="dynaFileRsyslog" type="string" string="/var/lib/rsyslog/%$year%/%$month%/%$day%_impstats.log" )

if ($syslogseverity == "7" ) then {

action( type="omfile" name="action_impstats-to-file" DynaFile="dynaFileRsyslog" FileCreateMode="0600")

}

if ($syslogtag startswith "nginx-") then {

action(

type="omkafka"

broker="yourdomain:9091,yourdomain:9092,yourdomain:9093"

topic="nginxlog"

partitions.auto="on"

confParam=["queue.buffering.max.messages=10000000","compression.codec=snappy","socket.timeout.ms=50","socket.keepalive.enable=true"]

action.ResumeInterval="10"

action.ResumeRetryCount="-1"

template="json_lines"

name="action_impstats-to-kafka"

queue.filename="action_impstats-to-kafka"

queue.size="1500"

queue.dequeuebatchsize="500"

)

}

## stop here so nginx logs are not also written to the local message files

if ($syslogtag startswith "nginx-") then stop

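Restart the rsyslogd service and it will start sending data to Kafka (Kafka must already be set up). Replace yourdomain with the domain name configured for the Kafka cluster.

3. Setting up the Kafka cluster

Because machines were scarce, the Kafka cluster here runs on a single server. With more hardware, spread it across several machines (we did painfully lose the Kafka machine once).

Extract kafka_2.11-0.9.0.1-1.tgz, then: mv kafka_2.11-0.9.0.1-1 kafka01; cp -a kafka01 kafka02; cp -a kafka01 kafka03

Edit zookeeper.properties and server.properties under config/ in each copy: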

| kafka01 | kafka02 | kafka03 |
| --- | --- | --- |
| zookeeper.properties: | zookeeper.properties: | zookeeper.properties: |
| maxClientCnxns=0 | maxClientCnxns=0 | maxClientCnxns=0 |
| tickTime=2000 | tickTime=2000 | tickTime=2000 |
| initLimit=10 | initLimit=10 | initLimit=10 |
| syncLimit=5 | syncLimit=5 | syncLimit=5 |
| dataDir=/var/elk/zookeeper/kafka01 | dataDir=/var/elk/zookeeper/kafka02 | dataDir=/var/elk/zookeeper/kafka03 |
| dataLogDir=/var/elk/zookeeper/log01 | dataLogDir=/var/elk/zookeeper/log02 | dataLogDir=/var/elk/zookeeper/log03 |
| clientPort=2181 | clientPort=2182 | clientPort=2183 |
| server.1=ip:3881:4881 | server.1=ip:3881:4881 | server.1=ip:3881:4881 |
| server.2=ip:3882:4882 | server.2=ip:3882:4882 | server.2=ip:3882:4882 |
| server.3=ip:3883:4883 | server.3=ip:3883:4883 | server.3=ip:3883:4883 |
| server.properties: | server.properties: | server.properties: |
| broker.id=1 | broker.id=2 | broker.id=3 |
| delete.topic.enable=true | delete.topic.enable=true | delete.topic.enable=true |
| listeners=PLAINTEXT://ip:9091 | listeners=PLAINTEXT://ip:9092 | listeners=PLAINTEXT://ip:9093 |
| port=9091 | port=9092 | port=9093 |
| host.name=ip | host.name=ip | host.name=ip |
| ### important: clients need this to connect when internal and external networks are separate | ### important: clients need this to connect when internal and external networks are separate | ### important: clients need this to connect when internal and external networks are separate |
| advertised.host.name=yourdomain | advertised.host.name=yourdomain | advertised.host.name=yourdomain |
| num.network.threads=24 | num.network.threads=24 | num.network.threads=24 |
| num.io.threads=24 | num.io.threads=24 | num.io.threads=24 |
| socket.send.buffer.bytes=1048576 | socket.send.buffer.bytes=1048576 | socket.send.buffer.bytes=1048576 |
| socket.receive.buffer.bytes=1048675 | socket.receive.buffer.bytes=1048675 | socket.receive.buffer.bytes=1048675 |
| socket.request.max.bytes=104857600 | socket.request.max.bytes=104857600 | socket.request.max.bytes=104857600 |
| log.dirs=/var/elk/zookeeper/kafka01-logs | log.dirs=/var/elastic/zookeeper/kafka02-logs | log.dirs=/var/elastic/zookeeper/kafka03-logs |
| ## topic partitions, set as needed | ## topic partitions, set as needed | ## topic partitions, set as needed |
| num.partitions=8 | num.partitions=8 | num.partitions=8 |
| num.recovery.threads.per.data.dir=8 | num.recovery.threads.per.data.dir=8 | num.recovery.threads.per.data.dir=8 |
| log.flush.interval.messages=60000 | log.flush.interval.messages=60000 | log.flush.interval.messages=60000 |
| log.flush.interval.ms=5000 | log.flush.interval.ms=5000 | log.flush.interval.ms=5000 |
| log.retention.hours=36 | log.retention.hours=36 | log.retention.hours=36 |
| log.retention.bytes=1073741824 | log.retention.bytes=1073741824 | log.retention.bytes=1073741824 |
| log.segment.bytes=1073741824 | log.segment.bytes=1073741824 | log.segment.bytes=1073741824 |
| log.retention.check.interval.ms=300000 | log.retention.check.interval.ms=300000 | log.retention.check.interval.ms=300000 |
| zookeeper.connect=ip:2181,ip:2182,ip:2183 | zookeeper.connect=ip:2181,ip:2182,ip:2183 | zookeeper.connect=ip:2181,ip:2182,ip:2183 |
| zookeeper.connection.timeout.ms=1000000 | zookeeper.connection.timeout.ms=1000000 | zookeeper.connection.timeout.ms=1000000 |
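Only a handful of settings in the table actually differ between the three copies, so it can help to print just those per instance. The sketch below is one way to do that. The function name and the sample IP 10.0.0.5 are made up for illustration, and it assumes each broker's listener port should match its `port` value, since three brokers on one machine cannot share a port. `log.dirs` also differs per instance but is left out here.

```shell
#!/usr/bin/env bash
# broker_overrides N IP: print the per-instance settings for instance N (1-3).
# Everything not printed here is identical across kafka01..kafka03.
broker_overrides() {
    local n="$1" ip="$2"
    cat <<EOF
# config/zookeeper.properties
dataDir=/var/elk/zookeeper/kafka0${n}
dataLogDir=/var/elk/zookeeper/log0${n}
clientPort=218${n}
# config/server.properties
broker.id=${n}
listeners=PLAINTEXT://${ip}:909${n}
port=909${n}
EOF
}

# 10.0.0.5 is a placeholder address, not one from this setup.
broker_overrides 2 10.0.0.5
```

Comparing this output with each copy's config files is a quick way to catch a port that was copied over from kafka01 unchanged.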

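Notes: 1) Replace ip with this machine's IP address (an internal address is fine). Point yourdomain at a domain name that resolves to this machine's public IP; the public IP is needed when Kafka receives data from other data centers.

2) Create a myid file in each ZooKeeper dataDir: in /var/elk/zookeeper/kafka01 run echo 1 > myid; in /var/elk/zookeeper/kafka02 run echo 2 > myid; in /var/elk/zookeeper/kafka03 run echo 3 > myid.

3) Do the same in the dataLogDir directories: in /var/elk/zookeeper/log01 run echo 1 > myid; in /var/elk/zookeeper/log02 run echo 2 > myid; in /var/elk/zookeeper/log03 run echo 3 > myid.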
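The myid files for the three dataDir directories can also be written in one loop. This is only a sketch: `write_myids` is a name I made up, and the demo runs against a throwaway temp directory rather than /var/elk/zookeeper.

```shell
#!/usr/bin/env bash
# write_myids BASE: create BASE/kafka0N/myid containing N, for N = 1..3.
# The number in myid must match the N of the server.N line for that instance.
write_myids() {
    local base="$1" i
    for i in 1 2 3; do
        mkdir -p "$base/kafka0$i"
        echo "$i" > "$base/kafka0$i/myid"
    done
}

# Demo in a temporary directory; on the real host pass /var/elk/zookeeper.
demo_dir="$(mktemp -d)"
write_myids "$demo_dir"
cat "$demo_dir/kafka02/myid"   # prints 2
```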

Start ZooKeeper and Kafka: in each of the kafka01, kafka02 and kafka03 directories, run: bin/zookeeper-server-start.sh config/zookeeper.properties &

Then, again in each directory, run: bin/kafka-server-start.sh config/server.properties &

Note: ZooKeeper must be started before Kafka, so mind the order.
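To make the start order less manual, one option is to wait until the ZooKeeper client port accepts connections before launching the broker. The helper below is a sketch under that assumption: `wait_for_port` is my own name, it relies on bash's `/dev/tcp` redirection, and an open port is only a rough readiness signal (it does not prove the three ZooKeeper instances have formed a quorum).

```shell
#!/usr/bin/env bash
# wait_for_port HOST PORT [TIMEOUT_SECONDS]
# Tries a TCP connection once per second until it succeeds or the timeout
# is reached. Returns 0 on success, 1 on timeout. Needs bash (/dev/tcp).
wait_for_port() {
    local host="$1" port="$2" timeout="${3:-30}" waited=0
    until (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null; do
        waited=$((waited + 1))
        if [ "$waited" -ge "$timeout" ]; then
            return 1
        fi
        sleep 1
    done
    return 0
}

# Intended use from inside kafka01 (127.0.0.1 stands in for the real ip):
#   bin/zookeeper-server-start.sh config/zookeeper.properties &
#   wait_for_port 127.0.0.1 2181 60 && bin/kafka-server-start.sh config/server.properties &
```

For kafka02 and kafka03 the port would be 2182 and 2183, matching clientPort in each zookeeper.properties.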

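4. Setting up the ES cluster

The self-built ES cluster has 9 nodes: 5 data nodes deployed on one machine (24 cores, 64 GB RAM) and 4 master nodes on 4 separate machines. Each master node also runs Logstash, which pulls data from Kafka and sends it to the ES data nodes.

Extract elasticsearch2.2.1.tar.gz and adjust the JVM memory settings: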

vi elasticsearch2.2.1/bin/elasticsearch.in.sh

ES_MIN_MEM=10g

ES_MAX_MEM=10g

vi elasticsearch2.2.1/config/elasticsearch.yml

cluster.name: mycluster

## node name

node.name: master-node1

## set node.master to false and node.data to true to make this a data node

node.master: true

node.data: false

## adjust the directories below to suit your machine

path.data: /home/elkdata/data

path.logs: /home/elkdata/logs

path.plugins: /home/elkdata/plugins

path.work: /home/elkdata/work

bootstrap.mlockall: true

network.host: localhost-ip

http.port: 9200

gateway.recover_after_nodes: 6

discovery.zen.ping.unicast.hosts: ["master-ip"]

discovery.zen.minimum_master_nodes: 2

node.max_local_storage_nodes: 10

action.destructive_requires_name: false

index.number_of_shards: 5

index.number_of_replicas: 0

index.translog.flush_threshold_size : 1000mb

index.translog.flush_threshold_ops: 10000

indices.cache.filter.size: 10%

indices.fielddata.cache.size: 10%

threadpool.search.queue_size: 10000

http.max_content_length: 200mb

http.enabled: true

http.compression: true

gateway.recover_after_time: 5m

gateway.expected_nodes: 6

cluster.routing.allocation.cluster_concurrent_rebalance: 5

cluster.routing.allocation.node_initial_primaries_recoveries: 5

cluster.routing.allocation.node_concurrent_recoveries: 5

cluster.routing.allocation.enable: all

indices.recovery.max_bytes_per_sec: 100mb

indices.recovery.concurrent_streams: 10

discovery.zen.ping.timeout: 100s

discovery.zen.ping.multicast.enabled: false

index.search.slowlog.threshold.query.warn: 30s

index.search.slowlog.threshold.fetch.warn: 1s

index.indexing.slowlog.threshold.index.warn: 10s

monitor.jvm.gc.young.warn: 1000ms

monitor.jvm.gc.old.warn: 10s

monitor.jvm.gc.old.info: 5s

monitor.jvm.gc.old.debug: 2s

index.refresh_interval: 5s
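A word on `discovery.zen.minimum_master_nodes` in the config above: the usual rule for Elasticsearch 2.x is a strict majority of master-eligible nodes, that is (N / 2) + 1 with integer division, to avoid split brain. The tiny sketch below (the function name is made up) applies that rule. For the 4 master nodes described in this post it gives 3, which is higher than the 2 used above, so that value may be worth revisiting.

```shell
#!/usr/bin/env bash
# min_master_nodes N: strict majority of N master-eligible nodes.
min_master_nodes() {
    local eligible="$1"
    echo $(( eligible / 2 + 1 ))
}

min_master_nodes 4   # prints 3
min_master_nodes 3   # prints 2
```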

Once every node is configured, start the ES cluster.

cd elasticsearch2.2.1/; bin/elasticsearch -d

Then watch the files in the log directory to see how the cluster startup is going.
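Besides reading the logs, the `_cluster/health` API gives a quick summary of the cluster state (green, yellow or red). The sketch below pulls the `status` field out of such a response with sed. The function name is mine, and the sample JSON is a hand-written, shortened stand-in, not output captured from this cluster.

```shell
#!/usr/bin/env bash
# health_status: read a _cluster/health JSON response on stdin and print
# the value of its "status" field.
health_status() {
    sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p'
}

# Hand-written sample response, shortened for illustration.
sample='{"cluster_name":"mycluster","status":"green","number_of_nodes":9}'
printf '%s\n' "$sample" | health_status   # prints green

# Against a live node (master-ip is the placeholder used in this post):
#   curl -s "http://master-ip:9200/_cluster/health" | health_status
```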

5. Logstash configuration for reading from Kafka

input {

kafka {

zk_connect => "kafka-IP:2181,kafka-IP:2182,kafka-IP:2183"

group_id => "logstash"

topic_id => "nginxlog"

codec => plain

reset_beginning => false

consumer_threads => 1

decorate_events => true

}

}

#filter {

# filter: adjust this section to your own needs

#}

output {

elasticsearch {

hosts => ["datanode-ip1:port1","datanode-ip2:port2","datanode-ip3:port3","datanode-ip4:port4","datanode-ip5:port5"]

index => "logstash-%{type}-%{+YYYY.MM.dd}"

workers => 1

template_overwrite => true

}

}
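The index pattern `logstash-%{type}-%{+YYYY.MM.dd}` in the output above expands per event: `%{type}` becomes the event's `type` field and `%{+YYYY.MM.dd}` the date of its `@timestamp` in UTC. The sketch below mimics that naming with GNU `date` so the resulting index names are easy to picture. The function name and the `nginx` type are illustrative. Note that if nothing sets `type` on the event (the kafka input above does not), Logstash leaves the literal text `%{type}` in the index name, so setting it in the input or in a filter is probably wanted.

```shell
#!/usr/bin/env bash
# index_name TYPE DAY: print the index name the pattern would produce for an
# event of the given type whose @timestamp falls on DAY (YYYY-MM-DD, UTC).
# Uses GNU date's -d option.
index_name() {
    local type="$1" day="$2"
    date -u -d "$day" +"logstash-${type}-%Y.%m.%d"
}

index_name nginx 2016-03-15   # prints logstash-nginx-2016.03.15
```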

6. Kibana configuration:

Extract kibana-4.4.2-linux-x64.tar.gz

cd kibana-4.4.2-linux-x64; edit the configuration file config/kibana.yml

server.port: 5601

server.host: "localhost-ip"

elasticsearch.url: "http://master-ip:9200"

cd kibana-4.4.2-linux-x64/bin/; nohup ./kibana &

7. Open Kibana: point a browser at http://localhost-ip:5601
