# Created by Wang, Jerry, last modified on Sep 12, 2015

## start-master.sh (in the sbin folder)

Then run ps -aux to check that the master process is running:

7334 5.6 0.6 1146992 221652 pts/0 Sl 12:34 0:05 /usr/jdk1.7.0_79/bin/java -cp /root/devExpert/spark-1.4.1/sbin/../conf/:/root/devExpert/spar

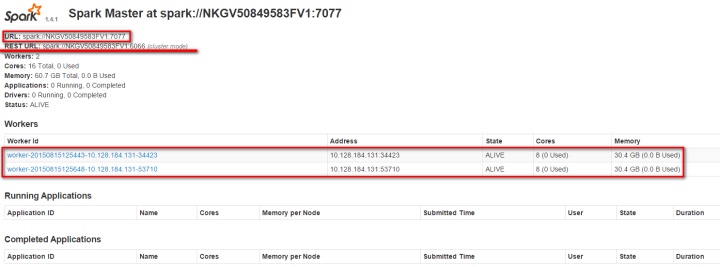

Monitor the master node through its web UI: http://10.128.184.131:8080
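As a quick alternative to opening the page in a browser, the check can be scripted. The following is a minimal sketch using only the Python standard library; the helper name `web_ui_reachable` is hypothetical, and it only tests that the page answers with HTTP 200, not what the page contains.

```python
import urllib.error
import urllib.request


def web_ui_reachable(url: str, timeout: float = 3.0) -> bool:
    """Return True if an HTTP GET on `url` answers with status 200.

    Hypothetical helper for illustration; it says nothing about the
    state of the cluster beyond "the web UI responds".
    """
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure, or an HTTP error status.
        return False
```

With the address used in this post, the call would be `web_ui_reachable("http://10.128.184.131:8080")`; replace the host with your own master node.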

Start two workers:

./spark-class org.apache.spark.deploy.worker.Worker spark://NKGV50849583FV1:7077 (run from the bin folder)

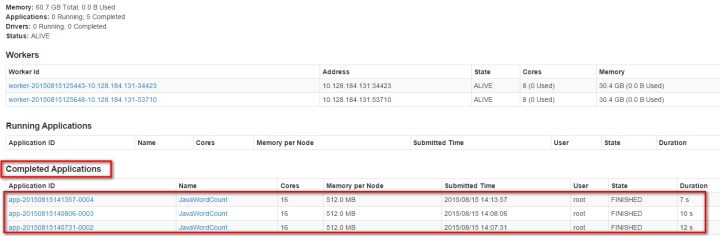

## Submit the job to the cluster

./spark-submit --class "org.apache.spark.examples.JavaWordCount" --master spark://NKGV50849583FV1:7077 /root/devExpert/spark-1.4.1/example-java-build/JavaWordCount/target/JavaWordCount-1.jar /root/devExpert/spark-1.4.1/bin/test.txt
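For orientation, the driver log in this post names the steps the submitted class runs: textFile, mapToPair, reduceByKey and collect. The sketch below mirrors that pipeline in plain Python, without Spark, assuming the words are split on whitespace (a simplification). The function name `word_count` and the sample input are hypothetical; the content of test.txt is not reproduced here.

```python
from collections import Counter
from typing import Dict, Iterable


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    """Count words the way a map/reduce word count does, in one process."""
    # "flatMap" step: turn every line into a stream of words.
    words = (word for line in lines for word in line.split())
    # "mapToPair" + "reduceByKey" steps: emit (word, 1) and sum per word.
    counts: Counter = Counter()
    for word in words:
        counts[word] += 1
    # "collect" step: hand the final result back to the caller.
    return dict(counts)


if __name__ == "__main__":
    # Hypothetical sample input, not the real test.txt.
    for word, count in word_count(["a b a", "b c"]).items():
        print(f"{word}: {count}")
```

In the real job the same steps are distributed: the log shows the map side running as ShuffleMapStage 0 and the reduce side as ResultStage 1.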

## The job runs successfully

./spark-submit --class "org.apache.spark.examples.JavaWordCount" --master spark://NKGV50849583FV1:7077 /root/devExpert/spark-1.4.1/example-java-build/JavaWordCount/target/JavaWordCount-1.jar /root/devExpert/spark-1.4.1/bin/test.txt

added by Jerry: loading load-spark-env.sh !!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!1

added by Jerry:..........................................

/root/devExpert/spark-1.4.1/conf

added by Jerry, number of Jars: 1

added by Jerry, launch_classpath: /root/devExpert/spark-1.4.1/assembly/target/scala-2.10/spark-assembly-1.4.1-hadoop2.4.0.jar

added by Jerry,RUNNER:/usr/jdk1.7.0_79/bin/java

added by Jerry, printf argument list: org.apache.spark.deploy.SparkSubmit --class org.apache.spark.examples.JavaWordCount --master spark://NKGV50849583FV1:7077 /root/devExpert/spark-1.4.1/example-java-build/JavaWordCount/target/JavaWordCount-1.jar /root/devExpert/spark-1.4.1/bin/test.txt

added by Jerry, I am in if-else branch: /usr/jdk1.7.0_79/bin/java -cp /root/devExpert/spark-1.4.1/conf/:/root/devExpert/spark-1.4.1/assembly/target/scala-2.10/spark-assembly-1.4.1-hadoop2.4.0.jar:/root/devExpert/spark-1.4.1/lib_managed/jars/datanucleus-rdbms-3.2.9.jar:/root/devExpert/spark-1.4.1/lib_managed/jars/datanucleus-core-3.2.10.jar:/root/devExpert/spark-1.4.1/lib_managed/jars/datanucleus-api-jdo-3.2.6.jar -Xms512m -Xmx512m -XX:MaxPermSize=256m org.apache.spark.deploy.SparkSubmit --master spark://NKGV50849583FV1:7077 --class org.apache.spark.examples.JavaWordCount /root/devExpert/spark-1.4.1/example-java-build/JavaWordCount/target/JavaWordCount-1.jar /root/devExpert/spark-1.4.1/bin/test.txt

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

15/08/15 14:08:02 INFO SparkContext: Running Spark version 1.4.1

15/08/15 14:08:03 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

15/08/15 14:08:03 WARN Utils: Your hostname, NKGV50849583FV1 resolves to a loopback address: 127.0.0.1; using 10.128.184.131 instead (on interface eth0)

15/08/15 14:08:03 WARN Utils: Set SPARK_LOCAL_IP if you need to bind to another address

15/08/15 14:08:03 INFO SecurityManager: Changing view acls to: root

15/08/15 14:08:03 INFO SecurityManager: Changing modify acls to: root

15/08/15 14:08:03 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); users with modify permissions: Set(root)

15/08/15 14:08:04 INFO Slf4jLogger: Slf4jLogger started

15/08/15 14:08:04 INFO Remoting: Starting remoting

15/08/15 14:08:04 INFO Remoting: Remoting started; listening on addresses :[akka.tcp://sparkDriver@10.128.184.131:44792]

15/08/15 14:08:04 INFO Utils: Successfully started service 'sparkDriver' on port 44792.

15/08/15 14:08:04 INFO SparkEnv: Registering MapOutputTracker

15/08/15 14:08:04 INFO SparkEnv: Registering BlockManagerMaster

15/08/15 14:08:04 INFO DiskBlockManager: Created local directory at /tmp/spark-6fc6b901-3ac8-4acd-87aa-352fd22cf8d4/blockmgr-4c660a56-0014-4b1f-81a9-7ac66507b9fa

15/08/15 14:08:04 INFO MemoryStore: MemoryStore started with capacity 265.4 MB

15/08/15 14:08:05 INFO HttpFileServer: HTTP File server directory is /tmp/spark-6fc6b901-3ac8-4acd-87aa-352fd22cf8d4/httpd-b4344651-dbd8-4ba4-be1a-913ae006d839

15/08/15 14:08:05 INFO HttpServer: Starting HTTP Server

15/08/15 14:08:05 INFO Utils: Successfully started service 'HTTP file server' on port 46256.

15/08/15 14:08:05 INFO SparkEnv: Registering OutputCommitCoordinator

15/08/15 14:08:05 WARN Utils: Service 'SparkUI' could not bind on port 4040. Attempting port 4041.

15/08/15 14:08:05 WARN QueuedThreadPool: 2 threads could not be stopped

15/08/15 14:08:05 WARN Utils: Service 'SparkUI' could not bind on port 4041. Attempting port 4042.

15/08/15 14:08:05 WARN Utils: Service 'SparkUI' could not bind on port 4042. Attempting port 4043.

15/08/15 14:08:06 WARN Utils: Service 'SparkUI' could not bind on port 4043. Attempting port 4044.

15/08/15 14:08:06 WARN Utils: Service 'SparkUI' could not bind on port 4044. Attempting port 4045.

15/08/15 14:08:06 INFO Utils: Successfully started service 'SparkUI' on port 4045.

15/08/15 14:08:06 INFO SparkUI: Started SparkUI at http://10.128.184.131:4045

15/08/15 14:08:06 INFO SparkContext: Added JAR file:/root/devExpert/spark-1.4.1/example-java-build/JavaWordCount/target/JavaWordCount-1.jar at http://10.128.184.131:46256/jars/JavaWordCount-1.jar with timestamp 1439618886415

15/08/15 14:08:06 INFO AppClient$ClientActor: Connecting to master akka.tcp://sparkMaster@NKGV50849583FV1:7077/user/Master...

15/08/15 14:08:06 INFO SparkDeploySchedulerBackend: Connected to Spark cluster with app ID app-20150815140806-0003

15/08/15 14:08:06 INFO AppClient$ClientActor: Executor added: app-20150815140806-0003/0 on worker-20150815125648-10.128.184.131-53710 (10.128.184.131:53710) with 8 cores

15/08/15 14:08:06 INFO SparkDeploySchedulerBackend: Granted executor ID app-20150815140806-0003/0 on hostPort 10.128.184.131:53710 with 8 cores, 512.0 MB RAM

15/08/15 14:08:06 INFO AppClient$ClientActor: Executor added: app-20150815140806-0003/1 on worker-20150815125443-10.128.184.131-34423 (10.128.184.131:34423) with 8 cores

15/08/15 14:08:06 INFO SparkDeploySchedulerBackend: Granted executor ID app-20150815140806-0003/1 on hostPort 10.128.184.131:34423 with 8 cores, 512.0 MB RAM

15/08/15 14:08:06 INFO AppClient$ClientActor: Executor updated: app-20150815140806-0003/0 is now LOADING

15/08/15 14:08:06 INFO AppClient$ClientActor: Executor updated: app-20150815140806-0003/1 is now LOADING

15/08/15 14:08:06 INFO AppClient$ClientActor: Executor updated: app-20150815140806-0003/0 is now RUNNING

15/08/15 14:08:06 INFO AppClient$ClientActor: Executor updated: app-20150815140806-0003/1 is now RUNNING

15/08/15 14:08:06 INFO Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 60182.

15/08/15 14:08:06 INFO NettyBlockTransferService: Server created on 60182

15/08/15 14:08:06 INFO BlockManagerMaster: Trying to register BlockManager

15/08/15 14:08:06 INFO BlockManagerMasterEndpoint: Registering block manager 10.128.184.131:60182 with 265.4 MB RAM, BlockManagerId(driver, 10.128.184.131, 60182)

15/08/15 14:08:06 INFO BlockManagerMaster: Registered BlockManager

15/08/15 14:08:06 INFO SparkDeploySchedulerBackend: SchedulerBackend is ready for scheduling beginning after reached minRegisteredResourcesRatio: 0.0

15/08/15 14:08:07 INFO MemoryStore: ensureFreeSpace(143840) called with curMem=0, maxMem=278302556

15/08/15 14:08:07 INFO MemoryStore: Block broadcast_0 stored as values in memory (estimated size 140.5 KB, free 265.3 MB)

15/08/15 14:08:07 INFO MemoryStore: ensureFreeSpace(12633) called with curMem=143840, maxMem=278302556

15/08/15 14:08:07 INFO MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 12.3 KB, free 265.3 MB)

15/08/15 14:08:07 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on 10.128.184.131:60182 (size: 12.3 KB, free: 265.4 MB)

15/08/15 14:08:07 INFO SparkContext: Created broadcast 0 from textFile at JavaWordCount.java:45

15/08/15 14:08:07 INFO FileInputFormat: Total input paths to process : 1

15/08/15 14:08:07 INFO SparkContext: Starting job: collect at JavaWordCount.java:68

15/08/15 14:08:07 INFO DAGScheduler: Registering RDD 3 (mapToPair at JavaWordCount.java:54)

15/08/15 14:08:07 INFO DAGScheduler: Got job 0 (collect at JavaWordCount.java:68) with 1 output partitions (allowLocal=false)

15/08/15 14:08:07 INFO DAGScheduler: Final stage: ResultStage 1(collect at JavaWordCount.java:68)

15/08/15 14:08:07 INFO DAGScheduler: Parents of final stage: List(ShuffleMapStage 0)

15/08/15 14:08:07 INFO DAGScheduler: Missing parents: List(ShuffleMapStage 0)

15/08/15 14:08:07 INFO DAGScheduler: Submitting ShuffleMapStage 0 (MapPartitionsRDD[3] at mapToPair at JavaWordCount.java:54), which has no missing parents

15/08/15 14:08:07 INFO MemoryStore: ensureFreeSpace(4768) called with curMem=156473, maxMem=278302556

15/08/15 14:08:07 INFO MemoryStore: Block broadcast_1 stored as values in memory (estimated size 4.7 KB, free 265.3 MB)

15/08/15 14:08:07 INFO MemoryStore: ensureFreeSpace(2678) called with curMem=161241, maxMem=278302556

15/08/15 14:08:07 INFO MemoryStore: Block broadcast_1_piece0 stored as bytes in memory (estimated size 2.6 KB, free 265.3 MB)

15/08/15 14:08:07 INFO BlockManagerInfo: Added broadcast_1_piece0 in memory on 10.128.184.131:60182 (size: 2.6 KB, free: 265.4 MB)

15/08/15 14:08:07 INFO SparkContext: Created broadcast 1 from broadcast at DAGScheduler.scala:874

15/08/15 14:08:07 INFO DAGScheduler: Submitting 1 missing tasks from ShuffleMapStage 0 (MapPartitionsRDD[3] at mapToPair at JavaWordCount.java:54)

15/08/15 14:08:07 INFO TaskSchedulerImpl: Adding task set 0.0 with 1 tasks

15/08/15 14:08:13 INFO SparkDeploySchedulerBackend: Registered executor: AkkaRpcEndpointRef(Actor[akka.tcp://sparkExecutor@10.128.184.131:45901/user/Executor#-784323527]) with ID 0

15/08/15 14:08:13 INFO TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, 10.128.184.131, PROCESS_LOCAL, 1469 bytes)

15/08/15 14:08:13 INFO SparkDeploySchedulerBackend: Registered executor: AkkaRpcEndpointRef(Actor[akka.tcp://sparkExecutor@10.128.184.131:58368/user/Executor#242744791]) with ID 1

15/08/15 14:08:13 INFO BlockManagerMasterEndpoint: Registering block manager 10.128.184.131:48659 with 265.4 MB RAM, BlockManagerId(0, 10.128.184.131, 48659)

15/08/15 14:08:13 INFO BlockManagerMasterEndpoint: Registering block manager 10.128.184.131:56613 with 265.4 MB RAM, BlockManagerId(1, 10.128.184.131, 56613)

15/08/15 14:08:16 INFO BlockManagerInfo: Added broadcast_1_piece0 in memory on 10.128.184.131:48659 (size: 2.6 KB, free: 265.4 MB)

15/08/15 14:08:16 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on 10.128.184.131:48659 (size: 12.3 KB, free: 265.4 MB)

15/08/15 14:08:16 INFO TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 3053 ms on 10.128.184.131 (1/1)

15/08/15 14:08:16 INFO TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool

15/08/15 14:08:16 INFO DAGScheduler: ShuffleMapStage 0 (mapToPair at JavaWordCount.java:54) finished in 8.703 s

15/08/15 14:08:16 INFO DAGScheduler: looking for newly runnable stages

15/08/15 14:08:16 INFO DAGScheduler: running: Set()

15/08/15 14:08:16 INFO DAGScheduler: waiting: Set(ResultStage 1)

15/08/15 14:08:16 INFO DAGScheduler: failed: Set()

15/08/15 14:08:16 INFO DAGScheduler: Submitting ResultStage 1 (ShuffledRDD[4] at reduceByKey at JavaWordCount.java:61), which is now runnable

15/08/15 14:08:16 INFO MemoryStore: ensureFreeSpace(2408) called with curMem=163919, maxMem=278302556

15/08/15 14:08:16 INFO MemoryStore: Block broadcast_2 stored as values in memory (estimated size 2.4 KB, free 265.3 MB)

15/08/15 14:08:16 INFO MemoryStore: ensureFreeSpace(1458) called with curMem=166327, maxMem=278302556

15/08/15 14:08:16 INFO MemoryStore: Block broadcast_2_piece0 stored as bytes in memory (estimated size 1458.0 B, free 265.2 MB)

15/08/15 14:08:16 INFO BlockManagerInfo: Added broadcast_2_piece0 in memory on 10.128.184.131:60182 (size: 1458.0 B, free: 265.4 MB)

15/08/15 14:08:16 INFO SparkContext: Created broadcast 2 from broadcast at DAGScheduler.scala:874

15/08/15 14:08:16 INFO DAGScheduler: Submitting 1 missing tasks from ResultStage 1 (ShuffledRDD[4] at reduceByKey at JavaWordCount.java:61)

15/08/15 14:08:16 INFO TaskSchedulerImpl: Adding task set 1.0 with 1 tasks

15/08/15 14:08:16 INFO TaskSetManager: Starting task 0.0 in stage 1.0 (TID 1, 10.128.184.131, PROCESS_LOCAL, 1227 bytes)

15/08/15 14:08:16 INFO BlockManagerInfo: Added broadcast_2_piece0 in memory on 10.128.184.131:48659 (size: 1458.0 B, free: 265.4 MB)

15/08/15 14:08:16 INFO MapOutputTrackerMasterEndpoint: Asked to send map output locations for shuffle 0 to 10.128.184.131:45901

15/08/15 14:08:16 INFO MapOutputTrackerMaster: Size of output statuses for shuffle 0 is 143 bytes

15/08/15 14:08:16 INFO TaskSetManager: Finished task 0.0 in stage 1.0 (TID 1) in 110 ms on 10.128.184.131 (1/1)

15/08/15 14:08:16 INFO DAGScheduler: ResultStage 1 (collect at JavaWordCount.java:68) finished in 0.110 s

15/08/15 14:08:16 INFO TaskSchedulerImpl: Removed TaskSet 1.0, whose tasks have all completed, from pool

15/08/15 14:08:16 INFO DAGScheduler: Job 0 finished: collect at JavaWordCount.java:68, took 8.942894 s

this: 2

guideline: 1

is: 2

long: 1

Hello: 1

a: 1

development: 1

file!: 1

Fiori: 1

test: 2

world: 1

15/08/15 14:08:16 INFO SparkUI: Stopped Spark web UI at http://10.128.184.131:4045

15/08/15 14:08:16 INFO DAGScheduler: Stopping DAGScheduler

15/08/15 14:08:16 INFO SparkDeploySchedulerBackend: Shutting down all executors

15/08/15 14:08:16 INFO SparkDeploySchedulerBackend: Asking each executor to shut down

15/08/15 14:08:16 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

15/08/15 14:08:16 INFO Utils: path = /tmp/spark-6fc6b901-3ac8-4acd-87aa-352fd22cf8d4/blockmgr-4c660a56-0014-4b1f-81a9-7ac66507b9fa, already present as root for deletion.

15/08/15 14:08:16 INFO MemoryStore: MemoryStore cleared

15/08/15 14:08:16 INFO BlockManager: BlockManager stopped

15/08/15 14:08:16 INFO BlockManagerMaster: BlockManagerMaster stopped

15/08/15 14:08:16 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

15/08/15 14:08:16 INFO SparkContext: Successfully stopped SparkContext

15/08/15 14:08:16 INFO Utils: Shutdown hook called

15/08/15 14:08:16 INFO RemoteActorRefProvider$RemotingTerminator: Shutting down remote daemon.

15/08/15 14:08:16 INFO Utils: Deleting directory /tmp/spark-6fc6b901-3ac8-4acd-87aa-352fd22cf8d4

15/08/15 14:08:16 INFO RemoteActorRefProvider$RemotingTerminator: Remote daemon shut down; proceeding with flushing remote transports.
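The WARN lines about 'SparkUI' in the log above show the driver trying port 4040, finding it taken, and moving up one port at a time until 4045 is free. The retry idea can be sketched as follows. This is an illustration in plain Python sockets, not Spark's code; the function name `bind_with_retries` is hypothetical, and the default of 16 retries is chosen to match the default of Spark's `spark.port.maxRetries` setting.

```python
import socket
from typing import Tuple


def bind_with_retries(start_port: int, max_retries: int = 16,
                      host: str = "127.0.0.1") -> Tuple[socket.socket, int]:
    """Try start_port, start_port + 1, ... until one bind succeeds."""
    for offset in range(max_retries + 1):
        port = start_port + offset
        if port > 65535:
            break  # ran past the valid TCP port range
        sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
        try:
            sock.bind((host, port))
            return sock, port  # caller owns the socket and must close it
        except OSError:
            sock.close()
            print(f"could not bind on port {port}")
    raise OSError(f"no free port found starting at {start_port}")
```

In this run the ports 4040 to 4044 were already taken, which is why the UI ended up on 4045.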

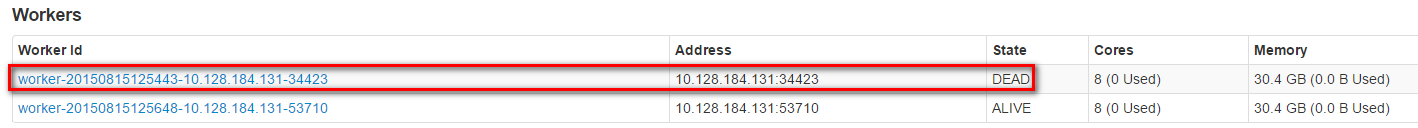
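To compare runs, for example with one worker versus two, it can help to pull the stage timings out of a driver log like the one above. The following is a small sketch that assumes the "finished in ... s" wording of the DAGScheduler lines shown in this post; the function name `stage_durations` is hypothetical, and other Spark versions may word these lines differently.

```python
import re
from typing import Dict, Iterable

# Matches e.g. "DAGScheduler: ResultStage 1 (collect at ...) finished in 0.110 s"
STAGE_DONE = re.compile(r"DAGScheduler: (\w+ \d+) \(.*\) finished in ([\d.]+) s")


def stage_durations(log_lines: Iterable[str]) -> Dict[str, float]:
    """Map each finished stage name to its duration in seconds."""
    durations: Dict[str, float] = {}
    for line in log_lines:
        match = STAGE_DONE.search(line)
        if match:
            durations[match.group(1)] = float(match.group(2))
    return durations
```

Fed the log of this run, it picks up the two stage lines: 8.703 s for ShuffleMapStage 0 and 0.110 s for ResultStage 1.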

If one worker is shut down:
