本文为丹麦奥胡斯大学(作者:Jan Depenau)的博士论文,共148页。

在过去的十年中,神经网络显示出了其潜在的价值,特别是在分类等领域。神经网络方法的成功在很大程度上取决于找到正确的网络结构。神经网络的结构决定了网络中神经元的数量和网络中连接的拓扑结构。本文的重点是神经网络体系结构的自动生成。第一章介绍了本文的研究背景,即工业化研究项目。描述围绕项目的研究计划,包括问题定义、目标和可能的解决方案。完整的计划见附录B。第二章介绍了多层前馈神经网络。本文从理论基础的简短描述入手,给出了本文所用的符号和基本定义。接下来的两章将讨论神经网络以及任何机器学习最基本的特性之一:从例子中学习的能力。在第三章中讨论了绩效的训练和衡量。本文综述了几种常用的训练算法和误差函数,进一步介绍了三个新的误差函数、两个软单调误差函数和MS-σ误差函数。这两种方法都发表在会议论文中,见附录D和G。训练一个网络的目的是使它能够找到根本的真相:即泛化。为了理解泛化的含义,如何估计泛化并指出测量中的一些问题,在第四章给出了各种定义。

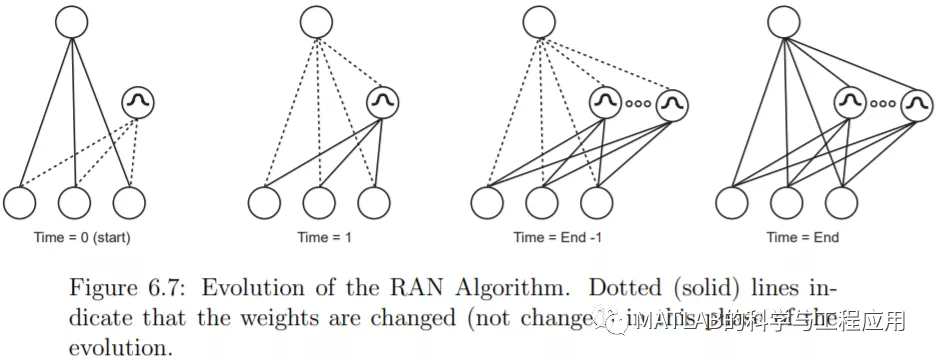

重点是实证风险最小化(ERM)和近似正确(PAC)学习理论的框架。ERM和PAC理论都是基于对机器复杂度的度量,即增长函数和VC维,而VC维通常是未知的。线性分类器是一个例外,研究表明,近年来发展起来的许多自构造算法都具有与线性分类器相同的VC维。各种泛化理论似乎一致认为,能够使用最少参数以令人满意的方式学习训练数据的模型/机器/神经网络是具有良好泛化能力的。(这种哲学通常被称为奥卡姆剃刀)。这意味着,例如,一个具有多个参数且较小学习误差的网络不应优先于具有少量参数且误差稍大但仍令人满意的学习误差的网络。从神经网络的角度出发,提出了几种实现这一目标的方法。一般来说,这些方法可以分为两组:一组方法试图减少工作良好网络中的单元或权重,另一组方法侧重于连续构建网络。第五章探讨了第一种方法。描述了多种方法,并测试了一些最流行的方法。这项工作已经发表在会议论文中,这些论文放在附录C中。第六章考虑了建设思路,回顾了已有的方法,其中对级联相关算法(CCA)的研究最多。附录E中提供了CCA的详细说明和分析。将单元与不同类型的传递函数(如径向基函数、sigmoid函数或阈值函数)组合的新思想导致了一种新的分类构造算法的发展。第6章对GLOCAL算法进行了全面的描述,而在会议记录中发布的较精简版本可在附录F中找到。前几章中描述的几种方法用于合成孔径雷达(SAR)的实际数据,以便对不同的冰层类型进行分类。第七章介绍了该地区的冰层问题、前人的工作以及初步调查中的实验结果。这一章与1995年6月发布的ERS-1 SAR图像冰类型分类的不同神经网络结构的技术报告实验相同。本文所做工作的总体结论是,有几种可能的方法或策略可用于自动构建神经网络,以解决像SAR任务这样的大型问题。这一结论在第八章中得到了支持,在第八章中,我们将前几章所描述的工作放在与三个计划子目标相关的角度,并强调了本文所贡献的新内容。并对今后的工作提出了建议和作者自己的意见。

During the last ten years neural networkshave shown their worth, particularly in areas such as classification. Thesuccess of a neural network approach is deeply dependent on finding the rightnetwork architecture. The architecture of a neural network determines thenumber of neurons in the network and the topology of the connections within thenetwork. The emphasis of this thesis is on automatic generation of networkarchitecture. The background for this thesis, the Industrial Research Project,is described in chapter 1. The description builds up around the educationalplan for the project and includes problem definition, goals and possiblesolution. The educational plan in full length can be found in appendix B. Inchapter 2 an introduction to the Multi-Layer feed-forward neural network isgiven. It starts with a short description of the theoretical foundation, toestablish the notation and basic definitions used in this thesis. The next twochapters deal with one of the most essential properties for neural networks aswell as for any learning machine: the ability to learn from examples. Inchapter 3 training and measuring of performance are discussed. A review of someof the most used training algorithms and error functions is given. Furtherthree new error functions, two Soft-monotonic error functions and the MS-σerror function are introduced. Both ideas have been published in conferencepapers, which are found in appendices D and G. The objective of training anetwork is to make it able to find the underlying truth: i.e. to generalise. Inorder to understand the meaning of generalisation, how to estimate it and pointout some problems with measuring, various definitions are provided in chapter4. The focus is on the framework of Empirical Risk Minimisation (ERM) andProbably Approximately Correct (PAC) learning theory. Both the ERM and the PACtheory are based on the measurement of a machine’s complexity, in terms of thegrowth function and the VC-dimension, which in general is unknown. An exceptionis the linear classifier and it is shown that many of the self-constructingalgorithms developed during the last few years have the same VC-dimension as alinear classifier. The various generalisation theories seem to agree that themodel/machine/neural network that is able to learn the training data in asatisfactory way using the smallest number of parameters, is themodel/machine/neural network with the largest probability of a goodgeneralisation ability. (This philosophy is often called Ockham’s Razor). Thismeans e.g. that a network with many parameters that gives a low learning errorshould not be preferred to a network with few parameters giving a slightlylarger, but still satisfactory, learning error. From a neural network point ofview several ways have been proposed to achieve this goal. In general themethods can be split into two groups: one where the methods try to reduce thenumber of units or weights in a well working network, and another where themethods focus on successively building a network. In chapter 5 the firstapproach is explored. Various methods are described and some of the mostpopular are tested. It has often been discussed which method is the best. Onthe basis of the theory from chapter 4 and experiments it is argued that noneof the methods is better than the others. This work has been published inconference papers, which are placed in appendix C. The construction ideas areconsidered in chapter 6. A review of well established methods is given. Amongthese the Cascade-Correlation Algorithm (CCA) has been studied the most. Inappendix E a detailed description and analysis of the CCA is provided. Newideas of combining units with different types of transfer functions like radialbasis functions and sigmoid or threshold functions led to the development of anew construction algorithm for classification. The algorithm called GLOCAL isfully described in chapter 6 while a shorter version published in conferenceproceedings can be found in appendix F. Several of the methods described in theprevious chapters were used on real life data from a Synthetic Aperture Radar(SAR) in order to classify different ice types. In chapter 7 a description ofthe ice problem, previous work in that area, and results from experiments madein a preliminary investigation are provided. The chapter is identical to thetechnical report Experiments with Different Neural Network Architectures forClassification of Ice Type Concentration from ERS-1 SAR Images released in June1995. The overall conclusion of the work performed in connection with theresearch and documented in this thesis is that there are several possiblemethods or strategies for automatically building neural networks that are ableto solve large problems like the SAR task. This conclusion is supported inchapter 8 where the work described in the previous chapters is put inperspective in relation to the three educational subgoals and the new things towhich this thesis has contributed are highlighted. Suggestions on future worksand remarks from the author are also included.

1. 引言

2. 神经网络

3. 学习

4. 泛化

5. 正则化与修剪——改进预先确定的体系架构

6. 构造算法

7. 冰层分类

8. 结论

更多精彩文章请关注公众号:

转载本文请联系原作者获取授权,同时请注明本文来自刘春静科学网博客。

链接地址:http://blog.sciencenet.cn/blog-69686-1234591.html

上一篇:[转载]【信息技术】【2016.10】车辆事故自动检测算法的实现

下一篇:[转载]【电信学】【2012.05】物联网在酒店业发展可持续竞争中的优势

217

217

被折叠的 条评论

为什么被折叠?

被折叠的 条评论

为什么被折叠?